Notes on some deep learning concepts

softmax loss:

softmax:

softmax maps the fc layer's outputs (logits) to probabilities in (0, 1) that sum to 1. Because it exponentiates the scores, it also widens the gaps between them.

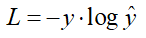
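A minimal pure-Python sketch of softmax. The helper name `softmax` is hypothetical, not a library API, and the logits are borrowed from the PyTorch demo in these notes only as sample input. Subtracting the maximum logit before exponentiating is a common numerical-stability trick that does not change the result.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Map raw fc-layer scores (logits) to probabilities in (0, 1) that sum to 1."""
    # Subtracting the max leaves the result unchanged but keeps exp() from overflowing.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [-0.3625, -0.4523, 1.7358, 0.6512, -0.8057]
probs = softmax(logits)
print([round(p, 4) for p in probs])
print(sum(probs))
```

Since p_i / p_j = e^(z_i - z_j), a logit gap of about 1.08 between classes 2 and 3 becomes a probability ratio of about 2.96; this is the "widening" effect.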

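cross entropy loss:

For a single sample, y is the true label (1 for the ground-truth class, 0 for every other class under one-hot encoding), and y' is the model's predicted score for that class, i.e. its softmax probability. The cross entropy of a single sample is computed as: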

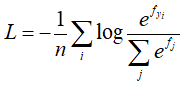
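A small sketch of single-sample cross entropy, -Σ y_i log y'_i, assuming a one-hot label and an already-normalized prediction. The function name `cross_entropy` and the probability values are hypothetical. With a one-hot label only the ground-truth term survives, so the loss reduces to -log of the predicted probability for the true class.

```python
import math
from typing import List


def cross_entropy(y_true: List[float], y_pred: List[float]) -> float:
    """Cross entropy of one sample: -sum_i y_i * log(y'_i)."""
    # Terms with y_i == 0 contribute nothing, so skip them (this also avoids log(0)).
    return -sum(y * math.log(p) for y, p in zip(y_true, y_pred) if y > 0)


y_true = [0, 0, 1, 0, 0]                 # one-hot: the ground-truth class is index 2
y_pred = [0.07, 0.07, 0.61, 0.20, 0.05]  # hypothetical softmax output (sums to 1)
loss = cross_entropy(y_true, y_pred)
print(loss, -math.log(0.61))             # the two values agree
```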

softmax loss:

For n samples, compute the cross entropy of each sample and take the mean.

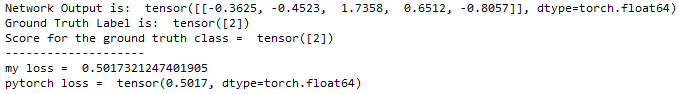
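A sketch of the softmax loss over a batch, assuming labels are given as class indices rather than one-hot vectors (the same convention `nn.CrossEntropyLoss` uses in the demo). The names `softmax_loss`, `batch`, and `labels` and the logit values are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_loss(batch_logits: List[List[float]], labels: List[int]) -> float:
    """Mean cross entropy over n samples; labels are class indices."""
    n = len(labels)
    total = 0.0
    for logits, c in zip(batch_logits, labels):
        # With a one-hot label, each sample's cross entropy is -log(p_c).
        total += -math.log(softmax(logits)[c])
    return total / n


batch = [[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]]  # hypothetical logits for n = 2 samples
labels = [0, 1]
print(softmax_loss(batch, labels))
```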

A test demo for PyTorch's CrossEntropyLoss:

```python
# coding=utf-8
import math

import numpy as np
import torch
import torch.nn as nn

# output = torch.randn(1, 5, requires_grad=True)  # assume this is the network's last layer, 5 classes
output = np.array([[-0.3625, -0.4523, 1.7358, 0.6512, -0.8057]], dtype='float')
output = torch.from_numpy(output)
# label = torch.empty(1, dtype=torch.long).random_(5)  # pick any class in 0-4
label = np.array([2], dtype='int64')
label = torch.from_numpy(label)

print('Network Output is: ', output)
print('Ground Truth Label is: ', label)

score = output[0, label.item()].item()  # logit (score) of the ground-truth class
print('Score for the ground truth class = ', score)

# Manual cross entropy: -score + log(sum_j exp(logit_j))
first = -score
second = 0.0
for i in range(5):
    second += math.exp(output[0, i].item())
second = math.log(second)
loss = first + second
print('-' * 20)
print('my loss = ', loss)

criterion = nn.CrossEntropyLoss()
print('pytorch loss = ', criterion(output, label))
```

Output:

In terms of how the loss is computed:

cross entropy loss:

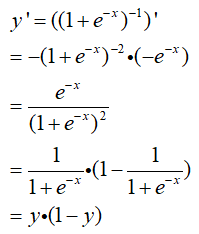

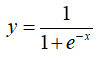
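The manual calculation in the PyTorch demo (`first = -score`, `second` = log of the sum of exponentials) follows from substituting softmax into the single-sample cross entropy with a one-hot label. A short derivation, writing x for the logits and c for the ground-truth class:

```latex
\begin{aligned}
L &= -\sum_{i} y_i \log y'_i = -\log y'_c
     \qquad (y_c = 1,\; y_i = 0 \text{ for } i \neq c) \\
  &= -\log \frac{e^{x_c}}{\sum_{j} e^{x_j}} \\
  &= -x_c + \log \sum_{j} e^{x_j}
\end{aligned}
```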

sigmoid:
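A minimal sketch of the logistic sigmoid, σ(x) = 1 / (1 + e^(-x)), which squashes any real number into (0, 1). The function name is hypothetical; branching on the sign of x is one common way, assumed here, to avoid overflow in `math.exp` for large |x|.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^{-x})."""
    # exp() is only ever called on a non-positive argument, so it cannot overflow.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


print(sigmoid(0.0))  # 0.5
print(sigmoid(-1000.0), sigmoid(1000.0))
```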

Derivative of sigmoid:
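The derivative can be written in terms of the sigmoid's own output: σ'(x) = e^(-x) / (1 + e^(-x))^2 = σ(x)(1 - σ(x)), which peaks at 0.25 when x = 0. The sketch below (function names hypothetical) computes it that way and checks it against a central finite difference.

```python
import math


def sigmoid(x: float) -> float:
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_grad(x: float) -> float:
    """Derivative of sigmoid, expressed through its own output: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Sanity check against a central finite difference.
h = 1e-5
for x in [-3.0, 0.0, 2.5]:
    numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
    print(x, sigmoid_grad(x), numeric)
```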