Implementing Hadoop (HDFS) Operations in Java

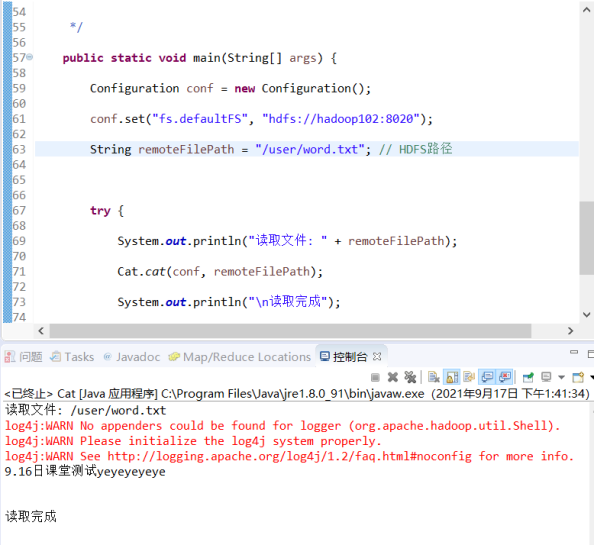

1. Print the contents of a specified HDFS file to the terminal.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;

public class Cat {
    /**
     * Read the file and print its contents line by line.
     */
    public static void cat(Configuration conf, String remoteFilePath) {
        Path remotePath = new Path(remoteFilePath);
        // try-with-resources closes the reader, the stream and the file system
        try (FileSystem fs = FileSystem.get(conf);
             FSDataInputStream in = fs.open(remotePath);
             BufferedReader d = new BufferedReader(new InputStreamReader(in))) {
            String line;
            while ((line = d.readLine()) != null) {
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Entry point.
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/word.txt"; // HDFS path

        try {
            System.out.println("Reading file: " + remoteFilePath);
            Cat.cat(conf, remoteFilePath);
            System.out.println("\nRead complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

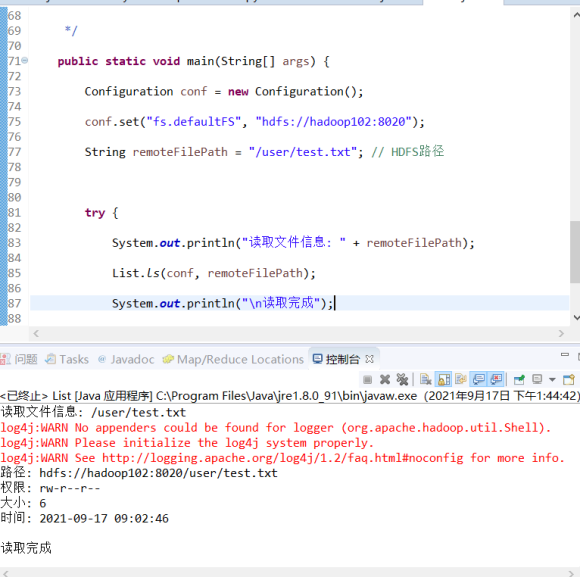

2. Display the read/write permissions, size, creation time, path, and other information of a specified file in HDFS.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.text.SimpleDateFormat;

public class List {
    /**
     * Show information about the specified file.
     */
    public static void ls(Configuration conf, String remoteFilePath) {
        try (FileSystem fs = FileSystem.get(conf)) {
            Path remotePath = new Path(remoteFilePath);
            FileStatus[] fileStatuses = fs.listStatus(remotePath);
            for (FileStatus s : fileStatuses) {
                System.out.println("Path: " + s.getPath().toString());
                System.out.println("Permission: " + s.getPermission().toString());
                System.out.println("Size: " + s.getLen());
                /* FileStatus has no creation time; the modification time is
                   returned as a millisecond timestamp and formatted as a date */
                long timeStamp = s.getModificationTime();
                SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                String date = format.format(timeStamp);
                System.out.println("Time: " + date);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Entry point.
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String remoteFilePath = "/user/test.txt"; // HDFS path

        try {
            System.out.println("Reading file info: " + remoteFilePath);
            List.ls(conf, remoteFilePath);
            System.out.println("\nRead complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

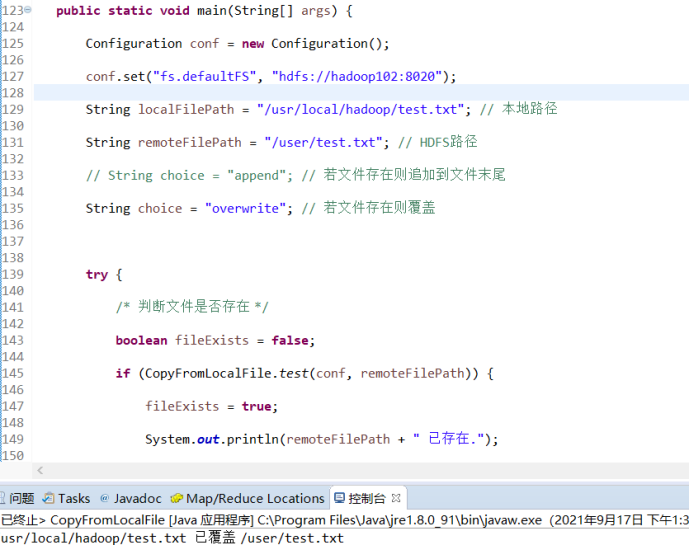
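
The listing converts the millisecond timestamp from `getModificationTime()` with `SimpleDateFormat`. As a hedged alternative, the sketch below does the same conversion with `java.time`, whose formatter is immutable and thread-safe. The names `TimestampFormat` and `format`, and the explicit time-zone parameter, are illustrative assumptions and not part of the original program.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

class TimestampFormat {
    // Same pattern as the SimpleDateFormat used in the listing.
    private static final DateTimeFormatter PATTERN =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Format epoch milliseconds (as returned by getModificationTime()) in the given zone. */
    static String format(long epochMillis, ZoneId zone) {
        return PATTERN.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    public static void main(String[] args) {
        // Hypothetical usage: format the current time in the local zone.
        System.out.println(format(System.currentTimeMillis(), ZoneId.systemDefault()));
    }
}
```

Passing the zone explicitly makes the output independent of the machine the client runs on, which can differ from the cluster's zone.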

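3. Append content to a specified file in HDFS, with the user choosing whether the content goes at the beginning or the end of the existing file.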

```java
import java.io.FileInputStream;
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class CopyFromLocalFile {
    /**
     * Check whether a path exists.
     */
    public static boolean test(Configuration conf, String path) {
        try (FileSystem fs = FileSystem.get(conf)) {
            return fs.exists(new Path(path));
        } catch (IOException e) {
            e.printStackTrace();
            return false;
        }
    }

    /**
     * Copy a file to the given path, overwriting it if it already exists.
     */
    public static void copyFromLocalFile(Configuration conf,
                                         String localFilePath, String remoteFilePath) {
        Path localPath = new Path(localFilePath);
        Path remotePath = new Path(remoteFilePath);
        try (FileSystem fs = FileSystem.get(conf)) {
            /* First argument: delete the source file? Second argument: overwrite? */
            fs.copyFromLocalFile(false, true, localPath, remotePath);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Append the contents of a local file to the end of an HDFS file.
     */
    public static void appendToFile(Configuration conf, String localFilePath,
                                    String remoteFilePath) {
        Path remotePath = new Path(remoteFilePath);
        // The output stream is now part of try-with-resources, so it is
        // closed even if a write fails.
        try (FileSystem fs = FileSystem.get(conf);
             FileInputStream in = new FileInputStream(localFilePath);
             FSDataOutputStream out = fs.append(remotePath)) {
            byte[] data = new byte[1024];
            int read;
            while ((read = in.read(data)) > 0) {
                out.write(data, 0, read);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Entry point.
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        String localFilePath = "/test.txt";       // local path
        String remoteFilePath = "/user/test.txt"; // HDFS path
        // String choice = "append";    // if the file exists, append to its end
        String choice = "overwrite";    // if the file exists, overwrite it

        try {
            /* Check whether the file exists */
            boolean fileExists = false;
            if (CopyFromLocalFile.test(conf, remoteFilePath)) {
                fileExists = true;
                System.out.println(remoteFilePath + " already exists.");
            } else {
                System.out.println(remoteFilePath + " does not exist.");
            }
            /* Act on the result */
            if (!fileExists) { // file does not exist: upload it
                CopyFromLocalFile.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " uploaded to " + remoteFilePath);
            } else if (choice.equals("overwrite")) { // overwrite chosen
                CopyFromLocalFile.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " overwrote " + remoteFilePath);
            } else if (choice.equals("append")) { // append chosen
                CopyFromLocalFile.appendToFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " appended to " + remoteFilePath);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

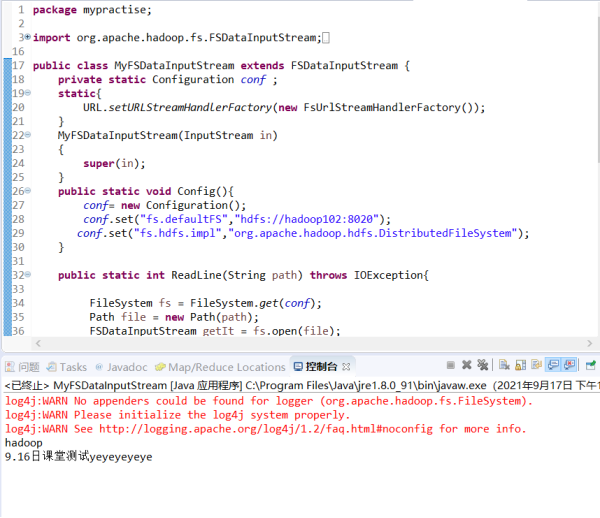
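
The task also asks for adding content at the beginning of a file, which the program above does not cover. HDFS files can only be appended to, so a common workaround is to write the new content followed by the old content into a temporary file and then replace the original. The sketch below shows that idea on the local filesystem with `java.nio.file`, so it runs without a cluster. `PrependSketch`, `prepend`, `demo`, and the `.tmp` suffix are hypothetical names. On HDFS the same steps would presumably map to `FileSystem.create`, `open`, `delete`, and `rename`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

class PrependSketch {
    /** Put content in front of the existing bytes of target. */
    static void prepend(Path target, String content) {
        // Temporary sibling file; the ".tmp" suffix is an arbitrary choice.
        Path tmp = target.resolveSibling(target.getFileName() + ".tmp");
        try {
            try (OutputStream out = Files.newOutputStream(tmp);
                 InputStream old = Files.newInputStream(target)) {
                out.write(content.getBytes(StandardCharsets.UTF_8)); // new content first
                old.transferTo(out);                                 // then the original bytes
            }
            // Swap the rewritten file into place.
            Files.move(tmp, target, StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Demo helper: create a temp file, prepend to it, and return the final contents. */
    static String demo(String original, String head) {
        try {
            Path file = Files.createTempFile("prepend", ".txt");
            Files.writeString(file, original);
            prepend(file, head);
            String result = Files.readString(file);
            Files.delete(file);
            return result;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.print(demo("old line\n", "new line\n"));
    }
}
```

Because the whole file is rewritten, the cost grows with the file size, so this approach only suits files that are small enough to copy.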

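4. Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream" and meets the following requirement: provide a method "readLine()" that reads a specified HDFS file line by line, returning null at the end of the file and otherwise one line of text.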

```java
import org.apache.commons.io.IOUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.fs.Path;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.MalformedURLException;
import java.net.URL;

public class MyFSDataInputStream extends FSDataInputStream {
    private static Configuration conf;

    static {
        // Lets java.net.URL understand hdfs:// URLs
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    MyFSDataInputStream(InputStream in) {
        super(in);
    }

    public static void Config() {
        conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://hadoop102:8020");
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
    }

    public static int ReadLine(String path) throws IOException {
        Path file = new Path(path);
        // try-with-resources closes the reader, the stream and the file system
        try (FileSystem fs = FileSystem.get(conf);
             FSDataInputStream getIt = fs.open(file);
             BufferedReader d = new BufferedReader(new InputStreamReader(getIt))) {
            String content;
            // Read one line of the file; readLine() returns null at end of file
            if ((content = d.readLine()) != null) {
                System.out.println(content);
            }
        }
        return 0;
    }

    public static void PrintFile() throws MalformedURLException, IOException {
        String FilePath = "hdfs://hadoop102:8020/user/word.txt";
        try (InputStream in = new URL(FilePath).openStream()) {
            IOUtils.copy(in, System.out);
        }
    }

    public static void main(String[] arg) throws IOException {
        MyFSDataInputStream.Config();
        MyFSDataInputStream.ReadLine("hdfs://hadoop102:8020/user/test.txt");
        MyFSDataInputStream.PrintFile();
    }
}
```
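
One caveat on the task statement: `FSDataInputStream` extends `java.io.DataInputStream`, whose `readLine()` is declared `final`, so a subclass cannot override it with the same signature. As a rough sketch of the line-reading logic the task describes, the class below wraps any `InputStream` (not specifically an HDFS stream) and returns `null` at end of file. `LineInputStream` and `readAllLines` are hypothetical names, and UTF-8 text is assumed.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class LineInputStream extends FilterInputStream {
    LineInputStream(InputStream in) {
        super(in);
    }

    /** Returns the next line without its terminator, or null at end of stream. */
    String readLine() throws IOException {
        int b = read();
        if (b == -1) {
            return null; // nothing left to read
        }
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        // Collect bytes up to the next '\n' or the end of the stream.
        // Byte-at-a-time reads are slow on an unbuffered stream; fine for a sketch.
        while (b != -1 && b != '\n') {
            buf.write(b);
            b = read();
        }
        String line = new String(buf.toByteArray(), StandardCharsets.UTF_8);
        // Treat "\r\n" as a single terminator.
        return line.endsWith("\r") ? line.substring(0, line.length() - 1) : line;
    }

    /** Demo helper: split an in-memory byte array into lines. */
    static List<String> readAllLines(byte[] data) {
        List<String> lines = new ArrayList<>();
        try (LineInputStream in = new LineInputStream(new ByteArrayInputStream(data))) {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        byte[] sample = "first\nsecond\r\nthird".getBytes(StandardCharsets.UTF_8);
        readAllLines(sample).forEach(System.out::println);
    }
}
```

With Hadoop on the classpath, the same loop could presumably be fed the stream returned by `fs.open(path)` instead of a byte array.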

5. Consulting the Java documentation or other references, use "java.net.URL" and "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" to print the text of a specified HDFS file to the terminal.

```java
package mypractise;

import java.io.IOException;
import java.io.InputStream;
import java.net.URL;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.io.IOUtils;

public class FdUrl {
    static {
        // Register the hdfs:// scheme with java.net.URL
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    /**
     * Print the contents of an HDFS file to the terminal.
     *
     * @param remoteFilePath path of the file in HDFS
     */
    public static void cat(String remoteFilePath) {
        // try-with-resources closes the stream, so no explicit closeStream call is needed
        try (InputStream in = new URL("hdfs", "hadoop102", 8020, remoteFilePath).openStream()) {
            IOUtils.copyBytes(in, System.out, 4096, false);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        String remoteFilePath = "/user/word.txt";
        try {
            System.out.println("Reading file: " + remoteFilePath);
            FdUrl.cat(remoteFilePath);
            System.out.println("\nRead complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
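
`URL.setURLStreamHandlerFactory` may be called at most once per JVM (a second call throws an `Error`), which is why the factory is installed in a static initializer. To illustrate the mechanism that `FsUrlStreamHandlerFactory` plugs into, here is a toy factory for a made-up `mem` scheme backed by an in-memory map. Everything in it (`MemUrlDemo`, `MemHandler`, the `mem` scheme, the `FILES` map) is a hypothetical stand-in and involves no Hadoop code.

```java
import java.io.ByteArrayInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class MemUrlDemo {
    // Pretend file system: URL path -> file contents.
    static final Map<String, String> FILES = new ConcurrentHashMap<>();

    static {
        // Returning null for other schemes lets the JVM fall back to its built-in handlers.
        URL.setURLStreamHandlerFactory(
                protocol -> "mem".equals(protocol) ? new MemHandler() : null);
    }

    static class MemHandler extends URLStreamHandler {
        @Override
        protected URLConnection openConnection(URL url) {
            return new URLConnection(url) {
                @Override
                public void connect() {
                    // Nothing to connect to for in-memory data.
                }

                @Override
                public InputStream getInputStream() throws IOException {
                    String body = FILES.get(getURL().getPath());
                    if (body == null) {
                        throw new FileNotFoundException(getURL().toString());
                    }
                    return new ByteArrayInputStream(body.getBytes(StandardCharsets.UTF_8));
                }
            };
        }
    }

    /** Same shape as the cat method above: build a URL, open it, read everything. */
    static String cat(String path) {
        try (InputStream in = new URL("mem", "localhost", 8020, path).openStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        FILES.put("/user/word.txt", "hello world\n");
        System.out.print(cat("/user/word.txt"));
    }
}
```

The four-argument `URL` constructor mirrors the listing; recent JDK releases mark it deprecated, so it may produce a compiler warning.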