OEMCC 13.2 Cluster Installation and Deployment

2018-10-19 22:51 AlfredZhao

I previously tested a single-node deployment of OEMCC 13.2; the details are in my earlier post.

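The environment at that time was two hosts running RHEL 6.5, with OMS and OMR deployed on separate hosts:

OMS, the OEMCC server side. IP: 192.168.1.88, memory: 12 GB+, disk: 100 GB+

OMR, the repository database underneath OEM. IP: 192.168.1.89, memory: 8 GB+, disk: 100 GB+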

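In that setup both OMS and OMR were single instances. Some customers, however, place high demands on their monitoring system too, and that calls for clustering to improve availability.

For OMR, a RAC of the matching version removes the single point of failure. But how do you build a highly available cluster for OMS?

A customer recently came with exactly this requirement, so this post records the complete installation process for a clustered OEMCC.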

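1. Requirements

The customer asked for an OEMCC 13.2 cluster covering both OMR and OMS. The OMR cluster is an Oracle 12.1.0.2 RAC. The OMS cluster must run in Active-Active mode, with an SLB in front of it for load balancing.

2. Environment Planning

Two virtual machines are used for the deployment. Their configuration is as follows:

The following installation media must be downloaded in advance:

--OEMCC 13.2 installation media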

em13200p1_linux64.bin

em13200p1_linux64-2.zip

em13200p1_linux64-3.zip

em13200p1_linux64-4.zip

em13200p1_linux64-5.zip

em13200p1_linux64-6.zip

em13200p1_linux64-7.zip

--Oracle 12.1.0.2 RAC installation media:

p21419221_121020_Linux-x86-64_1of10.zip

p21419221_121020_Linux-x86-64_2of10.zip

p21419221_121020_Linux-x86-64_5of10.zip

p21419221_121020_Linux-x86-64_6of10.zip

--DBCA database template for OEMCC 13.2:

12.1.0.2.0_Database_Template_for_EM13_2_0_0_0_Linux_x64.zip

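3. OMR Cluster Installation

The OMR cluster is implemented with Oracle RAC. The template provided for OEMCC 13.2 requires the repository (OMR) database to be version 12.1.0.2.

3.1 Environment Preparation

- Configure a yum repository and install the required rpm packages:

yum install binutils compat-libcap1 compat-libstdc++-33 \
e2fsprogs e2fsprogs-libs glibc glibc-devel ksh libaio-devel libaio libgcc libstdc++ libstdc++-devel \
libxcb libX11 libXau libXi libXtst make \
net-tools nfs-utils smartmontools sysstat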

- Disable the firewall and SELinux on every node:

--Disable the firewall on every node:

service iptables stop

chkconfig iptables off

--Disable SELinux on every node:

getenforce

Edit /etc/selinux/config and set SELINUX=disabled

--Disable SELinux temporarily (until the next reboot)

setenforce 0

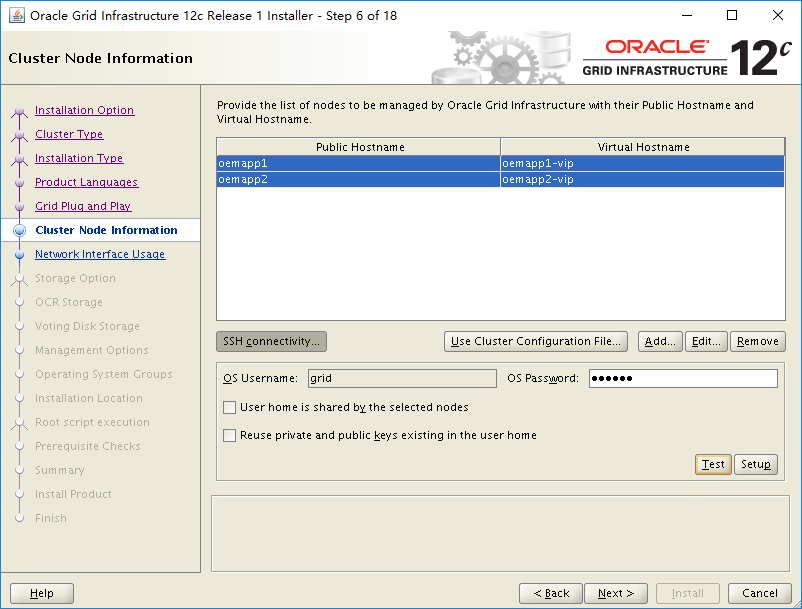

- Configure the /etc/hosts file:

#public ip

10.1.43.211 oemapp1

10.1.43.212 oemapp2

#virtual ip

10.1.43.208 oemapp1-vip

10.1.43.209 oemapp2-vip

#scan ip

10.1.43.210 oemapp-scan

#private ip

172.16.43.211 oemapp1-priv

172.16.43.212 oemapp2-priv
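Because every node needs the same entries, it can help to check a hosts file for missing names before starting the installer. The following is only a sketch: the function name `missing_hosts` is made up for illustration, and it checks that a name is present in the file, not that the address is correct.

```shell
# missing_hosts FILE NAME...
# Print every NAME that has no entry in a hosts-style FILE.
# Comment lines and trailing comments are ignored.
missing_hosts() {
    file=$1; shift
    for name in "$@"; do
        # Strip comments, then look for the name in any column after the IP.
        if ! sed 's/#.*//' "$file" | awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }'; then
            echo "$name"
        fi
    done
}
```

On each node it could be called as `missing_hosts /etc/hosts oemapp1 oemapp2 oemapp1-vip oemapp2-vip oemapp-scan oemapp1-priv oemapp2-priv`; empty output means all seven names are present.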

- Create the users and groups:

--Create the groups and users:

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -u 54321 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle

useradd -u 54322 -g oinstall -G asmadmin,asmdba,asmoper,dba grid

--Then set passwords for oracle and grid:

passwd oracle

passwd grid

- Create the installation directories on every node (as root):

mkdir -p /app/12.1.0.2/grid

mkdir -p /app/grid

mkdir -p /app/oracle

chown -R grid:oinstall /app

chown oracle:oinstall /app/oracle

chmod -R 775 /app

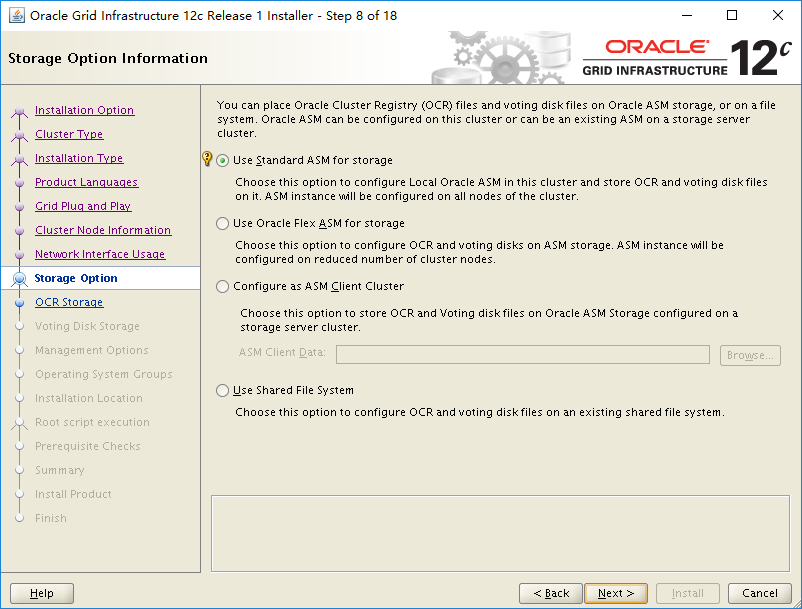

- Configure udev rules for the shared LUNs:

vi /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29ad39372db383c7903d31788d0", NAME="asm-data1", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c298c085f4e57c1f9fcd7b3d1dbf", NAME="asm-data2", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c290b495ab0b6c1b57536f4b3cf8", NAME="asm-ocr1", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29e7743dca47419aca041b88221", NAME="asm-ocr2", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29608a9ddb8b3168936d01a4f7b", NAME="asm-ocr3", OWNER="grid", GROUP="asmadmin", MODE="0660"

After reloading the rules, confirm the names, ownership, and permissions of the shared LUNs:

[root@oemapp1 media]# udevadm control --reload-rules

[root@oemapp1 media]# udevadm trigger

[root@oemapp1 media]# ls -l /dev/asm*

brw-rw----. 1 grid asmadmin 8, 16 Oct 9 12:27 /dev/asm-data1

brw-rw----. 1 grid asmadmin 8, 32 Oct 9 12:27 /dev/asm-data2

brw-rw----. 1 grid asmadmin 8, 48 Oct 9 12:27 /dev/asm-ocr1

brw-rw----. 1 grid asmadmin 8, 64 Oct 9 12:27 /dev/asm-ocr2

brw-rw----. 1 grid asmadmin 8, 80 Oct 9 12:27 /dev/asm-ocr3
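The five rules above differ only in the WWID and the device name, so they can be generated rather than typed by hand. This is a minimal sketch that reproduces the rule format used in this post; the function name `asm_udev_rule` is hypothetical.

```shell
# asm_udev_rule WWID NAME
# Print one udev rule in the same format as the rules in this post.
# $name inside the rule is a udev variable, so it must stay literal;
# the single-quoted printf format keeps the shell from expanding it.
asm_udev_rule() {
    [ -n "$1" ] && [ -n "$2" ] || return 1
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", NAME="%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}
```

The WWID for a disk comes from the same command the rule runs, for example `/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb` (the device name sdb is only an example). The output of `asm_udev_rule 36000c29ad39372db383c7903d31788d0 asm-data1` can then be appended to 99-oracle-asmdevices.rules.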

- Modify the kernel parameters:

vi /etc/sysctl.conf

# Add the following to /etc/sysctl.conf:

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 6597069766656

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.eth1.rp_filter = 2

net.ipv4.conf.eth0.rp_filter = 1

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

Apply the changes:

# /sbin/sysctl -p
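After applying the settings, it is worth confirming that the running kernel really has the values from the file, on both nodes. The sketch below assumes the usual `key = value` layout of /etc/sysctl.conf; the helper names `conf_value` and `check_sysctl` are invented for this example.

```shell
# conf_value FILE KEY
# Print the value assigned to KEY in a sysctl.conf-style file, with runs of
# blanks collapsed to single spaces. If KEY appears twice, the last one wins.
conf_value() {
    awk -v k="$2" '
        /^[ \t]*#/ { next }
        {
            i = index($0, "=")
            if (i == 0) next
            key = substr($0, 1, i - 1); gsub(/[ \t]/, "", key)
            if (key != k) next
            val = substr($0, i + 1)
            gsub(/[ \t]+/, " ", val)
            sub(/^ /, "", val); sub(/ $/, "", val)
            last = val
        }
        END { if (last != "") print last }' "$1"
}

# check_sysctl FILE KEY...
# Report every KEY whose running value differs from the one in FILE.
check_sysctl() {
    file=$1; shift
    for key in "$@"; do
        want=$(conf_value "$file" "$key")
        # awk rebuilds the record so tabs in the kernel output become spaces.
        have=$(sysctl -n "$key" 2>/dev/null | awk '{ $1 = $1; print }')
        [ "$want" = "$have" ] || echo "$key: file='$want' running='$have'"
    done
}
```

For example, `check_sysctl /etc/sysctl.conf kernel.sem fs.aio-max-nr net.ipv4.ip_local_port_range` prints nothing when the running values match the file.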

- Set the shell limits for the users:

vi /etc/security/limits.conf

# Add the following to /etc/security/limits.conf:

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

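- Configure the pluggable authentication module:

vi /etc/pam.d/login

--Load the pam_limits.so module

As root, edit /etc/pam.d/login and add the following line:

session required pam_limits.so

Note: limits.conf is actually the configuration file for pam_limits.so, a Linux PAM (Pluggable Authentication Modules) module, and its limits apply per session.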

- Set the user environment variables on every node:

--grid user on node 1:

export ORACLE_SID=+ASM1;

export ORACLE_BASE=/app/grid

export ORACLE_HOME=/app/12.1.0.2/grid;

export PATH=$ORACLE_HOME/bin:$PATH;

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib;

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

--grid user on node 2:

export ORACLE_SID=+ASM2;

export ORACLE_BASE=/app/grid

export ORACLE_HOME=/app/12.1.0.2/grid;

export PATH=$ORACLE_HOME/bin:$PATH;

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib;

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib;

--oracle user on node 1:

export ORACLE_SID=omr1;

export ORACLE_BASE=/app/oracle;

export ORACLE_HOME=/app/oracle/product/12.1.0.2/db_1;

export ORACLE_HOSTNAME=;

export PATH=$ORACLE_HOME/bin:$PATH;

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib;

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib;

--oracle user on node 2:

export ORACLE_SID=omr2;

export ORACLE_BASE=/app/oracle;

export ORACLE_HOME=/app/oracle/product/12.1.0.2/db_1;

export ORACLE_HOSTNAME=;

export PATH=$ORACLE_HOME/bin:$PATH;

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib;

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib;

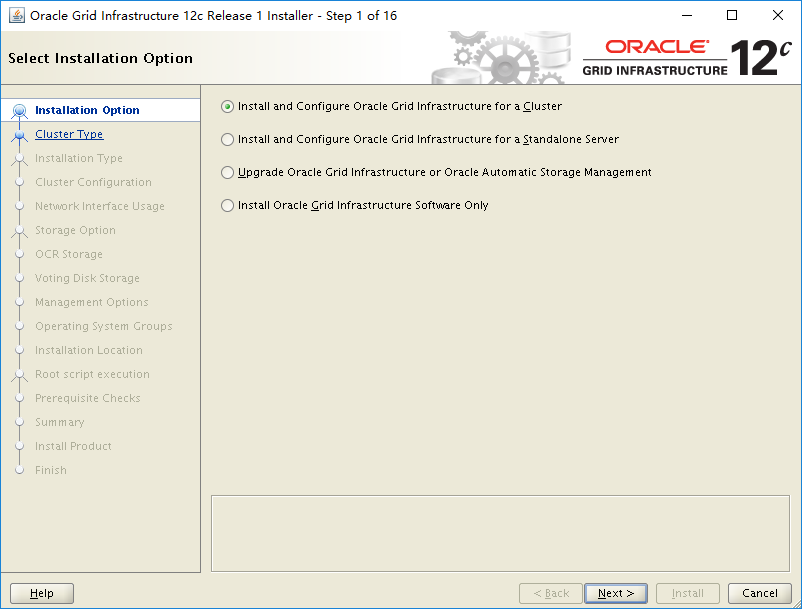

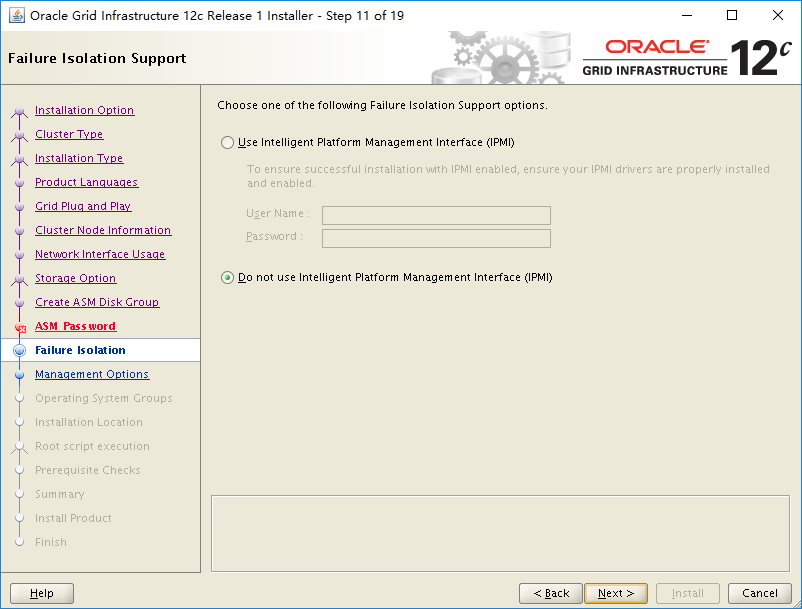
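The four profiles above differ only in the instance number at the end of ORACLE_SID, which follows the node number in the host name (oemapp1, oemapp2). One way to keep a single profile for all nodes is to derive the SID from the host name. This is a sketch under that naming assumption; `node_number` and `oracle_sid_for` are hypothetical helper names.

```shell
# node_number HOSTNAME
# Print the trailing digits of the short host name (oemapp2.oracle.com -> 2).
node_number() {
    printf '%s\n' "${1%%.*}" | sed -n 's/.*[^0-9]\([0-9][0-9]*\)$/\1/p'
}

# oracle_sid_for ROLE HOSTNAME
# ROLE is "grid" (ASM instance) or "oracle" (OMR database instance).
oracle_sid_for() {
    n=$(node_number "$2")
    [ -n "$n" ] || return 1
    case $1 in
        grid)   echo "+ASM$n" ;;
        oracle) echo "omr$n" ;;
        *)      return 1 ;;
    esac
}
```

In the grid user's profile this would read `export ORACLE_SID=$(oracle_sid_for grid "$(hostname)")`, and likewise with `oracle` for the oracle user.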

3.2 GI Installation

Unzip the installation media:

unzip p21419221_121020_Linux-x86-64_5of10.zip

unzip p21419221_121020_Linux-x86-64_6of10.zip

Set the DISPLAY variable and launch the graphical installer for GI:

[grid@oemapp1 grid]$ export DISPLAY=10.1.52.76:0.0

[grid@oemapp1 grid]$ ./runInstaller

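3.3 Creating the ASM Disk Groups and the ACFS Cluster File System

Set the DISPLAY variable and launch the graphical tool to create the ASM disk groups and the ACFS cluster file system:

[grid@oemapp1 grid]$ export DISPLAY=10.1.52.76:0.0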

[grid@oemapp1 grid]$ asmca

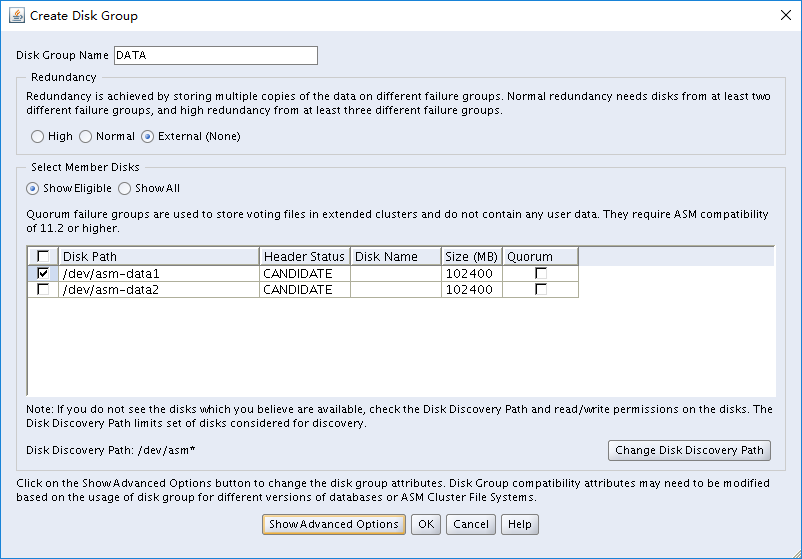

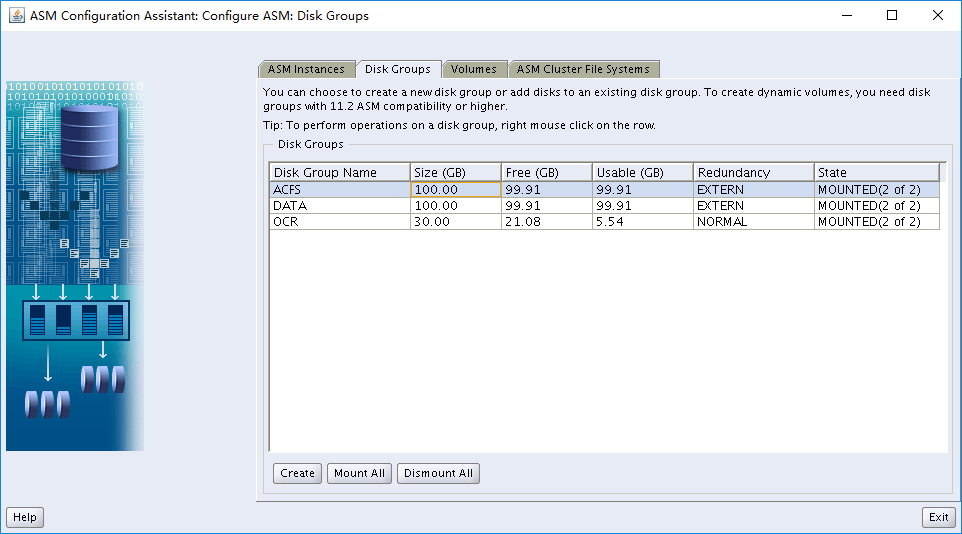

Create the ASM disk groups:

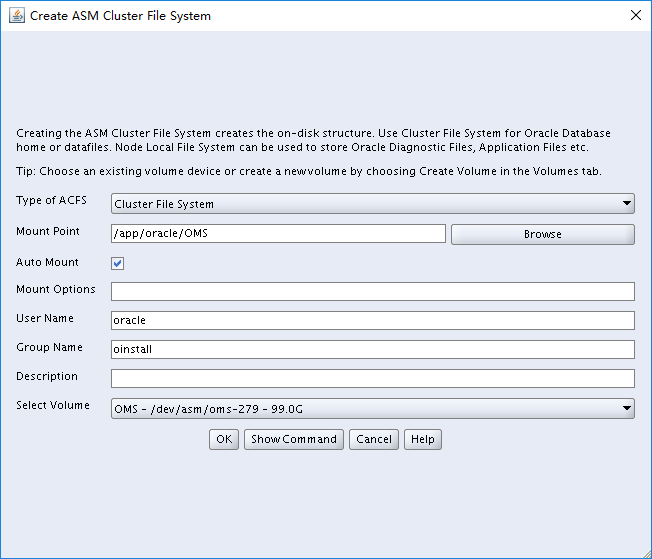

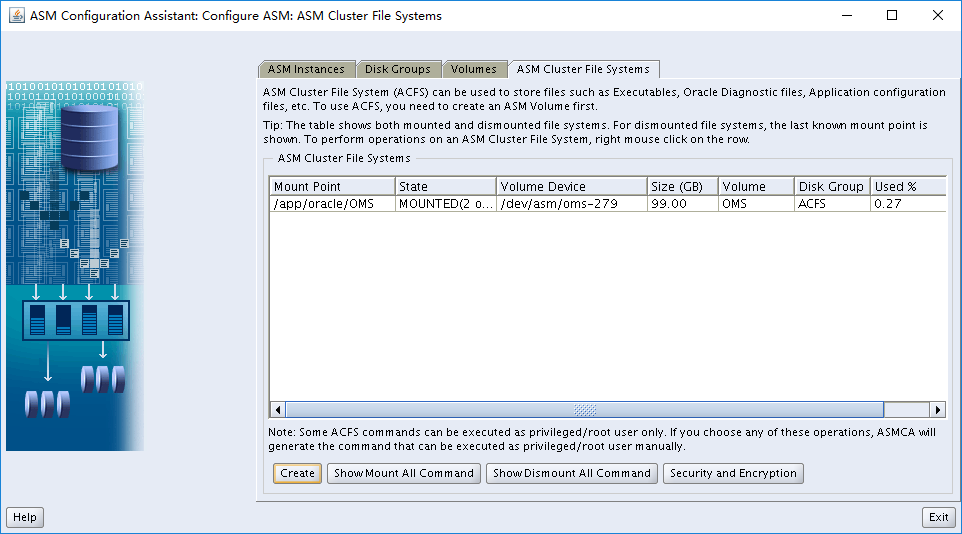

Create the ACFS cluster file system:

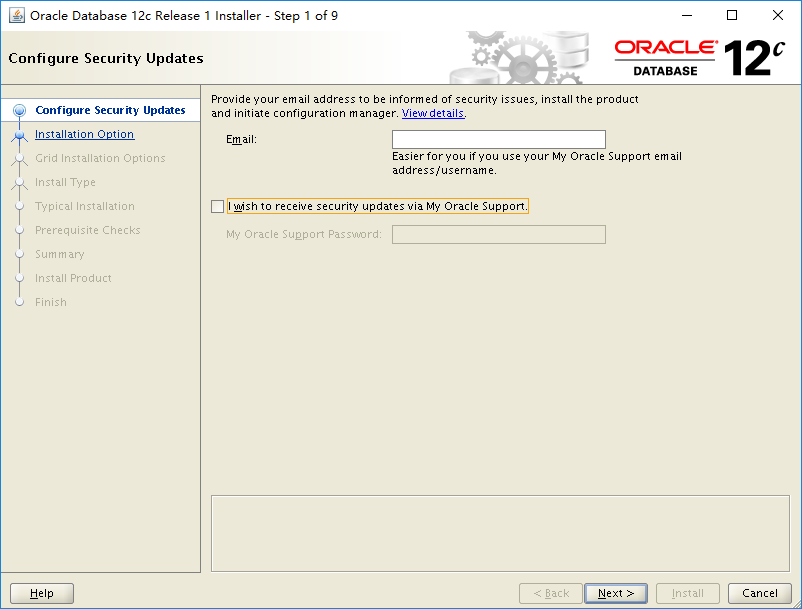

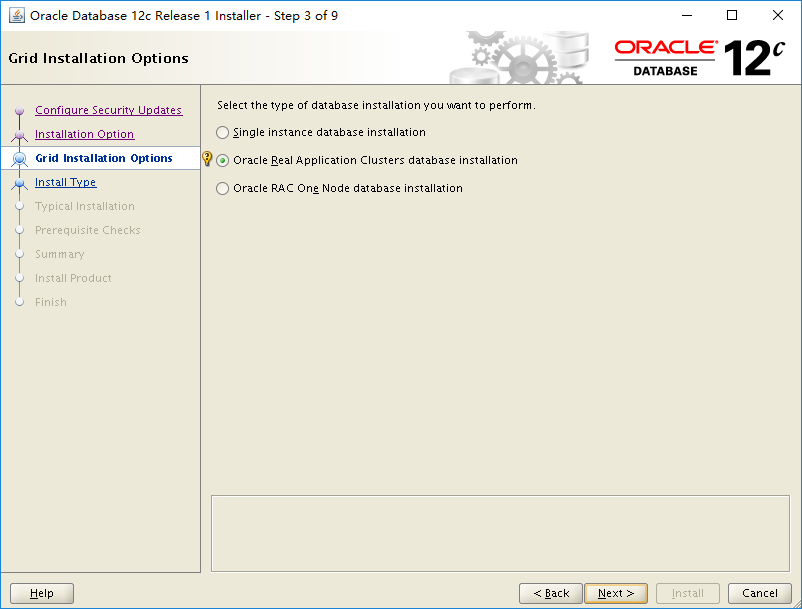

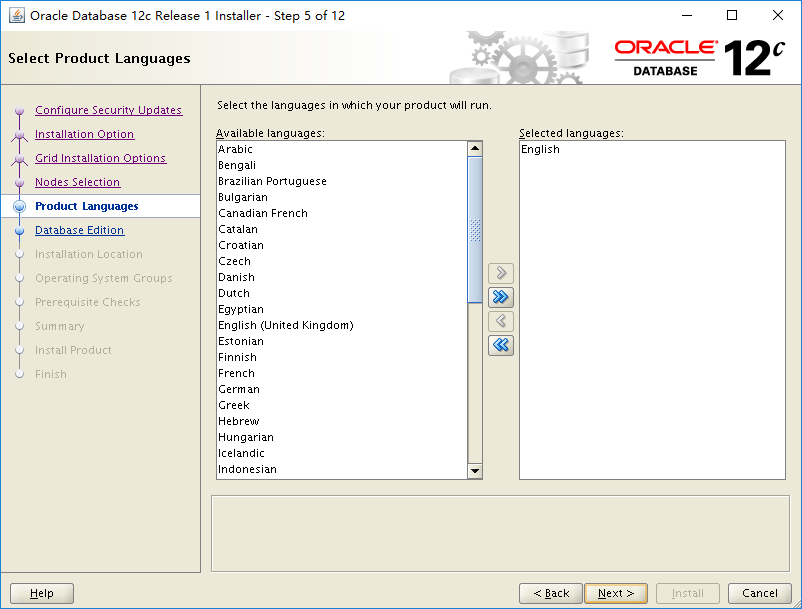

3.4 DB Software Installation

Unzip the installation media:

unzip p21419221_121020_Linux-x86-64_1of10.zip

unzip p21419221_121020_Linux-x86-64_2of10.zip

Set the DISPLAY variable and launch the graphical installer for the DB software:

[oracle@oemapp1 database]$ export DISPLAY=10.1.52.76:0.0

[oracle@oemapp1 database]$ ./runInstaller

Install the DB software:

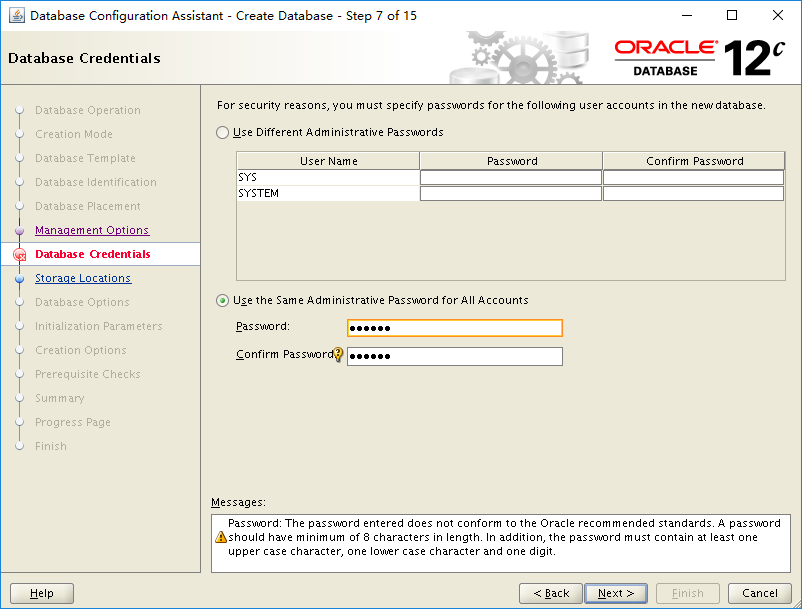

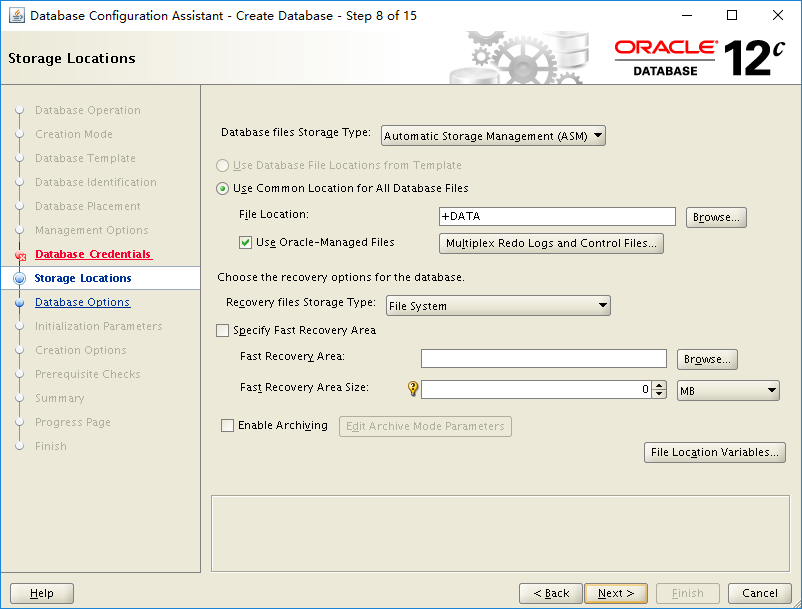

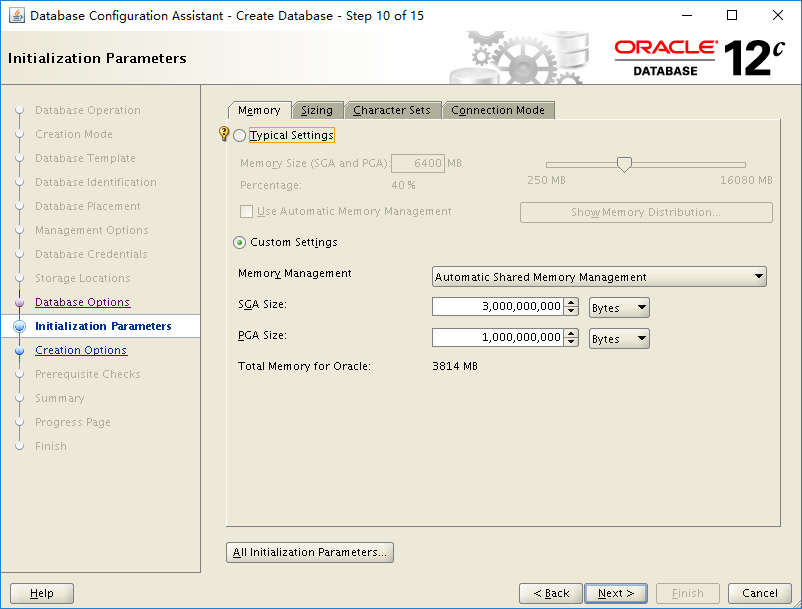

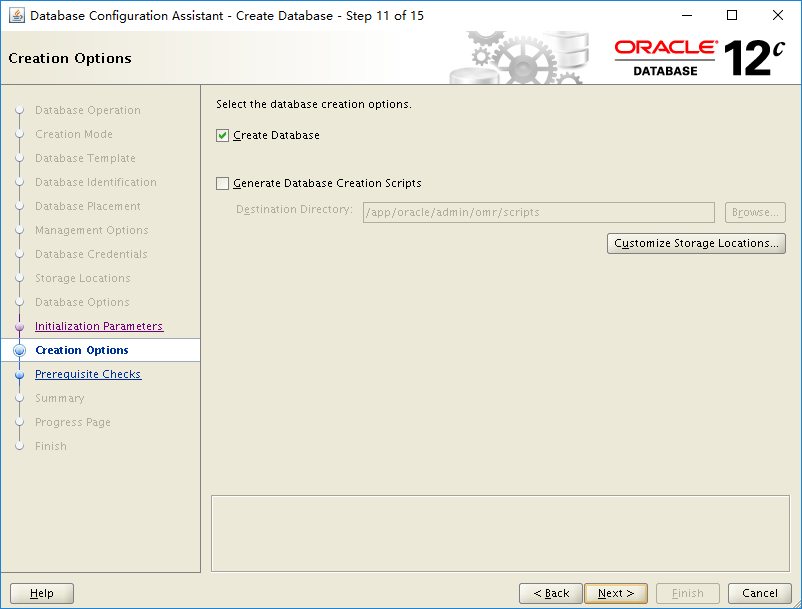

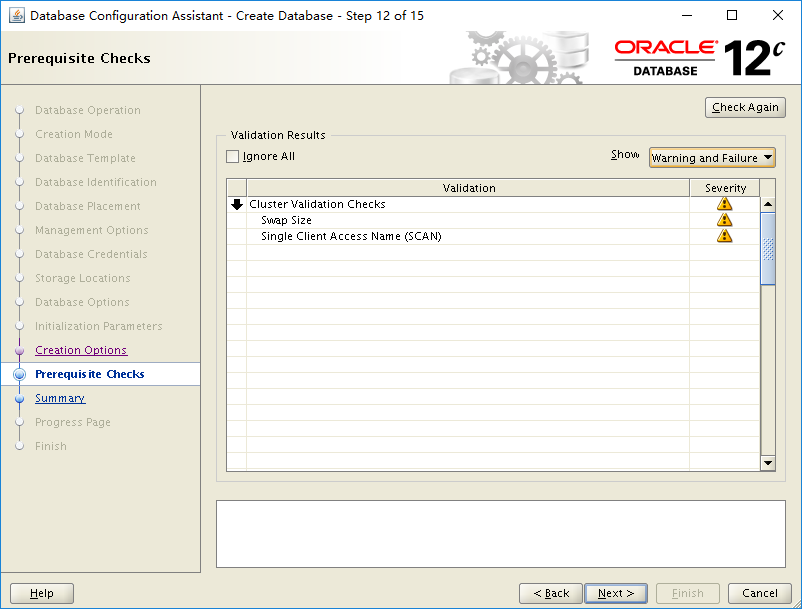

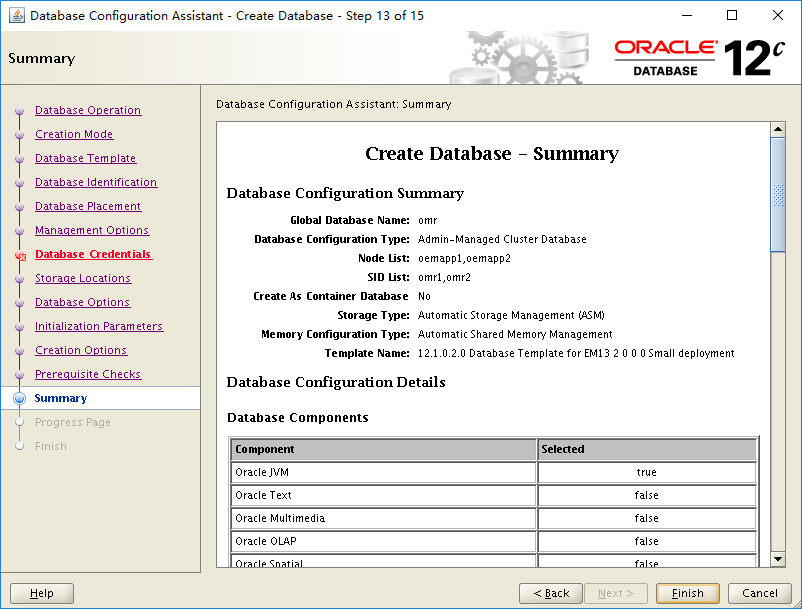

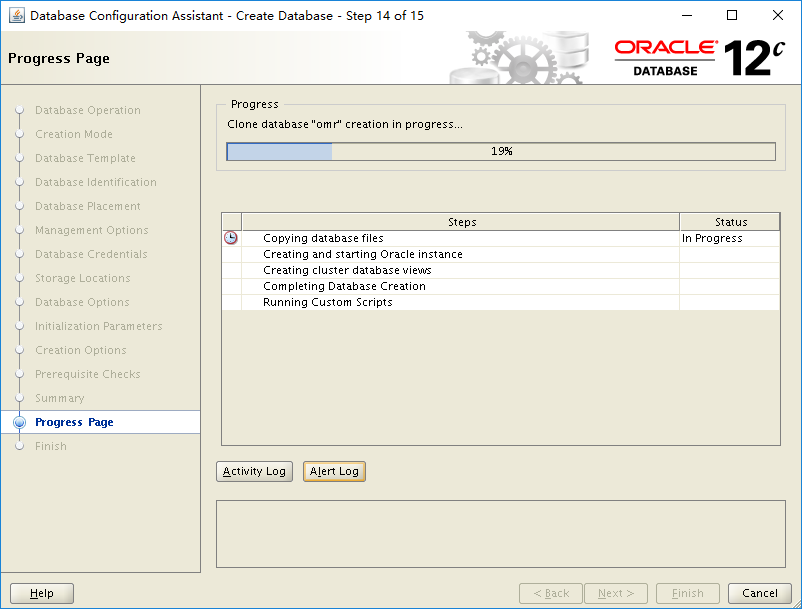

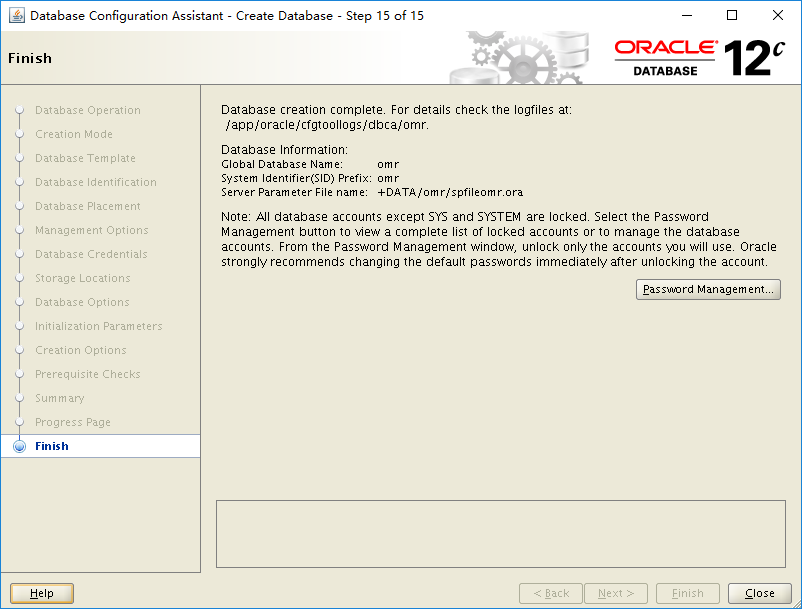

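3.5 Creating the Database with DBCA from the Template

Unzip the template file into the templates directory so that DBCA can offer it as a choice:

[oracle@oemapp1 media]$ unzip 12.1.0.2.0_Database_Template_for_EM13_2_0_0_0_Linux_x64.zip -d /app/oracle/product/12.1.0.2/db_1/assistants/dbca/templates

DBCA database creation steps:

Note: AL32UTF8 is strongly recommended as the database character set; the OMS configuration later on prompts about it.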

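4. OMS Cluster Installation

The OMS cluster in this deployment must run in Active-Active mode, with an SLB in front of it for load balancing.

4.1 Environment Preparation

The following preparation steps are carried out on both OMS nodes.

Add to the oracle user's environment variables:

#OMS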

export OMS_HOME=$ORACLE_BASE/oms_local/middleware

export AGENT_HOME=$ORACLE_BASE/oms_local/agent/agent_13.2.0.0.0

Create the directories:

su - oracle

mkdir -p /app/oracle/oms_local/agent

mkdir -p /app/oracle/oms_local/middleware

Revise /etc/hosts so that the host names meet OEMCC's requirements (optional):

#public ip

10.1.43.211 oemapp1 oemapp1.oracle.com

10.1.43.212 oemapp2 oemapp2.oracle.com

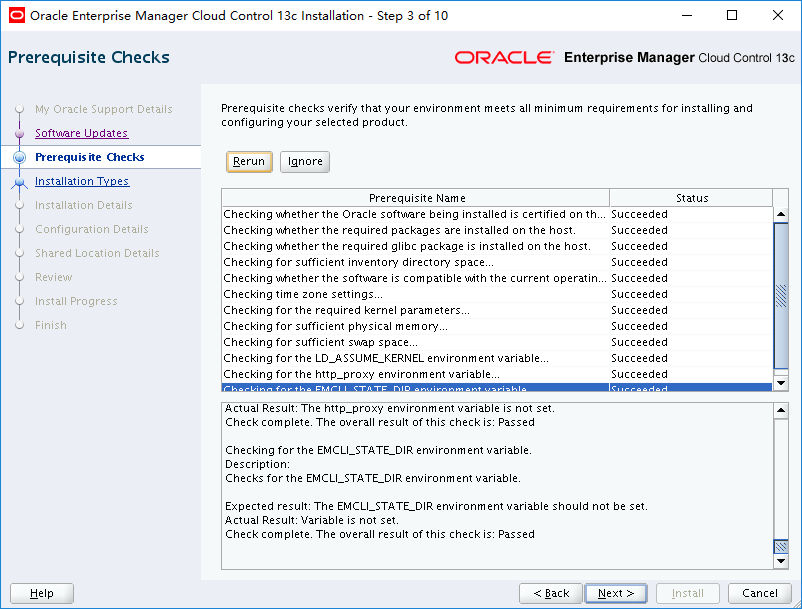

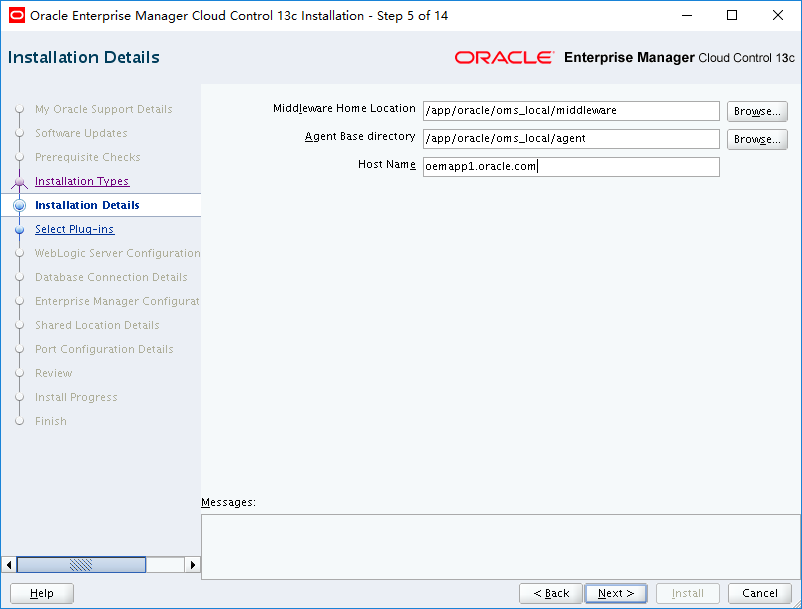

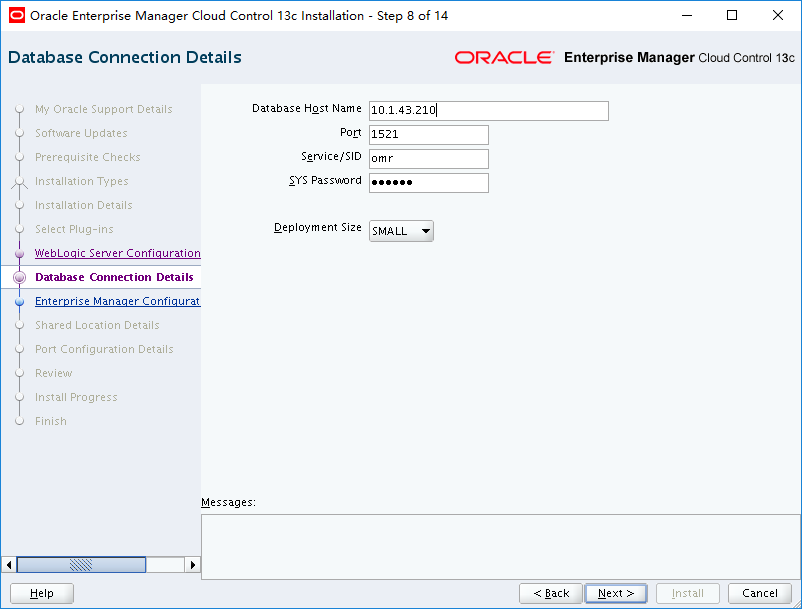

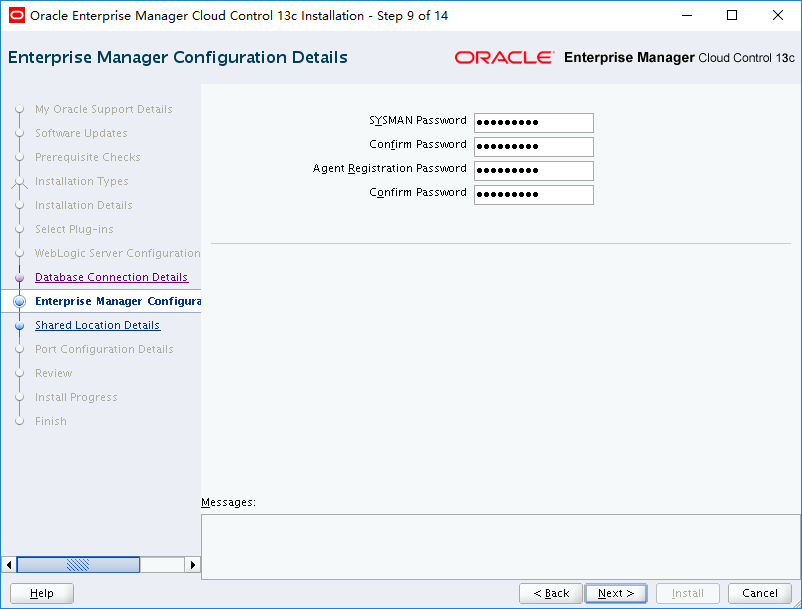

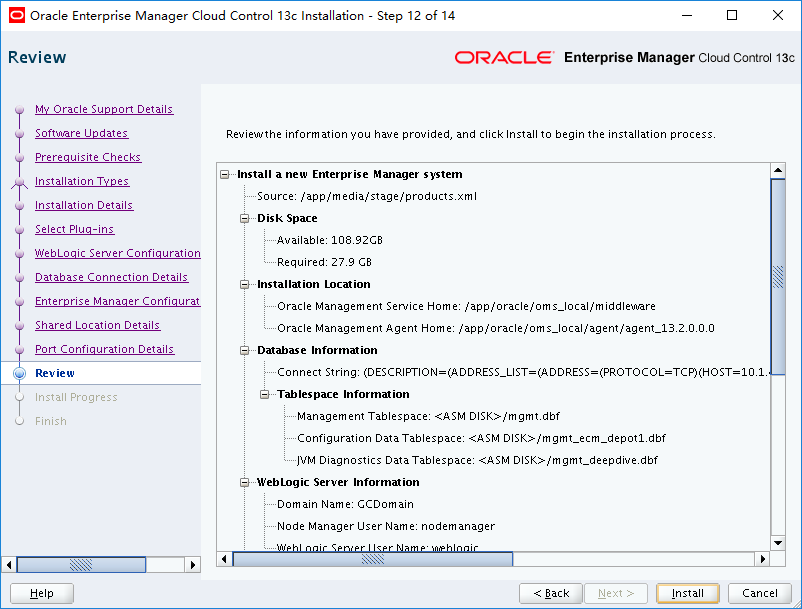

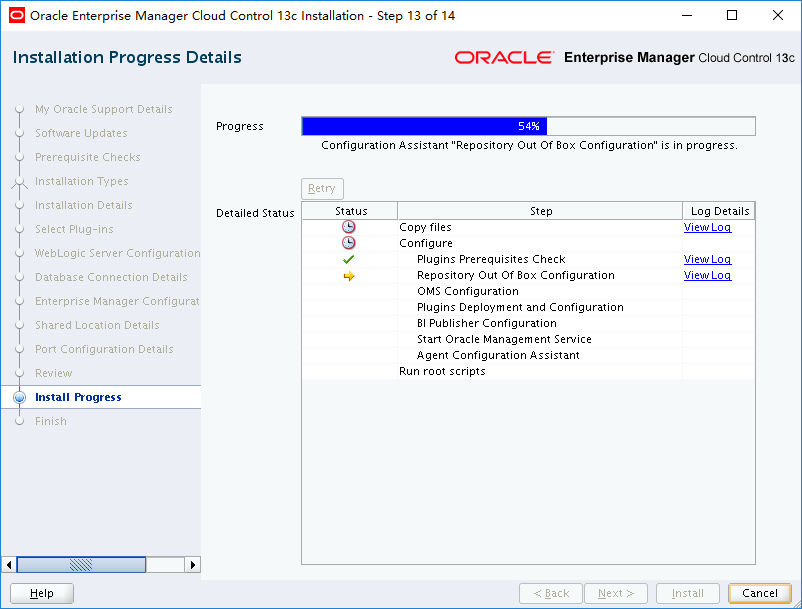

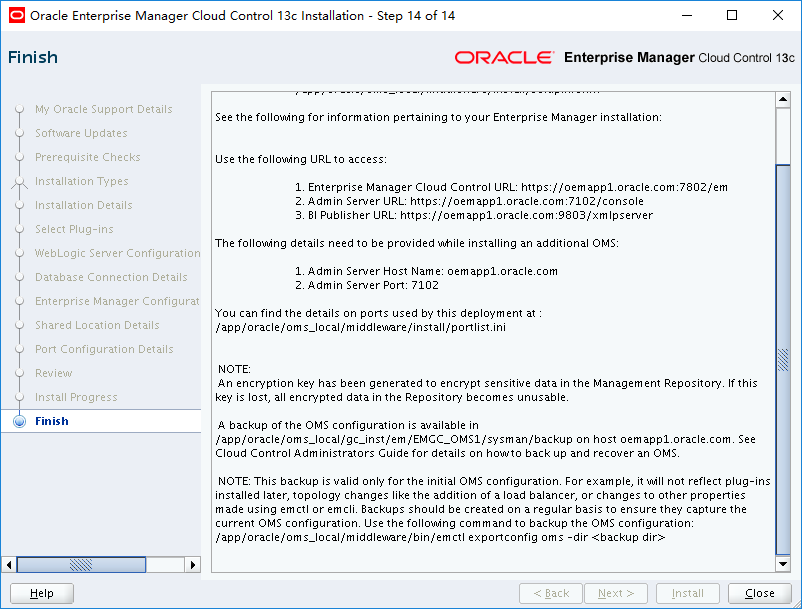

4.2 Installing the Primary Node

Start the installation:

su - oracle

export DISPLAY=10.1.52.76:0.0

./em13200p1_linux64.bin

Installation steps:

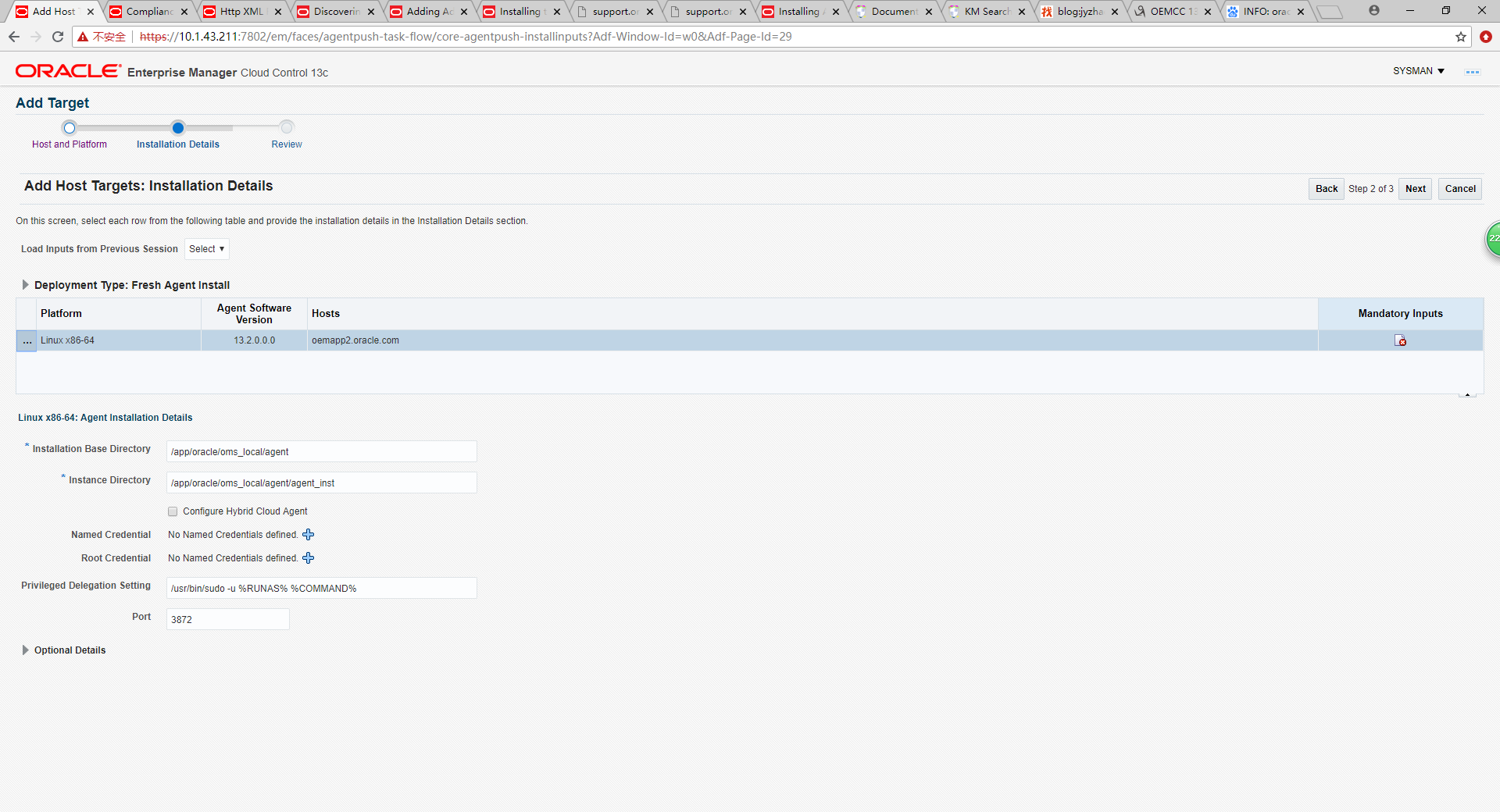

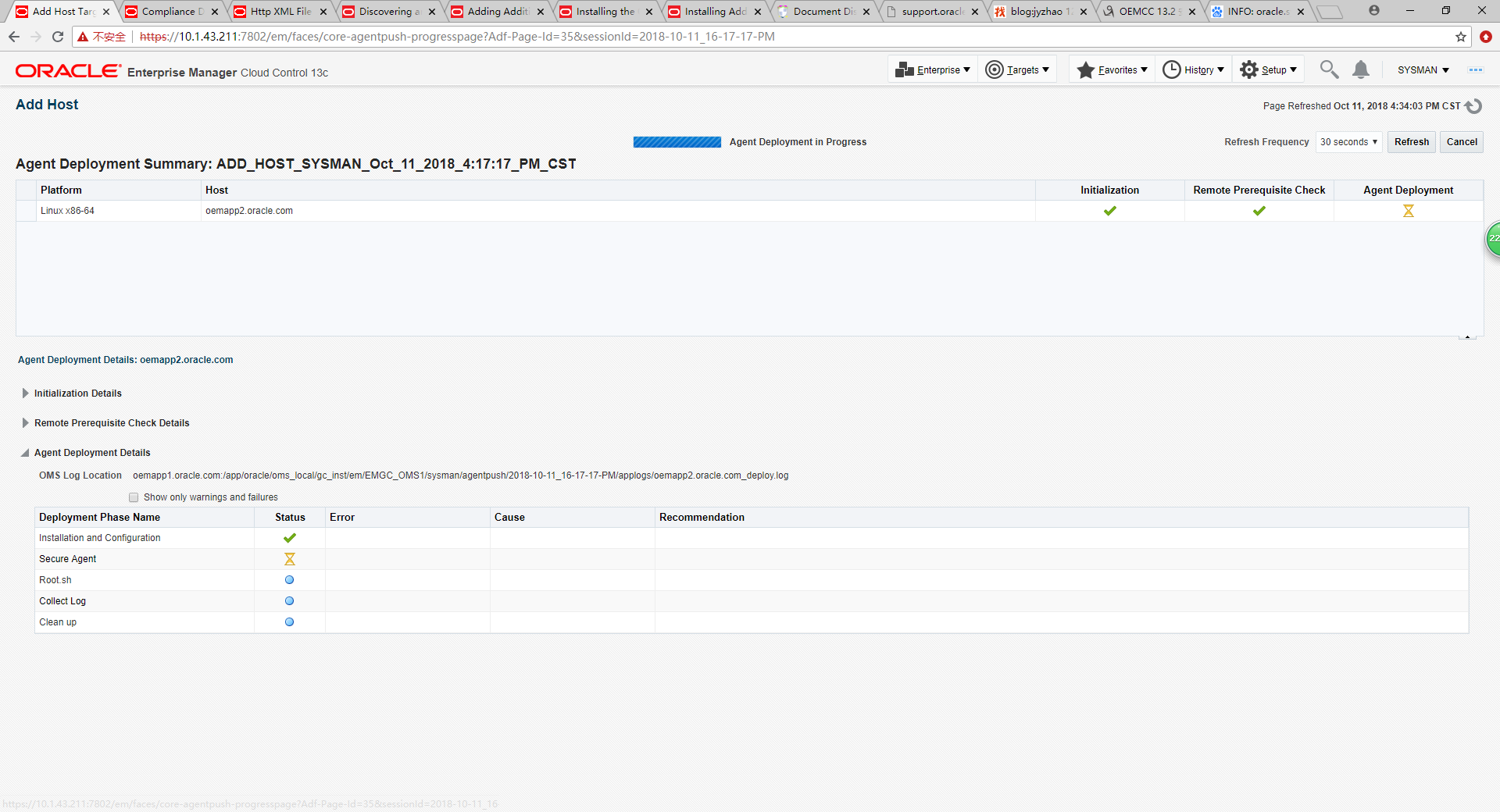

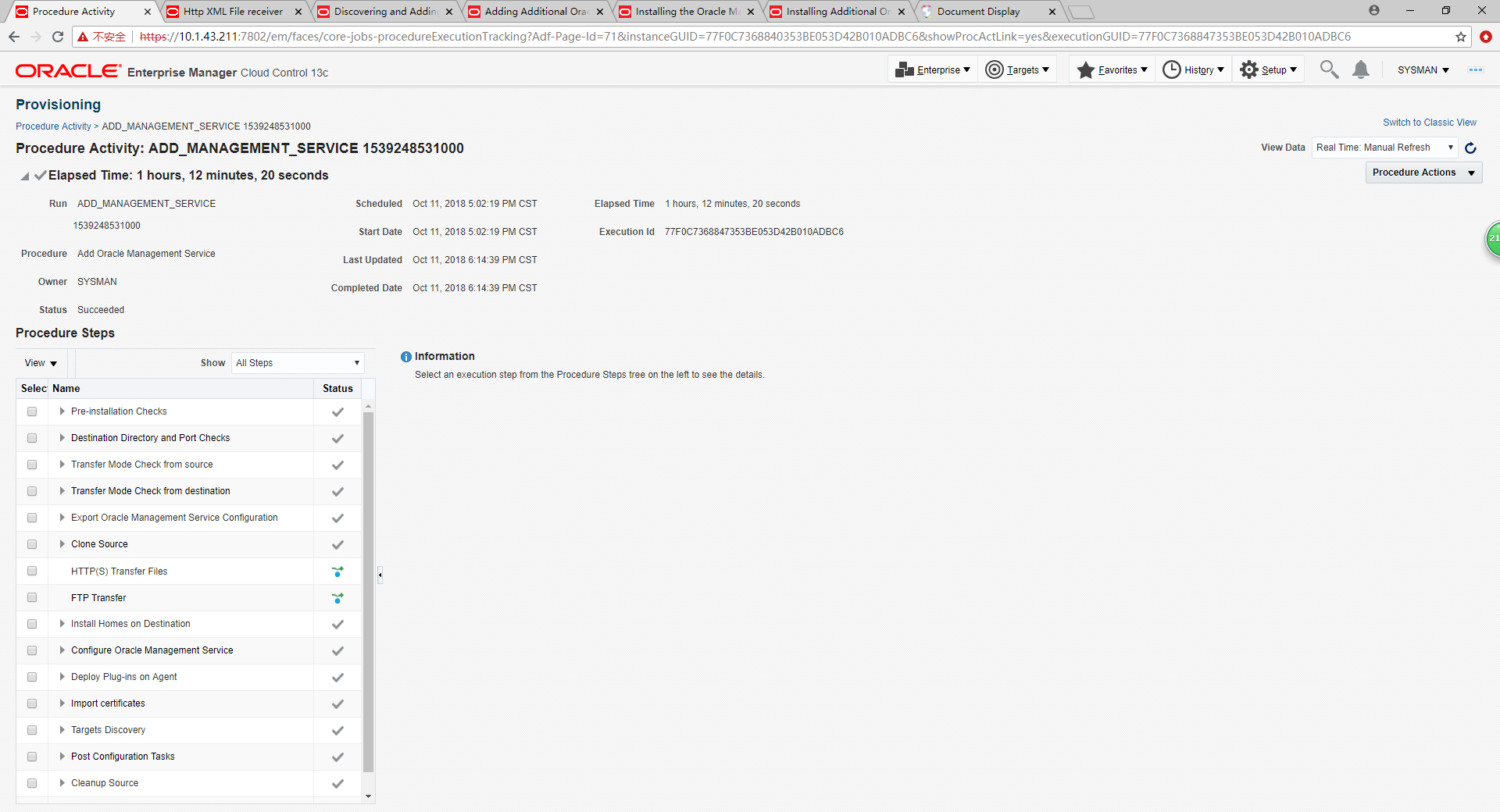

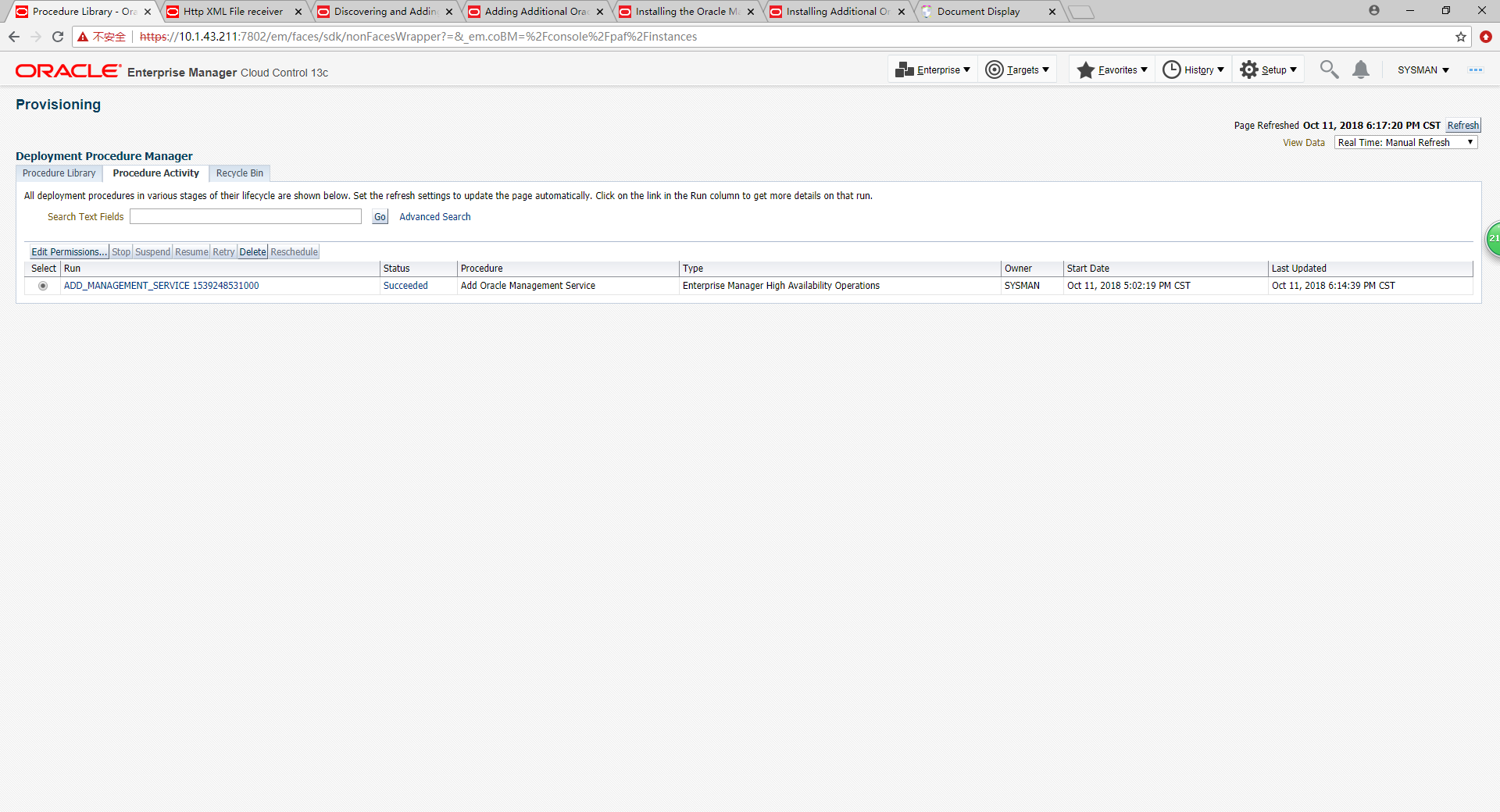

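4.3 Adding the OMS Node

This section uses OEMCC itself to add the second OMS node: first add the agent, then add the OMS node.

Notes:

1. /app/oracle/OMS is a shared file system;

2. /app/oracle/oms_local is a file system local to each node;

3. The processes parameter of the OMR database must be raised from the default of 300 to 600.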
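For note 3, processes is a static parameter, so the change goes into the spfile and takes effect only after the database instances are restarted. The sketch below just prints the statement so it can be reviewed before being piped to SQL*Plus; the function name `processes_sql` is hypothetical.

```shell
# processes_sql N
# Print the ALTER SYSTEM statement that sets processes to N for all
# RAC instances. Rejects anything that is not a plain positive integer.
processes_sql() {
    case $1 in
        ''|*[!0-9]*|0) echo "usage: processes_sql <positive integer>" >&2; return 1 ;;
    esac
    printf "ALTER SYSTEM SET processes=%s SCOPE=SPFILE SID='*';\n" "$1"
}
```

As the oracle user on one node, `processes_sql 600 | sqlplus -S "/ as sysdba"` would apply it, followed by a restart such as `srvctl stop database -d omr` and `srvctl start database -d omr`. The database name omr is an assumption based on the instance names omr1 and omr2 used earlier.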

-

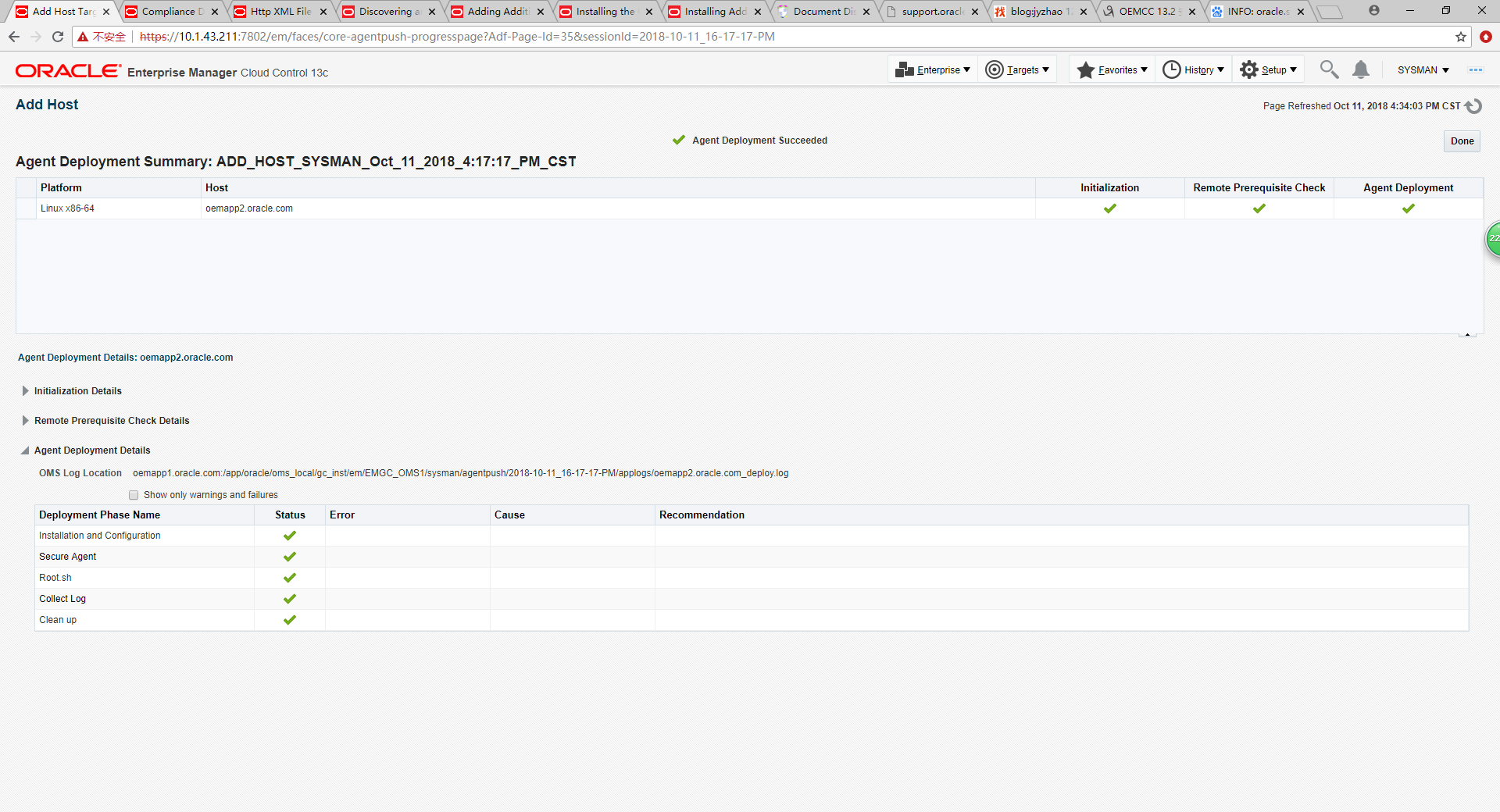

添加agent

-

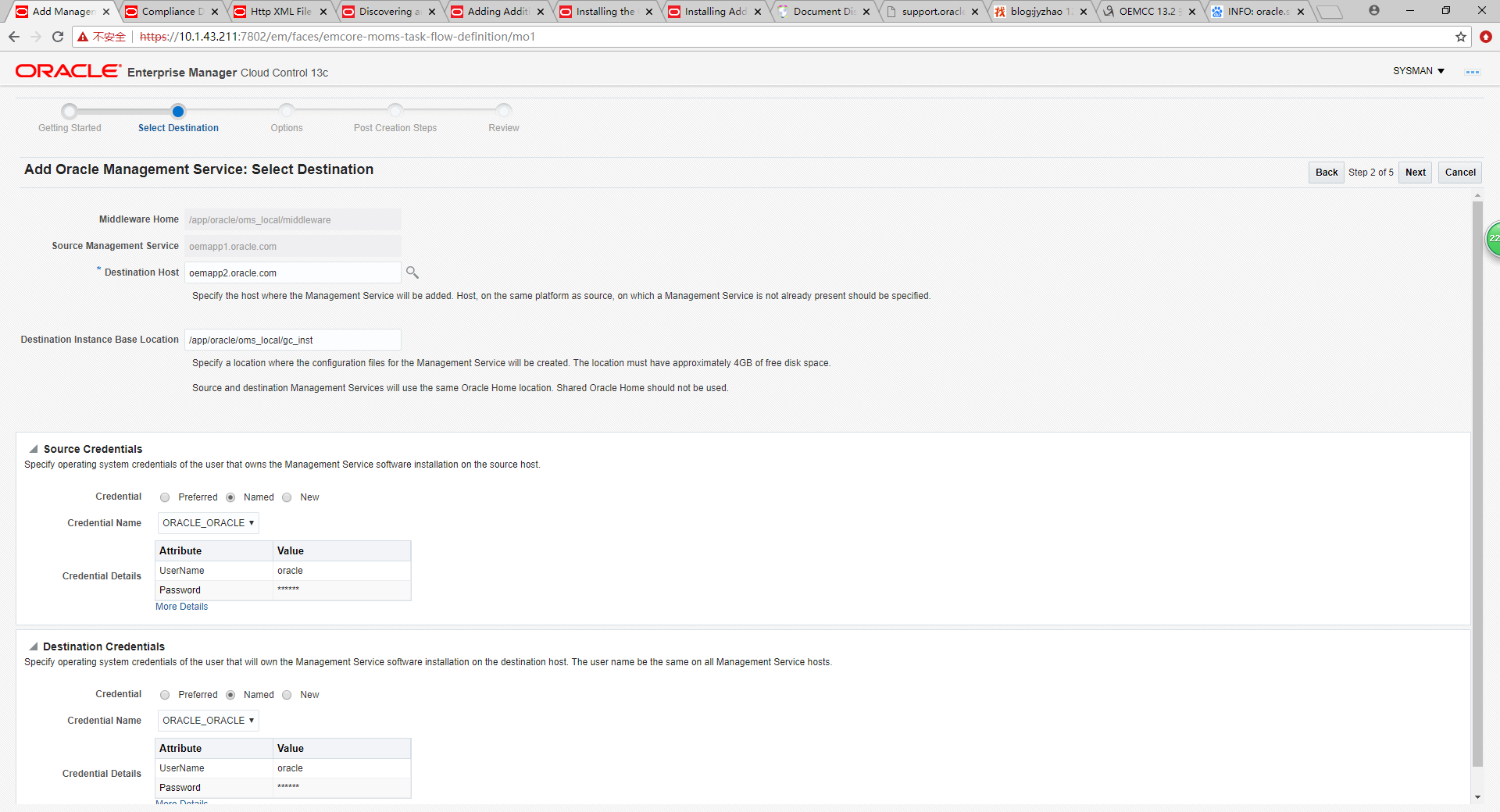

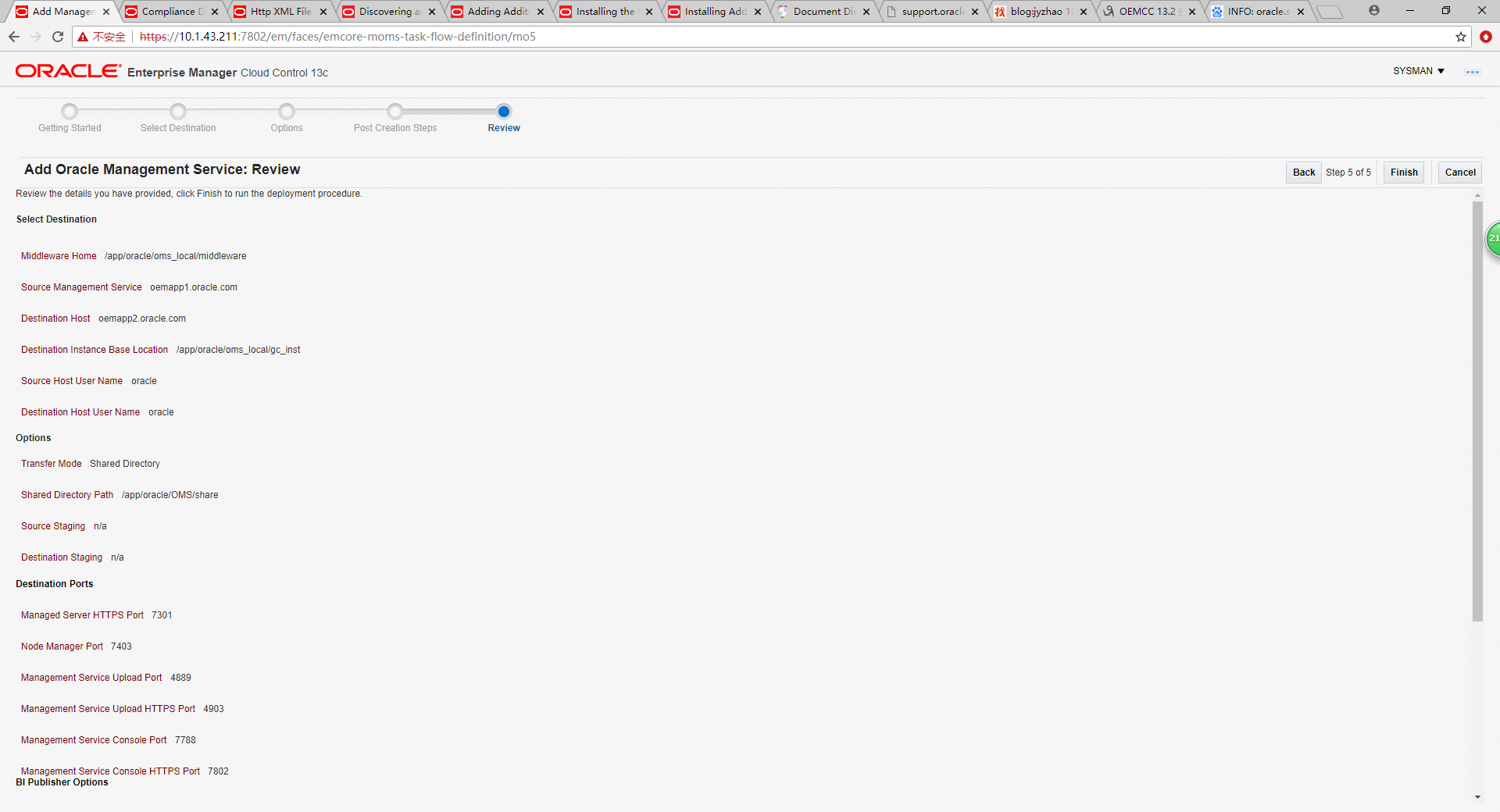

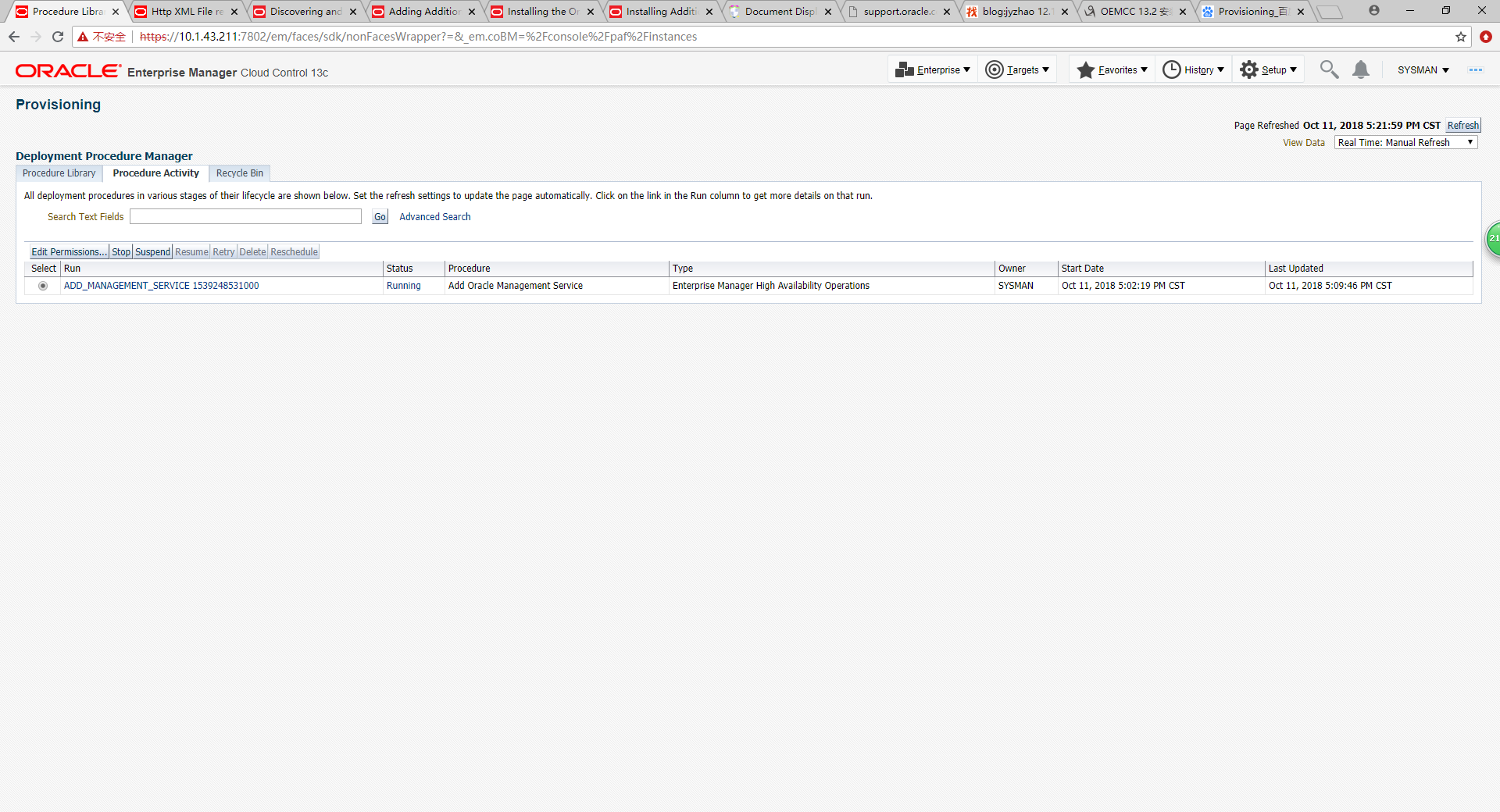

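Add the OMS node

Select Enterprise menu -> Provisioning and Patching -> Procedure Library.

Find Add Oracle Management Service and click Launch.

Note: Keep the OMS ports the same on every OMS node wherever possible, to avoid complicating later configuration and maintenance.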

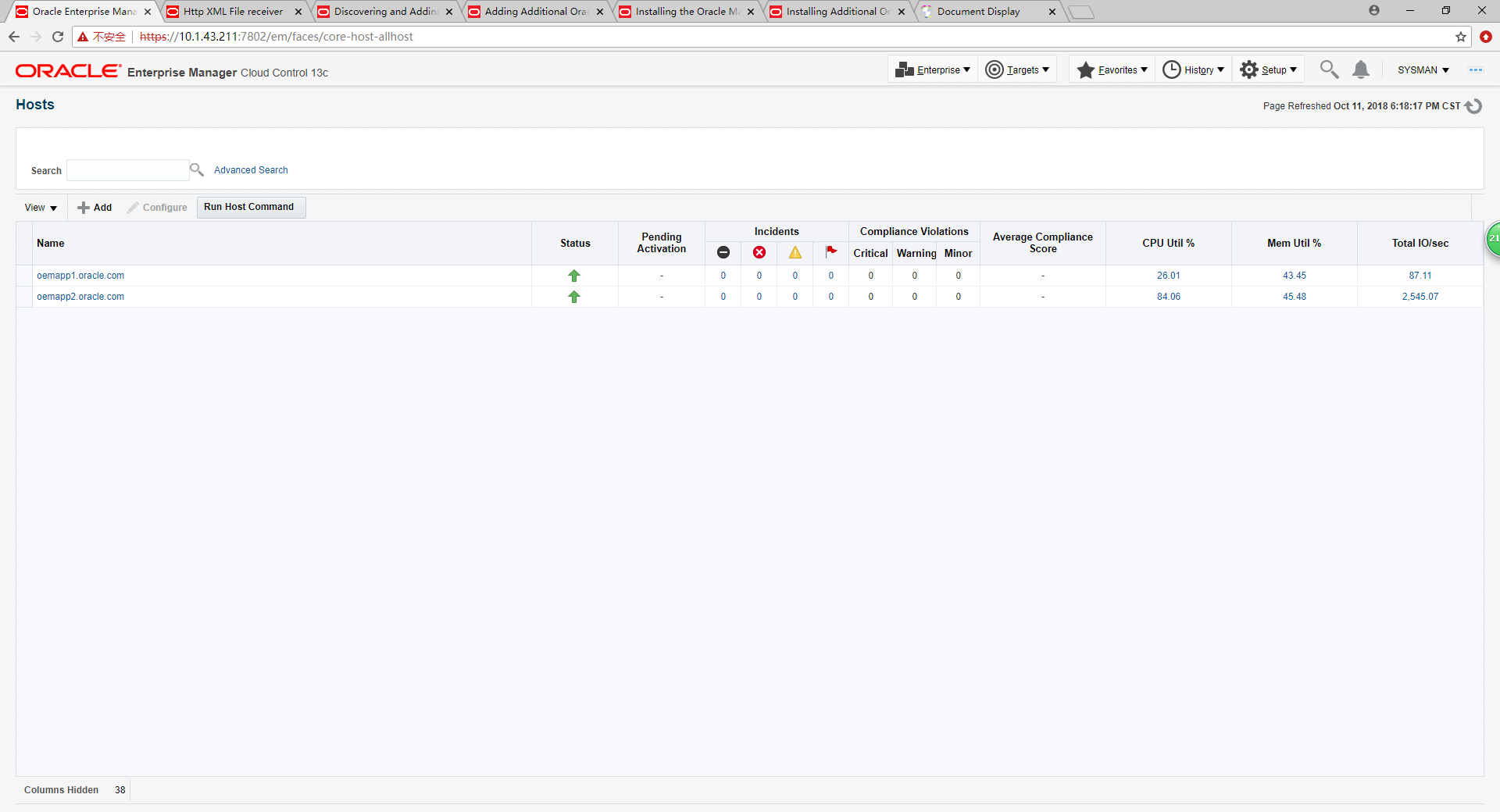

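4.4 Testing OMS High Availability

The OEMCC web console is reachable through the IP address of either node 1 or node 2:

Shutting down either node leaves access through the surviving node unaffected.

Appendix: commands to start, stop, and check the status of OMS:

--Check the OMS status

$OMS_HOME/bin/emctl status oms

$OMS_HOME/bin/emctl status oms -details

--Stop OMS

$OMS_HOME/bin/emctl stop oms

$OMS_HOME/bin/emctl stop oms -all

--Start OMS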

$OMS_HOME/bin/emctl start oms
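The availability test can also be run from the command line by probing the console on each node while one OMS is stopped. This is a rough sketch: the helper names are made up, and the default port 7802 is taken from the HTTPS Console Port that `emctl status oms -details` reports later in this post, so it may differ in other installations.

```shell
# console_url HOST [PORT]
# Build the console URL for one OMS node (default port 7802, an assumption
# based on the status output in this post).
console_url() {
    printf 'https://%s:%s/em\n' "$1" "${2:-7802}"
}

# probe_console HOST...
# Print the HTTP status code returned by each node's console.
# -k skips certificate verification, which is acceptable only for a quick
# test against the self-signed certificates of a fresh install.
probe_console() {
    for host in "$@"; do
        url=$(console_url "$host")
        code=$(curl -k -s -o /dev/null --max-time 10 -w '%{http_code}' "$url") || code=000
        echo "$host $url $code"
    done
}
```

Running `probe_console 10.1.43.211 10.1.43.212` before and after `emctl stop oms` on one node shows which consoles still answer; a code of 000 means no HTTP response was received.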

5. SLB Configuration

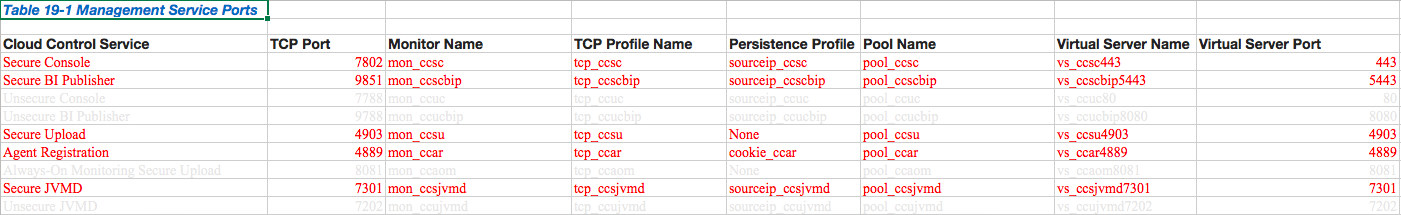

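The load balancer used here is a Radware product, and this part has to be configured by a load balancer engineer. The configuration requirements below were compiled from the official Oracle documentation and the needs of this project, for reference:

Other specific items, such as Monitors, Pools, and Required Virtual Servers, can be planned on this basis together with the official documentation and are not covered further here.

Add name resolution for the load balancer address to /etc/hosts: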

10.1.44.207 myslb.oracle.com

Once the SLB is configured, OMS has to be reconfigured to match.

Configure OMS:

$OMS_HOME/bin/emctl secure oms -host myslb.oracle.com -secure_port 4903 -slb_port 4903 -slb_console_port 443 -slb_bip_https_port 5443 -slb_jvmd_https_port 7301 -lock_console -lock_upload

Configure the agent:

$AGENT_HOME/bin/emctl secure agent -emdWalletSrcUrl https://myslb.oracle.com:4903/em

Check the OMS status:

[oracle@oemapp1 backup]$ $OMS_HOME/bin/emctl status oms -details

Oracle Enterprise Manager Cloud Control 13c Release 2

Copyright (c) 1996, 2016 Oracle Corporation. All rights reserved.

Enter Enterprise Manager Root (SYSMAN) Password :

Console Server Host : oemapp1.oracle.com

HTTP Console Port : 7788

HTTPS Console Port : 7802

HTTP Upload Port : 4889

HTTPS Upload Port : 4903

EM Instance Home : /app/oracle/oms_local/gc_inst/em/EMGC_OMS1

OMS Log Directory Location : /app/oracle/oms_local/gc_inst/em/EMGC_OMS1/sysman/log

SLB or virtual hostname: myslb.oracle.com

HTTPS SLB Upload Port : 4903

HTTPS SLB Console Port : 443

HTTPS SLB JVMD Port : 7301

Agent Upload is locked.

OMS Console is locked.

Active CA ID: 1

Console URL: https://myslb.oracle.com:443/em

Upload URL: https://myslb.oracle.com:4903/empbs/upload

WLS Domain Information

Domain Name : GCDomain

Admin Server Host : oemapp1.oracle.com

Admin Server HTTPS Port: 7102

Admin Server is RUNNING

Oracle Management Server Information

Managed Server Instance Name: EMGC_OMS1

Oracle Management Server Instance Host: oemapp1.oracle.com

WebTier is Up

Oracle Management Server is Up

JVMD Engine is Up

BI Publisher Server Information

BI Publisher Managed Server Name: BIP

BI Publisher Server is Up

BI Publisher HTTP Managed Server Port : 9701

BI Publisher HTTPS Managed Server Port : 9803

BI Publisher HTTP OHS Port : 9788

BI Publisher HTTPS OHS Port : 9851

BI Publisher HTTPS SLB Port : 5443

BI Publisher is locked.

BI Publisher Server named 'BIP' running at URL: https://myslb.oracle.com:5443/xmlpserver

BI Publisher Server Logs: /app/oracle/oms_local/gc_inst/user_projects/domains/GCDomain/servers/BIP/logs/

BI Publisher Log : /app/oracle/oms_local/gc_inst/user_projects/domains/GCDomain/servers/BIP/logs/bipublisher/bipublisher.log
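Scanning this output by eye on two nodes is easy to get wrong, so a small filter can look for the component status lines shown above. This is a sketch only: the function name `oms_health` is hypothetical, and the list of expected lines is copied from this transcript, so other versions or configurations may print different text.

```shell
# oms_health
# Read `emctl status oms -details` output on stdin and print each expected
# status line that is missing. Returns 0 only if all of them are present.
oms_health() {
    out=$(cat)
    rc=0
    for want in "Admin Server is RUNNING" "WebTier is Up" \
                "Oracle Management Server is Up" "JVMD Engine is Up" \
                "BI Publisher Server is Up"; do
        case $out in
            *"$want"*) ;;
            *) echo "MISSING: $want"; rc=1 ;;
        esac
    done
    return $rc
}
```

Since the command prompts for the SYSMAN password, one option is to save its output to a file first and then run `oms_health < status.txt` (the file name is just an example).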

Check the agent status:

[oracle@oemapp1 backup]$ $AGENT_HOME/bin/emctl status agent

Oracle Enterprise Manager Cloud Control 13c Release 2

Copyright (c) 1996, 2016 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 13.2.0.0.0

OMS Version : 13.2.0.0.0

Protocol Version : 12.1.0.1.0

Agent Home : /app/oracle/oms_local/agent/agent_inst

Agent Log Directory : /app/oracle/oms_local/agent/agent_inst/sysman/log

Agent Binaries : /app/oracle/oms_local/agent/agent_13.2.0.0.0

Core JAR Location : /app/oracle/oms_local/agent/agent_13.2.0.0.0/jlib

Agent Process ID : 17263

Parent Process ID : 17060

Agent URL : https://oemapp1.oracle.com:3872/emd/main/

Local Agent URL in NAT : https://oemapp1.oracle.com:3872/emd/main/

Repository URL : https://myslb.oracle.com:4903/empbs/upload

Started at : 2018-10-12 15:49:58

Started by user : oracle

Operating System : Linux version 2.6.32-696.el6.x86_64 (amd64)

Number of Targets : 34

Last Reload : (none)

Last successful upload : 2018-10-12 15:50:53

Last attempted upload : 2018-10-12 15:50:53

Total Megabytes of XML files uploaded so far : 0.17

Number of XML files pending upload : 19

Size of XML files pending upload(MB) : 0.07

Available disk space on upload filesystem : 63.80%

Collection Status : Collections enabled

Heartbeat Status : Ok

Last attempted heartbeat to OMS : 2018-10-12 15:50:33

Last successful heartbeat to OMS : 2018-10-12 15:50:33

Next scheduled heartbeat to OMS : 2018-10-12 15:51:35

---------------------------------------------------------------

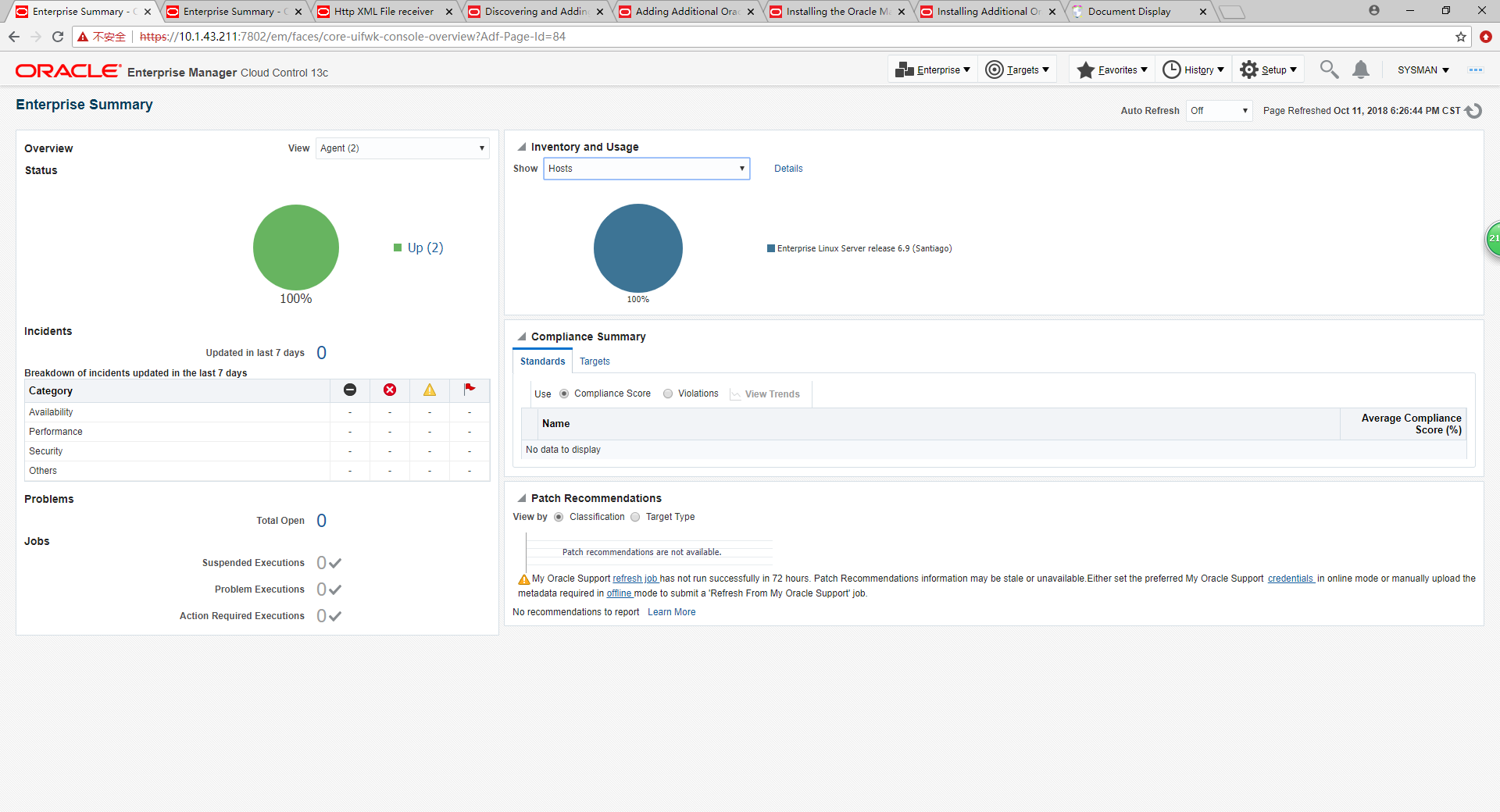

Agent is Running and Ready
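The line that matters most after the SLB change is Repository URL, which should now point at the load balancer rather than at an individual OMS host. The sketch below pulls a single field out of the agent status output and checks that one; `agent_field` and `uploads_via_slb` are hypothetical names.

```shell
# agent_field NAME
# Read `emctl status agent` output on stdin and print the value of the
# first "NAME : value" line.
agent_field() {
    awk -v k="$1" '
        {
            i = index($0, " : ")
            if (i == 0) next
            key = substr($0, 1, i - 1)
            sub(/^[ \t]+/, "", key); sub(/[ \t]+$/, "", key)
            if (key != k) next
            val = substr($0, i + 3)
            sub(/^[ \t]+/, "", val)
            print val
            exit
        }'
}

# uploads_via_slb SLB_HOST
# Succeed only if the Repository URL read from stdin points at SLB_HOST.
uploads_via_slb() {
    url=$(agent_field "Repository URL")
    case $url in
        "https://$1:"*|"https://$1/"*) return 0 ;;
        *) echo "agent uploads to: ${url:-unknown}"; return 1 ;;
    esac
}
```

For example, `$AGENT_HOME/bin/emctl status agent | uploads_via_slb myslb.oracle.com` returns success for the output shown above.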

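As a final test, OEMCC can be reached directly through the load balancer address 10.1.44.207 and operates normally:

This completes the OEMCC 13.2 cluster installation.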