Raft共识算法

寻找一种易于理解的共识算法【In Search of an Understandable Consensus Algorithm】

摘要【Abstract】

Raft是一种用于管理复制日志的共识算法。它产生的结果与(multi-)Paxos等价,效率也与Paxos相当,但其结构与Paxos不同;这使得Raft比Paxos更易于理解,也为构建实际系统提供了更好的基础。为了增强可理解性,Raft将共识的关键要素(如领导者选举、日志复制和安全性)相互分离,并强制实施更强的一致性,以减少必须考虑的状态数量。一项用户研究的结果表明,对学生而言,Raft比Paxos更容易学习。Raft还包括一种用于变更集群成员的新机制,它利用重叠的多数派来保证安全性。

Raft is a consensus algorithm for managing a replicated log. It produces a result equivalent to (multi-)Paxos, and it is as efficient as Paxos, but its structure is different from Paxos; this makes Raft more understandable than Paxos and also provides a better foundation for building practical systems. In order to enhance understandability, Raft separates the key elements of consensus, such as leader election, log replication, and safety, and it enforces a stronger degree of coherency to reduce the number of states that must be considered. Results from a user study demonstrate that Raft is easier for students to learn than Paxos. Raft also includes a new mechanism for changing the cluster membership, which uses overlapping majorities to guarantee safety.

1 介绍【Introduction】

共识算法使一组机器能够作为一个协调一致的整体工作,并在部分成员发生故障时继续运行。正因为如此,它们在构建可靠的大规模软件系统中发挥着关键作用。在过去十年中,Paxos [13, 14]主导了关于共识算法的讨论:大多数共识实现都基于Paxos或受其影响,Paxos也已成为向学生讲授共识的主要工具。

Consensus algorithms allow a collection of machines to work as a coherent group that can survive the failures of some of its members. Because of this, they play a key role in building reliable large-scale software systems. Paxos [13, 14] has dominated the discussion of consensus algorithms over the last decade: most implementations of consensus are based on Paxos or influenced by it, and Paxos has become the primary vehicle used to teach students about consensus.

遗憾的是,尽管已有许多尝试使Paxos变得更加平易近人,它仍然相当难以理解。此外,要支持实际系统,其架构需要进行复杂的修改。结果,无论是系统构建者还是学生,都在Paxos面前苦苦挣扎。

Unfortunately, Paxos is quite difficult to understand, in spite of numerous attempts to make it more approachable. Furthermore, its architecture requires complex changes to support practical systems. As a result, both system builders and students struggle with Paxos.

我们自己在Paxos上苦苦挣扎之后,开始寻找一种新的共识算法,希望它能为系统构建和教学提供更好的基础。我们的方法不同寻常,因为首要目标是可理解性:我们能否为实际系统定义一个共识算法,并以一种比Paxos明显更容易学习的方式来描述它?此外,我们希望该算法有助于培养系统构建者必不可少的直觉。重要的不仅是算法能够正确工作,还要让人一眼就能看出它为什么能够工作。

After struggling with Paxos ourselves, we set out to find a new consensus algorithm that could provide a better foundation for system building and education. Our approach was unusual in that our primary goal was understandability: could we define a consensus algorithm for practical systems and describe it in a way that is significantly easier to learn than Paxos? Furthermore, we wanted the algorithm to facilitate the development of intuitions that are essential for system builders. It was important not just for the algorithm to work, but for it to be obvious why it works.

这项工作的成果就是名为Raft的共识算法。在设计Raft时,我们采用了一些特定的技术来提高可理解性,包括分解(Raft将领导者选举、日志复制和安全性相互分离)和状态空间缩减(相对于Paxos,Raft降低了非确定性的程度,也减少了服务器之间可能出现不一致的方式)。一项针对两所大学43名学生的用户研究表明,Raft明显比Paxos更容易理解:在学习了这两种算法之后,其中33名学生回答Raft相关问题的表现优于回答Paxos相关问题的表现。

The result of this work is a consensus algorithm called Raft. In designing Raft we applied specific techniques to improve understandability, including decomposition (Raft separates leader election, log replication, and safety) and state space reduction (relative to Paxos, Raft reduces the degree of nondeterminism and the ways servers can be inconsistent with each other). A user study with 43 students at two universities shows that Raft is significantly easier to understand than Paxos: after learning both algorithms, 33 of these students were able to answer questions about Raft better than questions about Paxos.

Raft在很多方面与现有的共识算法相似(最值得注意的是Oki和Liskov的Viewstamped Replication [27, 20]),但它有几个新颖的特性:

Raft is similar in many ways to existing consensus algorithms (most notably, Oki and Liskov’s Viewstamped Replication [27, 20]), but it has several novel features:

- 强领导者: Raft采用了比其他共识算法更强的领导形式。例如,日志条目只从领导者流向其他服务器。这简化了复制日志的管理,也使Raft更容易理解。

- Strong leader: Raft uses a stronger form of leadership than other consensus algorithms. For example, log entries only flow from the leader to other servers. This simplifies the management of the replicated log and makes Raft easier to understand.

- 领导者选举: Raft使用随机计时器来选举领导者。这只需在任何共识算法都已需要的心跳机制上增加少量机制,同时能够简单、快速地解决冲突。

- Leader election: Raft uses randomized timers to elect leaders. This adds only a small amount of mechanism to the heartbeats already required for any consensus algorithm, while resolving conflicts simply and rapidly.

- 成员变更: Raft变更集群中服务器集合的机制采用了一种新的联合共识(joint consensus)方法,在转换期间,两种不同配置的多数派相互重叠。这使得集群在配置变更期间能够继续正常运行。

- Membership changes: Raft’s mechanism for changing the set of servers in the cluster uses a new joint consensus approach where the majorities of two different configurations overlap during transitions. This allows the cluster to continue operating normally during configuration changes.

我们认为,无论是用于教学还是作为实现的基础,Raft都优于Paxos和其他共识算法。它比其他算法更简单、更容易理解;它的描述足够完整,能够满足实际系统的需要;它已有多个开源实现,并被多家公司使用;其安全性质已被形式化地描述和证明;其效率也与其他算法相当。

We believe that Raft is superior to Paxos and other consensus algorithms, both for educational purposes and as a foundation for implementation. It is simpler and more understandable than other algorithms; it is described completely enough to meet the needs of a practical system; it has several open-source implementations and is used by several companies; its safety properties have been formally specified and proven; and its efficiency is comparable to other algorithms.

论文的其余部分介绍了复制状态机问题(第2节),讨论了Paxos的优缺点(第3节),阐述了我们实现可理解性的总体方法(第4节),提出了Raft共识算法(第5至7节),评估了Raft(第8节),并讨论了相关工作(第9节)。由于篇幅所限,这里省略了Raft算法的部分内容,但可以在扩展版技术报告[29]中找到。这些补充材料描述了客户端如何与系统交互,以及如何回收Raft日志占用的空间。

The remainder of the paper introduces the replicated state machine problem (Section 2), discusses the strengths and weaknesses of Paxos (Section 3), describes our general approach to understandability (Section 4), presents the Raft consensus algorithm (Sections 5–7), evaluates Raft (Section 8), and discusses related work (Section 9). A few elements of the Raft algorithm have been omitted here because of space limitations, but they are available in an extended technical report [29]. The additional material describes how clients interact with the system, and how space in the Raft log can be reclaimed.

2 复制状态机【Replicated state machines】

共识算法通常出现在复制状态机的背景下[33]。在这种方法中,一组服务器上的状态机计算出相同状态的相同副本,即使部分服务器宕机也能继续运行。复制状态机被用来解决分布式系统中的各种容错问题。例如,具有单个集群领导者的大型系统,如GFS [7]、HDFS [34]和RAMCloud [30],通常使用一个单独的复制状态机来管理领导者选举,并存储必须在领导者崩溃后依然保留的配置信息。复制状态机的例子包括Chubby [2]和ZooKeeper [9]。

Consensus algorithms typically arise in the context of replicated state machines [33]. In this approach, state machines on a collection of servers compute identical copies of the same state and can continue operating even if some of the servers are down. Replicated state machines are used to solve a variety of fault tolerance problems in distributed systems. For example, large-scale systems that have a single cluster leader, such as GFS [7], HDFS [34], and RAMCloud [30], typically use a separate replicated state machine to manage leader election and store configuration information that must survive leader crashes. Examples of replicated state machines include Chubby [2] and ZooKeeper [9].

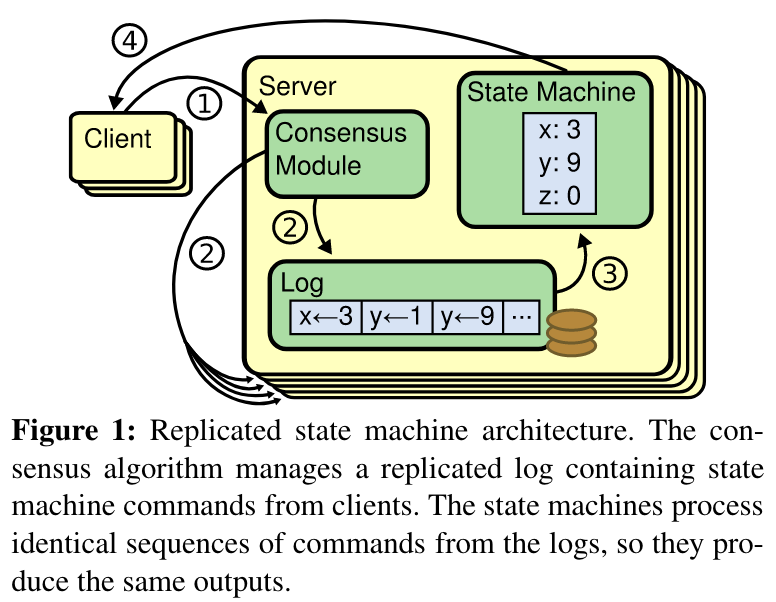

图1:复制状态机架构。共识算法管理一个复制日志,其中包含来自客户端的状态机命令。各状态机处理日志中相同的命令序列,因此会产生相同的输出。

复制状态机通常使用复制日志实现,如图1所示。每个服务器存储一个包含一系列命令的日志,其状态机按顺序执行这些命令。每个日志中包含的命令顺序相同,因此每个状态机处理相同的命令序列。由于状态机是确定性的,每个状态机计算相同的状态和相同的输出序列。

Replicated state machines are typically implemented using a replicated log, as shown in Figure 1. Each server stores a log containing a series of commands, which its state machine executes in order. Each log contains the same commands in the same order, so each state machine processes the same sequence of commands. Since the state machines are deterministic, each computes the same state and the same sequence of outputs.
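To make the determinism argument concrete, here is a minimal Python sketch. It assumes a hypothetical key-value state machine whose commands are `(op, key, value)` tuples; `KVStateMachine` and `replay` are illustrative names, not part of Raft. Replaying the same log, in the same order, on several independent copies yields identical state and identical output sequences.

```python
from typing import Dict, List, Tuple

# Hypothetical command format: (op, key, value), with op in {"set", "add"}.
Command = Tuple[str, str, int]


class KVStateMachine:
    """A deterministic key-value state machine (illustrative only)."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {}
        self.outputs: List[int] = []

    def apply(self, command: Command) -> int:
        op, key, value = command
        if op == "set":
            self.state[key] = value
        elif op == "add":
            self.state[key] = self.state.get(key, 0) + value
        else:
            raise ValueError(f"unknown op {op!r}")
        result = self.state[key]
        self.outputs.append(result)  # the output returned for this command
        return result


def replay(log: List[Command]) -> KVStateMachine:
    """Apply every command in log order, as each server's state machine does."""
    sm = KVStateMachine()
    for command in log:
        sm.apply(command)
    return sm


log = [("set", "x", 1), ("add", "x", 2), ("set", "y", 7)]
replicas = [replay(log) for _ in range(3)]
print(replicas[0].state, replicas[0].outputs)  # {'x': 3, 'y': 7} [1, 3, 7]
```

Determinism is what makes this work: if `apply` read a clock or a random number, the replicas could diverge even with identical logs.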

保持复制日志的一致性是共识算法的职责。服务器上的共识模块接收来自客户端的命令并将其添加到自己的日志中。它与其他服务器上的共识模块通信,以确保即使某些服务器发生故障,每个日志最终也都以相同的顺序包含相同的请求。一旦命令被正确复制,每个服务器的状态机就按日志顺序处理这些命令,并将输出返回给客户端。因此,这些服务器看起来就像一个单一的、高度可靠的状态机。

Keeping the replicated log consistent is the job of the consensus algorithm. The consensus module on a server receives commands from clients and adds them to its log. It communicates with the consensus modules on other servers to ensure that every log eventually contains the same requests in the same order, even if some servers fail. Once commands are properly replicated, each server’s state machine processes them in log order, and the outputs are returned to clients. As a result, the servers appear to form a single, highly reliable state machine.

用于实际系统的共识算法通常具有以下属性:

Consensus algorithms for practical systems typically have the following properties:

- 它们在所有非拜占庭条件下都能确保安全性(从不返回错误的结果),这些条件包括网络延迟、网络分区,以及数据包丢失、重复和乱序。

- They ensure safety (never returning an incorrect result) under all non-Byzantine conditions, including network delays, partitions, and packet loss, duplication, and reordering.

- 只要任意多数服务器正常运行,并且能够相互通信以及与客户端通信,它们就是完全可用的。因此,一个典型的五台服务器集群可以容忍任意两台服务器发生故障。假设服务器的故障方式是停止运行;它们之后可以从稳定存储中的状态恢复并重新加入集群。

- They are fully functional (available) as long as any majority of the servers are operational and can communicate with each other and with clients. Thus, a typical cluster of five servers can tolerate the failure of any two servers. Servers are assumed to fail by stopping; they may later recover from state on stable storage and rejoin the cluster.

- 它们不依赖时序来确保日志的一致性:错误的时钟和极端的消息延迟在最坏情况下也只会导致可用性问题。

- They do not depend on timing to ensure the consistency of the logs: faulty clocks and extreme message delays can, at worst, cause availability problems.

- 在通常情况下,只要集群中的多数服务器响应了一轮远程过程调用,一条命令就可以完成;少数运行缓慢的服务器不会影响系统的整体性能。

- In the common case, a command can complete as soon as a majority of the cluster has responded to a single round of remote procedure calls; a minority of slow servers need not impact overall system performance.
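The majority arithmetic behind the availability and performance properties above can be checked directly. The helper names below are hypothetical; `can_complete` assumes, for this sketch, that the leader counts its own copy as one of the responses. Because a majority is more than half, any two majorities of the same cluster share at least one server.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that is a majority of the cluster."""
    if cluster_size < 1:
        raise ValueError("a cluster needs at least one server")
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Servers that may fail (stop) while some majority is still operational."""
    return cluster_size - majority(cluster_size)


def can_complete(responses: int, cluster_size: int) -> bool:
    """A command can complete once a majority (leader included) has responded."""
    return responses >= majority(cluster_size)


for n in (1, 3, 4, 5, 7):
    print(n, majority(n), tolerated_failures(n))
# the n = 5 row prints "5 3 2": a majority is 3, so any 2 servers may fail
```

Note that a four-server cluster tolerates only one failure, the same as three servers, which is one reason odd cluster sizes are typical.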

3 Paxos的问题【What's wrong with Paxos?】

近十年来,莱斯利·兰伯特(Leslie Lamport)的Paxos协议[13]几乎已成为共识的代名词:它是课程中讲授最多的协议,大多数共识实现都以它为起点。Paxos首先定义了一个能够就单个决策(例如单个复制日志条目)达成一致的协议,我们把这个子集称为单法令Paxos(single-decree Paxos)。然后,Paxos将该协议的多个实例组合起来,以支持一系列决策,例如一个日志(multi-Paxos)。Paxos既保证安全性又保证活性,并且支持集群成员变更。其正确性已被证明,并且在正常情况下是高效的。

Over the last ten years, Leslie Lamport’s Paxos protocol [13] has become almost synonymous with consensus: it is the protocol most commonly taught in courses, and most implementations of consensus use it as a starting point. Paxos first defines a protocol capable of reaching agreement on a single decision, such as a single replicated log entry. We refer to this subset as single-decree Paxos. Paxos then combines multiple instances of this protocol to facilitate a series of decisions such as a log (multi-Paxos). Paxos ensures both safety and liveness, and it supports changes in cluster membership. Its correctness has been proven, and it is efficient in the normal case.

遗憾的是,Paxos有两个重大缺陷。第一个缺陷是Paxos异常难以理解。完整的解释[13]是出了名的晦涩;很少有人能理解它,即便理解也要付出巨大的努力。因此,人们多次尝试用更简单的术语来解释Paxos [14, 18, 19]。这些解释聚焦于单法令子集,但仍然颇具挑战性。在对NSDI 2012与会者进行的一次非正式调查中,我们发现即使在经验丰富的研究人员中,也很少有人能够自如地掌握Paxos。我们自己也在Paxos上苦苦挣扎;直到阅读了几篇简化的解释并设计了我们自己的替代协议之后,我们才理解了完整的协议,这个过程花了将近一年的时间。

Unfortunately, Paxos has two significant drawbacks. The first drawback is that Paxos is exceptionally difficult to understand. The full explanation [13] is notoriously opaque; few people succeed in understanding it, and only with great effort. As a result, there have been several attempts to explain Paxos in simpler terms [14, 18, 19]. These explanations focus on the single-decree subset, yet they are still challenging. In an informal survey of attendees at NSDI 2012, we found few people who were comfortable with Paxos, even among seasoned researchers. We struggled with Paxos ourselves; we were not able to understand the complete protocol until after reading several simplified explanations and designing our own alternative protocol, a process that took almost a year.

我们推测,Paxos的晦涩源于它选择单法令子集作为基础。单法令Paxos紧凑而微妙:它分为两个阶段,这两个阶段既没有简单直观的解释,也无法被独立理解。正因为如此,很难建立起关于单法令协议为什么有效的直觉。multi-Paxos的组合规则又显著增加了复杂性和微妙性。我们认为,就多个决策达成共识(即一个日志而不是单个条目)这一整体问题,可以用其他更直接、更明显的方式进行分解。

We hypothesize that Paxos’ opaqueness derives from its choice of the single-decree subset as its foundation. Single-decree Paxos is dense and subtle: it is divided into two stages that do not have simple intuitive explanations and cannot be understood independently. Because of this, it is difficult to develop intuitions about why the single-decree protocol works. The composition rules for multi-Paxos add significant additional complexity and subtlety. We believe that the overall problem of reaching consensus on multiple decisions (i.e., a log instead of a single entry) can be decomposed in other ways that are more direct and obvious.

Paxos的第二个问题是,它没有为构建实际的实现提供良好的基础。原因之一是,multi-Paxos没有一个被广泛认同的算法。兰伯特的描述大多是关于单法令Paxos的;他勾勒了实现multi-Paxos的可能途径,但缺失了许多细节。已经有一些充实和优化Paxos的尝试,例如[24]、[35]和[11],但它们彼此之间以及与兰伯特的草图都不相同。像Chubby [4]这样的系统已经实现了类似Paxos的算法,但在大多数情况下,其细节并未公开。

The second problem with Paxos is that it does not provide a good foundation for building practical implementations. One reason is that there is no widely agreed-upon algorithm for multi-Paxos. Lamport’s descriptions are mostly about single-decree Paxos; he sketched possible approaches to multi-Paxos, but many details are missing. There have been several attempts to flesh out and optimize Paxos, such as [24], [35], and [11], but these differ from each other and from Lamport’s sketches. Systems such as Chubby [4] have implemented Paxos-like algorithms, but in most cases their details have not been published.

此外,Paxos的架构不适合构建实际系统;这是单法令分解的另一个后果。例如,先独立地选出一组日志条目,再把它们合并成一个顺序日志,几乎没有什么好处,只会增加复杂性。围绕日志来设计系统更简单、也更高效,新条目按受约束的顺序依次追加。另一个问题是,Paxos的核心采用了对称的点对点方法(尽管它最终提出了一种较弱的领导形式作为性能优化)。这在只需做出一个决策的简化世界里是合理的,但很少有实际系统采用这种方法。如果必须做出一系列决策,那么先选举出一个领导者,再由领导者协调这些决策,会更简单、更快捷。

Furthermore, the Paxos architecture is a poor one for building practical systems; this is another consequence of the single-decree decomposition. For example, there is little benefit to choosing a collection of log entries independently and then melding them into a sequential log; this just adds complexity. It is simpler and more efficient to design a system around a log, where new entries are appended sequentially in a constrained order. Another problem is that Paxos uses a symmetric peer-to-peer approach at its core (though it eventually suggests a weak form of leadership as a performance optimization). This makes sense in a simplified world where only one decision will be made, but few practical systems use this approach. If a series of decisions must be made, it is simpler and faster to first elect a leader, then have the leader coordinate the decisions.

因此,实际系统与Paxos几乎没有相似之处。每个实现都从Paxos出发,发现实现它的种种困难,然后发展出一种截然不同的架构。这既费时又容易出错,而理解Paxos的困难又加剧了这个问题。Paxos的表述也许很适合用来证明关于其正确性的定理,但实际实现与Paxos相差太大,以至于这些证明几乎没有价值。下面这段来自Chubby实现者的评论很有代表性:

As a result, practical systems bear little resemblance to Paxos. Each implementation begins with Paxos, discovers the difficulties in implementing it, and then develops a significantly different architecture. This is time-consuming and error-prone, and the difficulties of understanding Paxos exacerbate the problem. Paxos’ formulation may be a good one for proving theorems about its correctness, but real implementations are so different from Paxos that the proofs have little value. The following comment from the Chubby implementers is typical:

Paxos算法的描述与现实世界系统的需求之间存在显著差距……最终的系统将基于一个未经证明的协议[4]。

There are significant gaps between the description of the Paxos algorithm and the needs of a real-world system. . . . the final system will be based on an unproven protocol [4].

正因为这些问题,我们得出结论:无论是对系统构建还是对教学,Paxos都没有提供良好的基础。鉴于共识在大规模软件系统中的重要性,我们决定尝试设计一种性质优于Paxos的替代共识算法。Raft就是这次尝试的成果。

Because of these problems, we concluded that Paxos does not provide a good foundation either for system building or for education. Given the importance of consensus in large-scale software systems, we decided to see if we could design an alternative consensus algorithm with better properties than Paxos. Raft is the result of that experiment.

4 为理解性设计【Designing for understandability】

我们在设计Raft时有几个目标:它必须为系统构建提供一个完整且实用的基础,从而显著减少开发人员所需的设计工作;它必须在所有条件下都是安全的,并且在典型的运行条件下是可用的;对于常见操作,它还必须是高效的。但我们最重要的目标(也是最困难的挑战)是可理解性。必须让广大读者能够轻松地理解该算法。此外,还必须能够建立起关于该算法的直觉,以便系统构建者能够进行实际实现中不可避免的扩展。

We had several goals in designing Raft: it must provide a complete and practical foundation for system building, so that it significantly reduces the amount of design work required of developers; it must be safe under all conditions and available under typical operating conditions; and it must be efficient for common operations. But our most important goal (and most difficult challenge) was understandability. It must be possible for a large audience to understand the algorithm comfortably. In addition, it must be possible to develop intuitions about the algorithm, so that system builders can make the extensions that are inevitable in real-world implementations.

在Raft的设计中,有许多地方需要我们在多种备选方法中做出选择。在这些情况下,我们基于可理解性来评估各个备选方案:解释每个备选方案有多困难(例如,它的状态空间有多复杂,是否存在微妙的隐含影响?),以及读者要完全理解该方法及其影响有多容易?

There were numerous points in the design of Raft where we had to choose among alternative approaches. In these situations we evaluated the alternatives based on understandability: how hard is it to explain each alternative (for example, how complex is its state space, and does it have subtle implications?), and how easy will it be for a reader to completely understand the approach and its implications?

我们认识到这样的分析存在高度的主观性;尽管如此,我们使用了两种普遍适用的技术。第一种技术是众所周知的问题分解方法:在可能的情况下,我们将问题分解为可以相对独立地解决、解释和理解的独立部分。例如,在Raft中,我们分离了领导者选举、日志复制、安全性和成员变更。

We recognize that there is a high degree of subjectivity in such analysis; nonetheless, we used two techniques that are generally applicable. The first technique is the well-known approach of problem decomposition: wherever possible, we divided problems into separate pieces that could be solved, explained, and understood relatively independently. For example, in Raft we separated leader election, log replication, safety, and membership changes.

我们的第二种方法是简化状态空间:减少需要考虑的状态数量,使系统更加连贯,并尽可能消除非确定性。具体来说,日志中不允许存在空洞,并且Raft限制了日志之间可能出现不一致的方式。尽管在大多数情况下我们都试图消除非确定性,但在某些情况下,非确定性实际上能提高可理解性。特别是,随机化方法会引入非确定性,但它们往往通过以相似的方式处理所有可能的选择(“任选一个,选哪个都无所谓”)来缩减状态空间。我们使用随机化来简化Raft的领导者选举算法。

Our second approach was to simplify the state space by reducing the number of states to consider, making the system more coherent and eliminating nondeterminism where possible. Specifically, logs are not allowed to have holes, and Raft limits the ways in which logs can become inconsistent with each other. Although in most cases we tried to eliminate nondeterminism, there are some situations where nondeterminism actually improves understandability. In particular, randomized approaches introduce nondeterminism, but they tend to reduce the state space by handling all possible choices in a similar fashion (“choose any; it doesn’t matter”). We used randomization to simplify the Raft leader election algorithm.

5 Raft共识算法【The Raft consensus algorithm】

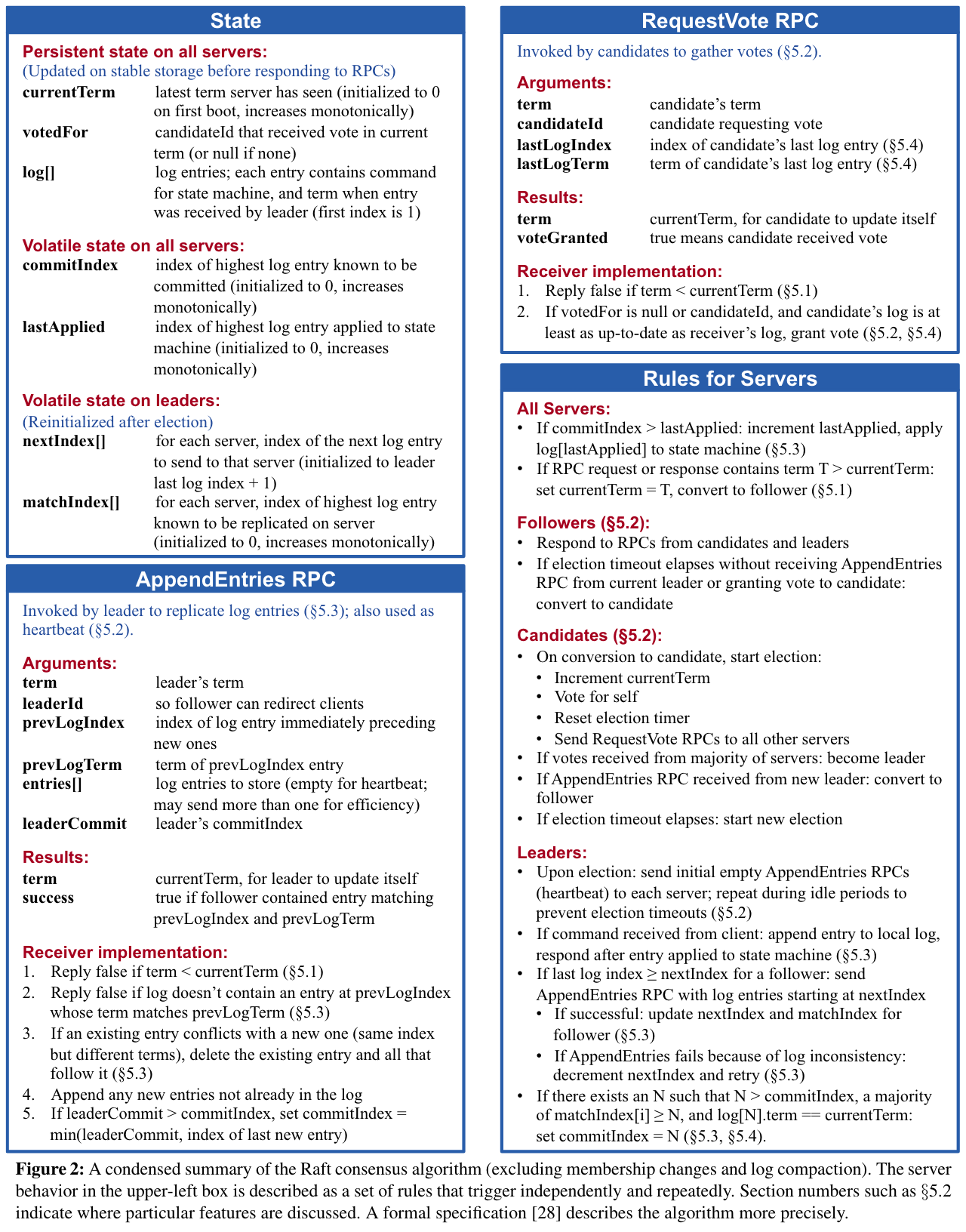

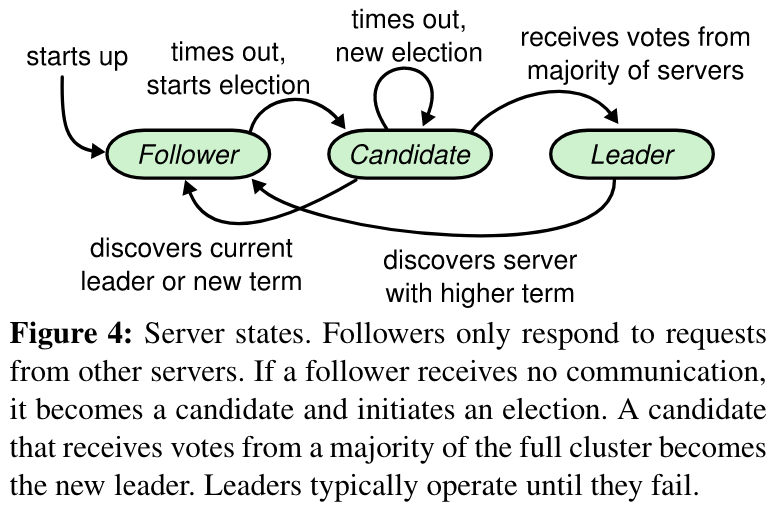

Raft是一种用于管理第2节所述形式的复制日志的算法。图2以浓缩的形式总结了该算法以供参考,图3列出了该算法的关键性质;这两张图中的内容将在本节其余部分逐一讨论。

Raft is an algorithm for managing a replicated log of the form described in Section 2. Figure 2 summarizes the algorithm in condensed form for reference, and Figure 3 lists key properties of the algorithm; the elements of these figures are discussed piecewise over the rest of this section.

Raft实现共识的方式是:首先选举出一个唯一的领导者,然后赋予该领导者管理复制日志的全部责任。领导者接收来自客户端的日志条目,将它们复制到其他服务器上,并告诉服务器何时可以安全地将日志条目应用到它们的状态机中。设置领导者简化了复制日志的管理。例如,领导者可以在不咨询其他服务器的情况下决定新条目在日志中的位置,数据也以简单的方式从领导者流向其他服务器。领导者可能发生故障或与其他服务器断开连接,在这种情况下会选举出新的领导者。

Raft implements consensus by first electing a distinguished leader, then giving the leader complete responsibility for managing the replicated log. The leader accepts log entries from clients, replicates them on other servers, and tells servers when it is safe to apply log entries to their state machines. Having a leader simplifies the management of the replicated log. For example, the leader can decide where to place new entries in the log without consulting other servers, and data flows in a simple fashion from the leader to other servers. A leader can fail or become disconnected from the other servers, in which case a new leader is elected.

基于领导者方法,Raft将共识问题分解为三个相对独立的子问题,这些子问题将在接下来的小节中讨论:

Given the leader approach, Raft decomposes the consensus problem into three relatively independent subproblems, which are discussed in the subsections that follow:

- 领导者选举: 现有领导者发生故障时,必须选出新的领导者(第5.2节)。

- Leader election: a new leader must be chosen when an existing leader fails (Section 5.2).

- 日志复制: 领导者必须接收来自客户端的日志条目,并在整个集群中复制它们,迫使其他服务器的日志与自己的日志保持一致(第5.3节)。

- Log replication: the leader must accept log entries from clients and replicate them across the cluster, forcing the other logs to agree with its own (Section 5.3).

- 安全性: Raft的关键安全性质是图3中的状态机安全性质(State Machine Safety Property):如果任一服务器已将某个特定日志条目应用到其状态机中,那么任何其他服务器都不能在相同的日志索引上应用不同的命令。第5.4节描述了Raft如何确保这一性质;该方案需要对第5.2节所述的选举机制施加额外的限制。

- Safety: the key safety property for Raft is the State Machine Safety Property in Figure 3: if any server has applied a particular log entry to its state machine, then no other server may apply a different command for the same log index. Section 5.4 describes how Raft ensures this property; the solution involves an additional restriction on the election mechanism described in Section 5.2.

在介绍完共识算法之后,本节还将讨论可用性问题以及时序在系统中的作用。


After presenting the consensus algorithm, this section discusses the issue of availability and the role of timing in the system.

图2:Raft共识算法(不含成员变更和日志压缩)的浓缩总结。左上方框中的服务器行为被描述为一组独立且重复触发的规则。诸如§5.2的节号指出了讨论特定特性的位置。形式化规范[28]对该算法进行了更精确的描述。

图3:Raft保证这些性质在任何时刻都成立。节号指出了各个性质在何处被讨论。

5.1 Raft基础【Raft basics】

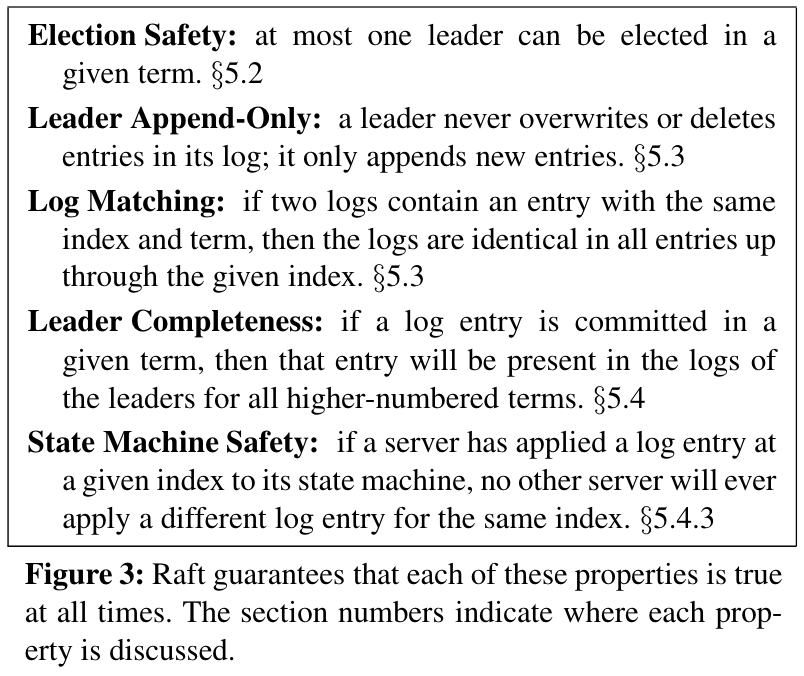

一个Raft集群包含多台服务器;五台是一个典型的数量,这使系统能够容忍两台服务器故障。在任一给定时刻,每台服务器都处于三种状态之一:领导者、跟随者或候选者。正常运行时,只有一个领导者,其他所有服务器都是跟随者。跟随者是被动的:它们自己不发出请求,只是响应来自领导者和候选者的请求。领导者处理所有客户端请求(如果客户端联系的是跟随者,跟随者会将其重定向到领导者)。第三种状态,即候选者,用于选举新的领导者,如第5.2节所述。图4展示了这些状态及其转换;下面将讨论这些转换。

A Raft cluster contains several servers; five is a typical number, which allows the system to tolerate two failures. At any given time each server is in one of three states: leader, follower, or candidate. In normal operation there is exactly one leader and all of the other servers are followers. Followers are passive: they issue no requests on their own but simply respond to requests from leaders and candidates. The leader handles all client requests (if a client contacts a follower, the follower redirects it to the leader). The third state, candidate, is used to elect a new leader as described in Section 5.2. Figure 4 shows the states and their transitions; the transitions are discussed below.
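A minimal Python sketch of the three server states and the transitions summarized in Figure 4. The event names are hypothetical labels for the conditions described in the text (election timeout, winning a majority, discovering the current leader or a higher term); a real server would also update its term and timers on each transition.

```python
from enum import Enum, auto


class Role(Enum):
    FOLLOWER = auto()
    CANDIDATE = auto()
    LEADER = auto()


class Event(Enum):
    ELECTION_TIMEOUT = auto()  # heard nothing (or no winner) within the election timeout
    WON_MAJORITY = auto()      # received votes from a majority of the servers
    SAW_LEADER = auto()        # received an RPC from a legitimate current leader
    SAW_HIGHER_TERM = auto()   # discovered a server with a higher term


TRANSITIONS = {
    (Role.FOLLOWER, Event.ELECTION_TIMEOUT): Role.CANDIDATE,   # start an election
    (Role.CANDIDATE, Event.ELECTION_TIMEOUT): Role.CANDIDATE,  # no winner: new election
    (Role.CANDIDATE, Event.WON_MAJORITY): Role.LEADER,
    (Role.CANDIDATE, Event.SAW_LEADER): Role.FOLLOWER,
    (Role.CANDIDATE, Event.SAW_HIGHER_TERM): Role.FOLLOWER,
    (Role.LEADER, Event.SAW_HIGHER_TERM): Role.FOLLOWER,
}


def next_role(role: Role, event: Event) -> Role:
    """Apply one event; (role, event) pairs not listed above leave the role unchanged."""
    return TRANSITIONS.get((role, event), role)


role = Role.FOLLOWER  # servers start up as followers
for event in (Event.ELECTION_TIMEOUT, Event.WON_MAJORITY, Event.SAW_HIGHER_TERM):
    role = next_role(role, event)
    print(f"{event.name} -> {role.name}")
```

Keeping the transitions in one table makes it easy to see that there is no direct path from follower to leader: every leader was first a candidate.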

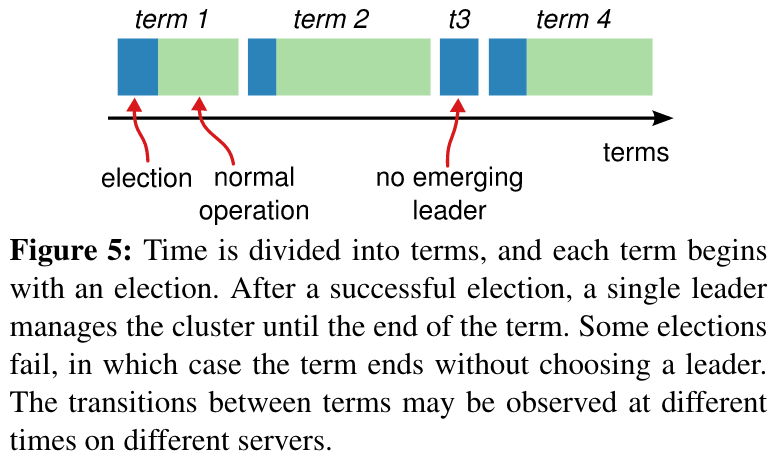

Raft将时间划分为任意长度的任期(term),如图5所示。任期用连续的整数编号。每个任期都以一次选举开始,在选举中,一个或多个候选者按照第5.2节所述尝试成为领导者。如果某个候选者赢得选举,那么它将在该任期的剩余时间内担任领导者。在某些情况下,选举会出现选票分裂。此时,该任期将在没有领导者的情况下结束;一个新的任期(伴随一次新的选举)很快就会开始。Raft保证在一个给定的任期内至多只有一个领导者。

Raft divides time into terms of arbitrary length, as shown in Figure 5. Terms are numbered with consecutive integers. Each term begins with an election, in which one or more candidates attempt to become leader as described in Section 5.2. If a candidate wins the election, then it serves as leader for the rest of the term. In some situations an election will result in a split vote. In this case the term will end with no leader; a new term (with a new election) will begin shortly. Raft ensures that there is at most one leader in a given term.

图4:服务器状态。追随者只响应来自其他服务器的请求。如果一个追随者没有收到任何通信,它就成为一个候选人并发起选举。获得整个集群多数选票的候选人成为新的领导人。领导者通常会一直工作到失败。

图5:时间被划分为任期,每个任期以一次选举开始。选举成功后,由单一领导者管理集群,直至任期结束。有些选举会失败,在这种情况下,该任期结束时没有选出领导者。不同服务器可能在不同时刻观察到任期之间的转换。

不同的服务器可能会在不同的时间观察到任期之间的转换,在某些情况下,服务器可能不会观察到选举甚至整个任期。任期在Raft中充当逻辑时钟[ 12 ],它们允许服务器检测过时的信息,如过时的领导者。每个服务器存储一个当前任期号,它随时间单调递增。当服务器进行通信时,交换当前的任期号;如果一个服务器的当前所处任期小于另一个服务器的当前所处任期,则它将其当前所处任期更新为较大的值。如果一个候选人或领导者发现自己的任期已经过时,它立即恢复到追随者状态。如果服务器接收到一个过期的任期号的请求,则拒绝该请求。

Different servers may observe the transitions between terms at different times, and in some situations a server may not observe an election or even entire terms. Terms act as a logical clock [12] in Raft, and they allow servers to detect obsolete information such as stale leaders. Each server stores a current term number, which increases monotonically over time. Current terms are exchanged whenever servers communicate; if one server’s current term is smaller than the other’s, then it updates its current term to the larger value. If a candidate or leader discovers that its term is out of date, it immediately reverts to follower state. If a server receives a request with a stale term number, it rejects the request.
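The term rules in this paragraph can be sketched as a single check run on every incoming message. This is a minimal sketch under the assumption that a server tracks only its current term and its role; `ServerTerm` and `observe` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ServerTerm:
    current_term: int = 0
    role: str = "follower"  # "follower", "candidate" or "leader"

    def observe(self, other_term: int) -> bool:
        """Process the term carried by an incoming RPC.

        Returns False when the message carries a stale term and the request
        should be rejected; True otherwise.
        """
        if other_term > self.current_term:
            # Our term is out of date: adopt the larger one and revert to follower.
            self.current_term = other_term
            self.role = "follower"
            return True
        return other_term == self.current_term  # smaller term means stale


s = ServerTerm(current_term=3, role="leader")
print(s.observe(2), s)  # stale request: rejected, nothing changes
print(s.observe(5), s)  # newer term: the old leader steps down
```

Because terms only grow, this comparison is what lets a stale leader returning from a partition discover that it has been replaced.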

Raft服务器使用远程过程调用( RPC )进行通信,而共识算法只需要两种类型的RPC。RequestVote RPC由候选人在选举期间发起(第5.2节),AppendEntries RPC由领导者发起以复制日志条目并提供心跳形式(第5.3节)。如果服务器没有及时收到响应,则重试RPC,并以并行方式发出RPC以获得最佳性能。

Raft servers communicate using remote procedure calls (RPCs), and the consensus algorithm requires only two types of RPCs. RequestVote RPCs are initiated by candidates during elections (Section 5.2), and AppendEntries RPCs are initiated by leaders to replicate log entries and to provide a form of heartbeat (Section 5.3). Servers retry RPCs if they do not receive a response in a timely manner, and they issue RPCs in parallel for best performance.
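For orientation, the sketch below models the two RPC types as Python dataclasses. The field names mirror the arguments listed for RequestVote and AppendEntries in the paper's Figure 2; everything else is an assumption. The `broadcast` helper, its `retries` bound, and the `send` transport callable are hypothetical stand-ins for an RPC layer that issues calls in parallel and retries when no reply arrives in time. The text describes the leader retrying indefinitely; the bound here only keeps the example finite.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class RequestVoteArgs:
    """Sent by candidates during elections (Section 5.2)."""
    term: int
    candidate_id: int
    last_log_index: int
    last_log_term: int


@dataclass
class AppendEntriesArgs:
    """Sent by leaders to replicate entries and as a heartbeat (Section 5.3)."""
    term: int
    leader_id: int
    prev_log_index: int
    prev_log_term: int
    entries: List[Tuple[int, str]] = field(default_factory=list)  # (term, command)
    leader_commit: int = 0

    def is_heartbeat(self) -> bool:
        return not self.entries  # a heartbeat carries no log entries


Reply = Tuple[int, bool]  # (responder's term, success or vote granted)


def broadcast(peers: List[int], msg: object,
              send: Callable[[int, object], Optional[Reply]],
              retries: int = 3) -> Dict[int, Reply]:
    """Send msg to all peers in parallel, retrying a peer while its reply is missing.

    `send` is a hypothetical transport that returns None when no reply
    arrives in time.
    """
    def call(peer: int) -> Tuple[int, Optional[Reply]]:
        for _ in range(retries):
            reply = send(peer, msg)
            if reply is not None:
                return peer, reply
        return peer, None

    with ThreadPoolExecutor(max_workers=max(1, len(peers))) as pool:
        results = list(pool.map(call, peers))
    return {peer: reply for peer, reply in results if reply is not None}
```

Issuing the calls in parallel means one slow or unreachable peer delays only its own thread, not the replies from the rest of the cluster.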

5.2 领导者选举【Leader election】

Raft使用心跳机制来触发领导者选举。服务器启动时,以跟随者的身份开始运行。只要服务器能从领导者或候选者那里收到有效的RPC,它就一直保持跟随者状态。领导者向所有跟随者周期性地发送心跳(不携带日志条目的AppendEntries RPC),以维持自己的权威。如果一个跟随者在一段称为选举超时(election timeout)的时间内没有收到任何通信,它就认为当前没有可用的领导者,并开始一次选举以选出新的领导者。

Raft uses a heartbeat mechanism to trigger leader election. When servers start up, they begin as followers. A server remains in follower state as long as it receives valid RPCs from a leader or candidate. Leaders send periodic heartbeats (AppendEntries RPCs that carry no log entries) to all followers in order to maintain their authority. If a follower receives no communication over a period of time called the election timeout, then it assumes there is no viable leader and begins an election to choose a new leader.

为了开始选举,一个追随者增加其当前的任期号并过渡到候选者状态。然后它为自己投票,并在集群中的每个其他服务器上并行地发出RequestVote RPC。一个候选人在这种状态下持续,直到三件事之一发生:( a )它赢得了选举,( b )另一个服务器建立自己作为领导者,或( c )一段时间没有赢家。这些结果在下文中分别讨论。

To begin an election, a follower increments its current term and transitions to candidate state. It then votes for itself and issues RequestVote RPCs in parallel to each of the other servers in the cluster. A candidate continues in this state until one of three things happens: (a) it wins the election, (b) another server establishes itself as leader, or (c) a period of time goes by with no winner. These outcomes are discussed separately in the paragraphs below.
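The first step of an election can be sketched as follows. The `Server` record is hypothetical, and the RequestVote payload is simplified to a plain dict holding the term and candidate id; the last-log fields that Section 5.4 adds to the request are omitted here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Server:
    server_id: int
    current_term: int = 0
    voted_for: Optional[int] = None
    role: str = "follower"

    def start_election(self, cluster: List[int]) -> List[Tuple[int, Dict[str, int]]]:
        """Become a candidate and return one RequestVote request per other server."""
        self.current_term += 1           # 1. increment the current term
        self.role = "candidate"          # 2. transition to candidate state
        self.voted_for = self.server_id  # 3. vote for itself
        request = {"term": self.current_term, "candidate_id": self.server_id}
        # 4. one RequestVote per other server; a real system sends them in parallel
        return [(peer, dict(request)) for peer in cluster if peer != self.server_id]


s = Server(server_id=1, current_term=4)
for peer, req in s.start_election([1, 2, 3, 4, 5]):
    print(peer, req)
```

Recording the self-vote in `voted_for` matters: it prevents this server from also granting its vote to a rival candidate in the same term.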

如果一个候选者在同一任期内获得了整个集群中多数服务器的选票,它就赢得了选举。在给定任期内,每台服务器最多只会投票给一个候选者,按先到先得的原则(注:第5.4节对投票增加了一项额外的限制)。多数票规则确保了在某个特定任期内至多只有一个候选者能够赢得选举(图3中的选举安全性质)。一旦候选者赢得选举,它就成为领导者。随后,它向所有其他服务器发送心跳消息,以确立自己的权威并阻止新的选举。

A candidate wins an election if it receives votes from a majority of the servers in the full cluster for the same term. Each server will vote for at most one candidate in a given term, on a first-come-first-served basis (note: Section 5.4 adds an additional restriction on votes). The majority rule ensures that at most one candidate can win the election for a particular term (the Election Safety Property in Figure 3). Once a candidate wins an election, it becomes leader. It then sends heartbeat messages to all of the other servers to establish its authority and prevent new elections.
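The voting rule (at most one vote per server per term, first come first served) and the majority test can be sketched as below. This is a simplified illustration: the log up-to-date restriction of Section 5.4 is left out, and `Voter` and `wins` are hypothetical names. The usage shows two candidates competing in the same term of a five-server cluster.

```python
from typing import Optional, Set


class Voter:
    """Per-server vote bookkeeping (the Section 5.4 log check is omitted)."""

    def __init__(self) -> None:
        self.current_term = 0
        self.voted_for: Optional[int] = None

    def handle_request_vote(self, term: int, candidate_id: int) -> bool:
        """Return True if this server grants its vote to candidate_id in `term`."""
        if term < self.current_term:
            return False                    # stale candidate: reject
        if term > self.current_term:
            self.current_term = term        # newer term: no vote cast in it yet
            self.voted_for = None
        if self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id   # first come, first served
            return True
        return False                        # already voted for someone else


def wins(votes: Set[int], cluster_size: int) -> bool:
    """A candidate wins with votes from a majority of the full cluster."""
    return len(votes) > cluster_size // 2


voters = {i: Voter() for i in range(1, 6)}
# Candidates 1 and 2 both start term 3 and vote for themselves.
voters[1].handle_request_vote(3, 1)
voters[2].handle_request_vote(3, 2)
votes_1 = {v for v in (1, 3, 4) if voters[v].handle_request_vote(3, 1)}
votes_2 = {v for v in (2, 3, 4, 5) if voters[v].handle_request_vote(3, 2)}
print(sorted(votes_1), wins(votes_1, 5))  # [1, 3, 4] True
print(sorted(votes_2), wins(votes_2, 5))  # [2, 5] False
```

Since each voter grants at most one vote per term and two majorities must overlap, at most one of the two candidates can reach a majority.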

在等待投票期间,候选者可能会收到另一台声称自己是领导者的服务器发来的AppendEntries RPC。如果该领导者的任期(包含在其RPC中)不小于候选者的当前任期,那么候选者会承认该领导者是合法的,并回到跟随者状态。如果RPC中的任期小于候选者的当前任期,那么候选者会拒绝该RPC,并继续保持候选者状态。

While waiting for votes, a candidate may receive an AppendEntries RPC from another server claiming to be leader. If the leader’s term (included in its RPC) is at least as large as the candidate’s current term, then the candidate recognizes the leader as legitimate and returns to follower state. If the term in the RPC is smaller than the candidate’s current term, then the candidate rejects the RPC and continues in candidate state.
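The candidate's reaction to an AppendEntries RPC reduces to one term comparison. A minimal sketch with a hypothetical function name, returning the candidate's new role, its term, and whether it recognized the sender as leader. When stepping down, the candidate adopts the sender's term, consistent with the term rules of Section 5.1.

```python
from typing import Tuple


def on_append_entries_as_candidate(current_term: int, rpc_term: int) -> Tuple[str, int, bool]:
    """Return (new_role, new_term, recognized_leader) for a candidate."""
    if rpc_term >= current_term:
        # The sender's term is at least as large: it is a legitimate leader.
        return "follower", rpc_term, True
    # The sender is a stale leader: reject the RPC and keep campaigning.
    return "candidate", current_term, False


print(on_append_entries_as_candidate(5, 5))  # ('follower', 5, True)
print(on_append_entries_as_candidate(5, 4))  # ('candidate', 5, False)
```

The `>=` (rather than `>`) is the important detail: a leader elected in the candidate's own term must also be accepted, otherwise the candidate would keep competing for a term that already has a winner.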

第三种可能的结果是,一个候选人既没有赢得选举,也没有输掉选举:如果许多追随者同时成为候选人,那么选票就会被分割,以至于没有候选人获得多数。当这种情况发生时,每个候选人将超时并通过增加其任期和启动另一轮RequestVote RPCs来启动新的选举。然而,如果没有额外的措施,分裂投票可能会无限期地重复。

The third possible outcome is that a candidate neither wins nor loses the election: if many followers become candidates at the same time, votes could be split so that no candidate obtains a majority. When this happens, each candidate will time out and start a new election by incrementing its term and initiating another round of RequestVote RPCs. However, without extra measures split votes could repeat indefinitely.

Raft使用随机的选举超时,以确保分裂投票是罕见的,并迅速解决。为了防止首先出现分裂投票,选举超时从固定间隔(如150 ~ 300ms)中随机选择。这样分散了服务器,以至于在大多数情况下只有单个服务器会超时;它赢得选举,并在任何其他服务器超时之前发送心跳。同样的机制用于处理分裂投票。每个候选人在选举开始时重新启动其随机选举超时,并在开始下一次选举之前等待该超时结束;这降低了在新的选举中出现另一次分裂投票的可能性。第8.3节表明,这种方法可以迅速选出领导人。

Raft uses randomized election timeouts to ensure that split votes are rare and that they are resolved quickly. To prevent split votes in the first place, election timeouts are chosen randomly from a fixed interval (e.g., 150–300ms). This spreads out the servers so that in most cases only a single server will time out; it wins the election and sends heartbeats before any other servers time out. The same mechanism is used to handle split votes. Each candidate restarts its randomized election timeout at the start of an election, and it waits for that timeout to elapse before starting the next election; this reduces the likelihood of another split vote in the new election. Section 8.3 shows that this approach elects a leader rapidly.
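A rough Monte Carlo sketch of why randomized timeouts spread servers out: each of five servers draws a timeout uniformly from 150 to 300 ms, and a trial counts as clean when the earliest server leads the next one by at least a margin. The 10 ms margin (`heartbeat_gap_ms`) is a hypothetical stand-in for the time needed to win the election and send heartbeats; it is not a figure from the paper, and this toy model does not reproduce the measurements of Section 8.3.

```python
import random
from typing import List, Optional


def election_timeout_ms(rng: random.Random, low: float = 150.0, high: float = 300.0) -> float:
    """Draw a fresh election timeout uniformly from [low, high] milliseconds."""
    return rng.uniform(low, high)


def first_to_time_out(timeouts: List[float], heartbeat_gap_ms: float) -> Optional[int]:
    """Return the index of the earliest server if it is alone in timing out first.

    Requires at least two servers. The earliest server counts as alone when
    the runner-up times out at least heartbeat_gap_ms later.
    """
    order = sorted(range(len(timeouts)), key=lambda i: timeouts[i])
    first, second = order[0], order[1]
    if timeouts[second] - timeouts[first] >= heartbeat_gap_ms:
        return first
    return None  # two servers time out close together: a split vote is possible


rng = random.Random(42)  # fixed seed so the run is repeatable
trials = 10_000
clean = sum(
    first_to_time_out([election_timeout_ms(rng) for _ in range(5)], 10.0) is not None
    for _ in range(trials)
)
print(f"a single server timed out first in {clean / trials:.0%} of trials")
```

Widening the interval or shrinking the margin raises the clean fraction, which matches the intuition that more spread makes it likelier that one server times out alone.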

选举是可理解性如何指导我们在设计方案之间做出选择的一个例子。最初,我们计划使用一种排名系统:为每个候选者分配一个唯一的排名,用于在相互竞争的候选者之间做出选择。如果一个候选者发现了另一个排名更高的候选者,它就会回到跟随者状态,使排名更高的候选者更容易赢得下一次选举。我们发现这种方法会在可用性方面引发一些微妙的问题(如果排名较高的服务器发生故障,排名较低的服务器可能需要超时并再次成为候选者,但如果它这样做得太早,就可能使选举领导者的进展被重置)。我们对算法进行了多次调整,但每次调整之后都会出现新的边界情况。最终,我们得出结论:随机重试的方法更加直观,也更容易理解。

Elections are an example of how understandability guided our choice between design alternatives. Initially we planned to use a ranking system: each candidate was assigned a unique rank, which was used to select between competing candidates. If a candidate discovered another candidate with higher rank, it would return to follower state so that the higher ranking candidate could more easily win the next election. We found that this approach created subtle issues around availability (a lower-ranked server might need to time out and become a candidate again if a higher-ranked server fails, but if it does so too soon, it can reset progress towards electing a leader). We made adjustments to the algorithm several times, but after each adjustment new corner cases appeared. Eventually we concluded that the randomized retry approach is more obvious and understandable.

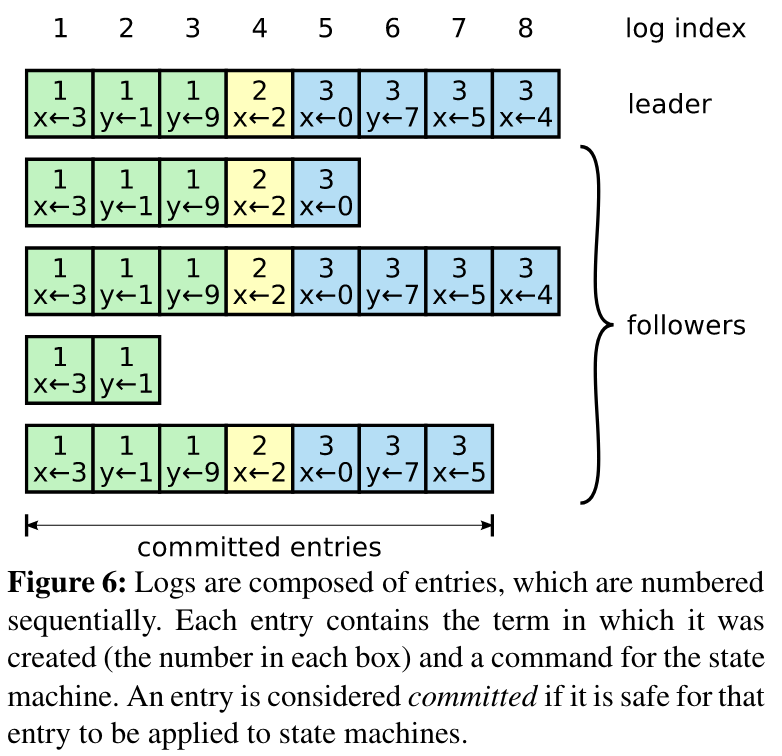

图6:日志由条目组成,条目按顺序编号。每个条目都包含创建它时的任期号(每个方框中的数字)以及一条状态机命令。如果一个条目可以安全地应用到状态机,就认为该条目已提交(committed)。

5.3 日志复制【Log replication】

一旦选出领导者,它就开始为客户端请求提供服务。每个客户端请求都包含一条由复制状态机执行的命令。领导者将该命令作为一个新条目追加到自己的日志中,然后并行地向其他每台服务器发出AppendEntries RPC以复制该条目。当该条目被安全地复制之后(如下所述),领导者将该条目应用到自己的状态机,并将执行结果返回给客户端。如果跟随者崩溃或运行缓慢,或者网络数据包丢失,领导者会无限期地重试AppendEntries RPC(即使在已经响应客户端之后),直到所有跟随者最终都存储了全部日志条目。

Once a leader has been elected, it begins servicing client requests. Each client request contains a command to be executed by the replicated state machines. The leader appends the command to its log as a new entry, then issues AppendEntries RPCs in parallel to each of the other servers to replicate the entry. When the entry has been safely replicated (as described below), the leader applies the entry to its state machine and returns the result of that execution to the client. If followers crash or run slowly, or if network packets are lost, the leader retries AppendEntries RPCs indefinitely (even after it has responded to the client) until all followers eventually store all log entries.

日志的组织方式如图6所示。每个日志条目都存储一条状态机命令,以及领导者收到该条目时的任期号。日志条目中的任期号用于检测日志之间的不一致,并确保图3中的某些性质。每个日志条目还有一个整数索引,用于标识它在日志中的位置。

Logs are organized as shown in Figure 6. Each log entry stores a state machine command along with the term number when the entry was received by the leader. The term numbers in log entries are used to detect inconsistencies between logs and to ensure some of the properties in Figure 3. Each log entry also has an integer index identifying its position in the log.
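A minimal sketch of the log layout described here, assuming commands are plain strings. Indexes are 1-based as in Figure 6; `term_at(0)` returns 0 as a sentinel for the empty prefix, a common implementation convention that this paragraph does not itself specify. `LogEntry` and `Log` are illustrative names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LogEntry:
    term: int      # term in which the leader received the entry
    command: str   # state machine command (a string here, for illustration)


class Log:
    """A Raft-style log with 1-based indexes; index 0 means 'before the first entry'."""

    def __init__(self) -> None:
        self.entries: List[LogEntry] = []

    def append(self, term: int, command: str) -> int:
        """Append an entry and return its index."""
        self.entries.append(LogEntry(term, command))
        return len(self.entries)

    def term_at(self, index: int) -> int:
        return 0 if index == 0 else self.entries[index - 1].term

    def last_index(self) -> int:
        return len(self.entries)


log = Log()
log.append(1, "x<-3")
log.append(1, "y<-1")
print(log.append(2, "x<-2"), log.term_at(3))  # 3 2
```

Storing the term with each entry is what later lets two servers compare logs by (index, term) instead of comparing commands.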

领导者决定何时可以安全地将日志条目应用到状态机;这样的条目称为已提交(committed)条目。Raft保证已提交的条目是持久的,并且最终会被所有可用的状态机执行。一旦创建某个日志条目的领导者将其复制到了大多数服务器上(例如图6中的条目7),该条目就被提交。这同时也会提交领导者日志中所有之前的条目,包括由之前的领导者创建的条目。第5.4节讨论了在领导者变更后应用这条规则时的一些微妙之处,并证明了这种提交定义是安全的。领导者记录它已知已提交的最高索引,并在之后的AppendEntries RPC(包括心跳)中携带该索引,以便其他服务器最终得知。一旦跟随者得知某个日志条目已提交,它就(按日志顺序)将该条目应用到本地状态机。

The leader decides when it is safe to apply a log entry to the state machines; such an entry is called committed. Raft guarantees that committed entries are durable and will eventually be executed by all of the available state machines. A log entry is committed once the leader that created the entry has replicated it on a majority of the servers (e.g., entry 7 in Figure 6). This also commits all preceding entries in the leader’s log, including entries created by previous leaders. Section 5.4 discusses some subtleties when applying this rule after leader changes, and it also shows that this definition of commitment is safe. The leader keeps track of the highest index it knows to be committed, and it includes that index in future AppendEntries RPCs (including heartbeats) so that the other servers eventually find out. Once a follower learns that a log entry is committed, it applies the entry to its local state machine (in log order).
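Here is a minimal Python sketch of how a follower might act on the commit index that the leader piggybacks on AppendEntries, applying entries in log order. The `Follower` class, its field names, and the 1-indexed log with a sentinel at slot 0 are assumptions for illustration. Following Raft's AppendEntries receiver rule, the follower caps its commit index at the last entry the current RPC has confirmed against the leader's log. Anything past that point might still be overwritten.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Entry:
    term: int
    command: str


@dataclass
class Follower:
    # Hypothetical follower state. The log is 1-indexed as in the paper:
    # slot 0 holds a sentinel entry that is never applied.
    log: List[Entry] = field(default_factory=lambda: [Entry(0, "")])
    commit_index: int = 0
    last_applied: int = 0
    state_machine: List[str] = field(default_factory=list)

    def learn_commit(self, leader_commit: int, last_verified_index: int) -> None:
        """Handle the leader's commit index carried on an AppendEntries RPC.

        last_verified_index is the last entry this RPC confirmed matches the
        leader's log; entries past it may still be overwritten, so the
        follower never treats them as committed.
        """
        candidate = min(leader_commit, last_verified_index)
        if candidate > self.commit_index:  # the commit index never moves backwards
            self.commit_index = candidate
        # Apply newly committed entries to the local state machine in log order.
        while self.last_applied < self.commit_index:
            self.last_applied += 1
            self.state_machine.append(self.log[self.last_applied].command)
```

For example, suppose the follower appends three entries and then calls `learn_commit(2, 3)`. Only the first two commands reach `state_machine`. A later call with a larger `leader_commit` applies the third.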

我们设计了Raft日志机制来保持不同服务器上日志之间的高一致性。这不仅简化了系统的行为,使其更可预测,而且是确保安全的重要组成部分。Raft维护以下属性,它们共同构成了图3中的Log Matching Property:

We designed the Raft log mechanism to maintain a high level of coherency between the logs on different servers. Not only does this simplify the system’s behavior and make it more predictable, but it is an important component of ensuring safety. Raft maintains the following properties, which together constitute the Log Matching Property in Figure 3:

- 如果不同日志中的两个条目具有相同的索引和任期号,那么它们存储相同的命令。

- If two entries in different logs have the same index and term, then they store the same command.

- 如果不同日志中的两个条目具有相同的索引和任期号,那么这两个日志中之前的所有条目都相同。

- If two entries in different logs have the same index and term, then the logs are identical in all preceding entries.

第一个特性源于这样一个事实:领导者在给定任期内对给定的日志索引最多只创建一个条目,并且日志条目永远不会改变它们在日志中的位置。第二个特性由AppendEntries执行的一个简单一致性检查来保证。发送AppendEntries RPC时,领导者会附带其日志中紧接在新条目之前的那个条目的索引和任期号。如果跟随者在自己的日志中找不到具有相同索引和任期号的条目,就拒绝这些新条目。一致性检查充当归纳步骤:日志的初始空状态满足日志匹配特性,而每当日志扩展时,一致性检查都会保持日志匹配特性。因此,每当AppendEntries成功返回时,领导者就知道跟随者的日志直到新条目为止都与自己的日志完全相同。

The first property follows from the fact that a leader creates at most one entry with a given log index in a given term, and log entries never change their position in the log. The second property is guaranteed by a simple consistency check performed by AppendEntries. When sending an AppendEntries RPC, the leader includes the index and term of the entry in its log that immediately precedes the new entries. If the follower does not find an entry in its log with the same index and term, then it refuses the new entries. The consistency check acts as an induction step: the initial empty state of the logs satisfies the Log Matching Property, and the consistency check preserves the Log Matching Property whenever logs are extended. As a result, whenever AppendEntries returns successfully, the leader knows that the follower’s log is identical to its own log up through the new entries.
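The follower side of the consistency check can be sketched as below. This is an illustrative Python sketch, not the paper's code. The function name `append_entries`, the `Entry` type, and the sentinel at index 0 are assumptions; the sentinel makes a previous index of 0 always match an empty log. Entries already present with the same term are skipped rather than rewritten, so a retried request is harmless.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Entry:
    term: int
    command: str


def append_entries(log: List[Entry], prev_index: int, prev_term: int,
                   entries: List[Entry]) -> Tuple[bool, int]:
    """Follower side of the AppendEntries consistency check (a sketch).

    `log` is 1-indexed: log[0] is a sentinel Entry(0, "") so that
    prev_index == 0 always matches. Returns (success, index of the last
    entry covered by this request).
    """
    # Consistency check: the follower must hold an entry at prev_index whose
    # term equals prev_term; otherwise it refuses the new entries.
    if prev_index >= len(log) or log[prev_index].term != prev_term:
        return False, 0
    for offset, entry in enumerate(entries):
        index = prev_index + 1 + offset
        if index < len(log):
            if log[index].term == entry.term:
                continue      # already present: ignore it (idempotent retry)
            del log[index:]   # conflict: drop this entry and all that follow
        log.append(entry)
    return True, prev_index + len(entries)
```

Truncation happens only on a real conflict (same index, different term). A follower that already holds matching entries beyond the request keeps them, so a duplicated or out-of-date request cannot shorten its log.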

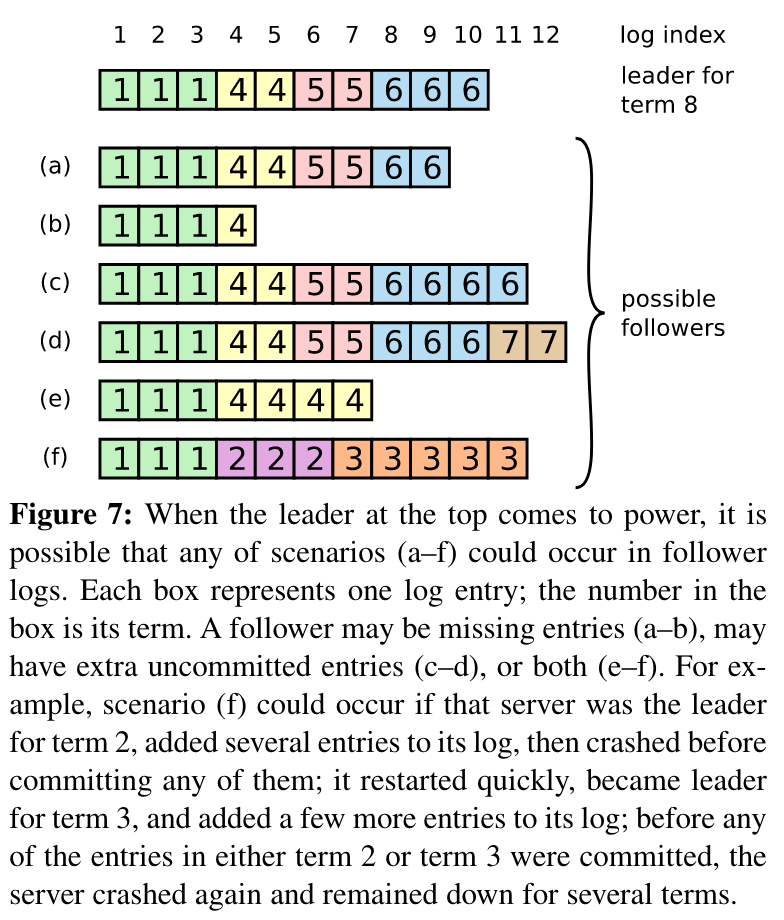

正常运行时,领导者和跟随者的日志保持一致,因此AppendEntries一致性检查永远不会失败。但是,领导者崩溃可能会导致日志不一致(旧的领导者可能尚未完全复制其日志中的所有条目)。这些不一致会在一系列领导者和跟随者崩溃中不断累积。图7说明了跟随者的日志可能与新领导者的日志存在哪些不同。跟随者可能缺少领导者上存在的条目,也可能有领导者上不存在的额外条目,或者两者兼有。日志中缺失的条目和多余的条目可能跨越多个任期。

During normal operation, the logs of the leader and followers stay consistent, so the AppendEntries consistency check never fails. However, leader crashes can leave the logs inconsistent (the old leader may not have fully replicated all of the entries in its log). These inconsistencies can compound over a series of leader and follower crashes. Figure 7 illustrates the ways in which followers’ logs may differ from that of a new leader. A follower may be missing entries that are present on the leader, it may have extra entries that are not present on the leader, or both. Missing and extraneous entries in a log may span multiple terms.

在Raft中,领导者通过强迫追随者的日志复制自己的日志来处理不一致。这意味着追随者日志中相互冲突的条目会被来自领导者日志的条目覆盖。第5.4节将表明,如果再加上一个限制,这是安全的。

In Raft, the leader handles inconsistencies by forcing the followers’ logs to duplicate its own. This means that conflicting entries in follower logs will be overwritten with entries from the leader’s log. Section 5.4 will show that this is safe when coupled with one more restriction.

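图7:当顶部的领导者上台时,跟随者日志中可能出现(a-f)中的任何一种情况。每个方框代表一个日志条目;方框中的数字是它的任期。跟随者可能缺少条目(a-b),可能有额外的未提交条目(c-d),或者两者兼有(e-f)。例如,场景(f)可能这样发生:该服务器是任期2的领导者,向日志中添加了若干条目,然后在提交任何条目之前崩溃;它很快重启,成为任期3的领导者,又向日志中添加了一些条目;在任期2或任期3的任何条目提交之前,该服务器再次崩溃,并在之后的几个任期内一直处于宕机状态。

Figure 7: When the leader at the top comes to power, it is possible that any of scenarios (a-f) could occur in follower logs. Each box represents one log entry; the number in the box is its term. A follower may be missing entries (a-b), may have extra uncommitted entries (c-d), or both (e-f). For example, scenario (f) could occur if that server was the leader for term 2, added several entries to its log, then crashed before committing any of them; it restarted quickly, became leader for term 3, and added a few more entries to its log; before any of the entries in either term 2 or term 3 were committed, the server crashed again and remained down for several terms.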

为了使跟随者的日志与自己的日志一致,领导者必须找到两个日志中最后一个一致的条目,删除跟随者日志中该位置之后的所有条目,并将领导者日志中该位置之后的所有条目发送给跟随者。所有这些操作都是在响应AppendEntries RPC执行的一致性检查时发生的。领导者为每个跟随者维护一个nextIndex,即领导者将要发送给该跟随者的下一个日志条目的索引。领导者刚上台时,会将所有nextIndex值初始化为其日志中最后一个条目之后的那个索引(图7中为11)。如果跟随者的日志与领导者的不一致,那么下一次AppendEntries RPC中的一致性检查就会失败。被拒绝后,领导者将nextIndex减一并重试AppendEntries RPC。最终nextIndex会到达领导者与跟随者日志相匹配的位置。此时AppendEntries将会成功,它会删除跟随者日志中所有冲突的条目,并追加领导者日志中的条目(如果有的话)。一旦AppendEntries成功,跟随者的日志就与领导者的一致,并且在该任期的剩余时间内都会保持这种状态。

To bring a follower’s log into consistency with its own, the leader must find the latest log entry where the two logs agree, delete any entries in the follower’s log after that point, and send the follower all of the leader’s entries after that point. All of these actions happen in response to the consistency check performed by AppendEntries RPCs. The leader maintains a nextIndex for each follower, which is the index of the next log entry the leader will send to that follower. When a leader first comes to power, it initializes all nextIndex values to the index just after the last one in its log (11 in Figure 7). If a follower’s log is inconsistent with the leader’s, the AppendEntries consistency check will fail in the next AppendEntries RPC. After a rejection, the leader decrements nextIndex and retries the AppendEntries RPC. Eventually nextIndex will reach a point where the leader and follower logs match. When this happens, AppendEntries will succeed, which removes any conflicting entries in the follower’s log and appends entries from the leader’s log (if any). Once AppendEntries succeeds, the follower’s log is consistent with the leader’s, and it will remain that way for the rest of the term.
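The nextIndex back-off can be simulated end to end with logs reduced to their terms. In this hypothetical Python sketch, `sync_follower` plays both roles in one loop, and each iteration stands for one AppendEntries round trip. The example logs are loosely modeled on Figure 7. This is not the paper's implementation.

```python
from typing import List


def sync_follower(leader: List[int], follower: List[int]) -> int:
    """Repair `follower` in place using nextIndex back-off (a sketch).

    Logs are lists of terms (commands omitted), 1-indexed with a sentinel
    term 0 at slot 0 so that prevLogIndex 0 always matches.
    Returns how many AppendEntries attempts were rejected.
    """
    next_index = len(leader)  # just after the leader's last entry
    rejections = 0
    while True:
        prev_index = next_index - 1
        # Follower side of the consistency check on (prevLogIndex, prevLogTerm).
        if prev_index < len(follower) and follower[prev_index] == leader[prev_index]:
            break
        rejections += 1
        next_index -= 1  # rejected: decrement nextIndex and retry
    # Success: overwrite only conflicting entries, then append what is missing.
    for index in range(next_index, len(leader)):
        if index < len(follower) and follower[index] != leader[index]:
            del follower[index:]  # conflict: drop this entry and all that follow
        if index >= len(follower):
            follower.append(leader[index])
    return rejections
```

Take a leader log with terms 1 1 1 4 4 5 5 6 6 6 and a follower holding 1 1 1 4 4 4 4. Five attempts are rejected before the logs agree at index 5. After that, the follower's term-4 entries at indices 6 and 7 are replaced.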

可以对该协议进行优化,以减少被拒绝的AppendEntries RPC的数量;详见[29]。

The protocol can be optimized to reduce the number of rejected AppendEntries RPCs; see [29] for details.

有了这种机制,领导者上台时无需采取任何特殊行动来恢复日志一致性。它只需开始正常运行,日志就会随着AppendEntries一致性检查的失败而自动收敛。领导者从不覆盖或删除自己日志中的条目(即图3中的Leader Append-Only Property)。

With this mechanism, a leader does not need to take any special actions to restore log consistency when it comes to power. It just begins normal operation, and the logs automatically converge in response to failures of the AppendEntries consistency check. A leader never overwrites or deletes entries in its own log (the Leader Append-Only Property in Figure 3).

这种日志复制机制体现了第2节所描述的理想共识特性:只要大多数服务器正常运行,Raft就可以接受、复制和应用新的日志条目;在正常情况下,一轮RPC就可以将新条目复制到集群的大多数服务器上;并且单个缓慢的跟随者不会影响性能。

This log replication mechanism exhibits the desirable consensus properties described in Section 2: Raft can accept, replicate, and apply new log entries as long as a majority of the servers are up; in the normal case a new entry can be replicated with a single round of RPCs to a majority of the cluster; and a single slow follower will not impact performance.

5.4 安全性【Safety】

前面的部分描述了Raft如何选举领导者和复制日志条目。然而,到目前为止所描述的机制还不足以确保每个状态机以相同的顺序执行完全相同的命令。例如,当领导者提交多个日志条目时,一个追随者可能不可用,那么它可以被选举为领导者,并用新的条目覆盖这些条目;因此,不同的状态机可能执行不同的命令序列。

The previous sections described how Raft elects leaders and replicates log entries. However, the mechanisms described so far are not quite sufficient to ensure that each state machine executes exactly the same commands in the same order. For example, a follower might be unavailable while the leader commits several log entries, then it could be elected leader and overwrite these entries with new ones; as a result, different state machines might execute different command sequences.

本节通过对哪些服务器可以被选为领导者增加一项限制来完善Raft算法。该限制保证任一任期的领导者都包含之前任期中已提交的所有条目(即图3中的Leader Completeness Property)。在有了选举限制之后,我们再使提交规则更加精确。最后,我们给出Leader Completeness Property的证明概要,并说明它如何保证复制状态机的正确行为。

This section completes the Raft algorithm by adding a restriction on which servers may be elected leader. The restriction ensures that the leader for any given term contains all of the entries committed in previous terms (the Leader Completeness Property from Figure 3). Given the election restriction, we then make the rules for commitment more precise. Finally, we present a proof sketch for the Leader Completeness Property and show how it leads to correct behavior of the replicated state machine.

5.4.1 选举限制【Election restriction】

在任何基于领导者的共识算法中,领导者最终都必须存储所有已提交的日志条目。在一些共识算法中,例如Viewstamped Replication [20],即使领导者最初不包含所有已提交的条目,也可以被选为领导者。这些算法包含额外的机制来识别缺失的条目,并在选举过程中或选举后不久将其传送给新的领导者。遗憾的是,这带来了相当多的额外机制和复杂性。Raft使用了一种更简单的方法,它保证每位新领导者从当选那一刻起就拥有之前任期的所有已提交条目,而无需将这些条目传送给领导者。这意味着日志条目只沿一个方向流动,即从领导者流向跟随者,并且领导者从不覆盖自己日志中已有的条目。

In any leader-based consensus algorithm, the leader must eventually store all of the committed log entries. In some consensus algorithms, such as Viewstamped Replication [20], a leader can be elected even if it doesn’t initially contain all of the committed entries. These algorithms contain additional mechanisms to identify the missing entries and transmit them to the new leader, either during the election process or shortly afterwards. Unfortunately, this results in considerable additional mechanism and complexity. Raft uses a simpler approach where it guarantees that all the committed entries from previous terms are present on each new leader from the moment of its election, without the need to transfer those entries to the leader. This means that log entries only flow in one direction, from leaders to followers, and leaders never overwrite existing entries in their logs.

Raft通过投票过程来阻止候选人赢得选举,除非其日志包含所有已提交的条目。候选人必须联系集群中的大多数服务器才能当选,这意味着每个已提交的条目必然至少存在于其中一台服务器上。如果候选人的日志至少与该多数中任何其他日志一样新(“新”的精确定义见下文),那么它就持有所有已提交的条目。RequestVote RPC实现了这一限制:RPC中包含候选人日志的信息,如果投票者自己的日志比候选人的日志更新,则投票者拒绝投票。

Raft uses the voting process to prevent a candidate from winning an election unless its log contains all committed entries. A candidate must contact a majority of the cluster in order to be elected, which means that every committed entry must be present in at least one of those servers. If the candidate’s log is at least as up-to-date as any other log in that majority (where “up-to-date” is defined precisely below), then it will hold all the committed entries. The RequestVote RPC implements this restriction: the RPC includes information about the candidate’s log, and the voter denies its vote if its own log is more up-to-date than that of the candidate.

Raft通过比较两个日志中最后一个条目的索引和任期号来确定哪个日志更新。如果两个日志最后一个条目的任期号不同,那么任期号较大的日志更新。如果两个日志的最后一个条目任期号相同,那么较长的日志更新。

Raft determines which of two logs is more up-to-date by comparing the index and term of the last entries in the logs. If the logs have last entries with different terms, then the log with the later term is more up-to-date. If the logs end with the same term, then whichever log is longer is more up-to-date.
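Here is a sketch of the comparison and of how a voter might apply it to a RequestVote. The function names are hypothetical. Term bookkeeping (stepping down on a higher term, rejecting stale candidates) is deliberately left out so that only the election restriction is shown.

```python
from typing import Optional


def at_least_as_up_to_date(cand_last_term: int, cand_last_index: int,
                           my_last_term: int, my_last_index: int) -> bool:
    """Compare the last entries of two logs as described above."""
    if cand_last_term != my_last_term:
        return cand_last_term > my_last_term  # the later last term wins
    return cand_last_index >= my_last_index   # same term: the longer log wins


def grant_vote(voted_for: Optional[str], candidate_id: str,
               cand_last_term: int, cand_last_index: int,
               my_last_term: int, my_last_index: int) -> bool:
    """Voter side of the election restriction (term handling omitted).

    voted_for is whom this server already voted for in the current term,
    or None; it is assumed to be reset whenever the term advances.
    """
    if voted_for is not None and voted_for != candidate_id:
        return False  # at most one vote per term
    # Deny the vote if the voter's own log is more up-to-date.
    return at_least_as_up_to_date(cand_last_term, cand_last_index,
                                  my_last_term, my_last_index)
```

A shorter log can still win if its last term is later: a candidate ending at (term 3, index 2) beats a voter ending at (term 2, index 9).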

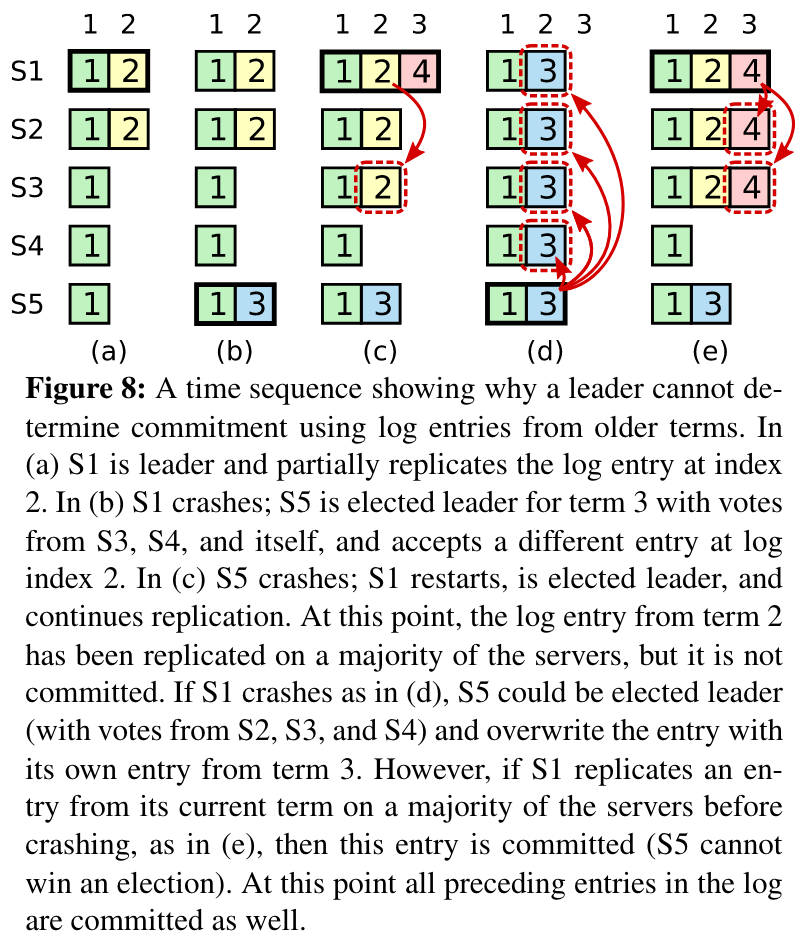

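图8:一个时间序列,说明为什么领导者不能使用旧任期的日志条目来判断提交。在(a)中,S1是领导者,并将索引2处的日志条目部分复制到了其他服务器。在(b)中,S1崩溃;S5凭借S3、S4和自己的选票当选任期3的领导者,并在日志索引2处接受了一个不同的条目。在(c)中,S5崩溃;S1重启并当选领导者,继续进行复制。此时,任期2的日志条目已经复制到了大多数服务器上,但尚未提交。如果S1像(d)中那样崩溃,S5可以当选领导者(获得S2、S3和S4的选票),并用自己任期3的条目覆盖该条目。然而,如果S1在崩溃前将其当前任期的一个条目复制到了大多数服务器上,如(e)所示,那么该条目就已提交(S5无法赢得选举)。此时,日志中所有之前的条目也都已提交。

Figure 8: A time sequence showing why a leader cannot determine commitment using log entries from older terms. In (a) S1 is leader and partially replicates the log entry at index 2. In (b) S1 crashes; S5 is elected leader for term 3 with votes from S3, S4, and itself, and accepts a different entry at log index 2. In (c) S5 crashes; S1 restarts, is elected leader, and continues replication. At this point, the log entry from term 2 has been replicated on a majority of the servers, but it is not committed. If S1 crashes as in (d), S5 could be elected leader (with votes from S2, S3, and S4) and overwrite the entry with its own entry from term 3. However, if S1 replicates an entry from its current term on a majority of the servers before crashing, as in (e), then this entry is committed (S5 cannot win an election). At this point all preceding entries in the log are committed as well.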

5.4.2 提交之前任期的条目【Committing entries from previous terms】

如第5.3节所述,一旦当前任期的某个条目存储在大多数服务器上,领导者就知道该条目已提交。如果领导者在提交某个条目之前崩溃,未来的领导者会尝试完成该条目的复制。然而,对于之前任期的条目,领导者不能在其存储到大多数服务器上后立即断定它已提交。图8展示了这样一种情况:一个旧的日志条目已经存储在大多数服务器上,但仍然可能被未来的领导者覆盖。

As described in Section 5.3, a leader knows that an entry from its current term is committed once that entry is stored on a majority of the servers. If a leader crashes before committing an entry, future leaders will attempt to finish replicating the entry. However, a leader cannot immediately conclude that an entry from a previous term is committed once it is stored on a majority of servers. Figure 8 illustrates a situation where an old log entry is stored on a majority of servers, yet can still be overwritten by a future leader.

为了消除如图8所示的问题,Raft从不通过计数副本来提交之前任期中的日志项。只有来自领导者当前任期的日志条目通过计数副本提交;一旦以这种方式提交了来自当前任期的条目,那么由于日志匹配属性,所有以前的条目都是间接提交的。在某些情况下,领导者可以安全地得出结论,一个较旧的日志条目被提交(例如,如果该条目存储在每个服务器上),但是Raft为了简单起见采取了更保守的方法。

To eliminate problems like the one in Figure 8, Raft never commits log entries from previous terms by counting replicas. Only log entries from the leader’s current term are committed by counting replicas; once an entry from the current term has been committed in this way, then all prior entries are committed indirectly because of the Log Matching Property. There are some situations where a leader could safely conclude that an older log entry is committed (for example, if that entry is stored on every server), but Raft takes a more conservative approach for simplicity.
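The commitment rule above, including the current-term restriction, might look like this on the leader. `advance_commit_index` and its list-based arguments are assumptions for illustration. `match_index` includes the leader's own last index, so the majority is counted over the whole cluster.

```python
from typing import List


def advance_commit_index(log_terms: List[int], match_index: List[int],
                         current_term: int, commit_index: int) -> int:
    """Return the leader's new commit index (a sketch).

    log_terms: terms of the leader's log, 1-indexed with a sentinel 0 at slot 0.
    match_index: for every server in the cluster (the leader included), the
    highest log index known to be stored on that server.
    """
    cluster_size = len(match_index)
    # Try the highest candidate index first, stopping at the current commit index.
    for n in range(len(log_terms) - 1, commit_index, -1):
        replicas = sum(1 for m in match_index if m >= n)
        # Count replicas only for entries from the leader's current term;
        # older entries are then committed indirectly (Log Matching).
        if 2 * replicas > cluster_size and log_terms[n] == current_term:
            return n
    return commit_index
```

Consider log terms [1, 2, 4] and match indices [3, 2, 2, 1, 1], roughly Figure 8(c) with S1 leading in term 4. The term-2 entry at index 2 sits on a majority, but the function still returns 1. Once index 3 reaches S2 and S3, as in Figure 8(e), it returns 3, and index 2 is committed indirectly.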

Raft之所以在提交规则中引入这种额外的复杂性,是因为当领导者复制之前任期的条目时,这些日志条目会保留其原始任期号。在其他共识算法中,如果新的领导者重新复制之前“任期”中的条目,就必须使用新的“任期号”。Raft的方法使得推理日志条目更加容易,因为它们在不同时间、不同日志中都保持相同的任期号。此外,与其他算法相比,Raft中的新领导者需要发送的之前任期的日志条目更少(其他算法必须先发送冗余的日志条目为其重新编号,然后才能提交)。

Raft incurs this extra complexity in the commitment rules because log entries retain their original term numbers when a leader replicates entries from previous terms. In other consensus algorithms, if a new leader re-replicates entries from prior “terms,” it must do so with its new “term number.” Raft’s approach makes it easier to reason about log entries, since they maintain the same term number over time and across logs. In addition, new leaders in Raft send fewer log entries from previous terms than in other algorithms (other algorithms must send redundant log entries to renumber them before they can be committed).

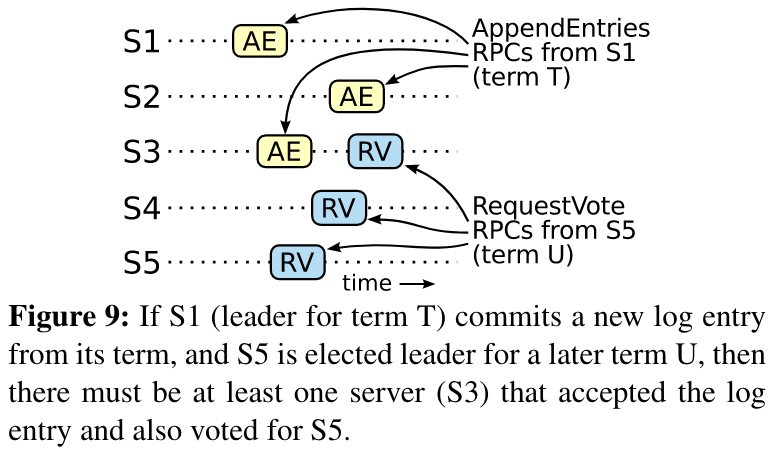

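图9:如果S1(任期T的领导者)提交了其任期内的一个新日志条目,而S5在之后的任期U中当选领导者,那么必然至少有一台服务器(S3)既接受了该日志条目,又投票给了S5。

Figure 9: If S1 (leader for term T) commits a new log entry from its term, and S5 is elected leader for a later term U, then there must be at least one server (S3) that accepted the log entry and also voted for S5.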

5.4.3 安全论证【Safety argument】

在给出完整的Raft算法之后,我们现在可以更精确地论证Leader Completeness Property成立(该论证基于安全性证明;见第8.2节)。我们先假设Leader Completeness Property不成立,然后推出矛盾。假设任期T的领导者(leaderT)提交了其任期内的一个日志条目,但该日志条目没有被某个未来任期的领导者存储。考虑不存储该条目的领导者(leaderU)所在的最小任期U > T。

Given the complete Raft algorithm, we can now argue more precisely that the Leader Completeness Property holds (this argument is based on the safety proof; see Section 8.2). We assume that the Leader Completeness Property does not hold, then we prove a contradiction. Suppose the leader for term T (leaderT) commits a log entry from its term, but that log entry is not stored by the leader of some future term. Consider the smallest term U > T whose leader (leaderU) does not store the entry.

- 在leaderU当选时,其日志中必然不包含该已提交条目(领导者从不删除或覆盖条目)。

- The committed entry must have been absent from leaderU’s log at the time of its election (leaders never delete or overwrite entries).

- leaderT将该条目复制到了集群的大多数服务器上,而leaderU获得了集群中大多数服务器的选票。因此,至少有一台服务器(“投票者”)既接受了来自leaderT的该条目,又投票给了leaderU,如图9所示。投票者是推出矛盾的关键。

- leaderT replicated the entry on a majority of the cluster, and leaderU received votes from a majority of the cluster. Thus, at least one server (“the voter”) both accepted the entry from leaderT and voted for leaderU, as shown in Figure 9. The voter is key to reaching a contradiction.

- 投票者必然在投票给leaderU之前就接受了来自leaderT的已提交条目;否则它会拒绝leaderT的AppendEntries请求(因为它的当前任期会大于T)。

- The voter must have accepted the committed entry from leaderT before voting for leaderU; otherwise it would have rejected the AppendEntries request from leaderT (its current term would have been higher than T).

- 投票者在投票给leaderU时仍然存储着该条目,因为每个中间任期的领导者都包含该条目(根据假设),领导者从不删除条目,而跟随者只有在条目与领导者冲突时才会删除。

- The voter still stored the entry when it voted for leaderU, since every intervening leader contained the entry (by assumption), leaders never remove entries, and followers only remove entries if they conflict with the leader.

- 投票者把选票投给了leaderU,因此leaderU的日志必然至少与投票者的日志一样新。这将导致以下两种矛盾之一。

- The voter granted its vote to leaderU, so leaderU’s log must have been as up-to-date as the voter’s. This leads to one of two contradictions.

- 首先,如果投票者和leaderU最后一个日志条目的任期相同,那么leaderU的日志必然至少和投票者的日志一样长,因此它的日志包含投票者日志中的每个条目。这是一个矛盾,因为投票者包含该已提交条目,而leaderU被假定不包含。

- First, if the voter and leaderU shared the same last log term, then leaderU’s log must have been at least as long as the voter’s, so its log contained every entry in the voter’s log. This is a contradiction, since the voter contained the committed entry and leaderU was assumed not to.

- 否则,leaderU最后一个日志条目的任期必然大于投票者的。而且,它大于T,因为投票者最后一个日志条目的任期至少为T(它包含来自任期T的已提交条目)。创建leaderU最后一个日志条目的那个更早的领导者,其日志中必然包含该已提交条目(根据假设)。于是,根据日志匹配特性,leaderU的日志中也必然包含该已提交条目,这是一个矛盾。

- Otherwise, leaderU’s last log term must have been larger than the voter’s. Moreover, it was larger than T, since the voter’s last log term was at least T (it contains the committed entry from term T). The earlier leader that created leaderU’s last log entry must have contained the committed entry in its log (by assumption). Then, by the Log Matching Property, leaderU’s log must also contain the committed entry, which is a contradiction.

- 矛盾推导到此完成。因此,所有大于T的任期的领导者都必然包含任期T中所有在任期T内提交的条目。

- This completes the contradiction. Thus, the leaders of all terms greater than T must contain all entries from term T that are committed in term T.

- 日志匹配属性保证未来的领导者也会包含间接提交的条目,如图8 ( d )中的索引2。

- The Log Matching Property guarantees that future leaders will also contain entries that are committed indirectly, such as index 2 in Figure 8(d).

给定Leader Completeness Property,很容易证明图3中的State Machine Safety Property,以及所有状态机都以相同的顺序应用相同的日志条目(见[29])。

Given the Leader Completeness Property, it is easy to prove the State Machine Safety Property from Figure 3 and that all state machines apply the same log entries in the same order (see [29]).

5.5 跟随者和候选者崩溃【Follower and candidate crashes】

在此之前,我们一直关注领导者失败。追随者和候选者崩溃比领导者崩溃处理起来要简单得多,并且两者的处理方式相同。如果一个追随者或候选成员崩溃,那么未来发送给它的RequestVote和AppendEntries RPCs将失败。Raft通过无限期地重试来处理这些失败;如果崩溃的服务器重新启动,那么RPC将成功完成。如果一个服务器在完成一个RPC之后但在响应之前崩溃,那么它在重新启动之后会再次收到相同的RPC。Raft RPCs是幂等的,因此这不会造成伤害。例如,如果一个追随者收到一个包含其日志中已经存在的日志条目的AppendEntries请求,那么它会忽略新请求中的那些条目。

Until this point we have focused on leader failures. Follower and candidate crashes are much simpler to handle than leader crashes, and they are both handled in the same way. If a follower or candidate crashes, then future RequestVote and AppendEntries RPCs sent to it will fail. Raft handles these failures by retrying indefinitely; if the crashed server restarts, then the RPC will complete successfully. If a server crashes after completing an RPC but before responding, then it will receive the same RPC again after it restarts. Raft RPCs are idempotent, so this causes no harm. For example, if a follower receives an AppendEntries request that includes log entries already present in its log, it ignores those entries in the new request.

5.6 时序与可用性【Timing and availability】

我们对Raft的要求之一是安全性不能依赖于时序:系统不能仅仅因为某些事件发生得比预期更快或更慢就产生错误的结果。然而,可用性(系统及时响应客户端的能力)必然依赖于时序。例如,如果消息交换所需的时间比服务器崩溃之间的典型间隔还长,候选人就无法存活足够长的时间来赢得选举;没有稳定的领导者,Raft就无法取得进展。

One of our requirements for Raft is that safety must not depend on timing: the system must not produce incorrect results just because some event happens more quickly or slowly than expected. However, availability (the ability of the system to respond to clients in a timely manner) must inevitably depend on timing. For example, if message exchanges take longer than the typical time between server crashes, candidates will not stay up long enough to win an election; without a steady leader, Raft cannot make progress.

领导者选举是Raft中对时序要求最关键的环节。只要系统满足以下时序要求,Raft就能够选举并维持一个稳定的领导者:

Leader election is the aspect of Raft where timing is most critical. Raft will be able to elect and maintain a steady leader as long as the system satisfies the following timing requirement:

broadcastTime << electionTimeout << MTBF

在这个不等式中,broadcastTime是服务器并行地向集群中每台服务器发送RPC并收到它们响应所需的平均时间;electionTimeout是第5.2节中描述的选举超时;MTBF是单台服务器的平均故障间隔时间。广播时间应该比选举超时小一个数量级,这样领导者才能可靠地发送心跳消息,防止跟随者发起选举;考虑到选举超时采用的随机化方法,这一不等式也使得选票瓜分不太可能发生。选举超时应该比MTBF小几个数量级,这样系统才能稳定地取得进展。当领导者崩溃时,系统大约会在一个选举超时的时间内不可用;我们希望这只占总时间的很小一部分。

In this inequality broadcastTime is the average time it takes a server to send RPCs in parallel to every server in the cluster and receive their responses; electionTimeout is the election timeout described in Section 5.2; and MTBF is the average time between failures for a single server. The broadcast time should be an order of magnitude less than the election timeout so that leaders can reliably send the heartbeat messages required to keep followers from starting elections; given the randomized approach used for election timeouts, this inequality also makes split votes unlikely. The election timeout should be a few orders of magnitude less than MTBF so that the system makes steady progress. When the leader crashes, the system will be unavailable for roughly the election timeout; we would like this to represent only a small fraction of overall time.

广播时间和MTBF是底层系统的属性,而选举超时是我们必须选择的。Raft的RPC通常要求接收者将信息持久化到稳定存储中,因此根据存储技术的不同,广播时间可能在0.5ms到20ms之间。因此,选举超时很可能在10ms到500ms之间。典型服务器的MTBF为几个月或更长,很容易满足时序要求。

The broadcast time and MTBF are properties of the underlying system, while the election timeout is something we must choose. Raft’s RPCs typically require the recipient to persist information to stable storage, so the broadcast time may range from 0.5ms to 20ms, depending on storage technology. As a result, the election timeout is likely to be somewhere between 10ms and 500ms. Typical server MTBFs are several months or more, which easily satisfies the timing requirement.
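To make the inequality concrete, here is a rough check in Python. Several values are assumptions for illustration, not values prescribed by Raft: reading "an order of magnitude" as a factor of 10, reading "a few orders of magnitude" as a factor of 1000, and drawing timeouts uniformly from [min, 2*min].

```python
import random

# Assumed reading of the inequality; these factors are illustrative only.
ORDER_OF_MAGNITUDE = 10
FEW_ORDERS = 1_000


def satisfies_timing(broadcast_ms: float, election_timeout_ms: float,
                     mtbf_ms: float) -> bool:
    """Check broadcastTime << electionTimeout << MTBF using the factors above."""
    return (broadcast_ms * ORDER_OF_MAGNITUDE <= election_timeout_ms
            and election_timeout_ms * FEW_ORDERS <= mtbf_ms)


def random_election_timeout(min_ms: float, rng: random.Random) -> float:
    """Hypothetical policy: draw a timeout uniformly from [min_ms, 2 * min_ms]."""
    return rng.uniform(min_ms, 2 * min_ms)


def unavailable_fraction(election_timeout_ms: float, mtbf_ms: float) -> float:
    """Rough share of time lost if each leader failure costs one election timeout."""
    return election_timeout_ms / mtbf_ms
```

At the slow end of the broadcast range (20ms), these factors require an election timeout of at least 200ms, which falls within the 10ms to 500ms range above. With a three-month MTBF, a 500ms outage per failure is well under a millionth of the time.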

6 集群成员关系变化【Cluster membership changes】

到目前为止,我们假设集群配置(参与共识算法的服务器集合)是固定的。在实际应用中,偶尔会需要更改配置,例如在服务器出现故障时进行替换或者改变复制程度。虽然这可以通过将整个集群离线,更新配置文件,然后重新启动集群来完成,但这会使集群在切换期间不可用。此外,如果有任何手动步骤,都有操作者错误的风险。为了避免这些问题,我们决定自动化配置更改并将其合并到Raft共识算法中。

Up until now we have assumed that the cluster configuration (the set of servers participating in the consensus algorithm) is fixed. In practice, it will occasionally be necessary to change the configuration, for example to replace servers when they fail or to change the degree of replication. Although this can be done by taking the entire cluster off-line, updating configuration files, and then restarting the cluster, this would leave the cluster unavailable during the changeover. In addition, if there are any manual steps, they risk operator error. In order to avoid these issues, we decided to automate configuration changes and incorporate them into the Raft consensus algorithm.

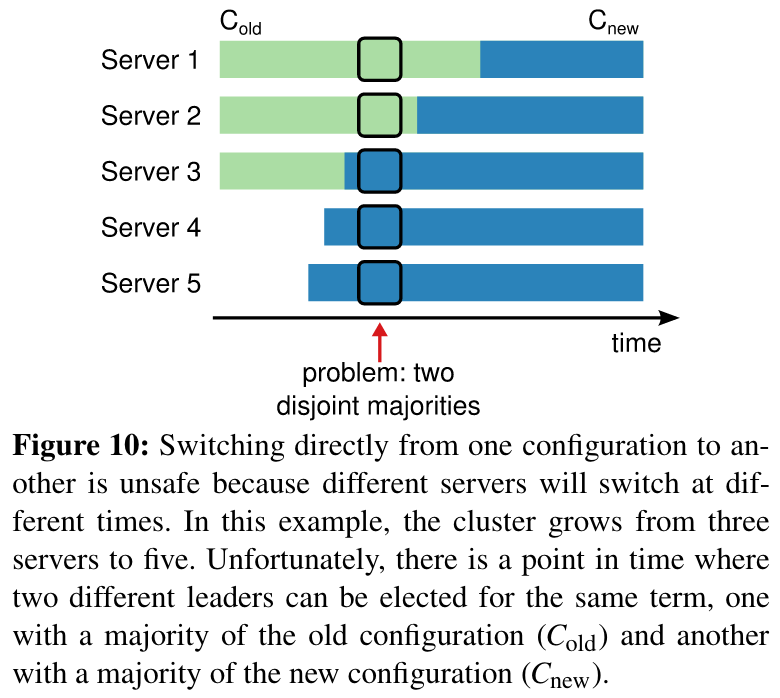

为了使配置变更机制安全,在变更过程中的任何时刻,都不能出现同一任期内选出两个领导者的可能。遗憾的是,任何让服务器直接从旧配置切换到新配置的方法都是不安全的。不可能一次性原子地切换所有服务器,因此在过渡期间,集群可能会分裂成两个独立的多数(见图10)。

For the configuration change mechanism to be safe, there must be no point during the transition where it is possible for two leaders to be elected for the same term. Unfortunately, any approach where servers switch directly from the old configuration to the new configuration is unsafe. It isn’t possible to atomically switch all of the servers at once, so the cluster can potentially split into two independent majorities during the transition (see Figure 10).

为了保证安全性,配置变更必须采用两阶段方法。实现这两个阶段的方式有多种。例如,一些系统(例如[20])在第一阶段禁用旧配置,使其无法处理客户端请求;然后在第二阶段启用新配置。在Raft中,集群首先切换到一个过渡配置,我们称之为联合共识(joint consensus);一旦联合共识被提交,系统再过渡到新配置。联合共识结合了旧配置和新配置:

In order to ensure safety, configuration changes must use a two-phase approach. There are a variety of ways to implement the two phases. For example, some systems (e.g., [20]) use the first phase to disable the old configuration so it cannot process client requests; then the second phase enables the new configuration. In Raft the cluster first switches to a transitional configuration we call joint consensus; once the joint consensus has been committed, the system then transitions to the new configuration. The joint consensus combines both the old and new configurations:

- 日志条目被复制到两种配置中的所有服务器上。

- Log entries are replicated to all servers in both configurations.

- 来自任一配置的任何服务器都可以担任领导者。

- Any server from either configuration may serve as leader.

- 达成一致(用于选举和条目提交)需要分别获得旧配置和新配置各自的多数。

- Agreement (for elections and entry commitment) requires separate majorities from both the old and new configurations.
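The joint-consensus agreement rule reduces to two independent majority checks. Below is a minimal Python sketch with hypothetical function names, using the three-to-five server change from Figure 10 as the example.

```python
from typing import AbstractSet


def has_majority(votes: AbstractSet[str], members: AbstractSet[str]) -> bool:
    """True if more than half of `members` are among `votes`."""
    return 2 * len(votes & members) > len(members)


def joint_agreement(votes: AbstractSet[str], c_old: AbstractSet[str],
                    c_new: AbstractSet[str]) -> bool:
    """Agreement under Cold,new needs separate majorities of both configurations."""
    return has_majority(votes, c_old) and has_majority(votes, c_new)
```

Votes from S3, S4, and S5 form a majority of Cnew but not of Cold. Under Cold,new they therefore decide nothing, which is exactly the unilateral decision the joint configuration rules out.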

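图10:直接从一种配置切换到另一种配置是不安全的,因为不同的服务器会在不同的时间切换。在这个例子中,集群从三台服务器增加到五台服务器。遗憾的是,存在一个时间点,两个不同的领导者可以在同一任期内当选,一个获得旧配置(Cold)的多数,另一个获得新配置(Cnew)的多数。

Figure 10: Switching directly from one configuration to another is unsafe because different servers will switch at different times. In this example, the cluster grows from three servers to five. Unfortunately, there is a point in time where two different leaders can be elected for the same term, one with a majority of the old configuration (Cold) and another with a majority of the new configuration (Cnew).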

联合共识允许单个服务器在不同时间在配置之间进行转换,而不会损害安全性。此外,联合共识允许集群在整个配置更改期间继续服务客户端请求。

The joint consensus allows individual servers to transition between configurations at different times without compromising safety. Furthermore, joint consensus allows the cluster to continue servicing client requests throughout the configuration change.

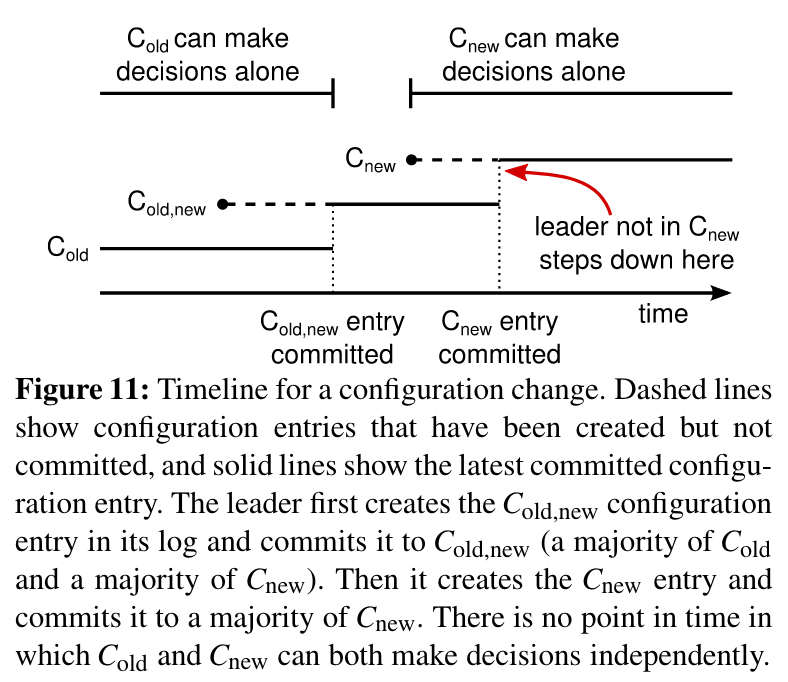

集群配置通过复制日志中的特殊条目来存储和传递;图11展示了配置变更过程。当领导者收到将配置从Cold更改为Cnew的请求时,它将联合共识的配置(图中的Cold,new)作为一个日志条目存储,并使用前面描述的机制复制该条目。一旦某台服务器将新的配置条目添加到其日志中,它就会在之后的所有决策中使用该配置(服务器总是使用其日志中最新的配置,而不管该条目是否已提交)。这意味着领导者将使用Cold,new的规则来判断Cold,new这个日志条目何时被提交。如果领导者崩溃,新的领导者可能在Cold或Cold,new下选出,这取决于获胜的候选人是否已收到Cold,new。无论哪种情况,Cnew在此期间都无法单方面做出决策。

Cluster configurations are stored and communicated using special entries in the replicated log; Figure 11 illustrates the configuration change process. When the leader receives a request to change the configuration from Cold to Cnew, it stores the configuration for joint consensus (Cold,new in the figure) as a log entry and replicates that entry using the mechanisms described previously. Once a given server adds the new configuration entry to its log, it uses that configuration for all future decisions (a server always uses the latest configuration in its log, regardless of whether the entry is committed). This means that the leader will use the rules of Cold,new to determine when the log entry for Cold,new is committed. If the leader crashes, a new leader may be chosen under either Cold or Cold,new, depending on whether the winning candidate has received Cold,new. In any case, Cnew cannot make unilateral decisions during this period.
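A server acts on the newest configuration in its log, committed or not, and this rule can be sketched as a backwards scan. The `Entry` layout is an assumption made for this example. So is the tuple encoding of a configuration: one member set for a plain configuration, two for Cold,new.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple

# Hypothetical encoding: (Cnew,) for a plain configuration, (Cold, Cnew) for joint.
Config = Tuple[FrozenSet[str], ...]


@dataclass(frozen=True)
class Entry:
    term: int
    command: str = ""
    config: Optional[Config] = None  # set only on configuration entries


def latest_config(log: List[Entry], initial: Config) -> Config:
    """Return the configuration a server acts on right now.

    The newest configuration entry in the log wins, whether or not it has
    been committed; `initial` is used if the log holds no such entry.
    """
    for entry in reversed(log):
        if entry.config is not None:
            return entry.config
    return initial
```

Because the joint configuration takes effect as soon as it is appended, the leader judges the commitment of the Cold,new entry itself under Cold,new's rules.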

一旦Cold,new已经提交,Cold和Cnew都不能在未经对方批准的情况下做出决策,并且Leader Completeness Property保证只有具有Cold,new日志项的服务器才能被选为leader。现在,领导者创建一个描述Cnew的日志条目并将其复制到集群是安全的。再次,这种配置一经看到就会对每个服务器生效。当新配置在Cnew规则下提交时,旧配置变得无关紧要,不在新配置中的服务器可以关闭。如图11所示,不存在Cold和Cnew都可以进行单边决策的时刻;这保证了安全性。【脑裂问题】

Once Cold,new has been committed, neither Cold nor Cnew can make decisions without approval of the other, and the Leader Completeness Property ensures that only servers with the Cold,new log entry can be elected as leader. It is now safe for the leader to create a log entry describing Cnew and replicate it to the cluster. Again, this configuration will take effect on each server as soon as it is seen. When the new configuration has been committed under the rules of Cnew, the old configuration is irrelevant and servers not in the new configuration can be shut down. As shown in Figure 11, there is no time when Cold and Cnew can both make unilateral decisions; this guarantees safety.

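图11:配置变更的时间线。虚线表示已创建但未提交的配置条目,实线表示最新的已提交配置条目。领导者首先在其日志中创建Cold,new配置条目,并将其提交到Cold,new(Cold的多数和Cnew的多数)。然后它创建Cnew条目,并将其提交到Cnew的多数。不存在Cold和Cnew都能独立做出决策的时间点。

Figure 11: Timeline for a configuration change. Dashed lines show configuration entries that have been created but not committed, and solid lines show the latest committed configuration entry. The leader first creates the Cold,new configuration entry in its log and commits it to Cold,new (a majority of Cold and a majority of Cnew). Then it creates the Cnew entry and commits it to a majority of Cnew. There is no point in time in which Cold and Cnew can both make decisions independently.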

重新配置还有三个问题需要解决。第一个问题是,新服务器最初可能没有存储任何日志条目。如果在这种状态下将它们加入集群,它们可能需要相当长的时间才能追上进度,在此期间可能无法提交新的日志条目。为了避免出现可用性空窗,Raft在配置变更之前引入了一个额外的阶段,新服务器在该阶段以无投票权成员的身份加入集群(领导者会向它们复制日志条目,但在计算多数时不考虑它们)。一旦新服务器追上了集群中的其他服务器,就可以按上述方式进行重新配置。

There are three more issues to address for reconfiguration. The first issue is that new servers may not initially store any log entries. If they are added to the cluster in this state, it could take quite a while for them to catch up, during which time it might not be possible to commit new log entries. In order to avoid availability gaps, Raft introduces an additional phase before the configuration change, in which the new servers join the cluster as non-voting members (the leader replicates log entries to them, but they are not considered for majorities). Once the new servers have caught up with the rest of the cluster, the reconfiguration can proceed as described above.

第二个问题是,集群领导者可能不属于新配置。在这种情况下,领导者一旦提交了Cnew日志条目就会退位(回到跟随者状态)。这意味着会有一段时间(在其提交Cnew期间),领导者在管理一个不包含自己的集群;它复制日志条目,但在计算多数时不把自己算在内。领导者的交接发生在Cnew提交时,因为这是新配置能够独立运行的第一个时间点(此后总是可以从Cnew中选出领导者)。在此之前,可能只有Cold中的服务器才能当选领导者。

The second issue is that the cluster leader may not be part of the new configuration. In this case, the leader steps down (returns to follower state) once it has committed the Cnew log entry. This means that there will be a period of time (while it is committing Cnew) when the leader is managing a cluster that does not include itself; it replicates log entries but does not count itself in majorities. The leader transition occurs when Cnew is committed because this is the first point when the new configuration can operate independently (it will always be possible to choose a leader from Cnew). Before this point, it may be the case that only a server from Cold can be elected leader.
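Both issues come down to which servers count toward a majority. In this hypothetical Python sketch, the leader tracks replication progress for every server it sends entries to, but computes the majority only over `voters`. Catching-up non-voting members, and a leader outside Cnew, therefore receive entries without being counted. The current-term restriction from Section 5.4.2 is omitted here.

```python
from typing import Dict, Set


def replicated_on_majority(match_index: Dict[str, int], voters: Set[str]) -> int:
    """Highest log index stored on a majority of the voting members (a sketch).

    match_index maps every server the leader replicates to (voters and
    non-voting members alike) to the highest index known stored there.
    Non-voters are simply absent from `voters`, as is a leader that is
    not part of Cnew.
    """
    ranked = sorted((match_index[s] for s in voters), reverse=True)
    # The value at position len // 2 is held by at least len // 2 + 1 voters,
    # which is a majority of the voting members.
    return ranked[len(ranked) // 2]
```

In the example, S4 and S5 are still catching up at index 0. They do not hold back the voting majority {S1, S2, S3}. If S1 leads but is absent from Cnew = {S2, S3, S4}, its own index 10 is not counted.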

第三个问题是,被移除的服务器(不在Cnew中的服务器)可能会扰乱集群。这些服务器不会收到心跳,因此它们会超时并发起新的选举。然后它们会发送带有新任期号的RequestVote RPC,这将导致当前领导者回到跟随者状态。最终会选出新的领导者,但被移除的服务器会再次超时,这个过程不断重复,导致可用性变差。

The third issue is that removed servers (those not in Cnew) can disrupt the cluster. These servers will not receive heartbeats, so they will time out and start new elections. They will then send RequestVote RPCs with new term numbers, and this will cause the current leader to revert to follower state. A new leader will eventually be elected, but the removed servers will time out again and the process will repeat, resulting in poor availability.

为了防止这个问题,当服务器认为当前领导者存在时,会忽略RequestVote RPC。具体来说,如果服务器在最近一次收到当前领导者消息后的最小选举超时时间内收到RequestVote RPC,它既不会更新自己的任期,也不会投出选票。这不影响正常的选举,因为在正常选举中,每台服务器在发起选举前至少会等待一个最小选举超时。然而,它有助于避免被移除的服务器造成的干扰:如果领导者能够将心跳发送到它的集群,那么它就不会因为更大的任期号而被罢免。

To prevent this problem, servers disregard RequestVote RPCs when they believe a current leader exists. Specifically, if a server receives a RequestVote RPC within the minimum election timeout of hearing from a current leader, it does not update its term or grant its vote. This does not affect normal elections, where each server waits at least a minimum election timeout before starting an election. However, it helps avoid disruptions from removed servers: if a leader is able to get heartbeats to its cluster, then it will not be deposed by larger term numbers.
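Here is a sketch of the guard described above. The clock is passed in explicitly so the logic is easy to test. `VoteGuard` and its method names are hypothetical. A real server would read a monotonic clock and run this check before any term update or vote logic.

```python
from dataclasses import dataclass


@dataclass
class VoteGuard:
    # Hypothetical helper; all times are milliseconds on a monotonic clock.
    min_election_timeout_ms: float
    last_heard_from_leader_ms: float = float("-inf")

    def on_leader_message(self, now_ms: float) -> None:
        """Record an AppendEntries (including a heartbeat) from the current leader."""
        self.last_heard_from_leader_ms = now_ms

    def should_consider_request_vote(self, now_ms: float) -> bool:
        """Return False while a current leader is believed to exist.

        Within the minimum election timeout of hearing from the leader, the
        RequestVote is ignored entirely: no term update and no vote.
        """
        elapsed = now_ms - self.last_heard_from_leader_ms
        return elapsed >= self.min_election_timeout_ms
```

Normal elections are unaffected because a follower only starts an election after at least the minimum timeout has passed without hearing from a leader. By then, the other servers that missed the same heartbeats will also consider the request.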

7 客户端和日志压缩【Clients and log compaction】

本节由于篇幅限制有所省略,但材料可在本文的扩展版本中获得[ 29 ]。它描述了客户端如何与Raft交互,包括客户端如何找到集群领导者以及Raft如何支持可线性化的语义[ 8 ]。扩展版本还描述了如何使用快照方法回收复制日志中的空间。这些问题适用于所有基于共识的系统,Raft的解决方案与其他系统类似。

This section has been omitted due to space limitations, but the material is available in the extended version of this paper [29]. It describes how clients interact with Raft, including how clients find the cluster leader and how Raft supports linearizable semantics [8]. The extended version also describes how space in the replicated log can be reclaimed using a snapshotting approach. These issues apply to all consensus-based systems, and Raft’s solutions are similar to other systems.

8 实现与评估【Implementation and evaluation】

我们将Raft实现为一个复制状态机的一部分,该状态机存储RAMCloud [30]的配置信息,并协助RAMCloud协调器进行故障转移。该Raft实现包含大约2000行C++代码,不包括测试、注释和空行。源代码可免费获取[21]。基于本文的草稿,还有大约25个处于不同开发阶段的独立第三方开源Raft实现[31]。此外,多家公司正在部署基于Raft的系统[31]。

We have implemented Raft as part of a replicated state machine that stores configuration information for RAMCloud [30] and assists in failover of the RAMCloud coordinator. The Raft implementation contains roughly 2000 lines of C++ code, not including tests, comments, or blank lines. The source code is freely available [21]. There are also about 25 independent third-party open source implementations [31] of Raft in various stages of development, based on drafts of this paper. Also, various companies are deploying Raft-based systems [31].

本节余下部分将使用可理解性、正确性和性能三个标准来评估Raft。

The remainder of this section evaluates Raft using three criteria: understandability, correctness, and performance.

8.1 可理解性【Understandability】

为了衡量Raft相对于Paxos的可理解性,我们以斯坦福大学高级操作系统课程和加州大学伯克利分校分布式计算课程的高年级本科生和研究生为对象,进行了一项实验研究。我们分别录制了Raft和Paxos的视频讲座,并制作了相应的测验。Raft讲座涵盖了本文的内容;Paxos讲座涵盖了足以构建一个等价复制状态机的材料,包括单决议(single-decree)Paxos、多决议(multi-decree)Paxos、重新配置,以及实践中所需的一些优化(例如领导者选举)。测验考查学生对算法的基本理解,并要求学生对边界情况进行推理。每个学生先观看一个视频并参加相应的测验,再观看第二个视频并参加第二个测验。大约一半的被试先完成Paxos部分,另一半先完成Raft部分,以便同时考虑个体表现的差异和从研究第一部分中获得的经验。我们比较了被试在两次测验中的得分,以判断被试是否对Raft有更好的理解。

To measure Raft’s understandability relative to Paxos, we conducted an experimental study using upper-level undergraduate and graduate students in an Advanced Operating Systems course at Stanford University and a Distributed Computing course at U.C. Berkeley. We recorded a video lecture of Raft and another of Paxos, and created corresponding quizzes. The Raft lecture covered the content of this paper; the Paxos lecture covered enough material to create an equivalent replicated state machine, including single-decree Paxos, multi-decree Paxos, reconfiguration, and a few optimizations needed in practice (such as leader election). The quizzes tested basic understanding of the algorithms and also required students to reason about corner cases. Each student watched one video, took the corresponding quiz, watched the second video, and took the second quiz. About half of the participants did the Paxos portion first and the other half did the Raft portion first in order to account for both individual differences in performance and experience gained from the first portion of the study. We compared participants’ scores on each quiz to determine whether participants showed a better understanding of Raft.

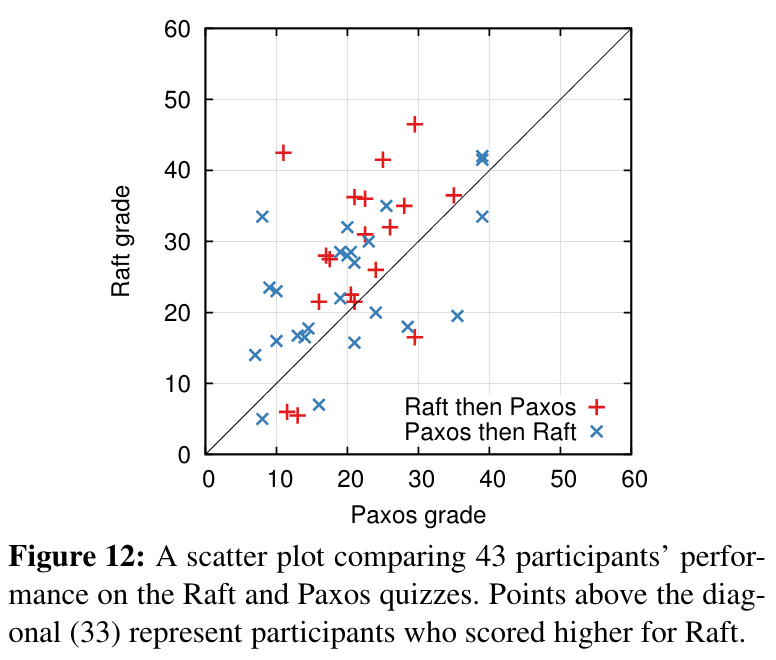

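图12:比较43名参与者在Raft和Paxos测验上表现的散点图。对角线上方的点(33个)代表Raft得分更高的参与者。

Figure 12: A scatter plot comparing 43 participants’ performance on the Raft and Paxos quizzes. Points above the diagonal (33) represent participants who scored higher for Raft.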

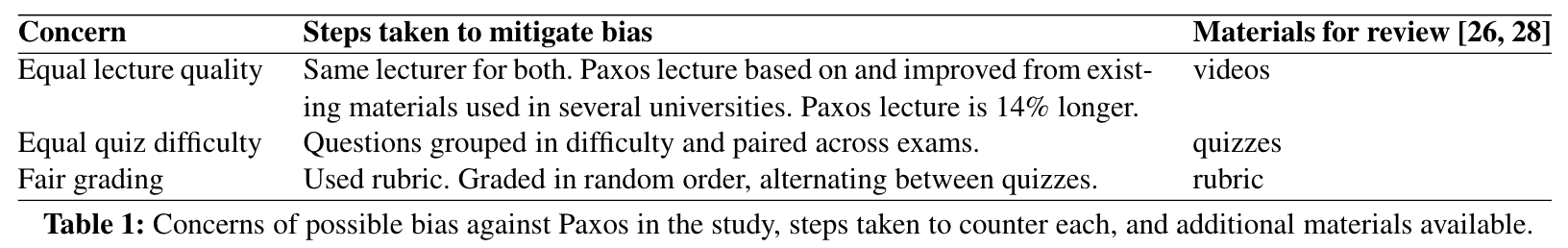

我们尽可能使Paxos与Raft的比较公平。实验在两个方面对Paxos有利:43名参与者中有15人报告之前对Paxos有一定经验,并且Paxos视频比Raft视频长14%。如表1所总结,我们已采取措施来减轻潜在的偏倚来源。我们的所有材料均可供审阅[26, 28]。

We tried to make the comparison between Paxos and Raft as fair as possible. The experiment favored Paxos in two ways: 15 of the 43 participants reported having some prior experience with Paxos, and the Paxos video is 14% longer than the Raft video. As summarized in Table 1, we have taken steps to mitigate potential sources of bias. All of our materials are available for review [26, 28].

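表1:研究中可能存在的对Paxos不利的偏倚、针对每一项所采取的应对措施,以及可获取的额外材料。

Table 1: Concerns of possible bias against Paxos in the study, steps taken to counter each, and additional materials available.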

平均而言,参与者在Raft测验上的得分比在Paxos测验上高4.9分(满分60分,Raft测验平均分为25.7分,Paxos测验平均分为20.8分);图12显示了他们的个人得分。配对t检验表明,在95%的置信度下,Raft分数真实分布的均值至少比Paxos分数真实分布的均值高2.5分。

On average, participants scored 4.9 points higher on the Raft quiz than on the Paxos quiz (out of a possible 60 points, the mean Raft score was 25.7 and the mean Paxos score was 20.8); Figure 12 shows their individual scores. A paired t-test states that, with 95% confidence, the true distribution of Raft scores has a mean at least 2.5 points larger than the true distribution of Paxos scores.
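The paired t-test statement can be made concrete as a one-sided lower confidence bound on the mean per-participant difference (Raft score minus Paxos score): mean − t·s/√n. The sketch below is illustrative only. The toy scores are hypothetical and are not the study's data. The caller must supply the one-sided critical value of Student's t for n − 1 degrees of freedom, because Python's standard library has no t-distribution. That value is about 1.68 at 95% for 43 participants, and 2.132 for the five toy pairs used here.

```python
import math
import statistics
from typing import Sequence


def paired_lower_bound(raft: Sequence[float], paxos: Sequence[float], t_crit: float) -> float:
    """One-sided lower confidence bound on the mean of per-participant (raft - paxos) differences."""
    if len(raft) != len(paxos) or len(raft) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [r - p for r, p in zip(raft, paxos)]
    mean_diff = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))  # sample std dev / sqrt(n)
    return mean_diff - t_crit * std_err


# Hypothetical toy scores for five participants (NOT the study's data).
toy_raft = [6, 7, 8, 9, 10]
toy_paxos = [5, 5, 5, 5, 5]
lower = paired_lower_bound(toy_raft, toy_paxos, t_crit=2.132)  # t(0.95, df=4)
print(f"mean difference >= {lower:.2f} with ~95% confidence")
```

Pairing matters here. Each participant took both quizzes, so the test works on per-person differences rather than on the two score columns independently.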

我们还建立了一个线性回归模型,根据三个因素预测新学生的测验成绩:所参加的测验、先前的Paxos经验程度,以及学习两种算法的顺序。模型预测,测验的选择本身会带来12.5分有利于Raft的差异。这明显高于观察到的4.9分差异,因为许多实际参与的学生先前有Paxos经验,这对Paxos成绩帮助很大,而对Raft的帮助略小。奇怪的是,模型还预测,已经参加过Paxos测验的人在Raft测验上的得分会低6.3分;虽然我们不知道原因,但这一结果似乎确实具有统计学意义。

We also created a linear regression model that predicts a new student’s quiz scores based on three factors: which quiz they took, their degree of prior Paxos experience, and the order in which they learned the algorithms. The model predicts that the choice of quiz produces a 12.5-point difference in favor of Raft. This is significantly higher than the observed difference of 4.9 points, because many of the actual students had prior Paxos experience, which helped Paxos considerably, whereas it helped Raft slightly less. Curiously, the model also predicts scores 6.3 points lower on Raft for people that have already taken the Paxos quiz; although we don’t know why, this does appear to be statistically significant.

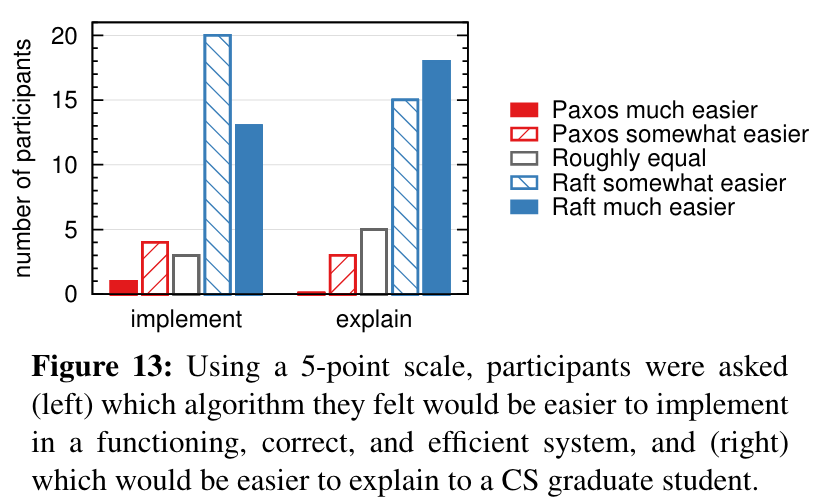

我们还在测验后对参与者进行了调查,了解他们认为哪种算法更容易实现或解释;结果如图13所示。绝大多数参与者认为Raft更容易实现和解释(两个问题均为41人中的33人)。然而,这些自我报告的感受可能不如参与者的测验成绩可靠,而且参与者可能因知道我们的假设(Raft更容易理解)而产生偏向。

We also surveyed participants after their quizzes to see which algorithm they felt would be easier to implement or explain; these results are shown in Figure 13. An overwhelming majority of participants reported Raft would be easier to implement and explain (33 of 41 for each question). However, these self-reported feelings may be less reliable than participants’ quiz scores, and participants may have been biased by knowledge of our hypothesis that Raft is easier to understand.

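图13:采用5点量表,询问参与者(左)他们认为在一个可运行、正确且高效的系统中哪种算法更容易实现,以及(右)哪种算法更容易向计算机科学研究生解释。

Figure 13: Using a 5-point scale, participants were asked (left) which algorithm they felt would be easier to implement in a functioning, correct, and efficient system, and (right) which would be easier to explain to a CS graduate student.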

关于Raft用户研究的详细讨论见[28]。

A detailed discussion of the Raft user study is available at [28].

8.2 正确性【Correctness】

我们为第5节所述的共识机制编写了形式化规约和安全性证明。形式化规约[28]使用TLA+规约语言[15],将图2中总结的信息完全精确化。它长约400行,是证明的对象。对于任何实现Raft的人来说,它本身也很有用。我们已经使用TLA证明系统[6]对日志完整性属性(Log Completeness Property)进行了机械化证明。然而,该证明依赖于一些尚未经过机械检查的不变量(例如,我们还没有证明该规约的类型安全性)。此外,我们还为状态机安全属性(State Machine Safety)写了一份非形式化证明[28],它是完整的(仅依赖于规约),并且相对精确(长约3500词)。

We have developed a formal specification and a proof of safety for the consensus mechanism described in Section 5. The formal specification [28] makes the information summarized in Figure 2 completely precise using the TLA+ specification language [15]. It is about 400 lines long and serves as the subject of the proof. It is also useful on its own for anyone implementing Raft. We have mechanically proven the Log Completeness Property using the TLA proof system [6]. However, this proof relies on invariants that have not been mechanically checked (for example, we have not proven the type safety of the specification). Furthermore, we have written an informal proof [28] of the State Machine Safety property which is complete (it relies on the specification alone) and relatively precise (it is about 3500 words long).

8.3 性能【Performance】

Raft的性能与Paxos等其他共识算法相近。对性能而言,最重要的情况是已确立的领导者复制新日志条目。Raft用最少的消息数量完成这一过程(从领导者到半数集群成员的一次往返)。Raft的性能还可以进一步提升,例如,它很容易支持批处理和流水线化请求,以获得更高的吞吐量和更低的延迟。文献中已经为其他算法提出了各种优化,其中许多也可以应用于Raft,但我们将其留作未来的工作。

Raft’s performance is similar to other consensus algorithms such as Paxos. The most important case for performance is when an established leader is replicating new log entries. Raft achieves this using the minimal number of messages (a single round-trip from the leader to half the cluster). It is also possible to further improve Raft’s performance. For example, it easily supports batching and pipelining requests for higher throughput and lower latency. Various optimizations have been proposed in the literature for other algorithms; many of these could be applied to Raft, but we leave this to future work.
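The "single round-trip from the leader to half the cluster" can be sketched as follows. The sketch assumes that a leader tracks, for each follower, the highest log index known to be replicated there (the `matchIndex` of the paper's Figure 2). Both function names are hypothetical. The sketch omits Raft's additional rule that a leader only commits entries from its current term by counting replicas.

```python
from typing import Sequence


def followers_needed(cluster_size: int) -> int:
    """Acks the leader needs from followers: a majority is cluster_size // 2 + 1
    servers, and the leader already counts itself."""
    return cluster_size // 2


def majority_replicated_index(leader_last_index: int, follower_match: Sequence[int]) -> int:
    """Highest log index stored on a majority of servers (leader included).

    follower_match[i] is the highest index known to be replicated on follower i.
    """
    indices = sorted([leader_last_index, *follower_match], reverse=True)
    majority = len(indices) // 2 + 1
    return indices[majority - 1]  # the majority-th largest value


# Five servers: the leader needs replies from just two followers.
print(followers_needed(5))
print(majority_replicated_index(10, [10, 8, 3, 2]))
```

Batching fits this picture directly. One AppendEntries round trip can carry many entries, so a single majority acknowledgment can advance the replicated index by more than one.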

我们使用我们的Raft实现来衡量Raft的领导人选举算法的表现,并回答两个问题。首先,选举过程是否快速收敛?第二,领导者崩溃后能够达到的最小停机时间是多少?

We used our Raft implementation to measure the performance of Raft’s leader election algorithm and answer two questions. First, does the election process converge quickly? Second, what is the minimum downtime that can be achieved after leader crashes?

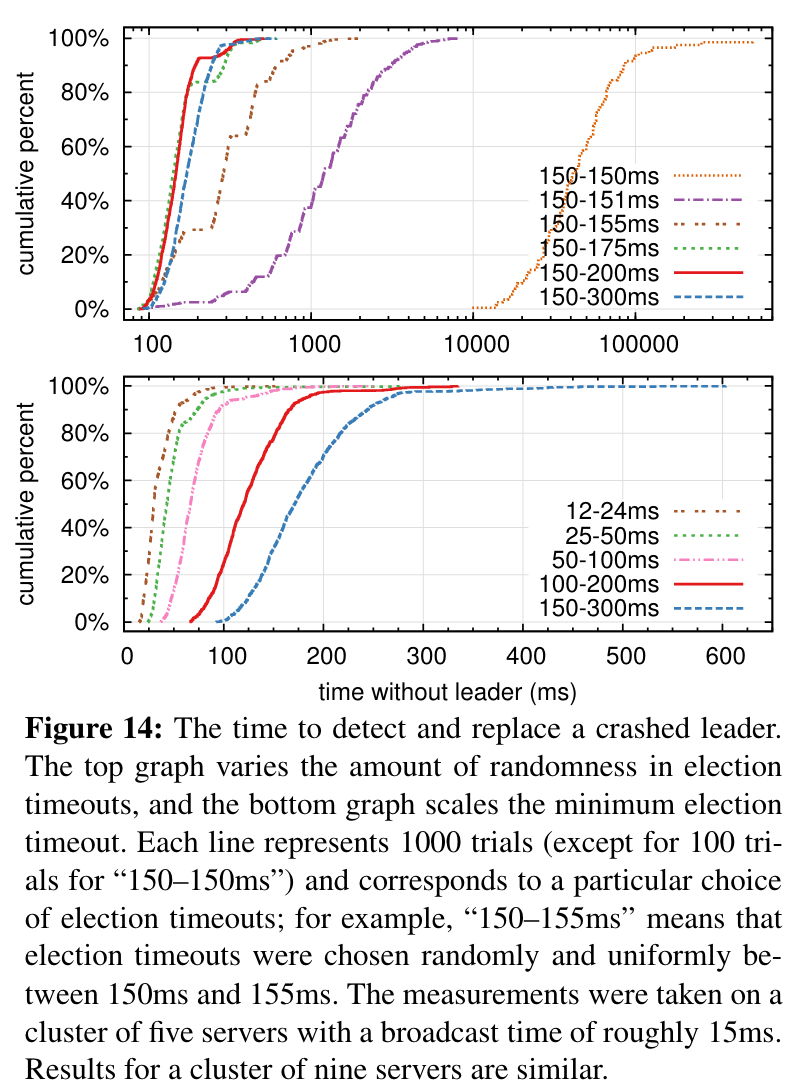

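图14:检测并替换崩溃领导者所需的时间。上图改变选举超时的随机程度,下图调整最小选举超时。每条线代表1000次试验(“150–150ms”为100次试验),对应一种特定的选举超时设置;例如,“150–155ms”表示选举超时在150ms到155ms之间均匀随机选取。测量在由5台服务器组成、广播时间约为15ms的集群上进行。9台服务器集群的结果与此类似。

Figure 14: The time to detect and replace a crashed leader. The top graph varies the amount of randomness in election timeouts, and the bottom graph scales the minimum election timeout. Each line represents 1000 trials (except for 100 trials for “150–150ms”) and corresponds to a particular choice of election timeouts; for example, “150–155ms” means that election timeouts were chosen randomly and uniformly between 150ms and 155ms. The measurements were taken on a cluster of five servers with a broadcast time of roughly 15ms. Results for a cluster of nine servers are similar.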

为了测量领导人选举,我们反复让一个由5台服务器组成的集群的领导者崩溃,并测量检测到崩溃并选出新领导者所需的时间(见图14)。为了制造最坏情况,每次试验中各服务器的日志长度不同,因此有些候选者没有资格成为领导者。此外,为了促成分裂投票,我们的测试脚本在终止领导者进程之前,先触发领导者同步广播心跳RPC(这近似于领导者在崩溃前复制一条新日志条目的行为)。领导者在其心跳间隔内的均匀随机时刻崩溃,而在所有测试中,心跳间隔都是最小选举超时的一半。因此,最短可能的停机时间约为最小选举超时的一半。

To measure leader election, we repeatedly crashed the leader of a cluster of five servers and timed how long it took to detect the crash and elect a new leader (see Figure 14). To generate a worst-case scenario, the servers in each trial had different log lengths, so some candidates were not eligible to become leader. Furthermore, to encourage split votes, our test script triggered a synchronized broadcast of heartbeat RPCs from the leader before terminating its process (this approximates the behavior of the leader replicating a new log entry prior to crashing). The leader was crashed uniformly randomly within its heartbeat interval, which was half of the minimum election timeout for all tests. Thus, the smallest possible downtime was about half of the minimum election timeout.
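The last sentence above can be checked with a toy Monte Carlo model. In this model, followers reset their timers on the last heartbeat at time 0. The leader crashes uniformly at random within the heartbeat interval, which is half the minimum election timeout. Detection happens when the first follower's randomized timer fires. The model is a hypothetical sketch and reproduces only that arithmetic floor. It ignores message delays, log eligibility, and the time needed to actually win the election, so it does not reproduce Figure 14.

```python
import random
from typing import List


def detection_delays(min_timeout_ms: float, max_timeout_ms: float,
                     followers: int = 4, trials: int = 1000, seed: int = 0) -> List[float]:
    """Toy model of the time from a leader crash until the first follower's
    election timer fires (detection only; winning the election takes longer)."""
    rng = random.Random(seed)
    heartbeat_ms = min_timeout_ms / 2  # heartbeat interval = half the minimum election timeout
    delays = []
    for _ in range(trials):
        # Every follower reset its timer on the last heartbeat, at time 0.
        # The leader crashes uniformly at random before the next heartbeat is due.
        crash_ms = rng.uniform(0, heartbeat_ms)
        first_fire_ms = min(rng.uniform(min_timeout_ms, max_timeout_ms)
                            for _ in range(followers))
        delays.append(first_fire_ms - crash_ms)
    return delays


delays = detection_delays(150, 300)
# The floor is min_timeout - heartbeat = 75 ms, i.e. half the minimum election timeout.
print(f"shortest simulated detection delay: {min(delays):.1f} ms")
```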

图14中的上图表明,在选举超时中加入少量随机化就足以避免选举中的分裂投票。在没有随机性的情况下,由于大量分裂投票,我们测试中的领导人选举始终耗时超过10秒。仅增加5ms的随机性就有显著帮助,使停机时间的中位数降至287ms。使用更多的随机性可以改善最坏情况:随机性为50ms时,(1000次试验中的)最坏情况完成时间为513ms。

The top graph in Figure 14 shows that a small amount of randomization in the election timeout is enough to avoid split votes in elections. In the absence of randomness, leader election consistently took longer than 10 seconds in our tests due to many split votes. Adding just 5ms of randomness helps significantly, resulting in a median downtime of 287ms. Using more randomness improves worst-case behavior: with 50ms of randomness the worst-case completion time (over 1000 trials) was 513ms.
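Why zero randomness is so harmful can be seen from the timeout draw itself. In the "150–150ms" setting every server picks exactly 150 ms, so after a shared heartbeat all followers time out together and become candidates in the same term. The sketch below, with hypothetical helper names, draws per-server timeouts and measures how far the first server to time out leads the runner-up. It is not a model of election outcomes and does not reproduce the measured numbers.

```python
import random
from typing import List


def draw_timeouts(n: int, min_ms: float, max_ms: float, rng: random.Random) -> List[float]:
    """Each server independently picks an election timeout uniformly in [min_ms, max_ms]."""
    return [rng.uniform(min_ms, max_ms) for _ in range(n)]


def head_start_ms(timeouts: List[float]) -> float:
    """How long the first server to time out leads the runner-up."""
    first, second = sorted(timeouts)[:2]
    return second - first


rng = random.Random(42)
print(head_start_ms(draw_timeouts(4, 150, 150, rng)))  # no randomness: always 0.0
print(head_start_ms(draw_timeouts(4, 150, 200, rng)))  # 50 ms of randomness: some lead
```

A positive head start gives the first candidate a chance to reach other servers with its RequestVote before their own timers fire, which is what breaks the symmetry.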

图14的下图表明,减小选举超时可以缩短停机时间。选举超时为12–24ms时,平均只需35ms即可选出领导者(最长的一次试验为152ms)。然而,将超时降低到这一水平以下会违反Raft的时间要求:领导者难以在其他服务器发起新选举之前广播心跳。这会导致不必要的领导者变更,降低整体系统可用性。我们建议使用较保守的选举超时,如150–300ms;这样的超时不太可能引起不必要的领导者变更,同时仍能提供良好的可用性。

The bottom graph in Figure 14 shows that downtime can be reduced by reducing the election timeout. With an election timeout of 12–24ms, it takes only 35ms on average to elect a leader (the longest trial took 152ms). However, lowering the timeouts beyond this point violates Raft’s timing requirement: leaders have difficulty broadcasting heartbeats before other servers start new elections. This can cause unnecessary leader changes and lower overall system availability. We recommend using a conservative election timeout such as 150–300ms; such timeouts are unlikely to cause unnecessary leader changes and will still provide good availability.
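Raft's timing requirement from Section 5.6 of the paper, broadcastTime ≪ electionTimeout ≪ MTBF, can be turned into a simple configuration sanity check. The sketch below makes several assumptions. The `TimingConfig` fields and the `check_timing` helper are hypothetical. "Much less than" is read as a factor of 10, following the paper's guidance that the broadcast time be about an order of magnitude below the election timeout. The MTBF side of the requirement is not checked. With the roughly 15ms broadcast time from these experiments, 150–300ms passes and 12–24ms is flagged.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TimingConfig:
    """Hypothetical timing settings, all in milliseconds."""
    broadcast_ms: float     # time to send RPCs to every server and receive replies
    election_min_ms: float  # lower end of the randomized election timeout
    election_max_ms: float  # upper end of the randomized election timeout
    heartbeat_ms: float     # leader's heartbeat interval


def check_timing(cfg: TimingConfig, margin: float = 10.0) -> List[str]:
    """Return human-readable problems; an empty list means the config looks sane.

    `margin` interprets "much less than" as a factor of 10 (an assumption).
    """
    problems = []
    if cfg.election_min_ms > cfg.election_max_ms:
        problems.append("election timeout range is inverted")
    if cfg.broadcast_ms * margin > cfg.election_min_ms:
        problems.append("broadcast time is not well below the minimum election timeout")
    if cfg.heartbeat_ms >= cfg.election_min_ms:
        problems.append("heartbeats are not sent faster than followers time out")
    return problems


print(check_timing(TimingConfig(broadcast_ms=15, election_min_ms=150,
                                election_max_ms=300, heartbeat_ms=75)))
print(check_timing(TimingConfig(broadcast_ms=15, election_min_ms=12,
                                election_max_ms=24, heartbeat_ms=6)))
```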

9 相关工作【Related work】

与共识算法相关的出版物不胜枚举,其中很多属于以下几类:

There have been numerous publications related to consensus algorithms, many of which fall into one of the following categories:

- Lamport最初对Paxos的描述[13],以及为更清晰地解释它所做的尝试[14, 18, 19]。

- Lamport’s original description of Paxos [13], and attempts to explain it more clearly [14, 18, 19].

- 对Paxos的扩展阐述,它们补充了缺失的细节并修改了算法,以便为实现提供更好的基础[24, 35, 11]。

- Elaborations of Paxos, which fill in missing details and modify the algorithm to provide a better foundation for implementation [24, 35, 11].

- 实现了共识算法的系统,如Chubby [2, 4]、ZooKeeper [9, 10]和Spanner [5]。Chubby和Spanner的算法虽然都声称基于Paxos,但并未被详细公开。ZooKeeper的算法公开得较为详细,但与Paxos差别很大。

- Systems that implement consensus algorithms, such as Chubby [2, 4], ZooKeeper [9, 10], and Spanner [5]. The algorithms for Chubby and Spanner have not been published in detail, though both claim to be based on Paxos. ZooKeeper’s algorithm has been published in more detail, but it is quite different from Paxos.

- 可应用于Paxos的性能优化[16, 17, 3, 23, 1, 25]。

- Performance optimizations that can be applied to Paxos [16, 17, 3, 23, 1, 25].

- Oki和Liskov的视图戳复制(Viewstamped Replication, VR),这是一种与Paxos大约同时期发展起来的共识替代方案。其最初的描述[27]与一个分布式事务协议交织在一起,但核心共识协议已在近期的更新版本[20]中被分离出来。VR采用基于领导者的方法,与Raft有许多相似之处。

- Oki and Liskov’s Viewstamped Replication (VR), an alternative approach to consensus developed around the same time as Paxos. The original description [27] was intertwined with a protocol for distributed transactions, but the core consensus protocol has been separated in a recent update [20]. VR uses a leader-based approach with many similarities to Raft.

Raft与Paxos最大的区别在于Raft的强领导(strong leadership):Raft将领导人选举作为共识协议的核心组成部分,并将尽可能多的功能集中在领导者身上。这种方法使算法更简单、更容易理解。例如,在Paxos中,领导人选举与基本共识协议是正交的:它仅作为一种性能优化,并不是达成共识所必需的。然而,这带来了额外的机制:Paxos既包括一个用于基本共识的两阶段协议,又包括一个独立的领导人选举机制。相比之下,Raft将领导人选举直接纳入共识算法,并将其作为共识两个阶段中的第一阶段。因此,Raft所需的机制比Paxos更少。

The greatest difference between Raft and Paxos is Raft’s strong leadership: Raft uses leader election as an essential part of the consensus protocol, and it concentrates as much functionality as possible in the leader. This approach results in a simpler algorithm that is easier to understand. For example, in Paxos, leader election is orthogonal to the basic consensus protocol: it serves only as a performance optimization and is not required for achieving consensus. However, this results in additional mechanism: Paxos includes both a two-phase protocol for basic consensus and a separate mechanism for leader election. In contrast, Raft incorporates leader election directly into the consensus algorithm and uses it as the first of the two phases of consensus. This results in less mechanism than in Paxos.

与Raft一样,VR和ZooKeeper也是基于领导者的,因此也具备Raft相对于Paxos的许多优势。然而,Raft的机制比VR或ZooKeeper更少,因为它尽量减少了非领导者的功能。例如,Raft中的日志条目只沿一个方向流动:通过AppendEntries RPC从领导者向外流出。在VR中,日志条目双向流动(领导者在选举过程中可以接收日志条目),这带来了额外的机制和复杂性。在ZooKeeper已发表的描述中,日志条目同样既会发往领导者,也会从领导者发出,但其实现显然更像Raft[32]。

Like Raft, VR and ZooKeeper are leader-based and therefore share many of Raft’s advantages over Paxos. However, Raft has less mechanism than VR or ZooKeeper because it minimizes the functionality in non-leaders. For example, log entries in Raft flow in only one direction: outward from the leader in AppendEntries RPCs. In VR log entries flow in both directions (leaders can receive log entries during the election process); this results in additional mechanism and complexity. The published description of ZooKeeper also transfers log entries both to and from the leader, but the implementation is apparently more like Raft [32].
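Because entries flow only outward from the leader, a follower never sends entries back. Its only job is to check that the leader's new entries fit after what it already holds, and then adopt them. The sketch below follows the AppendEntries receiver rules summarized in the paper's Figure 2. The names `Entry` and `accept_entries` are hypothetical. Term comparison and commit-index handling are left out to keep it short.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Entry:
    term: int
    command: str


def accept_entries(log: List[Entry], prev_log_index: int, prev_log_term: int,
                   entries: List[Entry]) -> bool:
    """Follower side of AppendEntries (log indices are 1-based; 0 means "before the first entry").

    Returns False if the follower's log lacks the entry the leader's new entries
    must follow; otherwise installs the entries and returns True.
    """
    # Consistency check: we must already hold an entry at prev_log_index with prev_log_term.
    if prev_log_index > len(log):
        return False
    if prev_log_index > 0 and log[prev_log_index - 1].term != prev_log_term:
        return False
    for offset, entry in enumerate(entries):
        pos = prev_log_index + offset  # 0-based list position of this new entry
        if pos < len(log):
            if log[pos].term == entry.term:
                continue  # already present (e.g. a retransmitted RPC)
            del log[pos:]  # conflict: drop this entry and everything after it
        log.append(entry)
    return True


follower_log = [Entry(1, "a"), Entry(1, "b"), Entry(2, "x")]
accept_entries(follower_log, 2, 1, [Entry(3, "c"), Entry(3, "d")])
print([e.command for e in follower_log])  # the conflicting "x" is replaced
```

On a `False` reply, a leader following Figure 2 decrements its `nextIndex` for that follower and retries. The leader's log always wins, so no information has to flow from followers to the leader.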

Raft的消息类型比我们所知的任何其他基于共识的日志复制算法都少。例如,VR和ZooKeeper各自定义了10种不同的消息类型,而Raft只有4种(两种RPC请求及其响应)。Raft的消息比其他算法的消息稍微密集一些,但整体上更简单。此外,VR和ZooKeeper的描述都是在领导者变更期间传输整个日志;要使这些机制在实践中可行,还需要额外的消息类型来优化它们。

Raft has fewer message types than any other algorithm for consensus-based log replication that we are aware of. For example, VR and ZooKeeper each define 10 different message types, while Raft has only 4 message types (two RPC requests and their responses). Raft’s messages are a bit more dense than the other algorithms’, but they are simpler collectively. In addition, VR and ZooKeeper are described in terms of transmitting entire logs during leader changes; additional message types will be required to optimize these mechanisms so that they are practical.
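The four message types can be written down directly. This is a sketch that assumes the RPC fields listed in the paper's Figure 2, rendered as snake_case Python dataclasses. The class names, the `(term, command)` tuple encoding of entries, and the `MESSAGE_TYPES` tuple are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RequestVoteArgs:
    term: int            # candidate's term
    candidate_id: int
    last_log_index: int  # index of candidate's last log entry
    last_log_term: int   # term of candidate's last log entry


@dataclass(frozen=True)
class RequestVoteReply:
    term: int            # current term, so the candidate can update itself
    vote_granted: bool


@dataclass(frozen=True)
class AppendEntriesArgs:
    term: int            # leader's term
    leader_id: int
    prev_log_index: int  # index of the entry preceding the new ones
    prev_log_term: int
    entries: Tuple[Tuple[int, str], ...] = ()  # (term, command); empty for heartbeats
    leader_commit: int = 0


@dataclass(frozen=True)
class AppendEntriesReply:
    term: int            # current term, so the leader can update itself
    success: bool


MESSAGE_TYPES = (RequestVoteArgs, RequestVoteReply, AppendEntriesArgs, AppendEntriesReply)
heartbeat = AppendEntriesArgs(term=2, leader_id=1, prev_log_index=0, prev_log_term=0)
print(len(MESSAGE_TYPES), heartbeat.entries)
```

Heartbeats need no separate message type: they are AppendEntries RPCs that carry no entries, which is part of why the total stays at four.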

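在其他工作中已经提出或实现了几种不同的集群成员变更方法,包括Lamport的原始提议[13]、VR [20]和SMART [22]。我们为Raft选择了联合共识方法,因为它利用了共识协议的其余部分,因此成员变更只需要很少的额外机制。Lamport基于α的方法不适用于Raft,因为它假设在没有领导者的情况下也能达成共识。与VR和SMART相比,Raft的重新配置算法的优点是成员变更可以在不限制正常请求处理的情况下进行;相比之下,VR在配置变更期间会停止所有正常处理,而SMART对未完成请求的数量施加了类似α的限制。Raft的方法引入的机制也比VR或SMART更少。

Several different approaches for cluster membership changes have been proposed or implemented in other work, including Lamport’s original proposal [13], VR [20], and SMART [22]. We chose the joint consensus approach for Raft because it leverages the rest of the consensus protocol, so that very little additional mechanism is required for membership changes. Lamport’s α-based approach was not an option for Raft because it assumes consensus can be reached without a leader. In comparison to VR and SMART, Raft’s reconfiguration algorithm has the advantage that membership changes can occur without limiting the processing of normal requests; in contrast, VR stops all normal processing during configuration changes, and SMART imposes an α-like limit on the number of outstanding requests. Raft’s approach also adds less mechanism than either VR or SMART.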
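Joint consensus, described in Section 6 of the paper, changes only what counts as agreement. While the cluster is in the transitional configuration, elections and entry commitment require separate majorities from both the old and the new configurations. A minimal sketch of that quorum test follows. The function names are hypothetical, and server IDs are assumed to be strings.

```python
from typing import Iterable, Set


def is_majority(acks: Set[str], config: Set[str]) -> bool:
    """True if more than half of the servers in `config` are among `acks`."""
    return len(acks & config) > len(config) // 2


def joint_quorum(acks: Iterable[str], old: Set[str], new: Set[str]) -> bool:
    """Agreement under the joint configuration: a majority of old AND a majority of new."""
    acked = set(acks)
    return is_majority(acked, old) and is_majority(acked, new)


old_cfg = {"a", "b", "c"}
new_cfg = {"c", "d", "e"}
print(joint_quorum({"a", "b"}, old_cfg, new_cfg))       # old majority only
print(joint_quorum({"a", "c", "d"}, old_cfg, new_cfg))  # majorities of both
```

Because the rule is just a different quorum predicate, the rest of the protocol (voting, replication, commitment) runs unchanged. That is the sense in which joint consensus "leverages the rest of the consensus protocol".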