RAID: Redundant Array of Independent Disks

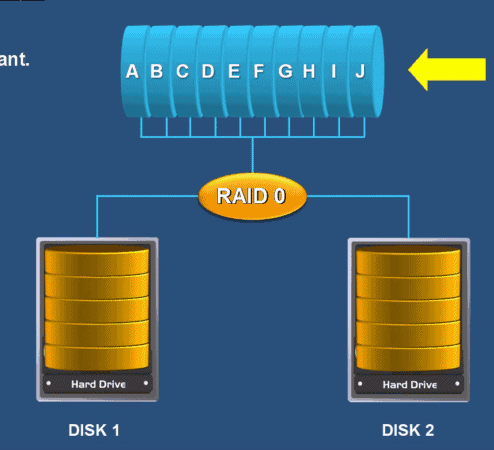

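RAID 0: striping

- Data is split into fixed-size chunks (stripes) that are read and written across several disks in parallel, so throughput is high, but there is no redundancy.

- It only improves performance and does nothing for reliability: if any one disk fails, all data in the array is affected.

- It is not suitable where data safety matters.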
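To make striping concrete, the sketch below maps a chunk index to a member disk with plain round-robin placement. The helper name `stripe_disk` is hypothetical, and real implementations layer chunk sizes and offsets on top of this idea.

```bash
# Hypothetical helper: which disk (0-based) holds a given chunk when
# chunks are placed round-robin across n_disks members.
stripe_disk() {
    chunk_index=$1
    n_disks=$2
    echo $(( chunk_index % n_disks ))
}

# On a 2-disk RAID 0, consecutive chunks alternate between disk 0 and disk 1.
for i in 0 1 2 3 4 5; do
    echo "chunk $i -> disk $(stripe_disk "$i" 2)"
done
```

Because every disk holds a share of every large file, losing one disk leaves holes throughout the data, which is why a single failure affects the whole array.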

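RAID 1: mirroring

- Redundancy comes from disk mirroring: paired, independent disks hold copies of each other's data.

- When the primary copy is busy, reads can be served from the mirror copy, so RAID 1 can improve read performance.

- It has the highest cost per unit of capacity among RAID levels. When one disk fails, the system switches to the mirror disk automatically for reads and writes, with no need to reconstruct the lost data.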

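RAID 3

- RAID 3 needs at least 3 disks. If one data disk fails, its contents can be recomputed from the remaining data disks and the dedicated parity disk.

- One disk's worth of capacity still goes to parity, but that is far less overhead than RAID 1.

- It uses N+1 protection: to protect N disks of user data, one extra disk is needed to store the parity information.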
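The recovery described above can be sketched with shell arithmetic, assuming the parity is a bytewise XOR of the data (the usual scheme for parity RAID). The byte values are arbitrary illustrations.

```bash
# Two data bytes and their XOR parity, as a parity disk would store it.
d1=$(( 0xA5 ))
d2=$(( 0x3C ))
parity=$(( d1 ^ d2 ))

# Pretend the disk holding d1 failed: rebuild it from the parity and d2.
recovered=$(( parity ^ d2 ))

printf 'parity=0x%02X recovered=0x%02X\n' "$parity" "$recovered"
```

The same identity extends to N data disks: XOR-ing the parity with every surviving data block yields the missing one, which is why a single extra disk is enough to survive one failure.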

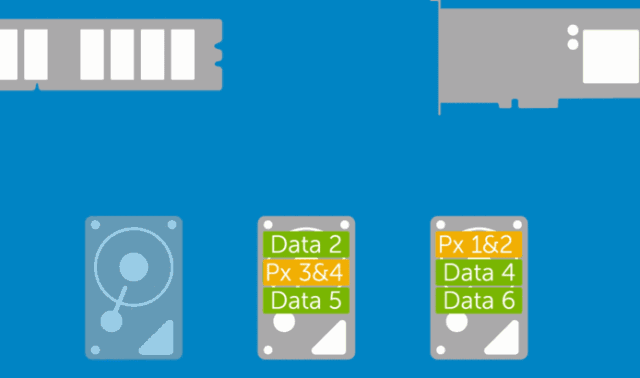

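RAID 5

- N disks (N >= 3) form the array. Each stripe holds N-1 data chunks plus one parity chunk, and the parity chunk rotates across the N disks so data and parity are spread evenly.

- All N disks serve reads and writes at once, so read performance is high; write performance is lower because every write must also update parity.

- Disk utilization is (N-1)/N.

- Reliability is high: one disk can fail without losing any data.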
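The rotation of the parity chunk can be sketched as follows. This assumes a "left" layout such as left-symmetric (the layout mdadm reports as its default in the RAID 5 deployment output further down), where parity starts on the last disk and moves one disk to the left with each stripe. The function name `raid5_parity_disk` is hypothetical.

```bash
# Hypothetical helper: 0-based index of the disk that holds parity for a
# given stripe, assuming parity starts on the last disk and shifts left.
raid5_parity_disk() {
    stripe=$1
    n_disks=$2
    echo $(( (n_disks - 1) - (stripe % n_disks) ))
}

# With 3 disks the parity position cycles 2, 1, 0, 2, ...
for s in 0 1 2 3; do
    echo "stripe $s -> parity on disk $(raid5_parity_disk "$s" 3)"
done
```

Spreading parity this way avoids the single parity disk of RAID 3 becoming a write bottleneck.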

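RAID 10 (mirror first, then stripe)

- N disks (N even, N >= 4) are mirrored in pairs, and the pairs are then combined into a RAID 0.

- Usable capacity is N/2 disks (50% utilization).

- Writes go to N/2 disks at a time and reads can use all N disks, so performance and reliability are both high.

- RAID 0+1 (stripe first, then mirror) has the same read/write performance as RAID 1+0 but is less fault-tolerant: one failed disk takes its whole stripe set offline, so a second failure in the other set loses the array.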
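The utilization figures for the levels above can be checked with a small calculator. This is a sketch with a hypothetical function name, `raid_usable_kib`, and it assumes all members are the same size. The per-disk size used in the example, 20954112 KiB, is the "Used Dev Size" that mdadm reports later in this post.

```bash
# Hypothetical helper: usable capacity in KiB for a RAID level,
# given the member count and the per-member size in KiB.
raid_usable_kib() {
    level=$1
    n=$2
    size=$3
    case "$level" in
        0)   echo $(( n * size )) ;;        # no redundancy
        1)   echo "$size" ;;                # every member holds a full copy
        3|5) echo $(( (n - 1) * size )) ;;  # one member's worth of parity
        10)  echo $(( n / 2 * size )) ;;    # half the members are mirrors
        *)   echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable_kib 10 4 20954112
raid_usable_kib 5 3 20954112
```

Both calls print 41908224, which matches the "Array Size" shown for the 4-disk RAID 10 and the 3-disk RAID 5 built below.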

Deploying RAID 10

    ## Add 4 new disks (sdd, sde, sdf and sdg in the listing below)
    [root@junwu_server ~]# fdisk -l |grep "/dev/sd[a-z]"
    WARNING: fdisk GPT support is currently new, and therefore in an experimental phase. Use at your own discretion.
    Disk /dev/sda: 21.5 GB, 21474836480 bytes, 41943040 sectors
    /dev/sda1   *        2048      411647      204800   83  Linux
    /dev/sda2          411648     2050047      819200   82  Linux swap / Solaris
    /dev/sda3         2050048    41943039    19946496   83  Linux
    Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors
    Disk /dev/sde: 21.5 GB, 21474836480 bytes, 41943040 sectors
    Disk /dev/sdc: 10.7 GB, 10737418240 bytes, 20971520 sectors
    /dev/sdc1            2048    10487807     5242880   83  Linux
    Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors
    Disk /dev/sdf: 21.5 GB, 21474836480 bytes, 41943040 sectors
    Disk /dev/sdg: 21.5 GB, 21474836480 bytes, 41943040 sectors

The mdadm command creates, manages, and monitors RAID arrays.

    yum install mdadm -y    ## install mdadm

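| Option | Meaning |
|---|---|
| -C | Create an array from unused devices |
| -a | yes or no: whether to create the array device file automatically (in manage mode, as in mdadm /dev/md10 -a /dev/sde, -a adds a device instead) |
| -A | Assemble (activate) an existing array |
| -n | Number of active devices in the array |
| -l | RAID level |
| -v | Show verbose progress |
| -S | Stop the array |
| -D | Show detailed array information |
| -f | Mark a device as faulty (actual removal is -r) |
| -x | Number of spare devices in the array |
| -s | Scan the config file or /proc/mdstat for array information |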

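Check the new disks. Every row reports devtmpfs, the filesystem that holds the device nodes themselves, which confirms that none of the four disks is mounted yet:

    [root@junwu_server ~]# df -Th /dev/sd[d-g]
    Filesystem     Type      Size  Used Avail Use% Mounted on
    devtmpfs       devtmpfs  476M     0  476M   0% /dev
    devtmpfs       devtmpfs  476M     0  476M   0% /dev
    devtmpfs       devtmpfs  476M     0  476M   0% /dev
    devtmpfs       devtmpfs  476M     0  476M   0% /dev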

    ## Create the RAID 10 array (the disks still carry metadata from an earlier array, hence the confirmation prompt)
    [root@junwu_server ~]# mdadm -Cv /dev/md10 -a yes -n 4 -l 10 /dev/sdd /dev/sde /dev/sdf /dev/sdg
    mdadm: layout defaults to n2
    mdadm: layout defaults to n2
    mdadm: chunk size defaults to 512K
    mdadm: /dev/sdd appears to be part of a raid array:
           level=raid10 devices=4 ctime=Fri Mar 17 09:11:15 2023
    mdadm: /dev/sde appears to be part of a raid array:
           level=raid10 devices=4 ctime=Fri Mar 17 09:11:15 2023
    mdadm: /dev/sdf appears to be part of a raid array:
           level=raid10 devices=4 ctime=Fri Mar 17 09:11:15 2023
    mdadm: /dev/sdg appears to be part of a raid array:
           level=raid10 devices=4 ctime=Fri Mar 17 09:11:15 2023
    mdadm: size set to 20954112K
    Continue creating array? y
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md10 started.

    ## Inspect the RAID 10 array
    [root@junwu_server ~]# mdadm -D /dev/md10
    /dev/md10:
               Version : 1.2
         Creation Time : Fri Mar 17 09:54:48 2023
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Mar 17 09:55:15 2023
                 State : clean, resyncing
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
         Resync Status : 14% complete
                  Name : junwu_server:10  (local to host junwu_server)
                  UUID : 49f70d00:768b1042:236a6216:b45e79fb
                Events : 2
        Number   Major   Minor   RaidDevice State
           0       8       48        0      active sync set-A   /dev/sdd
           1       8       64        1      active sync set-B   /dev/sde
           2       8       80        2      active sync set-A   /dev/sdf
           3       8       96        3      active sync set-B   /dev/sdg
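The "Resync Status" line is the one to watch while a new array synchronises. As a sketch, the hypothetical helper `md_sync_percent` below pulls the percentage out of `mdadm -D` output supplied on stdin; it assumes the line format shown in this post and also accepts the "Rebuild Status" variant.

```bash
# Hypothetical helper: print the percentage from a "Resync Status" or
# "Rebuild Status" line of `mdadm -D` output read from stdin.
# Prints nothing when no such line is present (sync already finished).
md_sync_percent() {
    sed -n 's/^[[:space:]]*Re[a-z]* Status : \([0-9]*\)% complete.*/\1/p'
}

# Sample lines in the format shown above.
sample='             State : clean, resyncing
     Resync Status : 14% complete'

printf '%s\n' "$sample" | md_sync_percent
```

On a live system the input would come from `mdadm -D /dev/md10 | md_sync_percent`, for example inside a loop that sleeps until the output is empty.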

    ## Create a filesystem on the array (this recorded session names the device /dev/md/md_raid10; for the array created above the device is /dev/md10)
    [root@junwu_server ~]# mkfs.xfs /dev/md/md_raid10
    meta-data=/dev/md/md_raid10      isize=512    agcount=16, agsize=654720 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=10475520, imaxpct=25
             =                       sunit=128    swidth=256 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=5120, version=2
             =                       sectsz=512   sunit=8 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0

    ## Create a directory and mount the array on it
    [root@junwu_server ~]# mkdir /mnt/new_sd_md/
    [root@junwu_server ~]# mount /dev/md10 /mnt/new_sd_md/
    [root@junwu_server ~]# mount -l |grep /dev/md10
    /dev/md10 on /mnt/new_sd_md type xfs (rw,relatime,attr2,inode64,sunit=1024,swidth=2048,noquota)

    ## Data can now be written to the array; check usage of the mounted filesystem
    [root@junwu_server ~]# df -hT |grep /dev/md10
    /dev/md10      xfs        40G   33M   40G   1% /mnt/new_sd_md

    ## Add the array to /etc/fstab so it is mounted automatically at every boot
    [root@junwu_server ~]# tail -1 /etc/fstab
    /dev/md10 /mnt/new_sd_md/ xfs defaults 0 0
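A device path such as /dev/md10 works here, but md device names may change between boots on some systems, so a filesystem UUID is often a sturdier key. The sketch below only formats such an entry; `fstab_entry` is a hypothetical helper and the UUID is a placeholder, not a value from this system.

```bash
# Hypothetical helper: format an fstab line keyed by filesystem UUID.
fstab_entry() {
    uuid=$1
    mountpoint=$2
    fstype=$3
    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mountpoint" "$fstype"
}

# Placeholder UUID for illustration only. On a real system it could be
# read with: blkid -s UUID -o value /dev/md10
fstab_entry "00000000-0000-0000-0000-000000000000" /mnt/new_sd_md xfs
```

The printed line would replace the /dev/md10 entry in /etc/fstab; running `mount -a` afterwards is a quick way to confirm the file still parses.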

In production: what to do when one disk fails

    ## Simulate the failure by marking one disk as faulty with -f
    [root@junwu_server ~]# mdadm /dev/md10 -f /dev/sde
    mdadm: set /dev/sde faulty in /dev/md10

    ## Check the state of the RAID 10 array
    [root@junwu_server ~]# mdadm -D /dev/md10
    /dev/md10:
               Version : 1.2
         Creation Time : Fri Mar 17 09:54:48 2023
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Mar 17 10:09:52 2023
                 State : clean, degraded
        Active Devices : 3
       Working Devices : 3    ## disks still working
        Failed Devices : 1    ## failed disks
         Spare Devices : 0
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : junwu_server:10  (local to host junwu_server)
                  UUID : 49f70d00:768b1042:236a6216:b45e79fb
                Events : 21
        Number   Major   Minor   RaidDevice State
           0       8       48        0      active sync set-A   /dev/sdd
           -       0        0        1      removed    ## /dev/sde has left its slot
           2       8       80        2      active sync set-A   /dev/sdf
           3       8       96        3      active sync set-B   /dev/sdg
           1       8       64        -      faulty   /dev/sde
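A degraded array keeps serving data, so a failure is easy to miss unless something checks for it. As a sketch, the hypothetical helper `md_failed_devices` reads `mdadm -D` output on stdin and prints the "Failed Devices" count, assuming the line format shown above.

```bash
# Hypothetical helper: print the "Failed Devices" count from `mdadm -D`
# output supplied on stdin.
md_failed_devices() {
    awk -F' : ' '/Failed Devices :/ { print $2 + 0 }'
}

# Sample lines in the format of the degraded array above (inline notes removed).
sample='             State : clean, degraded
    Failed Devices : 1'

failed=$(printf '%s\n' "$sample" | md_failed_devices)
if [ "$failed" -gt 0 ]; then
    echo "array has $failed failed device(s)"
fi
```

On a live system the input would be `mdadm -D /dev/md10`, and the check could run from cron so that a failed disk raises an alert rather than waiting to be noticed.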

With RAID 10, losing one disk does not interrupt use of the array. You only need to buy a new device and replace the failed disk.

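This walkthrough takes the cautious route of unmounting and rebooting before adding the replacement. mdadm can also remove a failed member with -r and add a disk while the array stays online.

    ## To guard against a second failure, add a replacement disk to the RAID 10 array
    ## Unmount first, and only when nobody is using the mounted filesystem
    [root@junwu_server ~]# umount /dev/md10
    ## Reboot the operating system
    reboot
    ## Add the new device
    [root@junwu_server ~]# mdadm /dev/md10 -a /dev/sde
    mdadm: added /dev/sde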

    ## The array now goes through a rebuild
    [root@junwu_server ~]# mdadm -D /dev/md10
    /dev/md10:
               Version : 1.2
         Creation Time : Fri Mar 17 09:54:48 2023
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Mar 17 10:17:51 2023
                 State : clean, degraded, recovering
        Active Devices : 3
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 1
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 21% complete    ## rebuild progress; the repair is done at 100%
                  Name : junwu_server:10  (local to host junwu_server)
                  UUID : 49f70d00:768b1042:236a6216:b45e79fb
                Events : 30
        Number   Major   Minor   RaidDevice State
           0       8       48        0      active sync set-A   /dev/sdd
           4       8       64        1      spare rebuilding   /dev/sde
           2       8       80        2      active sync set-A   /dev/sdf
           3       8       96        3      active sync set-B   /dev/sdg

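Restarting the software RAID

Set up the software RAID configuration file first. Without it, mdadm -A /dev/md10 has no record of which disks belong to the array once it has been stopped, so the array cannot be reactivated that way.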

    ## Create the config file by hand
    [root@junwu_server ~]# echo "DEVICE /dev/sd[d-g]" > /etc/mdadm.conf
    ## Scan the array information and append it to /etc/mdadm.conf
    [root@junwu_server ~]# mdadm -Ds >> /etc/mdadm.conf
    [root@junwu_server ~]# cat /etc/mdadm.conf
    DEVICE /dev/sd[d-g]
    ARRAY /dev/md/10 metadata=1.2 name=junwu_server:10 UUID=49f70d00:768b1042:236a6216:b45e79fb
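Before stopping the array it can be worth confirming that the config file really contains its ARRAY entry. The sketch below uses a hypothetical helper, `conf_array_uuids`, to list the UUIDs recorded in mdadm.conf-style text read from stdin; the sample text is the file content shown above.

```bash
# Hypothetical helper: print the UUID of every ARRAY line in
# mdadm.conf-style text supplied on stdin.
conf_array_uuids() {
    awk '$1 == "ARRAY" {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^UUID=/) print substr($i, 6)
    }'
}

conf_array_uuids <<'EOF'
DEVICE /dev/sd[d-g]
ARRAY /dev/md/10 metadata=1.2 name=junwu_server:10 UUID=49f70d00:768b1042:236a6216:b45e79fb
EOF
```

Comparing this output with the UUID line of `mdadm -D /dev/md10` shows whether the running array is the one the file describes.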

    ## Unmount the array
    [root@junwu_server ~]# umount /dev/md10
    ## Stop the RAID 10 array
    [root@junwu_server ~]# mdadm -S /dev/md10
    mdadm: stopped /dev/md10
    [root@junwu_server ~]# mdadm -D /dev/md10
    mdadm: cannot open /dev/md10: No such file or directory

    ## With the config file in place, the array can be stopped and started normally
    [root@junwu_server ~]# mdadm -A /dev/md10
    mdadm: /dev/md10 has been started with 4 drives.
    [root@junwu_server ~]# mdadm -D /dev/md10
    /dev/md10:
               Version : 1.2
         Creation Time : Fri Mar 17 09:54:48 2023
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Mar 17 10:30:27 2023
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : junwu_server:10  (local to host junwu_server)
                  UUID : 49f70d00:768b1042:236a6216:b45e79fb
                Events : 44
        Number   Major   Minor   RaidDevice State
           0       8       48        0      active sync set-A   /dev/sdd
           4       8       64        1      active sync set-B   /dev/sde
           2       8       80        2      active sync set-A   /dev/sdf
           3       8       96        3      active sync set-B   /dev/sdg

Deleting the software RAID

1. Unmount the filesystem and stop the array

    [root@junwu_server ~]# umount /dev/md10
    [root@junwu_server ~]# mdadm -S /dev/md10
    mdadm: stopped /dev/md10

2. Wipe the RAID metadata (the md superblock) from every member disk

    [root@junwu_server ~]# mdadm --misc --zero-superblock /dev/sdd
    [root@junwu_server ~]# mdadm --misc --zero-superblock /dev/sde
    [root@junwu_server ~]# mdadm --misc --zero-superblock /dev/sdf
    [root@junwu_server ~]# mdadm --misc --zero-superblock /dev/sdg

3. Delete the RAID configuration file

    [root@junwu_server ~]# rm -f /etc/mdadm.conf

4. Remove the array's entry from /etc/fstab. Note that sed -e only prints the file with line 12 dropped, as a preview; the file itself changes only when -i is added (sed -i '12d' /etc/fstab).

    [root@junwu_server ~]# sed -e '12d' /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Sun Sep 18 18:38:20 2022
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    UUID=9d5d7859-2a23-4b89-b177-74ddb3cd50b1 /       xfs     defaults        0 0
    UUID=4ff42a0c-a9c5-41c6-b5c2-ef28a4a5540b /boot   xfs     defaults        0 0
    UUID=6a392a74-cd56-4d74-b186-e838a29ff17a swap    swap    defaults        0 0
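Deleting by line number depends on the exact layout of the file. As a hedged alternative, the sketch below deletes by matching the device name and keeps a backup. It works on a temporary copy with made-up content modelled on the entries above, so it cannot touch a real /etc/fstab.

```bash
# Work on a scratch copy so the example cannot damage a real /etc/fstab.
tmp_fstab=$(mktemp)
cat > "$tmp_fstab" <<'EOF'
UUID=9d5d7859-2a23-4b89-b177-74ddb3cd50b1 / xfs defaults 0 0
/dev/md10 /mnt/new_sd_md/ xfs defaults 0 0
EOF

# Delete only lines whose first field is /dev/md10; -i.bak edits the file
# in place and leaves the previous version next to it with a .bak suffix.
sed -i.bak '\#^/dev/md10[[:space:]]#d' "$tmp_fstab"

result=$(cat "$tmp_fstab")
echo "$result"

# Clean up the scratch files.
rm -f "$tmp_fstab" "$tmp_fstab.bak"
```

Only the root filesystem line remains in the output. Against the real file the same expression would be pointed at /etc/fstab, ideally after previewing it without -i.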

Deploying RAID 5

## RAID 5 needs at least three disks

-C creates the array; the argument that follows is the array device name

-v shows the creation process

-n is the number of active disks in the array

-l is the RAID level

-x is the number of spare disks

    [root@junwu_server ~]# mdadm -Cv /dev/md5 -n 3 -l 5 -x 1 /dev/sd[d-g]
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: chunk size defaults to 512K
    mdadm: size set to 20954112K
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md5 started.
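When creating an array, the number of devices listed has to equal the -n value plus the -x value; here /dev/sd[d-g] expands to four disks for -n 3 -x 1. A small pre-flight check can catch a mismatch before mdadm is run. The function name `check_member_count` is hypothetical.

```bash
# Hypothetical pre-flight check: succeed only if the number of device
# arguments equals raid_devices + spares.
check_member_count() {
    raid_devices=$1
    spares=$2
    shift 2
    [ "$#" -eq $(( raid_devices + spares )) ]
}

if check_member_count 3 1 /dev/sdd /dev/sde /dev/sdf /dev/sdg; then
    echo "device count matches -n 3 -x 1"
else
    echo "device count does not match -n 3 -x 1" >&2
fi
```

The check only counts arguments; it does not verify that the devices exist or are unused.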

    ## Check the array state (a freshly created RAID 5 builds its last member as a rebuild, so it reports degraded, recovering until that finishes)
    [root@junwu_server ~]# mdadm -D /dev/md5
    /dev/md5:
               Version : 1.2
         Creation Time : Sat Mar 18 10:28:33 2023
            Raid Level : raid5
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 3
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Sat Mar 18 10:29:00 2023
                 State : clean, degraded, recovering
        Active Devices : 2
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 2
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 31% complete
                  Name : junwu_server:5  (local to host junwu_server)
                  UUID : a38c61ea:d68f734e:955dfa05:f63a39bc
                Events : 5
        Number   Major   Minor   RaidDevice State
           0       8       48        0      active sync   /dev/sdd
           1       8       64        1      active sync   /dev/sde
           4       8       80        2      spare rebuilding   /dev/sdf
           3       8       96        -      spare   /dev/sdg

    ## Create a filesystem on the array
    [root@junwu_server ~]# mkfs.xfs -f /dev/md5
    meta-data=/dev/md5               isize=512    agcount=16, agsize=654720 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=10475520, imaxpct=25
             =                       sunit=128    swidth=256 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=5120, version=2
             =                       sectsz=512   sunit=8 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0

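    ## Check the mount (the array is mounted on /mnt/new_sd_md; the mount command itself is not part of the recorded session)
    [root@junwu_server ~]# mount |tail -n 1
    /dev/md5 on /mnt/new_sd_md type xfs (rw,relatime,attr2,inode64,sunit=1024,swidth=2048,noquota)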

How to use the spare disk

1. Current disk usage of the RAID 5 array

    [root@junwu_server ~]# mdadm -D /dev/md5 |grep sd
           0       8       48        0      active sync   /dev/sdd
           1       8       64        1      active sync   /dev/sde
           4       8       80        2      active sync   /dev/sdf    ## active member
           3       8       96        -      spare   /dev/sdg    ## spare disk

2. Mark one of the disks in use as faulty

    [root@junwu_server ~]# mdadm /dev/md5 -f /dev/sdd
    mdadm: set /dev/sdd faulty in /dev/md5

3. Check the disk states again

    [root@junwu_server ~]# mdadm -D /dev/md5 |grep sd
           3       8       96        0      spare rebuilding   /dev/sdg    ## the spare sdg has taken over
           1       8       64        1      active sync   /dev/sde
           4       8       80        2      active sync   /dev/sdf
           0       8       48        -      faulty   /dev/sdd

The RAID 5 array stays usable throughout and returns to full redundancy once the rebuild onto /dev/sdg completes.
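Once the spare has taken over, the faulty disk still has to be identified and physically replaced. As a sketch, the hypothetical helper `md_faulty_devices` picks the faulty members out of the device table, assuming the row format that `mdadm -D` prints above.

```bash
# Hypothetical helper: print the device path of every row marked "faulty"
# in the device table of `mdadm -D` output read from stdin.
md_faulty_devices() {
    awk '/faulty/ && $NF ~ /^\/dev\// { print $NF }'
}

# Sample rows in the format shown above (inline notes removed).
sample='   3       8       96        0      spare rebuilding   /dev/sdg
   1       8       64        1      active sync   /dev/sde
   4       8       80        2      active sync   /dev/sdf
   0       8       48        -      faulty   /dev/sdd'

printf '%s\n' "$sample" | md_faulty_devices
```

On a live system the input would be `mdadm -D /dev/md5`; each path printed is a candidate for removal with -r and replacement.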

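Only after going through life's hardships

does one work harder at living.

Everything you dream of

has to be pursued with your own feet on the ground.

The result may not be all you hoped for,

but the effort along the way

is surely more beautiful than the result.

----by ljw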
