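[VMware vSAN] Troubleshooting the "Unable to run the network performance test. Please try again later." error when testing network performance between hosts.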

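vSAN cluster monitoring includes a proactive tests feature, which can run a VM creation test, a network performance test, and other tests against the vSAN hosts.

Official description:

- The VM creation test usually takes 20 to 40 seconds, and up to 180 seconds if it times out. One VM creation task and one deletion task are generated for each host, and these tasks appear in the task console.

- The network performance test assesses whether there are connectivity issues and whether the network bandwidth between hosts meets vSAN requirements.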

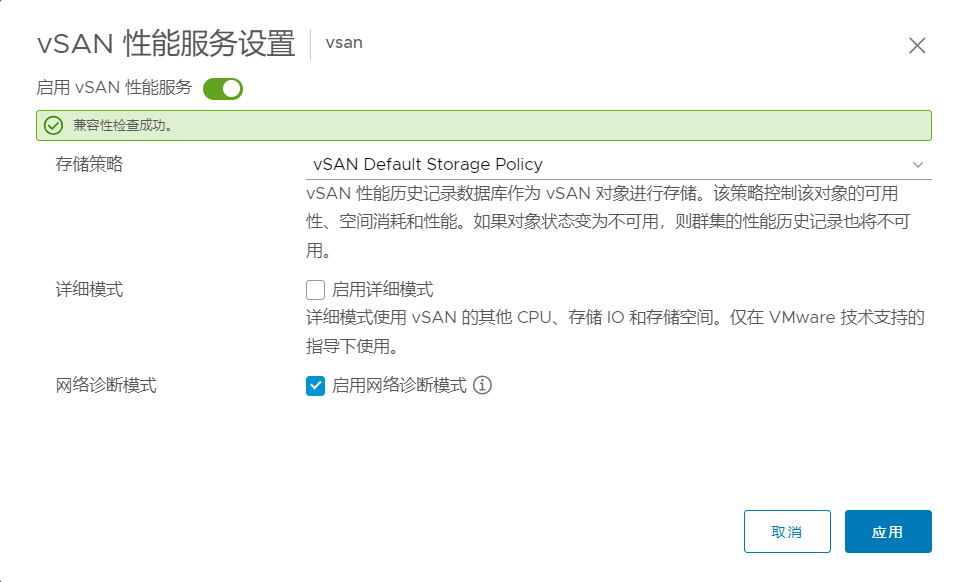

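To use this feature, first enable the network diagnostic mode of the vSAN performance service. Path: vSAN service configuration - Performance Service - Network diagnostic mode.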

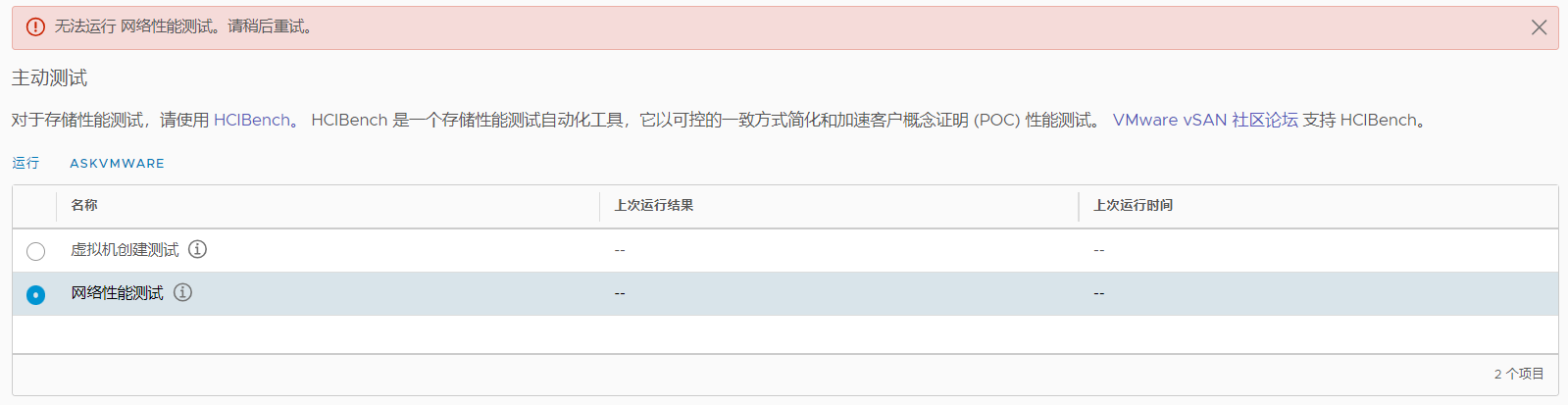

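Now, after running the network performance test, the platform reports an error: "Unable to run the network performance test. Please try again later."

Connect to the vCenter shell over SSH, change to the /storage/log/vmware/vsan-health directory, and inspect the log with tail -n 300 vmware-vsan-health-service.log. It shows the following: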

2023-12-05T09:00:15.528Z INFO vsan-mgmt[61232] [VsanVcClusterHealthSystemImpl::QueryClusterNetworkPerfTest opID=a89d241a] Run network(unicast) performance test

2023-12-05T09:00:15.551Z INFO vsan-mgmt[Thread-276] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf server on esxi-a1.lab.com

2023-12-05T09:00:15.554Z INFO vsan-mgmt[Thread-278] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf server on esxi-a2.lab.com

2023-12-05T09:00:15.558Z INFO vsan-mgmt[Thread-280] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf server on esxi-a4.lab.com

2023-12-05T09:00:15.560Z INFO vsan-mgmt[Thread-282] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf server on esxi-a3.lab.com

2023-12-05T09:00:22.947Z INFO vsan-mgmt[Thread-8] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-method:ServiceInstance:CurrentTime: 0.00s

2023-12-05T09:00:25.037Z INFO vsan-mgmt[Thread-286] [VsanSupportBundleHelper::parseSystemProxies opID=noOpId] VCSA proxy is disabled.

2023-12-05T09:00:27.042Z ERROR vsan-mgmt[Thread-286] [VsanHttpRequestWrapper::urlopen opID=noOpId] Exception while sending request : <urlopen error [Errno 99] Cannot assign requested address>

2023-12-05T09:00:45.584Z INFO vsan-mgmt[Thread-277] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf client on esxi-a3.lab.com

2023-12-05T09:00:45.586Z INFO vsan-mgmt[Thread-279] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf client on esxi-a1.lab.com

2023-12-05T09:00:45.589Z INFO vsan-mgmt[Thread-281] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf client on esxi-a2.lab.com

2023-12-05T09:00:45.592Z INFO vsan-mgmt[Thread-283] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Launching iperf client on esxi-a4.lab.com

2023-12-05T09:01:00.191Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::log opID=noOpId] Profiler:

2023-12-05T09:01:00.191Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::logProfile opID=noOpId] GetState: 0.02s

2023-12-05T09:01:00.199Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::log opID=noOpId] Profiler:

2023-12-05T09:01:00.200Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::logProfile opID=noOpId] GetState: 0.01s

2023-12-05T09:01:00.214Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-accessor:ServiceInstance:content: 0.01s

2023-12-05T09:01:00.224Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::log opID=noOpId] Profiler:

2023-12-05T09:01:00.224Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::logProfile opID=noOpId] GetState: 0.00s

2023-12-05T09:01:05.141Z INFO vsan-mgmt[EventMonitor] [VsanEventUtil::_collectClustersEventsFromCache opID=noOpId] skip cluster vsan without updated timestamp : 2023-12-05 08:54:48.732163+00:00

2023-12-05T09:01:05.273Z INFO vsan-mgmt[Thread-289] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-method:ha-vsan-health-system:WaitForVsanHealthGenerationIdChange: 5.04s:esxi-a4.lab.com

2023-12-05T09:01:05.276Z INFO vsan-mgmt[Thread-288] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-method:ha-vsan-health-system:WaitForVsanHealthGenerationIdChange: 5.04s:esxi-a1.lab.com

2023-12-05T09:01:05.281Z INFO vsan-mgmt[Thread-290] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-method:ha-vsan-health-system:WaitForVsanHealthGenerationIdChange: 5.04s:esxi-a3.lab.com

2023-12-05T09:01:05.284Z INFO vsan-mgmt[Thread-291] [VsanPyVmomiProfiler::logProfile opID=noOpId] invoke-method:ha-vsan-health-system:WaitForVsanHealthGenerationIdChange: 5.04s:esxi-a2.lab.com

2023-12-05T09:01:05.285Z INFO vsan-mgmt[ClusterGenMonitor] [VsanVcClusterUtil::WaitVsanClustersGenerationIdChange opID=noOpId] Get hosts generation ID change result : {'vim.HostSystem:host-33': False, 'vim.HostSystem:host-42': False, 'vim.HostSystem:host-39': False, 'vim.HostSystem:host-36': False}

2023-12-05T09:01:05.286Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::log opID=noOpId] Profiler:

2023-12-05T09:01:05.286Z INFO vsan-mgmt[ClusterGenMonitor] [VsanPyVmomiProfiler::logProfile opID=noOpId] ClusterHostsConnStateManager.GetHostsConnState: 0.02s

2023-12-05T09:01:11.749Z INFO vsan-mgmt[Thread-281] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfClient: 26.16s:esxi-a2.lab.com

2023-12-05T09:01:11.750Z INFO vsan-mgmt[Thread-281] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a2.lab.com

2023-12-05T09:01:11.798Z INFO vsan-mgmt[Thread-277] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfClient: 26.21s:esxi-a3.lab.com

2023-12-05T09:01:11.799Z INFO vsan-mgmt[Thread-277] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a3.lab.com

2023-12-05T09:01:11.817Z INFO vsan-mgmt[Thread-279] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfClient: 26.23s:esxi-a1.lab.com

2023-12-05T09:01:11.817Z INFO vsan-mgmt[Thread-279] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a1.lab.com

2023-12-05T09:01:11.946Z INFO vsan-mgmt[Thread-283] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfClient: 26.35s:esxi-a4.lab.com

2023-12-05T09:01:11.947Z INFO vsan-mgmt[Thread-283] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a4.lab.com

2023-12-05T09:01:15.968Z INFO vsan-mgmt[Thread-280] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfServer: 60.41s:esxi-a4.lab.com

2023-12-05T09:01:15.970Z INFO vsan-mgmt[Thread-280] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a4.lab.com

2023-12-05T09:01:16.160Z INFO vsan-mgmt[Thread-276] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfServer: 60.61s:esxi-a1.lab.com

2023-12-05T09:01:16.162Z INFO vsan-mgmt[Thread-276] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a1.lab.com

2023-12-05T09:01:16.199Z INFO vsan-mgmt[Thread-282] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfServer: 60.64s:esxi-a3.lab.com

2023-12-05T09:01:16.200Z INFO vsan-mgmt[Thread-282] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a3.lab.com

2023-12-05T09:01:16.458Z INFO vsan-mgmt[Thread-278] [VsanPyVmomiProfiler::logProfile opID=a89d241a] invoke-method:ha-vsan-health-system:QueryRunIperfServer: 60.90s:esxi-a2.lab.com

2023-12-05T09:01:16.459Z INFO vsan-mgmt[Thread-278] [VsanClusterHealthSystemImpl::PerHostIperfThreadMain opID=a89d241a] Iperf finished on esxi-a2.lab.com

2023-12-05T09:01:16.461Z INFO vsan-mgmt[61232] [VsanClusterHealthSystemImpl::QueryClusterNetworkPerfTest opID=a89d241a] Iperf result: (vim.cluster.VsanClusterNetworkLoadTestResult) {
  clusterResult = (vim.cluster.VsanClusterProactiveTestResult) { overallStatus = 'yellow', overallStatusDescription = '', timestamp = 2023-12-05T09:01:16.460179Z, healthTest = <unset> },
  hostResults = (vim.host.VsanNetworkLoadTestResult) [
    (vim.host.VsanNetworkLoadTestResult) { hostname = 'esxi-a3.lab.com', status = 'yellow', client = true, bandwidthBps = 74740481, totalBytes = 1121195648, lostDatagrams = <unset>, lossPct = <unset>, sentDatagrams = <unset>, jitterMs = <unset> },
    (vim.host.VsanNetworkLoadTestResult) { hostname = 'esxi-a4.lab.com', status = 'yellow', client = true, bandwidthBps = 31971322, totalBytes = 479586696, lostDatagrams = <unset>, lossPct = <unset>, sentDatagrams = <unset>, jitterMs = <unset> },
    (vim.host.VsanNetworkLoadTestResult) { hostname = 'esxi-a1.lab.com', status = 'yellow', client = true, bandwidthBps = 31321318, totalBytes = 469799320, lostDatagrams = <unset>, lossPct = <unset>, sentDatagrams = <unset>, jitterMs = <unset> },
    (vim.host.VsanNetworkLoadTestResult) { hostname = 'esxi-a2.lab.com', status = 'yellow', client = true, bandwidthBps = 75505704, totalBytes = 1132633816, lostDatagrams = <unset>, lossPct = <unset>, sentDatagrams = <unset>, jitterMs = <unset> }
  ]
}

2023-12-05T09:01:16.461Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::log opID=a89d241a] Profiler:

2023-12-05T09:01:16.462Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfo.PopulateCapabilities: 0.06s

2023-12-05T09:01:16.462Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfos: 0.12s

2023-12-05T09:01:16.463Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfos.CollectMultiple_host: 0.00s

2023-12-05T09:01:16.463Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfos.CollectMultiple_hostConfig: 0.04s

2023-12-05T09:01:16.465Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfos.CollectMultiple_vsanSysConfig: 0.01s

2023-12-05T09:01:16.465Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] GetClusterHostInfos.getWitnessHost: 0.00s

2023-12-05T09:01:16.466Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] clusterAdapter.GetState: 0.00s

2023-12-05T09:01:16.466Z INFO vsan-mgmt[61232] [VsanPyVmomiProfiler::logProfile opID=a89d241a] impl.QueryClusterNetworkPerfTest: 60.93s
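The health service log mixes entries from many threads, so a quick way to follow a single test run is to filter on its opID (a89d241a in the excerpt above). A minimal sketch, assuming the log file on disk has one entry per line; the function name entries_for_opid is my own and not part of any VMware tooling:

```shell
# Print only the log entries that carry the given opID.
# $1 = path to the log file, $2 = opID value (for example a89d241a).
entries_for_opid() {
  # -F treats the pattern as a fixed string; the trailing "]" avoids
  # matching a longer opID that merely starts with the same characters.
  grep -F "opID=$2]" "$1"
}
```

On the vCenter appliance this could be called as entries_for_opid /storage/log/vmware/vsan-health/vmware-vsan-health-service.log a89d241a.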

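The log shows that the network performance test actually ran successfully in the background after it was started. So why does the platform report a failure?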
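To confirm that the run really produced results, the per-host bandwidth can be pulled out of the "Iperf result" entry. A rough sketch under two assumptions of mine: that bandwidthBps is in bytes per second (the log does not state the unit), and that a helper named iperf_bandwidth is acceptable for illustration:

```shell
# Print "<hostname> <bandwidth> MB/s" for every host result in the log.
# $1 = path to the log file.
# Assumption: bandwidthBps is bytes per second; the log does not say so.
iperf_bandwidth() {
  # Extract each hostname and bandwidthBps field on its own line,
  # strip the quotes, then pair every bandwidth with the last hostname seen.
  grep -oE "hostname = '[^']*'|bandwidthBps = [0-9]+" "$1" |
    tr -d "'" |
    awk -F' = ' '
      $1 == "hostname"     { host = $2 }
      $1 == "bandwidthBps" { printf "%s %.1f MB/s\n", host, $2 / 1000000 }
    '
}
```

Called as iperf_bandwidth /storage/log/vmware/vsan-health/vmware-vsan-health-service.log, it prints one line per host result found in the file.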

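My environment is VMware vSAN 6.7 U3 with VMware vCenter 6.7 U3. vCenter was previously upgraded because of a high-severity VMware vCenter Server vulnerability and is now at U3t.

I suspect this may be a bug...

To run the network performance test normally and have the results displayed, go to the top-right corner of vCenter, choose My Preferences, change the vSphere Client language to English or another language, and then run the test again.

After switching the language to English, the test runs and the results display normally.

Notes on the API objects for the vSAN network performance test:

The tool vSAN uses for the proactive network performance test is iPerf. The iPerf binary is located on the host at /usr/lib/vmware/vsan/bin/iperf3, and we can also use it directly to test network performance between two hosts. Run /usr/lib/vmware/vsan/bin/iperf3 --help to view the tool's help.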

[root@esxi-a1:~] /usr/lib/vmware/vsan/bin/iperf3 --help

Usage: iperf [-s|-c host] [options]

iperf [-h|--help] [-v|--version]

Server or Client:

-p, --port # server port to listen on/connect to

-f, --format [kmgKMG] format to report: Kbits, Mbits, KBytes, MBytes

-i, --interval # seconds between periodic bandwidth reports

-F, --file name xmit/recv the specified file

-A, --affinity n/n,m set CPU affinity

-B, --bind <host> bind to a specific interface

-V, --verbose more detailed output

-J, --json output in JSON format

--logfile f send output to a log file

--forceflush force flushing output at every interval

-d, --debug emit debugging output

-v, --version show version information and quit

-h, --help show this message and quit

Server specific:

-s, --server run in server mode

-D, --daemon run the server as a daemon

-I, --pidfile file write PID file

-1, --one-off handle one client connection then exit

Client specific:

-c, --client <host> run in client mode, connecting to <host>

-u, --udp use UDP rather than TCP

-b, --bandwidth #[KMG][/#] target bandwidth in bits/sec (0 for unlimited)

(default 1 Mbit/sec for UDP, unlimited for TCP)

(optional slash and packet count for burst mode)

-t, --time # time in seconds to transmit for (default 10 secs)

-n, --bytes #[KMG] number of bytes to transmit (instead of -t)

-k, --blockcount #[KMG] number of blocks (packets) to transmit (instead of -t or -n)

-l, --len #[KMG] length of buffer to read or write

(default 128 KB for TCP, dynamic or 1 for UDP)

--cport <port> bind to a specific client port (TCP and UDP, default: ephemeral port)

-P, --parallel # number of parallel client streams to run

-R, --reverse run in reverse mode (server sends, client receives)

-w, --window #[KMG] set window size / socket buffer size

-C, --congestion <algo> set TCP congestion control algorithm (Linux and FreeBSD only)

-M, --set-mss # set TCP/SCTP maximum segment size (MTU - 40 bytes)

-N, --no-delay set TCP/SCTP no delay, disabling Nagle's Algorithm

-4, --version4 only use IPv4

-6, --version6 only use IPv6

-S, --tos N set the IP 'type of service'

-L, --flowlabel N set the IPv6 flow label (only supported on Linux)

-Z, --zerocopy use a 'zero copy' method of sending data

-O, --omit N omit the first n seconds

-T, --title str prefix every output line with this string

--get-server-output get results from server

--udp-counters-64bit use 64-bit counters in UDP test packets

[KMG] indicates options that support a K/M/G suffix for kilo-, mega-, or giga-

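Report bugs to: https://github.com/esnet/iperf

iPerf runs in either client mode or server mode. For example, with two hosts named a1 and a2, to run an iPerf network performance test I run the client on a1 and the server on a2.

Before using iPerf (which uses port 5201 by default), disable the host firewall with the command below, and re-enable it with --enabled true once the test has finished.

esxcli network firewall set --enabled false

On host a2, use the -s option to run in server mode and the -B option to specify the local address used for the test (see the note below for why the iperf3.copy binary is used here).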

/usr/lib/vmware/vsan/bin/iperf3.copy -s -B esxi-a2.lab.com

On host a1, use the -c option to run in client mode and the -n option to specify the amount of data to transfer, testing network performance against host esxi-a2.lab.com.

/usr/lib/vmware/vsan/bin/iperf3 -n 1G -c esxi-a2.lab.com
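Since it is easy to forget to turn the firewall back on, the client-side steps above can be wrapped in a small function. This is only a sketch: run_client_test and the IPERF variable are names I made up for illustration, and it uses only the esxcli and iperf3 invocations already shown in this post.

```shell
# Path to the iPerf binary shipped with vSAN (from this post).
IPERF=/usr/lib/vmware/vsan/bin/iperf3

# Disable the host firewall, run the iPerf client, then re-enable the firewall.
# $1 = server host, $2 = amount of data to send (defaults to 1G).
run_client_test() {
  server="$1"
  size="${2:-1G}"
  esxcli network firewall set --enabled false || return 1
  "$IPERF" -n "$size" -c "$server"
  rc=$?
  # Re-enable the firewall whether or not the test succeeded.
  esxcli network firewall set --enabled true
  return "$rc"
}
```

For the two hosts in this example, that would be run_client_test esxi-a2.lab.com on a1. The function returns iPerf's exit status, so a failed test is still visible after the firewall has been restored.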

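Note:

If running the iPerf server on an ESXi 7 or earlier host fails with "iperf3: error - unable to start listener for connections: Operation not permitted", copy the binary as shown below, then run server mode from /usr/lib/vmware/vsan/bin/iperf3.copy.

cp /usr/lib/vmware/vsan/bin/iperf3 /usr/lib/vmware/vsan/bin/iperf3.copy

If running iPerf on an ESXi 8 host fails with "iperf3: running in appDom(30): ipAddr = ::, port = 5201: Access denied by vmkernel access control policy", run the first command below on both the server and client ESXi hosts to disable the policy, and run the second command to re-enable it once the iperf tests are complete.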

esxcli system secpolicy domain set -n appDom -l disabled

esxcli system secpolicy domain set -n appDom -l enforcing