Web Crawlers: HttpClient

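HttpClient

A web crawler is a program that fetches resources from the web. To do this it has to access web pages over the HTTP protocol, and in this crawler we use HttpClient, Apache's HTTP client library for Java, to fetch the data contained in those pages.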

HttpClient GET Request

- The code implementation follows.


Code:

```java
package cn.itcast.crawler.test;

import org.apache.http.HttpEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

public class HttpGetTest {

    public static void main(String[] args) {
        // 1. Create the HttpClient object (try-with-resources closes it automatically)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the HttpGet object and set the URL
            HttpGet httpGet = new HttpGet("https://www.baidu.com");

            // 3. Send the request with httpClient and obtain the response.
            //    try-with-resources closes the response even if reading it fails,
            //    and avoids calling close() on a null response when execute() throws.
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Parse the response and extract the data,
                //    reading the body only if the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    // "UTF-8" is the fallback charset, used only when the
                    // response's Content-Type header does not declare one
                    String content = EntityUtils.toString(httpEntity, "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```
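The program prints `content.length()`, which counts the UTF-16 `char`s of the decoded string, not the byte size of the response body; for a Chinese page such as Baidu's home page the two numbers differ. The JDK-only sketch below illustrates the difference on a made-up HTML fragment (the string and the class name `CharVsByteLength` are hypothetical, not real Baidu output or part of the original code):

```java
import java.nio.charset.StandardCharsets;

public class CharVsByteLength {

    // String.length() counts UTF-16 code units, the same measure HttpGetTest prints
    public static int charLength(String text) {
        return text.length();
    }

    // Size of the same text once encoded as UTF-8 bytes
    public static int utf8ByteLength(String text) {
        return text.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        // Hypothetical fragment of a Chinese page, not real Baidu output
        String html = "<title>百度一下</title>";
        System.out.println("chars: " + charLength(html));           // chars: 19
        System.out.println("UTF-8 bytes: " + utf8ByteLength(html)); // UTF-8 bytes: 27
    }
}
```

Each of the four Chinese characters is one `char` but three UTF-8 bytes, which accounts for the gap of 8.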

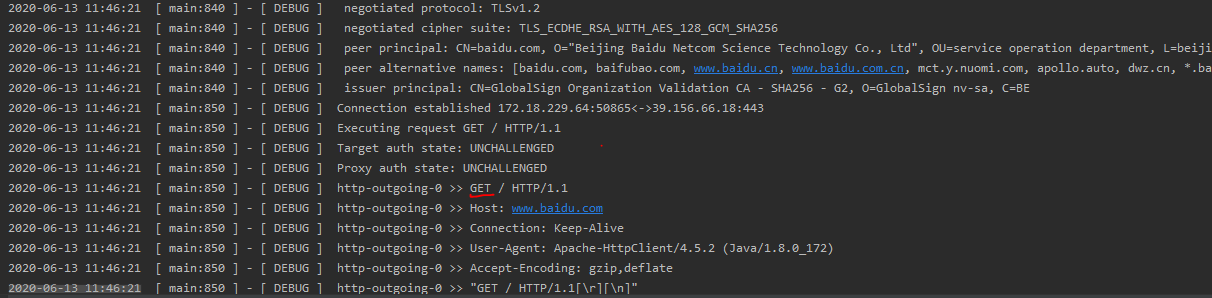

Execution result:

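If you want to try the same four steps (create a client, create a GET request, send it, check for status 200 and read the body) without Apache HttpClient on the classpath or without internet access, the sketch below swaps in a different library: the JDK 11+ built-in `java.net.http.HttpClient`, fetching from a throwaway local server built with `com.sun.net.httpserver.HttpServer`. It is not the Apache API used above, and the class and method names (`JdkHttpGetSketch`, `startLocalServer`, `fetch`, `fetchFromLocalServer`) are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class JdkHttpGetSketch {

    // Starts a local server on a free port that answers every request with a fixed UTF-8 body
    public static HttpServer startLocalServer(String body) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        server.createContext("/", exchange -> {
            exchange.getResponseHeaders().set("Content-Type", "text/html; charset=UTF-8");
            exchange.sendResponseHeaders(200, bytes.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(bytes);
            }
        });
        server.start();
        return server;
    }

    // Same steps as HttpGetTest: build a client, build a GET, send it, check for 200, read the body
    public static String fetch(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1) // plain HTTP/1.1 for the simple local server
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        if (response.statusCode() != 200) {
            throw new IOException("Unexpected status code: " + response.statusCode());
        }
        return response.body();
    }

    // Starts the local server, fetches its root page once, stops the server, and returns the body
    public static String fetchFromLocalServer(String body) {
        HttpServer server = null;
        try {
            server = startLocalServer(body);
            String url = "http://127.0.0.1:" + server.getAddress().getPort() + "/";
            return fetch(url);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        String content = fetchFromLocalServer("<html><title>demo</title></html>");
        // Like HttpGetTest, print the length of the decoded page
        System.out.println(content.length());
    }
}
```

Binding to port 0 lets the OS pick a free port, so the sketch does not collide with anything already listening; to crawl a real site, pass its URL to `fetch` instead.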