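1. Data Collection (Project 1)

Overview

- When a user clicks on the page, the click data is stored in a.log. (This project skips that step; the data is already in a.log.)

- Java code writes the data from a.log into project.log.

- Flume collects the logs: it monitors project.log for changes and writes the newly appended user data to HDFS.

Sample data already in a.log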


120.191.181.178 - - 2018-02-18 20:24:39 "POST https://www.taobao.com/item/b HTTP/1.1" 203 69172 https://www.taobao.com/register UCBrowser Webkit X3android 8.0 海南 20.02 110.20 36

149.74.183.133 - - 2018-09-24 19:38:17 "GET https://www.taobao.com/register HTTP/1.0" 300 72815 https://www.taobao.com/item/a Windows Internet Explorer Tridentwindows 广西 22.48 108.19 39

58.9.92.122 - - 2018-08-30 11:28:15 "GET https://www.taobao.com/list/ HTTP/1.0" 203 17119 https://www.taobao.com/category/a OPera blinkwindows 重庆 29.59 106.54 35

77.56.72.210 - - 2018-05-13 18:11:22 "POST https://www.taobao.com/category/b HTTP/1.0" 201 17843 https://www.taobao.com/list/ Firefox ios 吉林 43.54 125.19 57

217.147.196.74 - - 2018-08-22 18:06:01 "GET https://www.taobao.com/category/c HTTP/1.0" 501 95033 https://www.taobao.com/category/b Mozilla Firefox Geckowindows 山东 36.40 117.00 28

37.146.124.65 - - 2018-05-08 02:12:24 "POST https://www.taobao.com/category/d HTTP/1.1" 203 47329 https://www.taobao.com/item/a Safari webkitwindows 江西 28.40 115.55 25

167.108.24.171 - - 2018-08-20 12:19:01 "POST https://www.taobao.com/recommand HTTP/1.1" 302 80056 https://www.taobao.com/category/d Google Chrome Chromium/BlinkMac OS X 广东 23.08 113.14 19

94.69.229.202 - - 2018-07-27 13:46:37 "GET https://www.taobao.com/recommand HTTP/1.1" 501 63116 https://www.taobao.com/recommand Windows Internet Explorer Tridentwindows 陕西 34.17 108.57 58
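To make the record layout easier to see, here is a minimal sketch that splits one line of a.log into its leading fields with a regular expression. The class name `AccessLogLine` and the field names are hypothetical, and the field meanings (status code, byte count, referrer) are my reading of the sample data, not something the original states. The trailing part (browser, OS, province, coordinates, final number) is kept as one unparsed string because its tokens contain spaces.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the original project.
final class AccessLogLine {
    // ip - - "yyyy-MM-dd HH:mm:ss" "METHOD URL PROTOCOL" status bytes referrer rest
    private static final Pattern LEADING = Pattern.compile(
            "^(\\S+) - - (\\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}:\\d{2}) "
            + "\"(\\S+) (\\S+) (\\S+)\" (\\d{3}) (\\d+) (\\S+) (.*)$");

    final String ip;
    final String timestamp;
    final String method;
    final String url;
    final String protocol;
    final int status;      // assumed to be the HTTP status code
    final long bytes;      // assumed to be the response size
    final String referrer; // assumed to be the referring page
    final String rest;     // browser, OS, province, coordinates, last number (unparsed)

    private AccessLogLine(Matcher m) {
        ip = m.group(1);
        timestamp = m.group(2);
        method = m.group(3);
        url = m.group(4);
        protocol = m.group(5);
        status = Integer.parseInt(m.group(6));
        bytes = Long.parseLong(m.group(7));
        referrer = m.group(8);
        rest = m.group(9);
    }

    static AccessLogLine parse(String line) {
        Matcher m = LEADING.matcher(line);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized log line: " + line);
        }
        return new AccessLogLine(m);
    }

    public static void main(String[] args) {
        // Third sample line from a.log; the province (Chongqing) is written
        // with Unicode escapes so the source file stays ASCII-only.
        String sample = "58.9.92.122 - - 2018-08-30 11:28:15 "
                + "\"GET https://www.taobao.com/list/ HTTP/1.0\" 203 17119 "
                + "https://www.taobao.com/category/a OPera blinkwindows "
                + "\u91cd\u5e86 29.59 106.54 35";
        AccessLogLine parsed = parse(sample);
        System.out.println(parsed.ip + " " + parsed.method + " " + parsed.url + " " + parsed.status);
    }
}
```

Flume itself does not parse these lines; it ships each line as one event, so a parser like this would only matter in a later processing step.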

Writing the log collection config

- Monitor the file for changes and collect the newly appended data.

[root@node1 dataCollect]# cat dataCollect.conf

dataCollect.sources = s1

dataCollect.channels = c1

dataCollect.sinks = k1

dataCollect.sources.s1.type = exec

dataCollect.sources.s1.command = tail -F /opt/project/dataCollect/project.log

dataCollect.channels.c1.type = memory

dataCollect.channels.c1.capacity = 20000

dataCollect.channels.c1.transactionCapacity = 10000

dataCollect.channels.c1.byteCapacity = 1048576000

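# Sink configuration

dataCollect.sinks.k1.type = hdfs

# HDFS directory where the collected data is stored (one directory per day)

dataCollect.sinks.k1.hdfs.path = hdfs://node1:9000/project/%Y%m%d/

# Prefix for uploaded file names

dataCollect.sinks.k1.hdfs.filePrefix = project-

# Suffix for uploaded file names

dataCollect.sinks.k1.hdfs.fileSuffix = .log

# Whether to round the timestamp down, so that directories roll over by time

dataCollect.sinks.k1.hdfs.round = true

# How many time units pass before a new directory is created

dataCollect.sinks.k1.hdfs.roundValue = 24

# Time unit used for rounding

dataCollect.sinks.k1.hdfs.roundUnit = hour

# Use the local timestamp instead of a timestamp header on the event

dataCollect.sinks.k1.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before flushing to HDFS

dataCollect.sinks.k1.hdfs.batchSize = 5000

# File type; DataStream writes uncompressed output (compression is also supported)

dataCollect.sinks.k1.hdfs.fileType = DataStream

# Roll to a new file every 6 hours (21600 seconds)

dataCollect.sinks.k1.hdfs.rollInterval = 21600

# Roll to a new file at about 128 MB (value in bytes, just under 134217728)

dataCollect.sinks.k1.hdfs.rollSize = 134217700

# 0 means file rolling does not depend on the number of events

dataCollect.sinks.k1.hdfs.rollCount = 0

# Minimum number of block replicas

dataCollect.sinks.k1.hdfs.minBlockReplicas = 1

# Bind the source and sink to the channel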

dataCollect.sources.s1.channels = c1

dataCollect.sinks.k1.channel = c1
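The numbers in this config are easier to trust after a quick arithmetic check. The sketch below is not part of the original project; the class name `FlumeConfigCheck` and its methods are hypothetical. It copies the values from dataCollect.conf, checks that the sink batch fits inside the channel transaction (batchSize ≤ transactionCapacity ≤ capacity), and shows which daily directory `%Y%m%d` would resolve to for a given local time. The date formatting is ordinary `java.time` code standing in for Flume's own escape handling.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical sanity check for the values in dataCollect.conf.
final class FlumeConfigCheck {
    static final int CAPACITY = 20000;              // channels.c1.capacity
    static final int TRANSACTION_CAPACITY = 10000;  // channels.c1.transactionCapacity
    static final int BATCH_SIZE = 5000;             // sinks.k1.hdfs.batchSize
    static final long ROLL_INTERVAL_SECONDS = 21600; // sinks.k1.hdfs.rollInterval
    static final long ROLL_SIZE_BYTES = 134217700L;  // sinks.k1.hdfs.rollSize

    // The sink takes batchSize events per transaction, so the batch must fit
    // in one channel transaction, which in turn must fit in the channel.
    static boolean batchFitsChannel() {
        return BATCH_SIZE <= TRANSACTION_CAPACITY && TRANSACTION_CAPACITY <= CAPACITY;
    }

    // Mimics how %Y%m%d in hdfs.path expands to a per-day directory.
    static String dailyDirectory(LocalDateTime time) {
        return "hdfs://node1:9000/project/"
                + time.format(DateTimeFormatter.ofPattern("yyyyMMdd")) + "/";
    }

    public static void main(String[] args) {
        System.out.println("batch fits channel: " + batchFitsChannel());
        System.out.println("roll interval in hours: " + ROLL_INTERVAL_SECONDS / 3600);
        System.out.println("bytes below 128 MiB: " + (128L * 1024 * 1024 - ROLL_SIZE_BYTES));
        System.out.println(dailyDirectory(LocalDateTime.of(2018, 2, 18, 20, 24, 39)));
    }
}
```

Because the path only contains day-level escapes, files that roll several times within one day all land in the same dated directory.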

Using Java code to write user behavior data into project.log

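package com.sxuek;

import java.io.*;

import java.nio.charset.StandardCharsets;

import java.util.Random;

public class SimPro {

    public static void main(String[] args) throws IOException, InterruptedException {

        // At random intervals, read one or more lines from a.log and append them to project.log,

        // simulating bursts of user clicks.

        // NOTE: dataCollect.conf tails /opt/project/dataCollect/project.log; Flume only sees

        // this data if that path and the output path below refer to the same file.

        Random random = new Random();

        // try-with-resources closes both streams even if an exception is thrown

        try (BufferedReader br = new BufferedReader(new InputStreamReader(

                     new FileInputStream("/root/project/dataCollect/a.log"), StandardCharsets.UTF_8));

             BufferedWriter bw = new BufferedWriter(new OutputStreamWriter(

                     new FileOutputStream("/root/project/dataCollect/project.log", true), StandardCharsets.UTF_8))) {

            String line = br.readLine();

            while (line != null) {

                // Two random numbers: the pause in milliseconds and the number of clicks in this burst

                int time = random.nextInt(5000);

                int count = 1 + random.nextInt(10000);

                Thread.sleep(time);

                System.out.println("Waited " + time + " ms; " + count + " users clicked the site and generated behavior log data");

                // Stop early at end of input so a null line is never written and no line is skipped

                for (int i = 0; i < count && line != null; i++) {

                    bw.write(line);

                    bw.newLine();

                    line = br.readLine();

                }

                // Flush once per burst so tail -F sees the new lines

                bw.flush();

            }

        }

    }

}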
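The batching logic can be checked separately from file I/O and sleeping. The sketch below is a hypothetical in-memory version (the class `ClickBatches` and its `split` method are not in the original project): it cuts a list of lines into random-sized bursts and makes it easy to confirm that every input line ends up in exactly one burst, in order, even when the last burst is cut short by the end of the input.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

// Hypothetical in-memory model of the "random burst of clicks" loop.
final class ClickBatches {
    // Splits lines into consecutive batches of 1..maxBatch lines each.
    static List<List<String>> split(List<String> lines, Random random, int maxBatch) {
        List<List<String>> batches = new ArrayList<>();
        int next = 0;
        while (next < lines.size()) {
            int count = 1 + random.nextInt(maxBatch);
            // Clamp at the end of the input so the final batch may be shorter.
            int end = Math.min(next + count, lines.size());
            batches.add(new ArrayList<>(lines.subList(next, end)));
            next = end;
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("l1", "l2", "l3", "l4", "l5", "l6", "l7");
        for (List<String> batch : split(lines, new Random(), 3)) {
            System.out.println(batch.size() + " clicks: " + batch);
        }
    }
}
```

Batch sizes vary from run to run, but concatenating the batches always reproduces the input list.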

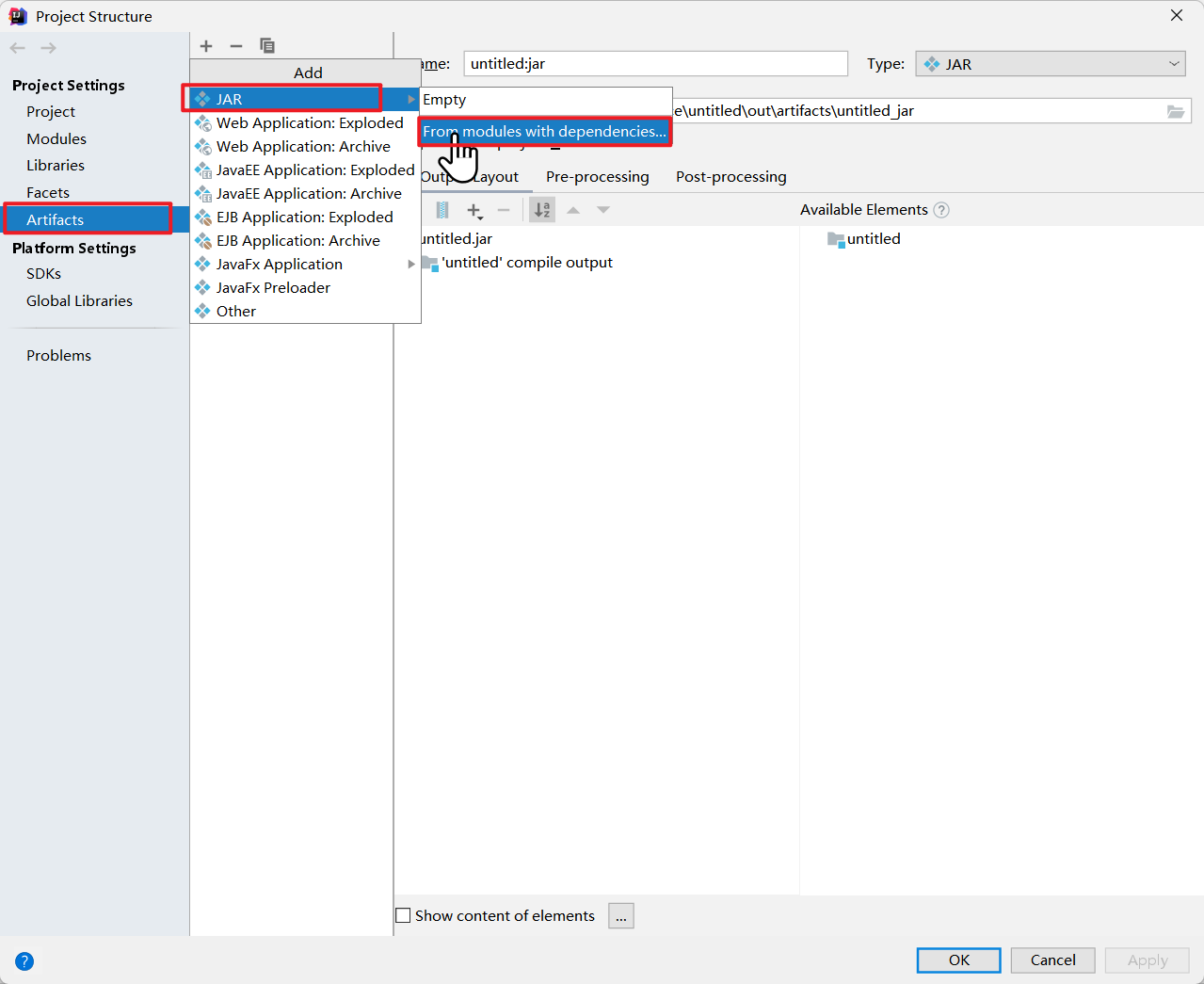

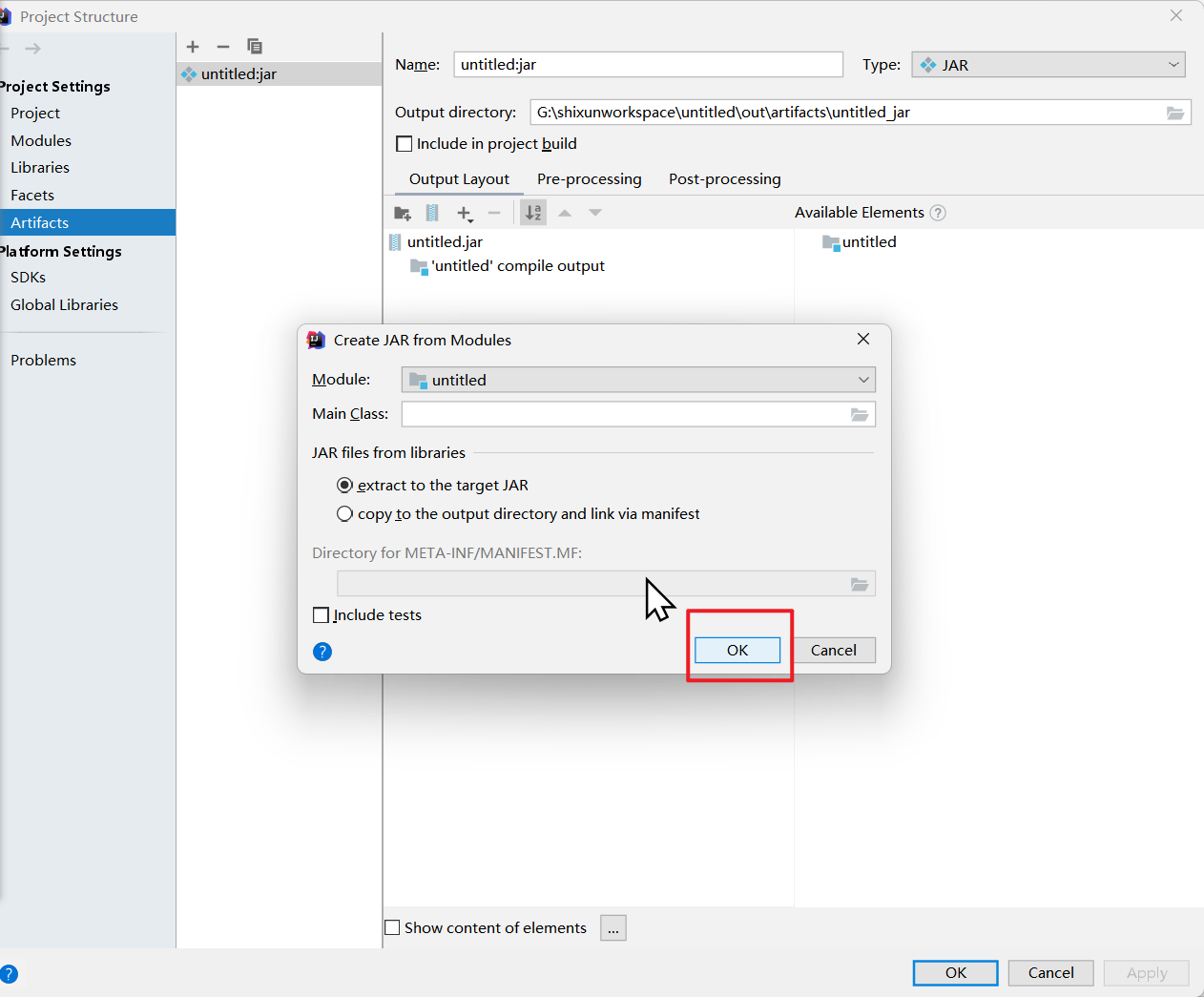

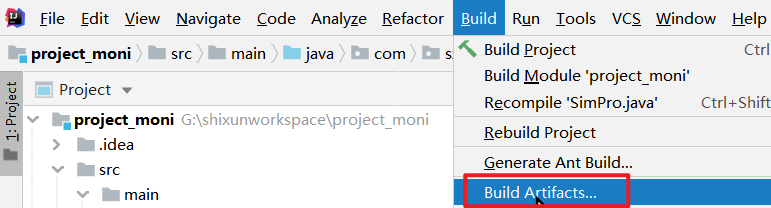

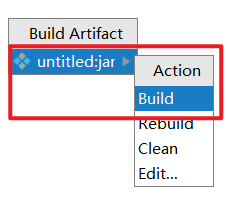

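Package the program as a jar, upload it, and run it

Start collecting. Start the Flume agent first, then run the jar in a second terminal, so that the agent is already tailing project.log when new lines arrive.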

flume-ng agent -n dataCollect -f dataCollect.conf -Dflume.root.logger=INFO,console

java -jar untitled.jar SimPro.java

This article is from cnblogs (博客园), author: jsqup. Please cite the original link when reposting: https://www.cnblogs.com/jsqup/p/16564429.html
