UDTF (one in, many out) example 2

pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>hive_function</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>2.3.8</version>
            <!-- Exclude one jar that hive-exec pulls in transitively -->
            <exclusions>
                <exclusion>
                    <groupId>org.pentaho</groupId>
                    <artifactId>pentaho-aggdesigner-algorithm</artifactId>
                </exclusion>
            </exclusions>
        </dependency>

        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.62</version>
        </dependency>
    </dependencies>
</project>
```

Java implementation

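```java
package udtf;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.StructField;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorUtils;

import java.util.ArrayList;
import java.util.List;

// Parses one JSON string per input row and emits one row (name, age, sex, phone)
public class JSONDataParseUDTF extends GenericUDTF {

    // Inspector for the single input argument, used to read it as a Java String
    private PrimitiveObjectInspector inputOI;

    @Override
    public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {
        // Validate the input: exactly one primitive (string) argument
        List<? extends StructField> inputFields = argOIs.getAllStructFieldRefs();
        if (inputFields.size() != 1) {
            throw new UDFArgumentException("parse_json_data takes exactly one argument");
        }
        ObjectInspector argOI = inputFields.get(0).getFieldObjectInspector();
        if (argOI.getCategory() != ObjectInspector.Category.PRIMITIVE) {
            throw new UDFArgumentException("parse_json_data expects a string argument");
        }
        inputOI = (PrimitiveObjectInspector) argOI;

        // Output column names
        List<String> columnNames = new ArrayList<String>();
        columnNames.add("name");
        columnNames.add("age");
        columnNames.add("sex");
        columnNames.add("phone");

        // Output column types, in the same order as the names
        List<ObjectInspector> objectInspectors = new ArrayList<ObjectInspector>();
        objectInspectors.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        objectInspectors.add(PrimitiveObjectInspectorFactory.javaIntObjectInspector);
        objectInspectors.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        objectInspectors.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

        return ObjectInspectorFactory.getStandardStructObjectInspector(columnNames, objectInspectors);
    }

    @Override
    public void process(Object[] args) throws HiveException {
        // Skip NULL input instead of failing with a NullPointerException
        if (args[0] == null) {
            return;
        }
        String jsonStr = PrimitiveObjectInspectorUtils.getString(args[0], inputOI);
        JSONObject jsonObject = JSON.parseObject(jsonStr);
        if (jsonObject == null) {
            return; // fastjson returns null when there is nothing to parse, e.g. an empty line
        }

        // One output row; value types must match those declared in initialize()
        List<Object> line = new ArrayList<Object>();
        line.add(jsonObject.getString("name"));
        line.add(jsonObject.getInteger("age"));
        line.add(jsonObject.getString("sex"));
        line.add(jsonObject.getString("phone"));
        forward(line);
    }

    @Override
    public void close() throws HiveException {
        // No resources to release
    }
}
```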
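To see the `process()` to `forward()` contract without a Hive cluster, the JDK-only sketch below replays what `JSONDataParseUDTF` does for one input line: one JSON string goes in, one four-column row is handed to a forward callback. It is an illustration under stated assumptions: the class `JsonUdtfSketch`, its tiny flat-JSON parser, and the `Consumer` standing in for `forward()` are all hypothetical, and the parser only handles flat objects with string or integer values and no commas inside strings (true for `aa.txt`), so it is not a replacement for fastjson.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

public class JsonUdtfSketch {

    // Hypothetical minimal parser for flat objects such as {"name":"zs","age":20}.
    // Handles only string and integer values; no nesting, escapes, or commas inside strings.
    static Map<String, Object> parseFlatJson(String json) {
        String body = json.trim();
        if (!body.startsWith("{") || !body.endsWith("}")) {
            throw new IllegalArgumentException("not a JSON object: " + json);
        }
        body = body.substring(1, body.length() - 1).trim();
        Map<String, Object> fields = new LinkedHashMap<>();
        if (body.isEmpty()) {
            return fields;
        }
        for (String pair : body.split(",")) {
            int colon = pair.indexOf(':');
            String key = unquote(pair.substring(0, colon).trim());
            String raw = pair.substring(colon + 1).trim();
            // Quoted values stay strings; bare values are read as integers (like getInteger("age"))
            Object value = raw.startsWith("\"") ? unquote(raw) : Integer.valueOf(raw);
            fields.put(key, value);
        }
        return fields;
    }

    // Strips the surrounding double quotes from a JSON string literal
    static String unquote(String s) {
        return s.substring(1, s.length() - 1);
    }

    // Stand-in for process(): one JSON line in, one row [name, age, sex, phone] passed to forward
    static void process(String json, Consumer<List<Object>> forward) {
        Map<String, Object> obj = parseFlatJson(json);
        List<Object> row = new ArrayList<>();
        row.add(obj.get("name"));
        row.add(obj.get("age"));
        row.add(obj.get("sex"));
        row.add(obj.get("phone"));
        forward.accept(row);
    }

    public static void main(String[] args) {
        List<List<Object>> rows = new ArrayList<>();
        process("{\"name\":\"zs\",\"age\":20,\"sex\":\"man\",\"phone\":\"13888888888\"}", rows::add);
        System.out.println(rows); // [[zs, 20, man, 13888888888]]
    }
}
```

Calling `process` once per line of `aa.txt` would yield one row per line, in the column order declared in `initialize()`; in the real UDTF, Hive supplies the input value and consumes whatever `forward()` receives.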

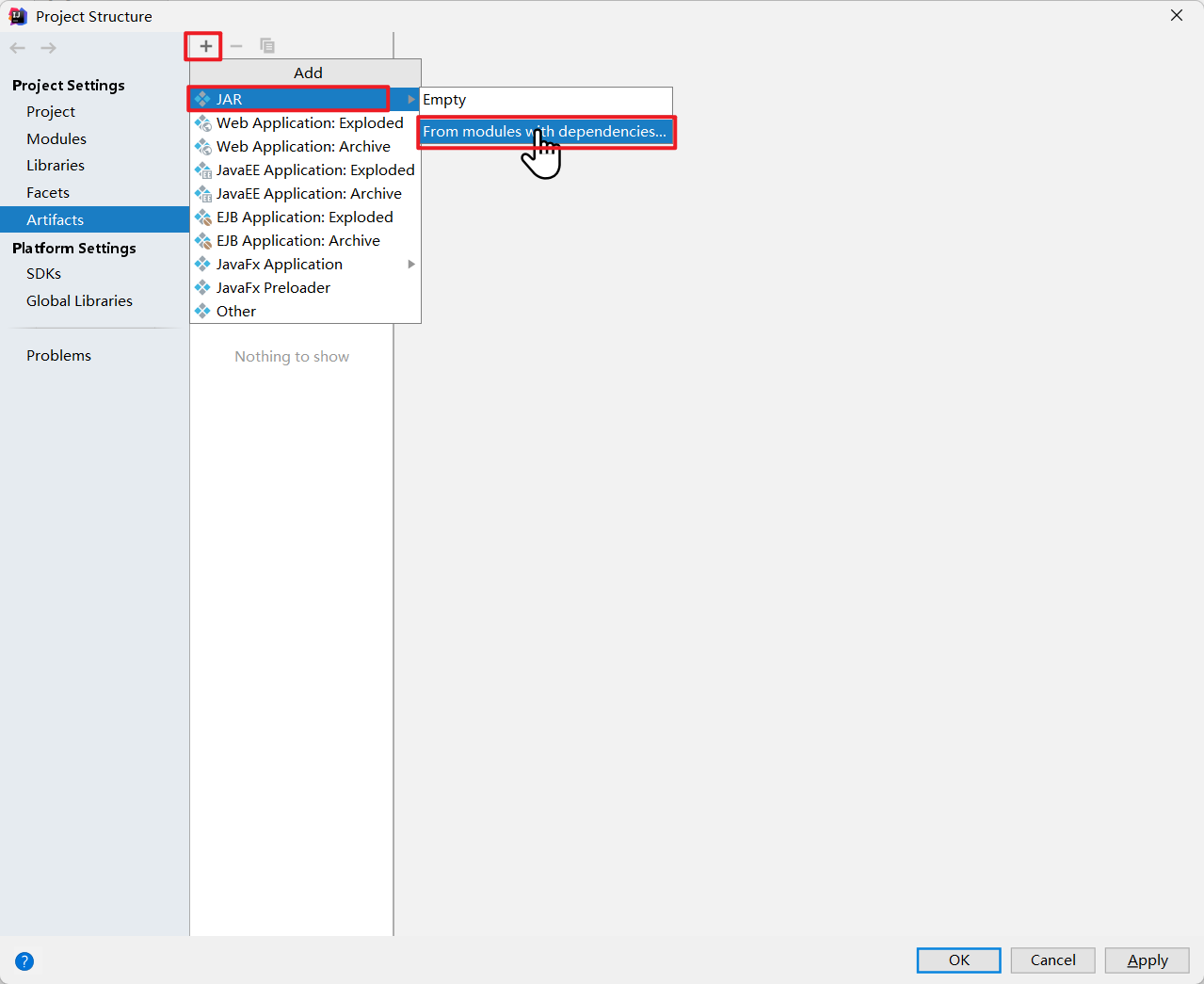

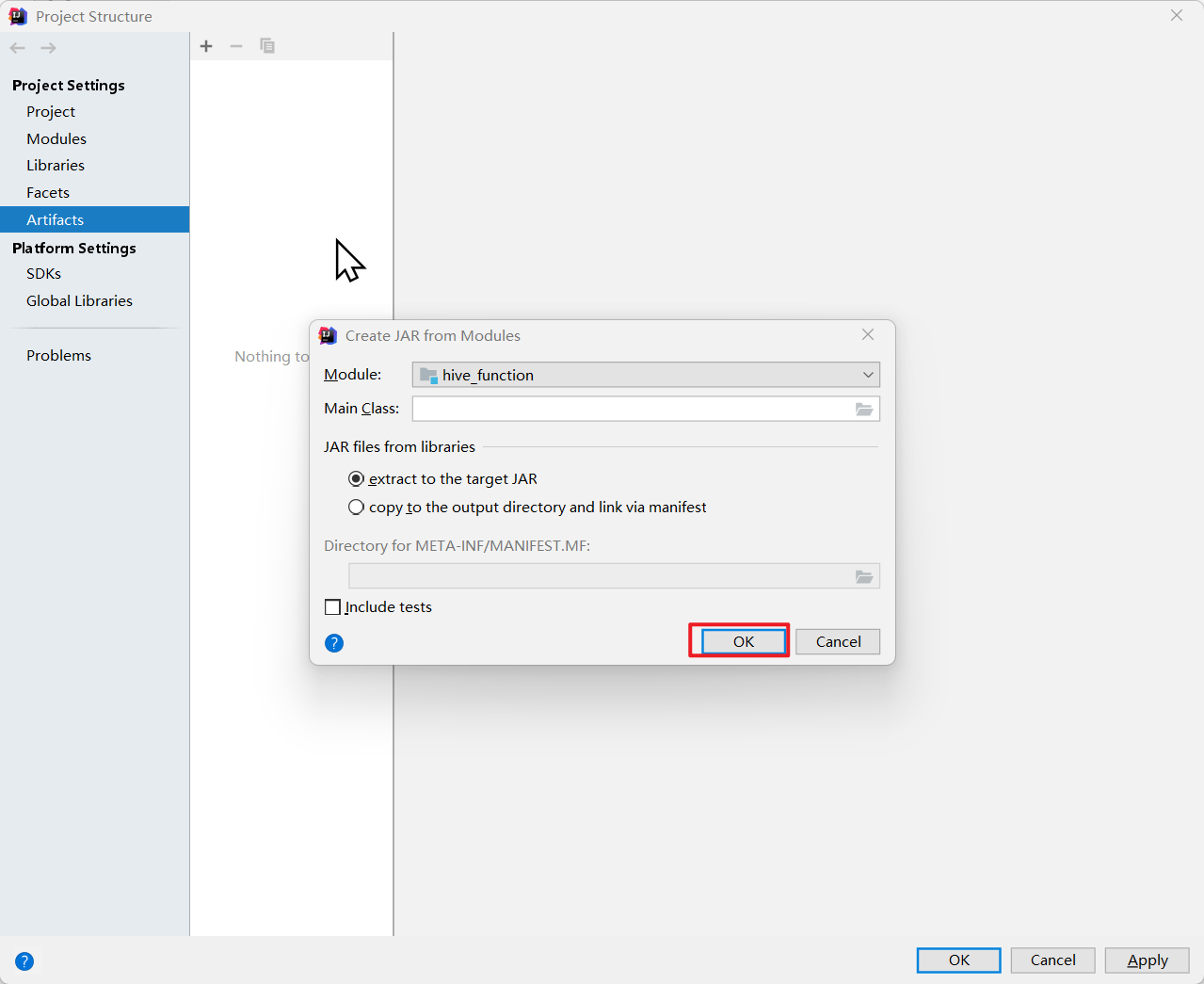

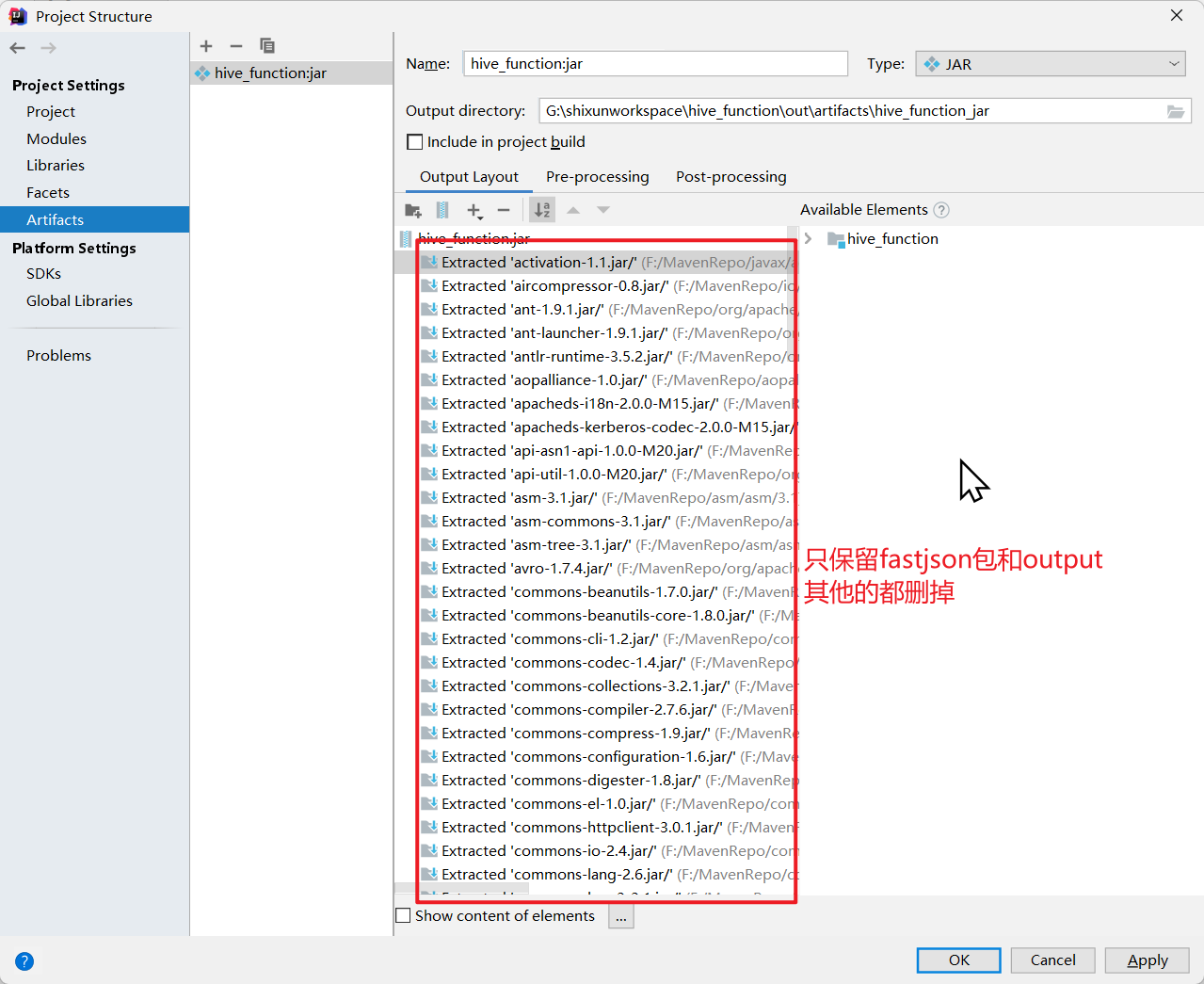

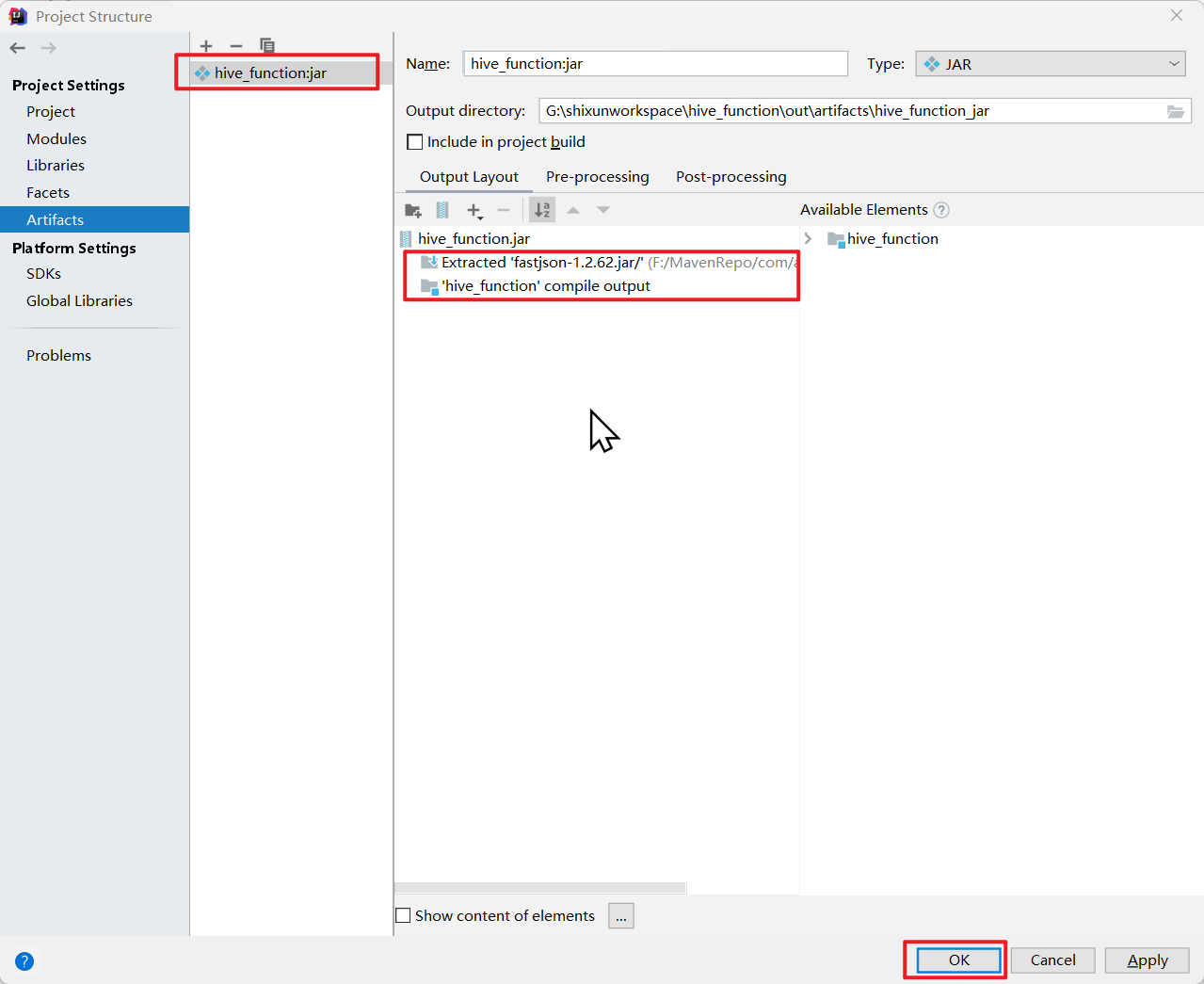

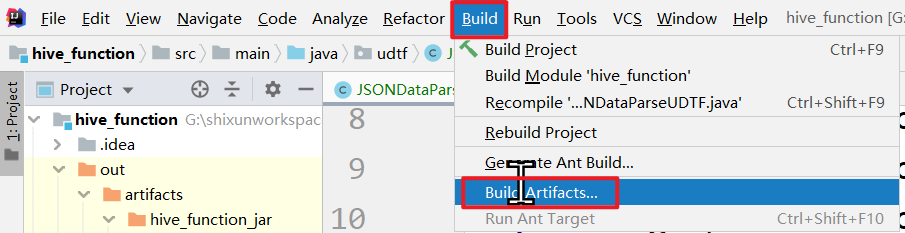

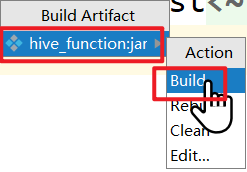

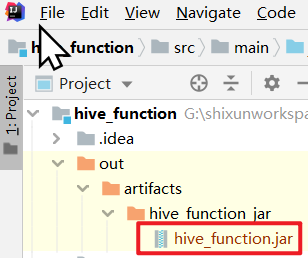

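Building the jar

Because the code depends on the third-party library fastjson, the jar from a plain Maven build is not enough: it contains only the project's own classes, not fastjson, so the jar has to be built with its dependencies bundled.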
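Before uploading the jar to HDFS, it can be worth confirming that it really contains both the UDTF class and fastjson. Below is a minimal sketch using only `java.util.jar.JarFile`; the class name `JarContentCheck` is hypothetical, and the required entry names are assumptions derived from the package names in the code above (`udtf.JSONDataParseUDTF`, `com.alibaba.fastjson.JSON`).

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.jar.JarFile;

public class JarContentCheck {

    // Assumed entry names, derived from the package names used in this post
    static final List<String> REQUIRED = Arrays.asList(
            "udtf/JSONDataParseUDTF.class",
            "com/alibaba/fastjson/JSON.class");

    // Returns the required entries that are absent from the jar at jarPath
    static List<String> missingEntries(String jarPath, List<String> required) throws IOException {
        List<String> missing = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath)) {
            for (String entry : required) {
                if (jar.getJarEntry(entry) == null) {
                    missing.add(entry);
                }
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java JarContentCheck hive_function.jar
        List<String> missing = missingEntries(args[0], REQUIRED);
        if (missing.isEmpty()) {
            System.out.println("OK: all required classes are packaged");
        } else {
            System.out.println("Missing from jar: " + missing);
        }
    }
}
```

An entry reported as missing means that class cannot be loaded from this jar when Hive registers the function.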

Running it in the shell

Data

```
[root@node1 data]# cat aa.txt
{"name":"zs","age":20,"sex":"man","phone":"13888888888"}
{"name":"ls","age":21,"sex":"woman","phone":"13123148888"}
{"name":"ww","age":22,"sex":"man","phone":"1388883456"}
{"name":"ml","age":23,"sex":"woman","phone":"1388883456"}
{"name":"zb","age":24,"sex":"man","phone":"1388885678"}
{"name":"wb","age":25,"sex":"woman","phone":"13888343488"}
{"name":"lb","age":26,"sex":"man","phone":"1388881188"}
```

The first attempt registered the jar from the plain Maven build, `hive_function-1.0-SNAPSHOT.jar`, and failed:

```
hive (default)> create temporary function parse_json_data as "udtf.JSONDataParseUDTF" using jar "hdfs://node1:9000/hive_function-1.0-SNAPSHOT.jar";
Added [/tmp/d0389ef2-b8cf-4739-b438-d9c595057ef5_resources/hive_function-1.0-SNAPSHOT.jar] to class path
Added resources: [hdfs://node1:9000/hive_function-1.0-SNAPSHOT.jar]
FAILED: Class udtf.JSONDataParseUDTF not found
FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.FunctionTask
```

Create a single-column table, load the data file, and check its contents:

```
hive (default)> create table user_info(userjson string) row format delimited fields terminated by '\n';
OK
Time taken: 4.959 seconds
hive (default)> load data local inpath "/opt/data/aa.txt" into table user_info;
Loading data to table default.user_info
OK
Time taken: 2.66 seconds
hive (default)> select * from user_info;
OK
user_info.userjson
{"name":"zs","age":20,"sex":"man","phone":"13888888888"}
{"name":"ls","age":21,"sex":"woman","phone":"13123148888"}
{"name":"ww","age":22,"sex":"man","phone":"1388883456"}
{"name":"ml","age":23,"sex":"woman","phone":"1388883456"}
{"name":"zb","age":24,"sex":"man","phone":"1388885678"}
{"name":"wb","age":25,"sex":"woman","phone":"13888343488"}
{"name":"lb","age":26,"sex":"man","phone":"1388881188"}
Time taken: 4.626 seconds, Fetched: 7 row(s)
```

Registering the function again with `hive_function.jar`, the jar built with fastjson included, succeeds, and the query returns one four-column row per JSON line:

```
hive (default)> create temporary function parse_json_data as "udtf.JSONDataParseUDTF" using jar "hdfs://node1:9000/hive_function.jar";
Added [/tmp/d0389ef2-b8cf-4739-b438-d9c595057ef5_resources/hive_function.jar] to class path
Added resources: [hdfs://node1:9000/hive_function.jar]
OK
Time taken: 0.273 seconds
hive (default)> select parse_json_data(userjson) from user_info;
OK
name age sex phone
zs 20 man 13888888888
ls 21 woman 13123148888
ww 22 man 1388883456
ml 23 woman 1388883456
zb 24 man 1388885678
wb 25 woman 13888343488
lb 26 man 1388881188
Time taken: 1.129 seconds, Fetched: 7 row(s)
```

This article is from cnblogs, author: jsqup. When reposting, please credit the original link: https://www.cnblogs.com/jsqup/p/16550792.html
