- getSplits:对文件进行切片

- createRecordReader:将文件数据转换成key-value的格式

TextInputFormat:默认实现子类

| 读取的文件数据机制(类型:LongWritable Text): |

| 以文件每一行的偏移量为key,每一行的数据为value进行key-value转换的 |

| |

| 切片机制: |

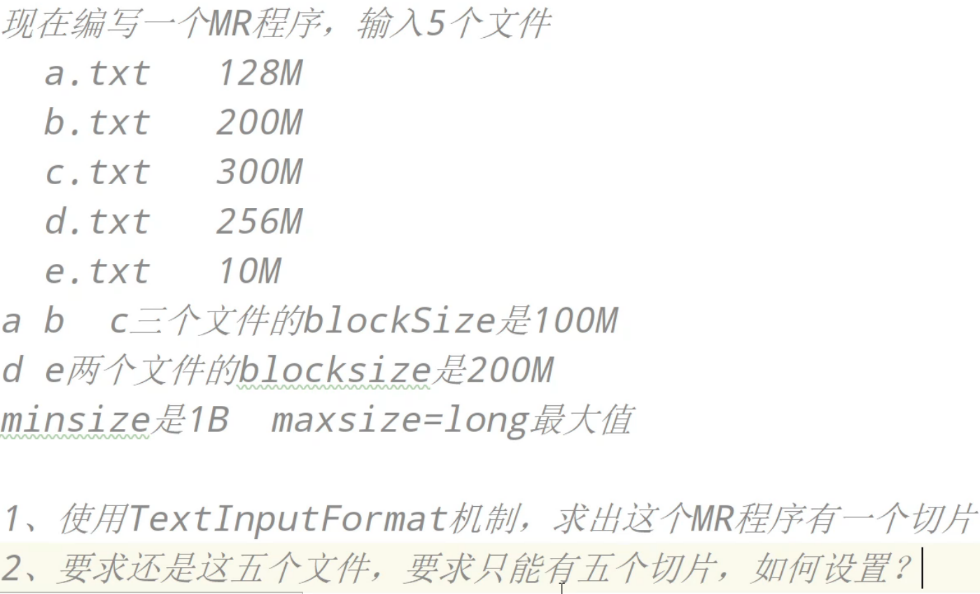

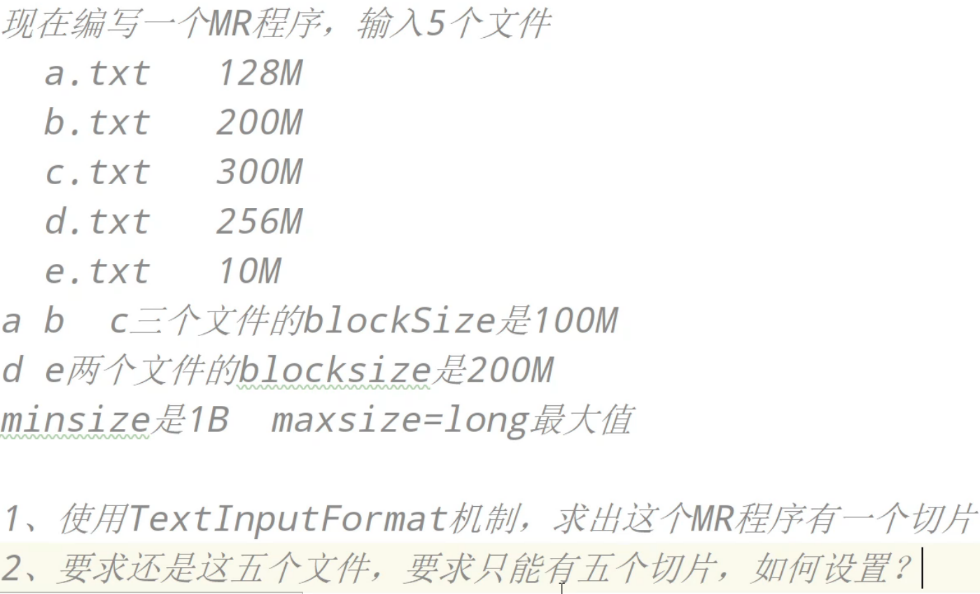

| 1. 有三个参数,minSize,maxSize,blockSize |

| Math.max(minSize, Math.min(maxSize, blockSize)); |

| 2. 先求出maxSize和blockSize的最大值,然后将求出的最小值和minsize求最大值,最大值就是切片的大小 |

| 3. maxsize和minsize是可以调整大小的,大小一调整,切片的大小就调整了 |

| 4. 如果处理的数据是多个文件,一个文件最少一个块 |

| 5. 两个文件数据,a 100M b 300M blocksize 128M |

| 4个切片 |

| a 单独一个切片 |

| b 三个切片 |

| |

| 切片机制再理解: |

| 1. 获取输入的所有文件状态FileStatus |

| 2. 如果有多个文件,每一个文件单独切片 5 |

| 3. 每一个文件单独切片之前,都会先判断一下文件是否能被切割(压缩包一般不能被切割的) |

| 4. 如果文件能被切割,那么就会按照公式进行切片 |

| Math.max(minSize, Math.min(maxSize, blockSize)); |

| 举例:三个文件 a.txt 10M b.txt 100M c.txt 200M minsize 1 maxsize:Long_MAX_VALUE blocksize 128M |

| 一共分成4片 |

举例

- 10片

- 将minSize调为300M

CombinerTextInputFormat

| 作用: |

| 专门用来进行大量的小文件的处理 |

| |

| 读取的文件数据机制(类型:LongWritable Text): |

| 以文件每一行的偏移量为key,每一行的数据为value进行key-value转换的,同TextInputFormat |

| |

| 切片机制: |

| 1. 先指定一个小文件的最大值 |

| 2. 逻辑切片: |

| 对每一个文件按照设定值进行切割,如果小文件不大于指定值,那么是一块, |

| 如果大于指定值但是小于指定值的两倍,平均切割成两个块, |

| 如果大于指定值并且大于指定值的两倍,先按照最大值切成一个块,剩余值看看多大 |

| 3. 物理切片: |

| 将分割好的块,前一个和后一个块累加,如果大于指定值,物理上分为一个切片 |

| |

| |

| import org.apache.hadoop.mapreduce.lib.input.CombineTextInputFormat; |

| # 设置实现切片的子类:CombineTextInputFormat, 在设置输入路径前设置 |

| job.setInputFormatClass(CombineTextInputFormat.class); |

| FileInputFormat.setInputPaths(job, new Path("")); |

| # 设置指定切片值 |

| CombineTextInputFormat.setMaxInputSplitSize(job, 70*1024*1024); |

举例

| 举例:设置指定值是10M,求5个文件大小为如下5M,11M,21M,8M,15M,求最终的切片值。 |

| 解: |

| 先逻辑切片:5M 5.5M 5.5M 10M 5.5M 5.5M 8M 7.5M 7.5M |

| 再物理切片:10.5M 15.5M 11M 15.5M 7.5M |

| 读取的文件数据机制(类型:LongWritable Text): |

| 以文件每一行的偏移量为key,每一行的数据为value进行key-value转换的,同TextInputFormat |

| |

| 切片机制: |

| 1. 不是按照文件切片,按照指定的行数进行切片 |

| 2. 一个文件至少一个切片,每个文件按照行数切割,不会将两个文件合并到一块儿 |

| |

| |

| |

| package com.sxuek.NLineInputFormat; |

| import org.apache.hadoop.io.LongWritable; |

| import org.apache.hadoop.io.NullWritable; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.Mapper; |

| import java.io.IOException; |

| public class TestMapper extends Mapper<LongWritable, Text, Text, NullWritable> { |

| @Override |

| protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { |

| System.out.println(key.get()+"="+value); |

| context.write(value, NullWritable.get()); |

| } |

| } |

| |

| |

| package com.sxuek.NLineInputFormat; |

| import org.apache.hadoop.conf.Configuration; |

| import org.apache.hadoop.fs.FileSystem; |

| import org.apache.hadoop.fs.Path; |

| import org.apache.hadoop.io.NullWritable; |

| import org.apache.hadoop.mapreduce.Job; |

| import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; |

| import org.apache.hadoop.mapreduce.lib.input.NLineInputFormat; |

| import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; |

| import org.junit.Test; |

| import java.io.IOException; |

| import java.net.URI; |

| import java.net.URISyntaxException; |

| public class TestDriver { |

| public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException, URISyntaxException { |

| Configuration conf = new Configuration(); |

| conf.set("fs.defaultFS", "hdfs://node1:9000"); |

| Job job = Job.getInstance(conf); |

| job.setJarByClass(TestDriver.class); |

| |

| job.setMapperClass(TestMapper.class); |

| job.setMapOutputKeyClass(Test.class); |

| job.setMapOutputValueClass(NullWritable.class); |

| |

| job.setNumReduceTasks(0); |

| |

| job.setInputFormatClass(NLineInputFormat.class); |

| NLineInputFormat.setNumLinesPerSplit(job, 5); |

| |

| FileInputFormat.setInputPaths(job, new Path("/wc.txt")); |

| |

| Path path = new Path("/output"); |

| FileSystem fs = FileSystem.get(new URI("hdfs://node1:9000"), conf, "root"); |

| if (fs.exists(path)) { |

| fs.delete(path, true); |

| } |

| FileOutputFormat.setOutputPath(job, path); |

| |

| boolean flag = job.waitForCompletion(true); |

| if (flag) { |

| System.out.println("成功"+flag); |

| } else { |

| System.out.println("失败"+flag); |

| } |

| } |

| } |

| 切片机制: |

| 与TextInputFormat是一致的 |

| |

| 读取的文件数据机制: |

| 指定一个分隔符,对每一行数据使用分隔符切割,切割之后的第一个字段为key。剩余字段为value。默认分割符是\t。 |

| |

| |

| package com.sxuek.NLineInputFormat; |

| import org.apache.hadoop.io.NullWritable; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.Mapper; |

| import java.io.IOException; |

| public class TestMapper extends Mapper<Text, Text, Text, NullWritable> { |

| @Override |

| protected void map(Text key, Text value, Context context) throws IOException, InterruptedException { |

| System.out.println("key的值为"+key.toString()+",value的值为"+value.toString()); |

| context.write(value, NullWritable.get()); |

| } |

| } |

| |

| |

| package com.sxuek.KeyValueInputFormat; |

| import org.apache.hadoop.conf.Configuration; |

| import org.apache.hadoop.fs.FileSystem; |

| import org.apache.hadoop.fs.Path; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.Job; |

| import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; |

| import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat; |

| import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; |

| import java.io.IOException; |

| import java.net.URI; |

| import java.net.URISyntaxException; |

| public class TestDriver { |

| public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException, URISyntaxException { |

| Configuration conf = new Configuration(); |

| conf.set("fs.defaultFS", "hdfs://node1:9000"); |

| |

| conf.set("mapreduce.input.keyvaluelinerecordreader.key.value.separator", "="); |

| |

| Job job = Job.getInstance(conf); |

| job.setJarByClass(TestDriver.class); |

| |

| job.setMapOutputKeyClass(Text.class); |

| job.setMapOutputValueClass(Text.class); |

| |

| job.setNumReduceTasks(0); |

| |

| job.setInputFormatClass(KeyValueTextInputFormat.class); |

| |

| FileInputFormat.setInputPaths(job, new Path("/aa.txt")); |

| |

| Path path = new Path("/output"); |

| FileSystem fs = FileSystem.get(new URI("hdfs://node1:9000"), conf, "root"); |

| if (fs.exists(path)) { |

| fs.delete(path, true); |

| } |

| FileOutputFormat.setOutputPath(job, path); |

| |

| boolean flag = job.waitForCompletion(true); |

| if (flag) { |

| System.out.println("成功"+flag); |

| } else { |

| System.out.println("失败"+flag); |

| } |

| } |

| } |

| 方法: |

| 1. 继承FileInputFormat类 |

| 2. 如果改写切片机制,重写getSplit方法 |

| 3. 如果改写mapper阶段接受的key-value类型以及key-value的含义,重写createRecordReader方法 |

| |

| 重点两个方法: |

| 1. getSplits():先执行,对输入数据源进行切片,如果你想FileInputFormat默认切片机制 |

| 那么这个方法就不需要重写了 |

| 2. createRecordReader方法: |

| 切片完成之后,每一个切片需要一个MapTask任务去处理,map任务在处理切片的时候, |

| 需要读取切片数据成为key-value键值对。怎么读取,一次读取一行还是一次读取一个文件。 |

| |

| TestMapper类: |

| package com.sxuek.SelfInputFormat; |

| import org.apache.hadoop.io.NullWritable; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.Mapper; |

| import java.io.IOException; |

| public class TestMapper extends Mapper<Text, Text, Text, NullWritable> { |

| @Override |

| protected void map(Text key, Text value, Context context) throws IOException, InterruptedException { |

| System.out.println("key的值为"+key.toString()+",value的值为"+value.toString()); |

| context.write(value, NullWritable.get()); |

| } |

| } |

| |

| |

| TestDriver类: |

| package com.sxuek.SelfInputFormat; |

| import org.apache.hadoop.conf.Configuration; |

| import org.apache.hadoop.fs.FileSystem; |

| import org.apache.hadoop.fs.Path; |

| import org.apache.hadoop.io.NullWritable; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.Job; |

| import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; |

| import org.apache.hadoop.mapreduce.lib.input.NLineInputFormat; |

| import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; |

| import org.junit.Test; |

| import java.io.IOException; |

| import java.net.URI; |

| import java.net.URISyntaxException; |

| public class TestDriver { |

| public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException, URISyntaxException { |

| Configuration conf = new Configuration(); |

| conf.set("fs.defaultFS", "hdfs://node1:9000"); |

| Job job = Job.getInstance(conf); |

| job.setJarByClass(TestDriver.class); |

| |

| job.setMapperClass(TestMapper.class); |

| job.setMapOutputKeyClass(Text.class); |

| job.setMapOutputValueClass(Text.class); |

| |

| job.setNumReduceTasks(0); |

| |

| job.setInputFormatClass(CustomInputFormat.class); |

| |

| FileInputFormat.setInputPaths(job, new Path("/wc.txt")); |

| |

| Path path = new Path("/output"); |

| FileSystem fs = FileSystem.get(new URI("hdfs://node1:9000"), conf, "root"); |

| if (fs.exists(path)) { |

| fs.delete(path, true); |

| } |

| FileOutputFormat.setOutputPath(job, path); |

| |

| boolean flag = job.waitForCompletion(true); |

| if (flag) { |

| System.out.println("成功"+flag); |

| } else { |

| System.out.println("失败"+flag); |

| } |

| } |

| } |

| |

| |

| CustomInputFormat类: |

| package com.sxuek.SelfInputFormat; |

| import org.apache.hadoop.fs.Path; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.InputSplit; |

| import org.apache.hadoop.mapreduce.JobContext; |

| import org.apache.hadoop.mapreduce.RecordReader; |

| import org.apache.hadoop.mapreduce.TaskAttemptContext; |

| import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; |

| import java.io.IOException; |

| |

| |

| |

| |

| |

| public class CustomInputFormat extends FileInputFormat<Text, Text> { |

| |

| |

| |

| |

| |

| |

| |

| @Override |

| protected boolean isSplitable(JobContext context, Path filename) { |

| return false; |

| } |

| |

| |

| |

| |

| |

| |

| |

| |

| |

| public RecordReader<Text, Text> createRecordReader(InputSplit inputSplit, TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException { |

| CustomRecordReader crr = new CustomRecordReader(); |

| crr.initialize(inputSplit, taskAttemptContext); |

| return crr; |

| } |

| } |

| |

| |

| CustomRecordReader类: |

| package com.sxuek.SelfInputFormat; |

| import org.apache.hadoop.conf.Configuration; |

| import org.apache.hadoop.fs.FSDataInputStream; |

| import org.apache.hadoop.fs.FileSystem; |

| import org.apache.hadoop.fs.Path; |

| import org.apache.hadoop.io.Text; |

| import org.apache.hadoop.mapreduce.InputSplit; |

| import org.apache.hadoop.mapreduce.RecordReader; |

| import org.apache.hadoop.mapreduce.TaskAttemptContext; |

| import org.apache.hadoop.mapreduce.lib.input.FileSplit; |

| import java.io.IOException; |

| |

| |

| |

| public class CustomRecordReader extends RecordReader<Text, Text> { |

| private FileSplit fileSplit; |

| private Configuration conf; |

| private Text key; |

| private Text value; |

| private boolean flag = true; |

| |

| |

| public void initialize(InputSplit inputSplit, TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException { |

| this.fileSplit = (FileSplit) inputSplit; |

| this.conf = taskAttemptContext.getConfiguration(); |

| } |

| |

| |

| |

| |

| |

| |

| public boolean nextKeyValue() throws IOException, InterruptedException { |

| |

| if (flag) { |

| |

| Path path = fileSplit.getPath(); |

| System.out.println(path); |

| |

| |

| FileSystem fs = path.getFileSystem(conf); |

| |

| String name = path.getName(); |

| |

| this.key = new Text(name); |

| |

| FSDataInputStream inputStream = fs.open(path); |

| byte[] buf = new byte[inputStream.available()]; |

| inputStream.read(buf); |

| String value = new String(buf); |

| this.value = new Text(value); |

| |

| flag = false; |

| return true; |

| } else { |

| return false; |

| } |

| } |

| |

| public Text getCurrentKey() throws IOException, InterruptedException { |

| return this.key; |

| } |

| |

| public Text getCurrentValue() throws IOException, InterruptedException { |

| return this.value; |

| } |

| |

| public float getProgress() throws IOException, InterruptedException { |

| return 0; |

| } |

| |

| public void close() throws IOException { |

| |

| } |

| } |

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· AI 智能体引爆开源社区「GitHub 热点速览」

· 三行代码完成国际化适配,妙~啊~

· .NET Core 中如何实现缓存的预热?