Installing OpenStack Pike by Following the Official Guide: Creating and Launching an Instance

With the earlier components (keystone, glance, nova, neutron) installed, you can now create and launch an instance from the command line.

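Installing OpenStack Pike by Following the Official Guide: Environment Setup

Installing OpenStack Pike by Following the Official Guide: Installing keystone

Installing OpenStack Pike by Following the Official Guide: Installing glance

Installing OpenStack Pike by Following the Official Guide: Installing neutron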

Creating and launching an instance involves the following steps:

1. Create a virtual network (this setup uses networking option 1: provider networks)

Create virtual networks for the networking option that you chose when configuring Neutron. If you chose option 1, create only the provider network. If you chose option 2, create the provider and self-service networks.

# source admin-openrc

Create the network:

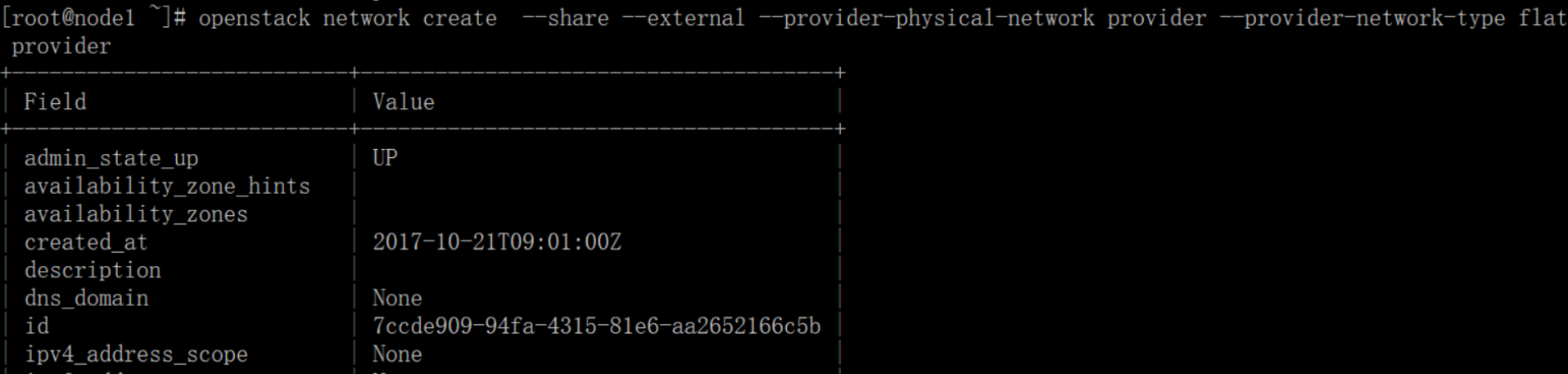

# openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

The --share option allows all projects to use the virtual network.

The --external option defines the virtual network to be external. If you wish to create an internal network, you can use --internal instead. Default value is internal.

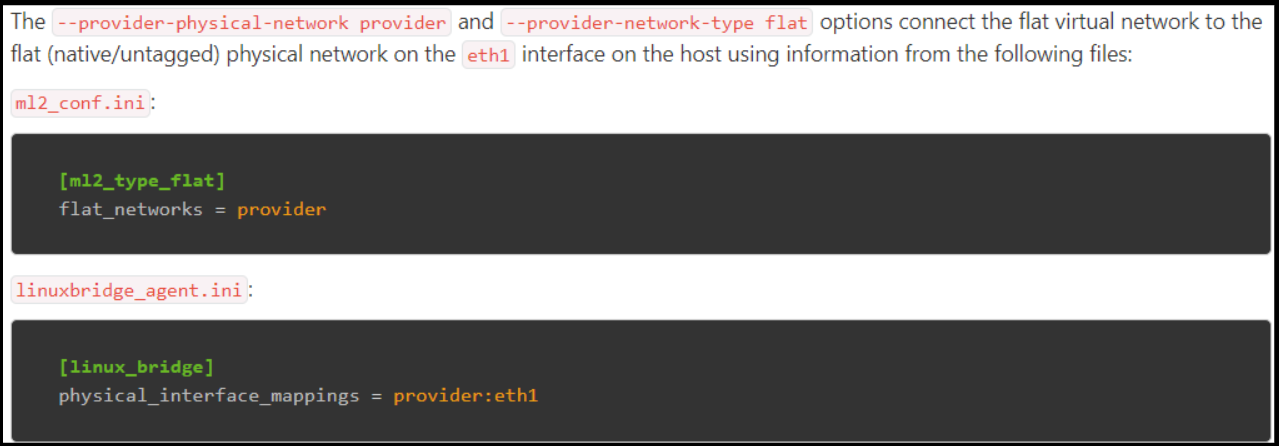

The --provider-physical-network provider and --provider-network-type flat options connect the flat virtual network to the flat (native/untagged) physical network on the eth1 interface on the host using information from the following files:

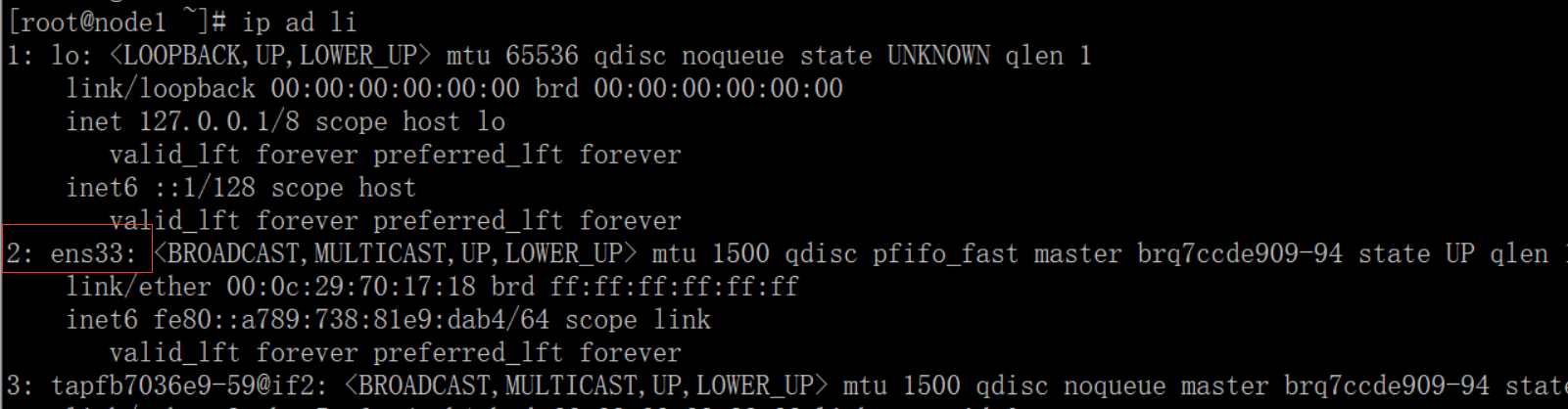

The local network interface in this environment is named ens33, so eth1 is replaced with ens33 in the configuration here.
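As a quick check of the interface mapping, the sketch below reads the `physical_interface_mappings` option from a Linux bridge agent configuration file and prints the interface mapped to a given physical network. The function name `mapped_interface` is a hypothetical helper, and the path in the usage note assumes the layout from the official Pike guide (`/etc/neutron/plugins/ml2/linuxbridge_agent.ini`).

```shell
# Hypothetical helper: print the interface mapped to a physical network.
# $1: physical network name (for example: provider)
# $2: path to the Linux bridge agent configuration file
mapped_interface() {
    physnet="$1"
    conf="$2"
    # Extract the value of physical_interface_mappings, split the
    # comma-separated pairs, and print the interface for the matching network.
    sed -n 's/^[[:space:]]*physical_interface_mappings[[:space:]]*=[[:space:]]*//p' "$conf" |
        tr ',' '\n' |
        awk -F: -v net="$physnet" '{ gsub(/[[:space:]]/, "") } $1 == net { print $2 }'
}
```

On the node running the agent, `mapped_interface provider /etc/neutron/plugins/ml2/linuxbridge_agent.ini` should print ens33 if the mapping was changed as described.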

Create a subnet on the network:

# openstack subnet create --network provider --allocation-pool start=192.168.101.100,end=192.168.101.200 --dns-nameserver 192.168.101.2 --gateway 192.168.101.2 --subnet-range 192.168.101.0/24 provider

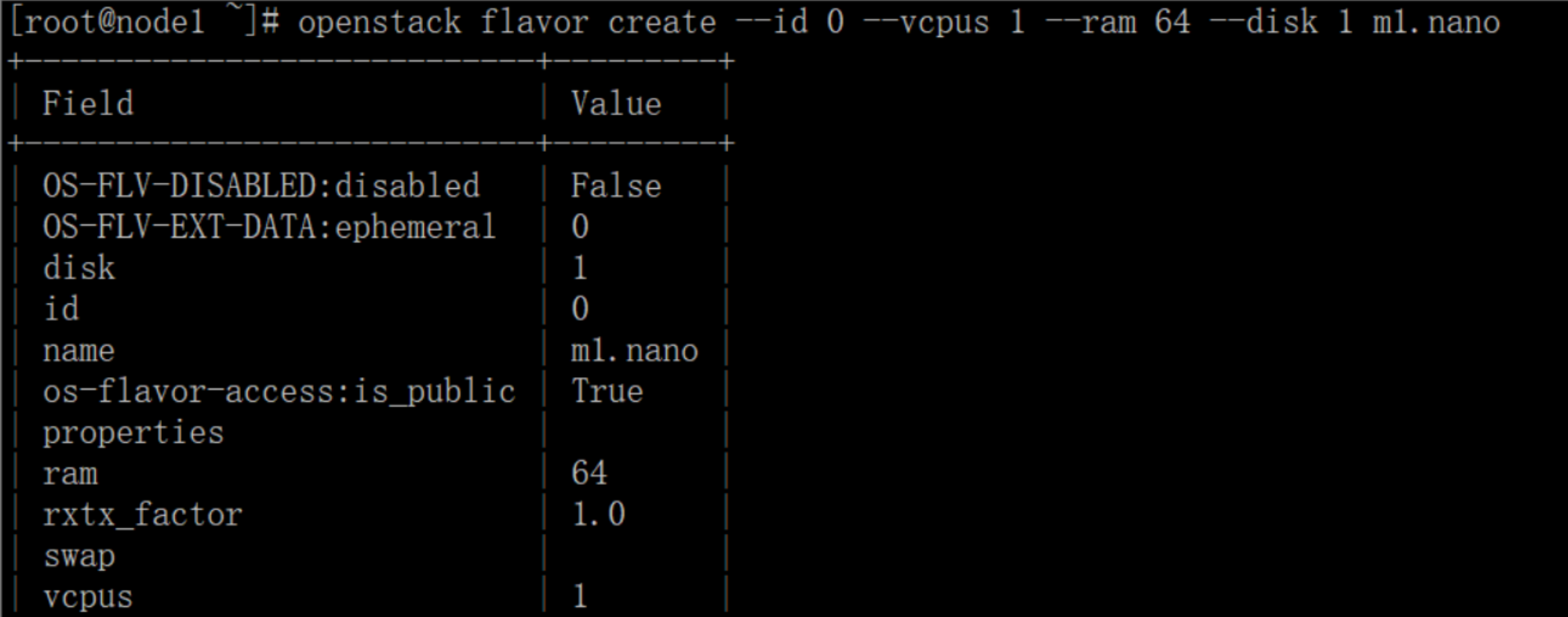

Create m1.nano flavor:

# openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

Generate a key pair

Most cloud images support public key authentication rather than conventional password authentication. Before launching an instance, you must add a public key to the Compute service.

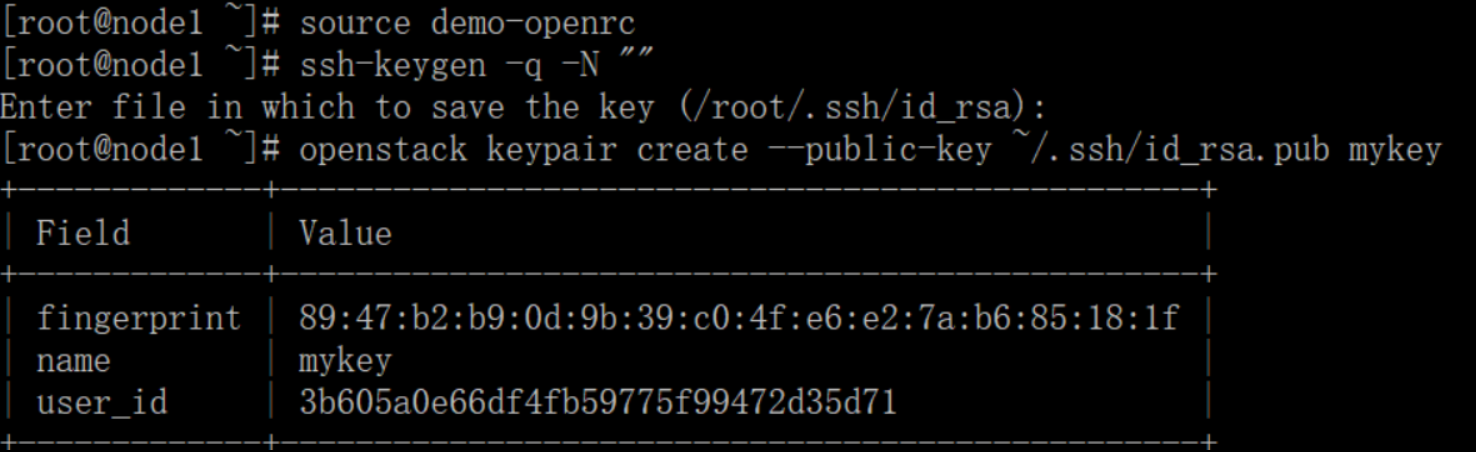

Source the demo project credentials:

# source demo-openrc

Generate a key pair and add a public key:

# ssh-keygen -q -N ""

# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

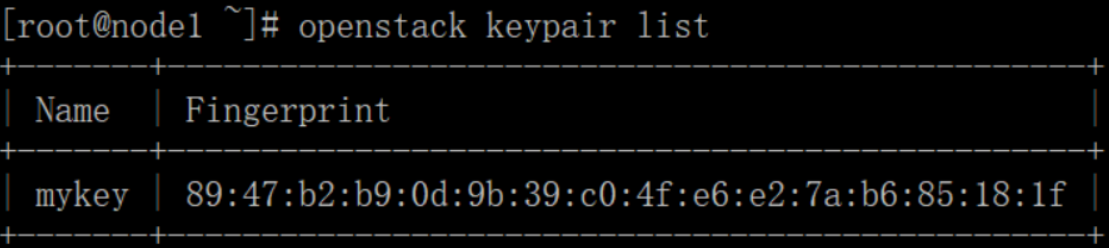
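If the steps are repeated, ssh-keygen will prompt before overwriting an existing key. A minimal sketch of a guard, assuming the default key path `~/.ssh/id_rsa`; the function name `ensure_ssh_key` is a hypothetical helper, not part of the official guide:

```shell
# Hypothetical helper: generate a key pair only when none exists yet,
# then print the path of the public key.
# $1: private key path (defaults to ~/.ssh/id_rsa)
ensure_ssh_key() {
    key="${1:-$HOME/.ssh/id_rsa}"
    # -f sets the output file so ssh-keygen does not prompt for a path.
    [ -f "$key" ] || ssh-keygen -q -N "" -f "$key"
    echo "$key.pub"
}
```

It could then be combined with the command above as `openstack keypair create --public-key "$(ensure_ssh_key)" mykey`.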

Verify addition of the key pair:

# openstack keypair list

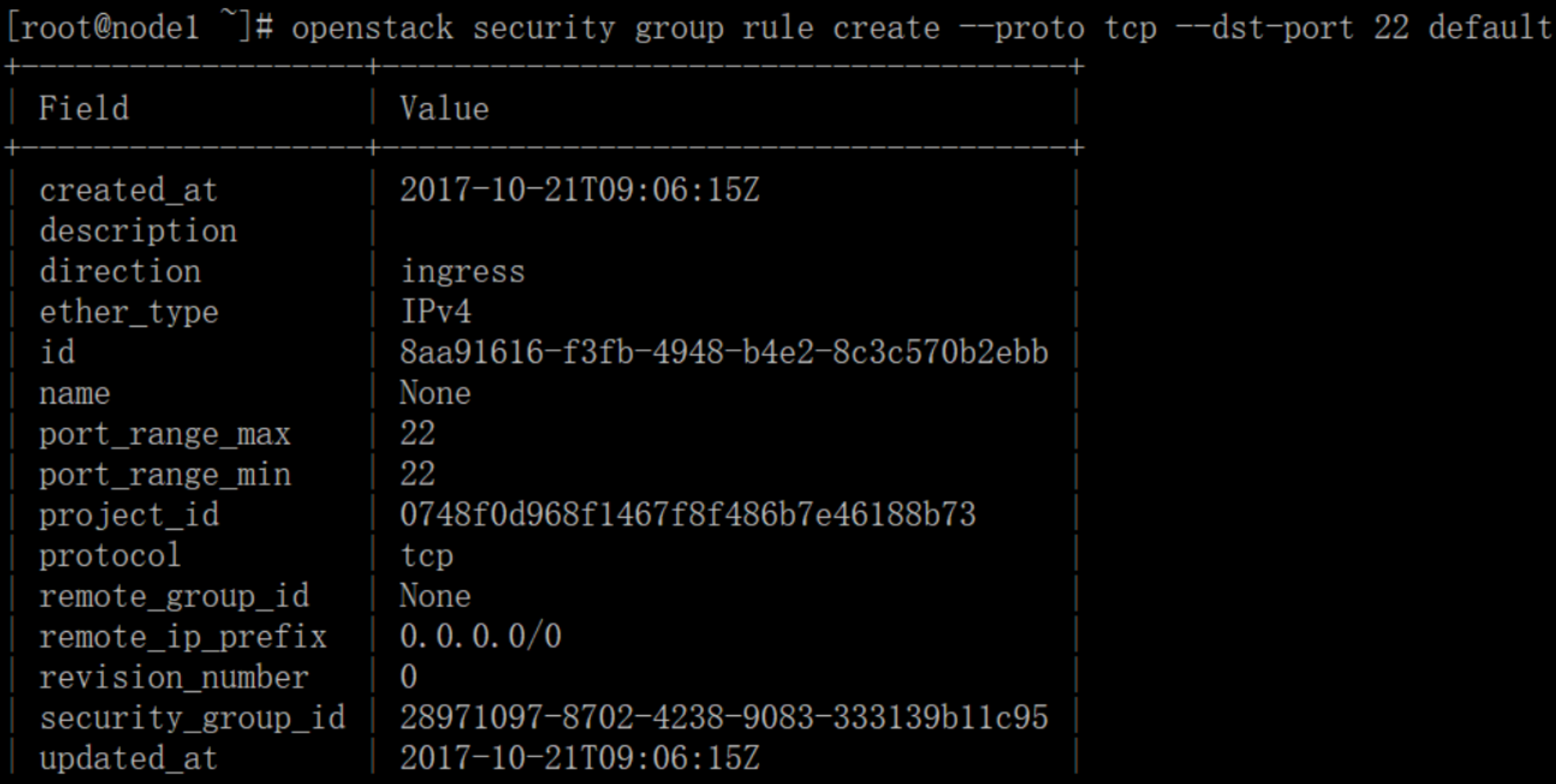

Add security group rules

By default, the default security group applies to all instances and includes firewall rules that deny remote access to instances. For Linux images such as CirrOS, we recommend allowing at least ICMP (ping) and secure shell (SSH).

Add rules to the default security group:

Permit ICMP (ping):

# openstack security group rule create --proto icmp default

Permit secure shell (SSH) access:

# openstack security group rule create --proto tcp --dst-port 22 default

Launch an instance

If you chose networking option 1, you can only launch an instance on the provider network. If you chose networking option 2, you can launch an instance on the provider network and the self-service network.

Before launching an instance, you must specify the flavor, image name, network, security group, key, and instance name.

On the controller node, source the demo credentials to gain access to user-only CLI commands:

# source demo-openrc

A flavor specifies a virtual resource allocation profile which includes processor, memory, and storage.

List available flavors:

# openstack flavor list

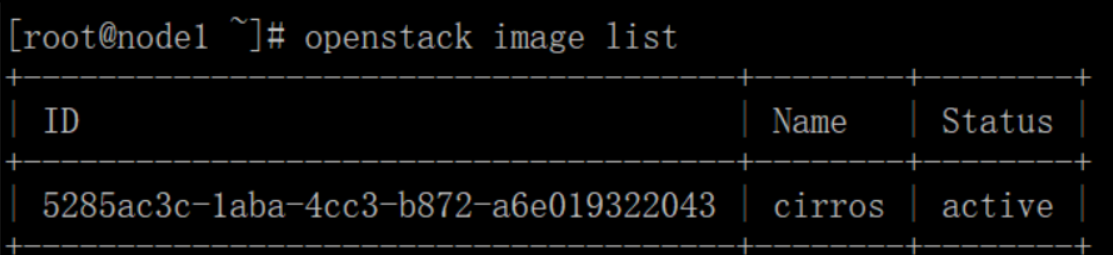

List available images:

# openstack image list

List available networks:

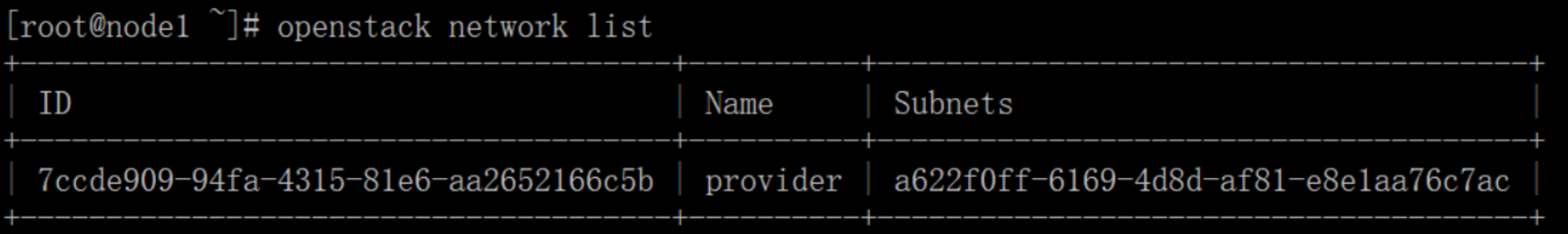

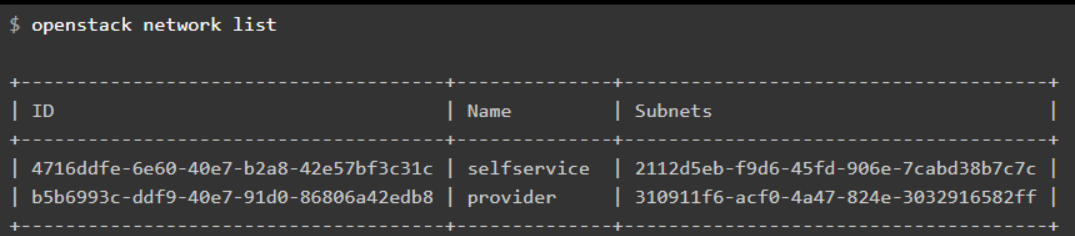

# openstack network list

Because provider networks were chosen, the output looks like the above. With option 2 it would look like this:

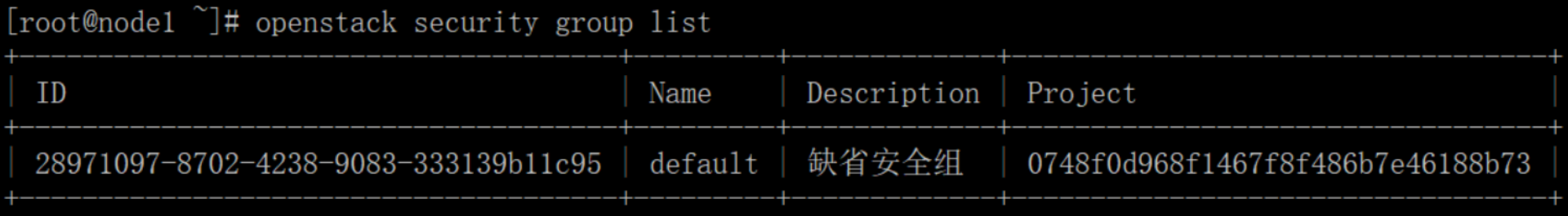

List available security groups:

# openstack security group list

Now launch an instance:

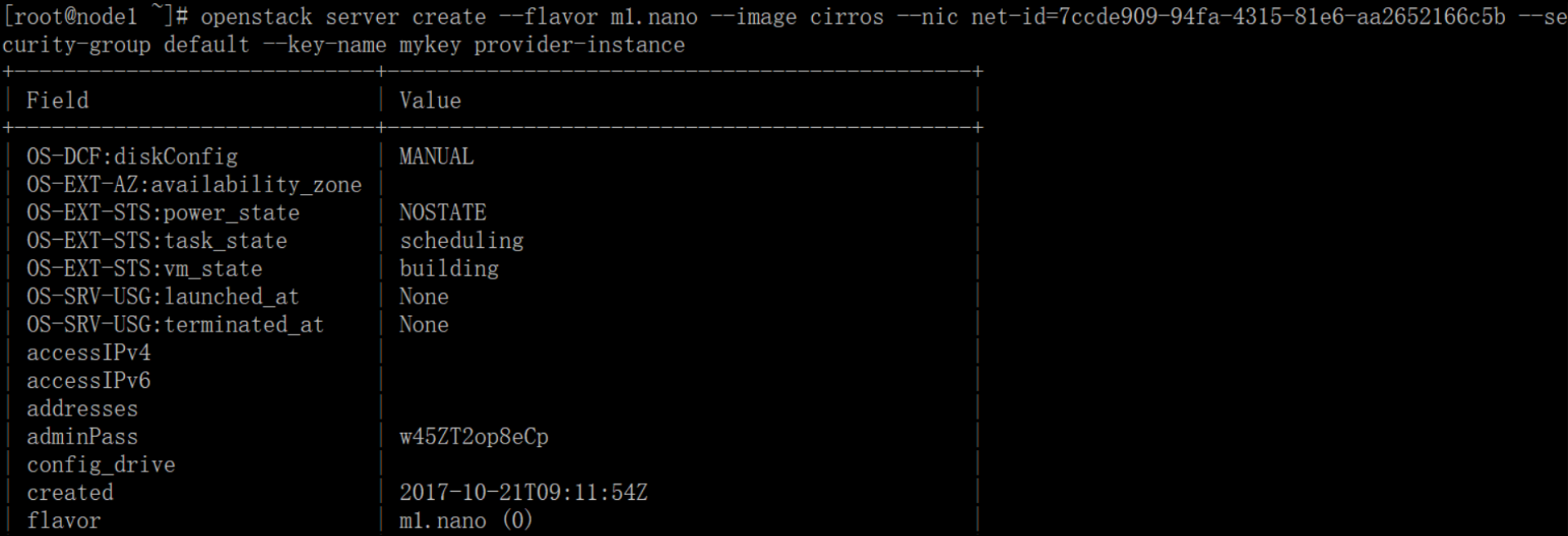

Launch the instance:

Replace PROVIDER_NET_ID with the ID of the provider network.

# openstack server create --flavor m1.nano --image cirros --nic net-id=PROVIDER_NET_ID --security-group default --key-name mykey provider-instance

If you chose option 1 and your environment contains only one network, you can omit the --nic option because OpenStack automatically chooses the only network available.

Replace PROVIDER_NET_ID with the ID shown in the network list above, 7ccde909-94fa-4315-81e6-aa2652166c5b:

# openstack server create --flavor m1.nano --image cirros --nic net-id=7ccde909-94fa-4315-81e6-aa2652166c5b --security-group default --key-name mykey provider-instance

The last argument is the instance name, which can be anything you like.
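Instead of copying the network ID by hand, it can be looked up by name. The sketch below is one way to do that, assuming the flavor, image, security group, and key names used in this walkthrough; `launch_on_network` is a hypothetical helper name. It relies on the client's `-f value -c id` output options to print only the ID column.

```shell
# Hypothetical helper: resolve a network name to its ID, then launch an instance on it.
# $1: network name (for example: provider)
# $2: instance name
launch_on_network() {
    net_name="$1"
    server_name="$2"
    # -f value -c id prints just the ID, with no table decoration.
    net_id=$(openstack network show "$net_name" -f value -c id) || return 1
    openstack server create --flavor m1.nano --image cirros \
        --nic net-id="$net_id" --security-group default \
        --key-name mykey "$server_name"
}
```

For example, `launch_on_network provider provider-instance` should be equivalent to the command above once the demo credentials are sourced.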

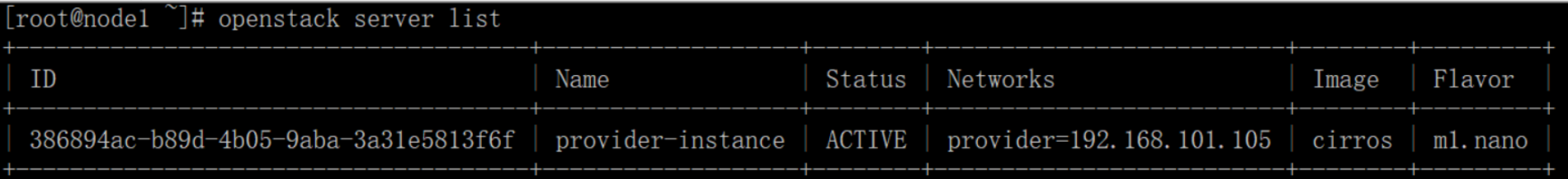

Check the status of your instance:

# openstack server list

The status changes from BUILD to ACTIVE when the build process successfully completes.

The output shows that the instance has already been assigned an IP address.

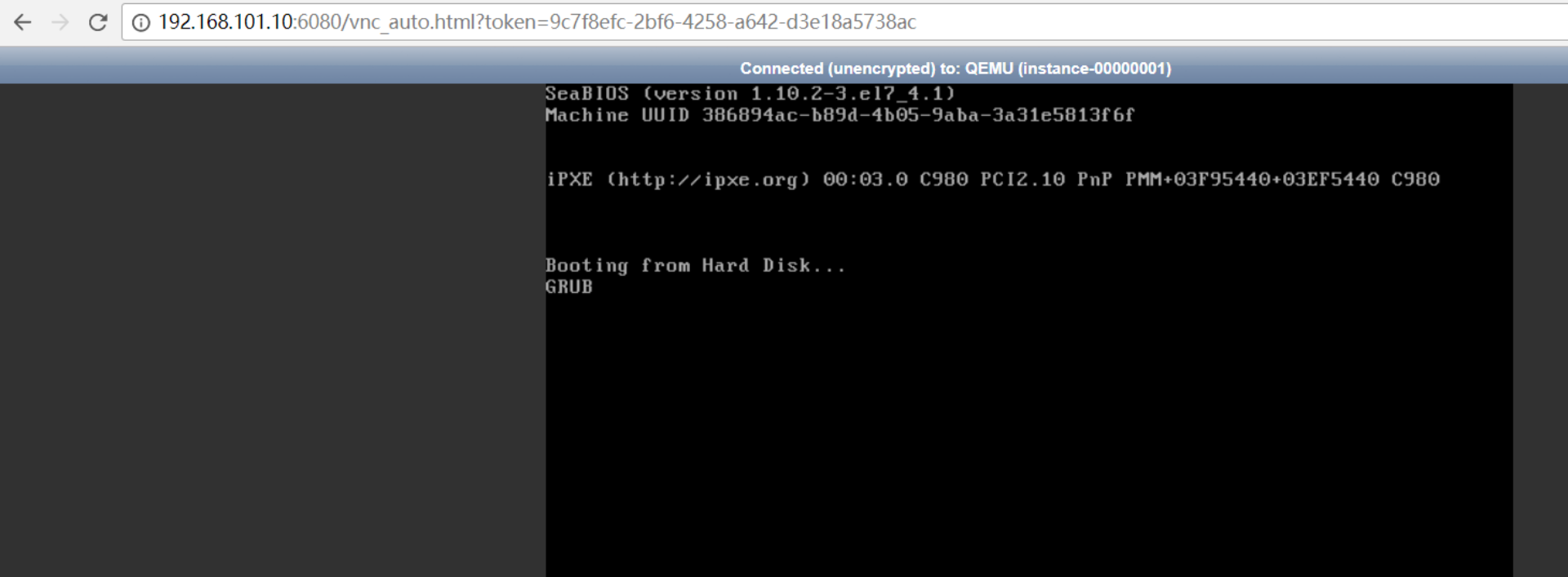
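Rather than re-running `openstack server list` by hand, the BUILD to ACTIVE transition can be polled. This is a minimal sketch; the function name `wait_for_active` and its default retry count and delay are assumptions chosen for illustration.

```shell
# Hypothetical helper: poll an instance until it reaches ACTIVE.
# $1: instance name or ID
# $2: maximum number of polls (default 30)
# $3: seconds between polls (default 5)
wait_for_active() {
    server="$1"
    tries="${2:-30}"
    delay="${3:-5}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        status=$(openstack server show "$server" -f value -c status) || return 2
        case "$status" in
            ACTIVE) echo "$server is ACTIVE"; return 0 ;;
            ERROR)  echo "$server entered ERROR" >&2; return 1 ;;
        esac
        i=$((i + 1))
        sleep "$delay"
    done
    echo "timed out waiting for $server" >&2
    return 1
}
```

For example, `wait_for_active provider-instance` returns success once the build completes and a non-zero status if the instance ends up in ERROR or the polls run out.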

Access the instance using the virtual console

Obtain a Virtual Network Computing (VNC) session URL for your instance and access it from a web browser:

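# openstack console url show provider-instance

The last argument is the instance name.

Now open the URL in a browser:

The instance stayed stuck at the GRUB screen. The fix:

Edit the configuration file /etc/nova/nova.conf on the compute node as follows: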

[libvirt]
virt_type = qemu
cpu_mode = none

Restart the services:

# systemctl restart libvirtd.service openstack-nova-compute.service

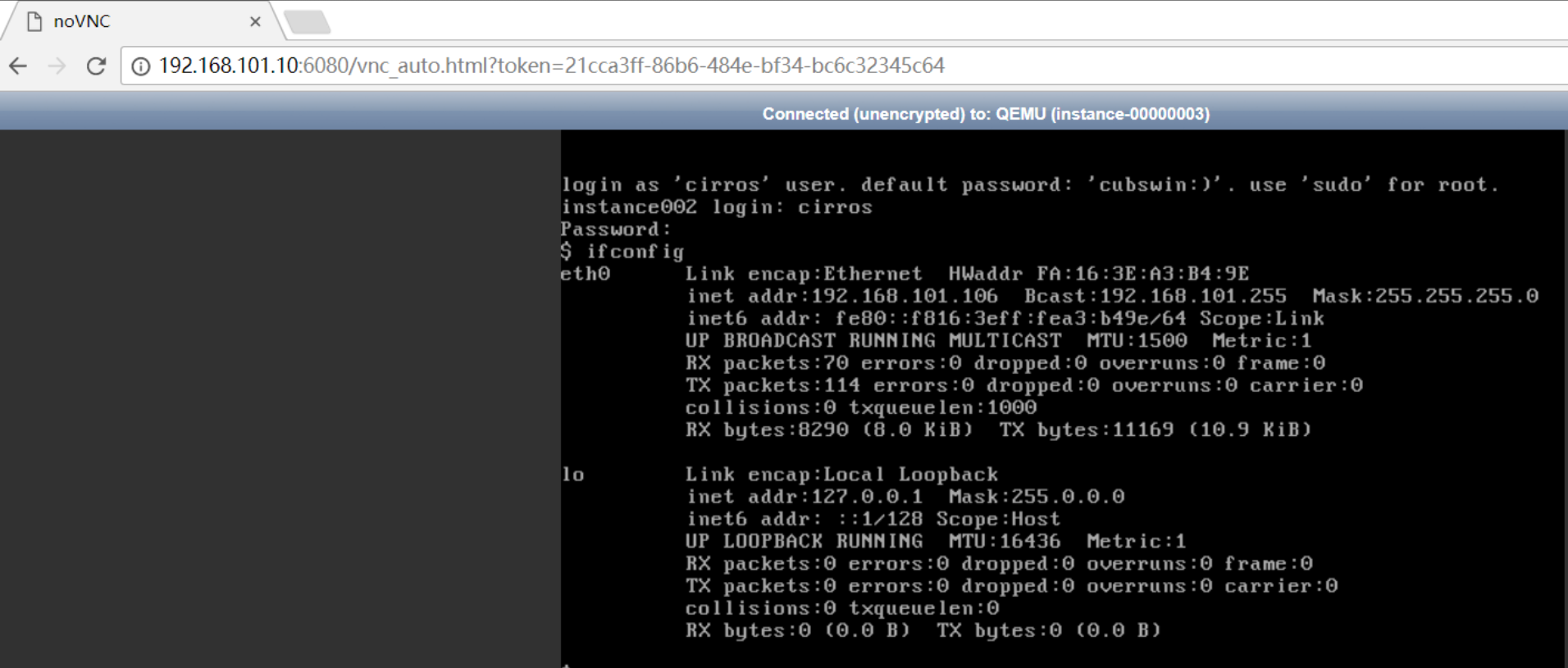
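Whether `virt_type = qemu` is needed depends on whether the compute node exposes hardware virtualization. The official guide checks for the vmx or svm CPU flags in /proc/cpuinfo; the sketch below wraps that check. The function name `suggest_virt_type` is a hypothetical helper, and the result is only a hint, not a diagnosis of the GRUB hang described above.

```shell
# Hypothetical helper: suggest a libvirt virt_type based on CPU flags.
# $1: path to a cpuinfo file (defaults to /proc/cpuinfo)
suggest_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # vmx (Intel) or svm (AMD) indicates hardware acceleration is available.
    if grep -Eq '(vmx|svm)' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}
```

Running `suggest_virt_type` on a compute node that is itself a virtual machine without nested virtualization would typically print qemu.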

Restart the nova services on the controller node:

# systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Then create a new virtual machine from the controller node:

# source demo-openrc

# openstack server create --flavor m1.nano --image cirros001 --nic net-id=7ccde909-94fa-4315-81e6-aa2652166c5b --security-group default --key-name mykey instance002

# openstack server list

# openstack console url show instance002

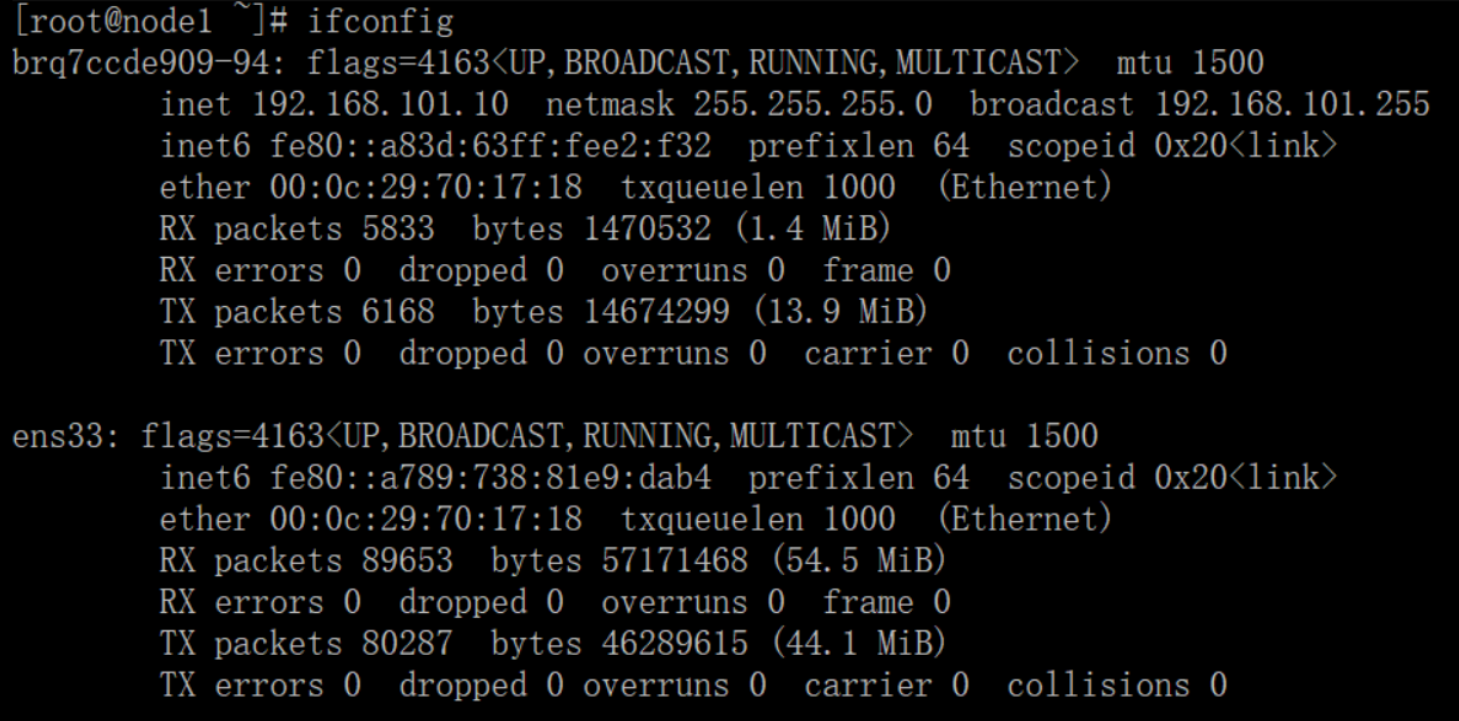

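View the virtual machine information on the compute node:

The virtual machine ID here matches the ID shown by openstack server list on the controller node.

Logs for each component:

# grep 'ERROR' /var/log/nova/*

# grep 'ERROR' /var/log/neutron/*

# grep 'ERROR' /var/log/glance/*

# grep 'ERROR' /var/log/keystone/*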

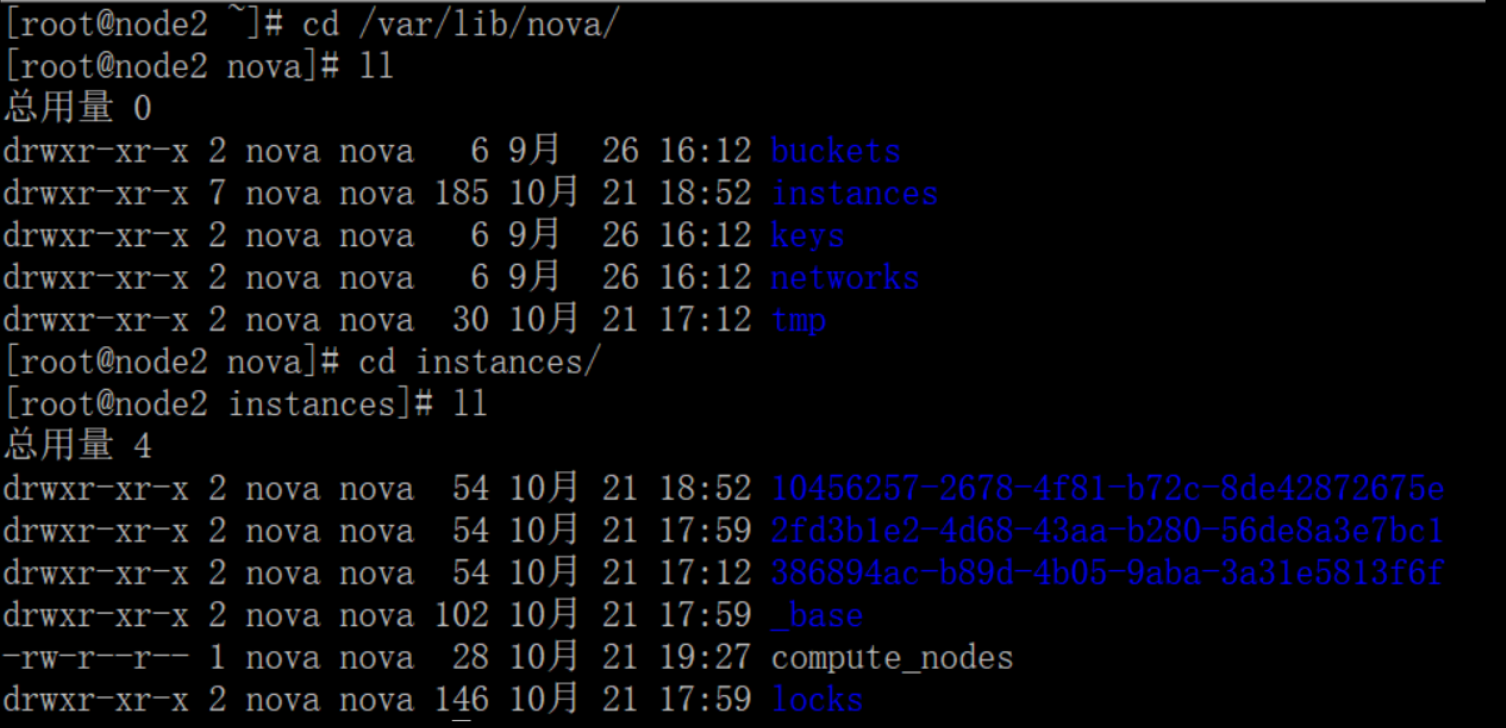

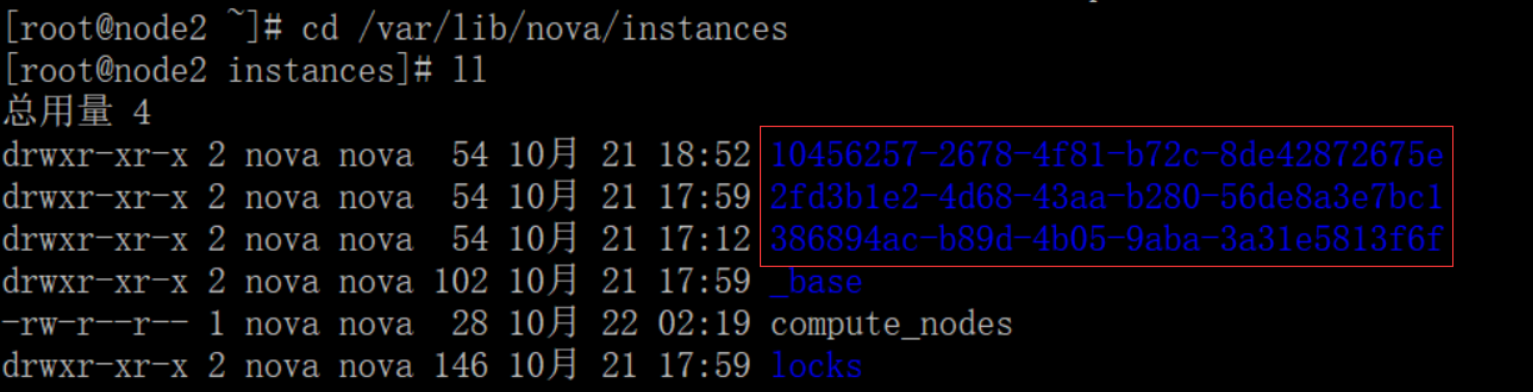
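To get a quick overview before reading individual logs, the four grep commands can be condensed into a per-directory error count. This is a small sketch; `count_errors` is a hypothetical helper name, and it counts matching lines across all files directly inside each directory.

```shell
# Hypothetical helper: print the number of ERROR lines found in each log directory.
# Arguments: one or more directories containing log files
count_errors() {
    for dir in "$@"; do
        # -h suppresses file names so wc counts only matching lines.
        n=$(grep -h 'ERROR' "$dir"/* 2>/dev/null | wc -l | tr -d ' ')
        echo "$dir: $n"
    done
}
```

For example, `count_errors /var/log/nova /var/log/neutron /var/log/glance /var/log/keystone` prints one line per component.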

查看节点instance:后面又创建了虚拟机

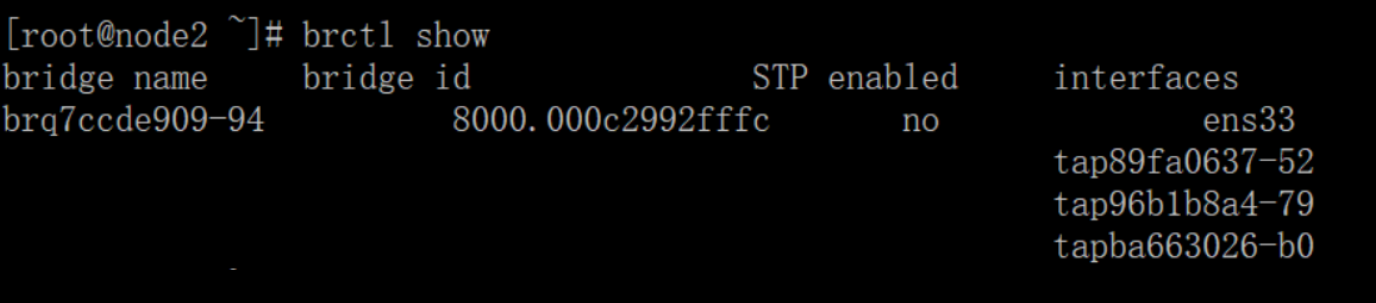

提示:在openstack环境下,所有计算节点主机的桥接网卡名称都一样。

对应三个虚拟机:

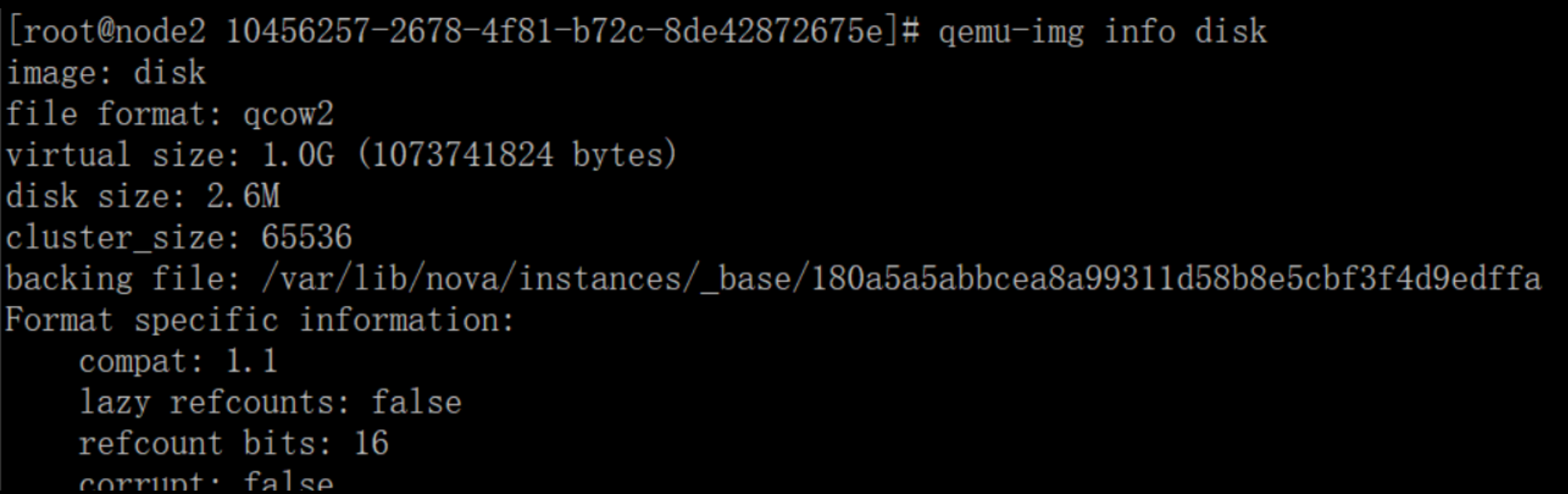

[root@node2 instances]# cd 10456257-2678-4f81-b72c-8de42872675e/
[root@node2 10456257-2678-4f81-b72c-8de42872675e]# ll
total 2736
-rw------- 1 root root 38149 Oct 21 19:12 console.log
-rw-r--r-- 1 qemu qemu 2752512 Oct 21 19:12 disk
-rw-r--r-- 1 nova nova 79 Oct 21 18:52 disk.info

- console.log: the console log

- disk: the virtual disk

- disk.info: information about the virtual disk

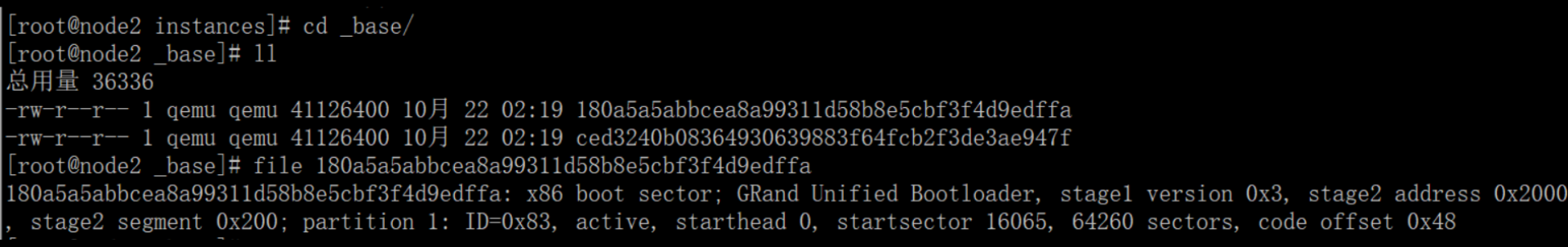

In the figure above, the files under _base are the images (the two images that were uploaded).

[root@node2 10456257-2678-4f81-b72c-8de42872675e]# ls -lh
total 2.7M
-rw------- 1 root root 38K Oct 21 19:12 console.log
-rw-r--r-- 1 qemu qemu 2.7M Oct 21 19:12 disk

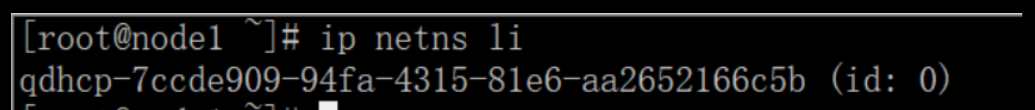

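Instance metadata: usage and how it works (viewed on the controller node)

The result shown above is a namespace.

Then run ip inside the namespace:

ip netns exec qdhcp-7ccde909-94fa-4315-81e6-aa2652166c5b ip ad li

Other commands can be run inside the namespace in the same way.

The output shows several additional IP addresses.

- How does an instance obtain this information from DHCP?

This is enabled by the setting enable_isolated_metadata = true in the configuration file /etc/neutron/dhcp_agent.ini.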

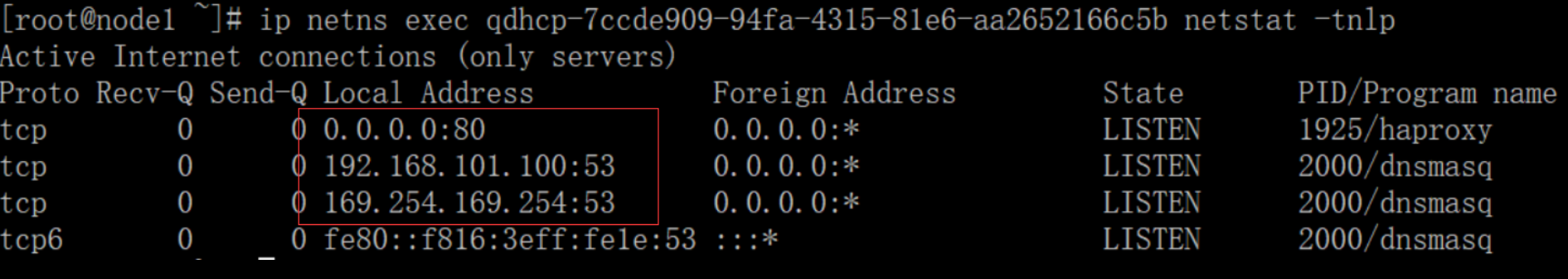

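Port 80 is also opened inside the namespace; instances use it to reach the metadata service and retrieve information.

Both port 80 and port 53 are listening inside the namespace.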
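The listening ports can be checked from the controller node by running a socket listing inside the DHCP namespace. The sketch below assumes the namespace follows the `qdhcp-<network ID>` naming seen above and that `ss` from iproute2 is installed; both function names are hypothetical helpers.

```shell
# Hypothetical helper: build the DHCP namespace name for a network ID.
dhcp_namespace() {
    printf 'qdhcp-%s\n' "$1"
}

# Hypothetical helper: list listening TCP sockets inside that namespace.
# Needs root privileges; ports 80 (metadata) and 53 (DNS) are the ones of interest.
list_namespace_listeners() {
    ip netns exec "$(dhcp_namespace "$1")" ss -lnt
}
```

For the network in this walkthrough, `list_namespace_listeners 7ccde909-94fa-4315-81e6-aa2652166c5b` would be the call to try.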
