Logstash as a shipper, plus more Kibana operations

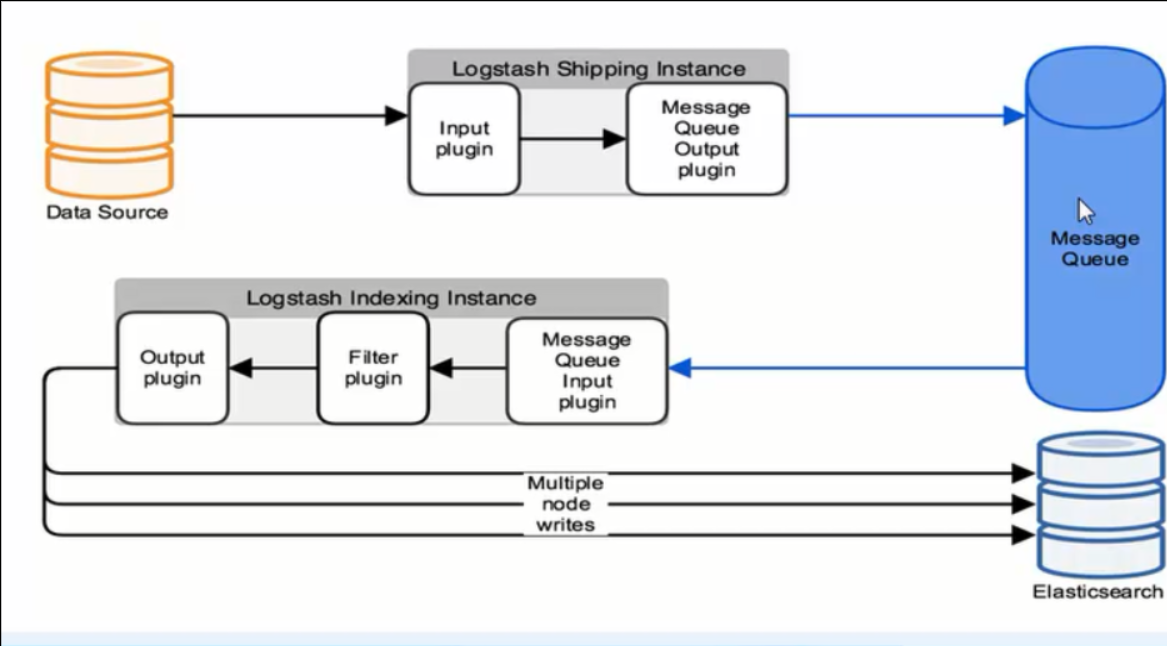

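To keep a failure in any single ELK component from breaking the whole ELK pipeline, the data collected by Logstash is first stored in a message queue such as Redis, RabbitMQ, ActiveMQ, or Kafka. Redis is used as the example here.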
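The benefit of putting a queue between the shipper and Elasticsearch can be shown with a toy model. This is a minimal sketch, assuming a plain Python `deque` stands in for the Redis list and a list stands in for Elasticsearch; the `Pipeline` class and its `es_up` flag are hypothetical names used only for illustration.

```python
from collections import deque


class Pipeline:
    """Toy model: a shipper pushes events into a broker; an indexer drains it.

    The deque plays the role of a Redis list (hypothetical simplification,
    no real Redis or Elasticsearch involved).
    """

    def __init__(self):
        self.broker = deque()  # stands in for the Redis list
        self.indexed = []      # stands in for documents stored in Elasticsearch
        self.es_up = True      # toggle to simulate an Elasticsearch outage

    def ship(self, event):
        # Shipper side: succeeds as long as the broker itself is reachable.
        self.broker.append(event)

    def drain(self):
        # Indexer side: consumes only while the downstream store is available.
        if not self.es_up:
            return 0
        moved = 0
        while self.broker:
            self.indexed.append(self.broker.popleft())
            moved += 1
        return moved


p = Pipeline()
p.es_up = False
for i in range(3):
    p.ship(f"log line {i}")
print(p.drain(), len(p.broker))  # 0 3: events wait in the broker
p.es_up = True
print(p.drain(), len(p.broker))  # 3 0: the backlog is delivered after recovery
```

While the indexer is down, nothing is lost; the events simply accumulate in the queue until it comes back.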

First, Logstash collects the log data and writes it into Redis, which requires the `redis` output plugin:

1. Install Redis. Here it is installed with yum; the package is in the EPEL repository.

2. Edit the Redis configuration:

```
[root@node3 ~]# egrep -v "^#|^$" /etc/redis.conf
bind 192.168.44.136
protected-mode yes
port 6379
tcp-backlog 511
timeout 0
tcp-keepalive 300
daemonize yes
```
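The `egrep -v "^#|^$"` filter only hides comment lines and empty lines, so what remains are the directives Redis actually applies. A Python equivalent, as a small sketch (the function name `effective_directives` and the sample text are hypothetical), returns pairs instead of a dict because redis.conf may legitimately repeat a directive such as `save`:

```python
def effective_directives(conf_text):
    """Return (directive, arguments) pairs for every active line of a redis.conf.

    Mirrors `egrep -v "^#|^$"`: lines starting with '#' and completely empty
    lines are dropped; everything else is kept in file order.
    """
    directives = []
    for line in conf_text.splitlines():
        if line.startswith("#") or line == "":
            continue
        name, _, args = line.partition(" ")
        directives.append((name, args.strip()))
    return directives


# Hypothetical excerpt; repeated `save` lines show why a list of pairs is used.
sample = """# Redis configuration file example
bind 192.168.44.136

protected-mode yes
port 6379
save 900 1
save 300 10
"""

for name, args in effective_directives(sample):
    print(name, args)
```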

Start the Redis service:

```
[root@node3 ~]# /etc/init.d/redis start
Starting : [OK]
```

Write a Logstash configuration file that sends the log data to Redis:

```
[root@node3 conf.d]# cat redis_out.conf
input {
    file {
        path => ["/var/log/nginx/access.log"]
        start_position => "beginning"
    }
}

output {
    redis {
        host => ["192.168.44.136"]
        data_type => "list"
        db => 1
        key => "nginx"
    }
}
```
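With `data_type => "list"`, the redis output appends each event to the tail of the list named by `key` (an RPUSH), serialized with its default JSON codec. The sketch below imitates that step without a Redis server: a plain dict stands in for Redis, and the event fields are an assumed approximation of what the file input produces. `to_event`, `rpush`, and the shortened log lines are hypothetical.

```python
import json
from datetime import datetime, timezone


def to_event(line, path, host):
    # Approximate shape of a file-input event (assumed field set, not exhaustive).
    return {
        "message": line,
        "path": path,
        "host": host,
        "@version": "1",
        "@timestamp": datetime.now(timezone.utc).isoformat(),
    }


def rpush(store, key, value):
    """Append to the tail of the list at `key` and return the new length,
    the same contract as Redis RPUSH (`store` is a dict standing in for Redis)."""
    store.setdefault(key, []).append(value)
    return len(store[key])


store = {}
# Shortened, made-up access-log lines used only for illustration.
lines = [
    '192.168.44.1 - - "GET / HTTP/1.1" 200',
    '192.168.44.1 - - "GET /x HTTP/1.1" 404',
]
for line in lines:
    n = rpush(store, "nginx", json.dumps(to_event(line, "/var/log/nginx/access.log", "node3")))

print(n)  # 2
print(json.loads(store["nginx"][0])["message"])  # the oldest line sits at the head
```

Because new events go to the tail, a consumer that pops from the head reads them in the order they were shipped.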

Then run Logstash:

```
[root@node3 conf.d]# /usr/share/logstash/bin/logstash -f redis_out.conf
Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties
```

Log in to Redis to check whether the data has arrived:

```
[root@node3 ~]# redis-cli -h 192.168.44.136
192.168.44.136:6379> select 1
OK
192.168.44.136:6379[1]> llen nginx
(integer) 19
```

`select 1`: `1` is the `db` value from the Logstash configuration above.

`llen nginx`: `nginx` is the `key` value from the Logstash configuration above.
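The `select 1` step matters because Redis keys live inside numbered databases, and redis-cli starts in database 0 (it also accepts `-n 1` to start directly in database 1). This is a hypothetical in-memory model of that behavior, not a Redis client; `RedisLikeSession` and the count of 19 are illustrative and merely mirror the session above.

```python
class RedisLikeSession:
    """Hypothetical model of a redis-cli session: 16 numbered databases
    (Redis's default), a current-database pointer changed by SELECT,
    and LLEN on list keys."""

    def __init__(self, databases=16):
        self.data = [dict() for _ in range(databases)]
        self.current = 0  # redis-cli starts in database 0

    def select(self, index):
        if not 0 <= index < len(self.data):
            raise ValueError("DB index is out of range")
        self.current = index
        return "OK"

    def llen(self, key):
        # A missing key behaves like an empty list, so LLEN returns 0, not an error.
        return len(self.data[self.current].get(key, []))


session = RedisLikeSession()
session.data[1]["nginx"] = ["{}"] * 19  # pretend 19 events were shipped into db 1
print(session.llen("nginx"))  # 0: still in database 0
print(session.select(1))      # OK
print(session.llen("nginx"))  # 19
```

Forgetting `select 1` therefore shows an empty list even though the data is there.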

Next, use Logstash again to read the data from Redis and output it to Elasticsearch:

```
[root@node3 conf.d]# cat redis_input.conf
input {
    redis {
        # the redis input takes a single host string (the output accepts a list)
        host => "192.168.44.136"
        data_type => "list"
        db => 1
        key => "nginx"
    }
}

output {
    elasticsearch {
        hosts => ["192.168.44.134:9200"]
        index => "redis-nginx"
    }
}
```
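On the consuming side, the redis input pops events from the head of the list (so the list shrinks as it is read), and the elasticsearch output sends them to the `redis-nginx` index, typically through the `_bulk` API. The sketch below imitates both steps offline: a `deque` stands in for the Redis list, and the function only builds the newline-delimited `_bulk` body instead of sending it. `drain_to_bulk` and `batch_size` are hypothetical names; the action line shown is the form used by recent Elasticsearch versions, and older versions may also require a `_type` field.

```python
import json
from collections import deque


def drain_to_bulk(queue, index, batch_size=100):
    """Pop up to `batch_size` JSON events from the head of the list (like LPOP)
    and build an Elasticsearch _bulk request body targeting `index`."""
    lines = []
    for _ in range(min(batch_size, len(queue))):
        event = json.loads(queue.popleft())
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(event))                          # document source
    # _bulk expects newline-delimited JSON that ends with a trailing newline.
    return "\n".join(lines) + "\n" if lines else ""


q = deque([json.dumps({"message": "GET / 200"}), json.dumps({"message": "GET /x 404"})])
body = drain_to_bulk(q, "redis-nginx")
print(body, end="")
print(len(q))  # 0: the list is empty once everything has been consumed
```

This is why `llen nginx` drops to 0 after the second Logstash instance has run.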

Run it:

```
[root@node3 conf.d]# /usr/share/logstash/bin/logstash -f redis_input.conf
Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties
```

Log in to Redis again to check whether the data is still there:

```
[root@node3 ~]# redis-cli -h 192.168.44.136
192.168.44.136:6379> select 1
OK
192.168.44.136:6379[1]> llen nginx
(integer) 0
192.168.44.136:6379[1]>
```

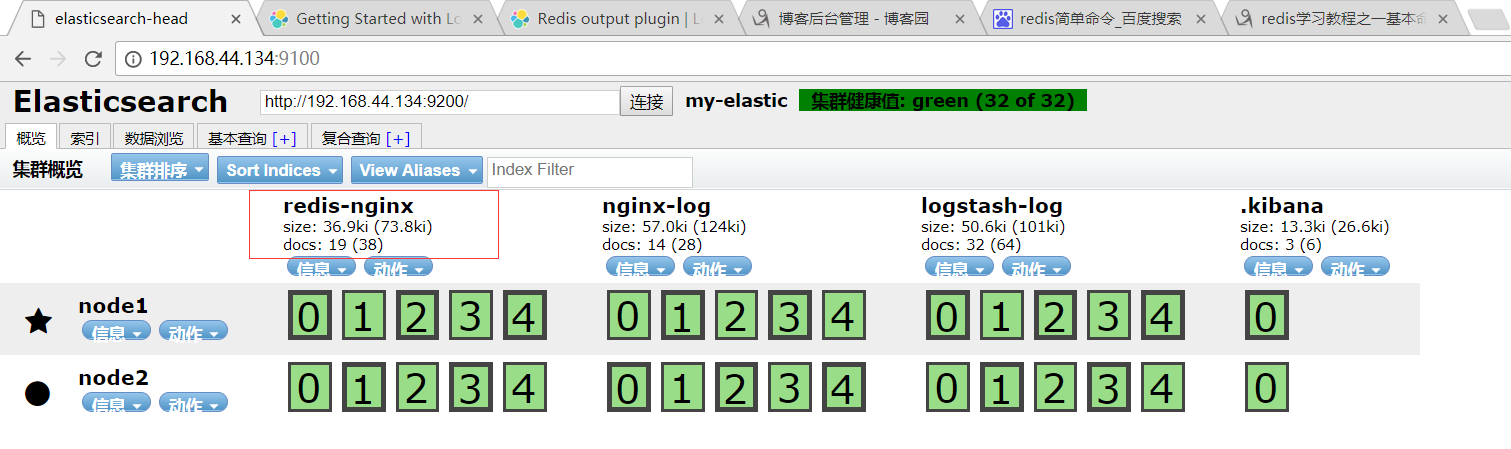

The data in Redis has been fully consumed. Now check whether Elasticsearch has created the corresponding index:

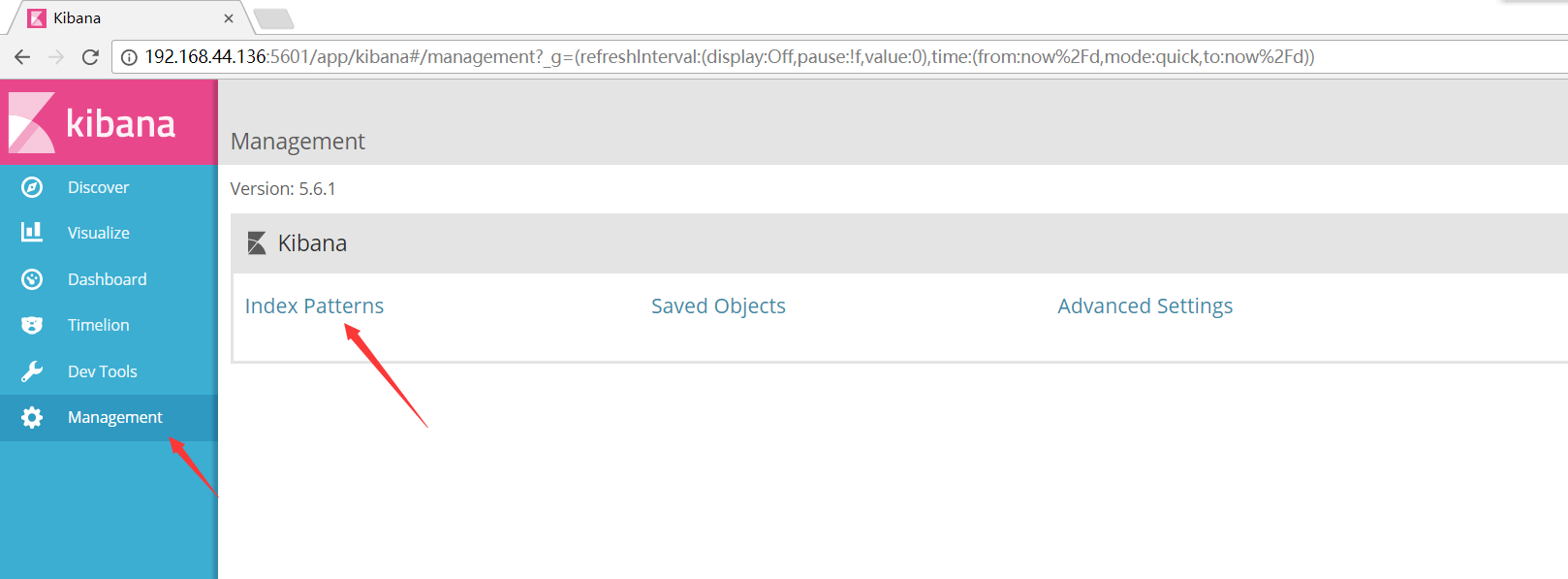

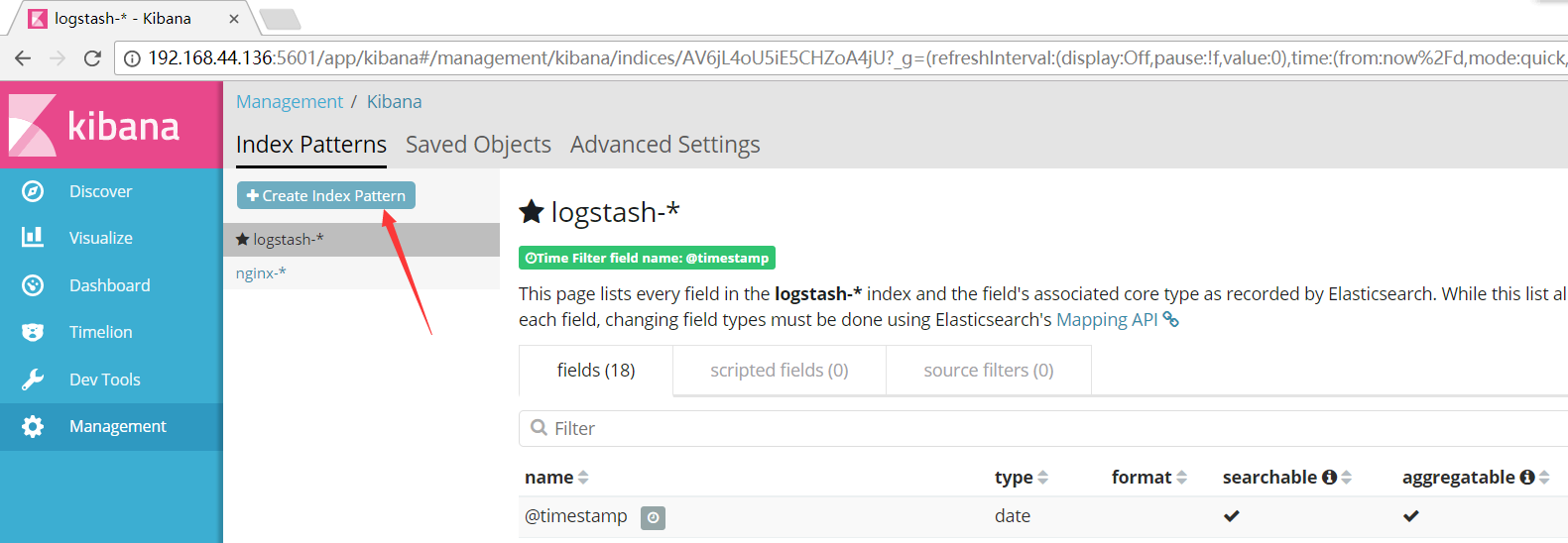

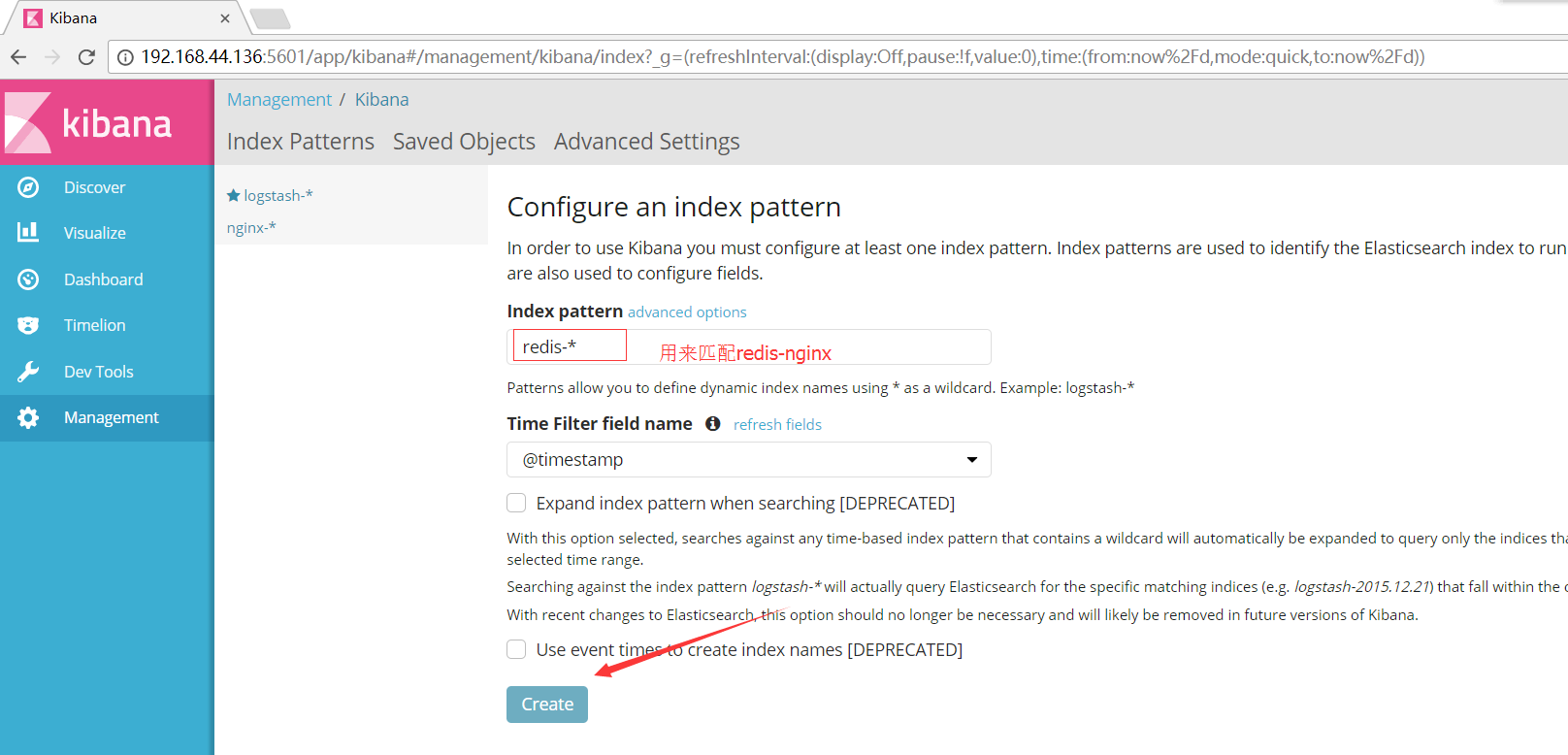
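The same index check can also be scripted against Elasticsearch's `_cat/indices?v` endpoint, which returns a whitespace-aligned text table with a header row. This is a sketch under assumptions: `fetch_cat_indices` and `find_index` are hypothetical helpers, the base URL is taken from the `hosts` setting above, and the sample table below uses made-up placeholder values so the parsing can run without a cluster.

```python
from urllib.request import urlopen


def fetch_cat_indices(base_url="http://192.168.44.134:9200"):
    # Needs a reachable Elasticsearch node; the offline example below skips it.
    with urlopen(base_url + "/_cat/indices?v") as resp:
        return resp.read().decode("utf-8")


def find_index(cat_output, name):
    """Return the row for index `name` as a dict keyed by the header columns,
    or None if the index is absent."""
    lines = cat_output.strip().splitlines()
    header = lines[0].split()
    for line in lines[1:]:
        record = dict(zip(header, line.split()))
        if record.get("index") == name:
            return record
    return None


# Placeholder text in the shape of `_cat/indices?v`; every value is made up.
sample = """health status index       uuid             pri rep docs.count docs.deleted store.size pri.store.size
yellow open   redis-nginx PLACEHOLDER_UUID   5   1         19            0       10kb           10kb
"""

row = find_index(sample, "redis-nginx")
print(row["docs.count"] if row else "index not found")
```

Against a live cluster, `find_index(fetch_cat_indices(), "redis-nginx")` would perform the same lookup on the real output.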

Then add the Elasticsearch index in Kibana:

That completes the whole pipeline. Redis, acting as the staging point, can be replaced with another message queue service.

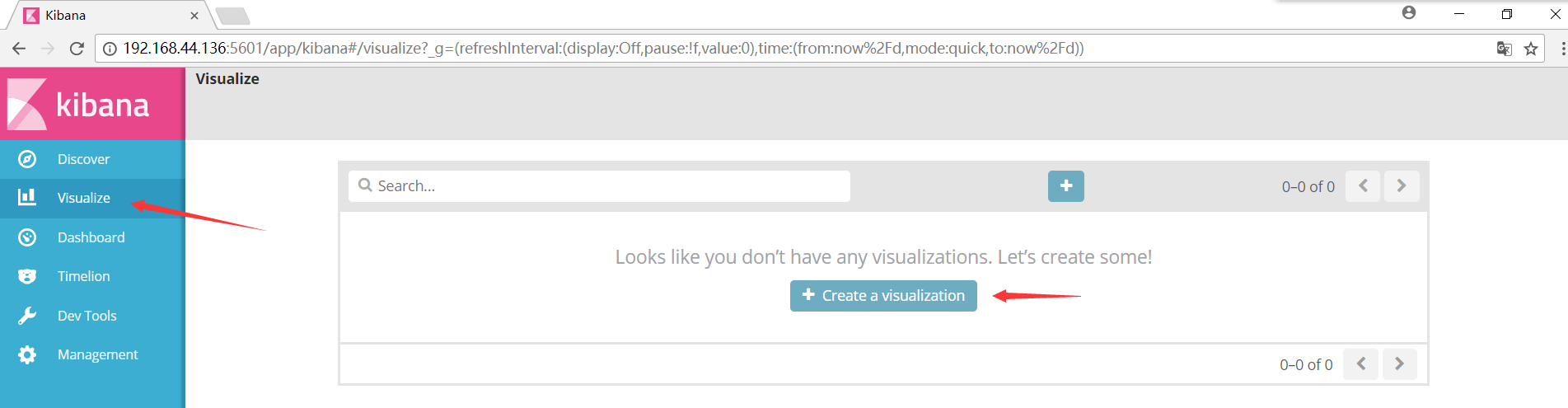
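Swapping Redis for another queue works because the shipper and the indexer only need "push" and "pop". The sketch below expresses that contract as a Python `Protocol` with two simplified brokers: a Redis-list style one where reading removes the message, and a greatly simplified Kafka-style log where messages are retained and the consumer only advances an offset. All class and function names are hypothetical, and neither class talks to a real broker.

```python
from collections import deque
from typing import List, Optional, Protocol


class Broker(Protocol):
    def push(self, message: str) -> None: ...
    def pop(self) -> Optional[str]: ...
    def backlog(self) -> int: ...
    def retained(self) -> int: ...


class ListBroker:
    """Redis-list style: reading a message removes it (like LPOP)."""

    def __init__(self) -> None:
        self._items: deque = deque()

    def push(self, message: str) -> None:
        self._items.append(message)

    def pop(self) -> Optional[str]:
        return self._items.popleft() if self._items else None

    def backlog(self) -> int:
        return len(self._items)

    def retained(self) -> int:
        return len(self._items)  # nothing is kept once consumed


class LogBroker:
    """Kafka-style, greatly simplified: an append-only log plus a consumer offset."""

    def __init__(self) -> None:
        self._log: List[str] = []
        self._offset = 0

    def push(self, message: str) -> None:
        self._log.append(message)

    def pop(self) -> Optional[str]:
        if self._offset >= len(self._log):
            return None
        message = self._log[self._offset]
        self._offset += 1
        return message

    def backlog(self) -> int:
        return len(self._log) - self._offset

    def retained(self) -> int:
        return len(self._log)  # consumed messages stay in the log


def relay(source_lines: List[str], broker: Broker) -> List[str]:
    # Shipper and indexer depend only on push/pop, so the broker is swappable.
    for line in source_lines:
        broker.push(line)
    delivered = []
    while True:
        message = broker.pop()
        if message is None:
            break
        delivered.append(message)
    return delivered


for b in (ListBroker(), LogBroker()):
    print(type(b).__name__, relay(["a", "b"], b), b.backlog(), b.retained())
```

Both brokers deliver the same messages; they differ only in whether consumed data is kept, which is one of the trade-offs to weigh when choosing a replacement for Redis.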

Next, we will work in Kibana to make the collected data look better:

Zhejiang Public Security Filing No. 33010602011771
