ELK: Kibana 6.5

Installing Kibana 6.5 for the ELK stack, using the RPM package:

https://www.elastic.co/guide/en/kibana/current/rpm.html

    # wget https://artifacts.elastic.co/downloads/kibana/kibana-6.5.4-x86_64.rpm
    # rpm --install kibana-6.5.4-x86_64.rpm
    # systemctl daemon-reload
    # systemctl enable kibana.service
    # systemctl start kibana.service
    # systemctl status kibana.service
    [root@node1 ELK]# netstat -tnlp|grep 5601
    tcp        0      0 127.0.0.1:5601          0.0.0.0:*               LISTEN      7673/node

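The Kibana service listens on port 5601. As the netstat output shows, it is bound to 127.0.0.1 by default, so edit the Kibana configuration file to make it reachable from other hosts: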

    [root@node1 ELK]# cd /etc/kibana/
    [root@node1 kibana]# ll
    total 8
    -rw-r--r--. 1 root root 5054 Dec 18 05:40 kibana.yml
    [root@node1 kibana]# vim kibana.yml
    [root@node1 kibana]# egrep -v "^$|^#" kibana.yml
    server.port: 5601
    server.host: "172.16.23.129"
    server.name: "node1"
    elasticsearch.url: "http://172.16.23.129:9200"
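To double-check individual settings after editing, the filtering step above can be wrapped in small helpers. This is a minimal sketch, not part of the original setup: `active_settings` and `get_setting` are hypothetical names, and `get_setting` only understands flat `key: value` lines like the ones shown here, not nested YAML.

```shell
# Print only the active (non-blank, non-comment) lines of a config file.
# Similar to the egrep call above, but also skips indented comments.
active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Print the value of one top-level "key: value" setting, without quotes.
# Hypothetical helper; it does not handle nested YAML.
get_setting() {
    local file=$1 key=$2
    active_settings "$file" |
        awk -v k="$key" 'index($0, k ":") == 1 {
            v = substr($0, length(k) + 2)
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim surrounding whitespace
            gsub(/^"|"$/, "", v)             # drop surrounding double quotes
            print v
        }'
}
```

For example, `get_setting /etc/kibana/kibana.yml server.host` prints the address Kibana will bind to after the next restart.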

Restart the Kibana service:

# systemctl restart kibana

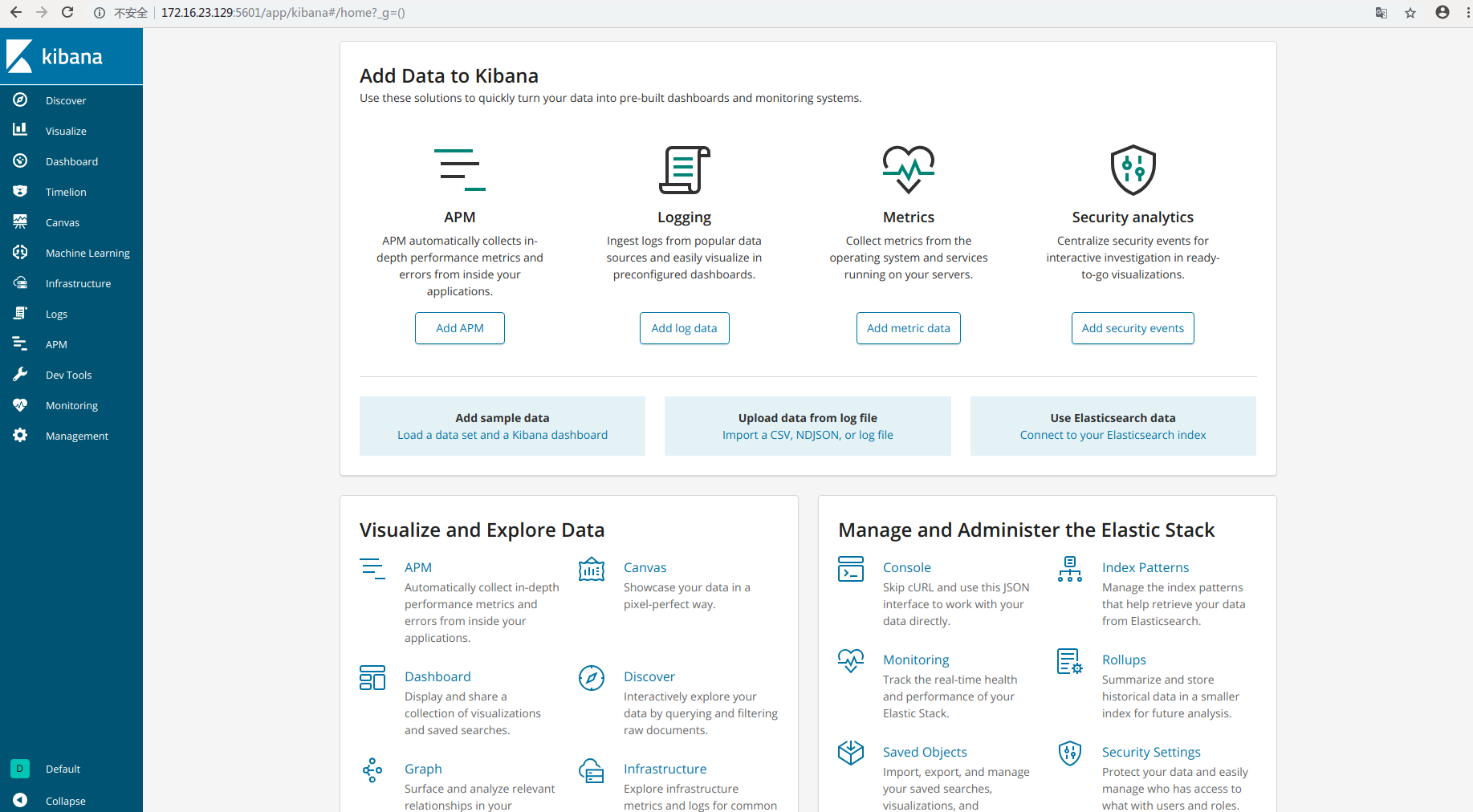

Access Kibana through a browser:

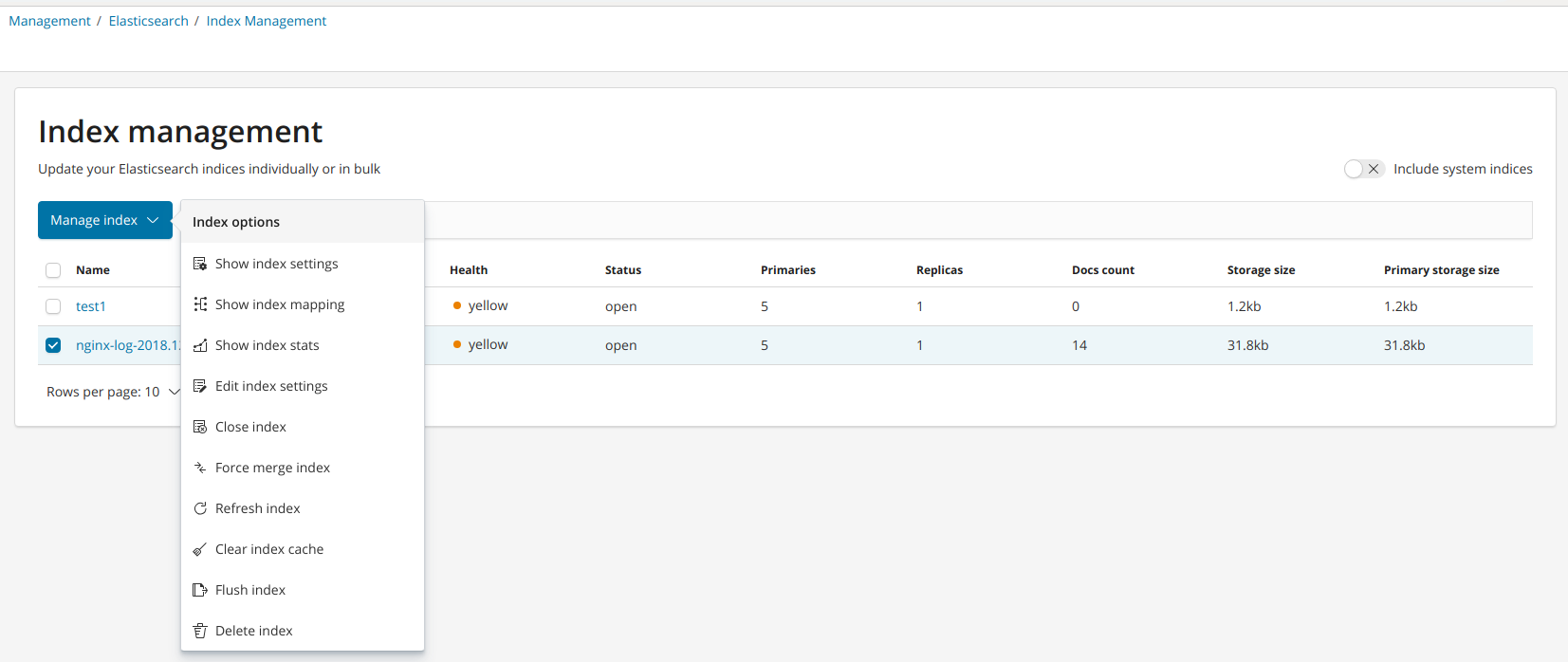

Check the previously collected nginx logs, which are stored in Elasticsearch:

    [root@node1 kibana]# curl -X GET "localhost:9200/_cat/indices?v"
    health status index                uuid                   pri rep docs.count docs.deleted store.size pri.store.size
    yellow open   test1                ZAjj9y_sSPmGz8ZscIXUsA   5   1          0            0      1.2kb          1.2kb
    green  open   .kibana_1            CV1LRTOXQV-I04AEh7hcow   1   0          3            0     11.8kb         11.8kb
    yellow open   nginx-log-2018.12.25 Zr4q_U5bTk2dY9PfEpZz_Q   5   1         14            0     31.8kb         31.8kb
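When the index list grows, it can help to filter this output down to the indices of interest. The following is a rough sketch under the assumption that every row contains all columns of the header; `indices_with_prefix` is a hypothetical helper name, not an Elasticsearch tool.

```shell
# Read `_cat/indices?v` output on stdin and print "index docs.count"
# for every index whose name starts with the given prefix.
# Column positions are looked up from the header row rather than hard-coded.
indices_with_prefix() {
    awk -v p="$1" '
        NR == 1 {
            for (i = 1; i <= NF; i++) {
                if ($i == "index")      name_col = i
                if ($i == "docs.count") docs_col = i
            }
            next
        }
        index($name_col, p) == 1 { print $name_col, $docs_col }
    '
}
```

A possible invocation is `curl -s "localhost:9200/_cat/indices?v" | indices_with_prefix nginx-log-`, which keeps only the nginx log indices together with their document counts.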

The nginx-log-2018.12.25 index above holds the collected nginx logs. Now connect Elasticsearch to Kibana to display them:

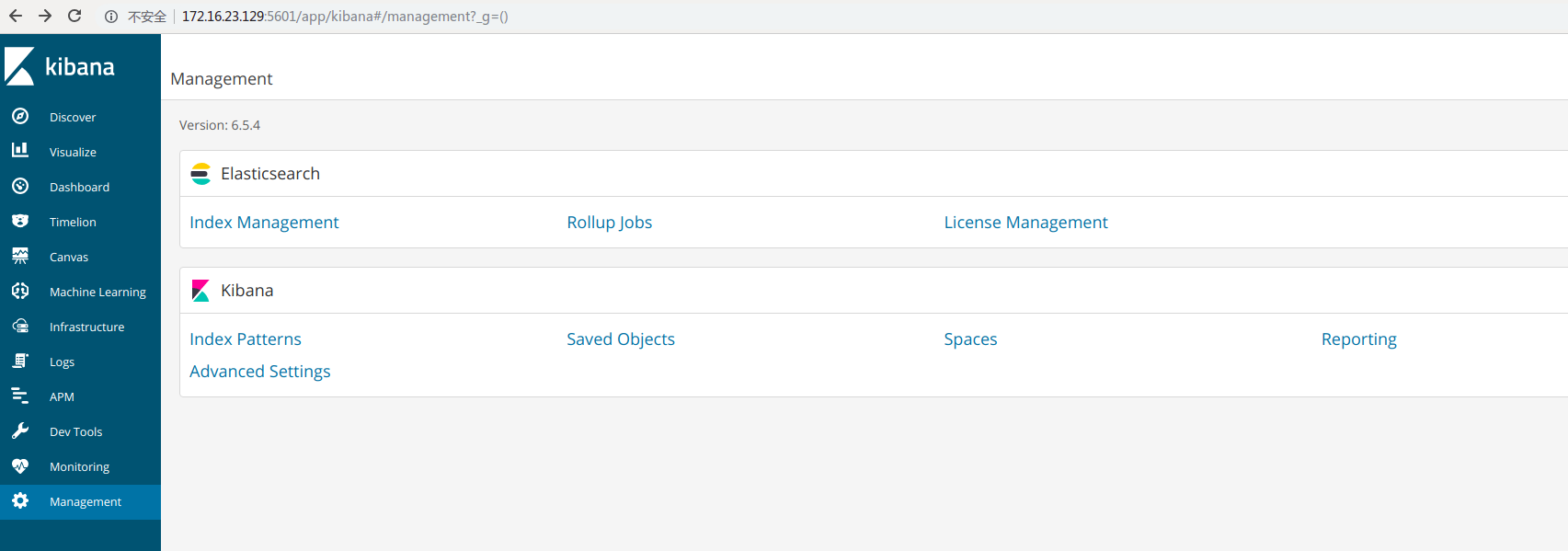

Select Management in the left sidebar to see the Elasticsearch index management page, then click into it:

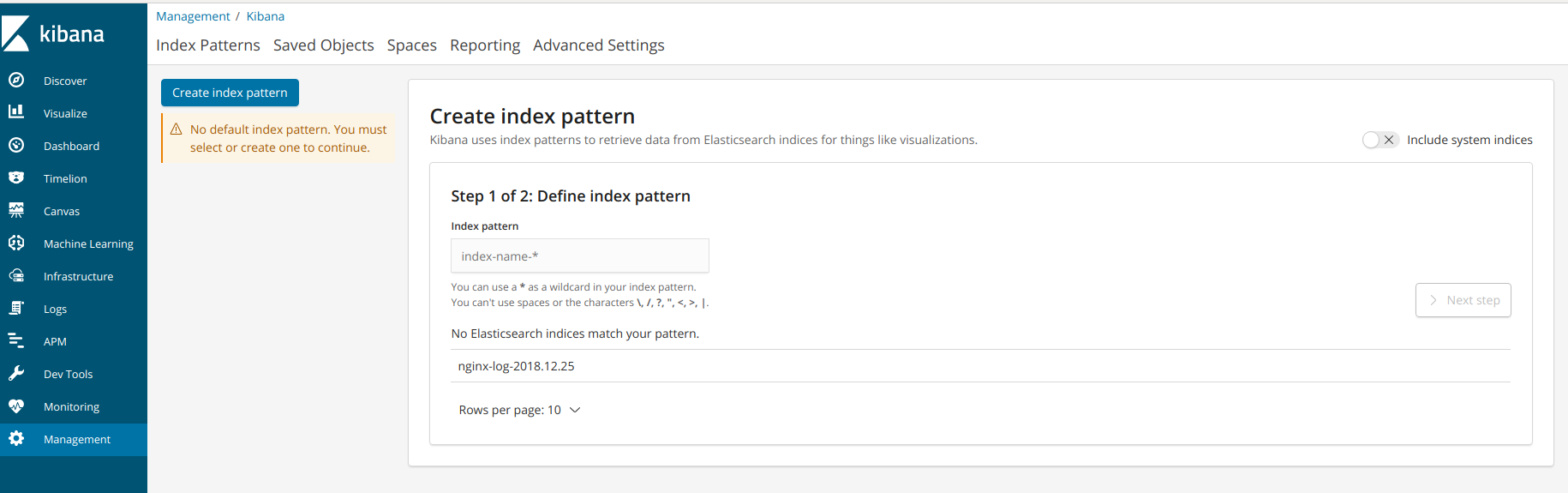

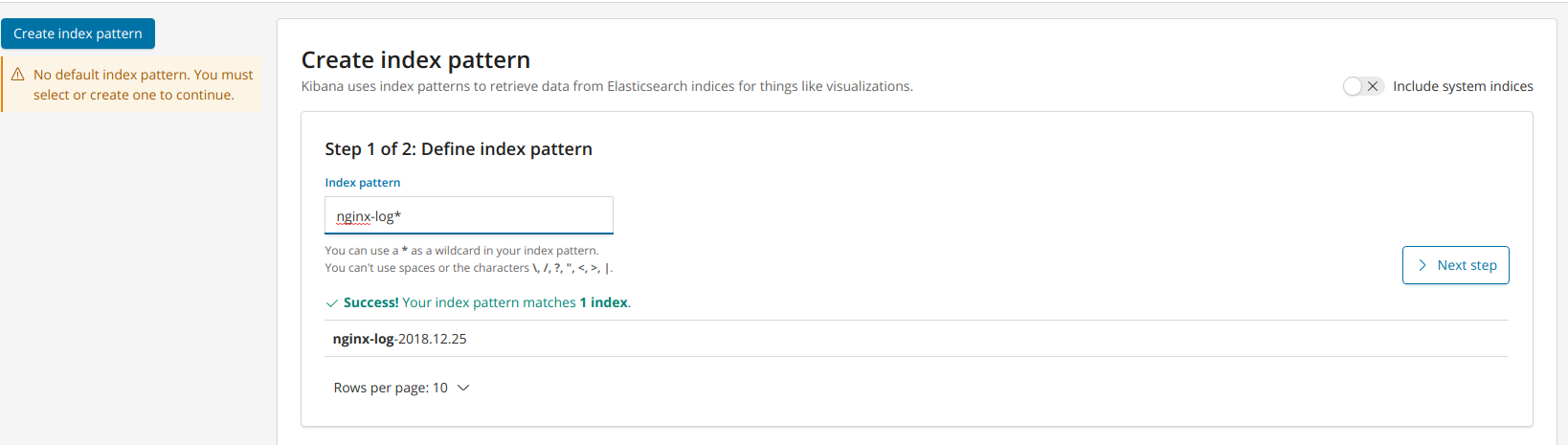

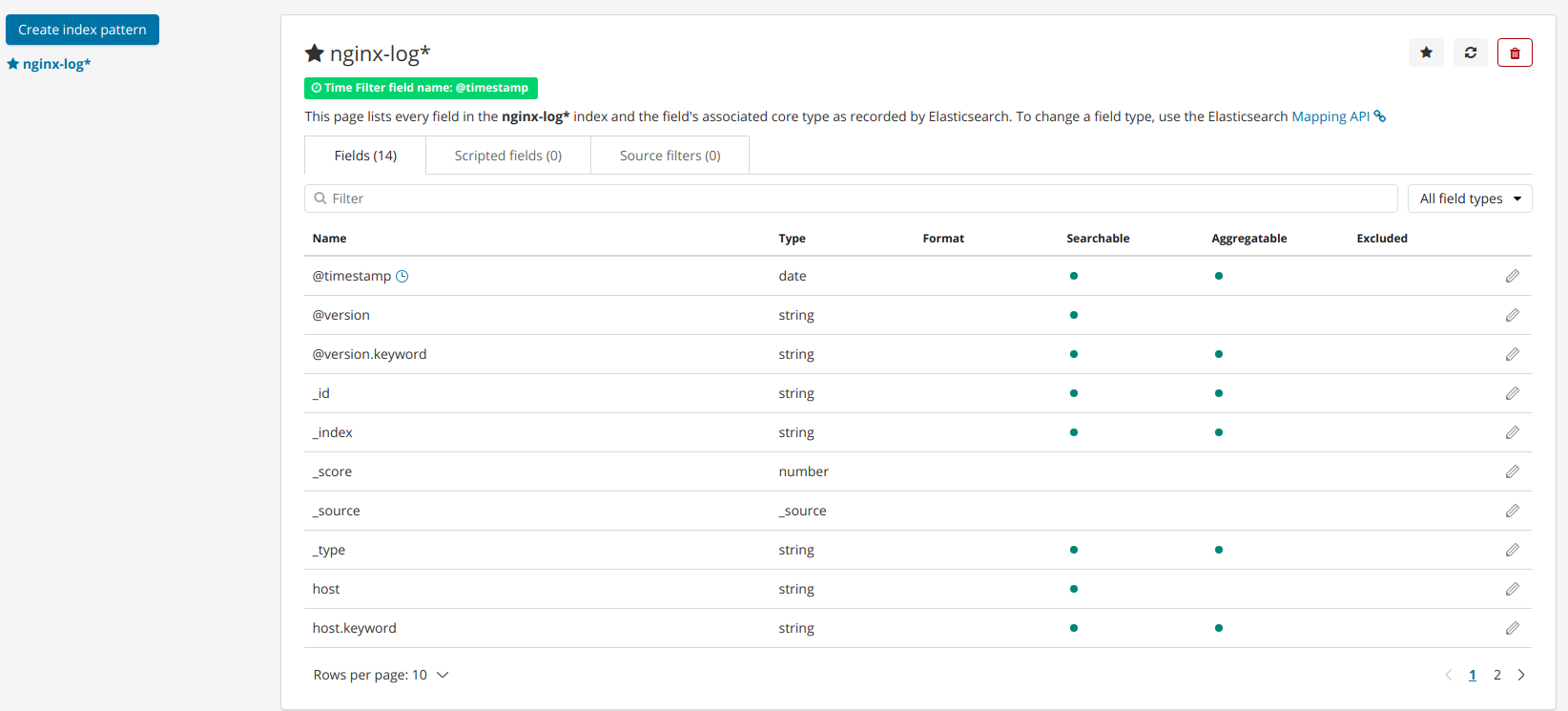

Select Management > Kibana > Create index pattern:

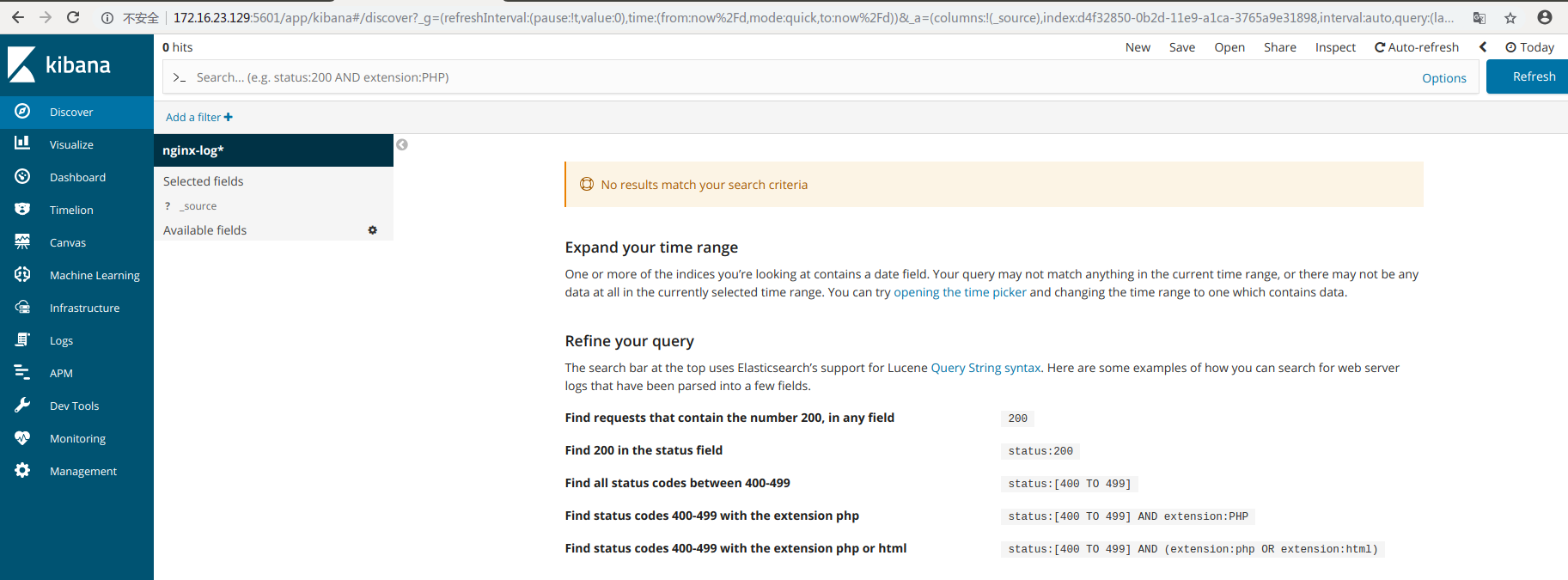

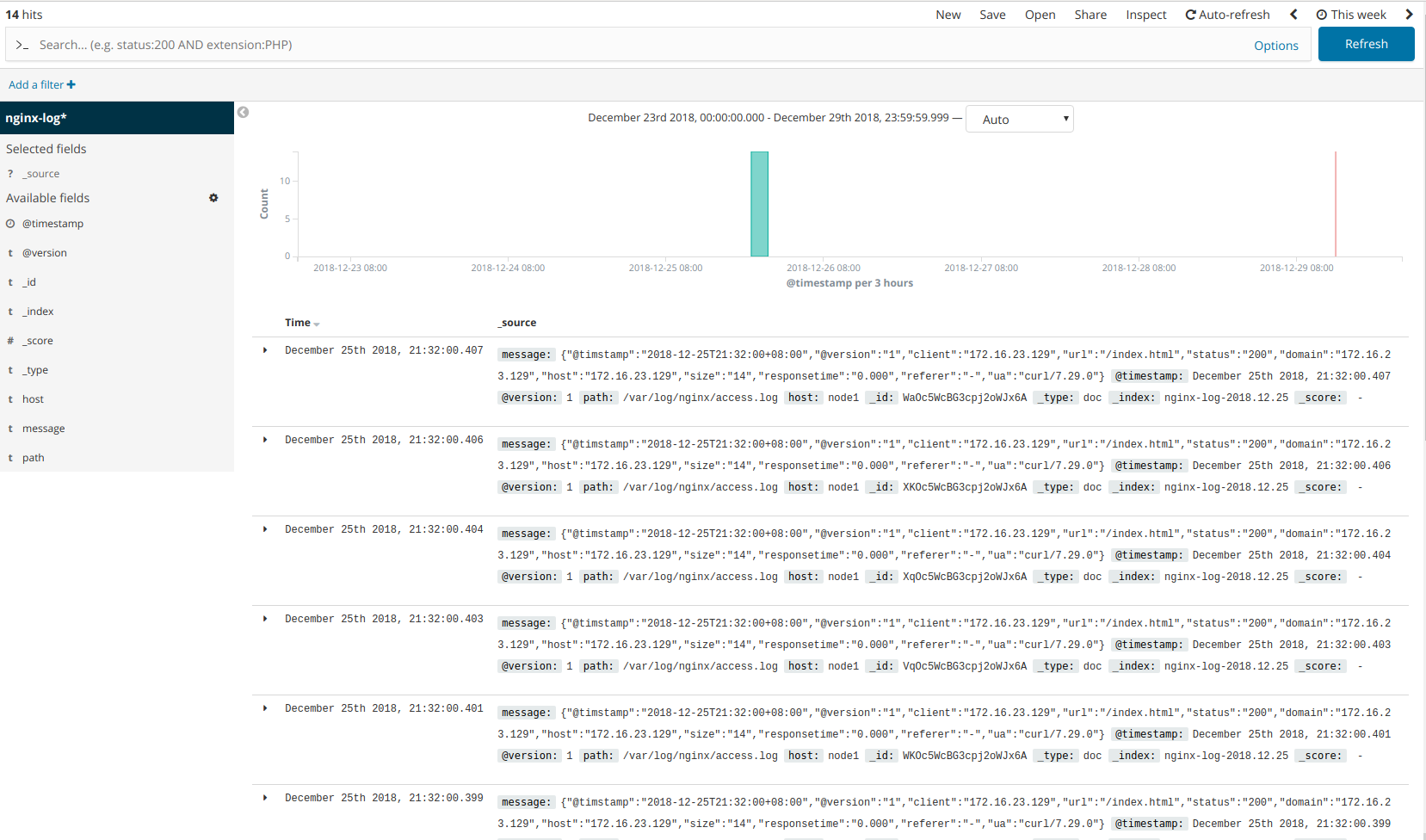

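Once the index pattern is created, select Discover:

No chart is displayed at first because the time range in the upper right corner is set to Today. Change it to This week:

Next, ship the nginx logs to Redis and have them consumed from Redis into Elasticsearch. Here I access nginx manually and then check the data stored in Redis:

1. Ship the nginx logs to Redis: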

[root@node1 conf.d]# /usr/share/logstash/bin/logstash -f nginx_output_redis.conf

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-12-29T14:04:14,403][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2018-12-29T14:04:14,470][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.5.4"}

[2018-12-29T14:04:20,600][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-12-29T14:04:31,474][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_d883144359d3b4f516b37dba51fab2a2", :path=>["/var/log/nginx/access.log"]}

[2018-12-29T14:04:31,574][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x7223aec6 run>"}

[2018-12-29T14:04:31,871][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2018-12-29T14:04:31,917][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-12-29T14:04:33,725][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
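To confirm that events are actually arriving in Redis, the length of the list can be queried with `redis-cli`. This is a minimal sketch: the host, port, and the `nginx_log` key are taken from the Logstash Redis input line that appears later in this post, and the function names and the `REDIS_CLI` override are hypothetical conveniences.

```shell
# Print how many entries are waiting in a Redis list.
# Host, port, and key default to the values that appear in this post's logs;
# REDIS_CLI can point at a different redis-cli binary.
redis_queue_length() {
    local key=${1:-nginx_log} host=${2:-172.16.23.129} port=${3:-6379}
    "${REDIS_CLI:-redis-cli}" -h "$host" -p "$port" llen "$key"
}

# Succeed (exit status 0) only when the list holds at least one entry.
redis_queue_nonempty() {
    local n
    n=$(redis_queue_length "$@") || return 1
    [ "$n" -gt 0 ] 2>/dev/null
}
```

After requesting a few pages from nginx, `redis_queue_length` should report a non-zero count. Once a consumer is reading from the list, a count of 0 is expected, since consumed entries are removed.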

2. Consume the nginx logs from Redis into Elasticsearch to generate an index:

[root@node1 conf.d]# /usr/share/logstash/bin/logstash -f redis_output_es.conf

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-12-29T14:04:44,604][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2018-12-29T14:04:44,965][FATAL][logstash.runner ] Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting.

[2018-12-29T14:04:45,058][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit
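The FATAL message names its own remedy: a second instance on the same host needs a different `path.data`. As an alternative to using a second machine, the following sketch starts Logstash with a separate data directory through the `--path.data` command-line option. The function name, the `LOGSTASH_BIN` override, and the default directory under `/tmp` are assumptions for illustration only; this was not tested in the original walkthrough.

```shell
# Start Logstash with its own data directory so it does not collide with
# an instance that is already running on the same host.
# The default directory name is a hypothetical example.
run_logstash_isolated() {
    local conf=$1
    local data_dir=${2:-/tmp/logstash-$(basename "$conf" .conf)}
    mkdir -p "$data_dir" || return 1
    "${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}" \
        -f "$conf" --path.data "$data_dir"
}
```

For example, `run_logstash_isolated redis_output_es.conf /var/lib/logstash-redis` would run the consumer pipeline next to the instance that is shipping nginx logs to Redis.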

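The error above shows that a second Logstash instance cannot start on the same host while it shares the same data directory. I therefore ran Logstash on another host to collect the logs into Elasticsearch: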

[root@master conf.d]# /usr/share/logstash/bin/logstash -f redis_output_es.conf

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-12-29T14:40:06,749][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

[2018-12-29T14:40:06,765][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

[2018-12-29T14:40:07,651][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2018-12-29T14:40:07,670][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.5.4"}

[2018-12-29T14:40:07,742][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"7059ccab-6ba6-4082-ad0c-6320a1121ed2", :path=>"/var/lib/logstash/uuid"}

[2018-12-29T14:40:13,024][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-12-29T14:40:13,957][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://172.16.23.129:9200/]}}

[2018-12-29T14:40:14,439][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://172.16.23.129:9200/"}

[2018-12-29T14:40:14,558][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2018-12-29T14:40:14,567][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[2018-12-29T14:40:14,640][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//172.16.23.129"]}

[2018-12-29T14:40:14,678][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2018-12-29T14:40:14,766][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2018-12-29T14:40:14,823][INFO ][logstash.inputs.redis ] Registering Redis {:identity=>"redis://@172.16.23.129:6379/0 list:nginx_log"}

[2018-12-29T14:40:14,892][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x3f08e08c run>"}

[2018-12-29T14:40:15,134][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-12-29T14:40:16,254][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
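The contents of `redis_output_es.conf` are not shown in this post. Judging from the log lines above (the Redis identity `172.16.23.129:6379/0 list:nginx_log` and the Elasticsearch host), a pipeline along the following lines would behave similarly. This is a hedged reconstruction, not the author's actual file: the index pattern in particular is an assumption inferred from the `nginx-log-YYYY.MM.DD` index names, and `write_redis_to_es_conf` is a hypothetical helper.

```shell
# Write a sketch of a Redis -> Elasticsearch pipeline config to the given path.
# Host, db, list key, and Elasticsearch host come from the log output above;
# the index pattern is an assumption inferred from the index names in this post.
write_redis_to_es_conf() {
    cat > "$1" <<'EOF'
input {
  redis {
    host      => "172.16.23.129"
    port      => 6379
    db        => 0
    data_type => "list"
    key       => "nginx_log"
  }
}
output {
  elasticsearch {
    hosts => ["172.16.23.129"]
    index => "nginx-log-%{+YYYY.MM.dd}"
  }
}
EOF
}
```

A generated file can be syntax-checked before use with `/usr/share/logstash/bin/logstash -f <file> --config.test_and_exit`.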

3. Check the generated indices:

    [root@node1 conf.d]# curl -X GET "localhost:9200/_cat/indices?v"
    health status index                uuid                   pri rep docs.count docs.deleted store.size pri.store.size
    green  open   .kibana_1            CV1LRTOXQV-I04AEh7hcow   1   0          4            0       19kb           19kb
    yellow open   nginx-log-2018.12.25 Zr4q_U5bTk2dY9PfEpZz_Q   5   1         14            0     31.8kb         31.8kb
    yellow open   nginx-log-2018.12.29 KTWG3qeGTCeuknJCE4juaA   5   1         10            0     35.1kb         35.1kb
    yellow open   test1                ZAjj9y_sSPmGz8ZscIXUsA   5   1          0            0      1.2kb          1.2kb

Then check the index in Kibana:
