Docker Networking


Docker0

First, clean up the environment by removing all images and containers.

Check the host's IP addresses

[root@jinpengyong /]# ip addr

# loopback address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

# Alibaba Cloud private network address

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:04:8c:1d brd ff:ff:ff:ff:ff:ff

inet 172.26.236.138/20 brd 172.26.239.255 scope global dynamic eth0

valid_lft 312789808sec preferred_lft 312789808sec

# docker0 address: docker0 acts as Docker's router, and all containers are on this subnet

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:2e:1d:e2:a1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever
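Saying "all containers are on this subnet" means a container's address shares docker0's first 16 bits (172.17.0.1/16). A minimal POSIX shell sketch of that check, assuming IPv4 only and a shell with `local` (bash, dash); `ip_to_int` and `in_cidr` are hypothetical helpers, not Docker or iproute2 commands:

```shell
#!/bin/sh
# Hypothetical helpers (not part of Docker): test whether an IPv4 address
# falls inside a CIDR block such as docker0's 172.17.0.1/16.

# Convert dotted-quad a.b.c.d to a 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_cidr IP CIDR: exit status 0 if IP is inside CIDR, 1 otherwise
in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Netmask: the top $prefix bits set, truncated to 32 bits
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

in_cidr 172.17.0.2 172.17.0.1/16 && echo "172.17.0.2 is on docker0's subnet"
in_cidr 172.26.236.138 172.17.0.1/16 || echo "172.26.236.138 (eth0) is not on it"
```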

# check whether the docker0 IP answers ping

[root@jinpengyong /]# ping 172.17.0.1

PING 172.17.0.1 (172.17.0.1) 56(84) bytes of data.

64 bytes from 172.17.0.1: icmp_seq=1 ttl=64 time=0.087 ms

64 bytes from 172.17.0.1: icmp_seq=2 ttl=64 time=0.048 ms

Container IP

# start a container

[root@jinpengyong /]# docker run -d -P --name tomcat01 tomcat:9.0

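# view the container's addresses with ip addr

# docker exec -it <container-id> ip addr

# if this fails with "OCI runtime exec failed" (the image lacks the ip and ping commands), first enter the container with docker exec -it tomcat01 /bin/bash and run:

1. apt-get update # refresh the apt package index

2. apt-get install -y iputils-ping # install ping

3. apt-get install -y iproute2 # install the ip command (ip addr)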

[root@jinpengyong /]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

114: eth0@if115: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# can the host ping the container? Yes

[root@jinpengyong /]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.195 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.055 ms

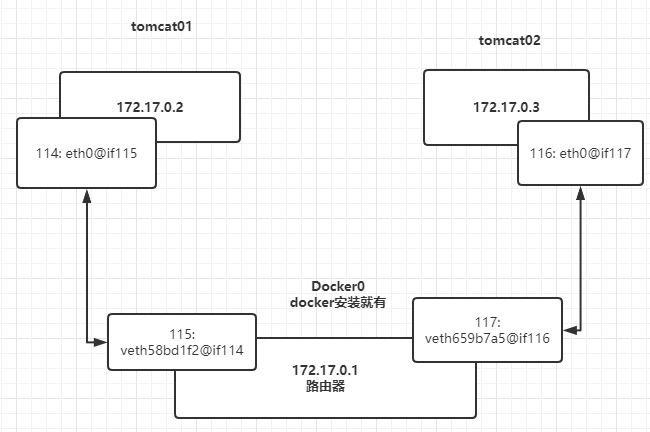

How it works


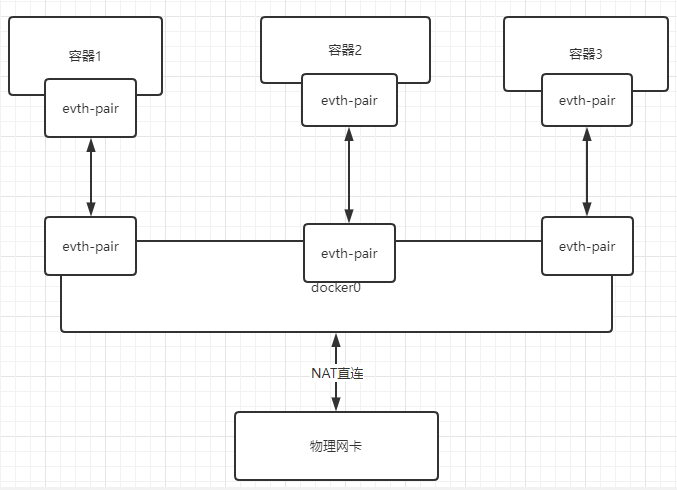

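Each time a container starts, Docker assigns it an IP. As soon as Docker is installed, the host has a network interface named docker0, which runs in bridge mode; containers are attached to it using veth-pair technology.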

Each time a container starts, ip addr on the host shows one more interface

[root@jinpengyong /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:04:8c:1d brd ff:ff:ff:ff:ff:ff

inet 172.26.236.138/20 brd 172.26.239.255 scope global dynamic eth0

valid_lft 312789068sec preferred_lft 312789068sec

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:2e:1d:e2:a1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

# every started container adds one more interface like this one, named vethxxxxxx

115: veth58bd1f2@if114: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether fa:93:8c:28:5f:9e brd ff:ff:ff:ff:ff:ff link-netnsid 0

Start another container, and yet another interface appears

[root@jinpengyong /]# docker run -d -P --name tomcat02 tomcat:9.0

466c1739d9358aa61e73b6bcdad2368f23930f7855c5b0008a7a2c801fb7be69

[root@jinpengyong /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:04:8c:1d brd ff:ff:ff:ff:ff:ff

inet 172.26.236.138/20 brd 172.26.239.255 scope global dynamic eth0

valid_lft 312788848sec preferred_lft 312788848sec

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:2e:1d:e2:a1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

# container interfaces: the host-side veth58bd1f2 (index 115) is paired with eth0 (index 114) inside tomcat01

115: veth58bd1f2@if114: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether fa:93:8c:28:5f:9e brd ff:ff:ff:ff:ff:ff link-netnsid 0

117: veth659b7a5@if116: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 36:62:1b:67:db:d5 brd ff:ff:ff:ff:ff:ff link-netnsid 1

We can see that the interfaces created for containers always come in pairs.

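A veth pair is a pair of virtual network interfaces that always come in pairs: each end is attached to a network protocol stack, and the two ends are linked to each other.

Because of this property, a veth pair acts as a bridge between virtual network devices; here each pair links a container's eth0 to the docker0 bridge.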
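The pairing can be read straight from the interface names: ip prints a veth as name@ifN, where N is the interface index of its peer. A minimal sketch that extracts these pairs from ip addr / ip link output, assuming the single-line interface header format shown above; veth_peers is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: read `ip addr` / `ip link` output on stdin and, for each
# veth interface, print its own ifindex and its peer's ifindex (the @ifN part).
veth_peers() {
  sed -n 's/^\([0-9]*\): \(veth[^@]*\)@if\([0-9]*\):.*/\2 ifindex=\1 peer=\3/p'
}

# Sample header lines copied from the host-side output above
printf '%s\n' \
  '115: veth58bd1f2@if114: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default' \
  '117: veth659b7a5@if116: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default' \
  | veth_peers
# veth58bd1f2 ifindex=115 peer=114
# veth659b7a5 ifindex=117 peer=116
```

On a live host, ip link | veth_peers lists every host-side veth; peer 114 matches the 114: eth0@if115 line seen inside tomcat01.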


Test whether tomcat01 can ping tomcat02: it can

[root@jinpengyong /]# docker exec -it tomcat01 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.131 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.074 ms

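Model diagram

Conclusion: tomcat01 and tomcat02 share the same router, docker0.

When no network is specified, every container is routed through docker0, and Docker assigns each container an available IP by default.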


Summary

Docker uses Linux bridging: the host has a bridge for Docker containers, docker0.

Once a container is deleted, its veth pair disappears as well.

--link

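Consider a scenario: we've written a microservice configured with database url=ip. If the database IP changes while the project keeps running, we'd like to handle that. Can we reach the service by name instead?

# name lookup fails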

[root@jinpengyong /]# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Name or service not known

# fix: add --link when starting the container

[root@jinpengyong /]# docker run -d -P --name tomcat03 --link tomcat02 tomcat:9.0

# tomcat03 ping tomcat02

[root@jinpengyong /]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.116 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.078 ms

# the reverse direction, tomcat02 pinging tomcat03, fails

[root@jinpengyong /]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

# how it works: look at /etc/hosts inside tomcat03

[root@jinpengyong /]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 466c1739d935 # name bound to IP

172.17.0.4 02dd1b3d28c3

# the name tomcat02 is mapped to its IP here, so the ping succeeds
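This also explains the one-way result: --link only wrote an entry into tomcat03's /etc/hosts, and tomcat02's file has no line for tomcat03. A minimal sketch of a hosts-file lookup, assuming a simplified resolver that returns the first line listing the name (real resolvers also consult DNS); hosts_lookup is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: look a name up in a hosts-format file the way a static
# resolver would, printing the IP of the first line that lists the name.
hosts_lookup() {
  awk -v name="$1" '
    { sub(/#.*/, "") }                                   # strip comments
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "${2:-/etc/hosts}"
}

# Reproduce the relevant lines of tomcat03's /etc/hosts in a temp file
tmp=$(mktemp)
printf '%s\n' '127.0.0.1 localhost' \
  '172.17.0.3 tomcat02 466c1739d935' \
  '172.17.0.4 02dd1b3d28c3' > "$tmp"

hosts_lookup tomcat02 "$tmp"   # prints 172.17.0.3
hosts_lookup tomcat03 "$tmp"   # prints nothing: no entry for tomcat03
rm -f "$tmp"
```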

However, --link is no longer recommended for Docker today!

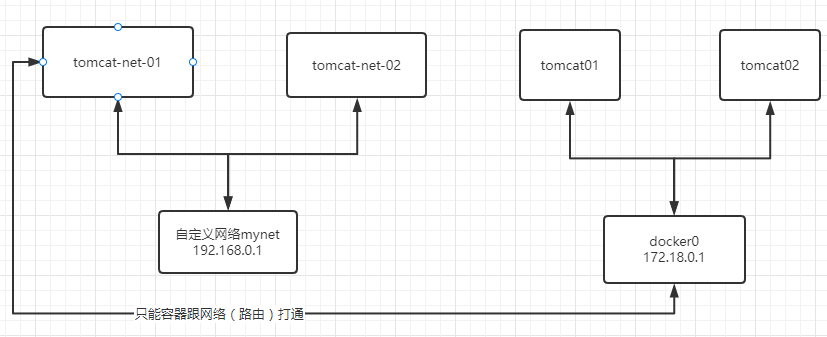

Use a custom network instead of docker0!

The problem with docker0: it does not support access by container name

Custom networks

List all Docker networks

[root@jinpengyong /]# clear

[root@jinpengyong /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1f122f7cec2f bridge bridge local # docker0

b745c5d36e0e host host local

160424a32a37 none null local

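Network modes:

- bridge: bridged networking, the default; networks you create also use this mode

- none: no networking configured

- host: share the host's network

- container: share another container's network stack (rarely used)

Test

# a container started directly gets --net bridge implicitly, and that network is our docker0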

docker run -d -P --name tomcat01 tomcat:9.0

is actually equivalent to

docker run -d -P --name tomcat01 --net bridge tomcat:9.0

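# docker0 traits: it is the default, containers cannot reach each other by name, and --link can bridge that gap but is cumbersome

# create a custom network

# --driver bridge: bridge mode

# --subnet 192.168.0.0/16: addresses 192.168.0.0-192.168.255.255 (192.168.255.255 is the broadcast address); with the gateway at .1, containers get addresses from 192.168.0.2 upward

# --gateway 192.168.0.1: the network's gateway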

[root@jinpengyong /]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

# inspect the custom network

[root@jinpengyong /]# docker network inspect mynet

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"
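What --subnet 192.168.0.0/16 covers can be derived from the prefix length: network address, broadcast address, and the usable range in between, from which Docker took 192.168.0.1 as the gateway (containers further down received 192.168.0.2 and 192.168.0.3). A minimal POSIX shell sketch, IPv4 only; ip_to_int, int_to_ip and cidr_info are hypothetical helpers, not Docker commands:

```shell
#!/bin/sh
# Hypothetical helpers (not Docker commands): show what a --subnet value covers.

# Convert dotted-quad a.b.c.d to a 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad form
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# cidr_info CIDR: print network address, broadcast address and usable host range
cidr_info() {
  local ip prefix mask net bcast
  ip=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  net=$(( ip & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "network=$(int_to_ip "$net") broadcast=$(int_to_ip "$bcast")" \
       "hosts=$(int_to_ip $(( net + 1 )))-$(int_to_ip $(( bcast - 1 )))"
}

cidr_info 192.168.0.0/16
# network=192.168.0.0 broadcast=192.168.255.255 hosts=192.168.0.1-192.168.255.254
```

The same function applied to docker0's 172.17.0.1/16 reports network 172.17.0.0, which is why a host address like 172.17.0.1 and a network address like 172.17.0.0 describe the same /16.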

# start containers on our custom network

[root@jinpengyong /]# docker run -d -P --name tomcat-net-01 --net mynet tomcat:9.0

[root@jinpengyong /]# docker run -d -P --name tomcat-net-02 --net mynet tomcat:9.0

# inspect the custom network again

[root@jinpengyong /]# docker network inspect mynet

"Containers": {

"0eefed8aca861645f4adbf60bbeba46711b6a182eb6483ac284e943b3a021637": {

"Name": "tomcat-net-01",

"EndpointID": "801fed042dafaafbcca7aeaac7dca8ab1533fa8614d48dc095ff71a1fa037335",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"278c6ce7f3978c8896f1c8dd5daf3f9a51c4bc49d0ef74d752101dcdfcd41fc7": {

"Name": "tomcat-net-02",

"EndpointID": "8940e70a12ce55e0c27f2f0b28b1912682c7bc2dceef48ba91f07d0186a0b560",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

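# test connectivity between containers on the custom network: they reach each other directly, fixing docker0's shortcoming

# no --link is needed to reach each other by name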

[root@jinpengyong /]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.129 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.086 ms

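On a custom network, Docker maintains the name-to-IP mapping for us, so this approach is recommended!

Application: create a redis network for a Redis cluster and a mysql network for a MySQL cluster. Giving each cluster its own network keeps the clusters isolated and healthy.

Connecting networks

# start a tomcat on docker0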

[root@jinpengyong /]# docker run -d -P --name tomcat01 tomcat:9.0

# try to reach a container on the custom network mynet: it fails

[root@jinpengyong /]# docker exec -it tomcat01 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

# use the connect command to attach tomcat01 to mynet

# docker network connect <network> <container>

[root@jinpengyong /]# docker network connect mynet tomcat01

# test again: it works now (tomcat02 was not connected, so it still cannot)

[root@jinpengyong /]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.107 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.072 ms

# inspect mynet: tomcat01 is now on the mynet network

# one container, two IPs, much like a server with both a public and a private IP

[root@jinpengyong /]# docker network inspect mynet

"Containers": {

"0eefed8aca861645f4adbf60bbeba46711b6a182eb6483ac284e943b3a021637": {

"Name": "tomcat-net-01",

"EndpointID": "801fed042dafaafbcca7aeaac7dca8ab1533fa8614d48dc095ff71a1fa037335",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"278c6ce7f3978c8896f1c8dd5daf3f9a51c4bc49d0ef74d752101dcdfcd41fc7": {

"Name": "tomcat-net-02",

"EndpointID": "8940e70a12ce55e0c27f2f0b28b1912682c7bc2dceef48ba91f07d0186a0b560",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"d44bb146c217e38a8649a3ff2886b987fc57b92ccd2784d14594f40f7216621c": {

"Name": "tomcat01",

"EndpointID": "4879044f2a747435abfa6c3fcadb678f189d4cec076b30d2fe112a2d80b04bf8",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

}

},

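Conclusion: to reach containers on another network, attach the container to that network with docker network connect

Hands-on: Redis cluster

3 masters, 3 replicas

# create the network

[root@jinpengyong /]# docker network create --subnet 172.38.0.0/16 redis

# shell script that writes 6 Redis config files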

for port in $(seq 1 6); \

do \

mkdir -p /mydata/redis/node-${port}/conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

# shell script that starts 6 Redis containers

for port in $(seq 1 6); \

do \

docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \

-v /mydata/redis/node-${port}/data:/data \

-v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.1${port} redis redis-server /etc/redis/redis.conf; \

done
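Both loops build each node's address and ports by appending ${port} to a fixed prefix. A dry-run sketch that only prints the resulting plan (it starts nothing); redis_plan is a hypothetical name:

```shell
#!/bin/sh
# Dry run: print (do not start) the IP and host-port mapping each node gets.
# Each value is a fixed prefix with ${port} appended, as in the loops above.
redis_plan() {
  for port in $(seq 1 6); do
    echo "redis-${port}: ip=172.38.0.1${port} client=637${port}->6379 bus=1637${port}->16379"
  done
}

redis_plan
```

The concatenation trick only holds for single-digit node numbers: node 10 would get 172.38.0.110 and a bus port of 163710, which exceeds 65535.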

# enter any one of the containers

[root@jinpengyong /]# docker exec -it redis-1 /bin/bash

# create the cluster

root@6ad3b3e31123:/data# redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

# connect to the cluster in cluster mode (-c) and view cluster info

root@6ad3b3e31123:/data# redis-cli -c

172.38.0.12:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:2

cluster_stats_messages_ping_sent:248

cluster_stats_messages_pong_sent:245

cluster_stats_messages_meet_sent:1

cluster_stats_messages_sent:494

cluster_stats_messages_ping_received:245

cluster_stats_messages_pong_received:249

cluster_stats_messages_received:494

# node information

172.38.0.12:6379> cluster nodes

956b4958edca759851f6e13e0f9d1d9a0b66789e 172.38.0.16:6379@16379 slave 9d620583409b72c98d90141d1024b2726efe54b2 0 1637311648426 2 connected

9d620583409b72c98d90141d1024b2726efe54b2 172.38.0.12:6379@16379 myself,master - 0 1637311648000 2 connected 5461-10922

822b67aa42b063c31c43fc06910c2b2cefc47b61 172.38.0.11:6379@16379 master - 0 1637311649000 1 connected 0-5460

090660a6f03edf0f7a0ad1af3d1f88437ea2fb44 172.38.0.15:6379@16379 slave 822b67aa42b063c31c43fc06910c2b2cefc47b61 0 1637311647000 1 connected

00d260314d29c4ff549e417ae881cb4e65df2783 172.38.0.14:6379@16379 slave e4c2ee41989027991d7ce2f1ae9758b0e1f36cfb 0 1637311649429 3 connected

e4c2ee41989027991d7ce2f1ae9758b0e1f36cfb 172.38.0.13:6379@16379 master - 0 1637311647000 3 connected 10923-16383
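The three masters split the 16384 hash slots almost evenly: 0-5460, 5461-10922 and 10923-16383. A sketch that reproduces these ranges by rounding each boundary of 16384/N to the nearest integer; that redis-cli --cluster create rounds exactly this way is an assumption checked only against the output above, and split_slots is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical sketch: divide the 16384 Redis Cluster slots among N masters.
# Assumption: boundary i is round((i+1) * 16384 / N - 1), last master ends at 16383.
split_slots() {
  local n=$1 first=0 last i=0
  while [ "$i" -lt "$n" ]; do
    if [ "$i" -eq $(( n - 1 )) ]; then
      last=16383
    else
      # round(x) = floor(x + 0.5), done entirely in integer arithmetic
      last=$(( (2 * (i + 1) * 16384 - n) / (2 * n) ))
    fi
    echo "master $(( i + 1 )): $first-$last"
    first=$(( last + 1 ))
    i=$(( i + 1 ))
  done
}

split_slots 3
# master 1: 0-5460
# master 2: 5461-10922
# master 3: 10923-16383
```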

# test: the key name is set on node 172.38.0.12

172.38.0.12:6379> set name tt

OK

# stop the container of node 172.38.0.12 (redis-2)

[root@jinpengyong ~]# docker stop redis-2

redis-2

# the data is now served by 172.38.0.16

127.0.0.1:6379> get name

-> Redirected to slot [5798] located at 172.38.0.16:6379

"tt"

172.38.0.16:6379>

# check the cluster nodes: 172.38.0.12 is down, and 172.38.0.16 has been promoted to master

172.38.0.16:6379> cluster nodes

0690164ba41f6e02fef8d1ac60620ede6f642154 172.38.0.11:6379@16379 master - 0 1637313141000 1 connected 0-5460

be2eaf18f4eb3089f141f60dc7c975080ceffbd3 172.38.0.14:6379@16379 slave d506a7ad1a561520e602ac943e2e019d9b60ebc4 0 1637313141828 3 connected

556cbfe2aa2de63a12063b1fd9e7e5732ee9421c 172.38.0.15:6379@16379 slave 0690164ba41f6e02fef8d1ac60620ede6f642154 0 1637313141000 1 connected

65911cd9ca12c1cd5d2a9ea1ff367a0e47f9b1f8 172.38.0.16:6379@16379 myself,master - 0 1637313137000 7 connected 5461-10922

d506a7ad1a561520e602ac943e2e019d9b60ebc4 172.38.0.13:6379@16379 master - 0 1637313139815 3 connected 10923-16383

19916e3bc32d567173139e5090f78c693c0ec175 172.38.0.12:6379@16379 master,fail - 1637312945643 1637312942000 2 connected
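Why name was still readable after redis-2 stopped: per the Redis Cluster specification, a key's slot is CRC16(key) mod 16384, using the XMODEM CRC16 variant. A minimal POSIX shell sketch of that formula, assuming keys without hash tags ({...} sections are not handled here); key_slot is a hypothetical helper, not a redis-cli command:

```shell
#!/bin/sh
# Hypothetical helper: compute the cluster hash slot for a key.
# CRC16-XMODEM (poly 0x1021, init 0) mod 16384; hash tags are NOT handled.
key_slot() {
  local crc=0 byte i
  # od prints each byte of the key as an unsigned decimal number
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      i=$(( i + 1 ))
    done
  done
  echo $(( crc % 16384 ))
}

key_slot name   # 5798, the slot in "Redirected to slot [5798]"
```

Slot 5798 lies in 5461-10922, the range owned first by 172.38.0.12 and, after failover, by its replica 172.38.0.16, so the key moved with the slot range.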
