4.18 Team Development Report

1. Problems encountered yesterday

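Yesterday we found a problem common to both inputs: whether the program reads a video file or a live camera, it is very sensitive to the frame rate. Once the source exceeds 25 fps, the picture starts to stutter. My guess is that the face recognition algorithm takes too long per frame, so it cannot keep up with the rate at which frames arrive.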
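The guess above can be checked with simple arithmetic: if every frame is processed and each one takes `proc_time` seconds, the algorithm finishes at most `1 / proc_time` frames per second, and any frames arriving faster than that pile up. The sketch below uses a hypothetical helper, `backlog_after`, and assumes a constant source rate and strictly sequential processing with no frames dropped.

```python
def backlog_after(source_fps: float, proc_time: float, seconds: float) -> int:
    """Frames still waiting after `seconds` if no frame is ever dropped.

    Assumptions (illustrative only): frames arrive at a constant `source_fps`,
    and each frame takes `proc_time` seconds, processed one at a time.
    """
    arrived = int(source_fps * seconds)    # frames produced by the source
    processed = int(seconds / proc_time)   # frames the algorithm can finish
    return max(0, arrived - processed)     # anything left over is queued
```

For example, a 25 fps source with 0.0625 s (16 fps) of processing per frame leaves 250 - 160 = 90 frames waiting after 10 s, and the backlog keeps growing over time, which would show up as increasing delay and stutter.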

2. Today's goals

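Today we decided to tune the frame rate for both video and camera input to keep the program stable, and to start designing the classroom attentiveness algorithm.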
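One detail worth checking while tuning the frame rate: `VideoModule` calls `time.sleep(self.interval)` after reading a frame, so each iteration lasts the read time plus a full frame interval, and the effective rate ends up below the source rate. A common alternative is to sleep only for whatever is left of the frame budget. The helpers `remaining_sleep` and `paced_loop` below are hypothetical, a sketch of that idea rather than project code.

```python
import time


def remaining_sleep(interval: float, elapsed: float) -> float:
    """Seconds to sleep so that one iteration lasts about `interval` in total."""
    return max(0.0, interval - elapsed)


def paced_loop(process, fps: float, n_frames: int) -> None:
    """Call `process()` n_frames times at roughly `fps` iterations per second."""
    interval = 1.0 / fps
    for _ in range(n_frames):
        start = time.monotonic()           # monotonic clock is immune to wall-clock jumps
        process()
        elapsed = time.monotonic() - start
        # Sleep only for the unused part of the frame budget (never negative).
        time.sleep(remaining_sleep(interval, elapsed))
```

If `process()` already takes longer than the interval, `remaining_sleep` returns 0 and the loop simply runs as fast as processing allows.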

3. Code

```python
import time

import cv2

from pipeline_module.core.base_module import (
    BaseModule,
    TASK_DATA_CLOSE,
    TASK_DATA_IGNORE,
    TASK_DATA_OK,
    TASK_DATA_SKIP,
    TaskData,
)


class VideoModule(BaseModule):
    """Reads frames from a video file or camera and feeds them into the pipeline."""

    def __init__(self, source=0, fps=25, skippable=False):
        super().__init__(skippable=skippable)
        self.task_stage = None
        self.source = source    # file path, or camera index (0 = default camera)
        self.cap = None
        self.frame = None
        self.ret = False
        self.skip_timer = 0
        self.set_fps(fps)
        self.loop = True        # reopen the source when it runs out of frames

    def process_data(self, data):
        if not self.ret:
            if self.loop:
                self.open()
                return TASK_DATA_IGNORE
            return TASK_DATA_CLOSE

        # Hand the frame read last time downstream, then prefetch the next one.
        data.source_fps = self.fps
        data.frame = self.frame
        self.ret, self.frame = self.cap.read()

        result = TASK_DATA_OK
        if self.skip_timer != 0:
            result = TASK_DATA_SKIP
            data.skipped = None

        # self.balancer is presumably set up by BaseModule / the pipeline (not shown).
        skip_gap = int(self.fps * self.balancer.short_stab_interval)
        if self.skip_timer > skip_gap:
            self.skip_timer = 0
        else:
            self.skip_timer += 1

        # Throttle reading to the source frame rate.
        time.sleep(self.interval)
        return result

    def product_task_data(self):
        return TaskData(self.task_stage)

    def set_fps(self, fps):
        self.fps = fps
        self.interval = 1 / fps

    def open(self):
        super().open()
        if self.cap is not None:
            self.cap.release()
        self.cap = cv2.VideoCapture(self.source)
        if self.cap.isOpened():
            fps = self.cap.get(cv2.CAP_PROP_FPS)
            # Many webcams report 0 here; keep the previous value
            # instead of dividing by zero in set_fps().
            if fps > 0:
                self.set_fps(fps)
            self.ret, self.frame = self.cap.read()
            print("Source frame rate:", self.fps)
```
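The skip counter in `process_data` is easy to misread, so the following sketch replays the same `skip_timer` update outside the pipeline. `skip_pattern` is a hypothetical helper; it assumes `skip_gap` stays constant, whereas the module recomputes it on every call from `self.fps` and `self.balancer.short_stab_interval`.

```python
def skip_pattern(skip_gap: int, n_calls: int) -> list:
    """Replay VideoModule's skip_timer logic; return "OK" or "SKIP" for each call."""
    skip_timer = 0
    results = []
    for _ in range(n_calls):
        # Same rule as process_data: only a zero timer lets the frame through.
        results.append("OK" if skip_timer == 0 else "SKIP")
        # Same counter update as process_data.
        if skip_timer > skip_gap:
            skip_timer = 0
        else:
            skip_timer += 1
    return results
```

The replay shows that one call in every `skip_gap + 2` returns `TASK_DATA_OK` and the rest return `TASK_DATA_SKIP`; how skipped frames are handled downstream depends on `BaseModule`, which is not shown here.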


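```python
import time

import cv2

from pipeline_module.core.base_module import BaseModule, TASK_DATA_OK

# box_color and draw_keypoints136 are used below but not defined in this excerpt;
# they presumably come from elsewhere in the project. This color is a placeholder.
box_color = (0, 255, 0)  # BGR, assumed value


class DrawModule(BaseModule):
    """Draws detection boxes, keypoints and the measured FPS, then shows the frame."""

    def __init__(self):
        super().__init__()
        self.last_time = time.time()

    def process_data(self, data):
        current_time = time.time()
        # Instantaneous FPS from the gap since the previous frame (guarded against 0).
        fps = 1 / max(current_time - self.last_time, 1e-6)

        frame = data.frame
        pred = data.detections
        preds_kps = data.keypoints
        preds_scores = data.keypoints_scores

        for det in pred:
            # det = (x1, y1, x2, y2, score, ...); OpenCV needs integer pixel coordinates.
            x1, y1, x2, y2 = (int(v) for v in det[:4])
            show_text = "person: %.2f" % det[4]
            cv2.rectangle(frame, (x1, y1), (x2, y2), box_color, 2)
            cv2.putText(frame, show_text, (x1, y1), cv2.FONT_HERSHEY_COMPLEX,
                        float((x2 - x1) / 200), box_color)

        if preds_kps is not None:
            draw_keypoints136(frame, preds_kps, preds_scores)

        # Overlay the FPS
        cv2.putText(frame, "FPS: %.2f" % fps, (0, 52), cv2.FONT_HERSHEY_COMPLEX, 0.5, (0, 0, 255))

        # Show the image. waitKey(40) waits up to 40 ms for a key press on every frame,
        # which on its own limits this display loop to roughly 1000 / 40 = 25 FPS.
        cv2.imshow("yolov5", frame)
        cv2.waitKey(40)

        self.last_time = current_time
        return TASK_DATA_OK

    def open(self):
        super().open()
```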
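`DrawModule` computes FPS from a single frame gap, so the displayed number jumps from frame to frame, which makes the effect of frame-rate tuning hard to judge. One common alternative, sketched here as a hypothetical `FpsMeter` class, is an exponential moving average; `alpha` (the weight given to the newest sample) is an assumed tuning parameter, not something taken from the project.

```python
class FpsMeter:
    """Exponentially smoothed FPS estimate (hypothetical helper, not project code)."""

    def __init__(self, alpha: float = 0.1):
        self.alpha = alpha       # weight of the newest measurement, 0 < alpha <= 1
        self.last_time = None    # timestamp of the previous tick
        self.fps = 0.0           # current smoothed estimate

    def tick(self, now: float) -> float:
        """Record a frame at time `now` (seconds) and return the smoothed FPS."""
        if self.last_time is not None:
            dt = max(now - self.last_time, 1e-6)  # guard against division by zero
            instant = 1.0 / dt
            if self.fps == 0.0:
                self.fps = instant                # first measurement seeds the average
            else:
                self.fps = self.alpha * instant + (1 - self.alpha) * self.fps
        self.last_time = now
        return self.fps
```

In `DrawModule`, a `self.meter = FpsMeter()` created in `__init__` and `fps = self.meter.tick(time.time())` could replace the instantaneous FPS line; a smaller `alpha` gives a steadier but slower-reacting reading.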


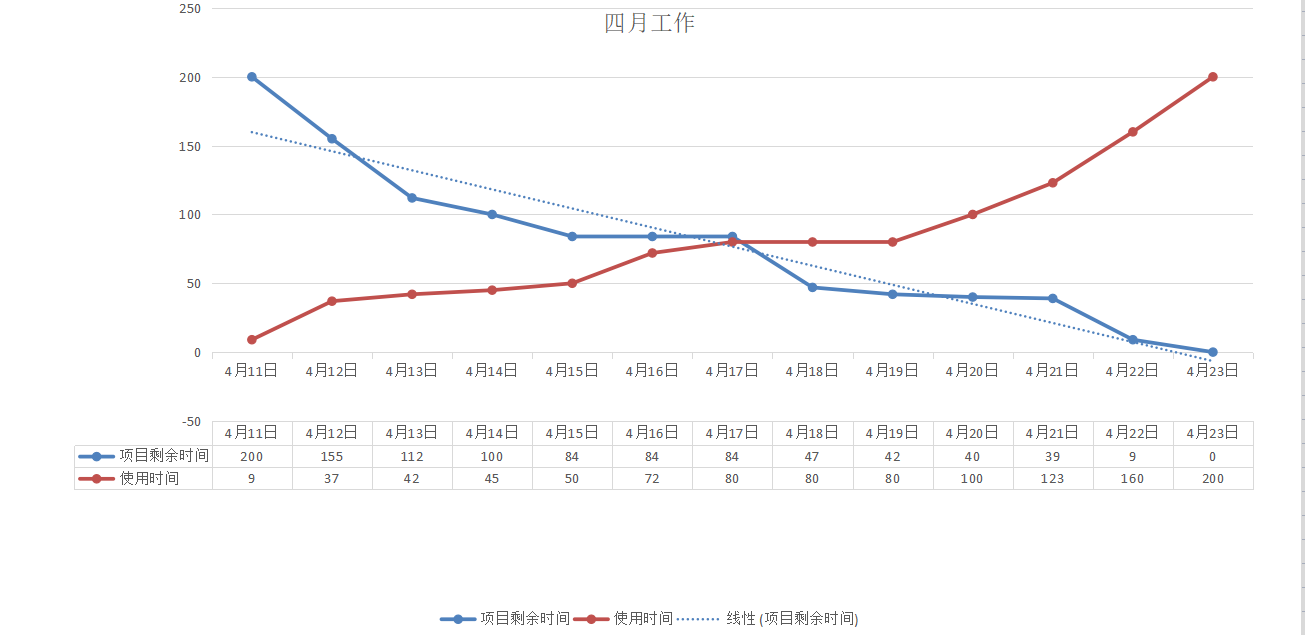

4. Burndown chart

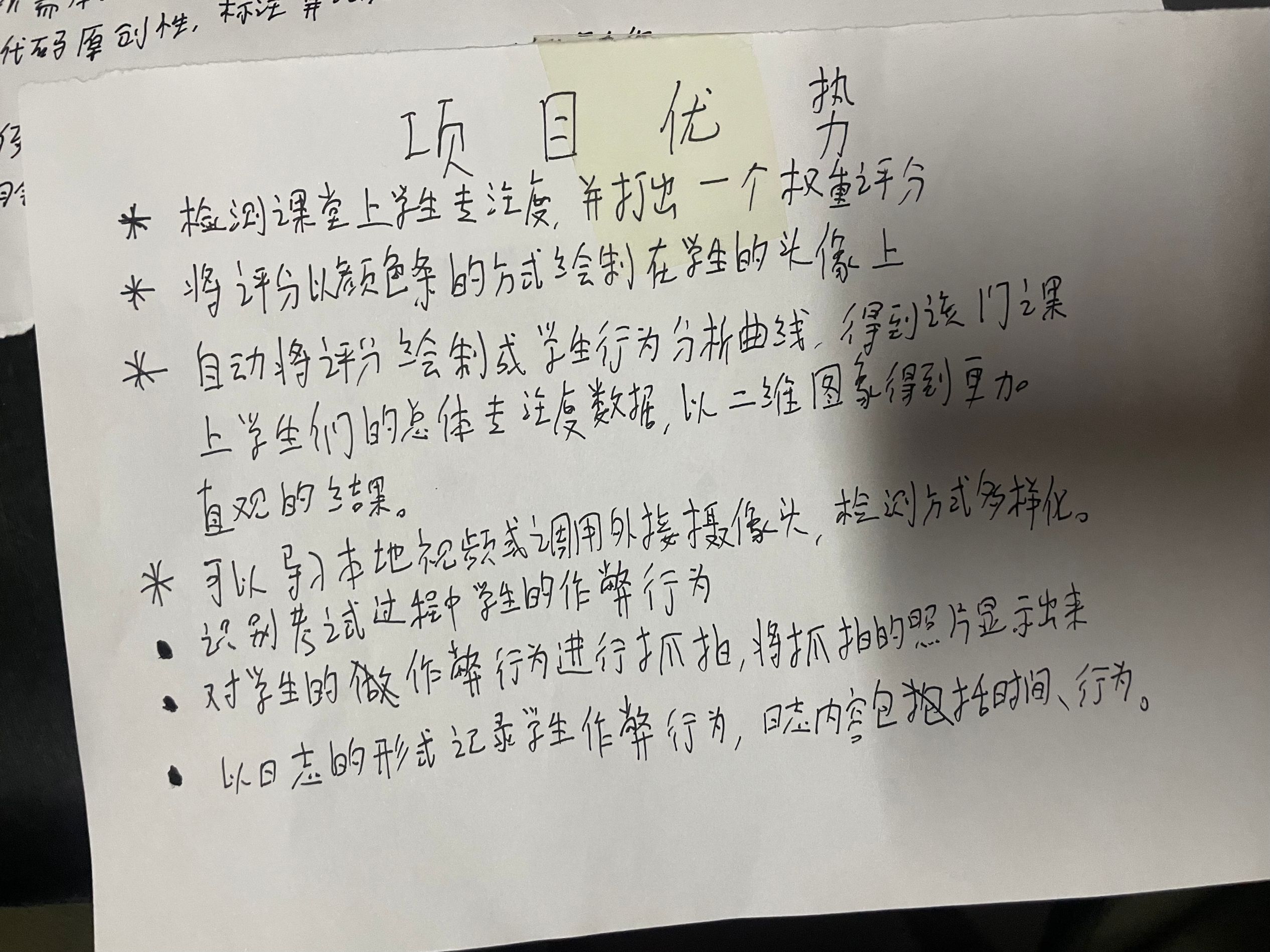

5. Corresponding task index cards
