用SQL查Linux日志工具-q

概述

Linux文本查找命令不要太多:tail、head、cat、sed、more、less、awk,但对新手不友好。q是一个命令行工具,允许在任意文件或查询结果,如ps -ef查询进程命令的结果集上,执行SQL语句查询。

GitHub

官方文档

理念:文本即数据库表,将普通文件或者结果集当作数据库表,几乎支持所有的SQL结构和关键词,如WHERE、GROUP BY、JOINS等,支持自动列名和列类型检测,跨文件连接查询,和多种编码。

安装

参考:installation手册。

Linux

CentOS环境:

# 下载

wget https://github.com/harelba/q/releases/download/1.7.1/q-text-as-data-1.7.1-1.noarch.rpm

# 安装

sudo rpm -ivh q-text-as-data-1.7.1-1.noarch.rpm

# 验证

q --version

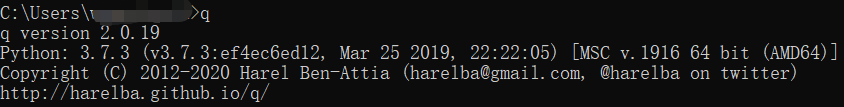

Windows

去GitHub Release下载最新版,一路next即可:

使用

q -h得到命令使用手册。支持所有SQLite SQL语法,标准命令行格式,q + 参数命令 + SQL:q <命令> <SQL>

查询文件内容:q "SELECT * FROM myfile.log"

利用参数会让显示结果更加美观,参数有2类:

- input输入命令:对要查询的文件或结果集进行操作,如

-H命令表示输入的数据包含标题行。

q -H "SELECT * FROM myfile.log"

在这种情况下,将自动检测列名,并可在查询语句中使用。如果未提供此选项,则列将自动命名为cX,以c1起始以此类推。

q "select c1,c2 from ..." - output输出命令:作用在查询输出的结果集,如

-O让查询出来的结果显示列名:

ps -ef | q -H -O "select count(UID) from - where UID='root'"

具体Input命令参数讲解:

Input Data Options:

-H, --skip-header Skip header row. This has been changed from earlier

version - Only one header row is supported, and the

header row is used for column naming

-d DELIMITER, --delimiter=DELIMITER

Field delimiter. If none specified, then space is used

as the delimiter.

-p, --pipe-delimited

Same as -d '|'. Added for convenience and readability

-t, --tab-delimited

Same as -d <tab>. Just a shorthand for handling

standard tab delimited file You can use $'\t' if you

want (this is how Linux expects to provide tabs in the

command line

-e ENCODING, --encoding=ENCODING

Input file encoding. Defaults to UTF-8. set to none

for not setting any encoding - faster, but at your own

risk...

-z, --gzipped Data is gzipped. Useful for reading from stdin. For

files, .gz means automatic gunzipping

-A, --analyze-only Analyze sample input and provide information about

data types

-m MODE, --mode=MODE

Data parsing mode. fluffy, relaxed and strict. In

strict mode, the -c column-count parameter must be

supplied as well

-c COLUMN_COUNT, --column-count=COLUMN_COUNT

Specific column count when using relaxed or strict

mode

-k, --keep-leading-whitespace

Keep leading whitespace in values. Default behavior

strips leading whitespace off values, in order to

provide out-of-the-box usability for simple use cases.

If you need to preserve whitespace, use this flag.

--disable-double-double-quoting

Disable support for double double-quoting for escaping

the double quote character. By default, you can use ""

inside double quoted fields to escape double quotes.

Mainly for backward compatibility.

--disable-escaped-double-quoting

Disable support for escaped double-quoting for

escaping the double quote character. By default, you

can use \" inside double quoted fields to escape

double quotes. Mainly for backward compatibility.

--as-text Don't detect column types - All columns will be

treated as text columns

-w INPUT_QUOTING_MODE, --input-quoting-mode=INPUT_QUOTING_MODE

Input quoting mode. Possible values are all, minimal

and none. Note the slightly misleading parameter name,

and see the matching -W parameter for output quoting.

-M MAX_COLUMN_LENGTH_LIMIT, --max-column-length-limit=MAX_COLUMN_LENGTH_LIMIT

Sets the maximum column length.

-U, --with-universal-newlines

Expect universal newlines in the data. Limitation: -U

works only with regular files for now, stdin or .gz

files are not supported yet.

具体Output命令参数讲解:

Output Options:

-D OUTPUT_DELIMITER, --output-delimiter=OUTPUT_DELIMITER

Field delimiter for output. If none specified, then

the -d delimiter is used if present, or space if no

delimiter is specified

-P, --pipe-delimited-output

Same as -D '|'. Added for convenience and readability.

-T, --tab-delimited-output

Same as -D <tab>. Just a shorthand for outputting tab

delimited output. You can use -D $'\t' if you want.

-O, --output-header

Output header line. Output column-names are determined

from the query itself. Use column aliases in order to

set your column names in the query. For example,

'select name FirstName,value1/value2 MyCalculation

from ...'. This can be used even if there was no

header in the input.

-b, --beautify Beautify output according to actual values. Might be

slow...

-f FORMATTING, --formatting=FORMATTING

Output-level formatting, in the format X=fmt,Y=fmt

etc, where X,Y are output column numbers (e.g. 1 for

first SELECT column etc.

-E OUTPUT_ENCODING, --output-encoding=OUTPUT_ENCODING

Output encoding. Defaults to 'none', leading to

selecting the system/terminal encoding

-W OUTPUT_QUOTING_MODE, --output-quoting-mode=OUTPUT_QUOTING_MODE

Output quoting mode. Possible values are all, minimal,

nonnumeric and none. Note the slightly misleading

parameter name, and see the matching -w parameter for

input quoting.

-L, --list-user-functions

List all user functions

实战

关键字查询

查询时必须指定某一列:

q "select * from douyin.log where c9 like '%待解析%'"

2021-06-11 14:46:49.323 INFO 22790 --- [nio-8888-exec-2] c.x.douyin.controller.ParserController : 待解析URL :url=https%3A%2F%2Fv.douyin.com%2Fe9g9uJ6%2F

用grep命令则是全文检索:

cat douyin.log | grep '待解析URL'

2021-06-11 14:46:49.323 INFO 22790 --- [nio-8888-exec-2] c.x.douyin.controller.ParserController : 待解析URL :url=https%3A%2F%2Fv.douyin.com%2Fe9g9uJ6%2F

模糊查询

like模糊搜索,如果文本内容列有名字直接用列名检索,没有则直接根据列号c1、c2、cN。

q -H -t "select * from test.log where abc like '%2%'"

交集并集

支持UNION和UNION ALL操作符对多个文件取交集或者并集。

q -H -t "select * from test.log union select * from test1.log"

内容去重

比如统计某个路径下的./clicks.csv文件中,uuid字段去重后出现的总个数。

q -H -t "SELECT COUNT(DISTINCT(uuid)) FROM ./clicks.csv"

列类型自动检测

q会理解每列是数字还是字符串,判断是根据实数值比较,还是字符串比较进行过滤,这里会用到-t命令。

q -H -t "SELECT request_id,score FROM ./clicks.csv WHERE score > 0.7 ORDER BY score DESC LIMIT 5"

字段运算

读取系统命令查询结果,计算/tmp目录中每个用户和组的总值,可对字段进行运算处理。

sudo find /tmp -ls | q "SELECT c5,c6,sum(c7)/1024.0/1024 AS total FROM - GROUP BY c5,c6 ORDER BY total desc"

# sudo find /tmp -ls | q "SELECT c5,c6,sum(c7)/1024.0/1024 AS total FROM - GROUP BY c5,c6 ORDER BY total desc"

www www 8.86311340332

root root 0.207922935486

mysql mysql 4.76837158203e-06

数据统计

统计系统拥有最多进程数的前 3个用户ID,按降序排序,需要配合系统命令使用,先查询所有进程再利用SQL筛选,q相当于grep:

ps -ef | q -H "SELECT UID,COUNT(*) cnt FROM - GROUP BY UID ORDER BY cnt DESC LIMIT 3"

root 104

www 16

rabbitmq 4

ps -ef | q -H -O "SELECT UID,COUNT(*) cnt FROM - GROUP BY UID ORDER BY cnt DESC LIMIT 3"

UID cnt

root 110

www 16

rabbitmq 4

加与不加-O命令的区别就是否显示查询结果的标题。

连接文件

一般情况下,日志文件会按天分割成很多个固定容量的子文件,在没有统一的日志收集服务器的情况下。可将所有文件内容合并后再查询:

q -H "select * from douyin.log a join douyin-2021-06-18.0.log b on (a.c2=b.c3) where b.c1='root'"

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY

· 【自荐】一款简洁、开源的在线白板工具 Drawnix