MongoDB replica set: Command failed with error 10107 (NotMaster): 'not master' on server

Problem

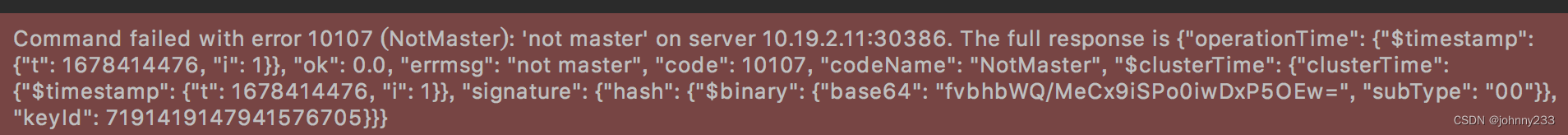

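The MongoDB node I connect to through DataGrip used to accept inserts and updates. One day it suddenly stopped accepting them and returned the following error: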

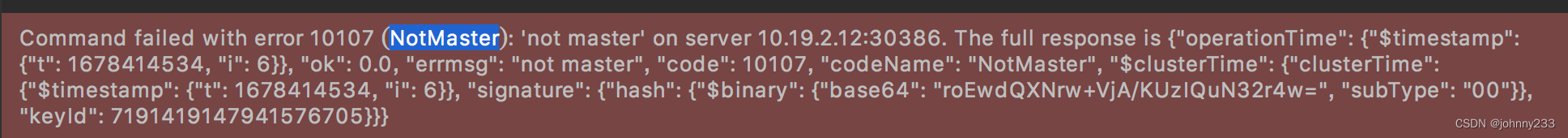

The full error message:

Command failed with error 10107 (NotMaster): 'not master' on server 10.19.21.11:30386. The full response is {"operationTime": {"$timestamp": {"t": 1677831692, "i": 1}}, "ok": 0.0, "errmsg": "not master", "code": 10107, "codeName": "NotMaster", "$clusterTime": {"clusterTime": {"$timestamp": {"t": 1677831692, "i": 1}}, "signature": {"hash": {"$binary": {"base64": "lHLZvy4mhv1/eWxsjPzI4Fo8fwg=", "subType": "00"}}, "keyId": 7191419147941576705}}}
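To recognise this failure programmatically, one can look at the `code` and `codeName` fields of the server reply. The snippet below is a minimal sketch in plain JavaScript for Node.js; `isNotMasterReply` is a hypothetical helper name, not part of any driver, and the sample reply is a trimmed copy of the response above.

```javascript
// Hypothetical helper: true when a server reply is the "not master"
// failure shown above (ok: 0 with code 10107 / codeName NotMaster).
function isNotMasterReply(reply) {
  return reply.ok === 0 &&
    (reply.code === 10107 || reply.codeName === "NotMaster");
}

// Trimmed copy of the reply from the error message above.
const notMasterReply = JSON.parse(
  '{"ok": 0.0, "errmsg": "not master", "code": 10107, "codeName": "NotMaster"}'
);

console.log(isNotMasterReply(notMasterReply)); // true
```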

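Investigation

The investigation showed that the Kubernetes cluster has 1 master and 3 worker nodes, and that MongoDB is deployed on it as a replica set with one primary and two secondaries: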

    [root@k8s-master01 ~]# kubectl get nodes -o wide
    NAME           STATUS   ROLES    AGE      VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION              CONTAINER-RUNTIME
    k8s-master01   Ready    master   3y153d   v1.15.1   10.19.1.123   <none>        CentOS Linux 7 (Core)   5.1.0-1.el7.elrepo.x86_64   docker://18.9.6
    k8s-node01     Ready    <none>   2y66d    v1.15.1   10.19.2.12    <none>        CentOS Linux 7 (Core)   3.10.0-1062.el7.x86_64      docker://18.9.6
    k8s-node02     Ready    <none>   3y153d   v1.15.1   10.19.2.11    <none>        CentOS Linux 7 (Core)   3.10.0-1062.el7.x86_64      docker://18.9.6
    k8s-node03     Ready    <none>   3y153d   v1.15.1   10.19.2.13    <none>        CentOS Linux 7 (Core)   3.10.0-1062.el7.x86_64      docker://18.9.6

    [root@k8s-master01 ~]# kubectl get pod -n aba-stg-public | grep mongo
    mongodb-replicaset-0   1/1   Running   6   353d
    mongodb-replicaset-1   1/1   Running   3   263d
    mongodb-replicaset-2   1/1   Running   3   262d

Open a shell in the target container in the given namespace:

    kubectl exec -it -n <ns> <pod> -- /bin/bash

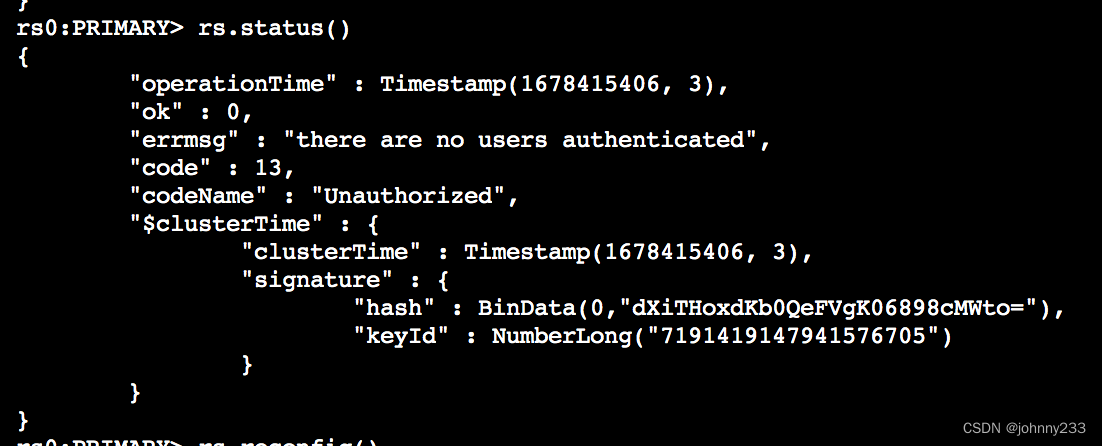

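Run the mongo command to connect to the mongod service, then run rs.status() to check the state of the replica set:

This fails with the error: there are no users authenticated.

Fix:

The account was created in the admin database, so you have to authenticate against admin with the correct username and password:

    use admin
    db.auth('root','root')

Run rs.status() again and it returns the normal output: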

    {
        "set" : "rs0",
        "date" : ISODate("2023-03-10T14:08:01.694Z"),
        "myState" : 1,
        "term" : NumberLong(117),
        "syncingTo" : "",
        "syncSourceHost" : "",
        "syncSourceId" : -1,
        "heartbeatIntervalMillis" : NumberLong(2000),
        "optimes" : {
            "lastCommittedOpTime" : {
                "ts" : Timestamp(1678457277, 1),
                "t" : NumberLong(117)
            },
            "readConcernMajorityOpTime" : {
                "ts" : Timestamp(1678457277, 1),
                "t" : NumberLong(117)
            },
            "appliedOpTime" : {
                "ts" : Timestamp(1678457277, 1),
                "t" : NumberLong(117)
            },
            "durableOpTime" : {
                "ts" : Timestamp(1678457277, 1),
                "t" : NumberLong(117)
            }
        },
        "members" : [
            {
                "_id" : 0,
                "name" : "mongodb-replicaset-0.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 1226572,
                "optime" : {
                    "ts" : Timestamp(1678457277, 1),
                    "t" : NumberLong(117)
                },
                "optimeDurable" : {
                    "ts" : Timestamp(1678457277, 1),
                    "t" : NumberLong(117)
                },
                "optimeDate" : ISODate("2023-03-10T14:07:57Z"),
                "optimeDurableDate" : ISODate("2023-03-10T14:07:57Z"),
                "lastHeartbeat" : ISODate("2023-03-10T14:08:00.747Z"),
                "lastHeartbeatRecv" : ISODate("2023-03-10T14:08:00.736Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "",
                "syncingTo" : "mongodb-replicaset-2.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "syncSourceHost" : "mongodb-replicaset-2.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "syncSourceId" : 2,
                "infoMessage" : "",
                "configVersion" : 3
            },
            {
                "_id" : 1,
                "name" : "mongodb-replicaset-1.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 1226592,
                "optime" : {
                    "ts" : Timestamp(1678457277, 1),
                    "t" : NumberLong(117)
                },
                "optimeDate" : ISODate("2023-03-10T14:07:57Z"),
                "syncingTo" : "",
                "syncSourceHost" : "",
                "syncSourceId" : -1,
                "infoMessage" : "",
                "electionTime" : Timestamp(1677230706, 1),
                "electionDate" : ISODate("2023-02-24T09:25:06Z"),
                "configVersion" : 3,
                "self" : true,
                "lastHeartbeatMessage" : ""
            },
            {
                "_id" : 2,
                "name" : "mongodb-replicaset-2.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 1226585,
                "optime" : {
                    "ts" : Timestamp(1678457277, 1),
                    "t" : NumberLong(117)
                },
                "optimeDurable" : {
                    "ts" : Timestamp(1678457277, 1),
                    "t" : NumberLong(117)
                },
                "optimeDate" : ISODate("2023-03-10T14:07:57Z"),
                "optimeDurableDate" : ISODate("2023-03-10T14:07:57Z"),
                "lastHeartbeat" : ISODate("2023-03-10T14:08:00.747Z"),
                "lastHeartbeatRecv" : ISODate("2023-03-10T14:08:00.736Z"),
                "pingMs" : NumberLong(0),
                "lastHeartbeatMessage" : "",
                "syncingTo" : "mongodb-replicaset-1.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "syncSourceHost" : "mongodb-replicaset-1.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
                "syncSourceId" : 1,
                "infoMessage" : "",
                "configVersion" : 3
            }
        ],
        "ok" : 1,
        "operationTime" : Timestamp(1678457277, 1),
        "$clusterTime" : {
            "clusterTime" : Timestamp(1678457277, 1),
            "signature" : {
                "hash" : BinData(0,"Er9WM7g3xmePUgTZwjC4dWIJ++w="),
                "keyId" : NumberLong("7191419147941576705")
            }
        }
    }

health is 1 when the member is up and 0 when it is down.

state is 1 for PRIMARY, 2 for SECONDARY, 3 for RECOVERING, 7 for ARBITER, and 8 for DOWN.
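Reading these two fields across a long rs.status() document by eye is error-prone, so a small helper can summarise them. The following is a sketch in plain JavaScript for Node.js; `STATE_NAMES`, `summarizeMembers`, and `findPrimary` are hypothetical names, only the state codes listed above are mapped, and the sample document is an abbreviated stand-in for the real output with shortened host names.

```javascript
// State codes listed in the text above. Other codes exist; they are
// reported here as "OTHER(<code>)".
const STATE_NAMES = { 1: "PRIMARY", 2: "SECONDARY", 3: "RECOVERING", 7: "ARBITER", 8: "DOWN" };

// Summarise each member of an rs.status() document.
function summarizeMembers(status) {
  return status.members.map(function (m) {
    return {
      name: m.name,
      up: m.health === 1,
      state: STATE_NAMES[m.state] || "OTHER(" + m.state + ")",
    };
  });
}

// Return the name of the healthy primary, or null if there is none.
function findPrimary(status) {
  const primary = status.members.find(function (m) {
    return m.health === 1 && m.state === 1;
  });
  return primary ? primary.name : null;
}

// Abbreviated stand-in for the rs.status() output (host names shortened).
const sampleStatus = {
  members: [
    { name: "mongodb-replicaset-0:27017", health: 1, state: 2 },
    { name: "mongodb-replicaset-1:27017", health: 1, state: 1 },
    { name: "mongodb-replicaset-2:27017", health: 1, state: 2 },
  ],
};

console.log(findPrimary(sampleStatus)); // mongodb-replicaset-1:27017
console.log(summarizeMembers(sampleStatus));
```

The two functions use only plain JavaScript, so they should also work when pasted into the mongo shell and called as `findPrimary(rs.status())`, with `print` in place of `console.log`.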

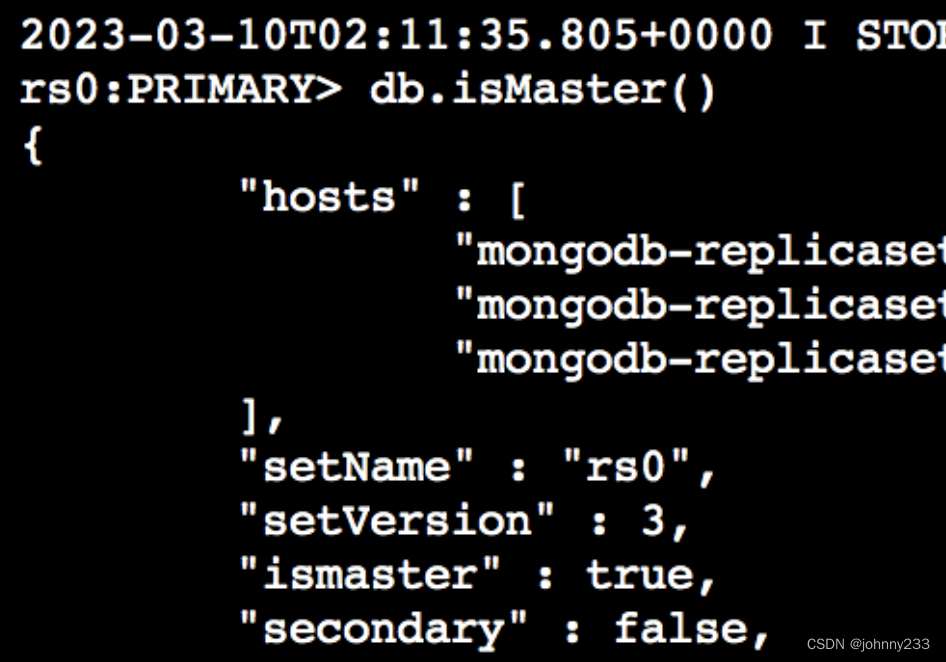

In addition, db.isMaster() returns the state of the replica set member you are connected to.
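As a rough illustration of how that reply can be read, the sketch below interprets the `ismaster`, `secondary`, `setName`, and `primary` fields of an isMaster reply. `describeMember` is a hypothetical helper, and the sample reply is an assumption assembled from names in the rs.status() output rather than captured output.

```javascript
// Hypothetical helper: interpret an isMaster reply from a replica set member.
function describeMember(reply) {
  return {
    set: reply.setName,
    writable: reply.ismaster === true, // only the primary accepts writes
    role: reply.ismaster ? "primary" : reply.secondary ? "secondary" : "other",
    primary: reply.primary || null,    // address of the current primary, if known
  };
}

// Illustrative reply as a secondary might return it (assumed values).
const secondaryReply = {
  setName: "rs0",
  ismaster: false,
  secondary: true,
  primary: "mongodb-replicaset-1.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017",
};

console.log(describeMember(secondaryReply));
```

A reply with `writable: false` means any insert or update sent to that member is rejected, and the `primary` field says where writes have to go instead.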

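The investigation showed that the replica set had held a primary election: member 1 (mongodb-replicaset-1) is now the primary, whereas member 0 used to be. The electionDate field in the rs.status() output puts the election on 2023-02-24.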

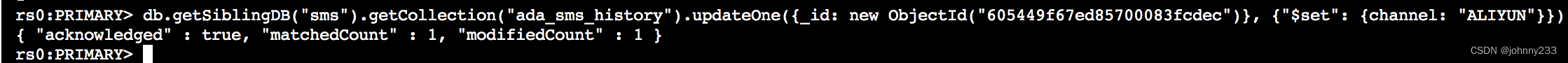

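I also found that running an insert or update from the command line:

    db.getSiblingDB("sms").getCollection("aba_sms_history").updateOne({_id: new ObjectId("605449f67ed85700083fcdec")}, {"$set": {channel: "ALIYUN"}})

succeeds on the primary:

and fails on a secondary:

So the replica set itself is healthy?

In DataGrip I duplicated the data source so that there is one per node. Previously the .11 node was the primary:

Now the primary is on .12, but the command fails there too:

And, unsurprisingly, it also fails on .13: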

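Summary

Connecting to the mongod server with the mongo shell inside the pod works, and the output of db.isMaster() and rs.status() looks normal. Member 1, formerly a secondary, is now the primary. Only the primary accepts writes, and a write sent to a secondary fails with not master. So the replica set itself is healthy.

Through DataGrip, however, all three nodes return not master.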

解决

[root@k8s-master01 ~]# kubectl get svc -A -o wide | grep mongo

aba-stg-public mongodb-replicaset ClusterIP None <none> 27017/TCP 2y127d app=mongodb-replicaset,release=mongodb-replicaset

aba-stg-public mongodb-replicaset-client ClusterIP None <none> 27017/TCP 2y127d app=mongodb-replicaset,release=mongodb-replicaset

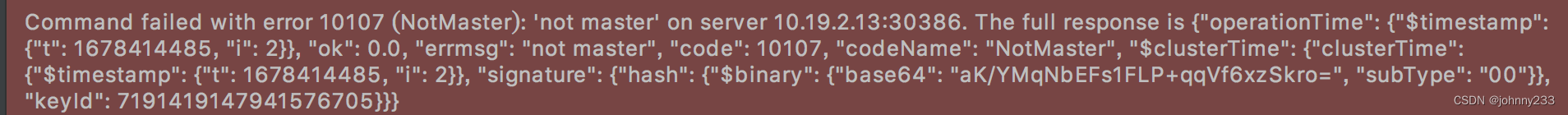

aba-stg-public mongodb-replicaset-service NodePort 10.104.187.101 <none> 27017:30386/TCP 2y128d statefulset.kubernetes.io/pod-name=mongodb-replicaset-1

可知其中mongodb-replicaset-service是提供服务的svc,我们查看一下这个svc:

    [root@k8s-master01 ~]# kubectl describe svc mongodb-replicaset-service -n aba-stg-public
    Name:                     mongodb-replicaset-service
    Namespace:                aba-stg-public
    Labels:                   app=mongodb-replicaset
    Annotations:              field.cattle.io/publicEndpoints:
                                [{"addresses":["10.19.2.11"],"port":30386,"protocol":"TCP","serviceName":"aba-stg-public:mongodb-replicaset-service","allNodes":true}]
                              kubectl.kubernetes.io/last-applied-configuration:
                                {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"mongodb-replicaset"},"name":"mongodb-replicaset-service"...
    Selector:                 statefulset.kubernetes.io/pod-name=mongodb-replicaset-0
    Type:                     NodePort
    IP:                       10.104.187.101
    Port:                     mongodb-replicaset  27017/TCP
    TargetPort:               27017/TCP
    NodePort:                 mongodb-replicaset  30386/TCP
    Endpoints:                10.244.86.172:27017
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>

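The Selector is wrong: it still targets mongodb-replicaset-0, the former primary, which is now a secondary. Because this is a NodePort Service with External Traffic Policy: Cluster, port 30386 on every node forwards to that single endpoint, which is why all three DataGrip data sources failed with not master. Change the selector to the current primary, mongodb-replicaset-1, by editing the Service: kubectl edit svc mongodb-replicaset-service -n aba-stg-public.

Background: every object that kubectl manages can be represented as a YAML document, and editing an object means editing that YAML. kubectl edit opens the object in an editor such as vi or vim; you edit, save, and quit as usual. kubectl then validates the result and submits it to the API server. A Service is not a process, so nothing is restarted; the new selector takes effect once the update is accepted. If the edited YAML is invalid, kubectl reports the error and reopens the editor.

If you made no changes, it prints: Edit cancelled, no changes made.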
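The check and the edit can also be expressed without an interactive editor. The sketch below, in plain JavaScript for Node.js, derives the pod name from a replica set member name, tests whether the Service selector targets it, and builds a JSON merge patch body. `podNameOf`, `selectorTargetsPrimary`, and `selectorPatch` are hypothetical helpers; the label key and names are taken from the output above.

```javascript
const POD_NAME_LABEL = "statefulset.kubernetes.io/pod-name";

// "mongodb-replicaset-1.mongodb-replicaset.<ns>.svc.cluster.local:27017"
// -> "mongodb-replicaset-1" (the first DNS label is the pod name).
function podNameOf(memberName) {
  return memberName.split(".")[0].split(":")[0];
}

// True when the Service selector pins traffic to the given primary member.
function selectorTargetsPrimary(selector, primaryMemberName) {
  return selector[POD_NAME_LABEL] === podNameOf(primaryMemberName);
}

// Build a JSON merge patch body that repoints the selector at the primary.
function selectorPatch(primaryMemberName) {
  const selector = {};
  selector[POD_NAME_LABEL] = podNameOf(primaryMemberName);
  return JSON.stringify({ spec: { selector: selector } });
}

// Values from the rs.status() and kubectl describe output above.
const primaryMember =
  "mongodb-replicaset-1.mongodb-replicaset.aba-stg-public.svc.cluster.local:27017";
const currentSelector = { "statefulset.kubernetes.io/pod-name": "mongodb-replicaset-0" };

console.log(selectorTargetsPrimary(currentSelector, primaryMember)); // false
console.log(selectorPatch(primaryMember));
// {"spec":{"selector":{"statefulset.kubernetes.io/pod-name":"mongodb-replicaset-1"}}}
```

The printed string could be passed to `kubectl patch svc mongodb-replicaset-service -n aba-stg-public --type merge -p '<patch>'`, which changes the same field as the interactive edit. Since the selector pins the Service to one pod, the same check would need repeating after any later election.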

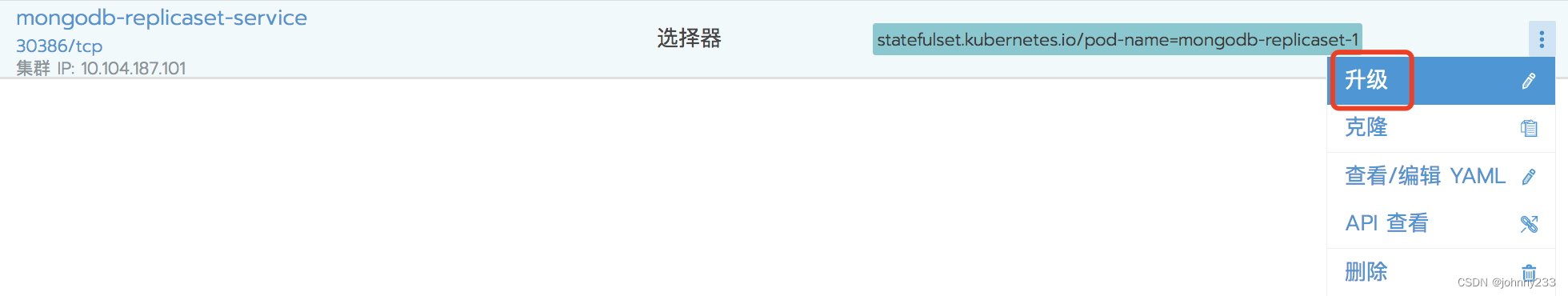

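We also use Rancher to manage the Kubernetes cluster resources, so the vast majority of command-line operations can be done through Rancher instead.

The same steps in the Rancher UI, with screenshots:

- Switch to the Service Discovery tab and search for mongo:

  As the screenshot above shows, there are three Services. The one to change is the third; the page shows its selector (Selector).

  Note: the selector in the screenshot above is already the corrected value.

- Click the three dots on the right, then click Upgrade, which is the equivalent of the kubectl edit command:

- As shown below, change the label value and click Save.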

Follow-up

To learn more about Kubernetes labels and selectors, further reading is recommended.
