VMware's vSphere 4 brings a number of new networking features to the table, including tighter VM traffic management and control with the vNetwork Distributed Switch (vDS), as well as support for third-party virtual switches (vSwitches). Along with these come a new high-performance virtual NIC, VMXNET3, the ability to create private VLANs, and support for IPv6.

Implementation and benefits of private VLANs with a vSphere network

Private VLANs allow communication between VMs on a vSwitch to be controlled and restricted. This feature, which is normally available on physical switches, was added only to the vDS and not the standard vSwitch. Normally, traffic on the same vSwitch port group has no restrictions and any VM in that port group can see the traffic from other VMs. Private VLANs restrict this visibility and in essence act as firewalls within the VLAN. Private VLANs must first be configured on the physical switch ports of the uplink NICs on the vDS. Private VLANs are then configured by editing the settings of a vDS and selecting the Private VLANs tab.

vSphere networking virtual NIC features

The VMXNET3 virtual NIC is a third-generation virtual NIC that includes support for a series of advanced new features:

- Receive Side Scaling (RSS): RSS is designed to reduce processing delays by distributing receive processing from a NIC across multiple CPUs on a vSMP VM. This feature is supported for Windows Server 2008. Without RSS, all of the processing is performed by a single CPU, resulting in inefficient system cache utilization. To enable RSS within Server 2008, you must follow the specific rules laid out in the Microsoft Knowledge Base.

- Large Tx/Rx ring sizes: A ring serves as a staging area for packets in line to be transmitted or received. Ring buffer size can be tuned to ensure enough queuing at the device level to handle the peak loads generated by the system and the network. With a larger ring size, a network device can handle higher peak loads without dropping any packets. The default receive (Rx) ring size is 150 for enhanced VMXNET2 and 256 for VMXNET3. For VMXNET2, it can be increased to 512; for VMXNET3, it can be increased to 4096. For VMXNET2, this can be increased by adding a parameter to the VM config file (Ethernet.numRecvBuffers=); however, for VMXNET3, it can be increased only inside the guest operating system. Currently, only Linux guests support this (ethtool -G eth rx ) as there is no mechanism to set this within Windows.

- MSI/MSI-X support: Message-Signaled Interrupts (MSI) and Extended Message-Signaled Interrupts (MSI-X) are an alternative and more efficient way for a device to interrupt a CPU. An interrupt is a hardware signal from a device to a CPU informing it that the device needs attention and signaling that the CPU should stop current processing and respond to the device. Older systems used a special line-based interrupt pin for this that was limited to four lines and shared by multiple devices. With MSI and MSI-X, data is written to a special address in memory, which the chipset delivers as a message to the CPU. MSI supports passing interrupts only to a single processor core and supports up to 32 messages. MSI-X, the successor to MSI, allows multiple interrupts to be handled simultaneously and load balanced across multiple cores, and it supports up to 2,048 messages. VMXNET3 supports three interrupt modes: MSI-X, MSI and INTx (line-based). VMXNET3 attempts to use the interrupt modes in that order, depending on what the server hardware and guest OS kernel support. Almost all hardware today supports MSI and MSI-X. MSI and MSI-X are supported on Windows 2008, Vista and Windows 7, while line-based interrupts are used on Windows 2000, XP and 2003.

- IPv6 checksum and TSO over IPv6: Checksums are used with both IPv4 and IPv6 for error checking of TCP packets. For IPv6, the method used to compute the checksum has changed, which the VMXNET3 driver supports. Both VMXNET2 and VMXNET3 support the offload of computing the checksum to a network adapter for reduced CPU overhead. TCP Segmentation Offload (TSO, also known as large-send offload) is designed to offload portions of outbound TCP processing to a NIC, thereby reducing system CPU utilization and enhancing performance. Instead of processing many small MTU-sized frames during transmit, the system can send fewer large virtual MTU-sized frames and let the NIC break them into smaller frames. TSO must be supported by the physical NIC and enabled in the guest operating system (usually a registry setting for Windows servers).
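The segmentation work that TSO hands to the NIC can be sketched in a few lines. This is only an illustration of the idea, not how the VMXNET3 driver or any physical NIC implements it: the function name `segment_payload`, the 64 KB send size and the 1,460-byte segment size are assumptions chosen for the example.

```python
def segment_payload(payload: bytes, mss: int) -> list:
    """Split one large send buffer into segments of at most `mss` bytes.

    With TSO, the operating system hands the NIC the large buffer once,
    and the NIC performs a split like this in hardware.
    """
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


# Assumed values for illustration: a 64 KB send and a 1,460-byte segment
# size (a 1,500-byte MTU minus 40 bytes of IPv4 and TCP headers).
payload = bytes(65536)
segments = segment_payload(payload, 1460)

print(len(segments))       # 45 segments
print(len(segments[-1]))   # the last segment carries the 1296-byte remainder
```

Without TSO, the guest's CPU would build each of those 45 frames itself; with TSO, it issues a single large send.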

VMware has done performance comparisons between VMXNET2 and VMXNET3 and found that VMXNET3 offers equal or better performance than VMXNET2 in both Windows and Linux guests. In addition, the advanced features that are supported by VMXNET3 can further increase the performance of certain workloads. In most cases, VMXNET3 is recommended over the older VMXNET2 adapter.

Currently, the Fault Tolerance feature does not support the VMXNET3 adapter. When the VMXNET3 adapter is used, it shows as connected at 10 Gbps in the Windows system tray no matter what the physical NIC speed is. This is because the NIC speed shown within a guest operating system is not always a true reflection of the speed of the physical NIC attached to the vSwitch. Despite showing a different speed, the vNIC runs at the speed of the physical NIC.

vSphere networks now support IPv6

Another new feature in vSphere is support for IPv6, which was created to deal with the exhaustion of IP addresses supported by IPv4. IPv6 addresses are 16 bytes (128 bits) and represented in hexadecimal.
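The address size and hexadecimal notation can be checked with Python's standard `ipaddress` module. The address used here, `2001:db8::1`, comes from the range reserved for documentation and is only an example.

```python
import ipaddress

# Example address from the documentation range; any valid IPv6 address works.
addr = ipaddress.IPv6Address("2001:db8::1")

print(len(addr.packed))    # 16 (bytes)
print(addr.max_prefixlen)  # 128 (bits)
print(addr.exploded)       # 2001:0db8:0000:0000:0000:0000:0000:0001
print(addr.compressed)     # 2001:db8::1
```

The exploded form shows all eight 16-bit groups in hexadecimal; the compressed form is the shorthand usually seen in configuration screens.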

Support for IPv6 was enabled in vSphere for the networking in the VMkernel, Service Console and vCenter Server. Support for IPv6 for network storage protocols is currently considered experimental and is not recommended for production use. Mixed environments of IPv4 and IPv6 are also supported.

To enable IPv6 on a host, select the host, choose the "Configuration" tab and then "Networking." Click the "Properties" link (not the "vSwitch Properties" link) and select the checkbox to enable IPv6 for that host. Once it is enabled, you must restart the host for the change to take effect. Afterward, the VMkernel, Service Console (ESX) or Management Network (ESXi) properties of the vSwitch will show both an IPv4 and an IPv6 address.

Distributed vSwitches: Clustered-level vSphere networking configuration

vNetwork Distributed vSwitches (vDS or vNDS) allow a single switch configuration to be used across multiple hosts. Previously, vSwitches with identical configurations had to be created on each host for features like VMotion to work properly. When a VM moved from one host to another, it had to find the same network name and configuration on the destination host in order to connect. Configuring vSwitches individually on each host became a tedious process, and when the configurations weren't all identical, VMotion compatibility issues often resulted.
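The kind of per-host drift described above can be illustrated with a small consistency check. This is a sketch over a hand-written inventory, not a call into any VMware API: the function `missing_port_groups`, the host names and the port group names are all hypothetical.

```python
def missing_port_groups(hosts: dict) -> dict:
    """For each host, return the network names defined on some other host
    but absent on this one. Hosts with nothing missing are omitted."""
    all_names = set().union(*hosts.values())
    gaps = {}
    for host, names in hosts.items():
        missing = all_names - names
        if missing:
            gaps[host] = missing
    return gaps


# Hypothetical inventory: host name -> network names on its standard vSwitches.
inventory = {
    "esx01": {"VM Network", "DMZ"},
    "esx02": {"VM Network"},          # "DMZ" was never created on this host
    "esx03": {"VM Network", "DMZ"},
}

for host, missing in sorted(missing_port_groups(inventory).items()):
    print(host, sorted(missing))      # esx02 ['DMZ']
```

A VM attached to "DMZ" could not find that network on esx02, which is the situation a centrally configured vDS is meant to avoid.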

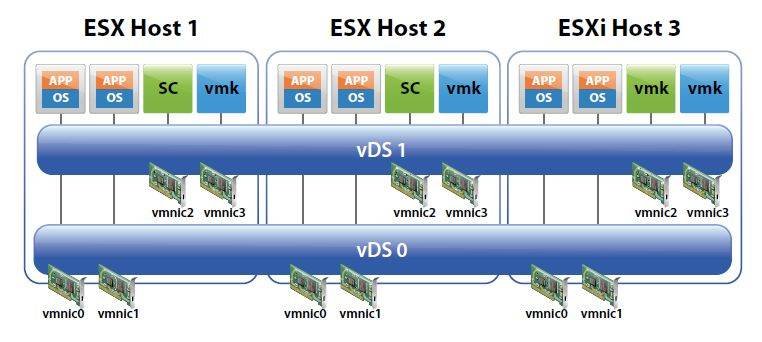

vDSs are very similar to standard vSwitches, but while standard vSwitches are configured individually on each host, vDSs are configured centrally using vCenter Server. You may have up to 16 vDSs per vCenter Server, and up to 64 hosts may connect to each vDS. vDSs are created and maintained using vCenter Server, but they do not depend on it for operation. This is important because if vCenter Server becomes unavailable, vDSs won't lose their configurations. When a vDS is created in vCenter Server, a hidden vSwitch is created on each host connected to the vDS, in a folder named .dvsData on a local VMFS volume. vDSs provide more than just centralized management of vSwitches; they also provide the features listed below, which are not available with standard vSwitches:

- Support for Private VLANs to provide segmentation between VMs.

- The network port state of a VM is maintained when the VM is moved with VMotion from one host to another, which enables continuous statistics monitoring and facilitates security monitoring. This is known as Network Policy VMotion.

- Bi-directional traffic shaping supports both Tx and Rx rate limiting. The standard vSwitch supports only Tx rate limiting.

- Support for third-party distributed vSwitches like the Cisco Nexus 1000v.

The requirements for using a vDS are simple: All your hosts that will use the vDS must have an Enterprise Plus license, and they need to be managed by vCenter Server. You can use standard vSwitches in combination with vDSs as long as they do not use the same physical NICs. It is common practice to use standard vSwitches for VMkernel, ESX Service Console and ESXi Management networking while using vDSs for virtual machine networking. This hybrid mode is shown below.
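The requirements in this paragraph can be written down as a simple pre-flight check. The sketch below uses a made-up `Host` record rather than anything read from vCenter Server; the field names, the license strings and the `vmnic` assignments are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    # Hypothetical record; these fields are not a VMware data structure.
    name: str
    license: str
    managed_by_vcenter: bool
    standard_vswitch_nics: set = field(default_factory=set)
    vds_nics: set = field(default_factory=set)


def vds_problems(host: Host) -> list:
    """Return the reasons a host does not meet the vDS requirements."""
    problems = []
    if host.license != "Enterprise Plus":
        problems.append("needs an Enterprise Plus license")
    if not host.managed_by_vcenter:
        problems.append("must be managed by vCenter Server")
    # A physical NIC may back a standard vSwitch or a vDS, not both.
    shared = host.standard_vswitch_nics & host.vds_nics
    if shared:
        problems.append("physical NICs on both switch types: " + ", ".join(sorted(shared)))
    return problems


# Hybrid layout: management traffic on a standard vSwitch, VM traffic on a vDS.
ok = Host("esx01", "Enterprise Plus", True, {"vmnic0", "vmnic1"}, {"vmnic2", "vmnic3"})
bad = Host("esx02", "Enterprise", True, {"vmnic0"}, {"vmnic0", "vmnic1"})

print(vds_problems(ok))   # []
print(vds_problems(bad))
```

The first host passes because its standard vSwitch and vDS uplinks do not overlap; the second fails on both the license and the shared `vmnic0`.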

Keeping the Service Console and VMkernel networking on a standard vSwitch prevents any changes made to vDSs from affecting the critical networking of a host server. Using vDSs for that traffic is fully supported, but many prefer to keep it separate, as once it is set up and configured per host, it typically does not change. You can also use multiple vDSs per host, as shown below.

This is generally done to segregate traffic types; for example, running the VMkernel, ESX Service Console and ESXi Management networking on one vDS while maintaining the virtual machine networking on another.

While at first vDSs may seem more complicated than the simple standard vSwitches, they are easier to administer and provide additional benefits.

In the second part of this series on vSphere networking features, learn more about how the Cisco Nexus 1000v distributed virtual switch is used in a vSphere network.

About the author: Eric Siebert is a 25-year IT veteran with experience in programming, networking, telecom and systems administration. He is a guru-status moderator on the VMware community VMTN forum and maintains VMware-land.com, a VI3 information site.