day07 Spark SQL: Generating the Physical Plan

1. Example SQL

select A,B from testdata2 where A>2

The corresponding execution plans:

    == Analyzed Logical Plan ==
    Project [A#23, B#24]
    +- Filter (A#23 > 2)
       +- SubqueryAlias testdata2
          +- View (`testData2`, [a#23,b#24])
             +- SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
                +- ExternalRDD [obj#22]

    == Optimized Logical Plan ==
    Project [A#23, B#24]
    +- Filter (A#23 > 2)
       +- SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
          +- ExternalRDD [obj#22]

    == Physical Plan ==
    Project [A#23, B#24]
    +- Filter (A#23 > 2)
       +- SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
          +- Scan[obj#22]

    == executedPlan ==
    *(1) Project [A#23, B#24]
    +- *(1) Filter (A#23 > 2)
       +- *(1) SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
          +- Scan[obj#22]

1.1 Strategies involved in this SQL and the physical plans they produce

Output (the banner text 涉及的策略 means "strategy involved"):

    ========================涉及的策略:class org.apache.spark.sql.execution.SparkStrategies$SpecialLimits$============================
    List(PlanLater Project [A#23, B#24])

    ========================涉及的策略:class org.apache.spark.sql.execution.SparkStrategies$BasicOperators$============================
    List(Project [A#23, B#24]
    +- PlanLater Filter (A#23 > 2)
    )

    ========================涉及的策略:class org.apache.spark.sql.execution.SparkStrategies$BasicOperators$============================
    List(Filter (A#23 > 2)
    +- PlanLater SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
    )

    ========================涉及的策略:class org.apache.spark.sql.execution.SparkStrategies$BasicOperators$============================
    List(SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).a AS a#23, knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData2, true])).b AS b#24]
    +- PlanLater ExternalRDD [obj#22]
    )

    ========================涉及的策略:class org.apache.spark.sql.execution.SparkStrategies$BasicOperators$============================
    List(Scan[obj#22]
    )

The following code prints each strategy that took effect, together with the result it produced:

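    // Modified fragment of the plan method in QueryPlanner: apply every strategy
    // to the logical plan and log the ones that return at least one physical plan.
    val candidates = strategies.iterator.flatMap { stra =>
      val my_plan = stra(plan)
      if (my_plan.nonEmpty) {
        // The banner text "涉及的策略" means "strategy involved".
        println("========================涉及的策略:" + stra.getClass + "============================")
        println(my_plan)
        println()
      }
      my_plan
    }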
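To make the `PlanLater` entries in the output easier to follow, here is a minimal toy model of the planning loop in plain Scala. It is only a sketch under stated assumptions: the classes `LProject`, `LFilter`, `LScan`, `PProject`, `PFilter`, `PScan` and the object `ToyPlanner` are hypothetical and are not Spark classes, the toy always takes the first candidate, and Spark's real planner handles far more cases. The idea it illustrates is the one visible in the log: a strategy converts only the top node, wraps the child in a placeholder, and the planner later plans that child and substitutes the result.

```scala
// Toy logical plan nodes (hypothetical, not Spark classes).
sealed trait Logical
case class LProject(cols: Seq[String], child: Logical) extends Logical
case class LFilter(cond: String, child: Logical) extends Logical
case class LScan(table: String) extends Logical

// Toy physical plan nodes, including a PlanLater-style placeholder.
sealed trait Physical
case class PProject(cols: Seq[String], child: Physical) extends Physical
case class PFilter(cond: String, child: Physical) extends Physical
case class PScan(table: String) extends Physical
case class PlanLater(plan: Logical) extends Physical

object ToyPlanner {
  type Strategy = Logical => Seq[Physical]

  // A strategy that matches nothing, so it contributes no candidates.
  val noMatch: Strategy = _ => Nil

  // Converts only the top node and defers the child with PlanLater.
  val basicOperators: Strategy = {
    case LProject(cols, child) => Seq(PProject(cols, PlanLater(child)))
    case LFilter(cond, child)  => Seq(PFilter(cond, PlanLater(child)))
    case LScan(table)          => Seq(PScan(table))
  }

  val strategies: Seq[Strategy] = Seq(noMatch, basicOperators)

  // Replace each placeholder by planning the logical plan it wraps.
  private def fill(p: Physical): Physical = p match {
    case PlanLater(logical) => plan(logical)
    case PProject(cols, c)  => PProject(cols, fill(c))
    case PFilter(cond, c)   => PFilter(cond, fill(c))
    case scan: PScan        => scan
  }

  def plan(logical: Logical): Physical = {
    val candidates = strategies.iterator.flatMap { strategy =>
      val produced = strategy(logical)
      if (produced.nonEmpty) println(s"strategy produced: $produced")
      produced
    }
    require(candidates.hasNext, s"No plan for $logical")
    fill(candidates.next()) // simplification: always take the first candidate
  }
}

object ToyPlannerDemo {
  def main(args: Array[String]): Unit = {
    val logical = LProject(Seq("A", "B"), LFilter("A > 2", LScan("testdata2")))
    println(ToyPlanner.plan(logical))
  }
}
```

Each call to `ToyPlanner.plan` logs one strategy result that still contains a placeholder for its child, which is the same shape as the Project, Filter, and Scan steps in the output shown earlier.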

2. How the code works:

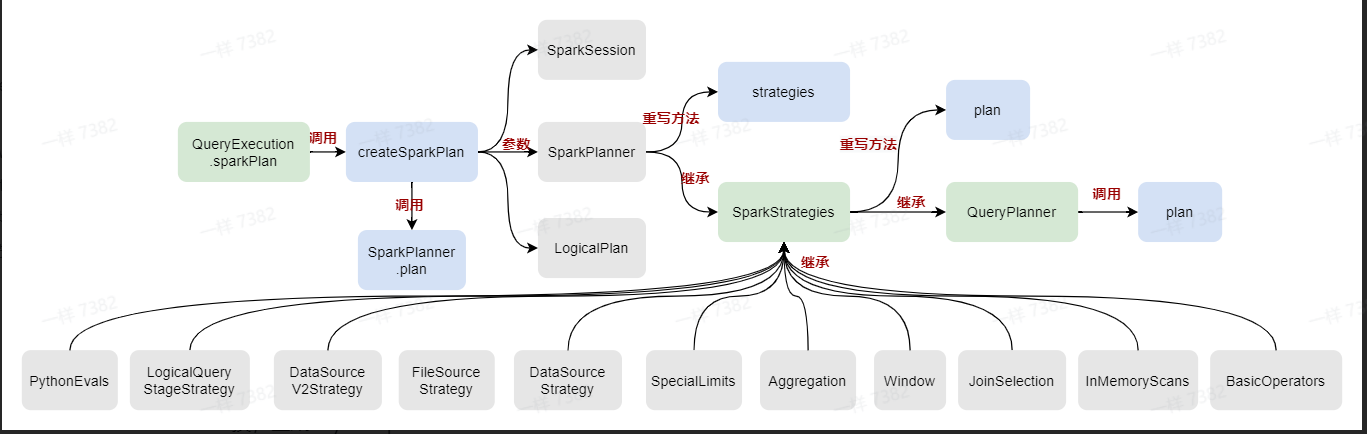

2.1 Entry point:

    spark.sql("xxx").queryExecution.sparkPlan // eventually calls the plan method in QueryPlanner

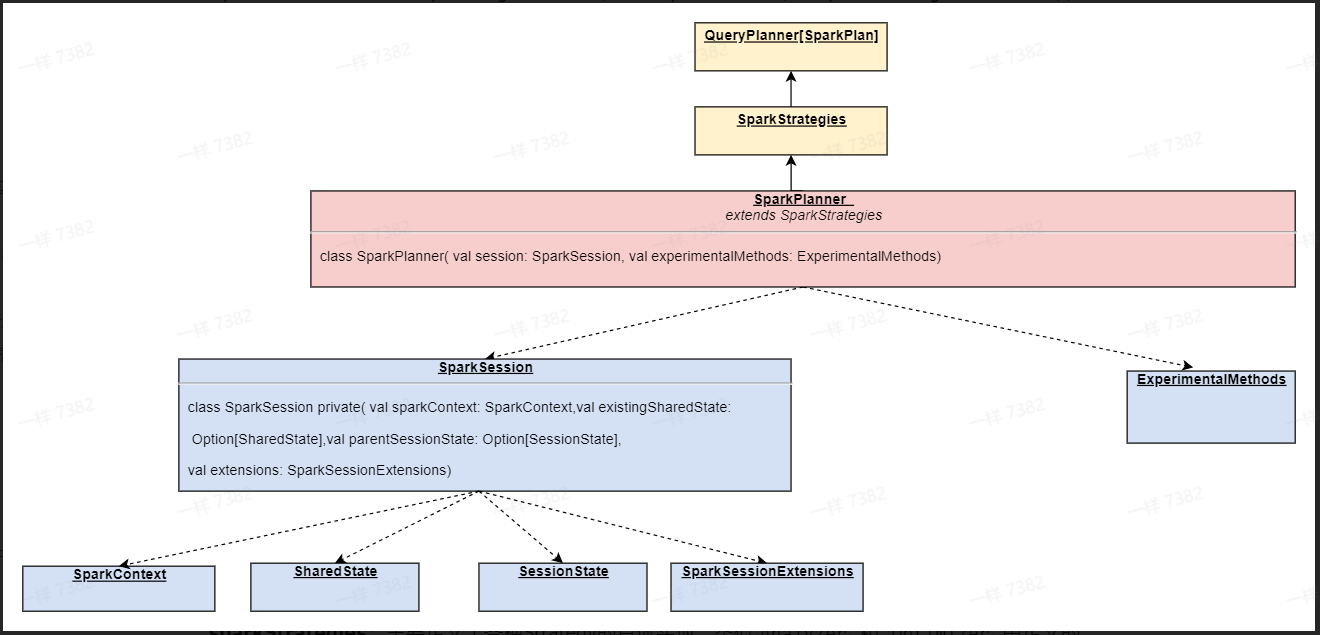
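As a rough way to reproduce the four plan sections from the example SQL, the sketch below reads each stage from `queryExecution`. It assumes Spark SQL is on the classpath and runs in local mode. The case class `TestData2` here is a hypothetical stand-in for the class from Spark's test suite, and `PlanStages` and `exampleQuery` are illustrative names. Expression IDs and some details of the printed plans will likely differ from the output shown earlier.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.execution.QueryExecution

// Hypothetical stand-in for org.apache.spark.sql.test.SQLTestData.TestData2.
case class TestData2(a: Int, b: Int)

object PlanStages {
  // Registers a small testdata2 view backed by an RDD of objects and
  // returns the QueryExecution of the example query.
  def exampleQuery(spark: SparkSession): QueryExecution = {
    import spark.implicits._
    spark.sparkContext
      .parallelize(Seq(TestData2(1, 1), TestData2(2, 1), TestData2(3, 2)))
      .toDF()
      .createOrReplaceTempView("testdata2")
    spark.sql("select A,B from testdata2 where A>2").queryExecution
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("plan-stages")
      .getOrCreate()
    val qe = exampleQuery(spark)
    println(s"== Analyzed Logical Plan ==\n${qe.analyzed}")
    println(s"== Optimized Logical Plan ==\n${qe.optimizedPlan}")
    println(s"== Physical Plan ==\n${qe.sparkPlan}")     // produced by the planner
    println(s"== executedPlan ==\n${qe.executedPlan}")   // after preparation rules
    spark.stop()
  }
}
```

Accessing `sparkPlan` is the step that triggers the planner, while `executedPlan` is the plan that is actually run.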

- SparkPlanner, the physical plan planner

Once the optimized logical plan is available, SparkPlanner converts the Optimized Logical Plan into physical plans. The inheritance hierarchy of SparkPlanner is as follows: