声明

什么是Phoenix

Phoenix的团队用了一句话概括Phoenix:"We put the SQL back in NoSQL" 意思是:我们把SQL又放回NoSQL去了!这边说的NoSQL专指HBase,意思是可以用SQL语句来查询Hbase,你可能会说:“Hive和Impala也可以啊!”。但是Hive和Impala还可以查询文本文件,Phoenix的特点就是,它只能查Hbase,别的类型都不支持!但是也因为这种专一的态度,让Phoenix在Hbase上查询的性能超过了Hive和Impala!

安装Phoenix

Phoenix跟Hbase的版本对应

- Phoenix 2.x - HBase 0.94.x

- Phoenix 3.x - HBase 0.94.x 下载地址

- Phoenix 4.x - HBase 0.98.1+ 下载地址 官网下载地址

这里我用4.2.2,下载好后,解压开,把 phoenix-4.2.2-server.jar 拷贝到所有RegionServer的lib目录下 /usr/lib/hbase/lib

- cp phoenix-4.2.2-server.jar /usr/lib/hbase/lib

然后重启所有regionserver

- service hbase-regionserver restart

使用Phoenix

把 phoenix-4.2.2-bin.tar.gz 解压出来的 phoenix-4.2.2-bin 文件夹也上传到host1上,然后到bin目录下执行(其实在本机也可以,只是我本机没有Python环境而Centos天生有Python)

如果是windows下下载的,得先在centos上给bin文件夹里面的.py文件赋上执行权限

- [root@host1 ~]# cd phoenix-4.2.2-bin/

- [root@host1 phoenix-4.2.2-bin]# cd bin

- [root@host1 bin]# chmod +x *.py

phoenix可以用4种方式调用

批处理方式

我们建立sql 名叫 us_population.sql 内容是

- CREATE TABLE IF NOT EXISTS us_population ( state CHAR(2) NOT NULL, city VARCHAR NOT NULL, population BIGINT CONSTRAINT my_pk PRIMARY KEY (state, city));

建立一个文件 us_population.csv

- NY,New York,8143197

- CA,Los Angeles,3844829

- IL,Chicago,2842518

- TX,Houston,2016582

- PA,Philadelphia,1463281

- AZ,Phoenix,1461575

- TX,San Antonio,1256509

- CA,San Diego,1255540

- TX,Dallas,1213825

- CA,San Jose,912332

再创建一个文件 us_population_queries.sql

- SELECT state as "State",count(city) as "City Count",sum(population) as "Population Sum" FROM us_population GROUP BY state ORDER BY sum(population) DESC;

然后一起执行

- phoenix-4.2.2-bin/bin/psql.py host1,host2:2181 us_population.sql us_population.csv us_population_queries.sql

这边记得把 host1 和 host2 换成你的zookeeper地址

这条命令你同时做了 创建一个表,插入数据,查询结果 三件事情

- [root@host1 ~]# phoenix-4.2.2-bin/bin/psql.py host1,host2:2181 us_population.sql us_population.csv us_population_queries.sql

- 15/03/04 17:14:23 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- 15/03/04 17:14:24 WARN impl.MetricsConfig: Cannot locate configuration: tried hadoop-metrics2-phoenix.properties,hadoop-metrics2.properties

- no rows upserted

- Time: 0.726 sec(s)

-

- csv columns from database.

- CSV Upsert complete. 10 rows upserted

- Time: 0.103 sec(s)

-

- St City Count Population Sum

- -- ---------------------------------------- ----------------------------------------

- NY 1 8143197

- CA 3 6012701

- TX 3 4486916

- IL 1 2842518

- PA 1 1463281

- AZ 1 1461575

- Time: 0.048 sec(s)

用hbase shell 看下会发现多出来一个 US_POPULATION 表,用scan 命令查看一下这个表的数据

- hbase(main):002:0> scan 'US_POPULATION'

- ROW COLUMN+CELL

- AZPhoenix column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x16MG

- AZPhoenix column=0:_0, timestamp=1425460467206, value=

- CALos Angeles column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00:\xAA\xDD

- CALos Angeles column=0:_0, timestamp=1425460467206, value=

- CASan Diego column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x13(t

- CASan Diego column=0:_0, timestamp=1425460467206, value=

- CASan Jose column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x0D\xEB\xCC

- CASan Jose column=0:_0, timestamp=1425460467206, value=

- ILChicago column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00+_\x96

- ILChicago column=0:_0, timestamp=1425460467206, value=

- NYNew York column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00|A]

- NYNew York column=0:_0, timestamp=1425460467206, value=

- PAPhiladelphia column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x16S\xF1

- PAPhiladelphia column=0:_0, timestamp=1425460467206, value=

- TXDallas column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x12\x85\x81

- TXDallas column=0:_0, timestamp=1425460467206, value=

- TXHouston column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x1E\xC5F

- TXHouston column=0:_0, timestamp=1425460467206, value=

- TXSan Antonio column=0:POPULATION, timestamp=1425460467206, value=\x80\x00\x00\x00\x00\x13,=

- TXSan Antonio column=0:_0, timestamp=1425460467206, value=

- 10 row(s) in 0.2220 seconds

会发现

- 之前定义的PRIMARY KEY 为 state, city ,于是Phoenix就把你输入的state 和 city的值拼起来成为rowkey

- 其他的字段还是按照列名去保存,默认的列簇为 0

- 还有一个0:_0 这个列是没有值的,这个是Phoenix处于性能方面考虑增加的一个列,不用管这个列

命令行方式

然后执行sqlline.py

可以进入命令行模式

- 0: jdbc:phoenix:localhost>

退出命令行的方式是执行 !quit

- 0: jdbc:phoenix:localhost>!quit

命令开头需要一个感叹号,使用help可以打印出所有命令

- 0: jdbc:phoenix:localhost> help

- !all Execute the specified SQL against all the current connections

- !autocommit Set autocommit mode on or off

- !batch Start or execute a batch of statements

- !brief Set verbose mode off

- !call Execute a callable statement

- !close Close the current connection to the database

- !closeall Close all current open connections

- !columns List all the columns for the specified table

- !commit Commit the current transaction (if autocommit is off)

- !connect Open a new connection to the database.

- !dbinfo Give metadata information about the database

- !describe Describe a table

- !dropall Drop all tables in the current database

- !exportedkeys List all the exported keys for the specified table

- !go Select the current connection

- !help Print a summary of command usage

- !history Display the command history

- !importedkeys List all the imported keys for the specified table

- !indexes List all the indexes for the specified table

- !isolation Set the transaction isolation for this connection

- !list List the current connections

- !manual Display the SQLLine manual

- !metadata Obtain metadata information

- !nativesql Show the native SQL for the specified statement

- !outputformat Set the output format for displaying results

- (table,vertical,csv,tsv,xmlattrs,xmlelements)

- !primarykeys List all the primary keys for the specified table

- !procedures List all the procedures

- !properties Connect to the database specified in the properties file(s)

- !quit Exits the program

- !reconnect Reconnect to the database

- !record Record all output to the specified file

- !rehash Fetch table and column names for command completion

- !rollback Roll back the current transaction (if autocommit is off)

- !run Run a script from the specified file

- !save Save the current variabes and aliases

- !scan Scan for installed JDBC drivers

- !script Start saving a script to a file

- !set Set a sqlline variable

- !sql Execute a SQL command

- !tables List all the tables in the database

- !typeinfo Display the type map for the current connection

- !verbose Set verbose mode on

建立employee的映射表

数据准备

然后我们来建立一个映射表,映射我之前建立过的一个hbase表 employee

- hbase(main):003:0> describe 'employee'

- DESCRIPTION ENABLED

- 'employee', {NAME => 'company', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => true

- '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'false', BLOCKSIZE => '65536', I

- N_MEMORY => 'false', BLOCKCACHE => 'true'}, {NAME => 'family', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLIC

- ATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => '

- false', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

- 1 row(s) in 0.1120 seconds

可以看出employee有连个列簇 company 和 family

- hbase(main):016:0> scan 'employee'

- ROW COLUMN+CELL

- row1 column=company:name, timestamp=1425537923391, value=ted

- row1 column=company:position, timestamp=1425537950471, value=worker

- row1 column=family:tel, timestamp=1425537956413, value=13600912345

- row2 column=family:tel, timestamp=1425537994087, value=18942245698

- row2 column=family:name, timestamp=1425537975610, value=michael

- row2 column=family:position, timestamp=1425537985594, value=manager

- 2 row(s) in 0.0340 seconds

有两条数据。如果没有这些数据的同学可以用以下命令创建

- create 'employee','company','family'

- put 'employee','row1','company:name','ted'

- put 'employee','row1','company:position','worker'

- put 'employee','row1','family:tel','13600912345'

- put 'employee','row2','company:name','michael'

- put 'employee','row2','company:position','manager'

- put 'employee','row2','family:tel','1894225698'

- scan 'employee'

关于映射表

在建立映射表之前要说明的是,Phoenix是大小写敏感的,并且所有命令都是大写,如果你建的表名没有用双引号括起来,那么无论你输入的是大写还是小写,建立出来的表名都是大写的,如果你需要建立出同时包含大写和小写的表名和字段名,请把表名或者字段名用双引号括起来

你可以建立读写的表或者只读的表,他们的区别如下

- 读写表:如果你定义的列簇不存在,会被自动建立出来,并且赋以空值

- 只读表:你定义的列簇必须事先存在

建立映射

- 0: jdbc:phoenix:localhost> CREATE TABLE IF NOT EXISTS "employee" ("no" CHAR(4) NOT NULL PRIMARY KEY, "company"."name" VARCHAR(30),"company"."position" VARCHAR(20), "family"."tel" CHAR(11), "family"."age" INTEGER);

- 2 rows affected (1.745 seconds)

这行语句有几个注意点

- IF NOT EXISTS可以保证如果已经有建立过这个表,配置不会被覆盖

- 作为rowkey的字段用 PRIMARY KEY标定

- 列簇用 columnFamily.columnName 来表示

- family.age 是新增的字段,我之前建立测试数据的时候没有建立这个字段的原因是在hbase shell下无法直接写入数字型,等等我用UPSERT 命令插入数据的时候你就可以看到真正的数字型在hbase 下是如何显示的

建立好后,查询一下数据

- 0: jdbc:phoenix:localhost> SELECT * FROM "employee";

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

- | no | name | position | tel | age |

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

- | row1 | ted | worker | 13600912345 | null |

- | row2 | michael | manager | 1894225698 | null |

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

插入/更改数据

插入或者更改数据在Phoenix里面是一个命令叫 UPSERT 意思是 update + insert

我们插入一条数据试试

- UPSERT INTO "employee" VALUES ('row3','billy','worker','16974681345',33);

查询一下数据

- 0: jdbc:phoenix:localhost> SELECT * FROM "employee";

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

- | no | name | position | tel | age |

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

- | row1 | ted | worker | 13600912345 | null |

- | row2 | michael | manager | 1894225698 | null |

- | row3 | billy | worker | 16974681345 | 33 |

- +------+--------------------------------+----------------------+-------------+------------------------------------------+

- 3 rows selected (0.195 seconds)

我们去hbase里面看一下数据

- hbase(main):054:0> scan 'employee'

- ROW COLUMN+CELL

- row1 column=company:_0, timestamp=1425543735420, value=

- row1 column=company:name, timestamp=1425543735274, value=ted

- row1 column=company:position, timestamp=1425543735323, value=worker

- row1 column=family:tel, timestamp=1425543735420, value=13600912345

- row2 column=company:_0, timestamp=1425543735767, value=

- row2 column=company:name, timestamp=1425543735608, value=michael

- row2 column=company:position, timestamp=1425543735720, value=manager

- row2 column=family:tel, timestamp=1425543735767, value=1894225698

- row3 column=company:_0, timestamp=1425543857594, value=

- row3 column=company:name, timestamp=1425543857594, value=billy

- row3 column=company:position, timestamp=1425543857594, value=worker

- row3 column=family:age, timestamp=1425543857594, value=\x80\x00\x00!

- row3 column=family:tel, timestamp=1425543857594, value=16974681345

- 3 row(s) in 0.0650 seconds

最后那个 \x80\x00\x00! 就是数字型在hbase中序列化成了字节的存储形式

TABLE 跟 VIEW 的区别

建立VIEW的语句跟TABLE一样,就是把TABLE关键字换成VIEW而已,但是他们有本质的区别:

- 如果你有一张Hbase的表,并且以前已经有数据,最好建立VIEW,因为一旦建立了TABLE, Phoenix 会认为这张table完全是属于Phoenix的,就算你只是删除Phoenix里面的表,Hbase里面对应的表也会一起删除掉

- 如果你之前没有这张Hbase的表,你想建立全新的Phoenix表,就用Table,但是要记住所有的操作都要经过Phoenix,那张同时被建立的表,只是一张附属表,不要试图往里面手动维护数据,忘记它的存在

GUI方式的安装方法在 http://phoenix.apache.org/installation.html 这边不讲了,因为我自己也没搞起来,而且那个界面实在太丑了,看了不忍心使用。

JDBC调用

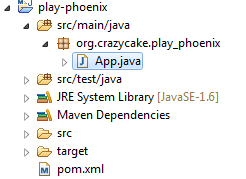

打开Eclipse建立一个简单的Maven项目 play-phoenix

pom.xml的内容是

- <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

- xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

- <modelVersion>4.0.0</modelVersion>

-

- <groupId>org.crazycake</groupId>

- <artifactId>play-phoenix</artifactId>

- <version>0.0.1-SNAPSHOT</version>

- <packaging>jar</packaging>

-

- <name>play-phoenix</name>

- <url>http://maven.apache.org</url>

-

- <properties>

- <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

- </properties>

-

- <repositories>

- <repository>

- <id>apache release</id>

- <url>https://repository.apache.org/content/repositories/releases/</url>

- </repository>

- </repositories>

-

-

- <build>

- <plugins>

- <plugin>

- <artifactId>maven-compiler-plugin</artifactId>

- <version>2.0.2</version>

- <configuration>

- <source>1.7</source>

- <target>1.7</target>

- <encoding>UTF-8</encoding>

- <optimise>true</optimise>

- <compilerArgument>-nowarn</compilerArgument>

- </configuration>

- </plugin>

- <plugin>

- <groupId>org.apache.maven.plugins</groupId>

- <artifactId>maven-shade-plugin</artifactId>

- <version>2.3</version>

- <configuration>

- <transformers>

- <transformer

- implementation="org.apache.maven.plugins.shade.resource.ApacheLicenseResourceTransformer">

- </transformer>

- </transformers>

- </configuration>

- <executions>

- <execution>

- <phase>package</phase>

- <goals>

- <goal>shade</goal>

- </goals>

- </execution>

- </executions>

- </plugin>

- </plugins>

- </build>

- <dependencies>

- <dependency>

- <groupId>junit</groupId>

- <artifactId>junit</artifactId>

- <version>3.8.1</version>

- <scope>test</scope>

- </dependency>

- <dependency>

- <groupId>org.apache.phoenix</groupId>

- <artifactId>phoenix-core</artifactId>

- <version>4.2.2</version>

- </dependency>

- </dependencies>

- </project>

phoenix 4.2.2 使用jdk1.7编译的,如果你只有1.6就用 4.2.0

- <dependency>

- <groupId>org.apache.phoenix</groupId>

- <artifactId>phoenix-core</artifactId>

- <version>4.2.0</version>

- </dependency>

我们建立一个类 PhoenixManager

- package org.crazycake.play_phoenix;

-

- import java.sql.Connection;

- import java.sql.DriverManager;

- import java.sql.ResultSet;

- import java.sql.SQLException;

- import java.sql.Statement;

-

- public class PhoenixManager {

- public static void main(String[] args) throws SQLException {

- Connection conn = null;

- Statement stat = null;

- ResultSet rs = null;

- try {

- Class.forName("org.apache.phoenix.jdbc.PhoenixDriver");

- conn = DriverManager.getConnection("jdbc:phoenix:host1,host2:2181");

- stat = conn.createStatement();

- rs = stat.executeQuery("select * from \"employee\"");

- while(rs.next()){

- System.out.println("no: " + rs.getString("no"));

- System.out.println("name: " + rs.getString("name"));

- System.out.println("position: " + rs.getString("position"));

- System.out.println("age: " + rs.getInt("age"));

- }

- } catch (Throwable e) {

- e.printStackTrace();

- } finally{

- if(rs != null){

- rs.close();

- }

- if(stat != null){

- stat.close();

- }

- if(conn != null){

- conn.close();

- }

- }

- }

- }

运行下,结果为

- no: row1

- name: ted

- position: worker

- age: 0

- no: row2

- name: michael

- position: manager

- age: 0

- no: row3

- name: billy

- position: worker

- age: 33

搞定!

结语

至此所有Hadoop必学的组件已经完成,菜鸟课程已经完成!后续的非必学组件我就看心情更新了! :-)

参考资料

- http://phoenix.apache.org/Phoenix-in-15-minutes-or-less.html

Phoenix中的语法

Phoenix中的数据类型

Phoenix中的方法

我自己使用过程中一些简单语句,如下:

select * from shuju;select count(1) from shuju;select cmtid,count(1) as num from shuju group by cmtid order by num desc;select avg(TO_NUMBER(avgt)) from shuju;select cmtid,count(1) as num,avg(TO_NUMBER(avgt)) as avgt,avg(TO_NUMBER(loss)) as loss from shuju group by cmtid order by num desc;select acm,dtype,cmtid,count(1) as num,avg(TO_NUMBER(avgt)) as avgt,avg(TO_NUMBER(loss)) as lossfrom shujugroup by acm,dtype,cmtidorder by num desc;select acm,dtype,porgcode,orgid,cmtid,count(1) as num,avg(TO_NUMBER(avgt)) as avgt,avg(TO_NUMBER(loss)) as lossfrom shujugroup by acm,dtype,porgcode,orgid,cmtidorder by num desc;where TO_DATE(ttime,'yyyyMMddHHmmss')=TO_DATE('20141125','yyyyMMdd')select ttime from shuju order by ttime desc; where TO_DATE(ttime,'yyyyMMddHHmmss')=TO_DATE('20141125','yyyyMMdd') select TO_DATE(ttime,'yyyyMMddHHmmss') from shuju;select TO_DATE('20141125','yyyyMMdd') from shuju;select (TO_DATE(ttime,'yyyyMMddHHmmss')=TO_DATE('20141125','yyyyMMdd')) as aaa from shuju order by aaa asc;

浙公网安备 33010602011771号

浙公网安备 33010602011771号