Hadoop(19)自定义输出类

自定义OutputFormat类

思考一个问题:我们前面编程的时候可以发现,一个reducetask默认会把结果输出到一个文件。那如果我们想要让一个reducetask的结果分类输出到不同文件中,要怎么实现,我们可以通过自定义outputformat类来解决。

案例需求

现在有一些订单的评论数据,需求,将订单的好评与其他评论(中评、差评)进行区分开来,将最终的数据分开到不同的文件夹下面去,数据内容参见资料文件夹,其中数据第十个字段表示好评,中评,差评。0:好评,1:中评,2:差评

部分数据如下:

1 2018-03-15 22:29:06 2018-03-15 22:29:06 我想再来一个 \N 1 3 hello 来就来吧 0 2018-03-14 22:29:03

2 2018-03-15 22:42:08 2018-03-15 22:42:08 好的 \N 1 1 添加一个吧 说走咱就走啊 0 2018-03-14 22:42:04

3 2018-03-15 22:55:21 2018-03-15 22:55:21 haobuhao \N 1 1 nihao 0 2018-03-24 22:55:17

4 2018-03-23 11:15:28 2018-03-23 11:15:28 店家很好 非常好 \N 1 3 666 谢谢 0 2018-03-23 11:15:20

5 2018-03-23 14:52:48 2018-03-23 14:53:22 a'da'd \N 0 4 da'd's 打打操作 0 2018-03-22 14:52:42

6 2018-03-23 14:53:52 2018-03-23 14:53:52 达到 \N 1 4 1313 13132131 0 2018-03-07 14:30:38

7 2018-03-23 14:54:29 2018-03-23 14:54:29 321313 \N 1 4 111 1231231 1 2018-03-06 14:54:24

案例分析

要将订单数据根据评论分类输出到不同的文件中,有两种做法:

- 第一种方法:设置

reducetask个数为2,将好评订单数据与其它评论的订单数据通过分区输出到两个不同的reducetask,然后就可以输出不同文件了 - 第二种方法:使用一个

reducetask即可,通过自定义outputformat即可分类输出数据到不同文件。 - 这两种方法的效果是一样的。我们来演示一下第二种方法。

步骤1:自定义OutputFormat类

package com.jimmy.day10;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.RecordWriter;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

//outputformat类的泛型要跟reduce的输出泛型相一致

//Text:订单数据

//NullWritable:无

public class MyOutputFormat extends FileOutputFormat<Text, NullWritable> {

//重写getRecordWriter()方法,用来获取我们自己定义的RecordWriter

@Override

public RecordWriter<Text, NullWritable> getRecordWriter(TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException {

//创建hdfs文件系统对象

FileSystem fs=FileSystem.get(taskAttemptContext.getConfiguration());

//创建两个输出流

Path goodPath=new Path("file:///F://test2//myof//good.txt");

Path badPath=new Path("file:///F://test2//myof//bad.txt");

FSDataOutputStream goodStream=fs.create(goodPath);

FSDataOutputStream badStream=fs.create(badPath);

//返回一个RecordWriter

return new MyRecordWriter(goodStream,badStream);

}

}

步骤2:定义RecordWriter类

package com.jimmy.day10;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.RecordWriter;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import java.io.IOException;

//该类的泛型要跟MyOutputFormat的泛型一致

public class MyRecordWriter extends RecordWriter<Text, NullWritable> {

//创建两个输入流:

private FSDataOutputStream goodStream=null;

private FSDataOutputStream badStream=null;

//创建有参构造方法,传入两个输出流

public MyRecordWriter(FSDataOutputStream goodStream,FSDataOutputStream badStream){

this.goodStream=goodStream;

this.badStream=badStream;

}

//重写write方法,text是当前行内容

@Override

public void write(Text text, NullWritable nullWritable) throws IOException, InterruptedException {

if(text.toString().split("\t")[9].equals("0")){

goodStream.write(text.toString().getBytes());

goodStream.write("\r\n".getBytes());

}else{

badStream.write(text.toString().getBytes());

badStream.write("\r\n".getBytes());

}

}

//释放资源

@Override

public void close(TaskAttemptContext taskAttemptContext) throws IOException, InterruptedException {

if(badStream !=null){ //badStream初始值为null,如果这里badStream不为null,证明流已经开启,关闭流

badStream.close();

}

if (goodStream != null) {

goodStream.close();

}

}

}

步骤3:定义map逻辑

package com.jimmy.day10;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class MyMap extends Mapper<LongWritable, Text,Text, NullWritable> {

Text kout=new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

kout=value;

context.write(kout,NullWritable.get());

}

}

步骤4:定义reduce逻辑

package com.jimmy.day10;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class MyReduce extends Reducer<Text, NullWritable,Text,NullWritable> {

@Override

protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

context.write(key,NullWritable.get());

}

}

步骤5:定义组装类和main()方法

package com.jimmy.day10;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class Assem extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//获取Hadoop配置对象

Configuration conf = super.getConf();

//获取job实例

Job job = Job.getInstance(conf, "MyOutputFormat");

//设置jar包打包

job.setJarByClass(Assem.class);

//设置输入类:

job.setInputFormatClass(TextInputFormat.class);

//设置输入路径

TextInputFormat.addInputPath(job, new Path("file:///F://test5"));

//设置mapper类

job.setMapperClass(MyMap.class);

//设置mapper类输出的kv对类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

//设置输出类:

job.setOutputFormatClass(MyOutputFormat.class);

//设置输出路径:

MyOutputFormat.setOutputPath(job, new Path("file:///F://test2//myof"));

//设置要使用的Reducer类

job.setReducerClass(MyReduce.class);

// 设置reduce输出的kv对类型:

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//获取事务的信息

//true表示将运行进度等信息及时输出给用户,false的话只是等待作业结束

boolean a = job.waitForCompletion(true);

return a ? 0 : 1;

}

//定义main方法

public static void main(String[] args) throws Exception {

Configuration conf=new Configuration();

int exitCode= ToolRunner.run(conf,new Assem(),args);

System.exit(exitCode);

}

}

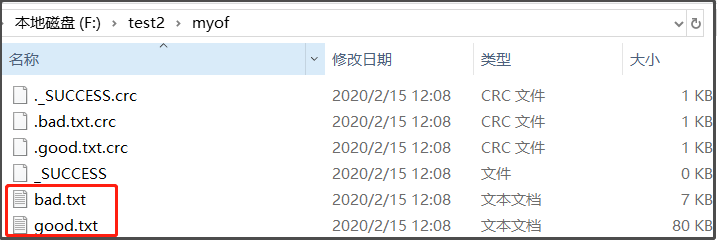

运行结果: