Hadoop (5): HDFS Java API Development

HDFS Java API development

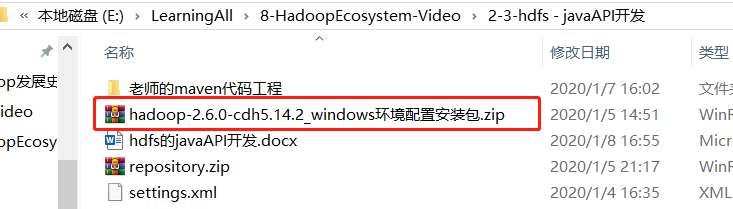

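Installing Hadoop on Windows

- Extract the CDH build of the Hadoop package that runs on Windows. The extraction path must not contain Chinese characters or spaces.

- Configure the Hadoop environment variables on Windows (typically HADOOP_HOME, with its bin directory added to PATH).

- Copy hadoop.dll to C:\Windows\System32.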
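The path rule in the first step above is easy to violate without noticing, so it can help to check it before debugging anything else. The snippet below is a minimal sketch of such a check, not part of Hadoop: the class `InstallPathCheck` and its `problems` method are hypothetical names, and reading the install directory from `HADOOP_HOME` is an assumption about how the environment variables were configured.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Hadoop: checks an install path against the
// "no spaces, no Chinese characters" rule for the extraction directory.
final class InstallPathCheck {

    // Returns the problems found in the path; an empty list means it looks safe.
    static List<String> problems(String path) {
        List<String> found = new ArrayList<>();
        if (path == null || path.isEmpty()) {
            found.add("path is empty");
            return found;
        }
        if (path.indexOf(' ') >= 0) {
            found.add("path contains a space");
        }
        // Any character outside 7-bit ASCII (which includes Chinese characters) is flagged.
        if (path.chars().anyMatch(c -> c > 127)) {
            found.add("path contains non-ASCII characters");
        }
        return found;
    }

    public static void main(String[] args) {
        // Assumption: the install directory is exposed through HADOOP_HOME.
        String home = System.getenv("HADOOP_HOME");
        List<String> found = problems(home);
        if (found.isEmpty()) {
            System.out.println("HADOOP_HOME looks fine: " + home);
        } else {
            System.out.println("HADOOP_HOME problems: " + found);
        }
    }
}
```

The check is deliberately stricter than the rule itself: it rejects every non-ASCII character, not only Chinese ones, which keeps the test simple.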

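Creating the Maven project and importing the jars

Because of licensing issues, the CDH builds of these components are not all published to the central Maven repository. They are hosted on Cloudera's own server instead, so a default Maven setup cannot download them, and you have to add a repository entry that points at the CDH repository yourself. Cloudera's official documentation describes this setup; read it carefully.

The steps below use IDEA as an example:

- Create a Maven project.

- In IDEA, set the local Maven repository to the directory where the repository folder is stored.

- Add the following to the project's pom.xml: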

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0-mr1-cdh5.14.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.14.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0-cdh5.14.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0-cdh5.14.2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/junit/junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>RELEASE</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

<!-- <verbal>true</verbal>-->

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

API operations

Start the virtual machines first and make sure the HDFS cluster is up and running.

Case 1: create a directory on the HDFS cluster

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi a=new HdfsApi();

try {

a.mkdirToHdfs();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void mkdirToHdfs() throws IOException{

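//Create the configuration object

Configuration conf=new Configuration();

//Set the default file system on the configuration object

conf.set("fs.defaultFS","hdfs://node01:8020");//the first argument is the property name, the second is its value

//Obtain the HDFS file system object from conf

FileSystem fs=FileSystem.get(conf);

//Create the directory

fs.mkdirs(new Path("/kobe"));//takes a Path object

//Close the file system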

fs.close();

}

}

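/*

Explanation: "fs.defaultFS","hdfs://node01:8020"

The meaning of fs.defaultFS is documented in core-default.xml on the Hadoop website: https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/core-default.xml

According to that document, fs.defaultFS is: The name of the default file system.

*/

Note: the HDFS file system object does not have to be created the way shown above. It can also be obtained like this:

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://node01:8020"), conf);

//Pass in a java.net.URI object; the URI constructor throws URISyntaxException, which the caller must declare or catch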
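To see what an address such as hdfs://node01:8020 actually carries, it can be taken apart with the JDK's own `java.net.URI`, without any Hadoop classes. The following is a small sketch under that assumption; `DefaultFsUri` and `describe` are hypothetical names used only for illustration. It also shows one way to deal with the checked `URISyntaxException` that `new URI(...)` declares.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: splits a file system address into its URI components.
final class DefaultFsUri {

    // Parses the address and reports scheme, host and port.
    // A malformed address is rethrown as an unchecked IllegalArgumentException.
    static String describe(String address) {
        try {
            URI uri = new URI(address);
            return "scheme=" + uri.getScheme()
                    + ", host=" + uri.getHost()
                    + ", port=" + uri.getPort();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("not a valid URI: " + address, e);
        }
    }

    public static void main(String[] args) {
        // prints "scheme=hdfs, host=node01, port=8020"
        System.out.println(describe("hdfs://node01:8020"));
    }
}
```

When the address has no explicit port, `getPort()` returns -1, so the port should be written out whenever it matters.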

Case 2: upload a local Windows file to HDFS

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi b=new HdfsApi();

try {

b.localToHdfs();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void localToHdfs() throws IOException{

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

fs.copyFromLocalFile(new Path("file:///F://test//aa.txt"),new Path("hdfs://node01:8020//kobe"));

fs.close();

}

}

Case 3: download from HDFS to the local Windows machine

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi c=new HdfsApi();

try {

c.downFromHdfs();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void downFromHdfs() throws IOException{

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

fs.copyToLocalFile(new Path("hdfs://node01:8020//kobe//aa.txt"),new Path("file:///F://test"));

//The local Windows directory file:///F://test must already exist

fs.close();

}

}

Case 4: delete a file or directory on HDFS

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi c=new HdfsApi();

try {

c.deleteFile();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void deleteFile() throws IOException{

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

fs.delete(new Path("hdfs://node01:8020//first"),true);

//Passing true as the second argument deletes recursively through all nested levels

fs.close();

}

}

Case 5: rename a file or directory on HDFS

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi d=new HdfsApi();

try {

d.renameFile();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void renameFile() throws IOException{

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

fs.rename(new Path("hdfs://node01:8020//IDEA.txt"),new Path("hdfs://node01:8020//James.txt"));

fs.close();

}

}

Case 6: list information about all files under an HDFS directory

//src/main/java/T1.java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi f=new HdfsApi();

try {

f.ListFileInfo();

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void ListFileInfo() throws IOException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

RemoteIterator<LocatedFileStatus> lf=fs.listFiles(new Path("hdfs://node01:8020//"),true);

//Passing true as the second argument lists files recursively through all subdirectories

while(lf.hasNext()){

LocatedFileStatus status=lf.next();

System.out.println(status.getPath().getName()); //print the file name

System.out.println(status.getPath()); //print the file path

System.out.println(status.getLen()); //print the file length in bytes

System.out.println(status.getPermission()); //print the file permissions

System.out.println(status.getGroup()); //print the owning group

BlockLocation [] bks=status.getBlockLocations(); //get the block information

for (BlockLocation a:bks){

System.out.print("Block info: "+a+" : "+"Hosts holding the block: "); //print the block info without a newline

String []hosts=a.getHosts(); //get the hosts that hold this block

for (String b:hosts){

System.out.println(b+" "); //print each host that holds the block

}

}

System.out.println("-------------------------------");

}

}

}

/*The output looks roughly like this:

edits.txt

hdfs://node01:8020/edits.txt

19

rw-r--r--

supergroup

Block info: 0,19,node03 : Hosts holding the block: node03

-------------------------------

hJ.txt

hdfs://node01:8020/hJ.txt

19

rw-r--r--

supergroup

Block info: 0,19,node03 : Hosts holding the block: node03

*/

Case 7: upload a local file to HDFS through IO streams

//src/main/java/T1.java

import org.apache.commons.io.IOUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.io.File;

import java.io.FileInputStream;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi g=new HdfsApi();

File inputPath=new File("F://test//em.txt"); //Note: do not prefix this path with file:///

Path outputPath=new Path("hdfs://node01:8020//em3.txt");

try {

g.ioLocalToHdfs1(inputPath,outputPath);

g.ioLocalToHdfs2(inputPath,outputPath);

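//The two methods above have the same effect; the second call overwrites the file written by the first, so one of them is enough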

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

//Method 1: use the IOUtils utility class

public void ioLocalToHdfs1(File inputPath, Path outputPath) throws IOException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

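//Create the input stream

FileInputStream fis=new FileInputStream(inputPath);

//Create the output stream through the HDFS file system object

FSDataOutputStream fos=fs.create(outputPath);

//Copy from the input stream to the output stream

IOUtils.copy(fis,fos);

//Close the resources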

IOUtils.closeQuietly(fos);

IOUtils.closeQuietly(fis);

fs.close();

}

//Method 2: without the IOUtils utility class

public void ioLocalToHdfs2(File inputPath, Path outputPath) throws IOException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

FileInputStream fis=new FileInputStream(inputPath);

FSDataOutputStream fos=fs.create(outputPath); //use the create method

byte[] bArr=new byte[100];

int len;

while ((len=fis.read(bArr))!=-1){

fos.write(bArr,0,len);

}

fos.close();

fis.close();

fs.close();

}

}
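Both upload methods above come down to the same read-into-buffer, write-from-buffer loop, and both close their streams by hand, which leaves them open if the copy throws. The sketch below shows that loop in isolation with try-with-resources, which closes the streams even on failure. It uses in-memory JDK streams as stand-ins for the local file and the HDFS output stream, so it is an illustration of the pattern rather than the author's code; `StreamCopySketch`, `copy` and `roundTrip` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of the buffered copy loop used for stream uploads.
final class StreamCopySketch {

    // Copies everything from in to out and returns the number of bytes copied.
    // bufferSize must be greater than zero.
    static long copy(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int len;
        // read() returns -1 once the end of the stream is reached
        while ((len = in.read(buffer)) != -1) {
            out.write(buffer, 0, len); // write only the bytes that were actually read
            total += len;
        }
        return total;
    }

    // Pushes a string through the copy loop using in-memory streams.
    static String roundTrip(String text, int bufferSize) {
        byte[] data = text.getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // Streams declared here are closed automatically, in reverse order.
        try (InputStream in = new ByteArrayInputStream(data);
             OutputStream out = sink) {
            copy(in, out, bufferSize);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(sink.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // prints "hello hdfs"
        System.out.println(roundTrip("hello hdfs", 4));
    }
}
```

The same `copy` method would accept a `FileInputStream` and the stream returned by `fs.create(...)`, since both are ordinary `InputStream` and `OutputStream` subclasses.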

Case 8: download a file from HDFS to the local machine through IO streams

//src/main/java/T1.java

import org.apache.commons.io.IOUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi g=new HdfsApi();

Path inputPath=new Path("hdfs://node01:8020//em3.txt");

File outputPath=new File("F://test//em4.txt");

try {

g.ioHdfsToLocal(inputPath,outputPath);

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void ioHdfsToLocal(Path inputPath, File outputPath) throws IOException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

//Create the input and output streams

FSDataInputStream fis=fs.open(inputPath); //use the open method

FileOutputStream fos=new FileOutputStream(outputPath);

IOUtils.copy(fis,fos);

IOUtils.closeQuietly(fos);

IOUtils.closeQuietly(fis);

fs.close();

}

}

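Case 9: merge small files through IO streams

This merges all the small files in one directory of the local file system into a single file and uploads it to HDFS. The entries being merged must be regular files, not directories. The example below merges every .txt file in a local directory by concatenating their contents.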

//src/main/java/T1.java

import org.apache.commons.io.IOUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.io.IOException;

public class T1{

public static void main(String[] args) {

HdfsApi g=new HdfsApi();

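Path outputPath=new Path("hdfs://node01:8020//test2.rar"); //merged file on HDFS

Path localDir=new Path("F://test//"); //local directory that holds the small files

try {

g.mergeFile(outputPath,localDir);

} catch (IOException e) {

e.printStackTrace();

}

}

}

class HdfsApi{

public void mergeFile(Path outputPath,Path localDir) throws IOException {

//Create the HDFS file system object

Configuration conf=new Configuration();

conf.set("fs.defaultFS","hdfs://node01:8020");

FileSystem fs=FileSystem.get(conf);

//Create the output stream on HDFS

FSDataOutputStream fos=fs.create(outputPath);

//Create the local file system object

LocalFileSystem lfs=FileSystem.getLocal(new Configuration());

//List the entries in the local directory

FileStatus [] fstatus=lfs.listStatus(localDir);

//Loop over the local files, open an input stream for each one, and copy it into the output stream

for(FileStatus i:fstatus){

Path localPath=i.getPath();

//Skip directories and anything that is not a .txt file

if(!i.isFile()||!localPath.getName().endsWith(".txt")) continue;

//Create the input stream through the open method of the local file system object lfs

FSDataInputStream fis=lfs.open(localPath);

//Copy the stream

IOUtils.copy(fis,fos);

//Close the input stream

IOUtils.closeQuietly(fis);

}

//Close the remaining resources

IOUtils.closeQuietly(fos);

lfs.close();

fs.close();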

}

}
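The merge logic can be tried out without a cluster by writing the merged result to a local file instead of HDFS. The sketch below does that with `java.nio.file` only. Note that `Path` here is `java.nio.file.Path`, not Hadoop's `Path`, and that `LocalMergeSketch`, `mergeTxt` and `demo` are hypothetical names. Sorting the inputs by name is an added assumption so that the merge order is predictable.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical local-only sketch of the small-file merge.
final class LocalMergeSketch {

    // Concatenates every regular *.txt file directly inside dir into target.
    static void mergeTxt(Path dir, Path target) throws IOException {
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir, "*.txt")) {
            for (Path entry : entries) {
                if (Files.isRegularFile(entry)) { // skip directories named like *.txt
                    inputs.add(entry);
                }
            }
        }
        Collections.sort(inputs); // fixed order: sorted by path
        try (OutputStream out = Files.newOutputStream(target)) {
            for (Path input : inputs) {
                Files.copy(input, out); // append this file's bytes to the target
            }
        }
    }

    // Builds a throwaway directory, merges it, and returns the merged text.
    // The temporary directory is left behind for inspection.
    static String demo() {
        try {
            Path dir = Files.createTempDirectory("merge-demo");
            Files.write(dir.resolve("a.txt"), "A\n".getBytes(StandardCharsets.UTF_8));
            Files.write(dir.resolve("b.txt"), "B\n".getBytes(StandardCharsets.UTF_8));
            Files.write(dir.resolve("c.log"), "skipped\n".getBytes(StandardCharsets.UTF_8));
            Files.createDirectory(dir.resolve("d.txt"));
            Path target = dir.resolve("merged.out");
            mergeTxt(dir, target);
            return new String(Files.readAllBytes(target), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.print(demo());
    }
}
```

Swapping the local `OutputStream` for the stream returned by `fs.create(...)` would turn this into the upload variant, since `Files.copy` accepts any `OutputStream`.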