Spark (5): Developing Spark Programs with IDEA

Developing Spark programs with IDEA

Create a Maven project

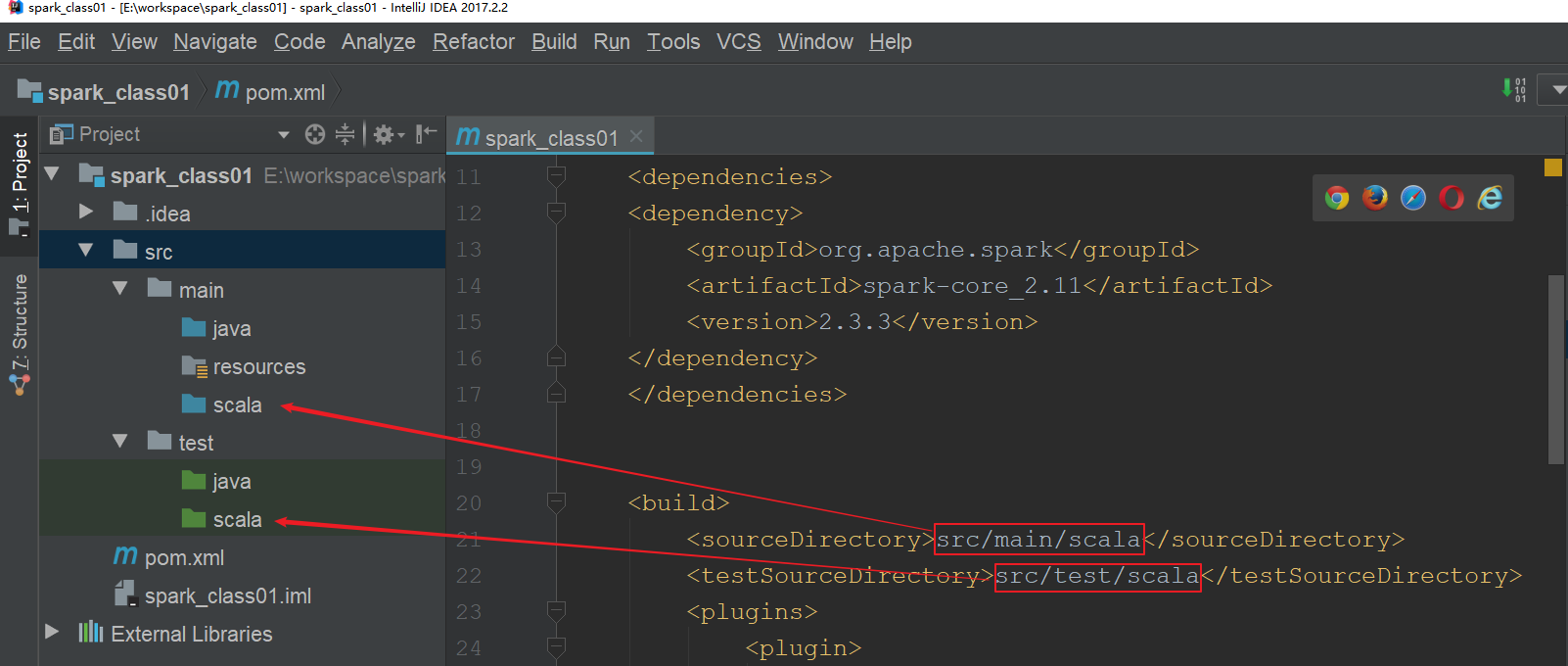

Create the src/main/scala and src/test/scala directories.
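You can create the directories in IDEA's Project view. Alternatively, here is a minimal Scala sketch that creates the same two directories with `java.nio.file`. The object name `CreateScalaDirs` is hypothetical, and the project root defaults to the current directory (an assumption).

```scala
import java.nio.file.{Files, Path, Paths}

// Hypothetical helper: creates the Scala source roots used by the pom's
// <sourceDirectory> and <testSourceDirectory> settings
object CreateScalaDirs {
  val scalaSourceRoots: Seq[String] = Seq("src/main/scala", "src/test/scala")

  // Creates each source root under projectRoot; existing directories are left untouched
  def createDirs(projectRoot: Path): Seq[Path] =
    scalaSourceRoots.map { rel =>
      // createDirectories also creates missing parents and does not fail if the directory exists
      Files.createDirectories(projectRoot.resolve(rel))
    }

  def main(args: Array[String]): Unit = {
    // Assumption: the project root is passed as the first argument, else the current directory
    val root = Paths.get(if (args.nonEmpty) args(0) else ".")
    createDirs(root).foreach(p => println(s"ensured: $p"))
  }
}
```

Running it twice is harmless, because `Files.createDirectories` leaves existing directories alone.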

Add the dependencies and build plugins to pom.xml

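Notes:

- After creating the Maven project, configure IDEA to use your own Maven installation, and make sure its settings.xml sets the Aliyun mirror.

- If scala-maven-plugin or maven-shade-plugin cannot be downloaded, find it online, download it manually, and put it in the matching directory of your local repository. Create the directory if it does not exist.

- For example, if scala-maven-plugin cannot be imported, put the downloaded plugin jar in this directory: E:\BaiduNetdiskDownload\repository\net\alchim31\maven\scala-maven-plugin. Maven looks for it inside a subdirectory named after the version, here 3.2.2.

- A useful site for looking up Maven dependencies: https://mvnrepository.com/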

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.11</artifactId>
        <version>2.3.3</version>
    </dependency>
</dependencies>

<build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
        <!-- Compiles the Scala sources in src/main/scala and src/test/scala -->
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.2</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                    <configuration>
                        <args>
                            <arg>-dependencyfile</arg>
                            <arg>${project.build.directory}/.scala_dependencies</arg>
                        </args>
                    </configuration>
                </execution>
            </executions>
        </plugin>
        <!-- Builds a fat jar during the package phase; the unshaded jar is kept as original-*.jar -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>2.4.3</version>
            <executions>
                <execution>
                    <phase>package</phase>
                    <goals>
                        <goal>shade</goal>
                    </goals>
                    <configuration>
                        <filters>
                            <filter>
                                <artifact>*:*</artifact>
                                <!-- Strip signature files of signed dependencies; leftover signatures make the shaded jar fail with an invalid-signature error -->
                                <excludes>
                                    <exclude>META-INF/*.SF</exclude>
                                    <exclude>META-INF/*.DSA</exclude>
                                    <exclude>META-INF/*.RSA</exclude>
                                </excludes>
                            </filter>
                        </filters>
                        <transformers>
                            <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                <!-- Main-Class of the jar manifest; may stay empty because spark-submit is given the main class explicitly -->
                                <mainClass></mainClass>
                            </transformer>
                        </transformers>
                    </configuration>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```
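The `_2.11` suffix in `spark-core_2.11` is the Scala binary version that Spark 2.3.3 was built against. The Scala SDK configured in IDEA should therefore also be 2.11.x. Below is a small sketch that compares the suffix with the Scala library on the classpath. `ScalaVersionCheck` and its helpers are hypothetical names, and the artifact ID is hard-coded for illustration.

```scala
// Hypothetical check that the Scala on the classpath matches the artifact's _2.xx suffix
object ScalaVersionCheck {
  // Extracts the Scala binary version from an artifact ID such as "spark-core_2.11"
  def binaryVersionOf(artifactId: String): Option[String] = {
    val idx = artifactId.lastIndexOf('_')
    if (idx >= 0 && idx < artifactId.length - 1) Some(artifactId.substring(idx + 1)) else None
  }

  // Reduces a full version such as "2.11.12" to its binary version "2.11"
  def binaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    val expected = binaryVersionOf("spark-core_2.11")
    // Version of the Scala library actually on the classpath, e.g. "2.11.12"
    val actual = binaryVersion(scala.util.Properties.versionNumberString)
    if (expected.contains(actual)) println(s"OK: Scala $actual matches spark-core_2.11")
    else println(s"Mismatch: classpath has Scala $actual, artifact expects ${expected.getOrElse("?")}")
  }
}
```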

Spark word count (Scala / local run)

On Windows, create the file "F:\test\aa.txt" with the following content:

```text
hadoop spark spark
flume hadoop flink
hive spark hadoop
spark hbase
```

Create a Scala object and write the code:

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkWordCount {
  def main(args: Array[String]): Unit = {
    // Build the SparkConf: set the application name and the master address
    // (local[2] runs Spark locally with 2 threads)
    val sConf: SparkConf = new SparkConf().setAppName("WordCount").setMaster("local[2]")
    // Build the SparkContext. It is the entry point of every Spark program,
    // and internally it creates the DAGScheduler and TaskScheduler
    val sc = new SparkContext(sConf)
    // Set the log output level
    sc.setLogLevel("warn")
    // Read the data file
    val data: RDD[String] = sc.textFile("F:\\test\\aa.txt")
    // Split each line to get all words
    val words: RDD[String] = data.flatMap(x => x.split(" "))
    // Count each word as 1
    val wordAndOne: RDD[(String, Int)] = words.map(x => (x, 1))
    // Add up the 1s of identical words
    val res: RDD[(String, Int)] = wordAndOne.reduceByKey((x, y) => x + y)
    // Sort by count in descending order; the second parameter defaults to true (ascending),
    // false means descending
    val res_sort: RDD[(String, Int)] = res.sortBy(x => x._2, false)
    // Collect the results to the driver
    val res_coll: Array[(String, Int)] = res_sort.collect()
    // Print
    res_coll.foreach(println)
    // Stop the SparkContext
    sc.stop()
  }
}
```
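`reduceByKey` does not send every `(word, 1)` pair across the network. Spark first combines the values for each key inside every partition (map-side combine), then merges those partial results after the shuffle. The plain-Scala sketch below imitates these two steps on in-memory collections. It illustrates the idea only and is not Spark's implementation. `ReduceByKeySketch` and its helpers are hypothetical names, and the two "partitions" are made up from the sample data.

```scala
// Hypothetical illustration of reduceByKey's two phases on plain collections
object ReduceByKeySketch {
  // Each inner Seq stands in for one RDD partition of (word, 1) pairs
  type Partition = Seq[(String, Int)]

  // Phase 1 (map side): combine the values of each key within one partition
  def combineLocally(p: Partition, f: (Int, Int) => Int): Map[String, Int] =
    p.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2).reduce(f) }

  // Phase 2 (reduce side): merge the per-partition partial results with the same function
  def reduceByKey(parts: Seq[Partition], f: (Int, Int) => Int): Map[String, Int] =
    parts.map(combineLocally(_, f)).foldLeft(Map.empty[String, Int]) { (acc, partial) =>
      partial.foldLeft(acc) { case (a, (k, v)) =>
        a.updated(k, a.get(k).map(f(_, v)).getOrElse(v))
      }
    }

  def main(args: Array[String]): Unit = {
    // Two hypothetical partitions of the sample file's words
    val p0 = Seq("hadoop", "spark", "spark", "flume", "hadoop", "flink").map((_, 1))
    val p1 = Seq("hive", "spark", "hadoop", "spark", "hbase").map((_, 1))
    println(reduceByKey(Seq(p0, p1), _ + _))
  }
}
```

Because the same function is applied in both phases, it must be associative, as `_ + _` is.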

The output is as follows (words with the same count may appear in a different order):

```text
(spark,4)
(hadoop,3)
(hive,1)
(flink,1)
(flume,1)
(hbase,1)
```
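You can cross-check these counts without Spark by running the same steps on a plain Scala collection. This sketch (`LocalWordCountCheck` is a hypothetical name) also sorts ties by word. The Spark job above does not do this, so here words with equal counts come out in a fixed alphabetical order.

```scala
// Hypothetical cross-check of the word count using Scala collections instead of RDDs
object LocalWordCountCheck {
  def wordCount(lines: Seq[String]): Seq[(String, Int)] =
    lines
      .flatMap(_.split(" "))                             // like data.flatMap(x => x.split(" "))
      .map((_, 1))                                       // like words.map(x => (x, 1))
      .groupBy(_._1)
      .map { case (w, ones) => (w, ones.map(_._2).sum) } // like reduceByKey((x, y) => x + y)
      .toSeq
      .sortBy { case (w, n) => (-n, w) }                 // count descending, then word ascending

  def main(args: Array[String]): Unit = {
    // Same content as F:\test\aa.txt above
    val lines = Seq("hadoop spark spark", "flume hadoop flink", "hive spark hadoop", "spark hbase")
    wordCount(lines).foreach(println)
  }
}
```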

Spark word count (Scala / cluster run)

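The code for the cluster run is very close to the local version. Compared with the local code above, the changes are:

- new SparkConf().setAppName("WordCount") no longer calls .setMaster("local[2]"); the master is supplied by spark-submit

- sc.textFile(args(0)): the input path is passed in as a program argument

- The results are no longer collected to the driver, i.e. there is no .collect()

- res_sort.saveAsTextFile(args(1)): the output path is passed in as a program argument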
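The cluster version reads `args(0)` and `args(1)`, so launching it with too few arguments fails with an `ArrayIndexOutOfBoundsException`. A small guard like the one below could be called at the top of `main` to print a usage message instead. `WordCountArgs` and `JobPaths` are hypothetical names and not part of the original program.

```scala
// Hypothetical argument check for the cluster word count
object WordCountArgs {
  // Input and output paths of the job
  final case class JobPaths(input: String, output: String)

  // Returns the two paths, or a usage message if the arguments are missing or empty
  def parse(args: Array[String]): Either[String, JobPaths] =
    args match {
      case Array(in, out) if in.nonEmpty && out.nonEmpty => Right(JobPaths(in, out))
      case _ => Left("Usage: SparkWordCount <inputPath> <outputPath>")
    }

  def main(args: Array[String]): Unit =
    parse(args) match {
      case Right(p) => println(s"input=${p.input}, output=${p.output}")
      case Left(usage) =>
        System.err.println(usage)
        sys.exit(1)
    }
}
```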

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkWordCount {
  def main(args: Array[String]): Unit = {
    // No setMaster here: the master URL is supplied by spark-submit --master
    val sConf: SparkConf = new SparkConf().setAppName("WordCount")
    val sc = new SparkContext(sConf)
    sc.setLogLevel("warn")
    // args(0): input path
    val data: RDD[String] = sc.textFile(args(0))
    val words: RDD[String] = data.flatMap(x => x.split(" "))
    val wordAndOne: RDD[(String, Int)] = words.map(x => (x, 1))
    val res: RDD[(String, Int)] = wordAndOne.reduceByKey((x, y) => x + y)
    val res_sort: RDD[(String, Int)] = res.sortBy(x => x._2, false)
    // args(1): output directory; one part file is written per partition
    res_sort.saveAsTextFile(args(1))
    // Release the cluster resources
    sc.stop()
  }
}
```

Package the project into a jar and submit it to the cluster:

```shell
[hadoop@node01 tmp]$ spark-submit --master spark://node01:7077,node02:7077 \
    --class SparkWordCount \
    --executor-memory 1g \
    --total-executor-cores 2 \
    /tmp/original-MySparkDemo-1.0-SNAPSHOT.jar \
    /words.txt /spark_out1
```

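Notes:

- Before running the jar, create the data file /words.txt in HDFS.

- /words.txt is an HDFS path, because Spark was integrated with HDFS earlier.

- /spark_out1 is also an HDFS path. It must not exist yet, otherwise saveAsTextFile fails.

- /tmp/original-MySparkDemo-1.0-SNAPSHOT.jar is a local Linux path. It is the unshaded jar that maven-shade-plugin keeps next to the fat jar. It works here because the cluster already provides the Spark classes.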
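To make the argument order of the spark-submit call explicit, the sketch below rebuilds the same command from its parts. It only builds the argument list and launches nothing. `SubmitCommand` and `SubmitOptions` are hypothetical names. Everything after the jar path reaches `main` as `args(0)`, `args(1)`, and so on.

```scala
// Hypothetical builder for the spark-submit command shown above (does not run anything)
object SubmitCommand {
  final case class SubmitOptions(
      master: String,
      mainClass: String,
      executorMemory: String,
      totalExecutorCores: Int,
      jar: String,
      appArgs: Seq[String])

  // Options must precede the jar; application arguments follow it
  def toArgs(o: SubmitOptions): Seq[String] =
    Seq(
      "spark-submit",
      "--master", o.master,                                     // standalone master URL(s)
      "--class", o.mainClass,                                   // class whose main method runs
      "--executor-memory", o.executorMemory,                    // memory per executor
      "--total-executor-cores", o.totalExecutorCores.toString,  // cores for all executors in total
      o.jar
    ) ++ o.appArgs                                              // become args(0), args(1), ...

  def main(args: Array[String]): Unit = {
    val opts = SubmitOptions(
      "spark://node01:7077,node02:7077", "SparkWordCount", "1g", 2,
      "/tmp/original-MySparkDemo-1.0-SNAPSHOT.jar", Seq("/words.txt", "/spark_out1"))
    println(toArgs(opts).mkString(" "))
  }
}
```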

Spark word count (Java / local run)

Code:

```java
package com.kaikeba;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.api.java.function.FlatMapFunction;
import org.apache.spark.api.java.function.Function2;
import org.apache.spark.api.java.function.PairFunction;
import scala.Tuple2;

import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Word count program for Spark, written in Java
public class JavaWordCount {
    public static void main(String[] args) {
        // 1. Create the SparkConf object
        SparkConf sparkConf = new SparkConf().setAppName("JavaWordCount").setMaster("local[2]");
        // 2. Build the JavaSparkContext object
        JavaSparkContext jsc = new JavaSparkContext(sparkConf);
        // 3. Read the data file
        JavaRDD<String> data = jsc.textFile("E:\\words.txt");
        // 4. Split each line to get all words; scala: data.flatMap(x=>x.split(" "))
        JavaRDD<String> wordsJavaRDD = data.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public Iterator<String> call(String line) throws Exception {
                String[] words = line.split(" ");
                return Arrays.asList(words).iterator();
            }
        });
        // 5. Count each word as 1; scala: wordsJavaRDD.map(x=>(x,1))
        JavaPairRDD<String, Integer> wordAndOne = wordsJavaRDD.mapToPair(new PairFunction<String, String, Integer>() {
            @Override
            public Tuple2<String, Integer> call(String word) throws Exception {
                return new Tuple2<String, Integer>(word, 1);
            }
        });
        // 6. Add up the 1s of identical words; scala: wordAndOne.reduceByKey((x,y)=>x+y)
        JavaPairRDD<String, Integer> result = wordAndOne.reduceByKey(new Function2<Integer, Integer, Integer>() {
            @Override
            public Integer call(Integer v1, Integer v2) throws Exception {
                return v1 + v2;
            }
        });
        // 7. Sort by count in descending order:
        //    (word, count) --> (count, word), sortByKey(false) --> (word, count)
        JavaPairRDD<Integer, String> reverseJavaRDD = result.mapToPair(new PairFunction<Tuple2<String, Integer>, Integer, String>() {
            @Override
            public Tuple2<Integer, String> call(Tuple2<String, Integer> t) throws Exception {
                return new Tuple2<Integer, String>(t._2, t._1);
            }
        });
        JavaPairRDD<String, Integer> sortedRDD = reverseJavaRDD.sortByKey(false).mapToPair(new PairFunction<Tuple2<Integer, String>, String, Integer>() {
            @Override
            public Tuple2<String, Integer> call(Tuple2<Integer, String> t) throws Exception {
                return new Tuple2<String, Integer>(t._2, t._1);
            }
        });
        // 8. Collect and print
        List<Tuple2<String, Integer>> finalResult = sortedRDD.collect();
        for (Tuple2<String, Integer> t : finalResult) {
            System.out.println("word: " + t._1 + "\tcount: " + t._2);
        }
        jsc.stop();
    }
}
```
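The Java code sorts by count in three steps: it swaps each pair, sorts by key with `sortByKey(false)`, then swaps back. Below, the same three steps run on a plain Scala collection next to the one-step form that the Scala version uses with `res.sortBy(x=>x._2,false)`. `SwapSortSwap` is a hypothetical name, and the data are in-memory collections rather than RDDs.

```scala
// Hypothetical comparison of swap-sort-swap with a direct sort by value
object SwapSortSwap {
  // Mirrors the Java steps: (word, count) -> (count, word) -> sort desc by key -> (word, count)
  def sortByCountDesc(counts: Seq[(String, Int)]): Seq[(String, Int)] =
    counts
      .map(_.swap)                          // (count, word)
      .sortBy(_._1)(Ordering[Int].reverse)  // like sortByKey(false)
      .map(_.swap)                          // back to (word, count)

  // One-step equivalent, like res.sortBy(x => x._2, false)
  def sortByCountDescDirect(counts: Seq[(String, Int)]): Seq[(String, Int)] =
    counts.sortBy(_._2)(Ordering[Int].reverse)

  def main(args: Array[String]): Unit = {
    val counts = Seq(("hive", 1), ("spark", 4), ("hadoop", 3))
    println(sortByCountDesc(counts))
    println(sortByCountDescDirect(counts))
  }
}
```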