A Brief Look at the nuScenes Dataset

nuScenes was collected mainly in Boston and Singapore. The data-collection vehicle is equipped with 1 spinning LIDAR, 5 long-range RADAR sensors and 6 cameras.

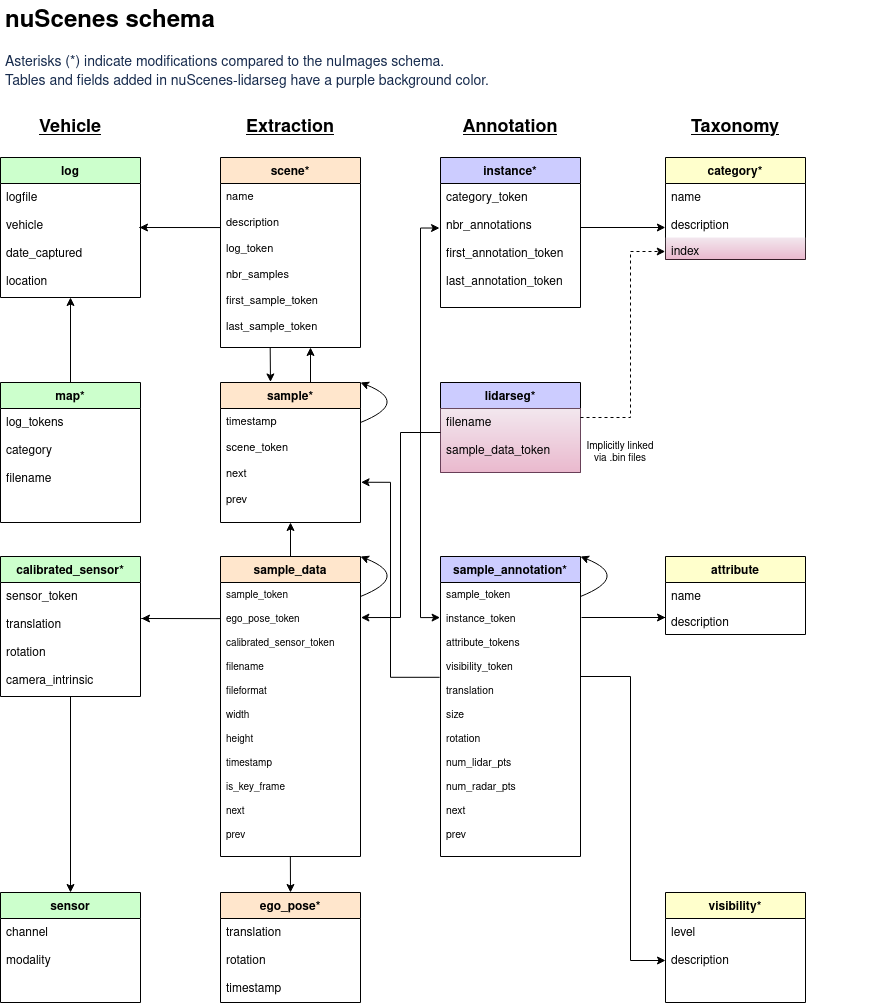

I. Dataset structure (figure borrowed from https://zhuanlan.zhihu.com/p/463537059)

From left to right, the four columns are Vehicle (the vehicle used to collect the data), Extraction (what is extracted from the recordings), Annotation and Taxonomy.

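From top to bottom, each row holds objects at a different level. Take Extraction as an example: a scene is a 20 s long sequence of consecutive frames extracted from a log, and a sample is an annotated keyframe at 2 Hz, i.e. one frame taken from a scene. A sample_data goes one step further and represents the sensor data at a given timestamp, such as an image, a point cloud or radar data. Every sample_data is linked to a sample. If its is_key_frame value is True, its timestamp should be very close to that of the keyframe it belongs to, so its data also closely reflects the scene at the keyframe time (my own understanding).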

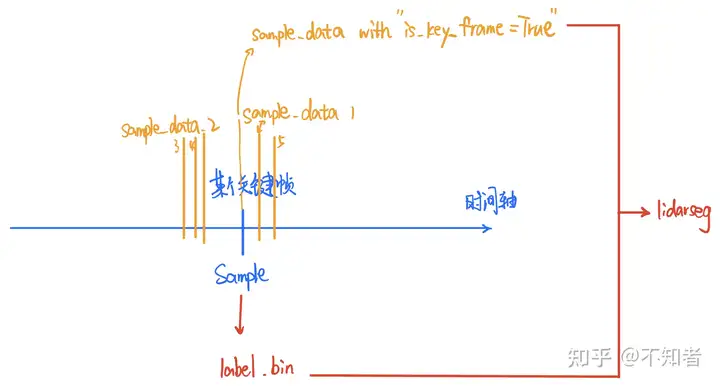
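To make the scene/sample relationship concrete, here is a minimal sketch that walks from a scene to its samples. It uses hand-written dictionaries shaped like the nuScenes tables (the field names first_sample_token, last_sample_token, next, prev, timestamp and nbr_samples follow the nuScenes schema; the tokens and values are made up), so it runs without the dataset. With the devkit you would fetch each record with nusc.get('sample', token) instead of indexing a dict.

```python
# Toy records shaped like the nuScenes `scene` and `sample` tables.
# Field names follow the nuScenes schema; tokens and timestamps are made up.
scene = {"token": "scene-demo", "nbr_samples": 3,
         "first_sample_token": "s1", "last_sample_token": "s3"}
samples = {
    "s1": {"token": "s1", "timestamp": 1_000_000, "prev": "", "next": "s2"},
    "s2": {"token": "s2", "timestamp": 1_500_000, "prev": "s1", "next": "s3"},
    "s3": {"token": "s3", "timestamp": 2_000_000, "prev": "s2", "next": ""},
}


def iter_scene_samples(scene, samples):
    """Yield the samples of one scene in time order by following the `next` links."""
    token = scene["first_sample_token"]
    while token:  # an empty string marks the end of the scene
        sample = samples[token]
        yield sample
        token = sample["next"]


chain = list(iter_scene_samples(scene, samples))
tokens = [s["token"] for s in chain]
# Keyframes come at 2 Hz, so consecutive timestamps (microseconds) differ by about 0.5 s.
gaps_s = [(b["timestamp"] - a["timestamp"]) / 1e6 for a, b in zip(chain, chain[1:])]
print(tokens, gaps_s)  # ['s1', 's2', 's3'] [0.5, 0.5]
```

The same prev/next pattern is used by other linked tables (sample_annotation, sample_data), so one small loop covers them all.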

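So a higher-level object can contain several lower-level objects; in other words, it carries information at a coarser granularity. For example, a scene contains several samples (one of the scene's fields is nbr_samples = number of samples). The same holds for sample and sample_data: a sample only marks a sampling instant, while a sample_data describes what a particular sensor actually captured at a given moment. Around the keyframe of each sample, the sensors record sample_data at many timestamps. The figure I drew below illustrates this.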

Figure: sample vs. sample_data, and lidarseg
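The keyframe/sweep split can be sketched with a few toy records. This is only an illustration: the records are made up, each one carries a channel field for convenience (in the raw tables the channel is reached through calibrated_sensor and then sensor), and I assume every sweep's sample_token points to the sample it is grouped with here. keyframe_data rebuilds something shaped like a sample's data dict (channel to keyframe sample_data token).

```python
from collections import Counter

# Made-up sample_data records for two samples; `channel` is attached for convenience.
sample_data = [
    {"token": "lt1", "sample_token": "s1", "channel": "LIDAR_TOP", "is_key_frame": True},
    {"token": "cf1", "sample_token": "s1", "channel": "CAM_FRONT", "is_key_frame": True},
    {"token": "lt1a", "sample_token": "s1", "channel": "LIDAR_TOP", "is_key_frame": False},
    {"token": "lt1b", "sample_token": "s1", "channel": "LIDAR_TOP", "is_key_frame": False},
    {"token": "lt2", "sample_token": "s2", "channel": "LIDAR_TOP", "is_key_frame": True},
]


def keyframe_data(sample_data, sample_token):
    """Rebuild a sample['data']-style dict: channel -> token of that channel's keyframe record."""
    return {sd["channel"]: sd["token"] for sd in sample_data
            if sd["sample_token"] == sample_token and sd["is_key_frame"]}


def sweep_counts(sample_data, sample_token):
    """Count the non-keyframe records (sweeps) per channel that point to one sample."""
    return Counter(sd["channel"] for sd in sample_data
                   if sd["sample_token"] == sample_token and not sd["is_key_frame"])


print(keyframe_data(sample_data, "s1"))  # {'LIDAR_TOP': 'lt1', 'CAM_FRONT': 'cf1'}
print(sweep_counts(sample_data, "s1"))   # Counter({'LIDAR_TOP': 2})
```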

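Next I explain (or rather, translate) each part of the diagram. Unlike the order used in the official documentation, I go through each row from left to right.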

Level 1

log: the log from which the data was extracted. It records the log file, the vehicle, the capture date (date_captured) and the location.

scene: a recorded scene, i.e. a 20 s sequence of consecutive frames extracted from a log.

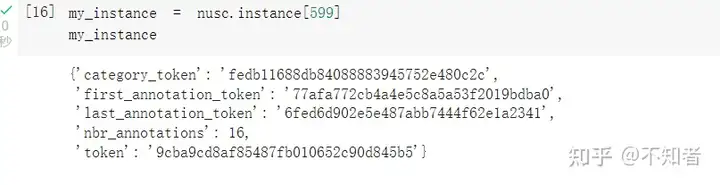

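instance: an annotated object instance, e.g. one particular vehicle tracked over time. It contains the object category, the number of annotations, and the tokens of the first and last annotations.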

category: the taxonomy of object categories, such as human and vehicle, 23 classes in total. Subcategories are written as dotted paths, e.g. human.pedestrian.adult.
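Because category names are dotted paths, grouping by a higher level (for example, merging all human.* classes) is just string splitting. A small sketch; the four names below are real nuScenes categories, but the helper functions are my own.

```python
def category_path(name):
    """Split a dotted category name such as 'human.pedestrian.adult' into its levels."""
    return name.split(".")


def group_by_top_level(names):
    """Group category names by their first level (e.g. 'human', 'vehicle')."""
    groups = {}
    for name in names:
        groups.setdefault(category_path(name)[0], []).append(name)
    return groups


names = ["human.pedestrian.adult", "human.pedestrian.child", "vehicle.car", "vehicle.truck"]
print(category_path(names[0]))   # ['human', 'pedestrian', 'adult']
print(group_by_top_level(names))
```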

Level 2

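map: map data, stored as binary semantic masks seen from a top-down view. It contains category (the map category) and filename (the path of the file that stores the map mask).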

sample: an annotated keyframe at 2 Hz, i.e. one frame taken from a scene. It contains a timestamp, the corresponding scene_token, and the tokens of the next and previous samples.

lidarseg: the mapping between segmentation labels and point clouds. filename is the name of the .bin file that stores the nuScenes-lidarseg labels as a numpy array. sample_data_token is the token of the sample_data that this label file annotates; that sample_data is a lidar keyframe (is_key_frame = True).
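Reading such a label file needs only numpy. A minimal sketch, assuming (as I understand the format) that the file is a flat uint8 array with one class index per point of the corresponding lidar sweep, and that there are 32 lidarseg classes; the demo writes a synthetic file with made-up labels instead of reading a real one.

```python
import os
import tempfile

import numpy as np


def load_lidarseg_labels(path):
    """Read a lidarseg .bin file: a flat uint8 array with one class index per lidar point."""
    return np.fromfile(path, dtype=np.uint8)


def label_histogram(labels, num_classes=32):
    """Number of points per class index (num_classes=32 assumes the lidarseg label set)."""
    return np.bincount(labels, minlength=num_classes)


# Demo on a synthetic file with made-up labels for 6 points.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "fake_lidarseg.bin")
    np.array([0, 17, 17, 24, 24, 24], dtype=np.uint8).tofile(path)
    labels = load_lidarseg_labels(path)

hist = label_histogram(labels)
print(labels.tolist(), hist[17], hist[24])  # [0, 17, 17, 24, 24, 24] 2 3
```

The label array should be as long as the point cloud, so labels[i] belongs to point i.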

Level 3

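calibrated_sensor: the calibration of a particular sensor as mounted on a particular vehicle. It contains translation and rotation (the sensor's position and orientation relative to the ego vehicle body frame) and camera_intrinsic (the camera intrinsic matrix, empty for sensors that are not cameras).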
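Here is a sketch of how these fields are typically used: rotate and translate points from the sensor frame into the ego frame, invert that to go back, and project camera-frame points with the 3x3 intrinsic matrix. It is written with plain numpy (quaternions in the nuScenes order w, x, y, z). The calibration values are made up, and the identity rotation only keeps the arithmetic easy; a real camera calibration includes a rotation that points the camera's optical axis (z) forward.

```python
import numpy as np


def quat_to_rot(q):
    """Rotation matrix from a unit quaternion given as [w, x, y, z] (nuScenes order)."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def sensor_to_ego(points, calib):
    """(3, N) points in the sensor frame -> (3, N) points in the ego frame."""
    R = quat_to_rot(calib["rotation"])
    t = np.asarray(calib["translation"], dtype=float).reshape(3, 1)
    return R @ points + t


def ego_to_sensor(points, calib):
    """Inverse of sensor_to_ego."""
    R = quat_to_rot(calib["rotation"])
    t = np.asarray(calib["translation"], dtype=float).reshape(3, 1)
    return R.T @ (points - t)


def project_to_image(points_cam, K):
    """Pinhole projection of (3, N) camera-frame points; assumes depth z > 0."""
    uvw = np.asarray(K, dtype=float) @ points_cam
    return uvw[:2] / uvw[2:3]


# Made-up calibration: camera 1.5 m above the ego origin, identity rotation.
calib = {"translation": [0.0, 0.0, 1.5], "rotation": [1.0, 0.0, 0.0, 0.0],
         "camera_intrinsic": [[1000.0, 0.0, 800.0], [0.0, 1000.0, 450.0], [0.0, 0.0, 1.0]]}
p_ego = np.array([[1.0], [0.5], [11.5]])
p_cam = ego_to_sensor(p_ego, calib)                       # [[1.0], [0.5], [10.0]]
uv = project_to_image(p_cam, calib["camera_intrinsic"])  # [[900.0], [500.0]]
print(uv.ravel())
```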

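sample_data: sensor data at a given timestamp, such as an image, a point cloud or radar data. It contains ego_pose_token (pointing to an ego_pose), calibrated_sensor_token (pointing to a calibrated_sensor record), the path of the data file, the timestamp, is_key_frame, and so on.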

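sample_annotation: the annotation of one object in one sample. It contains the corresponding sample token, instance token, attribute tokens and visibility token, the bounding box center (translation), size (width, length, height) and rotation, the number of lidar points inside the box, and the tokens of the next and previous annotations.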
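From the center and size you can rebuild the 8 box corners. A simplified sketch, assuming the box is rotated only about the vertical axis (yaw) and that size is ordered width, length, height, with length along the box's heading; the devkit's own route is nusc.get_box(token).corners(), which uses the full quaternion. The example values are made up.

```python
import numpy as np


def box_corners(center, size, yaw):
    """(3, 8) corners of a box with center (x, y, z), size (w, l, h) and heading yaw in radians."""
    w, l, h = size
    # Corners in the box frame: x along the length, y along the width, z up.
    x = l / 2 * np.array([1, 1, 1, 1, -1, -1, -1, -1])
    y = w / 2 * np.array([1, -1, -1, 1, 1, -1, -1, 1])
    z = h / 2 * np.array([1, 1, -1, -1, 1, 1, -1, -1])
    corners = np.vstack([x, y, z])
    c, s = np.cos(yaw), np.sin(yaw)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])  # rotation about z
    return R @ corners + np.asarray(center, dtype=float).reshape(3, 1)


corners = box_corners([10.0, 0.0, 1.0], [2.0, 4.0, 1.5], 0.0)
print(corners.min(axis=1), corners.max(axis=1))  # x spans 8..12, y -1..1, z 0.25..1.75
```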

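attribute: a property of an instance that can change while its category stays the same, describing the object's current state, such as moving or stopped. It has three keys (token, name, description). Example: a vehicle being parked/stopped/moving, and whether or not a bicycle has a rider.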

Level 4

sensor: a sensor type. It contains the channel and the modality ({camera, lidar, radar}).

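ego_pose: the pose of the data-collection vehicle at a particular timestamp, given with respect to the global coordinate system of the log's map. It is produced by a localization algorithm based on lidar maps (see the nuScenes paper). It contains translation (the coordinate-system origin x, y, z in meters), rotation (the orientation as a quaternion w, x, y, z) and the timestamp.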
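The ego pose works like the sensor calibration one level up: it maps points from the ego frame into the global map frame. A numpy sketch with a made-up pose, assuming the ego frame has x pointing forward; quat_to_yaw extracts the heading, which is often all you need in a bird's-eye view.

```python
import numpy as np


def quat_to_rot(q):
    """Rotation matrix from a unit quaternion [w, x, y, z]."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def yaw_to_quat(yaw):
    """[w, x, y, z] quaternion for a rotation of `yaw` radians about the vertical axis."""
    return [np.cos(yaw / 2), 0.0, 0.0, np.sin(yaw / 2)]


def quat_to_yaw(q):
    """Heading (rotation about z) of a [w, x, y, z] quaternion."""
    w, x, y, z = q
    return np.arctan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))


def ego_to_global(points, pose):
    """(3, N) ego-frame points -> (3, N) global-frame points."""
    R = quat_to_rot(pose["rotation"])
    t = np.asarray(pose["translation"], dtype=float).reshape(3, 1)
    return R @ points + t


def global_to_ego(points, pose):
    """Inverse of ego_to_global."""
    R = quat_to_rot(pose["rotation"])
    t = np.asarray(pose["translation"], dtype=float).reshape(3, 1)
    return R.T @ (points - t)


# Made-up pose: ego at (100, 200, 0) in the map, heading 90 degrees (facing global +y).
pose = {"translation": [100.0, 200.0, 0.0], "rotation": yaw_to_quat(np.pi / 2)}
ahead = np.array([[1.0], [0.0], [0.0]])  # 1 m in front of the ego vehicle
print(ego_to_global(ahead, pose).ravel())  # approximately [100. 201. 0.]
```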

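visibility: how visible an annotation is, i.e. the fraction of the object visible across all 6 camera images, binned into four levels: 0-40%, 40-60%, 60-80% and 80-100%.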
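Mapping a visible fraction to one of the four bins is a simple threshold lookup. A sketch, assuming the visibility tokens "1" to "4" with level names v0-40 to v80-100; how exact boundary values (such as 0.4) are assigned is my own choice here (they go to the lower bin), not something the dataset specifies.

```python
# (token, level name, upper bound of the visible fraction)
VISIBILITY_BINS = [("1", "v0-40", 0.4), ("2", "v40-60", 0.6),
                   ("3", "v60-80", 0.8), ("4", "v80-100", 1.0)]


def visibility_token(fraction):
    """Map a visible fraction in [0, 1] to a visibility token; boundaries go to the lower bin."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    for token, _level, upper in VISIBILITY_BINS:
        if fraction <= upper:
            return token


print(visibility_token(0.5))   # 2
print(visibility_token(0.95))  # 4
```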

II. Worked examples

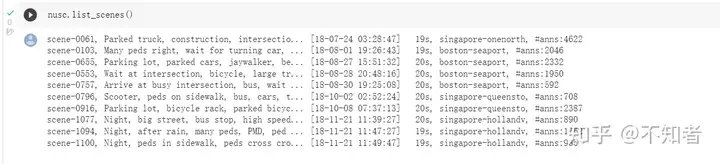

Scenes:

After downloading the mini version of nuScenes (v1.0-mini), we can see that it contains 10 scenes.

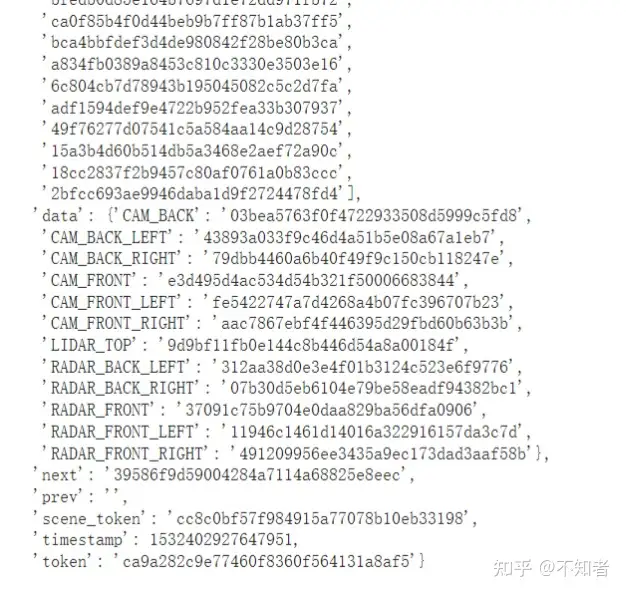
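A scene listing like the one above (name, start time, duration) only needs the timestamps of a scene's first and last samples; the scene_list_info function in the script further down does this with the devkit. Below is a self-contained sketch on toy records. nuScenes timestamps are Unix time in microseconds; the values here are made up. It uses the timezone-aware datetime.fromtimestamp, because datetime.utcfromtimestamp is deprecated since Python 3.12, and it sorts on the timestamp alone so that ties never fall back to comparing dicts.

```python
from datetime import datetime, timezone

# Toy records; timestamps are Unix time in microseconds, as in nuScenes.
samples = {
    "first": {"timestamp": 1_500_000_000_000_000},
    "last": {"timestamp": 1_500_000_019_500_000},
}
scenes = [{"name": "scene-demo", "description": "made-up scene",
           "first_sample_token": "first", "last_sample_token": "last"}]


def scene_summary(scene, samples):
    """One-line summary: name, UTC start time and duration in seconds."""
    start_us = samples[scene["first_sample_token"]]["timestamp"]
    end_us = samples[scene["last_sample_token"]]["timestamp"]
    start = datetime.fromtimestamp(start_us / 1e6, tz=timezone.utc)
    return "{} [{}] {:4.1f}s".format(scene["name"], start.strftime("%Y-%m-%d %H:%M:%S"),
                                     (end_us - start_us) / 1e6)


for scene in sorted(scenes, key=lambda s: samples[s["first_sample_token"]]["timestamp"]):
    print(scene_summary(scene, samples))  # scene-demo [2017-07-14 02:40:00] 19.5s
```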

Take the first sample as an example. Use nusc.get(table_name, token) to fetch the sample.

We can see that a sample contains …; its data field holds the data collected by each sensor for this sample.

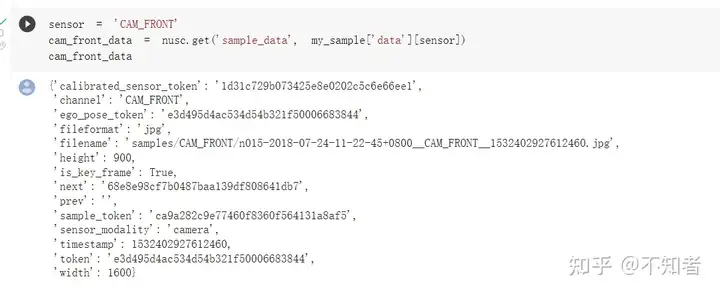

Calling nusc.get() with a particular token returns the corresponding record. For example, using the CAM_FRONT token stored in data gives the following.

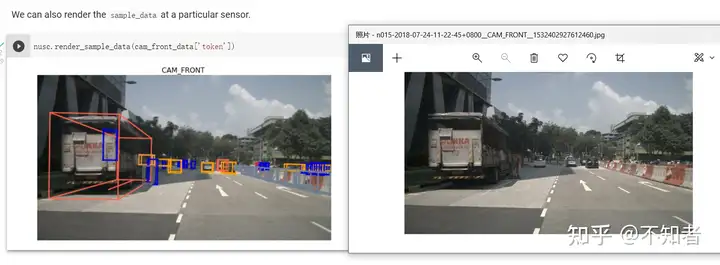
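Conceptually, nusc.get(table_name, token) is a keyed lookup: the tables are indexed by token once, and sample['data'][channel] is just a token into the sample_data table. The class below is a hypothetical stand-in to show the idea (it is not the devkit's implementation), with made-up records and filenames.

```python
class TinyTables:
    """Hypothetical stand-in for NuScenes.get: index every table by token once, then look up."""

    def __init__(self, tables):
        self._index = {name: {rec["token"]: rec for rec in records}
                       for name, records in tables.items()}

    def get(self, table_name, token):
        return self._index[table_name][token]


db = TinyTables({
    "sample": [{"token": "s1", "data": {"CAM_FRONT": "sd_cf", "LIDAR_TOP": "sd_lt"}}],
    "sample_data": [
        {"token": "sd_cf", "filename": "samples/CAM_FRONT/demo.jpg", "is_key_frame": True},
        {"token": "sd_lt", "filename": "samples/LIDAR_TOP/demo.pcd.bin", "is_key_frame": True},
    ],
})

sample = db.get("sample", "s1")
cam_front = db.get("sample_data", sample["data"]["CAM_FRONT"])
print(cam_front["filename"])  # samples/CAM_FRONT/demo.jpg
```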

We can also call nusc.render_sample_data(sample_data token) on the record obtained above to see its visualization, including the annotations.

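The image on the right is the raw file found via the filename path in cam_front_data; comparing the two shows that render_sample_data draws the annotated bounding boxes automatically.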

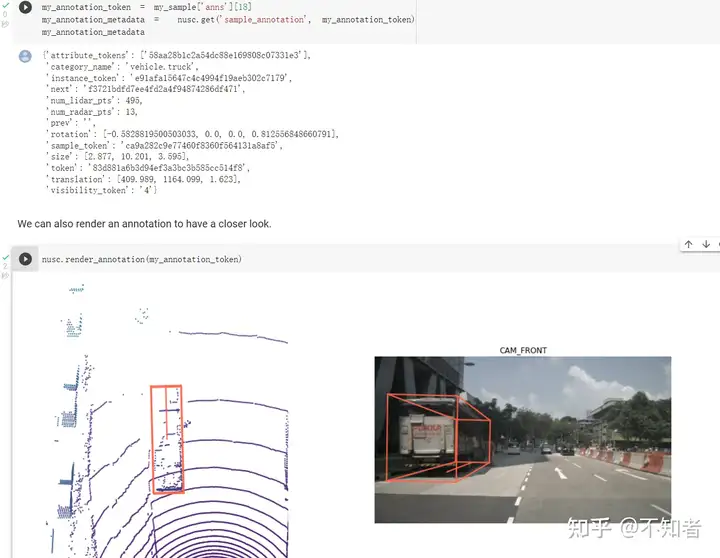

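A sample may contain annotations of several objects; a specific one can be visualized with nusc.render_annotation(annotation token). If no visualization shows up (for example, when matplotlib runs with a non-interactive backend), pass out_path so that the figure is saved to disk; extra_info=True additionally renders extra information below the camera view. The same applies to render_instance, e.g. nusc.render_instance(instance['token'], extra_info=True, out_path=os.path.join(output, 'instance_300')).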

instance

Above we explored scenes. From another angle, the other level-1 object, instance, represents an individual target to be detected. We can simply use nusc.instance[index] to get an instance.

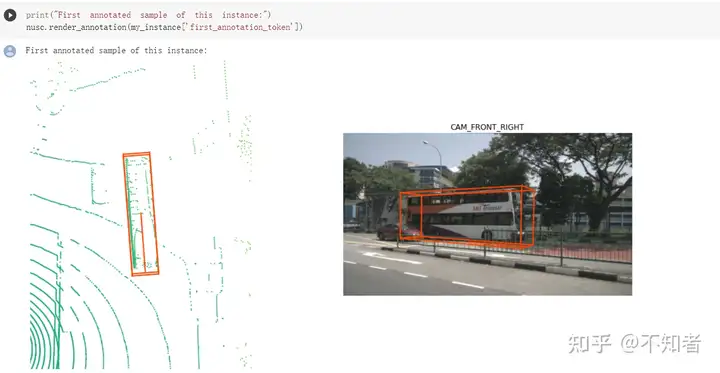

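A single target may be annotated several times, at different timestamps over a period. In the example below, the truck is annotated 16 times, from when it enters the sensor range until it moves away. We can visualize the target simply by calling nusc.render_instance. My first guess was that it renders an annotation from the middle of the track; according to its docstring in nuscenes-devkit, it actually renders the annotation of the instance that is closest to the ego vehicle.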
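A sketch of the two steps involved: walking an instance's annotations from first_annotation_token through the next links, and picking the one closest to the ego vehicle. This is my reading of the devkit's behavior, not its code: the devkit looks up the ego pose through the sample's LIDAR_TOP sample_data, while here a hypothetical ego_xyz dict (sample token to ego position) stands in for that lookup. All values are made up.

```python
import math

# Toy instance with three annotations (the truck above has 16); all values made up.
instance = {"token": "truck", "nbr_annotations": 3,
            "first_annotation_token": "a1", "last_annotation_token": "a3"}
anns = {
    "a1": {"token": "a1", "sample_token": "s1", "translation": [30.0, 0.0, 0.0], "next": "a2"},
    "a2": {"token": "a2", "sample_token": "s2", "translation": [25.0, 2.0, 0.0], "next": "a3"},
    "a3": {"token": "a3", "sample_token": "s3", "translation": [40.0, 0.0, 0.0], "next": ""},
}
ego_xyz = {"s1": [0.0, 0.0, 0.0], "s2": [10.0, 0.0, 0.0], "s3": [20.0, 0.0, 0.0]}


def iter_instance_annotations(instance, anns):
    """Follow the `next` links from the first to the last annotation of an instance."""
    token = instance["first_annotation_token"]
    while token:
        ann = anns[token]
        yield ann
        token = ann["next"]


def closest_annotation(instance, anns, ego_xyz):
    """Token of the annotation whose box center is nearest to the ego position of its sample."""
    best = min(iter_instance_annotations(instance, anns),
               key=lambda a: math.dist(a["translation"], ego_xyz[a["sample_token"]]))
    return best["token"]


print([a["token"] for a in iter_instance_annotations(instance, anns)])  # ['a1', 'a2', 'a3']
print(closest_annotation(instance, anns, ego_xyz))  # a2
```

The first and last tokens of the chain are what you would pass to render_annotation to compare the start and end of a track.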

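Besides calling render_instance() directly, we can also render the annotation of a specific frame, e.g. the first or the last one, to compare the annotations at different times.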

Rendering the annotation of the first frame with render_annotation():

```python
"""
Copyright (C) 2020 NVIDIA Corporation.  All rights reserved.
Licensed under the NVIDIA Source Code License. See LICENSE at https://github.com/nv-tlabs/lift-splat-shoot.
Authors: Jonah Philion and Sanja Fidler
"""
# nuScenes exploration helpers plus lift-splat-shoot's own visual checks.
# The relative imports require this file to live inside the lift-splat-shoot `src/` package.
import os
from datetime import datetime

import numpy as np
import torch
import matplotlib as mpl
mpl.use('Agg')  # non-interactive backend: figures are only written to disk
import matplotlib.pyplot as plt
import matplotlib.patches as mpatches
from PIL import Image
from nuscenes.nuscenes import NuScenes

from .data import compile_data
from .tools import (ego_to_cam, get_only_in_img_mask, denormalize_img,
                    SimpleLoss, get_val_info, add_ego, gen_dx_bx,
                    get_nusc_maps, plot_nusc_map)
from .models import compile_model

CAMS = ['CAM_FRONT_LEFT', 'CAM_FRONT', 'CAM_FRONT_RIGHT',
        'CAM_BACK_LEFT', 'CAM_BACK', 'CAM_BACK_RIGHT']


def render_sample_data(nusc, output, scene_id):
    """Render the 6 camera images of a scene's first sample, then the whole sample."""
    scene = nusc.scene[scene_id]
    out_path = os.path.join(output, str(scene_id))
    os.makedirs(out_path, exist_ok=True)
    print('\nrender_sample_data scene:', scene)
    first_sample = nusc.get('sample', scene['first_sample_token'])
    print('\nrender_sample_data first_sample:', first_sample)
    for cam in CAMS:
        cam_data = nusc.get('sample_data', first_sample['data'][cam])
        print('\nrender_sample_data {}:'.format(cam), cam_data)
        # e.g. CAM_FRONT_LEFT -> sampledata_front_left
        nusc.render_sample_data(cam_data['token'],
                                out_path=os.path.join(out_path, 'sampledata_' + cam[4:].lower()))
    # Fix: the original rendered nusc.sample[scene_id] (not a sample of this scene), and did it twice.
    nusc.render_sample(first_sample['token'], out_path=os.path.join(out_path, 'sample_' + str(scene_id)))


def render_scene_channel(nusc, output, scene_name):
    """Write a video of the CAM_FRONT channel of one scene."""
    os.makedirs(output, exist_ok=True)
    scene_token = nusc.field2token('scene', 'name', scene_name)[0]
    nusc.render_scene_channel(scene_token, 'CAM_FRONT',
                              out_path=os.path.join(output, scene_name + '_CAM_FRONT.avi'))


def render_scene(nusc, output, scene_name):
    """Write a video of one scene with all cameras."""
    os.makedirs(output, exist_ok=True)
    scene_token = nusc.field2token('scene', 'name', scene_name)[0]
    nusc.render_scene(scene_token, out_path=os.path.join(output, scene_name + '.avi'))


def render_pointcloud_in_image(nusc, output):
    """Project lidar points (depth, then intensity) and front-radar points into the camera image of sample 10."""
    os.makedirs(output, exist_ok=True)
    sample = nusc.sample[10]
    nusc.render_pointcloud_in_image(sample_token=sample['token'], pointsensor_channel='LIDAR_TOP',
                                    out_path=os.path.join(output, '10_pointcloud_in_image'))
    nusc.render_pointcloud_in_image(sample_token=sample['token'], pointsensor_channel='LIDAR_TOP',
                                    out_path=os.path.join(output, '10_pointcloud_in_image_intensity'),
                                    render_intensity=True)
    nusc.render_pointcloud_in_image(sample_token=sample['token'], pointsensor_channel='RADAR_FRONT',
                                    out_path=os.path.join(output, '10_radarpoint_in_image'))


def scene_list_info(nusc):
    """Print every scene with its start time (UTC) and duration, sorted by start time."""
    recs = [(nusc.get('sample', rec['first_sample_token'])['timestamp'], rec) for rec in nusc.scene]
    # Sort on the timestamp only: on a tie, comparing the dicts would raise a TypeError.
    for start_us, rec in sorted(recs, key=lambda r: r[0]):
        starttime = start_us / 1e6  # nuScenes timestamps are in microseconds
        lengthtime = nusc.get('sample', rec['last_sample_token'])['timestamp'] / 1e6 - starttime
        desc = rec['name'] + '\n' + rec['description']
        # Fix: the original format string was '%H:%H:%S' instead of '%H:%M:%S'.
        print('\nscene_list_info {:16} \n[{}] \n{:4.1f}s'.format(
            desc, datetime.utcfromtimestamp(starttime).strftime('%Y-%m-%d %H:%M:%S'), lengthtime))


def geo_visual(view, points):
    """Unfinished stub, same first steps as nuscenes.utils.geometry_utils.view_points:
    embed a view matrix (at most 4x4) into a 4x4 homogeneous matrix."""
    viewpad = np.eye(4)
    viewpad[:view.shape[0], :view.shape[1]] = view
    nbr_points = points.shape[1]
    return viewpad, nbr_points


def render_annotation(nusc, output, num_anns, scene_id=0):
    """Render the first `num_anns` annotations of the first sample of a scene."""
    os.makedirs(output, exist_ok=True)
    # Fix: the original used an undefined `scene_1`.
    first_sample = nusc.get('sample', nusc.scene[scene_id]['first_sample_token'])
    for ann_i, annotation_token in enumerate(first_sample['anns'][:num_anns]):
        nusc.render_annotation(annotation_token, extra_info=True,
                               out_path=os.path.join(output, 'sample_annotation_' + str(ann_i)))


def render_sample(nusc, output, num_samples):
    """Render the first `num_samples` samples of the dataset."""
    os.makedirs(output, exist_ok=True)
    for sle_i in range(num_samples):
        sample_i = nusc.sample[sle_i]
        nusc.render_sample(sample_i['token'], out_path=os.path.join(output, 'sample_' + str(sle_i)))
        print('\nrender_sample sample_i:\n', sample_i)


def render_instance(nusc, output, num_instances):
    """Render the first `num_instances` instances of the dataset."""
    os.makedirs(output, exist_ok=True)
    for inst_i in range(num_instances):
        instance_i = nusc.instance[inst_i]
        print('\ninstance:\n', instance_i)
        nusc.render_instance(instance_i['token'], out_path=os.path.join(output, 'instance_' + str(inst_i)))


def viz_gtbox(version='trainval', dataroot='../Data/v1.0-mini', output='./viz_gtbox'):
    """Entry point for exploring the raw nuScenes data with the helpers above.
    (The original also built grid/augmentation dicts here that were never used.)"""
    nusc = NuScenes(version='v1.0-{}'.format(version), dataroot=os.path.join(dataroot, version), verbose=False)
    scene_num = len(nusc.scene)
    print('scene_num:', scene_num)
    scene_list_info(nusc)
    # Uncomment the visualizations you need:
    # render_pointcloud_in_image(nusc, output)
    # for i in range(scene_num):
    #     render_scene_channel(nusc, output, nusc.scene[i]['name'])
    #     render_scene(nusc, output, nusc.scene[i]['name'])
    # for scene_i in range(scene_num):
    #     render_sample_data(nusc, output, scene_i)
    # render_sample(nusc, output, 2)
    # render_annotation(nusc, output, 2)
    # render_instance(nusc, output, 5)


def lidar_check(version='trainval', dataroot='../Data/v1.0-mini', show_lidar=True, viz_train=False, nepochs=1,
                H=900, W=1600, resize_lim=(0.193, 0.225), final_dim=(128, 352),
                bot_pct_lim=(0.0, 0.22), rot_lim=(-5.4, 5.4), rand_flip=True,
                xbound=[-50.0, 50.0, 0.5], ybound=[-50.0, 50.0, 0.5],
                zbound=[-10.0, 10.0, 20.0], dbound=[4.0, 45.0, 1.0],
                bsz=1, nworkers=10, output='./visualize'):
    """Overlay lidar points on each camera image and plot every camera frustum in BEV."""
    grid_conf = {'xbound': xbound, 'ybound': ybound, 'zbound': zbound, 'dbound': dbound}
    data_aug_conf = {'resize_lim': resize_lim, 'final_dim': final_dim, 'rot_lim': rot_lim,
                     'H': H, 'W': W, 'rand_flip': rand_flip, 'bot_pct_lim': bot_pct_lim,
                     'cams': CAMS, 'Ncams': 5}
    trainloader, valloader = compile_data(version, dataroot, data_aug_conf=data_aug_conf,
                                          grid_conf=grid_conf, bsz=bsz, nworkers=nworkers,
                                          parser_name='vizdata')
    loader = trainloader if viz_train else valloader
    model = compile_model(grid_conf, data_aug_conf, outC=1)
    os.makedirs(output, exist_ok=True)

    rat = H / W
    val = 10.1
    plt.figure(figsize=(val + val/3*2*rat*3, val/3*2*rat))
    gs = mpl.gridspec.GridSpec(2, 6, width_ratios=(1, 1, 1, 2*rat, 2*rat, 2*rat))
    gs.update(wspace=0.0, hspace=0.0, left=0.0, right=1.0, top=1.0, bottom=0.0)

    for epoch in range(nepochs):
        for batchi, (imgs, rots, trans, intrins, post_rots, post_trans, pts, binimgs) in enumerate(loader):
            img_pts = model.get_geometry(rots, trans, intrins, post_rots, post_trans)
            for si in range(imgs.shape[0]):
                plt.clf()
                final_ax = plt.subplot(gs[:, 5:6])
                for imgi, img in enumerate(imgs[si]):
                    # ego-frame lidar points -> camera pixels, then through the image augmentation
                    ego_pts = ego_to_cam(pts[si], rots[si, imgi], trans[si, imgi], intrins[si, imgi])
                    mask = get_only_in_img_mask(ego_pts, H, W)
                    plot_pts = post_rots[si, imgi].matmul(ego_pts) + post_trans[si, imgi].unsqueeze(1)

                    plt.subplot(gs[imgi // 3, imgi % 3])
                    plt.imshow(denormalize_img(img))
                    if show_lidar:
                        plt.scatter(plot_pts[0, mask], plot_pts[1, mask], c=ego_pts[2, mask],
                                    s=5, alpha=0.1, cmap='jet')
                    plt.axis('off')

                    # BEV footprint of this camera's frustum
                    plt.sca(final_ax)
                    plt.plot(img_pts[si, imgi, :, :, :, 0].view(-1), img_pts[si, imgi, :, :, :, 1].view(-1),
                             '.', label=CAMS[imgi].replace('_', ' '))
                plt.legend(loc='upper right')
                final_ax.set_aspect('equal')
                plt.xlim((-50, 50))  # was (-0, 50), which hid the rear cameras
                plt.ylim((-50, 50))

                ax = plt.subplot(gs[:, 3:4])  # raw lidar in BEV, colored by height
                plt.scatter(pts[si, 0], pts[si, 1], c=pts[si, 2], vmin=-5, vmax=5, s=5)
                plt.xlim((-50, 50))
                plt.ylim((-50, 50))
                ax.set_aspect('equal')

                plt.subplot(gs[:, 4:5])  # ground-truth BEV vehicle mask
                plt.imshow(binimgs[si].squeeze(0).T, origin='lower', cmap='Greys', vmin=0, vmax=1)

                imname = f'lcheck{epoch:03}_{batchi:05}_{si:02}.jpg'
                print('saving', imname)
                plt.savefig(os.path.join(output, imname))


def cumsum_check(version, dataroot='../Data/v1.0-mini', gpuid=1,
                 H=900, W=1600, resize_lim=(0.193, 0.225), final_dim=(128, 352),
                 bot_pct_lim=(0.0, 0.22), rot_lim=(-5.4, 5.4), rand_flip=True,
                 xbound=[-50.0, 50.0, 0.5], ybound=[-50.0, 50.0, 0.5],
                 zbound=[-10.0, 10.0, 20.0], dbound=[4.0, 45.0, 1.0],
                 bsz=4, nworkers=10):
    """Check that QuickCumsum gives the same output and gradients as plain autograd."""
    grid_conf = {'xbound': xbound, 'ybound': ybound, 'zbound': zbound, 'dbound': dbound}
    data_aug_conf = {'resize_lim': resize_lim, 'final_dim': final_dim, 'rot_lim': rot_lim,
                     'H': H, 'W': W, 'rand_flip': rand_flip, 'bot_pct_lim': bot_pct_lim,
                     'cams': CAMS, 'Ncams': 6}
    trainloader, valloader = compile_data(version, dataroot, data_aug_conf=data_aug_conf,
                                          grid_conf=grid_conf, bsz=bsz, nworkers=nworkers,
                                          parser_name='segmentationdata')
    device = torch.device('cpu') if gpuid < 0 else torch.device(f'cuda:{gpuid}')
    model = compile_model(grid_conf, data_aug_conf, outC=1)
    model.to(device)
    model.eval()
    for batchi, (imgs, rots, trans, intrins, post_rots, post_trans, binimgs) in enumerate(trainloader):
        for quick in (False, True):
            model.use_quickcumsum = quick
            model.zero_grad()
            out = model(imgs.to(device), rots.to(device), trans.to(device), intrins.to(device),
                        post_rots.to(device), post_trans.to(device))
            out.mean().backward()
            print('quick cumsum:' if quick else 'autograd:    ', out.mean().detach().item(),
                  model.camencode.depthnet.weight.grad.mean().item())
        print()


def eval_model_iou(version='trainval', modelf='./model_ckpts/model525000.pt', dataroot='../Data/v1.0-mini', gpuid=1,
                   H=900, W=1600, resize_lim=(0.193, 0.225), final_dim=(128, 352),
                   bot_pct_lim=(0.0, 0.22), rot_lim=(-5.4, 5.4), rand_flip=True,
                   xbound=[-50.0, 50.0, 0.5], ybound=[-50.0, 50.0, 0.5],
                   zbound=[-10.0, 10.0, 20.0], dbound=[4.0, 45.0, 1.0],
                   bsz=1, nworkers=10):
    """Report loss and IoU of a trained checkpoint on the validation split."""
    grid_conf = {'xbound': xbound, 'ybound': ybound, 'zbound': zbound, 'dbound': dbound}
    data_aug_conf = {'resize_lim': resize_lim, 'final_dim': final_dim, 'rot_lim': rot_lim,
                     'H': H, 'W': W, 'rand_flip': rand_flip, 'bot_pct_lim': bot_pct_lim,
                     'cams': CAMS, 'Ncams': 5}
    trainloader, valloader = compile_data(version, dataroot, data_aug_conf=data_aug_conf,
                                          grid_conf=grid_conf, bsz=bsz, nworkers=nworkers,
                                          parser_name='segmentationdata')
    device = torch.device('cpu') if gpuid < 0 else torch.device(f'cuda:{gpuid}')
    model = compile_model(grid_conf, data_aug_conf, outC=1)
    print('loading', modelf)
    model.load_state_dict(torch.load(modelf, map_location=device))  # map_location allows CPU-only runs
    model.to(device)
    loss_fn = SimpleLoss(1.0).to(device)  # was .cuda(gpuid), which fails when gpuid < 0
    model.eval()
    val_info = get_val_info(model, valloader, loss_fn, device)
    print(val_info)


def viz_model_preds(version='trainval', modelf='./model_ckpts/model525000.pt', dataroot='../Data/v1.0-mini',
                    map_folder='../Data/v1.0-mini/trainval', gpuid=1, viz_train=False,
                    H=900, W=1600, resize_lim=(0.193, 0.225), final_dim=(128, 352),
                    bot_pct_lim=(0.0, 0.22), rot_lim=(-5.4, 5.4), rand_flip=True,
                    xbound=[-50.0, 50.0, 0.5], ybound=[-50.0, 50.0, 0.5],
                    zbound=[-10.0, 10.0, 20.0], dbound=[4.0, 45.0, 1.0],
                    bsz=1, nworkers=10):
    """Save one figure per sample: the 6 input images plus the predicted BEV vehicle segmentation."""
    grid_conf = {'xbound': xbound, 'ybound': ybound, 'zbound': zbound, 'dbound': dbound}
    data_aug_conf = {'resize_lim': resize_lim, 'final_dim': final_dim, 'rot_lim': rot_lim,
                     'H': H, 'W': W, 'rand_flip': rand_flip, 'bot_pct_lim': bot_pct_lim,
                     'cams': CAMS, 'Ncams': 5}
    trainloader, valloader = compile_data(version, dataroot, data_aug_conf=data_aug_conf,
                                          grid_conf=grid_conf, bsz=bsz, nworkers=nworkers,
                                          parser_name='segmentationdata')
    loader = trainloader if viz_train else valloader
    nusc_maps = get_nusc_maps(map_folder)

    device = torch.device('cpu') if gpuid < 0 else torch.device(f'cuda:{gpuid}')
    model = compile_model(grid_conf, data_aug_conf, outC=1)
    print('loading', modelf)
    model.load_state_dict(torch.load(modelf, map_location=device))
    model.to(device)

    dx, bx, _ = gen_dx_bx(grid_conf['xbound'], grid_conf['ybound'], grid_conf['zbound'])
    dx, bx = dx[:2].numpy(), bx[:2].numpy()

    scene2map = {}
    for rec in loader.dataset.nusc.scene:
        log = loader.dataset.nusc.get('log', rec['log_token'])
        scene2map[rec['name']] = log['location']

    val = 0.01
    fH, fW = final_dim
    plt.figure(figsize=(3*fW*val, (1.5*fW + 2*fH)*val))
    gs = mpl.gridspec.GridSpec(3, 3, height_ratios=(1.5*fW, fH, fH))
    gs.update(wspace=0.0, hspace=0.0, left=0.0, right=1.0, top=1.0, bottom=0.0)

    model.eval()
    counter = 0
    with torch.no_grad():
        for batchi, (imgs, rots, trans, intrins, post_rots, post_trans, binimgs) in enumerate(loader):
            out = model(imgs.to(device), rots.to(device), trans.to(device), intrins.to(device),
                        post_rots.to(device), post_trans.to(device))
            out = out.sigmoid().cpu()
            for si in range(imgs.shape[0]):
                plt.clf()
                for imgi, img in enumerate(imgs[si]):
                    plt.subplot(gs[1 + imgi // 3, imgi % 3])
                    showimg = denormalize_img(img)
                    if imgi > 2:  # flip the bottom (rear) images
                        showimg = showimg.transpose(Image.FLIP_LEFT_RIGHT)
                    plt.imshow(showimg)
                    plt.axis('off')
                    plt.annotate(CAMS[imgi].replace('_', ' '), (0.01, 0.92), xycoords='axes fraction')

                ax = plt.subplot(gs[0, :])
                ax.get_xaxis().set_ticks([])
                ax.get_yaxis().set_ticks([])
                plt.setp(ax.spines.values(), color='b', linewidth=2)
                plt.legend(handles=[
                    mpatches.Patch(color=(0.0, 0.0, 1.0, 1.0), label='Output Vehicle Segmentation'),
                    mpatches.Patch(color='#76b900', label='Ego Vehicle'),
                    mpatches.Patch(color=(1.00, 0.50, 0.31, 0.8), label='Map (for visualization purposes only)'),
                ], loc=(0.01, 0.86))
                plt.imshow(out[si].squeeze(0), vmin=0, vmax=1, cmap='Blues')

                # plot static map (improves visualization)
                rec = loader.dataset.ixes[counter]
                plot_nusc_map(rec, nusc_maps, loader.dataset.nusc, scene2map, dx, bx)
                plt.xlim((out.shape[3], 0))
                plt.ylim((0, out.shape[3]))
                add_ego(bx, dx)

                imname = f'eval{batchi:06}_{si:03}.jpg'
                print('saving', imname)
                plt.savefig(imname)
                counter += 1


def render_nusc_data(version='trainval', dataroot='../Data/v1.0-mini'):
    """List the nuScenes attributes (the original body referenced an undefined `self.nusc`)."""
    nusc = NuScenes(version='v1.0-{}'.format(version), dataroot=os.path.join(dataroot, version), verbose=False)
    nusc.list_attributes()
```

Annotation of the last frame: