MapReduce之词频统计本地运行

1、上述的MapReduce之Mapper、Reducer、Driver三步实现,是基于输入和输出都是HDFS的

(1)输入:HADOOP_USER_NAME、

(2)输出:hdfs://192.168.126.101:8020

//WordCountApp.java //设置权限 System.setProperty("HADOOP_USER_NAME", "hadoop"); Configuration configuration = new Configuration(); //在configuration里设置一些东西: configuration.set("fs.defaultFS", "hdfs://192.168.126.101:8020");

2、不连HDFS,只在本地处理词频统计

(0)优势:运行速度快

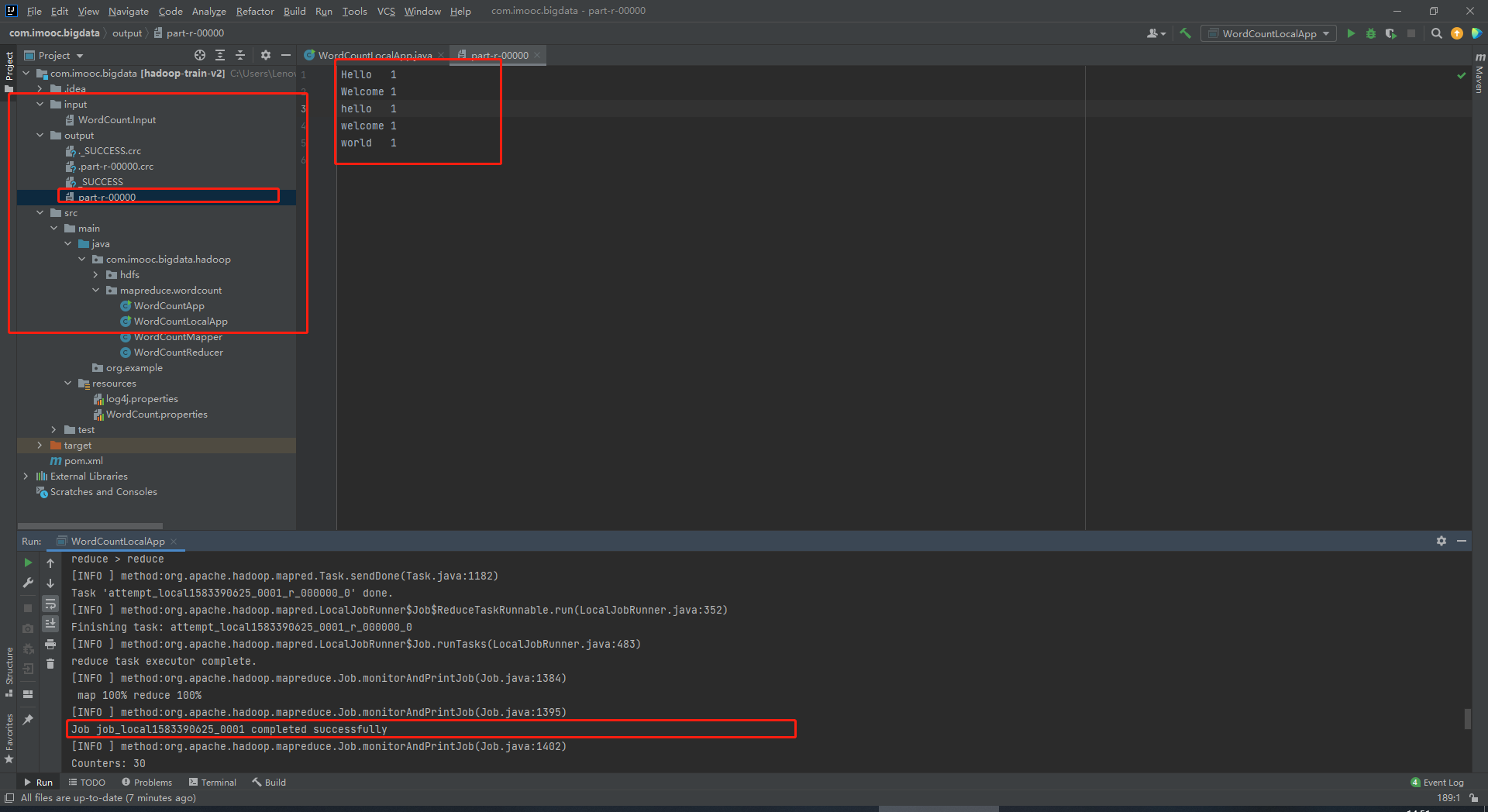

(1)在hadoop-train-v2下新建Directory:input

(2)在input里新建file.text:WordCount.Input

(3)将h.txt内容考入WordCount.Input中

(4)在com.imooc.bigdata.hadoop.mapreduce.wordcount下复制WordCountApp.java为:WordCountLocalApp.java

3、WordCountLocalApp.java

package com.imooc.bigdata.hadoop.mapreduce.wordcount; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; /* * Driver类:配置Mapper和Reducer的相关属性 * 通过WordCountApp.java将Mapper和Reducer关联起来 * 使用MapReduce统计HDFS上的文件对应的词频 * * 使用本地文件进行统计,然后统计结果输出到本地路径 */ public class WordCountLocalApp { public static void main(String[] args) throws Exception{ Configuration configuration = new Configuration(); //创建一个Job //将configuration传进来 Job job = Job.getInstance(configuration); //设置Job对应的参数:主类 job.setJarByClass(WordCountLocalApp.class); //设置Job对应的参数:设置自定义的Mapper和Reducer处理类 job.setMapperClass(WordCountMapper.class); job.setReducerClass(WordCountReducer.class); //设置Job对应的参数:Mapper输出key和value的类型 //不需要关注Mapper输入 //Mapper<LongWritable, Text, Text, IntWritable> job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(IntWritable.class); //设置Job对应的参数:Reducer输出key和value的类型 //不需要关注Reducer输入 //Reducer<Text, IntWritable, Text, IntWritable> job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class); //设置Job对应的参数:Mapper输出key和value的类型:作业输入和输出的路径 FileInputFormat.setInputPaths(job, new Path("input")); FileOutputFormat.setOutputPath(job, new Path("output")); //提交job boolean result = job.waitForCompletion(true); System.exit(result ? 0 : -1); } }

4、结果输出

问题描述:统计结果区分大小写

解决措施:在WordCountMapper.java中

context.write(new Text(word), new IntWritable(1));

改为

context.write(new Text(word.toLowerCase()), new IntWritable(1));