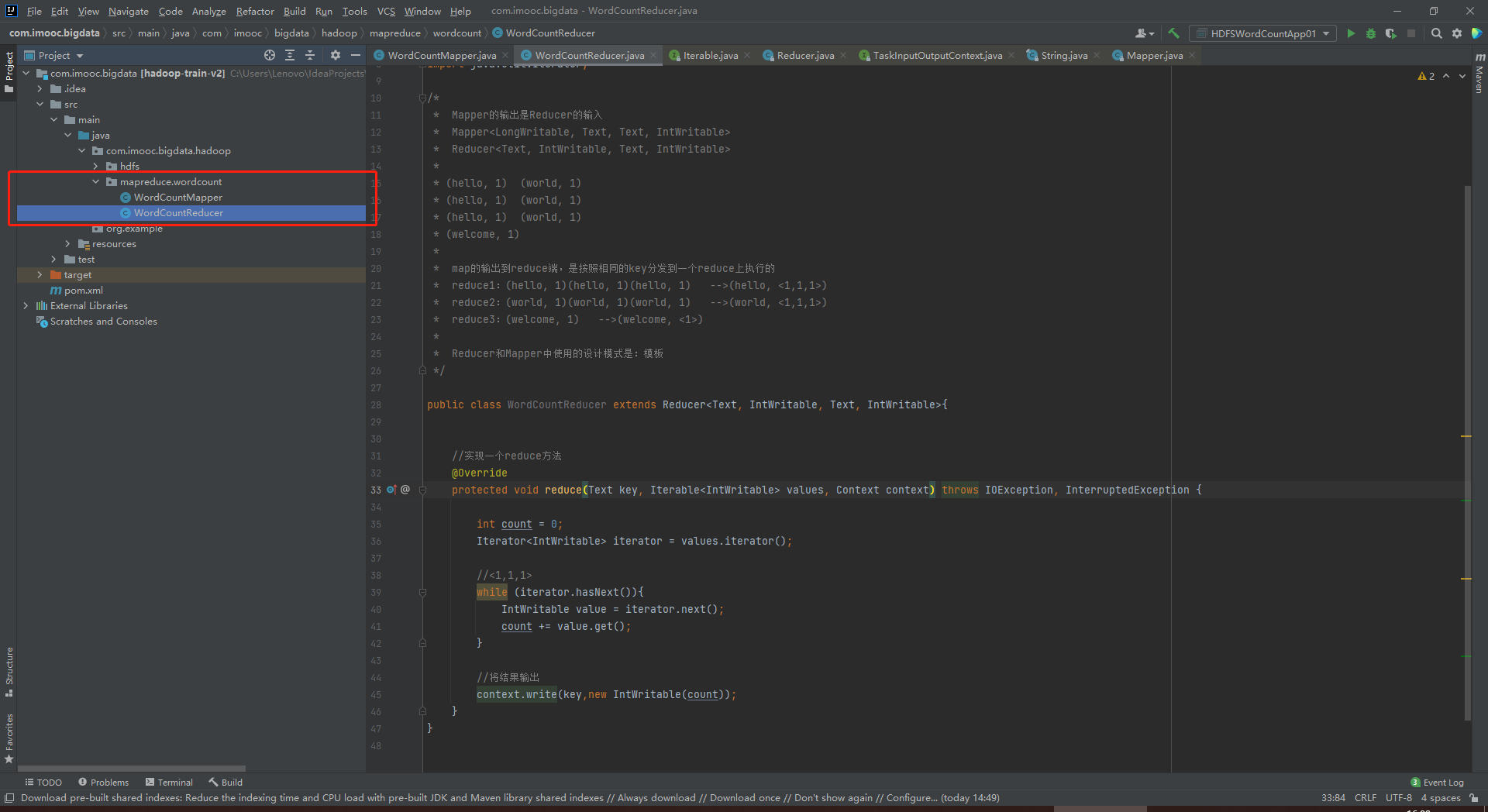

package com.imooc.bigdata.hadoop.mapreduce.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

import java.util.Iterator;

/*

* Mapper的输出是Reducer的输入

* Mapper<LongWritable, Text, Text, IntWritable>

* Reducer<Text, IntWritable, Text, IntWritable>

*

* (hello, 1) (world, 1)

* (hello, 1) (world, 1)

* (hello, 1) (world, 1)

* (welcome, 1)

*

* map的输出到reduce端,是按照相同的key分发到一个reduce上执行的

* reduce1:(hello, 1)(hello, 1)(hello, 1) -->(hello, <1,1,1>)

* reduce2:(world, 1)(world, 1)(world, 1) -->(world, <1,1,1>)

* reduce3:(welcome, 1) -->(welcome, <1>)

*

* Reducer和Mapper中使用的设计模式是:模板

*/

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable>{

//实现一个reduce方法

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int count = 0;

Iterator<IntWritable> iterator = values.iterator();

//<1,1,1>

while (iterator.hasNext()){

IntWritable value = iterator.next();

count += value.get();

}

//将结果输出

context.write(key,new IntWritable(count));

}

}