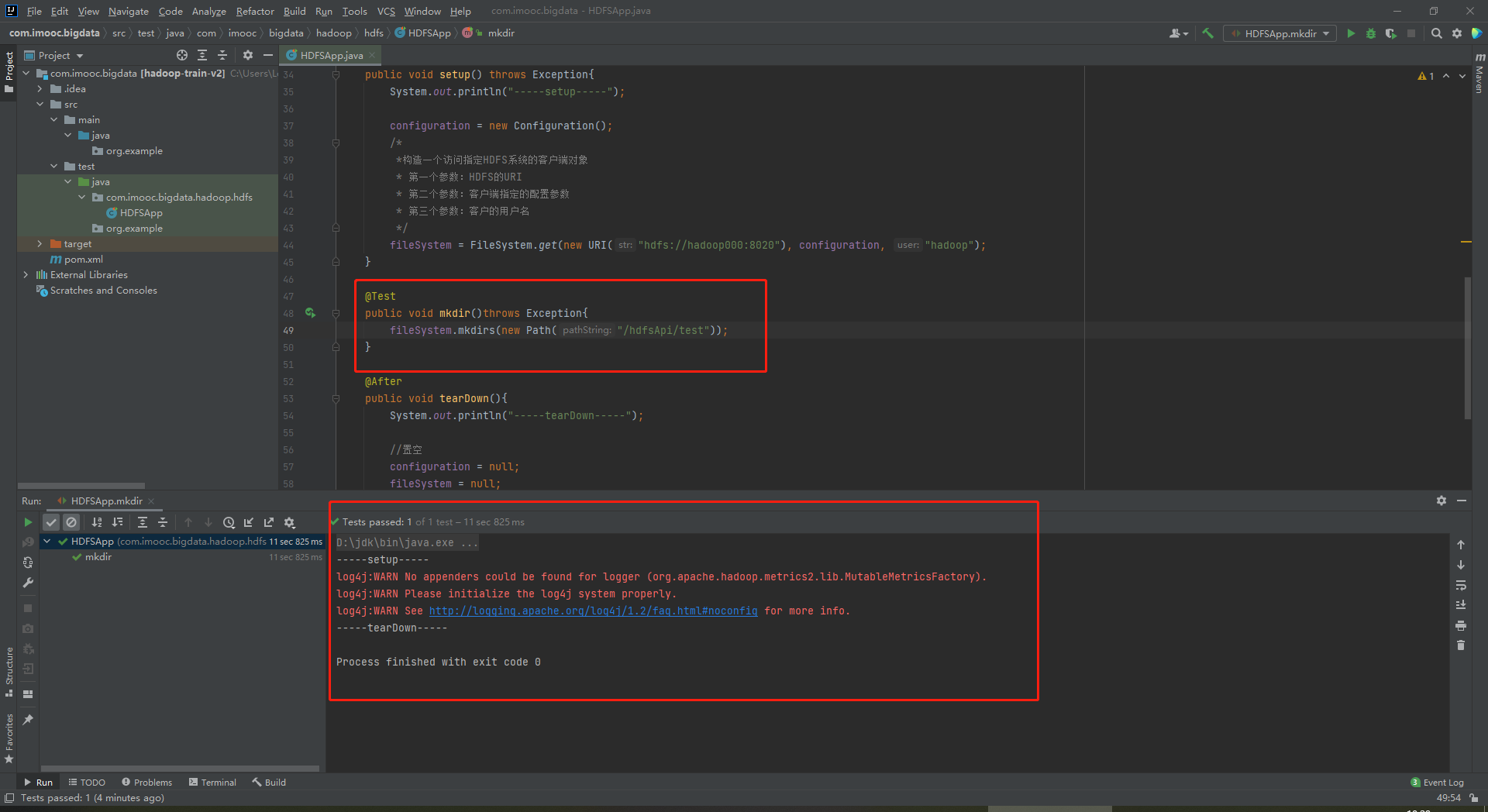

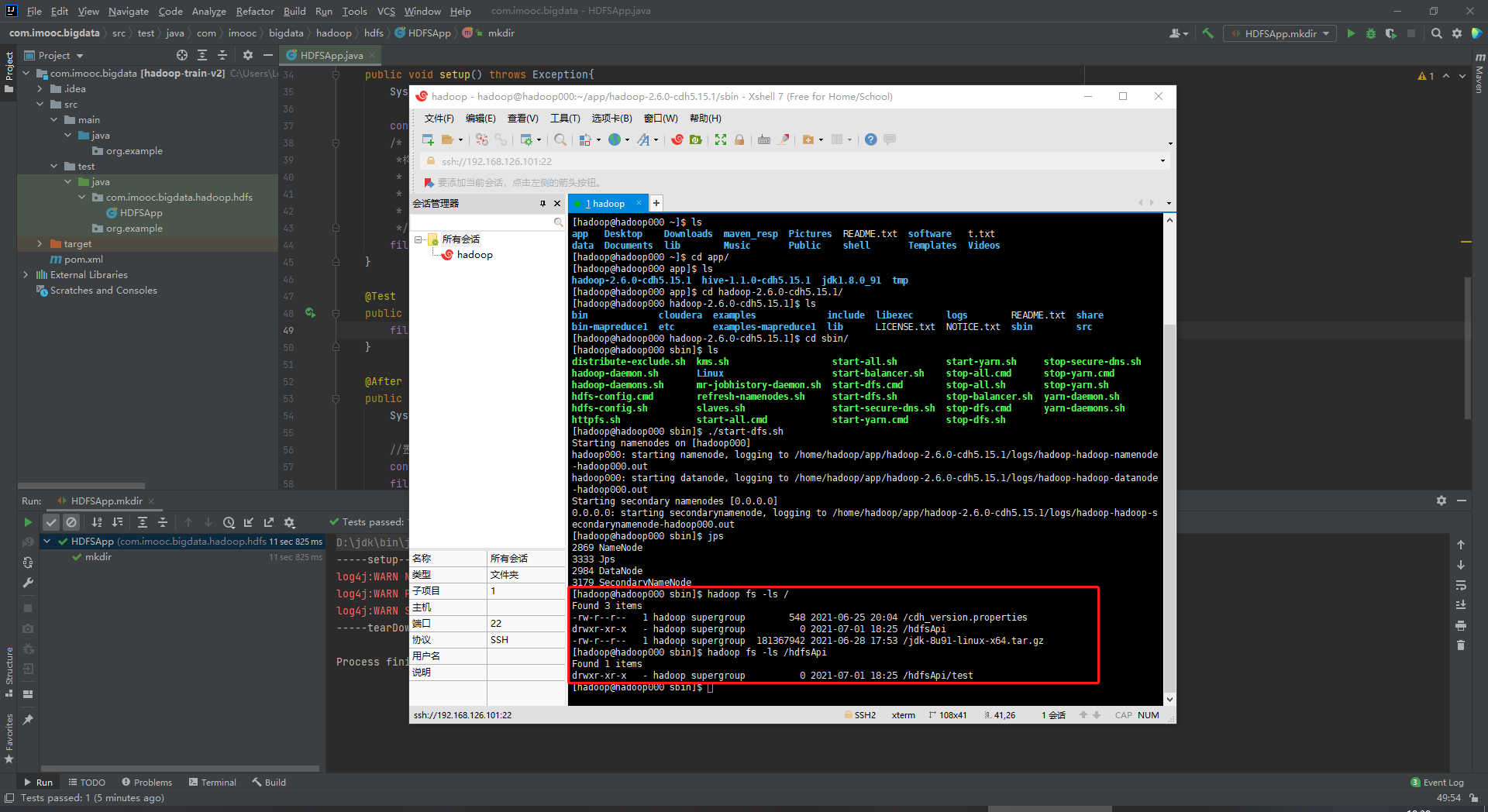

Operating the HDFS File System with the Java API (Wrapped in JUnit Tests)

    package com.imooc.bigdata.hadoop.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    import java.net.URI;

    /**
     * Operate the HDFS file system with the Java API.
     *
     * This class lives under the test source directory, so it is written as unit tests.
     * JUnit is declared as a dependency in the pom.
     * JUnit provides two lifecycle hooks: (1) one that runs before each test;
     * (2) one that runs after each test.
     *
     * Key points:
     * 1) Create a Configuration
     * 2) Obtain a FileSystem
     * 3) Everything else is HDFS API calls
     */
    public class HDFSApp {

        public static final String HDFS_PATH = "hdfs://hadoop000:8020";

        // Configuration and FileSystem must be built before every test method runs
        Configuration configuration = null;
        FileSystem fileSystem = null;

        @Before
        public void setup() throws Exception {
            System.out.println("-----setup-----");
            configuration = new Configuration();
            /*
             * Build a client object for accessing the given HDFS cluster.
             * First argument: the URI of HDFS
             * Second argument: client-side configuration
             * Third argument: the client user name
             */
            fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "hadoop");
        }

        /*
         * Create a directory on HDFS
         */
        @Test
        public void mkdir() throws Exception {
            fileSystem.mkdirs(new Path("/hdfsApi/test"));
        }

        @After
        public void tearDown() throws Exception {
            System.out.println("-----tearDown-----");
            // Close the client so the connection is released, then clear the references
            if (fileSystem != null) {
                fileSystem.close();
            }
            configuration = null;
            fileSystem = null;
        }
    }
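
The first argument passed to `FileSystem.get` is the HDFS URI. As a minimal sketch using only the JDK, the snippet below splits that URI string into its scheme, host, and port, which can help when checking that the address in `HDFS_PATH` is what you expect. The class name `HdfsUriParts` and its helper methods are illustrative assumptions and are not part of Hadoop or of the original project.

```java
import java.net.URI;

// Hypothetical helper class for illustration only; not part of Hadoop.
class HdfsUriParts {

    // Returns the URI scheme, for example "hdfs".
    static String scheme(String uri) {
        return URI.create(uri).getScheme();
    }

    // Returns the host name part of the URI.
    static String host(String uri) {
        return URI.create(uri).getHost();
    }

    // Returns the port number, or -1 if the URI does not specify one.
    static int port(String uri) {
        return URI.create(uri).getPort();
    }

    public static void main(String[] args) {
        // Same value as HDFS_PATH in the test class above.
        String hdfsPath = "hdfs://hadoop000:8020";
        System.out.println("scheme = " + scheme(hdfsPath));
        System.out.println("host   = " + host(hdfsPath));
        System.out.println("port   = " + port(hdfsPath));
    }
}
```

Note that the host name (`hadoop000` here) has to be resolvable from the machine that runs the test, for instance through a hosts-file entry; otherwise the client cannot reach the cluster.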

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>org.example</groupId>
      <artifactId>hadoop-train-v2</artifactId>
      <version>1.0</version>

      <name>hadoop-train-v2</name>
      <!-- FIXME change it to the project's website -->
      <url>http://www.example.com</url>

      <properties>
        <!-- Define the Hadoop version: 2.6.0-cdh5.15.1 -->
        <hadoop.version>2.6.0-cdh5.15.1</hadoop.version>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
      </properties>

      <!-- Add the CDH (Cloudera) repository -->
      <repositories>
        <repository>
          <id>cloudera</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
        </repository>
      </repositories>

      <dependencies>
        <!-- Add the JUnit dependency -->
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.11</version>
          <scope>test</scope>
        </dependency>

        <!-- Add the Hadoop dependency -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>${hadoop.version}</version>
        </dependency>
      </dependencies>

      <build>
        <pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->
          <plugins>
            <!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->
            <plugin>
              <artifactId>maven-clean-plugin</artifactId>
              <version>3.1.0</version>
            </plugin>
            <!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->
            <plugin>
              <artifactId>maven-resources-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-compiler-plugin</artifactId>
              <version>3.8.0</version>
            </plugin>
            <plugin>
              <artifactId>maven-surefire-plugin</artifactId>
              <version>2.22.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-jar-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-install-plugin</artifactId>
              <version>2.5.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-deploy-plugin</artifactId>
              <version>2.8.2</version>
            </plugin>
            <!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->
            <plugin>
              <artifactId>maven-site-plugin</artifactId>
              <version>3.7.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-project-info-reports-plugin</artifactId>
              <version>3.0.0</version>
            </plugin>
          </plugins>
        </pluginManagement>
      </build>
    </project>