SkyWalking: How JVM Metrics Are Collected

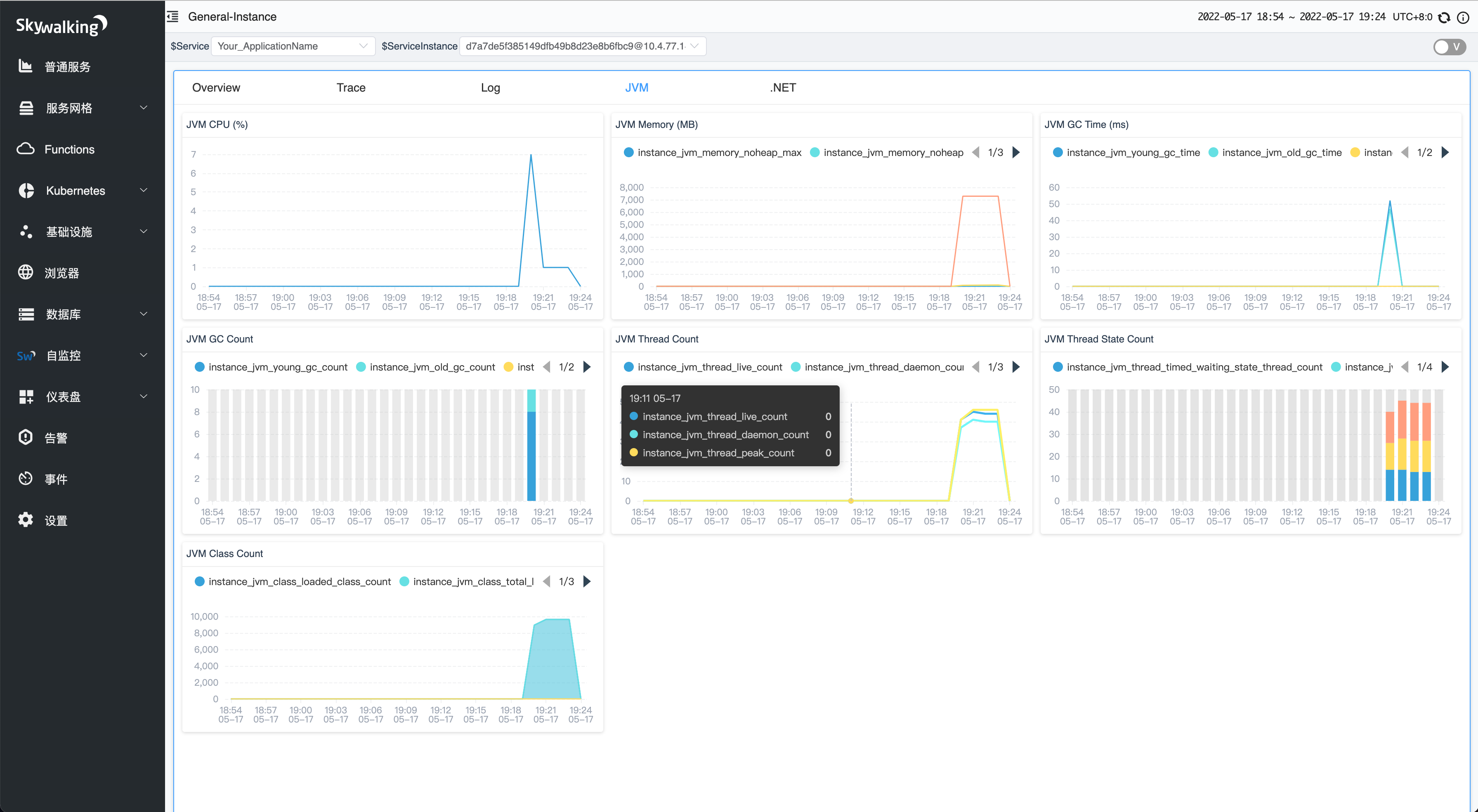

JVM monitoring dashboard

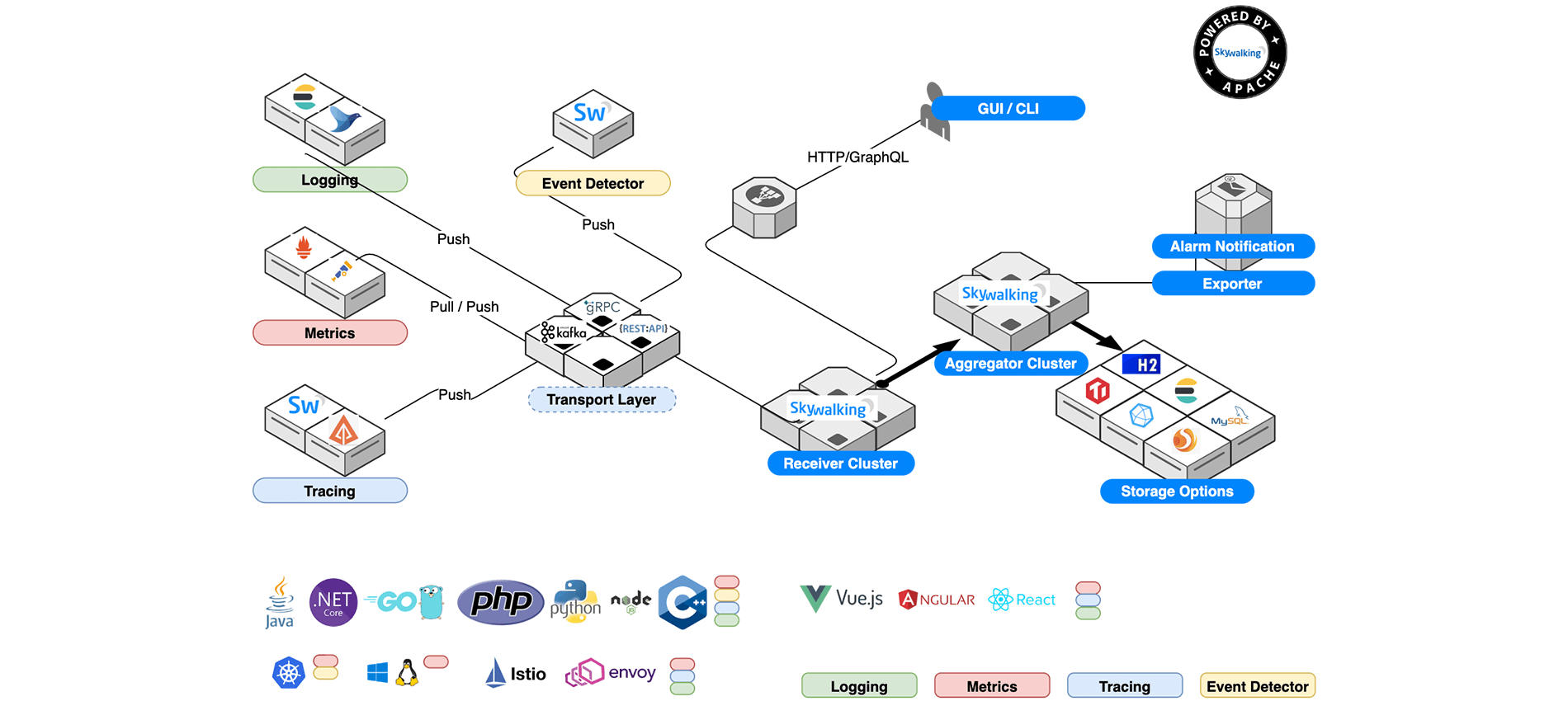

SkyWalking architecture diagram from the official documentation

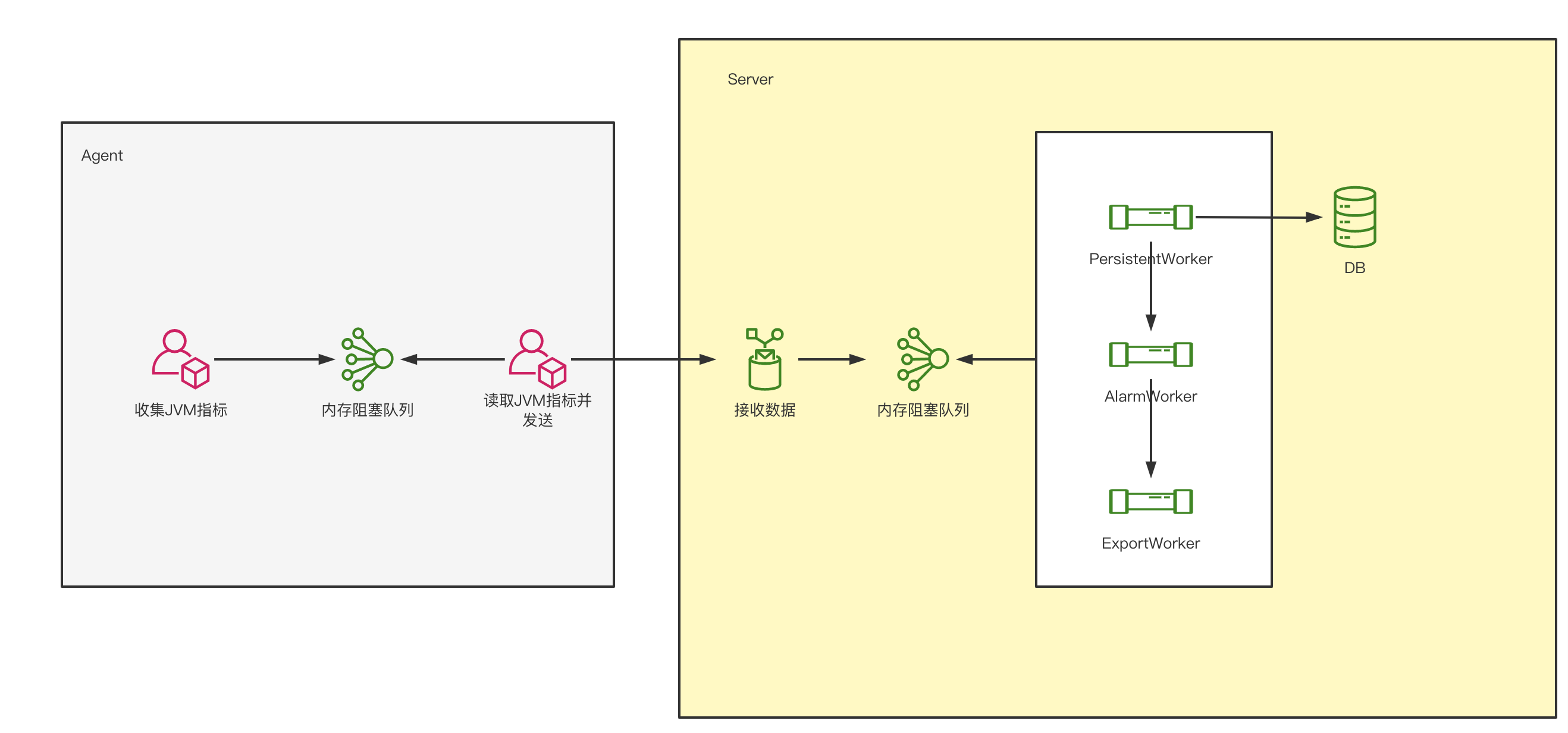

Rough sketch of the JVM metrics collection flow

Agent: collecting and reporting data

Collecting data

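JVM data is collected by the agent running on the user's machine. The agent is a jar that is separate from the user's application. The article "Java 动态调试技术原理及实践" (Principles and Practice of Java Dynamic Debugging) explains how it works. This section gives a brief outline of how the agent collects data.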

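- When the agent starts, it uses Java's SPI mechanism to load every class that implements BootService. SPI is also the basis of SkyWalking's plugin model. The loaded classes include JVMService.java and JVMMetricsSender.java. JVMService's boot method then starts two single-threaded scheduled pools: one runs JVMService's run method and the other runs JVMMetricsSender's run method.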

```java
@Override
public void boot() throws Throwable {
    // Producer: collect JVM metrics once per second
    collectMetricFuture = Executors.newSingleThreadScheduledExecutor(
        new DefaultNamedThreadFactory("JVMService-produce"))
        .scheduleAtFixedRate(new RunnableWithExceptionProtection(
            this,
            new RunnableWithExceptionProtection.CallbackWhenException() {
                @Override
                public void handle(Throwable t) {
                    LOGGER.error("JVMService produces metrics failure.", t);
                }
            }
        ), 0, 1, TimeUnit.SECONDS);
    // Consumer: drain the queue and upload once per second
    sendMetricFuture = Executors.newSingleThreadScheduledExecutor(
        new DefaultNamedThreadFactory("JVMService-consume"))
        .scheduleAtFixedRate(new RunnableWithExceptionProtection(
            sender,
            new RunnableWithExceptionProtection.CallbackWhenException() {
                @Override
                public void handle(Throwable t) {
                    LOGGER.error("JVMService consumes and upload failure.", t);
                }
            }
        ), 0, 1, TimeUnit.SECONDS);
}
```

- The thread pool runs JVMService's run method once per second. It collects the JVM metrics through the java.lang.management API. It then calls sender.offer to put the resulting JVMMetric into an in-memory blocking queue (LinkedBlockingQueue<JVMMetric>).
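The sketch below makes the collection step concrete without SkyWalking's classes. It reads the same kind of data directly from the standard java.lang.management MXBeans and offers each snapshot to a LinkedBlockingQueue. JvmSnapshot and JvmSnapshotCollector are hypothetical names, not agent classes. The agent's own providers (CPUProvider, MemoryProvider, GCProvider and so on) build protobuf messages and split GC by phase. This sketch only sums counts and times across all collectors. It assumes Java 17.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical, flattened stand-in for the agent's JVMMetric protobuf message.
record JvmSnapshot(long time, long heapUsed, long nonHeapUsed, long gcCount,
                   long gcTimeMs, int liveThreads, int loadedClasses) {}

// Hypothetical collector: one snapshot per run(), handed to an in-memory queue.
final class JvmSnapshotCollector implements Runnable {
    final BlockingQueue<JvmSnapshot> queue = new LinkedBlockingQueue<>();

    JvmSnapshot collect() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        long gcCount = 0;
        long gcTime = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both getters return -1 when the value is undefined for a collector.
            gcCount += Math.max(0, gc.getCollectionCount());
            gcTime += Math.max(0, gc.getCollectionTime());
        }
        return new JvmSnapshot(
                System.currentTimeMillis(),
                memory.getHeapMemoryUsage().getUsed(),
                memory.getNonHeapMemoryUsage().getUsed(),
                gcCount,
                gcTime,
                ManagementFactory.getThreadMXBean().getThreadCount(),
                ManagementFactory.getClassLoadingMXBean().getLoadedClassCount());
    }

    // Same shape as JVMService.run(): collect, offer, never let an exception escape.
    @Override
    public void run() {
        try {
            queue.offer(collect());
        } catch (RuntimeException e) {
            System.err.println("Collect JVM info fail: " + e);
        }
    }
}
```

Each call to run() enqueues one snapshot. A separate consumer is expected to drain the queue, which is the role JVMMetricsSender plays in the agent.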

```java
@Override
public void run() {
    long currentTimeMillis = System.currentTimeMillis();
    try {
        // Assemble one JVMMetric snapshot from the individual providers
        JVMMetric.Builder jvmBuilder = JVMMetric.newBuilder();
        jvmBuilder.setTime(currentTimeMillis);
        jvmBuilder.setCpu(CPUProvider.INSTANCE.getCpuMetric());
        jvmBuilder.addAllMemory(MemoryProvider.INSTANCE.getMemoryMetricList());
        jvmBuilder.addAllMemoryPool(MemoryPoolProvider.INSTANCE.getMemoryPoolMetricsList());
        jvmBuilder.addAllGc(GCProvider.INSTANCE.getGCList());
        jvmBuilder.setThread(ThreadProvider.INSTANCE.getThreadMetrics());
        jvmBuilder.setClazz(ClassProvider.INSTANCE.getClassMetrics());
        JVMMetric jvmMetric = jvmBuilder.build();
        // Hand the snapshot to JVMMetricsSender's in-memory queue
        sender.offer(jvmMetric);
        // refresh cpu usage percent
        cpuUsagePercent = jvmMetric.getCpu().getUsagePercent();
    } catch (Exception e) {
        LOGGER.error(e, "Collect JVM info fail.");
    }
}
```

Sending data

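- The thread pool also runs JVMMetricsSender's run method once per second. Once the gRPC connection is established, it drains the queue and sends the data to the server over gRPC. It then handles the commands the server returns.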
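The agent's producer/consumer split can be sketched with plain JDK types. One queue is shared between the two sides. A consumer tick moves everything queued into a local list with drainTo and ships it as one batch. A ScheduledExecutorService runs that tick at a fixed rate. BatchingSender is a hypothetical stand-in for JVMMetricsSender; likewise the connected flag stands in for GRPCChannelStatus.CONNECTED and the upstream callback for the gRPC stub. In this sketch the queue is unbounded.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical stand-in for JVMMetricsSender.
final class BatchingSender<T> {
    private final BlockingQueue<T> queue = new LinkedBlockingQueue<>(); // unbounded in this sketch
    private final Consumer<List<T>> upstream;   // stands in for the gRPC stub's collect(...)
    private volatile boolean connected = true;  // stands in for GRPCChannelStatus.CONNECTED

    BatchingSender(Consumer<List<T>> upstream) {
        this.upstream = upstream;
    }

    void offer(T item) {
        queue.offer(item);
    }

    void setConnected(boolean connected) {
        this.connected = connected;
    }

    // One consumer tick: drain everything queued so far and send it as a single batch.
    void runOnce() {
        if (!connected) {
            return; // leave the data queued until the channel is back
        }
        List<T> buffer = new ArrayList<>();
        queue.drainTo(buffer);
        if (buffer.isEmpty()) {
            return;
        }
        try {
            upstream.accept(buffer);
        } catch (RuntimeException e) {
            // The batch is dropped, as in the original run() method.
            System.err.println("send metrics failed: " + e);
        }
    }

    // Run the tick at a fixed rate on the given scheduler.
    ScheduledFuture<?> start(ScheduledExecutorService scheduler, long periodSeconds) {
        return scheduler.scheduleAtFixedRate(this::runOnce, 0, periodSeconds, TimeUnit.SECONDS);
    }
}
```

A typical call is sender.start(Executors.newSingleThreadScheduledExecutor(), 1). Catching the exception inside the tick matters. If a task scheduled with scheduleAtFixedRate throws, all later runs are suppressed. That is presumably why the agent wraps its tasks in RunnableWithExceptionProtection.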

```java
@Override
public void run() {
    if (status == GRPCChannelStatus.CONNECTED) {
        try {
            JVMMetricCollection.Builder builder = JVMMetricCollection.newBuilder();
            LinkedList<JVMMetric> buffer = new LinkedList<>();
            // Move everything currently queued into a local buffer
            queue.drainTo(buffer);
            if (buffer.size() > 0) {
                builder.addAllMetrics(buffer);
                builder.setService(Config.Agent.SERVICE_NAME);
                builder.setServiceInstance(Config.Agent.INSTANCE_NAME);
                // Send the data to the server
                Commands commands = stub.withDeadlineAfter(GRPC_UPSTREAM_TIMEOUT, TimeUnit.SECONDS)
                                        .collect(builder.build());
                // Handle the commands returned by the server
                ServiceManager.INSTANCE.findService(CommandService.class).receiveCommand(commands);
            }
        } catch (Throwable t) {
            LOGGER.error(t, "send JVM metrics to Collector fail.");
            ServiceManager.INSTANCE.findService(GRPCChannelManager.class).reportError(t);
        }
    }
}
```

Server: receiving and processing data

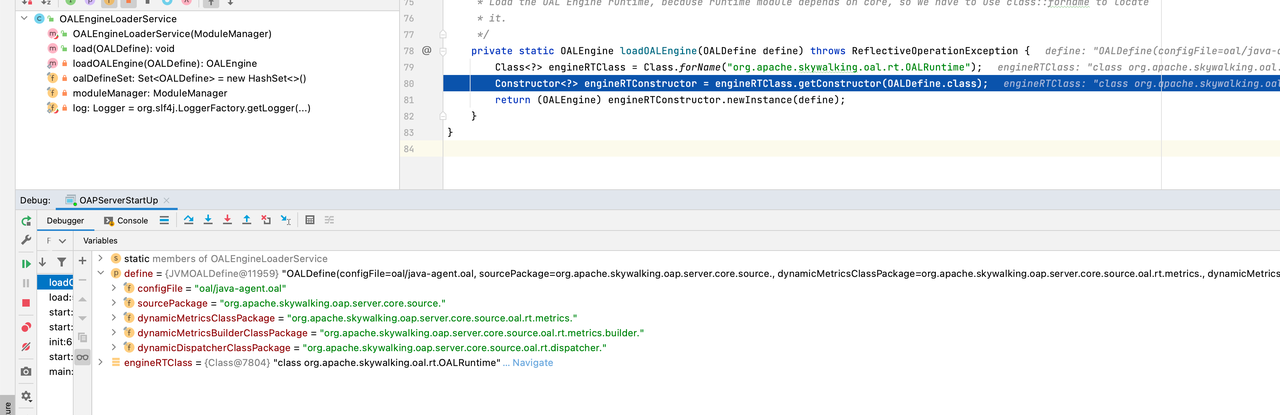

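While debugging the project, I found that the server-side classes that process the JVM metrics reported by the Java agent are loaded dynamically. Digging further, they turn out to be generated at runtime from OAL scripts and predefined code templates, with the help of Antlr4. So this section first introduces the dynamically generated classes in the SkyWalking server.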

Dynamically generated classes

OAL (Observability Analysis Language) is a custom language built with Antlr4. It is used to stream-process the metrics collected from services, service instances, endpoints, and so on.

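- Server startup also relies on Java's SPI mechanism. At startup, ModuleManager's init method loads every subclass of ModuleDefine (module definition) and ModuleProvider (module provider) into the JVM. It then runs their prepare and start methods. These classes include JVMModule.java and JVMModuleProvider.java.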
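The prepare-then-start contract of ModuleManager.init can be reduced to a small sketch with three rules:

- Only modules named in the configuration are prepared.
- A configured module that was never discovered aborts startup.
- Nothing starts until every module has been prepared.

AppModule and SimpleModuleManager are hypothetical, and they collapse ModuleDefine and ModuleProvider into one type. The real code discovers modules with ServiceLoader and lets BootstrapFlow order the start calls by module dependencies. This sketch skips that ordering.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical module abstraction (ModuleDefine + ModuleProvider collapsed into one).
interface AppModule {
    String name();
    void prepare();
    void start();
}

final class SimpleModuleManager {
    private final Map<String, AppModule> loaded = new LinkedHashMap<>();

    void init(List<String> enabledNames, Iterable<? extends AppModule> discovered) {
        Set<String> pending = new HashSet<>(enabledNames);
        // Prepare stage: only modules enabled in the configuration are prepared.
        for (AppModule module : discovered) {
            if (pending.remove(module.name())) {
                module.prepare();
                loaded.put(module.name(), module);
            }
        }
        // An enabled module that nobody provided aborts startup.
        if (!pending.isEmpty()) {
            throw new IllegalStateException(pending + " missing.");
        }
        // Start stage: discovery order here; the real BootstrapFlow sorts by dependencies.
        loaded.values().forEach(AppModule::start);
    }
}
```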

```java
public void init(
    ApplicationConfiguration applicationConfiguration) throws ModuleNotFoundException, ProviderNotFoundException, ServiceNotProvidedException, CycleDependencyException, ModuleConfigException, ModuleStartException {
    String[] moduleNames = applicationConfiguration.moduleList();
    ServiceLoader<ModuleDefine> moduleServiceLoader = ServiceLoader.load(ModuleDefine.class);
    ServiceLoader<ModuleProvider> moduleProviderLoader = ServiceLoader.load(ModuleProvider.class);

    HashSet<String> moduleSet = new HashSet<>(Arrays.asList(moduleNames));
    for (ModuleDefine module : moduleServiceLoader) {
        // Iterate over all module definitions; for each enabled one, run prepare on its provider
        if (moduleSet.contains(module.name())) {
            module.prepare(this, applicationConfiguration.getModuleConfiguration(module.name()), moduleProviderLoader);
            loadedModules.put(module.name(), module);
            moduleSet.remove(module.name());
        }
    }
    // Finish prepare stage
    isInPrepareStage = false;

    if (moduleSet.size() > 0) {
        throw new ModuleNotFoundException(moduleSet.toString() + " missing.");
    }

    BootstrapFlow bootstrapFlow = new BootstrapFlow(loadedModules);

    // Call start on the loaded modules
    bootstrapFlow.start(this);
    bootstrapFlow.notifyAfterCompleted();
}
```

- JVMModuleProvider's start method gets OALEngineLoaderService from CoreModuleProvider and calls its load method, which generates the dynamic classes:

  - The start method of JVMModuleProvider:

```java
@Override
public void start() throws ModuleStartException {
    // load official analysis
    getManager().find(CoreModule.NAME)
                .provider()
                .getService(OALEngineLoaderService.class)
                .load(JVMOALDefine.INSTANCE);

    GRPCHandlerRegister grpcHandlerRegister = getManager().find(SharingServerModule.NAME)
                                                          .provider()
                                                          .getService(GRPCHandlerRegister.class);
    JVMMetricReportServiceHandler jvmMetricReportServiceHandler = new JVMMetricReportServiceHandler(getManager());
    grpcHandlerRegister.addHandler(jvmMetricReportServiceHandler);
    grpcHandlerRegister.addHandler(new JVMMetricReportServiceHandlerCompat(jvmMetricReportServiceHandler));
}

public class JVMOALDefine extends OALDefine {
    public static final JVMOALDefine INSTANCE = new JVMOALDefine();

    private JVMOALDefine() {
        super(
            "oal/java-agent.oal",
            "org.apache.skywalking.oap.server.core.source"
        );
    }
}
```

- OALEngineLoaderService's load method mainly does the following:

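  - Uses reflection to obtain an OALRuntime instance, based on the configFile, sourcePackage and other settings defined in the OALDefine.
  - Sets the corresponding StreamListener, DispatcherListener, and StorageBuilderFactory.
  - Calls OALRuntime's start method, which generates the dynamic classes.
  - Notifies all listeners. The generated Metrics classes are added to MetricsStreamProcessor, which creates the corresponding tables and worker pipelines. The SourceDispatcher implementations are added to DispatcherManager.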

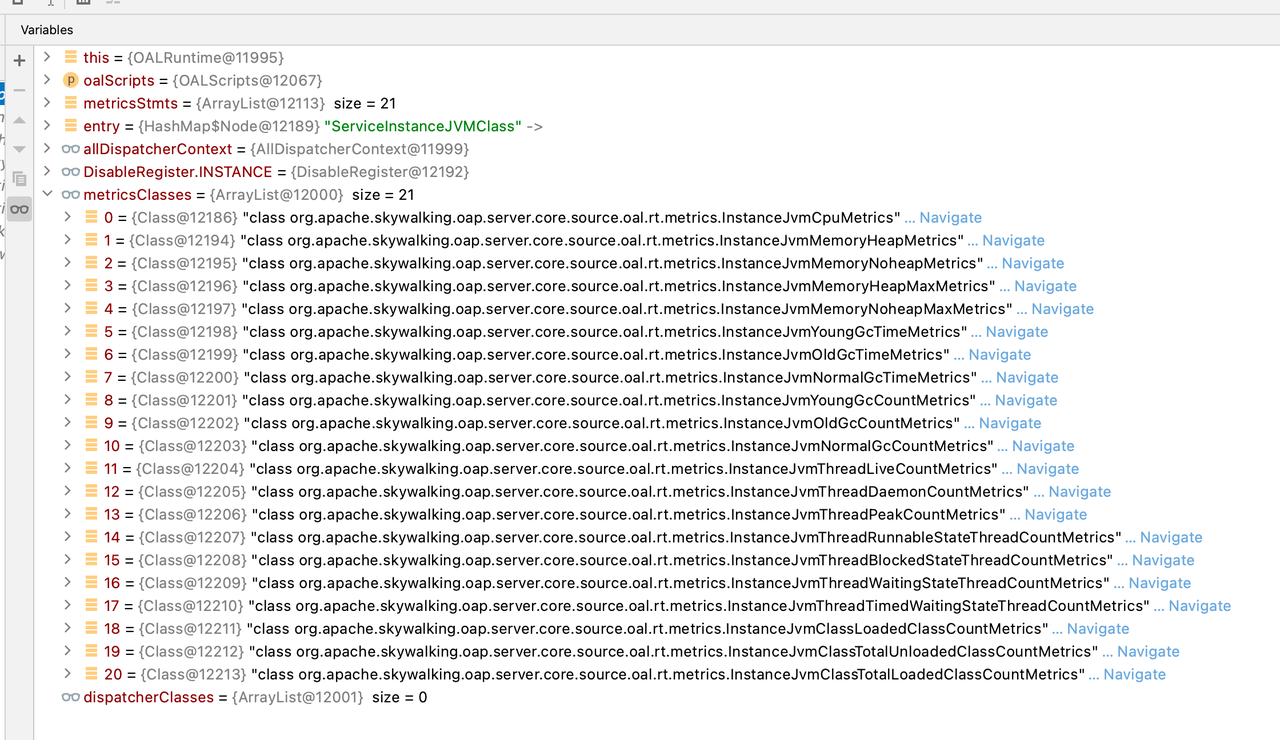

```java
public void load(OALDefine define) throws ModuleStartException {
    if (oalDefineSet.contains(define)) {
        // each oal define will only be activated once
        return;
    }
    try {
        // Obtain an OALRuntime instance via reflection, using the configFile, sourcePackage, etc. from the OALDefine
        OALEngine engine = loadOALEngine(define);
        // Set the StreamListener, DispatcherListener and StorageBuilderFactory
        StreamAnnotationListener streamAnnotationListener = new StreamAnnotationListener(moduleManager);
        engine.setStreamListener(streamAnnotationListener);
        engine.setDispatcherListener(moduleManager.find(CoreModule.NAME)
                                                  .provider()
                                                  .getService(SourceReceiver.class)
                                                  .getDispatcherDetectorListener());
        engine.setStorageBuilderFactory(moduleManager.find(StorageModule.NAME)
                                                     .provider()
                                                     .getService(StorageBuilderFactory.class));
        // Call OALRuntime.start to generate the dynamic classes
        engine.start(OALEngineLoaderService.class.getClassLoader());
        // Notify all listeners: generated Metrics go to MetricsStreamProcessor, SourceDispatchers to DispatcherManager
        engine.notifyAllListeners();

        oalDefineSet.add(define);
    } catch (ReflectiveOperationException | OALCompileException e) {
        throw new ModuleStartException(e.getMessage(), e);
    }
}

// Implemented by OALRuntime (the OALEngine obtained above)
@Override
public void notifyAllListeners() throws ModuleStartException {
    for (Class metricsClass : metricsClasses) {
        try {
            streamAnnotationListener.notify(metricsClass);
        } catch (StorageException e) {
            throw new ModuleStartException(e.getMessage(), e);
        }
    }
    for (Class dispatcherClass : dispatcherClasses) {
        try {
            dispatcherDetectorListener.addIfAsSourceDispatcher(dispatcherClass);
        } catch (Exception e) {
            throw new ModuleStartException(e.getMessage(), e);
        }
    }
}
```
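The load method above follows a simple contract. It compiles each definition at most once. It then announces every generated class to its listener. This can be sketched without SkyWalking types. OnceLoader, Generated and the compiler function are hypothetical stand-ins for OALEngineLoaderService, the generated class lists, and OALRuntime.start respectively. As in the original, a definition is recorded as active only after the listeners succeed, so a failed load can be retried.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical result of compiling one definition: the generated classes.
record Generated(List<Class<?>> metricsClasses, List<Class<?>> dispatcherClasses) {}

// Hypothetical stand-in for OALEngineLoaderService.load().
final class OnceLoader<D> {
    private final Set<D> activated = new HashSet<>();
    private final Function<D, Generated> compiler;        // ~ OALRuntime.start()
    private final Consumer<Class<?>> streamListener;      // ~ StreamAnnotationListener.notify
    private final Consumer<Class<?>> dispatcherListener;  // ~ addIfAsSourceDispatcher

    OnceLoader(Function<D, Generated> compiler,
               Consumer<Class<?>> streamListener,
               Consumer<Class<?>> dispatcherListener) {
        this.compiler = compiler;
        this.streamListener = streamListener;
        this.dispatcherListener = dispatcherListener;
    }

    /** Returns true if the definition was compiled now, false if it was already active. */
    synchronized boolean load(D define) {
        if (activated.contains(define)) {
            return false; // each definition is activated only once
        }
        Generated generated = compiler.apply(define);
        generated.metricsClasses().forEach(streamListener);
        generated.dispatcherClasses().forEach(dispatcherListener);
        // Recorded only after every listener succeeded, so a failed load can be retried.
        activated.add(define);
        return true;
    }
}
```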


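- OALRuntime's start method mainly does the following:
  - Reads the java-agent.oal file from the location given in JVMOALDefine.
  - Creates the OAL script parser and obtains the parsed OALScripts.
  - Calls generateClassAtRuntime(oalScripts). This generates the metrics classes and dispatcher classes at runtime from the code templates in oap-server/oal-rt/src/main/resources/code-templates.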

```java
public void start(ClassLoader currentClassLoader) throws ModuleStartException, OALCompileException {
    if (!IS_RT_TEMP_FOLDER_INIT_COMPLETED) {
        prepareRTTempFolder();
        IS_RT_TEMP_FOLDER_INIT_COMPLETED = true;
    }

    this.currentClassLoader = currentClassLoader;
    Reader read;

    try {
        // Read the OAL file
        read = ResourceUtils.read(oalDefine.getConfigFile());
    } catch (FileNotFoundException e) {
        throw new ModuleStartException("Can't locate " + oalDefine.getConfigFile(), e);
    }

    OALScripts oalScripts;
    try {
        // Create the OAL script parser
        ScriptParser scriptParser = ScriptParser.createFromFile(read, oalDefine.getSourcePackage());
        // Parse the script into OALScripts
        oalScripts = scriptParser.parse();
    } catch (IOException e) {
        throw new ModuleStartException("OAL script parse analysis failure.", e);
    }

    // Generate the classes at runtime
    this.generateClassAtRuntime(oalScripts);
}

// Create the OAL script parser
public static ScriptParser createFromFile(Reader scriptReader, String sourcePackage) throws IOException {
    ScriptParser parser = new ScriptParser();
    parser.lexer = new OALLexer(CharStreams.fromReader(scriptReader));
    parser.sourcePackage = sourcePackage;
    return parser;
}

// Parse the script into OALScripts
public OALScripts parse() throws IOException {
    OALScripts scripts = new OALScripts();

    CommonTokenStream tokens = new CommonTokenStream(lexer);

    OALParser parser = new OALParser(tokens);

    ParseTree tree = parser.root();
    ParseTreeWalker walker = new ParseTreeWalker();

    walker.walk(new OALListener(scripts, sourcePackage), tree);

    return scripts;
}

private void generateClassAtRuntime(OALScripts oalScripts) throws OALCompileException {
    List<AnalysisResult> metricsStmts = oalScripts.getMetricsStmts();
    metricsStmts.forEach(this::buildDispatcherContext);

    for (AnalysisResult metricsStmt : metricsStmts) {
        metricsClasses.add(generateMetricsClass(metricsStmt));
        generateMetricsBuilderClass(metricsStmt);
    }

    for (Map.Entry<String, DispatcherContext> entry : allDispatcherContext.getAllContext().entrySet()) {
        dispatcherClasses.add(generateDispatcherClass(entry.getKey(), entry.getValue()));
    }

    oalScripts.getDisableCollection().getAllDisableSources().forEach(disable -> {
        DisableRegister.INSTANCE.add(disable);
    });
}
```

Examples of dynamically generated classes (obtained by setting an environment variable):

ServiceInstanceJVMGCDispatcher.class

```java
public class ServiceInstanceJVMGCDispatcher implements SourceDispatcher<ServiceInstanceJVMGC> {
    private void doInstanceJvmYoungGcTime(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.NEW)) {
            InstanceJvmYoungGcTimeMetrics var2 = new InstanceJvmYoungGcTimeMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getTime());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    private void doInstanceJvmOldGcTime(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.OLD)) {
            InstanceJvmOldGcTimeMetrics var2 = new InstanceJvmOldGcTimeMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getTime());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    private void doInstanceJvmNormalGcTime(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.NORMAL)) {
            InstanceJvmNormalGcTimeMetrics var2 = new InstanceJvmNormalGcTimeMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getTime());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    private void doInstanceJvmYoungGcCount(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.NEW)) {
            InstanceJvmYoungGcCountMetrics var2 = new InstanceJvmYoungGcCountMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getCount());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    private void doInstanceJvmOldGcCount(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.OLD)) {
            InstanceJvmOldGcCountMetrics var2 = new InstanceJvmOldGcCountMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getCount());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    private void doInstanceJvmNormalGcCount(ServiceInstanceJVMGC var1) {
        if ((new StringMatch()).match(var1.getPhase(), GCPhase.NORMAL)) {
            InstanceJvmNormalGcCountMetrics var2 = new InstanceJvmNormalGcCountMetrics();
            var2.setTimeBucket(var1.getTimeBucket());
            var2.setEntityId(var1.getEntityId());
            var2.setServiceId(var1.getServiceId());
            var2.combine(var1.getCount());
            MetricsStreamProcessor.getInstance().in(var2);
        }
    }

    // One source is fanned out to every metric derived from it
    public void dispatch(ISource var1) {
        ServiceInstanceJVMGC var2 = (ServiceInstanceJVMGC) var1;
        this.doInstanceJvmYoungGcTime(var2);
        this.doInstanceJvmOldGcTime(var2);
        this.doInstanceJvmNormalGcTime(var2);
        this.doInstanceJvmYoungGcCount(var2);
        this.doInstanceJvmOldGcCount(var2);
        this.doInstanceJvmNormalGcCount(var2);
    }

    public ServiceInstanceJVMGCDispatcher() {
    }
}
```

InstanceJvmOldGcCountMetrics.class

```java
@Stream(
    name = "instance_jvm_old_gc_count",
    scopeId = 11,
    builder = InstanceJvmOldGcCountMetricsBuilder.class,
    processor = MetricsStreamProcessor.class
)
public class InstanceJvmOldGcCountMetrics extends SumMetrics implements WithMetadata {
    @Column(
        columnName = "entity_id",
        length = 512
    )
    private String entityId;
    @Column(
        columnName = "service_id",
        length = 256
    )
    private String serviceId;

    public InstanceJvmOldGcCountMetrics() {
    }

    public String getEntityId() {
        return this.entityId;
    }

    public void setEntityId(String var1) {
        this.entityId = var1;
    }

    public String getServiceId() {
        return this.serviceId;
    }

    public void setServiceId(String var1) {
        this.serviceId = var1;
    }

    // Row id: "<timeBucket>_<entityId>"
    protected String id0() {
        StringBuilder var1 = new StringBuilder(String.valueOf(this.getTimeBucket()));
        var1.append("_").append(this.entityId);
        return var1.toString();
    }

    public int hashCode() {
        byte var1 = 17;
        int var2 = 31 * var1 + this.entityId.hashCode();
        var2 = 31 * var2 + (int) this.getTimeBucket();
        return var2;
    }

    public int remoteHashCode() {
        byte var1 = 17;
        int var2 = 31 * var1 + this.entityId.hashCode();
        return var2;
    }

    public boolean equals(Object var1) {
        if (this == var1) {
            return true;
        } else if (var1 == null) {
            return false;
        } else if (this.getClass() != var1.getClass()) {
            return false;
        } else {
            InstanceJvmOldGcCountMetrics var2 = (InstanceJvmOldGcCountMetrics) var1;
            if (!this.entityId.equals(var2.entityId)) {
                return false;
            } else {
                return this.getTimeBucket() == var2.getTimeBucket();
            }
        }
    }

    public Builder serialize() {
        Builder var1 = RemoteData.newBuilder();
        var1.addDataStrings(this.getEntityId());
        var1.addDataStrings(this.getServiceId());
        var1.addDataLongs(this.getValue());
        var1.addDataLongs(this.getTimeBucket());
        return var1;
    }

    public void deserialize(RemoteData var1) {
        this.setEntityId(var1.getDataStrings(0));
        this.setServiceId(var1.getDataStrings(1));
        this.setValue(var1.getDataLongs(0));
        this.setTimeBucket(var1.getDataLongs(1));
    }

    public MetricsMetaInfo getMeta() {
        return new MetricsMetaInfo("instance_jvm_old_gc_count", 11, this.entityId);
    }

    public Metrics toHour() {
        InstanceJvmOldGcCountMetrics var1 = new InstanceJvmOldGcCountMetrics();
        var1.setEntityId(this.getEntityId());
        var1.setServiceId(this.getServiceId());
        var1.setValue(this.getValue());
        var1.setTimeBucket(this.toTimeBucketInHour());
        return var1;
    }

    public Metrics toDay() {
        InstanceJvmOldGcCountMetrics var1 = new InstanceJvmOldGcCountMetrics();
        var1.setEntityId(this.getEntityId());
        var1.setServiceId(this.getServiceId());
        var1.setValue(this.getValue());
        var1.setTimeBucket(this.toTimeBucketInDay());
        return var1;
    }
}
```
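The generated InstanceJvmOldGcCountMetrics boils down to three ideas:

- Each row has the id timeBucket_entityId.
- combine adds together values that share an id.
- toHour and toDay produce copies with a coarser time bucket.

The hypothetical classes SumMetric and AvgMetric model these ideas. AvgMetric mirrors the summation, count and value_ columns of the table in the storage model section. The sketch assumes minute buckets are yyyyMMddHHmm longs, so coarser buckets come from integer division. That encoding is an assumption here, not code taken from SkyWalking's Metrics class.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical minimal model of a generated SumMetrics subclass.
// Assumption: minute buckets are yyyyMMddHHmm longs (e.g. 202205182041).
final class SumMetric {
    final String entityId;
    final long timeBucket;
    long value;

    SumMetric(String entityId, long timeBucket) {
        this.entityId = entityId;
        this.timeBucket = timeBucket;
    }

    void combine(long delta) {
        value += delta;
    }

    // Same layout as the generated id0(): "<timeBucket>_<entityId>".
    String id() {
        return timeBucket + "_" + entityId;
    }

    // Both conversions assume this metric holds a minute bucket.
    SumMetric toHour() { return copyWithBucket(timeBucket / 100); }    // 202205182041 -> 2022051820
    SumMetric toDay() { return copyWithBucket(timeBucket / 10_000); }  // 202205182041 -> 20220518

    private SumMetric copyWithBucket(long bucket) {
        SumMetric copy = new SumMetric(entityId, bucket);
        copy.value = value;
        return copy;
    }

    // Metrics sharing an id are merged into one row by combining their values.
    static Map<String, SumMetric> merge(List<SumMetric> metrics) {
        Map<String, SumMetric> rows = new LinkedHashMap<>();
        for (SumMetric m : metrics) {
            rows.computeIfAbsent(m.id(), id -> new SumMetric(m.entityId, m.timeBucket)).combine(m.value);
        }
        return rows;
    }
}

// Hypothetical average metric mirroring the summation / count / value_ columns.
final class AvgMetric {
    long summation;
    long count;

    void combine(long sample) {
        summation += sample;
        count++;
    }

    long value() {
        return count == 0 ? 0 : summation / count; // integer division
    }
}
```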


Receiving data

The agent and the server communicate over gRPC. The corresponding proto file is JVMMetric.proto:

```protobuf
syntax = "proto3";

package skywalking.v3;

option java_multiple_files = true;
option java_package = "org.apache.skywalking.apm.network.language.agent.v3";
option csharp_namespace = "SkyWalking.NetworkProtocol.V3";
option go_package = "skywalking.apache.org/repo/goapi/collect/language/agent/v3";

import "common/Common.proto";

// Define the JVM metrics report service.
service JVMMetricReportService {
    rpc collect (JVMMetricCollection) returns (Commands) {
    }
}

message JVMMetricCollection {
    repeated JVMMetric metrics = 1;
    string service = 2;
    string serviceInstance = 3;
}

message JVMMetric {
    int64 time = 1;
    CPU cpu = 2;
    repeated Memory memory = 3;
    repeated MemoryPool memoryPool = 4;
    repeated GC gc = 5;
    Thread thread = 6;
    Class clazz = 7;
}

message Memory {
    bool isHeap = 1;
    int64 init = 2;
    int64 max = 3;
    int64 used = 4;
    int64 committed = 5;
}

message MemoryPool {
    PoolType type = 1;
    int64 init = 2;
    int64 max = 3;
    int64 used = 4;
    int64 committed = 5;
}

enum PoolType {
    CODE_CACHE_USAGE = 0;
    NEWGEN_USAGE = 1;
    OLDGEN_USAGE = 2;
    SURVIVOR_USAGE = 3;
    PERMGEN_USAGE = 4;
    METASPACE_USAGE = 5;
}

message GC {
    GCPhase phase = 1;
    int64 count = 2;
    int64 time = 3;
}

enum GCPhase {
    NEW = 0;
    OLD = 1;
    NORMAL = 2; // The type of GC doesn't have new and old phases, like Z Garbage Collector (ZGC)
}

// See: https://docs.oracle.com/javase/8/docs/api/java/lang/management/ThreadMXBean.html
message Thread {
    int64 liveCount = 1;
    int64 daemonCount = 2;
    int64 peakCount = 3;
    int64 runnableStateThreadCount = 4;
    int64 blockedStateThreadCount = 5;
    int64 waitingStateThreadCount = 6;
    int64 timedWaitingStateThreadCount = 7;
}

// See: https://docs.oracle.com/javase/8/docs/api/java/lang/management/ClassLoadingMXBean.html
message Class {
    int64 loadedClassCount = 1;
    int64 totalUnloadedClassCount = 2;
    int64 totalLoadedClassCount = 3;
}
```

A sample request (protobuf text format):

```text
metrics {
  time: 1652800359303
  cpu {
    usagePercent: 0.03829805239617419
  }
  memory {
    isHeap: true
    init: 536870912
    max: 7635730432
    used: 301977016
    committed: 850395136
  }
  memory {
    init: 2555904
    max: -1
    used: 81238712
    committed: 84606976
  }
  memoryPool {
    init: 2555904
    max: 251658240
    used: 22219264
    committed: 22478848
  }
  memoryPool {
    type: METASPACE_USAGE
    max: -1
    used: 52060512
    committed: 54657024
  }
  memoryPool {
    type: PERMGEN_USAGE
    max: 1073741824
    used: 6958936
    committed: 7471104
  }
  memoryPool {
    type: NEWGEN_USAGE
    init: 134742016
    max: 2845310976
    used: 276025736
    committed: 588251136
  }
  memoryPool {
    type: SURVIVOR_USAGE
    init: 22020096
    max: 11010048
    used: 10771496
    committed: 11010048
  }
  memoryPool {
    type: OLDGEN_USAGE
    init: 358088704
    max: 5726797824
    used: 15179784
    committed: 251133952
  }
  gc {
  }
  gc {
    phase: OLD
  }
  thread {
    liveCount: 45
    daemonCount: 41
    peakCount: 46
    runnableStateThreadCount: 17
    waitingStateThreadCount: 14
    timedWaitingStateThreadCount: 14
  }
  clazz {
    loadedClassCount: 9685
    totalLoadedClassCount: 9685
  }
}
service: "Your_ApplicationName"
serviceInstance: "d7a7de5f385149dfb49b8d23e8b6fbc9@10.4.77.148"
```

Fields that hold their proto3 default value are not printed, which explains several gaps in the sample:

- The first memoryPool has type CODE_CACHE_USAGE (0).
- The second memory entry is non-heap (isHeap: false).
- The first gc entry has phase NEW with zero count and time.

- The entry point for receiving data is org.apache.skywalking.oap.server.receiver.jvm.provider.handler.JVMMetricReportServiceHandler#collect. This method receives the JVMMetricCollection and converts it to a Builder. It then iterates over the metrics and calls jvmSourceDispatcher.sendMetric for each one, which sends the data into the in-memory queue.

```java
@Override
public void collect(JVMMetricCollection request, StreamObserver<Commands> responseObserver) {
    if (log.isDebugEnabled()) {
        log.debug(
            "receive the jvm metrics from service instance, name: {}, instance: {}",
            request.getService(),
            request.getServiceInstance()
        );
    }
    final JVMMetricCollection.Builder builder = request.toBuilder();
    // Format the service and instance names
    builder.setService(namingControl.formatServiceName(builder.getService()));
    builder.setServiceInstance(namingControl.formatInstanceName(builder.getServiceInstance()));

    // Dispatch each JVMMetric in the batch
    builder.getMetricsList().forEach(jvmMetric -> {
        jvmSourceDispatcher.sendMetric(builder.getService(), builder.getServiceInstance(), jvmMetric);
    });

    // Reply with an empty Commands message
    responseObserver.onNext(Commands.newBuilder().build());
    responseObserver.onCompleted();
}
```

- jvmSourceDispatcher.sendMetric calls SourceReceiverImpl's receive method. That method looks up the matching Dispatcher in dispatcherMap by the Source's scope. The JVM-related dispatchers are generated from OAL, as described above.

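- The ServiceInstanceJVMGCDispatcher class shown above ends up calling MetricsStreamProcessor's in method. This puts the data into the custom blocking queue org.apache.skywalking.oap.server.library.datacarrier.buffer.Channels, a wrapper around ArrayBlockingQueue. At this point the server has essentially finished receiving the data.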
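The routing in these two steps can be sketched as two parts. The first is a map from scope id to dispatchers. The second is a dispatcher that fans one source out into several metrics, filtered by GC phase. That is the same shape as the generated ServiceInstanceJVMGCDispatcher, though only the count metrics are modeled. All names here are hypothetical: Source, Dispatcher, DispatcherRegistry, GcSource, GcDispatcher, and the SCOPE constant. The emitted list stands in for MetricsStreamProcessor.getInstance().in(...).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical types; only the routing shape follows the article.
interface Source {
    int scope();
}

interface Dispatcher {
    void dispatch(Source source);
}

enum Phase { NEW, OLD, NORMAL }

record GcSource(String entityId, Phase phase, long count, long time) implements Source {
    static final int SCOPE = 11; // hypothetical scope id for this source type

    @Override
    public int scope() {
        return SCOPE;
    }
}

// Routes every source to the dispatchers registered for its scope.
final class DispatcherRegistry {
    private final Map<Integer, List<Dispatcher>> byScope = new HashMap<>();

    void register(int scope, Dispatcher dispatcher) {
        byScope.computeIfAbsent(scope, k -> new ArrayList<>()).add(dispatcher);
    }

    void receive(Source source) {
        for (Dispatcher d : byScope.getOrDefault(source.scope(), List.of())) {
            d.dispatch(source);
        }
    }
}

// Hand-written analogue of the generated GC dispatcher: one branch per metric,
// each filtering on the GC phase before emitting a value.
final class GcDispatcher implements Dispatcher {
    final List<String> emitted = new ArrayList<>(); // stands in for MetricsStreamProcessor.in(...)

    @Override
    public void dispatch(Source source) {
        GcSource gc = (GcSource) source;
        switch (gc.phase()) {
            case NEW -> emitted.add("young_gc_count=" + gc.count());
            case OLD -> emitted.add("old_gc_count=" + gc.count());
            case NORMAL -> emitted.add("normal_gc_count=" + gc.count());
        }
    }
}
```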

Processing data

On the server side, processing mainly means persisting the data held in the in-memory queues to the storage system.

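- After OAL generates the classes (see above), MetricsStreamProcessor's create method builds the workers and the worker pipeline for each metric. The pipeline includes MetricsPersistentWorkers for minute-, hour- and day-level data. The hour and day workers are only created when down-sampling is supported and enabled. The method also calls modelSetter.add, which notifies the table-creation listener to create the database tables. Table columns are derived from the fields of the generated class and its superclasses.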

```java
public void create(ModuleDefineHolder moduleDefineHolder,
                   StreamDefinition stream,
                   Class<? extends Metrics> metricsClass) throws StorageException {
    final StorageBuilderFactory storageBuilderFactory = moduleDefineHolder.find(StorageModule.NAME)
                                                                          .provider()
                                                                          .getService(StorageBuilderFactory.class);
    final Class<? extends StorageBuilder> builder = storageBuilderFactory.builderOf(
        metricsClass, stream.getBuilder());

    StorageDAO storageDAO = moduleDefineHolder.find(StorageModule.NAME).provider().getService(StorageDAO.class);
    IMetricsDAO metricsDAO;
    try {
        metricsDAO = storageDAO.newMetricsDao(builder.getDeclaredConstructor().newInstance());
    } catch (InstantiationException | IllegalAccessException | NoSuchMethodException | InvocationTargetException e) {
        throw new UnexpectedException("Create " + stream.getBuilder().getSimpleName() + " metrics DAO failure.", e);
    }

    ModelCreator modelSetter = moduleDefineHolder.find(CoreModule.NAME).provider().getService(ModelCreator.class);
    DownSamplingConfigService configService = moduleDefineHolder.find(CoreModule.NAME)
                                                                .provider()
                                                                .getService(DownSamplingConfigService.class);

    MetricsPersistentWorker hourPersistentWorker = null;
    MetricsPersistentWorker dayPersistentWorker = null;

    MetricsTransWorker transWorker = null;

    final MetricsExtension metricsExtension = metricsClass.getAnnotation(MetricsExtension.class);
    /**
     * All metrics default are `supportDownSampling` and `insertAndUpdate`, unless it has explicit definition.
     */
    boolean supportDownSampling = true;
    boolean supportUpdate = true;
    boolean timeRelativeID = true;
    if (metricsExtension != null) {
        supportDownSampling = metricsExtension.supportDownSampling();
        supportUpdate = metricsExtension.supportUpdate();
        timeRelativeID = metricsExtension.timeRelativeID();
    }
    if (supportDownSampling) {
        if (configService.shouldToHour()) {
            Model model = modelSetter.add(
                metricsClass, stream.getScopeId(), new Storage(stream.getName(), timeRelativeID, DownSampling.Hour),
                false
            );
            hourPersistentWorker = downSamplingWorker(moduleDefineHolder, metricsDAO, model, supportUpdate);
        }
        if (configService.shouldToDay()) {
            Model model = modelSetter.add(
                metricsClass, stream.getScopeId(), new Storage(stream.getName(), timeRelativeID, DownSampling.Day),
                false
            );
            dayPersistentWorker = downSamplingWorker(moduleDefineHolder, metricsDAO, model, supportUpdate);
        }

        transWorker = new MetricsTransWorker(
            moduleDefineHolder, hourPersistentWorker, dayPersistentWorker);
    }

    Model model = modelSetter.add(
        metricsClass, stream.getScopeId(), new Storage(stream.getName(), timeRelativeID, DownSampling.Minute),
        false
    );
    MetricsPersistentWorker minutePersistentWorker = minutePersistentWorker(
        moduleDefineHolder, metricsDAO, model, transWorker, supportUpdate);

    String remoteReceiverWorkerName = stream.getName() + "_rec";
    IWorkerInstanceSetter workerInstanceSetter = moduleDefineHolder.find(CoreModule.NAME)
                                                                   .provider()
                                                                   .getService(IWorkerInstanceSetter.class);
    workerInstanceSetter.put(remoteReceiverWorkerName, minutePersistentWorker, metricsClass);

    MetricsRemoteWorker remoteWorker = new MetricsRemoteWorker(moduleDefineHolder, remoteReceiverWorkerName);
    MetricsAggregateWorker aggregateWorker = new MetricsAggregateWorker(
        moduleDefineHolder, remoteWorker, stream.getName(), l1FlushPeriod);

    entryWorkers.put(metricsClass, aggregateWorker);
}
```
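The resulting chain can be sketched as below. Minute-level persistence always exists. Hour and day persistence exist only when down-sampling is supported and enabled, with a trans worker in between. Metric, PersistentWorker, WorkerChain and Chain are hypothetical names. This sketch also simplifies heavily:

- It leaves out the aggregate and remote workers.
- "Persisting" just appends to a list.
- Forwarding is synchronous, whereas the real workers buffer through queues.

The / 100 and / 10_000 bucket conversions assume yyyyMMddHHmm minute buckets.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical metric value with a minute-level time bucket (yyyyMMddHHmm).
record Metric(String entityId, long timeBucket, long value) {
    Metric withBucket(long bucket) {
        return new Metric(entityId, bucket, value);
    }
}

// Hypothetical persistent worker: "persists" into a list, then forwards.
final class PersistentWorker implements Consumer<Metric> {
    final String level;
    final List<Metric> stored = new ArrayList<>();
    private final Consumer<Metric> next;

    PersistentWorker(String level, Consumer<Metric> next) {
        this.level = level;
        this.next = next;
    }

    @Override
    public void accept(Metric metric) {
        stored.add(metric);
        next.accept(metric);
    }
}

record Chain(PersistentWorker minute, PersistentWorker hour, PersistentWorker day) {}

final class WorkerChain {
    // Mirrors the branching in create(): hour/day workers exist only when enabled.
    static Chain build(boolean supportDownSampling, boolean toHour, boolean toDay) {
        PersistentWorker hour = supportDownSampling && toHour ? new PersistentWorker("hour", m -> { }) : null;
        PersistentWorker day = supportDownSampling && toDay ? new PersistentWorker("day", m -> { }) : null;
        // Trans worker: convert a minute metric to hour/day buckets and pass it on.
        Consumer<Metric> trans = m -> {
            if (hour != null) {
                hour.accept(m.withBucket(m.timeBucket() / 100));
            }
            if (day != null) {
                day.accept(m.withBucket(m.timeBucket() / 10_000));
            }
        };
        PersistentWorker minute = new PersistentWorker("minute", trans);
        return new Chain(minute, hour, day);
    }
}
```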

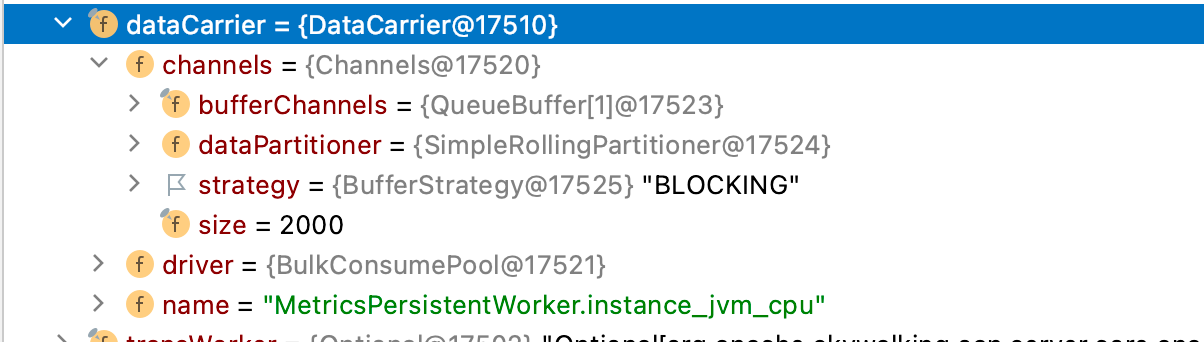

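For each kind of metric computation (for example METRICS_L2_AGGREGATION), MetricsPersistentWorker registers a consumer pool in ConsumerPoolFactory if one does not already exist. For each metric it creates a PersistentConsumer and a DataCarrier<Metrics>, an in-memory queue that wraps the Channels buffer.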

- DataCarrier's consume method attaches a ConsumerPool to consume the DataCarrier's Channels. It calls the begin method of BulkConsumePool, the ConsumerPool implementation, which starts all of the consumers.
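A DataCarrier-style buffer can be approximated with three pieces. The first is a few bounded ArrayBlockingQueue channels. The second is round-robin partitioning on the producer side. The third is a consumer thread that drains all channels and sleeps for a short consume cycle when they are empty. MiniCarrier is a hypothetical class, not SkyWalking's DataCarrier API. It uses one dedicated thread instead of a shared BulkConsumePool.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

// Hypothetical mini data carrier, not SkyWalking's DataCarrier API.
final class MiniCarrier<T> implements AutoCloseable {
    private final List<ArrayBlockingQueue<T>> channels = new ArrayList<>();
    private final AtomicInteger nextChannel = new AtomicInteger();
    private final Consumer<List<T>> consumer;
    private final Thread consumerThread;
    private volatile boolean running = true;

    MiniCarrier(int channelCount, int bufferSize, long consumeCycleMs, Consumer<List<T>> consumer) {
        for (int i = 0; i < channelCount; i++) {
            channels.add(new ArrayBlockingQueue<>(bufferSize));
        }
        this.consumer = consumer;
        this.consumerThread = new Thread(() -> {
            while (running) {
                List<T> batch = drainAll();
                if (!batch.isEmpty()) {
                    consumer.accept(batch);
                } else {
                    try {
                        Thread.sleep(consumeCycleMs); // nothing to do: wait one consume cycle
                    } catch (InterruptedException e) {
                        return;
                    }
                }
            }
        }, "mini-carrier-consumer");
        consumerThread.setDaemon(true);
        consumerThread.start();
    }

    // Round-robin partitioning; returns false if the chosen channel is full.
    boolean produce(T item) {
        int index = Math.floorMod(nextChannel.getAndIncrement(), channels.size());
        return channels.get(index).offer(item);
    }

    private List<T> drainAll() {
        List<T> batch = new ArrayList<>();
        for (ArrayBlockingQueue<T> channel : channels) {
            channel.drainTo(batch);
        }
        return batch;
    }

    // Stop the consumer thread, then hand any leftovers to the consumer on the caller's thread.
    @Override
    public void close() {
        running = false;
        try {
            consumerThread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<T> rest = drainAll();
        if (!rest.isEmpty()) {
            consumer.accept(rest);
        }
    }
}
```

A bounded buffer means produce can fail under back-pressure. The caller then has to decide whether to drop or retry; this sketch simply reports false.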

```java
MetricsPersistentWorker(ModuleDefineHolder moduleDefineHolder, Model model, IMetricsDAO metricsDAO,
                        AbstractWorker<Metrics> nextAlarmWorker, AbstractWorker<ExportEvent> nextExportWorker,
                        MetricsTransWorker transWorker, boolean enableDatabaseSession, boolean supportUpdate,
                        long storageSessionTimeout, int metricsDataTTL) {
    super(moduleDefineHolder, new ReadWriteSafeCache<>(new MergableBufferedData(), new MergableBufferedData()));
    this.model = model;
    this.context = new HashMap<>(100);
    this.enableDatabaseSession = enableDatabaseSession;
    this.metricsDAO = metricsDAO;
    this.nextAlarmWorker = Optional.ofNullable(nextAlarmWorker);
    this.nextExportWorker = Optional.ofNullable(nextExportWorker);
    this.transWorker = Optional.ofNullable(transWorker);
    this.supportUpdate = supportUpdate;
    this.sessionTimeout = storageSessionTimeout;
    this.persistentCounter = 0;
    this.persistentMod = 1;
    this.metricsDataTTL = metricsDataTTL;
    this.skipDefaultValueMetric = true;

    // One consumer pool shared by all L2 aggregation workers
    String name = "METRICS_L2_AGGREGATION";
    int size = BulkConsumePool.Creator.recommendMaxSize() / 8;
    if (size == 0) {
        size = 1;
    }
    BulkConsumePool.Creator creator = new BulkConsumePool.Creator(name, size, 20);
    try {
        ConsumerPoolFactory.INSTANCE.createIfAbsent(name, creator);
    } catch (Exception e) {
        throw new UnexpectedException(e.getMessage(), e);
    }

    // Per-metric in-memory queue, consumed by a PersistentConsumer from the shared pool
    this.dataCarrier = new DataCarrier<>("MetricsPersistentWorker." + model.getName(), name, 1, 2000);
    this.dataCarrier.consume(ConsumerPoolFactory.INSTANCE.get(name), new PersistentConsumer());

    MetricsCreator metricsCreator = moduleDefineHolder.find(TelemetryModule.NAME)
                                                      .provider()
                                                      .getService(MetricsCreator.class);
    aggregationCounter = metricsCreator.createCounter(
        "metrics_aggregation", "The number of rows in aggregation",
        new MetricsTag.Keys("metricName", "level", "dimensionality"),
        new MetricsTag.Values(model.getName(), "2", model.getDownsampling().getName())
    );
    skippedMetricsCounter = metricsCreator.createCounter(
        "metrics_persistence_skipped", "The number of metrics skipped in persistence due to be in default value",
        new MetricsTag.Keys("metricName", "dimensionality"),
        new MetricsTag.Values(model.getName(), model.getDownsampling().getName())
    );
    SESSION_TIMEOUT_OFFSITE_COUNTER++;
}

// DataCarrier
/**
 * set consumeDriver to this Carrier. consumer begin to run when {@link DataCarrier#produce} begin to work.
 *
 * @param consumer single instance of consumer, all consumer threads will all use this instance.
 * @param num      number of consumer threads
 */
public DataCarrier consume(IConsumer<T> consumer, int num, long consumeCycle) {
    if (driver != null) {
        driver.close(channels);
    }
    driver = new ConsumeDriver<T>(this.name, this.channels, consumer, num, consumeCycle);
    driver.begin(channels);
    return this;
}
```

- PersistentConsumer consumes the data in its Channel and stages it in a ReadWriteSafeCache.

- When CoreModule starts, it loads PersistenceTimer. PersistenceTimer starts a thread pool whose task does the following:
  - Gets all PersistentWorkers from MetricsStreamProcessor.getInstance().getPersistentWorkers(), including the MetricsPersistentWorker created above.
  - Calls worker.buildBatchRequests() on each MetricsPersistentWorker to build the batch persistence requests (innerPrepareRequests). buildBatchRequests reads the data staged in the ReadWriteSafeCache.
  - Calls flush(innerPrepareRequests) on batchDAO, an H2BatchDAO object, to persist the data.
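The cache-then-flush step can be sketched as a double buffer. Writers append to the active buffer. The flush task swaps the buffers under a short lock, then drains the full one without blocking writers. DoubleBufferCache and PersistenceTimerSketch are hypothetical names. They are inspired by ReadWriteSafeCache and PersistenceTimer but not copied from them. The batchDao callback stands in for batchDAO.flush(...).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical double-buffered cache: writers never wait for a flush to finish.
final class DoubleBufferCache<T> {
    private final Object writeLock = new Object();
    private final Object readLock = new Object();
    // Both fields are only reassigned while holding writeLock.
    private List<T> active = new ArrayList<>();
    private List<T> standby = new ArrayList<>();

    void write(T item) {
        synchronized (writeLock) {
            active.add(item);
        }
    }

    // Swap buffers under the write lock, then drain the full one outside it.
    List<T> read() {
        synchronized (readLock) {                 // one reader at a time
            List<T> full;
            synchronized (writeLock) {
                full = active;
                active = standby;
                standby = full;
            }
            List<T> snapshot = new ArrayList<>(full);
            full.clear();                          // writers are using the other buffer now
            return snapshot;
        }
    }
}

// Hypothetical periodic flush in the spirit of PersistenceTimer.
final class PersistenceTimerSketch {
    static <T> void flushOnce(DoubleBufferCache<T> cache, Consumer<List<T>> batchDao) {
        List<T> batch = cache.read();
        if (!batch.isEmpty()) {
            batchDao.accept(batch); // stands in for batchDAO.flush(...)
        }
    }

    static <T> ScheduledFuture<?> start(ScheduledExecutorService scheduler, DoubleBufferCache<T> cache,
                                        Consumer<List<T>> batchDao, long periodMs) {
        return scheduler.scheduleAtFixedRate(() -> {
            try {
                flushOnce(cache, batchDao);
            } catch (RuntimeException e) {
                System.err.println("persistence failed: " + e); // keep the schedule alive
            }
        }, periodMs, periodMs, TimeUnit.MILLISECONDS);
    }
}
```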

Data storage model

Take the table that stores the number of Java threads in the RUNNABLE state as an example. Its MySQL DDL is:

```sql
CREATE TABLE `instance_jvm_thread_runnable_state_thread_count` (
  `id` varchar(512) NOT NULL,              /* id = time_bucket + "_" + entity_id */
  `entity_id` varchar(512) DEFAULT NULL,   /* derived from service_id and service_instance_id */
  `service_id` varchar(256) DEFAULT NULL,  /* derived from the service name configured on the agent */
  `summation` bigint DEFAULT NULL,
  `count` bigint DEFAULT NULL,
  `value_` bigint DEFAULT NULL,            /* value_ = summation / count, as an integer */
  `time_bucket` bigint DEFAULT NULL,
  PRIMARY KEY (`id`),
  KEY `INSTANCE_JVM_THREAD_RUNNABLE_STATE_THREAD_COUNT_0_IDX` (`value_`),
  KEY `INSTANCE_JVM_THREAD_RUNNABLE_STATE_THREAD_COUNT_1_IDX` (`time_bucket`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;
```

Frontend data queries

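- When the server starts, once CoreModule and its dependencies are initialized, it calls new UITemplateInitializer(getManager()).initAll() to load the UI templates. The templates live in oap-server/server-starter/src/main/resources/ui-initialized-templates.
- The server also uses SPI to load GraphQLQueryProvider and calls its prepare and start methods to initialize the query module.
- Each GraphQL request from the frontend is resolved to a specific service method, such as MetricsQuery's readMetricsValues. That method eventually calls the DAO for the configured database. For MySQL, this ends in H2MetricsQueryDAO's readMetricsValues, which builds and executes the SQL statement.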
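Each row id is time_bucket + "_" + entity_id, as the DDL above shows. So a minute-step query can be turned into a list of ids by expanding the duration into minute buckets. DurationPoints is a hypothetical helper with three assumptions:

- The UI sends times in the yyyy-MM-dd HHmm format used in the request below.
- Minute buckets are yyyyMMddHHmm longs.
- The entity id is a placeholder, not SkyWalking's actual encoding.

The JDBC DAO presumably looks values up by such ids, but that code is not shown in this document.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: expand a MINUTE-step duration into buckets and row ids.
final class DurationPoints {
    private static final DateTimeFormatter UI_MINUTE = DateTimeFormatter.ofPattern("yyyy-MM-dd HHmm");
    private static final DateTimeFormatter BUCKET_MINUTE = DateTimeFormatter.ofPattern("yyyyMMddHHmm");

    // Inclusive range of minute buckets between start and end.
    static List<Long> minuteBuckets(String start, String end) {
        LocalDateTime t = LocalDateTime.parse(start, UI_MINUTE);
        LocalDateTime last = LocalDateTime.parse(end, UI_MINUTE);
        List<Long> buckets = new ArrayList<>();
        for (; !t.isAfter(last); t = t.plusMinutes(1)) {
            buckets.add(Long.parseLong(t.format(BUCKET_MINUTE)));
        }
        return buckets;
    }

    // Row ids in the "<time_bucket>_<entity_id>" layout of the id column.
    static List<String> rowIds(List<Long> buckets, String entityId) {
        List<String> ids = new ArrayList<>();
        for (long bucket : buckets) {
            ids.add(bucket + "_" + entityId);
        }
        return ids;
    }
}
```

For the duration in the sample request (2022-05-18 2041 to 2022-05-18 2111), this yields 31 buckets. That is one per point in each values array of the response.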

Sample frontend query:

Request:

```json
{
  "query": "query queryData($duration: Duration!,$condition0: MetricsCondition!,$condition1: MetricsCondition!,$condition2: MetricsCondition!,$condition3: MetricsCondition!) {instance_jvm_memory_noheap_max0: readMetricsValues(condition: $condition0, duration: $duration){\n label\n values {\n values {value}\n }\n },instance_jvm_memory_noheap1: readMetricsValues(condition: $condition1, duration: $duration){\n label\n values {\n values {value}\n }\n },instance_jvm_memory_heap2: readMetricsValues(condition: $condition2, duration: $duration){\n label\n values {\n values {value}\n }\n },instance_jvm_memory_heap_max3: readMetricsValues(condition: $condition3, duration: $duration){\n label\n values {\n values {value}\n }\n }}",
  "variables": {
    "duration": {
      "start": "2022-05-18 2041",
      "end": "2022-05-18 2111",
      "step": "MINUTE"
    },
    "condition0": {
      "name": "instance_jvm_memory_noheap_max",
      "entity": {
        "scope": "ServiceInstance",
        "serviceName": "Your_ApplicationName",
        "normal": true,
        "serviceInstanceName": "d7a7de5f385149dfb49b8d23e8b6fbc9@10.4.77.148"
      }
    },
    "condition1": {
      "name": "instance_jvm_memory_noheap",
      "entity": {
        "scope": "ServiceInstance",
        "serviceName": "Your_ApplicationName",
        "normal": true,
        "serviceInstanceName": "d7a7de5f385149dfb49b8d23e8b6fbc9@10.4.77.148"
      }
    },
    "condition2": {
      "name": "instance_jvm_memory_heap",
      "entity": {
        "scope": "ServiceInstance",
        "serviceName": "Your_ApplicationName",
        "normal": true,
        "serviceInstanceName": "d7a7de5f385149dfb49b8d23e8b6fbc9@10.4.77.148"
      }
    },
    "condition3": {
      "name": "instance_jvm_memory_heap_max",
      "entity": {
        "scope": "ServiceInstance",
        "serviceName": "Your_ApplicationName",
        "normal": true,
        "serviceInstanceName": "d7a7de5f385149dfb49b8d23e8b6fbc9@10.4.77.148"
      }
    }
  }
}
```

Response:

```jsonc
{
  "data": {
    "instance_jvm_memory_noheap_max0": {
      "label": null,
      "values": {
        "values": [
          { "value": 0 }
          // ... omitted
        ]
      }
    },
    "instance_jvm_memory_noheap1": {
      "label": null,
      "values": {
        "values": [
          { "value": 0 }
          // ... omitted
        ]
      }
    },
    "instance_jvm_memory_heap2": {
      "label": null,
      "values": {
        "values": [
          { "value": 0 }
          // ... omitted
        ]
      }
    },
    "instance_jvm_memory_heap_max3": {
      "label": null,
      "values": {
        "values": [
          { "value": 0 }
          // ... omitted
        ]
      }
    }
  }
}
```

Summary

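- Both the agent and the server use the SPI mechanism to load their modules, which makes the system highly extensible.

- On the agent, collecting and reporting data are decoupled by an in-memory LinkedBlockingQueue. This keeps blocked network communication from leaving gaps in data collection.

- The agent and the server communicate over gRPC (go2sky reports trace data the same way).

- The server also creates many thread pools and in-memory queues to receive, process, and persist data.

- Server-side processing relies on OAL, a language defined with Antlr4, and frontend requests are handled through GraphQL. Both deserve a deeper look later.

- The SkyWalking source code uses many design patterns, such as the observer pattern (the various listeners) and the singleton pattern (implemented with enums). New patterns can be noted as they come up and summarized later.

- The detailed flow still needs more code reading and debugging; this document will keep being updated.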