Fullgc引发redis超时异常

1、现象

-|2019-11-14 11:50:01.095|ERROR|TID:2254.3964.15737033664569521|DubboServerHandler-192.168.58.20:10880-thread-317|c.s.c.s.p.StoreSkuRpcServiceImpl.selectStoreSkuByOneId:67-查询店铺商品信息出错,skuId=28190648 org.springframework.dao.QueryTimeoutException: Redis command timed out; nested exception is io.lettuce.core.RedisCommandTimeoutException: Command timed out after 6 second(s) at org.springframework.data.redis.connection.lettuce.LettuceExceptionConverter.convert(LettuceExceptionConverter.java:70) at org.springframework.data.redis.connection.lettuce.LettuceExceptionConverter.convert(LettuceExceptionConverter.java:41) at org.springframework.data.redis.PassThroughExceptionTranslationStrategy.translate(PassThroughExceptionTranslationStrategy.java:44) at org.springframework.data.redis.FallbackExceptionTranslationStrategy.translate(FallbackExceptionTranslationStrategy.java:42) at org.springframework.data.redis.connection.lettuce.LettuceConnection.convertLettuceAccessException(LettuceConnection.java:268) at org.springframework.data.redis.connection.lettuce.LettuceStringCommands.convertLettuceAccessException(LettuceStringCommands.java:799) at org.springframework.data.redis.connection.lettuce.LettuceStringCommands.get(LettuceStringCommands.java:68) at org.springframework.data.redis.connection.DefaultedRedisConnection.get(DefaultedRedisConnection.java:253) at sun.reflect.GeneratedMethodAccessor229.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.springframework.data.redis.core.CloseSuppressingInvocationHandler.invoke(CloseSuppressingInvocationHandler.java:61) at com.sun.proxy.$Proxy188.get(Unknown Source) at com.xxx.chaos.server.common.redis.XxxRedisTemplate$11.doInRedis(XxxRedisTemplate.java:288) at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:224) at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:184) at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:171) at com.sexxcoo.chaos.server.common.redis.XxxRedisTemplate.get(XxxRedisTemplate.java:285) at com.xxx.chaos.server.common.redis.XxxRedisTemplate.gets(XxxRedisTemplate.java:308) at com.xxx.chaos.server.common.redis.XxxRedisTemplate.multiGet2Map(XxxRedisTemplate.java:376) at com.xxx.chaos.server.service.impl.StoreSkuServiceImpl.selectStoreSkuBySkuId(StoreSkuServiceImpl.java:47) at com.xxx.chaos.server.service.impl.StoreSkuServiceImpl$$FastClassBySpringCGLIB$$c1612837.invoke(<generated>) at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218) at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:749) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163) at org.springframework.aop.interceptor.ExposeInvocationInterceptor.invoke(ExposeInvocationInterceptor.java:93) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186) at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:688) at com.xxx.chaos.server.service.impl.StoreSkuServiceImpl$$EnhancerBySpringCGLIB$$d569daea.selectStoreSkuBySkuId(<generated>) at com.xxx.chaos.server.provider.StoreSkuRpcServiceImpl.selectStoreSkuByOneId(StoreSkuRpcServiceImpl.java:62) at com.alibaba.dubbo.common.bytecode.Wrapper6.invokeMethod(Wrapper6.java) at com.alibaba.dubbo.rpc.proxy.javassist.JavassistProxyFactory$1.doInvoke(JavassistProxyFactory.java:47) at com.alibaba.dubbo.rpc.proxy.AbstractProxyInvoker.invoke(AbstractProxyInvoker.java:76) at com.alibaba.dubbo.config.invoker.DelegateProviderMetaDataInvoker.invoke(DelegateProviderMetaDataInvoker.java:52) at com.alibaba.dubbo.rpc.protocol.InvokerWrapper.invoke(InvokerWrapper.java:56) at com.alibaba.dubbo.rpc.filter.ExceptionFilter.invoke(ExceptionFilter.java:62) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.csp.sentinel.adapter.dubbo.SentinelDubboProviderFilter.invoke(SentinelDubboProviderFilter.java:66) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.monitor.support.MonitorFilter.invoke$original$b6dOrsVO(MonitorFilter.java:75) at com.alibaba.dubbo.monitor.support.MonitorFilter.invoke$original$b6dOrsVO$accessor$6uvazkPi(MonitorFilter.java) at com.alibaba.dubbo.monitor.support.MonitorFilter$auxiliary$BaEkbyx9.call(Unknown Source) at org.apache.skywalking.apm.agent.core.plugin.interceptor.enhance.InstMethodsInter.intercept(InstMethodsInter.java:93) at com.alibaba.dubbo.monitor.support.MonitorFilter.invoke(MonitorFilter.java) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.filter.TimeoutFilter.invoke(TimeoutFilter.java:42) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.protocol.dubbo.filter.TraceFilter.invoke(TraceFilter.java:78) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.filter.ContextFilter.invoke(ContextFilter.java:72) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.filter.GenericFilter.invoke(GenericFilter.java:131) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.filter.ClassLoaderFilter.invoke(ClassLoaderFilter.java:38) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.filter.EchoFilter.invoke(EchoFilter.java:38) at com.alibaba.dubbo.rpc.protocol.ProtocolFilterWrapper$1.invoke(ProtocolFilterWrapper.java:72) at com.alibaba.dubbo.rpc.protocol.dubbo.DubboProtocol$1.reply(DubboProtocol.java:103) at com.alibaba.dubbo.remoting.exchange.support.header.HeaderExchangeHandler.handleRequest(HeaderExchangeHandler.java:96) at com.alibaba.dubbo.remoting.exchange.support.header.HeaderExchangeHandler.received(HeaderExchangeHandler.java:172) at com.alibaba.dubbo.remoting.transport.DecodeHandler.received(DecodeHandler.java:51) at com.alibaba.dubbo.remoting.transport.dispatcher.ChannelEventRunnable.run(ChannelEventRunnable.java:80) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:748) Caused by: io.lettuce.core.RedisCommandTimeoutException: Command timed out after 6 second(s) at io.lettuce.core.ExceptionFactory.createTimeoutException(ExceptionFactory.java:51) at io.lettuce.core.LettuceFutures.awaitOrCancel(LettuceFutures.java:114) at io.lettuce.core.cluster.ClusterFutureSyncInvocationHandler.handleInvocation(ClusterFutureSyncInvocationHandler.java:123) at io.lettuce.core.internal.AbstractInvocationHandler.invoke(AbstractInvocationHandler.java:80) at com.sun.proxy.$Proxy187.get(Unknown Source) at org.springframework.data.redis.connection.lettuce.LettuceStringCommands.get(LettuceStringCommands.java:66) ... 58 common frames omitted

2、通过 falcon、及grafana监控,发现应用与redis系统资源压力正常

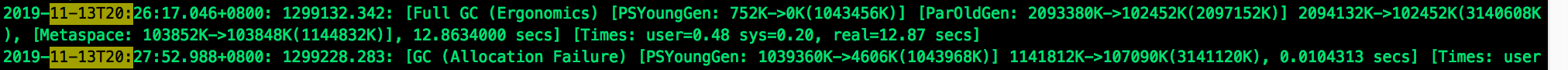

3、由于间歇出现,然后查看GC 情况

应用FullGC了且时间持续12.8s,时间点也刚好与报警异常时间对上,基本确认 FullGC原因引起

4、由于多环境混合部署(阿里云docker+IDC),然后查看了一下 docker 节点应用情况

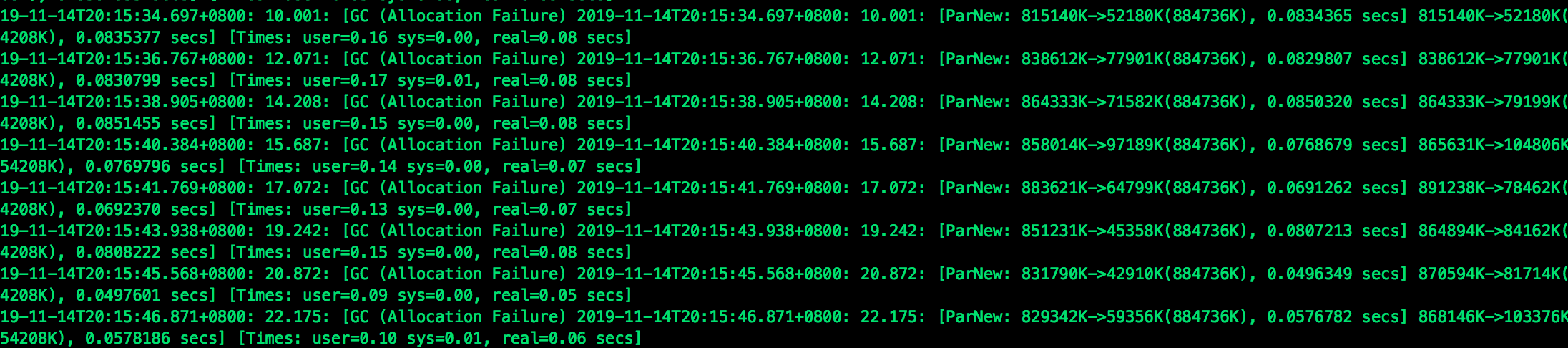

查看发现 IDC 与 docker 节点的 GC 差异较大,docker节点对应gc时间都在>100ms,而 idc基本都< 100ms,

(1)请教了秋伟大神,sudo /usr/local/jdk1.8.0_131/bin/jstack pid | grep 'Paralle'

idc 结果为2,而 docker 里为96个,恰巧 docker 宿主机的cpu 配置未96,难道应用拿到的是宿主机的 cpu 核数?

(2)然后验证了一下 dubbo io线程sudo /usr/local/jdk1.8.0_131/bin/jstack 7004 | grep 'New I/O server worker',IDC 为3,docker 里为37,基本确认应该是这个引起的差异;

(3)于是配置了-XX:ParallelGCThreads=2 -XX:ConcGCThreads=2 上线后,容器的 gc线程数据与 idc 一致,且时间也缩短了。到此基本确定差异原因就是docker 节点获取的是宿主机资源导致,

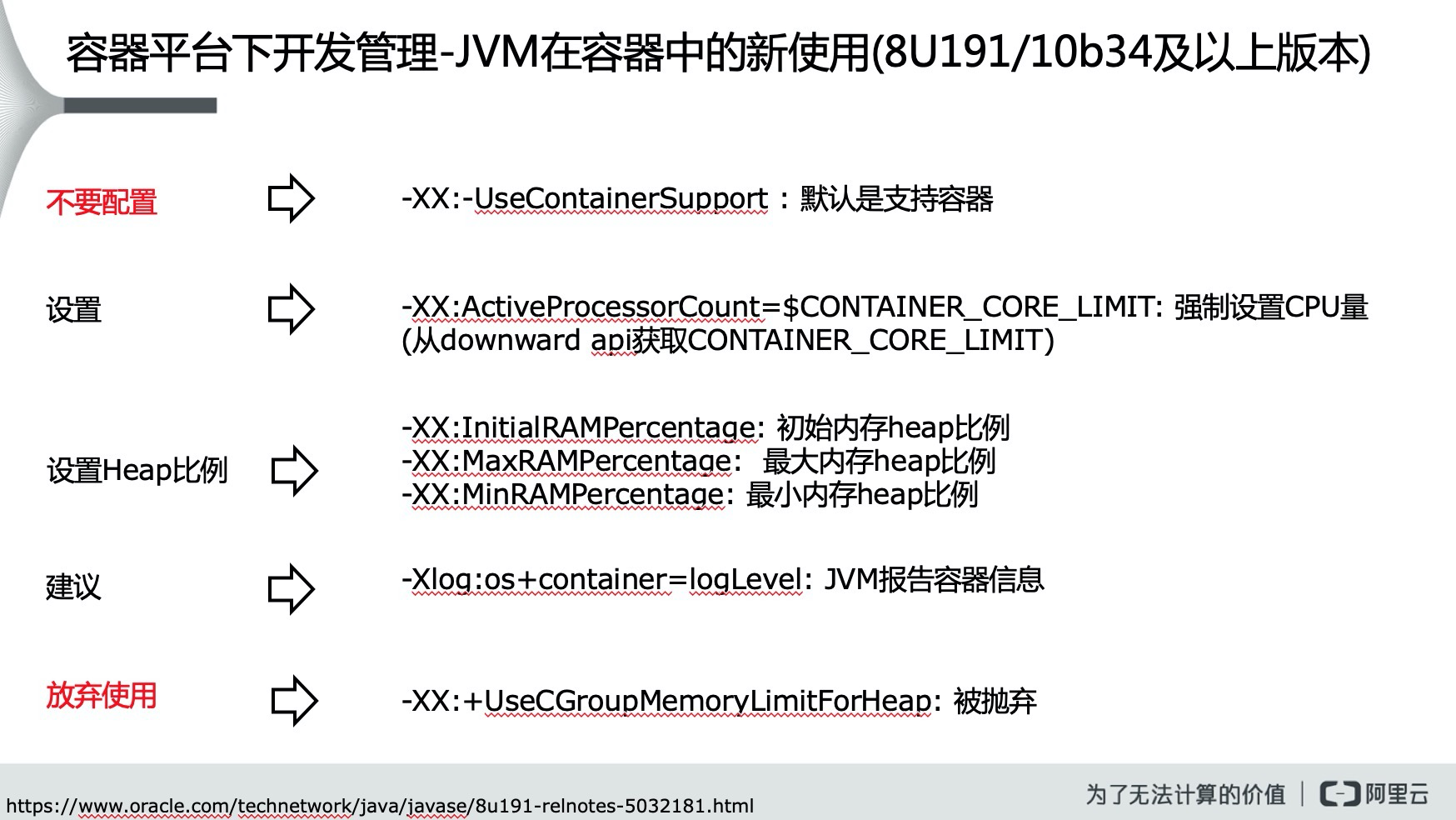

(4)请教运维小伙伴后给出终极方案:但此配置需要 jdk版本>1.8.0_191,之前版本只能1.容器资源隔离2.使用-XX:ParallelGCThreads=2 -XX:ConcGCThreads=2,内存可以由-Xms, -Xmx限制,但这种方法不能避免很多Java库根据availableProcessors()来做相应逻辑处理

5)其实这个问题一早las遇到过(项目基于 openresty 里面有 nginx 配置文件),其中worker_processes auto导致 openresty nginx woker 起了96个,这在4C8G 的 docker 节点上运行性能很差,后来运维部署用模板替换掉了 auto 配置项

5、总结

https://www.robberphex.com/java-and-docker-memory-and-cpu-limits/

浙公网安备 33010602011771号

浙公网安备 33010602011771号