R: Regression Analysis and Linear Statistical Models (《回归分析与线性统计模型》), page 140, exercise 5.1

rm(list = ls())

library(car)

library(MASS)

library(openxlsx)

A = read.xlsx("data140.xlsx")

head(A)

attach(A)

fm = lm(y ~ x1 + x2 + x3, data = A)  # fit the linear model

vif(fm)  # check the model for multicollinearity

> vif(fm) # check the model for multicollinearity

       x1        x2        x3
21.631451 21.894402  1.334751

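The VIFs of x1 and x2 are both above 20, well past the common rule-of-thumb threshold of 10, so the model shows multicollinearity.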
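For reference, the variance inflation factor of predictor x_j is VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing x_j on the remaining predictors. The sketch below recomputes it in base R on simulated data. The data frame `D` and the helper vector `vif_manual` are illustrative names, not part of the exercise data. For a model with only numeric main effects this should agree with what `vif()` reports.

```r
# Simulated predictors: x2 is built to be nearly collinear with x1
# (an assumption made purely for illustration)
set.seed(1)
n  <- 25
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.2)
x3 <- rnorm(n)
D  <- data.frame(x1, x2, x3)

# VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing x_j on the other predictors
vif_manual <- sapply(names(D), function(v) {
  others <- setdiff(names(D), v)
  r2 <- summary(lm(reformulate(others, response = v), data = D))$r.squared
  1 / (1 - r2)
})
vif_manual
```

The same numbers are the diagonal of the inverse correlation matrix, `diag(solve(cor(D)))`, which is a quick way to check the calculation.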

summary(fm)

Output:

> summary(fm)

Call:

lm(formula = y ~ x1 + x2 + x3, data = A)

Residuals:

     Min       1Q   Median       3Q      Max
-2.89129 -0.78230  0.00544  0.93147  2.45478

Coefficients:

            Estimate Std. Error t value Pr(>|t|)
(Intercept)   3.2242     3.4598   0.932 0.361983
x1            0.9626     0.2422   3.974 0.000692 ***
x2           -2.6290     3.9000  -0.674 0.507606
x3           -0.1560     3.8838  -0.040 0.968338

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 1.446 on 21 degrees of freedom

Multiple R-squared: 0.9186, Adjusted R-squared: 0.907

F-statistic: 78.99 on 3 and 21 DF, p-value: 1.328e-11

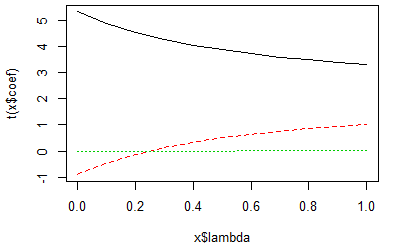

esti_ling = lm.ridge(y ~ x1 + x2 + x3, data = A, lambda = seq(0, 1, 0.1))  # ridge regression

plot(esti_ling)  # ridge trace

Based on the ridge trace, choose k = 0.6.
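Reading k off the ridge trace is a judgment call. As a cross-check, `MASS::select()` reports the HKB, L-W, and GCV suggestions for a `lm.ridge` fit. Below is a minimal sketch on simulated data. The data frame `D` and its true coefficients are invented for illustration. With the exercise data one would presumably call `select(esti_ling)` directly. Note that `lm.ridge` standardizes the predictors internally, so its lambda is not on the same scale as a k added to the raw X'X.

```r
library(MASS)

# Simulated data with two nearly collinear predictors (illustrative only)
set.seed(1)
n  <- 25
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.2)
x3 <- rnorm(n)
y  <- 3 + x1 - 2 * x2 + 0.5 * x3 + rnorm(n)
D  <- data.frame(y, x1, x2, x3)

lams <- seq(0, 1, 0.1)
fit  <- lm.ridge(y ~ x1 + x2 + x3, data = D, lambda = lams)

MASS::select(fit)                   # prints the HKB, L-W and GCV suggestions
k_gcv <- lams[which.min(fit$GCV)]   # grid value with the smallest GCV
k_gcv
```

If the GCV minimum sits at the edge of the grid, widening `lambda` is usually worth trying before settling on a value.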

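k = 0.6

X = cbind(1, as.matrix(A[, 2:4]))  # design matrix: intercept column plus x1, x2, x3

y = A[, 5]  # response

B_ = solve(t(X) %*% X + k * diag(4)) %*% t(X) %*% y  # ridge estimate (X'X + kI)^(-1) X'y; k is also added to the intercept entry

B_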

Regression coefficients:

> B_

         [,1]
    1.6188146
x1  0.8262986
x2 -0.3076330
x3  1.0780444
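The manual calculation above adds k to every diagonal entry of X'X, so the intercept is shrunk along with the slopes, and the predictors stay on their raw scales. A common variant centers and scales the predictors, penalizes only the slopes, and recovers the intercept afterwards. Below is a minimal sketch of that variant on simulated data. The function `ridge_std` and the data are illustrative assumptions, not the textbook's procedure, and a given k here is not numerically comparable to the k = 0.6 used on the raw X'X.

```r
# Ridge on standardized predictors; the intercept is not penalized.
# ridge_std is a hypothetical helper written for this note.
ridge_std <- function(X, y, k) {
  X  <- as.matrix(X)
  xm <- colMeans(X)
  xs <- apply(X, 2, sd)                      # column standard deviations
  Z  <- sweep(sweep(X, 2, xm), 2, xs, "/")   # centered and scaled predictors
  yc <- y - mean(y)
  b_std <- solve(crossprod(Z) + k * diag(ncol(Z)), crossprod(Z, yc))
  b <- drop(b_std) / xs                      # slopes back on the original scale
  c("(Intercept)" = mean(y) - sum(b * xm), b)
}

# Simulated data (illustrative only)
set.seed(1)
n  <- 25
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.2)
x3 <- rnorm(n)
y  <- 3 + x1 - 2 * x2 + 0.5 * x3 + rnorm(n)
X  <- cbind(x1, x2, x3)

ridge_std(X, y, k = 0)     # k = 0 reproduces ordinary least squares
ridge_std(X, y, k = 0.6)   # k > 0 shrinks the standardized slope vector
```

Comparing the k = 0 result with `coef(lm(y ~ X))` is a simple sanity check that the back-transformation is right.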