Ingress Resources

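Kubernetes provides two built-in load-balancing mechanisms for exposing applications to the public. One is the Service resource, which works at the transport layer and implements a "TCP load balancer"; the other is the Ingress resource, which implements an "HTTP(S) load balancer".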

(1) TCP load balancer

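Whether it runs in iptables or ipvs mode, a Service is configured on top of Netfilter in the Linux kernel and schedules traffic at layer 4. This makes it a general-purpose scheduler that can balance application-layer services such as HTTP or MySQL. However, precisely because it works at the transport layer, it cannot perform operations such as offloading (terminating) the SSL sessions of HTTPS, and it cannot route requests based on URLs. In addition, Kubernetes does not let you configure any kind of health check for this type of load balancer itself (which endpoints receive traffic depends only on Pod readiness).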

(2) HTTP(S) load balancer

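An HTTP(S) load balancer is an application-layer load-balancing mechanism that can make better scheduling decisions based on the request context. Compared with a transport-layer scheduler, it offers features such as customizable URL mapping and TLS termination (offloading), and it supports several kinds of backend health checks.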

I. Introduction to Ingress and Ingress Controller

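In Kubernetes, the IP addresses of Services and Pods can only be used for communication inside the cluster network; this traffic cannot cross the edge router to connect the inside of the cluster with the outside. Although a Service of type NodePort or LoadBalancer can bring external traffic in through the nodes, that is still layer-4 forwarding, and the only load balancing available is a transport-layer mechanism.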

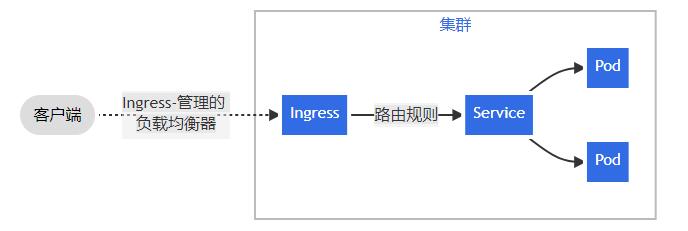

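Ingress is one of the standard resource types of the Kubernetes API. It is essentially a set of rules that forward requests to specified Service resources based on DNS names (hosts) or URL paths, and it is used to bring traffic from outside the cluster to services inside the cluster. However, an Ingress resource cannot carry traffic by itself: it is only a collection of routing rules. For these rules to take effect, another component must listen on a socket and route request traffic according to how the rules match. The component that listens on sockets and forwards traffic on behalf of Ingress resources is called an Ingress controller.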

1. Ingress

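Ingress exposes HTTP and HTTPS routes from outside the cluster to Services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.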

An Ingress can give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS, and offer name-based virtual hosting.

An Ingress does not expose arbitrary ports or protocols. Services other than HTTP and HTTPS are usually exposed to the Internet with a Service of type Service.Type=NodePort or Service.Type=LoadBalancer.

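In short, an Ingress defines rules that specify to which Service in the cluster requests for a given domain name are forwarded. Ingresses are defined in YAML files, and one or more Ingress rules can be defined for one or more Services.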
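To make "a rule maps a host name to a Service" concrete, here is a toy shell model of the lookup a controller performs for host-based routing. It is only a sketch: the host names, the Service names and the `default-http-backend:80` fallback are hypothetical, and matching is exact (wildcards and paths are ignored).

```shell
#!/usr/bin/env bash
# Toy model of host-based Ingress routing (hypothetical hosts and Services).
# route_host HOST -> prints the "service:port" that would receive the request.
route_host() {
  # The Host header may carry a ":port" suffix; Ingress hosts never include one.
  local host="${1%%:*}"
  case "$host" in
    tomcat.lucky.com) echo "tomcat:8080" ;;
    web.example.com)  echo "web:80" ;;
    *)                echo "default-http-backend:80" ;;  # no rule matched
  esac
}

route_host tomcat.lucky.com         # tomcat:8080
route_host tomcat.lucky.com:31864   # tomcat:8080
route_host unknown.example.com      # default-http-backend:80
```

Requests that match no rule fall through to a default backend, which mirrors the `defaultBackend` behaviour described in the `kubectl explain` output later in this article.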

2. Ingress Controller

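An Ingress Controller is a layer-7 load balancer: client requests first reach it, and it then reverse-proxies them to the backend Pods. It can be implemented by any program with reverse-proxy capabilities, such as Nginx, Envoy, HAProxy, Vulcand and Traefik. The Ingress controller itself also runs in the cluster as Pods, on the same network as the application Pods it proxies.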

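As its name says, an Ingress Controller is a controller: it continuously talks to the Kubernetes API to track changes to backend Services and Pods (additions, deletions and so on) in real time, combines them with the rules defined in Ingress resources to generate configuration, and then dynamically updates the underlying load balancer (for example Nginx or Traefik) and reloads it so that the new configuration takes effect. This is how it achieves automatic service discovery.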
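The "observe endpoints, render configuration, reload" cycle can be illustrated with a toy shell function that turns a list of endpoint IPs into an nginx `upstream` block. This is a sketch of the idea only, not how any particular controller is written: the upstream name and Pod IPs are made up, and real controllers read endpoints from the API server and may update them without a full reload.

```shell
#!/usr/bin/env bash
# render_upstream NAME PORT IP... -> prints an nginx upstream block with one
# "server" line per endpoint IP (hypothetical names and IPs, illustration only).
render_upstream() {
  local name="$1" port="$2" ip
  shift 2
  printf 'upstream %s {\n' "$name"
  for ip in "$@"; do
    printf '    server %s:%s;\n' "$ip" "$port"
  done
  printf '}\n'
}

# Endpoints a controller might observe for a Service "tomcat" on port 8080.
render_upstream default-tomcat-8080 8080 10.244.36.10 10.244.169.20
```

When a Pod is added or removed, calling the function again with the new IP list yields the new block; a controller would then write it out and have nginx pick it up.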

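Note: unlike controllers such as the Deployment controller, an Ingress controller does not run as part of kube-controller-manager. Although it is an important component of a Kubernetes cluster, like CoreDNS it has to be deployed on the cluster separately.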

Here is a simple Ingress example that sends all traffic to a single Service:
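A minimal sketch of such an Ingress, assuming a Service named `test` listening on port 80 (both hypothetical) and the `nginx` IngressClass that is created later in this article. Because it has no `rules`, every request is handled by `spec.defaultBackend`. The manifest is wrapped in a small shell function so the names can be substituted:

```shell
#!/usr/bin/env bash
# render_default_ingress NAME SERVICE PORT -> prints an Ingress whose only
# routing element is spec.defaultBackend (no rules), so all traffic goes there.
render_default_ingress() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: $2
      port:
        number: $3
EOF
}

render_default_ingress test-ingress test 80
```

To create it in a cluster, pipe the output to kubectl: `render_default_ingress test-ingress test 80 | kubectl apply -f -`.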

Many Ingress controllers are available, such as Traefik, nginx-controller, the Kubernetes Ingress Controller for Kong and the HAProxy Ingress controller, and you can also implement your own. The most widely used today are Traefik and nginx-controller. Traefik performs somewhat worse than nginx-controller, but it is much simpler to configure and use.

II. Deploying an Ingress Controller

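An Ingress Controller is really just a set of Pods hosted on Kubernetes that publish services at the application layer; it watches Ingress resources and generates configuration rules in real time. Since the Ingress Controller itself also runs as Pods, how does it receive request traffic from outside the cluster? There are two common approaches: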

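1) Manage the Ingress Controller Pods with a Deployment and bring in external traffic through a Service of type NodePort or LoadBalancer. This means that whenever you define an Ingress Controller, you must also define a dedicated Service in front of it.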

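(1) type NodePort: the ingress controller is exposed on a specific port of every node's IP. Because NodePort ports are by default picked at random from the range 30000-32767, a separate load balancer is usually placed in front to forward requests. This approach is generally used where the hosts are relatively fixed and their IP addresses do not change. Exposing the ingress controller through NodePort is simple and convenient, but NodePort adds an extra NAT hop, which may affect performance under very high request volumes.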

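(2) type LoadBalancer: when the ingress controller is deployed on a public cloud, create a Service of type LoadBalancer that selects this group of Pods. Most public clouds automatically provision a load balancer for a LoadBalancer Service, usually bound to a public IP address. Pointing the domain name at that address is enough to expose the cluster's services externally.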

2) Use a DaemonSet to run a single instance of the Ingress Controller Pod on each of all (or some) worker nodes, and configure these Pods to receive external traffic on their node through hostPort or hostNetwork.

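hostNetwork attaches the Pod directly to the node's network, so the service is reachable directly on the host's ports 80/443. The node running the ingress controller then acts much like an edge node in a traditional architecture, such as the nginx server at a data center's entrance. This approach has the simplest request path and performs better than NodePort mode. The drawback is that, because it uses the node's network and ports directly, each node can run only one ingress controller Pod (a second one would conflict on the same ports). It is well suited to high-concurrency production environments.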

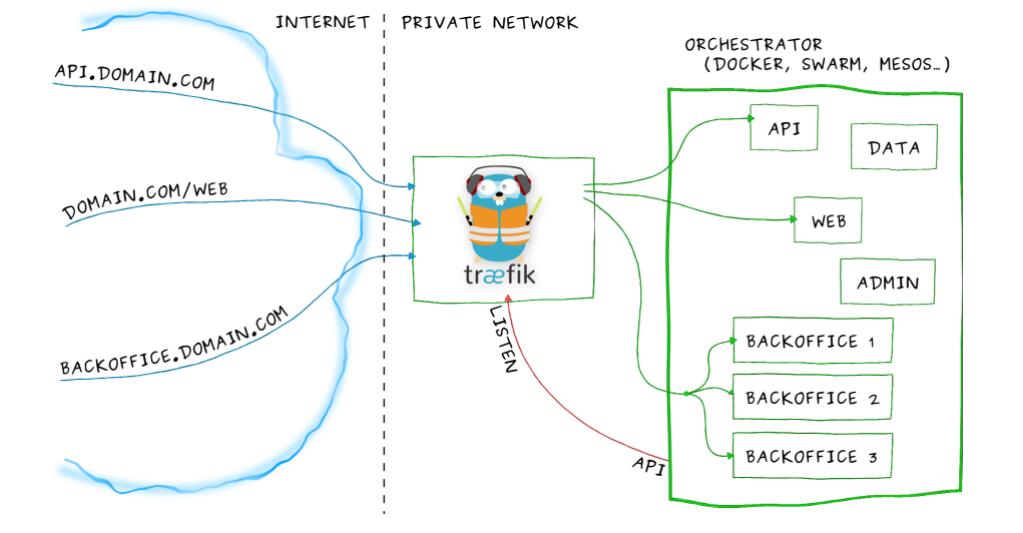
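A skeleton of the DaemonSet + hostNetwork pattern, rendered by a shell function. It shows only the fields that matter for this layout; a real controller also needs its ServiceAccount, RBAC, arguments, probes and so on (compare the full ingress-nginx Deployment later in this article). The `ingress-node: "true"` node label and the image name in the example call are hypothetical.

```shell
#!/usr/bin/env bash
# render_ingress_daemonset NAME NAMESPACE IMAGE -> prints a skeleton DaemonSet
# that runs one controller Pod per selected node on the host network.
render_ingress_daemonset() {
  cat <<EOF
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: $1
  namespace: $2
spec:
  selector:
    matchLabels:
      app: $1
  template:
    metadata:
      labels:
        app: $1
    spec:
      hostNetwork: true                   # listen directly on the node's 80/443
      dnsPolicy: ClusterFirstWithHostNet  # keep cluster DNS while on the host network
      nodeSelector:
        ingress-node: "true"              # hypothetical label marking edge nodes
      containers:
      - name: controller
        image: $3
        ports:
        - containerPort: 80
        - containerPort: 443
EOF
}

render_ingress_daemonset my-ingress-controller ingress-nginx example.com/ingress-controller:latest
```

A DaemonSet rather than a Deployment is the natural fit here, since hostNetwork already limits each node to one controller Pod.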

1. Traefik

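Traefik is an open-source reverse proxy and load balancer. Its greatest strength is that it integrates directly with common microservice systems and can configure itself automatically and dynamically. Supported backends currently include Docker, Swarm, Mesos/Marathon, Mesos, Kubernetes, Consul, Etcd, Zookeeper, BoltDB, a REST API and more.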

Traefik resources:

https://github.com/containous/traefik

https://traefik.cn/

https://docs.traefik.io/user-guides/crd-acme/

To use Traefik, deploy the Traefik Pod:

```
[root@k8s-master1 ~]# mkdir traefik
[root@k8s-master1 ~]# cd traefik/
[root@k8s-master1 traefik]# vim traefik-deploy.yaml
[root@k8s-master1 traefik]# cat traefik-deploy.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: traefik-ingress
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: traefik-ingress
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: traefik-ingress
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: traefik-ingress-controller
  namespace: traefik-ingress
  labels:
    k8s-app: traefik-ingress-lb
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: traefik-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-lb
        name: traefik-ingress-lb
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - image: traefik:1.7.17
        # Note: Traefik 2.0 has been released and the default "traefik" image now points to 2.x.
        # 2.x differs greatly from 1.x and its configuration is incompatible, so 1.7.17 is pinned here.
        imagePullPolicy: IfNotPresent
        name: traefik-ingress-lb
        ports:
        - name: http
          containerPort: 80
        - name: admin
          containerPort: 8080
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
---
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: traefik-ingress
spec:
  selector:
    k8s-app: traefik-ingress-lb
  ports:
    - protocol: TCP
      port: 80
      name: web
    - protocol: TCP
      port: 8080
      name: admin
  type: NodePort
[root@k8s-master1 traefik]# kubectl apply -f traefik-deploy.yaml
namespace/traefik-ingress created
serviceaccount/traefik-ingress-controller created
clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created
clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created
deployment.apps/traefik-ingress-controller created
service/traefik-ingress-service created
[root@k8s-master1 traefik]# kubectl get pods -n traefik-ingress
NAME                                          READY   STATUS    RESTARTS   AGE
traefik-ingress-controller-848d4b57bd-gqhwv   1/1     Running   0          15s
[root@k8s-master1 traefik]# kubectl get svc -n traefik-ingress
NAME                      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                       AGE
traefik-ingress-service   NodePort   10.105.67.140   <none>        80:32419/TCP,8080:31535/TCP   29s
```

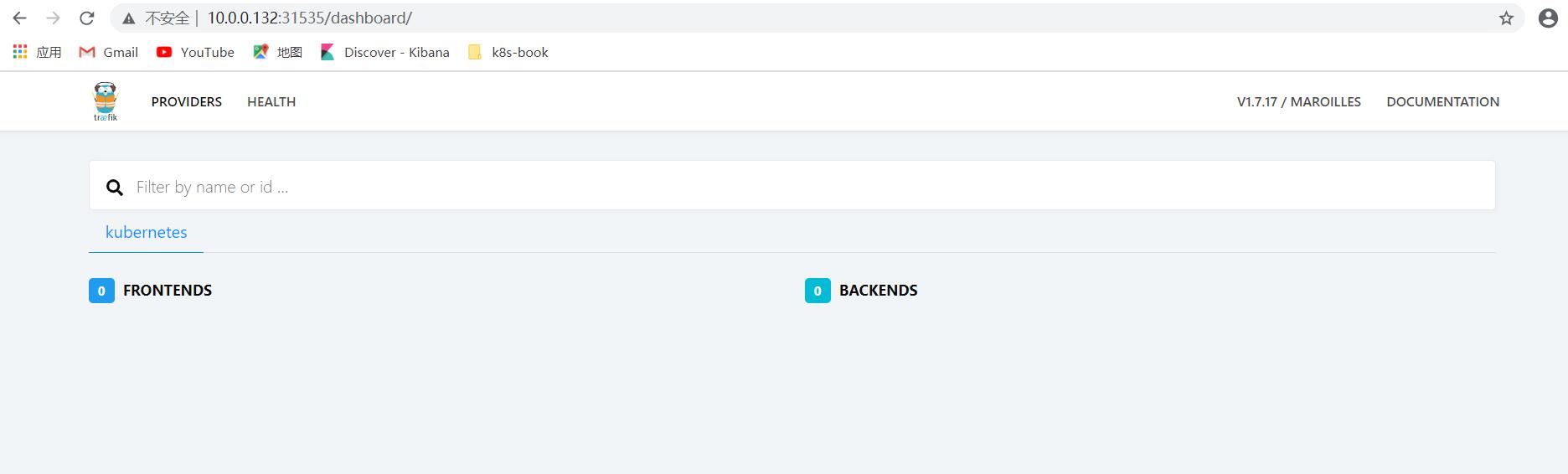

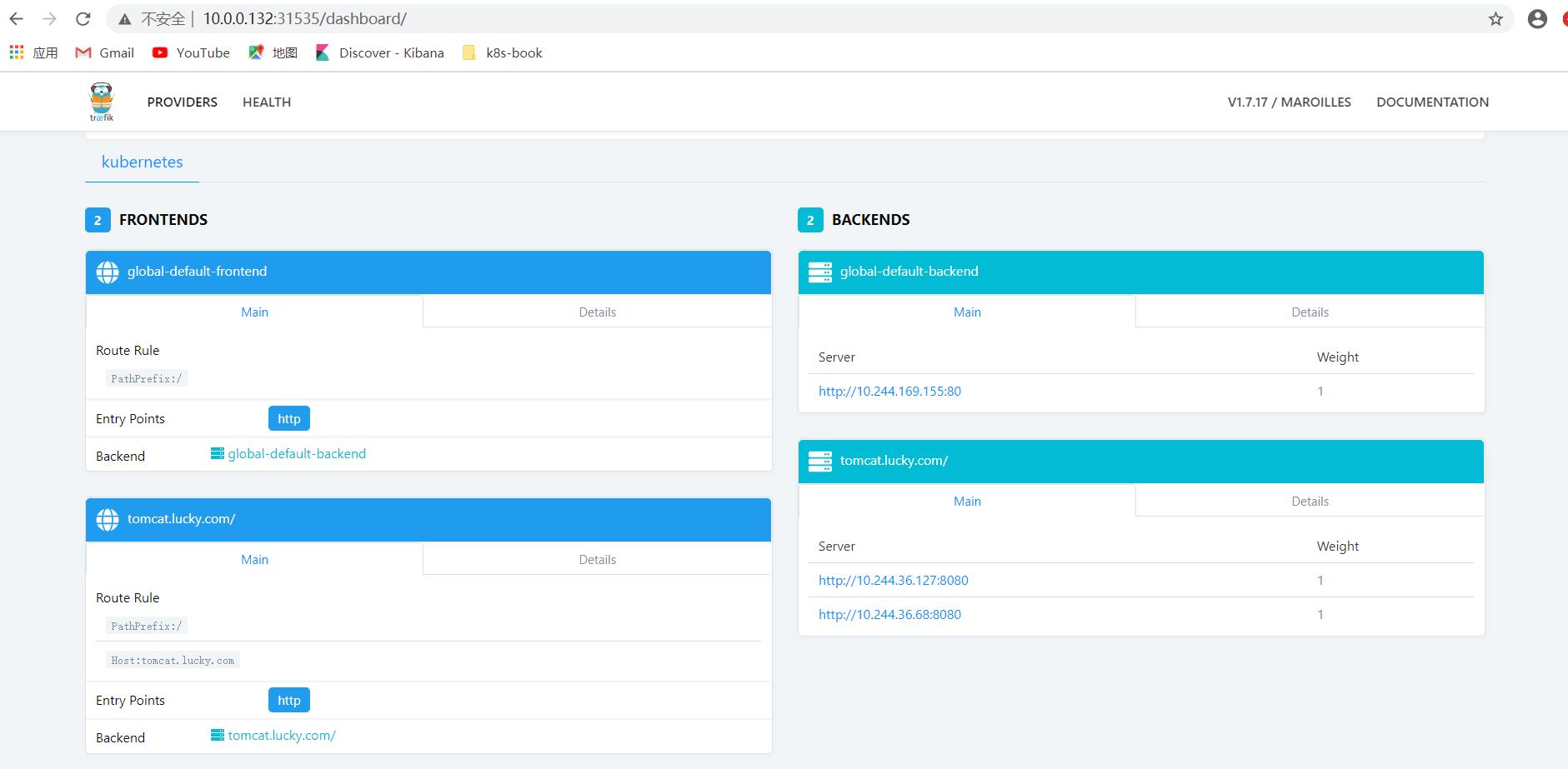

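Traefik also provides a web UI, which is the service on port 8080 above. To make it accessible, the Service is set to type NodePort here; in the output above, port 8080 is mapped to NodePort 31535.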

2. The ingress-nginx controller

ingress-nginx dynamically generates nginx configuration, dynamically updates upstreams, and reloads nginx to apply the new configuration when needed.

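Deploy the ingress-controller Pod and its related resources. According to the official documentation, deployment only requires applying a single YAML file: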

https://github.com/kubernetes/ingress-nginx/blob/main/deploy/static/provider/baremetal/deploy.yaml

This single YAML file creates many resources, including the Namespace, ConfigMap, Role, ServiceAccount and everything else the ingress controller needs. It is too long to reproduce here.

Pull the required images (here from the Aliyun mirror registry.cn-hangzhou.aliyuncs.com):

```
[root@k8s-node1 ~]# docker images | grep registry.cn-hangzhou.aliyuncs.com
registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller   v1.1.0   ae1a7201ec95   9 months ago    285MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen       v1.1.1   c41e9fcadf5a   11 months ago   47.7MB
```

The key part is the Deployment; modify its configuration as follows:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true   # the controller uses the host's ports 80/443, so hostNetwork mode is required
      affinity:
        podAntiAffinity:  # prefer placing the two replicas on different nodes
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchLabels:
                  app.kubernetes.io/name: ingress-nginx
              topologyKey: kubernetes.io/hostname
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0   # replaced with the Aliyun mirror image
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
```

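Here the ingress controller is deployed as a Deployment and exposed through a Service of type NodePort, so it is reachable on a specific port of every node's IP; as discussed above, a load balancer is usually placed in front because the NodePort ports are assigned randomly. Since the Pods also use hostNetwork, they additionally listen directly on ports 80/443 of the nodes they run on.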

```yaml
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ipFamilyPolicy: SingleStack
  ipFamilies:
    - IPv4
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
```

Create the ingress-nginx controller resources:

```
[root@k8s-master1 ~]# mkdir nginx-ingress
[root@k8s-master1 ~]# cd nginx-ingress/
[root@k8s-master1 nginx-ingress]# kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
service/ingress-nginx-controller-admission created
service/ingress-nginx-controller created
deployment.apps/ingress-nginx-controller created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
serviceaccount/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
```

Check the resulting resources:

```
[root@k8s-master1 nginx-ingress]# kubectl get pods -n ingress-nginx
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-ghrc5        0/1     Completed   0          2m35s
ingress-nginx-admission-patch-qfmsp         0/1     Completed   0          2m35s
ingress-nginx-controller-6c8ffbbfcf-6stl4   1/1     Running     0          2m36s
ingress-nginx-controller-6c8ffbbfcf-xlwd2   1/1     Running     0          2m36s
[root@k8s-master1 nginx-ingress]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.101.236.38   <none>        80:31864/TCP,443:30520/TCP   2m42s
ingress-nginx-controller-admission   ClusterIP   10.101.54.145   <none>        443/TCP                      2m43s
[root@k8s-master1 nginx-ingress]# kubectl get ingressclass -n ingress-nginx
NAME    CONTROLLER             PARAMETERS   AGE
nginx   k8s.io/ingress-nginx   <none>       4m48s
```

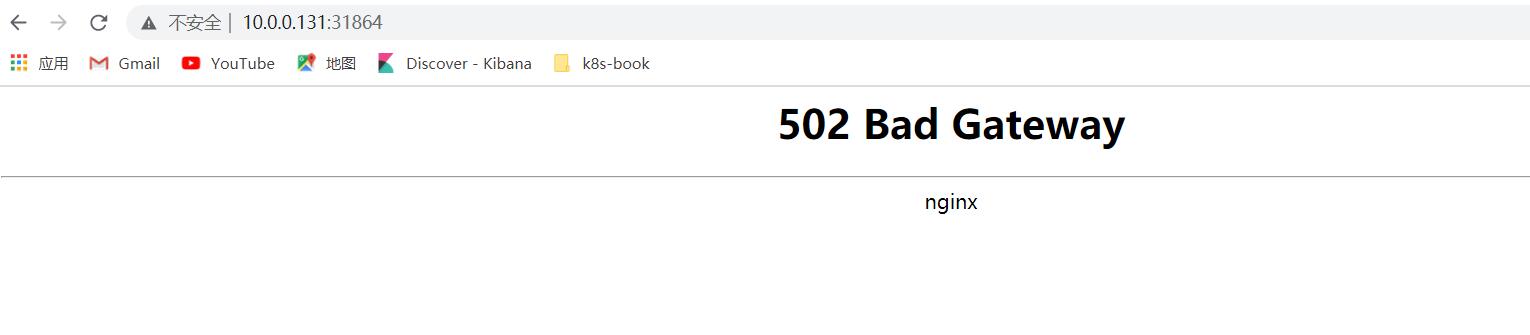

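Once the Service ingress-nginx-controller looks healthy, you can test access from outside the cluster at http://<NodeIP>:31864 or https://<NodeIP>:30520. Receiving a response confirms that the ingress-nginx controller is deployed. Any response generated by the controller's nginx counts, even an error page such as the 502 Bad Gateway below: it shows that the request reached the controller. The HTTPS test uses `curl -k` because the controller presents its self-signed "Kubernetes Ingress Controller Fake Certificate".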

```
[root@k8s-master1 nginx-ingress]# curl -kv http://10.0.0.131:31864
* About to connect() to 10.0.0.131 port 31864 (#0)
*   Trying 10.0.0.131...
* Connected to 10.0.0.131 (10.0.0.131) port 31864 (#0)
> GET / HTTP/1.1
> User-Agent: curl/7.29.0
> Host: 10.0.0.131:31864
> Accept: */*
>
< HTTP/1.1 502 Bad Gateway
< Date: Sat, 17 Sep 2022 13:30:09 GMT
< Content-Type: text/html
< Content-Length: 150
< Connection: keep-alive
<
<html>
<head><title>502 Bad Gateway</title></head>
<body>
<center><h1>502 Bad Gateway</h1></center>
<hr><center>nginx</center>
</body>
</html>
* Connection #0 to host 10.0.0.131 left intact
[root@k8s-master1 nginx-ingress]# curl -kv https://10.0.0.131:30520
* About to connect() to 10.0.0.131 port 30520 (#0)
*   Trying 10.0.0.131...
* Connected to 10.0.0.131 (10.0.0.131) port 30520 (#0)
* Initializing NSS with certpath: sql:/etc/pki/nssdb
* skipping SSL peer certificate verification
* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
* Server certificate:
*   subject: CN=Kubernetes Ingress Controller Fake Certificate,O=Acme Co
*   start date: Sep 17 08:43:21 2022 GMT
*   expire date: Sep 17 08:43:21 2023 GMT
*   common name: Kubernetes Ingress Controller Fake Certificate
*   issuer: CN=Kubernetes Ingress Controller Fake Certificate,O=Acme Co
> GET / HTTP/1.1
> User-Agent: curl/7.29.0
> Host: 10.0.0.131:30520
> Accept: */*
>
< HTTP/1.1 502 Bad Gateway
< Date: Sat, 17 Sep 2022 13:30:25 GMT
< Content-Type: text/html
< Content-Length: 150
< Connection: keep-alive
< Strict-Transport-Security: max-age=15724800; includeSubDomains
<
<html>
<head><title>502 Bad Gateway</title></head>
<body>
<center><h1>502 Bad Gateway</h1></center>
<hr><center>nginx</center>
</body>
</html>
* Connection #0 to host 10.0.0.131 left intact
```
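The test URLs come from the PORT(S) column of `kubectl get svc`. As a small convenience, the sketch below extracts the nodePort for a given Service port from that column; the node IP is the one used in the test above, and any node IP works for a NodePort Service.

```shell
#!/usr/bin/env bash
# nodeport_for PORT PORTS: PORTS is the PORT(S) column of `kubectl get svc`,
# e.g. "80:31864/TCP,443:30520/TCP"; prints the nodePort mapped to PORT.
nodeport_for() {
  printf '%s\n' "$2" | tr ',' '\n' | awk -F'[:/]' -v p="$1" '$1 == p { print $2 }'
}

ports="80:31864/TCP,443:30520/TCP"   # copied from the kubectl output above
node_ip=10.0.0.131
echo "http://${node_ip}:$(nodeport_for 80 "$ports")"    # http://10.0.0.131:31864
echo "https://${node_ip}:$(nodeport_for 443 "$ports")"  # https://10.0.0.131:30520
```

Against a live cluster, the same value should also be obtainable directly with `kubectl get svc ingress-nginx-controller -n ingress-nginx -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'`.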


III. Creating Ingress Resources

An Ingress resource is a set of forwarding rules based on HTTP virtual hosts (host names) or URL paths.

1. Fields of the Ingress manifest

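In the manifest, an Ingress is defined by fields nested under spec, chiefly rules, defaultBackend (called backend in the older v1beta1 API), ingressClassName and tls.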

List the fields of Ingress:

```
[root@k8s-master1 nginx-ingress]# kubectl explain ingress
KIND:     Ingress
VERSION:  networking.k8s.io/v1

DESCRIPTION:
     Ingress is a collection of rules that allow inbound connections to reach
     the endpoints defined by a backend. An Ingress can be configured to give
     services externally-reachable urls, load balance traffic, terminate SSL,
     offer name based virtual hosting etc.

FIELDS:
   apiVersion   <string>
     APIVersion defines the versioned schema of this representation of an
     object. Servers should convert recognized schemas to the latest internal
     value, and may reject unrecognized values. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

   kind   <string>
     Kind is a string value representing the REST resource this object
     represents. Servers may infer this from the endpoint the client submits
     requests to. Cannot be updated. In CamelCase. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

   metadata   <Object>
     Standard object's metadata. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

   spec   <Object>
     Spec is the desired state of the Ingress. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

   status   <Object>
     Status is the current state of the Ingress. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status
```

View the description of the Ingress spec field:

```
[root@k8s-master1 nginx-ingress]# kubectl explain ingress.spec
KIND:     Ingress
VERSION:  networking.k8s.io/v1

RESOURCE: spec <Object>

DESCRIPTION:
     Spec is the desired state of the Ingress. More info:
     https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

     IngressSpec describes the Ingress the user wishes to exist.

FIELDS:
   defaultBackend   <Object>
     DefaultBackend is the backend that should handle requests that don't
     match any rule. If Rules are not specified, DefaultBackend must be
     specified. If DefaultBackend is not set, the handling of requests that do
     not match any of the rules will be up to the Ingress controller.

   ingressClassName   <string>
     IngressClassName is the name of the IngressClass cluster resource. The
     associated IngressClass defines which controller will implement the
     resource. This replaces the deprecated `kubernetes.io/ingress.class`
     annotation. For backwards compatibility, when that annotation is set, it
     must be given precedence over this field. The controller may emit a
     warning if the field and annotation have different values.
     Implementations of this API should ignore Ingresses without a class
     specified. An IngressClass resource may be marked as default, which can
     be used to set a default value for this field. For more information,
     refer to the IngressClass documentation.

   rules   <[]Object>
     # The list of forwarding rules for this Ingress. If no rules are defined, or no rule
     # matches, all traffic is forwarded to the default backend (defaultBackend).
     A list of host rules used to configure the Ingress. If unspecified, or
     no rule matches, all traffic is sent to the default backend.

   tls   <[]Object>
     # TLS configuration. Currently TLS is served only on the default port 443; if list
     # members point to different hosts, they share that port via the SNI TLS extension,
     # provided the controller supports SNI.
     TLS configuration. Currently the Ingress only supports a single TLS port,
     443. If multiple members of this list specify different hosts, they will
     be multiplexed on the same port according to the hostname specified
     through the SNI TLS extension, if the ingress controller fulfilling the
     ingress supports SNI.
```
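As a sketch of how `tls` works together with `rules`, the function below renders an Ingress that terminates TLS for one host using a certificate stored in a Secret of type `kubernetes.io/tls`. The Secret name `tomcat-tls`, the generated Ingress name and the reuse of the `tomcat` Service on port 8080 are assumptions for illustration.

```shell
#!/usr/bin/env bash
# render_tls_ingress HOST SECRET SERVICE PORT -> prints an Ingress that serves
# HOST over TLS (certificate from SECRET) and routes all paths to SERVICE:PORT.
render_tls_ingress() {
  local host="$1" secret="$2" svc="$3" port="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${svc}-ingress-tls
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - ${host}
    secretName: ${secret}
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${svc}
            port:
              number: ${port}
EOF
}

render_tls_ingress tomcat.lucky.com tomcat-tls tomcat 8080
```

The Secret must live in the same namespace as the Ingress; one way to create it from existing certificate files is `kubectl create secret tls tomcat-tls --cert=tls.crt --key=tls.key` (file names hypothetical).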

View the description of the Ingress spec.rules field:

```
[root@k8s-master1 nginx-ingress]# kubectl explain ingress.spec.rules
KIND:     Ingress
VERSION:  networking.k8s.io/v1

RESOURCE: rules <[]Object>

DESCRIPTION:
     A list of host rules used to configure the Ingress. If unspecified, or no
     rule matches, all traffic is sent to the default backend.

     IngressRule represents the rules mapping the paths under a specified host
     to the related backend services. Incoming requests are first evaluated for
     a host match, then routed to the backend associated with the matching
     IngressRuleValue.

FIELDS:
   host   <string>
     # IP addresses are not allowed here, nor is a ":PORT" suffix; leaving the field empty
     # matches all host names. Host rules map URL paths on a host to the related backend Services.
     Host is the fully qualified domain name of a network host, as defined by
     RFC 3986. Note the following deviations from the "host" part of the URI as
     defined in RFC 3986: 1. IPs are not allowed. Currently an IngressRuleValue
     can only apply to the IP in the Spec of the parent Ingress. 2. The `:`
     delimiter is not respected because ports are not allowed. Currently the
     port of an Ingress is implicitly :80 for http and :443 for https. Both
     these may change in the future. Incoming requests are matched against the
     host before the IngressRuleValue. If the host is unspecified, the Ingress
     routes all traffic based on the specified IngressRuleValue.

     Host can be "precise" which is a domain name without the terminating dot
     of a network host (e.g. "foo.bar.com") or "wildcard", which is a domain
     name prefixed with a single wildcard label (e.g. "*.foo.com"). The
     wildcard character '*' must appear by itself as the first DNS label and
     matches only a single label. You cannot have a wildcard label by itself
     (e.g. Host == "*"). Requests will be matched against the Host field in
     the following way: 1. If Host is precise, the request matches this rule
     if the http host header is equal to Host. 2. If Host is a wildcard, then
     the request matches this rule if the http host header is to equal to the
     suffix (removing the first label) of the wildcard rule.

   http   <Object>
```
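The two matching modes described above (precise and single-label wildcard) can be modelled in a few lines of shell. This is a simplified sketch, not a controller's actual code: it compares names case-sensitively and does not validate the rule itself.

```shell
#!/usr/bin/env bash
# host_matches RULE_HOST REQUEST_HOST: succeeds if a request with this Host
# header would match an Ingress rule with this host (simplified model).
host_matches() {
  local rule="$1" req="${2%%:*}"   # ports are not part of host matching
  local first rest
  if [ -z "$rule" ]; then
    return 0                       # empty host: the rule applies to every host
  fi
  case "$rule" in
    '*.'*)                         # wildcard: "*" stands for exactly one label
      first="${req%%.*}"           # first label of the request host
      rest="${req#*.}"             # request host without its first label
      [ "$rest" != "$req" ] && [ -n "$first" ] && [ "$rest" = "${rule#\*.}" ]
      ;;
    *)                             # precise: Host header must equal the rule
      [ "$req" = "$rule" ]
      ;;
  esac
}

host_matches foo.bar.com foo.bar.com && echo match          # match
host_matches '*.foo.com' bar.foo.com && echo match          # match
host_matches '*.foo.com' baz.bar.foo.com || echo no-match   # no-match
host_matches '*.foo.com' foo.com || echo no-match           # no-match
```

The last two cases show the "single label" restriction: `*.foo.com` neither reaches two levels down nor matches the bare `foo.com`.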

The following example defines an Ingress resource with a single forwarding rule, which proxies requests for tomcat.lucky.com to port 8080 of the Service named tomcat:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  namespace: default
spec:
  ingressClassName: nginx
  rules:                      # forwarding rules for the backends
  - host: tomcat.lucky.com    # route by domain name
    http:
      paths:
      - path: /               # request path to match; change it for URL-based routing.
                              # With pathType Prefix (or Exact) the path is required and must start with "/"
        pathType: Prefix
        backend:              # the backend Service
          service:
            name: tomcat
            port:
              number: 8080
```
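With `pathType: Prefix`, Kubernetes matches paths element by element (split on `/`) rather than as a raw string prefix, so `/foo` matches `/foo/bar` but not `/foobar`, and a trailing slash on the rule path is ignored. A simplified shell model of that rule (it ignores repeated slashes and other edge cases):

```shell
#!/usr/bin/env bash
# prefix_matches RULE_PATH REQUEST_PATH: simplified model of pathType: Prefix.
prefix_matches() {
  local p="${1%/}" req="$2"        # a trailing "/" on the rule path is ignored
  if [ -z "$p" ]; then
    return 0                       # "/" matches every request path
  fi
  case "$req" in
    "$p" | "$p"/*) return 0 ;;     # same path, or p followed by more elements
    *) return 1 ;;
  esac
}

prefix_matches / /index.jsp && echo match        # match
prefix_matches /foo /foo/bar && echo match       # match
prefix_matches /foo/ /foo && echo match          # match
prefix_matches /foo /foobar || echo no-match     # no-match
```

To try the real Ingress from outside the cluster without a DNS record, send the expected Host header to the controller's NodePort, for example `curl -H "Host: tomcat.lucky.com" http://10.0.0.131:31864/` (this assumes the `tomcat` Service exists).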

2. High availability for nginx-ingress-controller

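The nginx-ingress-controller Pods deployed earlier run on k8s-node1 and k8s-node2. On these two nodes, keepalived + nginx can make the controller highly available: nginx on each node proxies to both controllers, and keepalived floats a virtual IP between the two nodes.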

```
[root@k8s-master1 nginx-ingress]# kubectl get pods -n ingress-nginx -o wide
NAME                                        READY   STATUS      RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-ghrc5        0/1     Completed   0          60m   10.244.36.126   k8s-node1   <none>           <none>
ingress-nginx-admission-patch-qfmsp         0/1     Completed   0          60m   10.244.36.125   k8s-node1   <none>           <none>
ingress-nginx-controller-6c8ffbbfcf-6stl4   1/1     Running     0          60m   10.0.0.133      k8s-node2   <none>           <none>
ingress-nginx-controller-6c8ffbbfcf-xlwd2   1/1     Running     0          60m   10.0.0.132      k8s-node1   <none>           <none>
```

1) Install keepalived and nginx on both k8s-node1 and k8s-node2

```
[root@k8s-node1 ~]# yum install nginx keepalived -y
[root@k8s-node2 ~]# yum install nginx keepalived -y
```

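2) Edit the nginx configuration file (identical on the primary and backup nodes). Layer-4 proxying uses the stream module, so install nginx-mod-stream first: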

```
[root@k8s-node1 ~]# yum install nginx-mod-stream -y
[root@k8s-node1 ~]# vim /etc/nginx/nginx.conf
[root@k8s-node1 ~]# cat /etc/nginx/nginx.conf
# For more information on configuration, see:
#   * Official English Documentation: http://nginx.org/en/docs/
#   * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

stream {
    log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log  /var/log/nginx/k8s-access.log  main;

    # Despite its name, this upstream points at the two ingress controllers (hostNetwork, port 80).
    upstream k8s-apiserver {
        server 10.0.0.132:80;
        server 10.0.0.133:80;
    }

    server {
        listen 30080;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 4096;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;
}
```

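Note: the ingress controller Pods run with hostNetwork and already occupy ports 80/443 on these nodes, so the host's nginx must listen on a different port. A port above 30000, such as 30080, is used here, and clients then access the service at <domain>:30080.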

Check that nginx.conf is valid:

```
[root@k8s-node1 ~]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
```

Note: the nginx configuration on k8s-node2 is identical to that on k8s-node1 and is not shown again.

3) Configure keepalived

```
# keepalived configuration on the primary node
[root@k8s-node1 ~]# vim /etc/keepalived/keepalived.conf
[root@k8s-node1 ~]# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51   # VRRP router ID: the same on primary and backup, unique per VRRP instance
    priority 100           # priority; the backup server uses 90
    advert_int 1           # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # virtual IP
    virtual_ipaddress {
        10.0.0.134/24
    }
    track_script {
        check_nginx
    }
}
```

Here, vrrp_script specifies the script that checks whether nginx is working (its result decides whether to fail over), and virtual_ipaddress is the virtual IP (VIP).

```
[root@k8s-node1 ~]# vim /etc/keepalived/check_nginx.sh
[root@k8s-node1 ~]# cat /etc/keepalived/check_nginx.sh
#!/bin/bash
# 1. Check whether nginx is alive.
#    Do not look for nginx processes: the nginx-ingress controller also runs nginx processes on this host.
counter=$(netstat -lntup | grep 30080 | wc -l)
if [ "$counter" -eq 0 ]; then
    # 2. If it is not alive, try to start nginx
    systemctl start nginx
    sleep 2
    # 3. After waiting 2 seconds, check the nginx status again
    counter=$(netstat -lntup | grep 30080 | wc -l)
    # 4. If nginx is still not alive, stop keepalived so that the VIP fails over
    if [ "$counter" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
```

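Looking at the nginx processes: those with UID 101 were started by the nginx-ingress controller, while those owned by root and nginx belong to the host's own nginx. This is why the check script looks at the listening port instead of the nginx processes.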

```
[root@k8s-node1 ~]# ps -ef | grep nginx
101       22852  22832  0 16:43 ?        00:00:00 /usr/bin/dumb-init -- /nginx-ingress-controller --election-id=ingress-controller-leader --controller-class=k8s.io/ingress-nginx --configmap=ingress-nginx/ingress-nginx-controller --validating-webhook=:8443 --validating-webhook-certificate=/usr/local/certificates/cert --validating-webhook-key=/usr/local/certificates/key
101       22866  22852  0 16:43 ?        00:00:22 /nginx-ingress-controller --election-id=ingress-controller-leader --controller-class=k8s.io/ingress-nginx --configmap=ingress-nginx/ingress-nginx-controller --validating-webhook=:8443 --validating-webhook-certificate=/usr/local/certificates/cert --validating-webhook-key=/usr/local/certificates/key
101       22913  22866  0 16:43 ?        00:00:00 nginx: master process /usr/local/nginx/sbin/nginx -c /etc/nginx/nginx.conf
101       22920  22913  0 16:43 ?        00:00:04 nginx: worker process
101       22921  22913  0 16:43 ?        00:00:03 nginx: worker process
101       22922  22913  0 16:43 ?        00:00:03 nginx: worker process
101       22923  22913  0 16:43 ?        00:00:03 nginx: worker process
101       22924  22913  0 16:43 ?        00:00:00 nginx: cache manager process
root      92257      1  0 18:17 ?        00:00:00 nginx: master process /usr/sbin/nginx
nginx     92258  92257  0 18:17 ?        00:00:00 nginx: worker process
nginx     92259  92257  0 18:17 ?        00:00:00 nginx: worker process
nginx     92260  92257  0 18:17 ?        00:00:00 nginx: worker process
nginx     92261  92257  0 18:17 ?        00:00:00 nginx: worker process
root     100562  81969  0 18:28 pts/2    00:00:00 grep --color=auto nginx
```
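One caveat of `netstat -lntup | grep 30080` is that it matches the string anywhere on the line, including the PID/Program column (a process with PID 30080 would count as "listening"). A slightly stricter variant, offered as a sketch, compares only the port of the local-address column, which is field 4 in both `netstat -lnt` and `ss -lnt` output:

```shell
#!/usr/bin/env bash
# listening_on PORT: reads `ss -lnt` or `netstat -lnt(up)` output on stdin and
# succeeds only if some socket's *local* address (field 4) ends in ":PORT".
listening_on() {
  awk -v port="$1" '
    { n = split($4, part, ":"); if (part[n] == port) found = 1 }
    END { exit !found }'
}

# Possible use inside check_nginx.sh (not executed here):
#   if ! ss -lnt | listening_on 30080; then systemctl start nginx; ... fi
```

The rest of the check script (start nginx, wait, stop keepalived on a second failure) can stay as it is.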

Make the check script executable:

```
[root@k8s-node1 ~]# chmod +x /etc/keepalived/check_nginx.sh
[root@k8s-node1 ~]# ls -lrt /etc/keepalived/check_nginx.sh
-rwxr-xr-x 1 root root 468 Sep 17 18:27 /etc/keepalived/check_nginx.sh
```

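Configure keepalived on the backup node k8s-node2. It uses a lower priority (90) and the same virtual_router_id and VIP as the primary: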

```
[root@k8s-node2 ~]# vim /etc/keepalived/keepalived.conf
[root@k8s-node2 ~]# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP           # standby node; the election is decided by priority
    interface eth0
    virtual_router_id 51   # must match the primary node
    priority 90            # lower than the primary's 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # virtual IP
    virtual_ipaddress {
        10.0.0.134/24
    }
    track_script {
        check_nginx
    }
}
```

The nginx check script is the same as on the primary node k8s-node1:

```
[root@k8s-node2 ~]# cat /etc/keepalived/check_nginx.sh
#!/bin/bash
# 1. Check whether nginx is alive
counter=$(netstat -lntup | grep 30080 | wc -l)
if [ "$counter" -eq 0 ]; then
    # 2. If it is not alive, try to start nginx
    systemctl start nginx
    sleep 2
    # 3. After waiting 2 seconds, check the nginx status again
    counter=$(netstat -lntup | grep 30080 | wc -l)
    # 4. If nginx is still not alive, stop keepalived so that the VIP fails over
    if [ "$counter" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
[root@k8s-node2 ~]# ll /etc/keepalived/check_nginx.sh
-rwxr-xr-x 1 root root 468 Sep 17 18:34 /etc/keepalived/check_nginx.sh
```

4) Start the services (same on primary and backup)

```
[root@k8s-node1 ~]# systemctl daemon-reload
[root@k8s-node1 ~]# systemctl enable nginx keepalived
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.
[root@k8s-node1 ~]# systemctl start nginx keepalived
[root@k8s-node1 ~]# systemctl status nginx
● nginx.service - The nginx HTTP and reverse proxy server
   Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2022-09-17 18:38:43 CST; 4s ago
  Process: 108019 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)
  Process: 108015 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)
  Process: 108013 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)
 Main PID: 108021 (nginx)
   Memory: 3.1M
   CGroup: /system.slice/nginx.service
           ├─108021 nginx: master process /usr/sbin/nginx
           ├─108022 nginx: worker process
           ├─108023 nginx: worker process
           ├─108024 nginx: worker process
           └─108025 nginx: worker process

Sep 17 18:38:43 k8s-node1 systemd[1]: Starting The nginx HTTP and reverse proxy server...
Sep 17 18:38:43 k8s-node1 nginx[108015]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
Sep 17 18:38:43 k8s-node1 nginx[108015]: nginx: configuration file /etc/nginx/nginx.conf test is successful
Sep 17 18:38:43 k8s-node1 systemd[1]: Started The nginx HTTP and reverse proxy server.
[root@k8s-node1 ~]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2022-09-17 18:38:43 CST; 10s ago
  Process: 108017 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
 Main PID: 108026 (keepalived)
   Memory: 1.7M
   CGroup: /system.slice/keepalived.service
           ├─108026 /usr/sbin/keepalived -D
           ├─108027 /usr/sbin/keepalived -D
           └─108028 /usr/sbin/keepalived -D

Sep 17 18:38:45 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:45 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:45 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:45 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:38:50 k8s-node1 Keepalived_vrrp[108028]: Sending gratuitous ARP on eth0 for 10.0.0.134
```

Perform the same steps on the backup node k8s-node2; they are not repeated here.

5) Verify that the VIP is bound (10.0.0.134 should appear on eth0 of k8s-node1 as a secondary address)

```
[root@k8s-node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:df:4d:f3 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.132/24 brd 10.0.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.0.0.134/24 scope global secondary eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fedf:4df3/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:10:98:97:a5 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
7: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
    inet 10.244.36.64/32 scope global tunl0
       valid_lft forever preferred_lft forever
11: cali458a3d2423b@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
```
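Checking for the VIP by eye works, but the same check can be scripted, for example for monitoring. The following is a minimal Python sketch (the function name has_vip and the SAMPLE text are illustrative, not part of the original setup) that scans "ip addr" output and reports whether a given IPv4 address is assigned to a given interface:

```python
import re


def has_vip(ip_addr_output: str, vip: str, iface: str = "eth0") -> bool:
    """Return True if `vip` is listed as an IPv4 address of `iface`."""
    current_iface = None
    for line in ip_addr_output.splitlines():
        # Interface header, e.g. "2: eth0: <BROADCAST,...>" or "7: tunl0@NONE: ..."
        header = re.match(r"^\d+:\s+([^:@\s]+)", line)
        if header:
            current_iface = header.group(1)
            continue
        # Address line, e.g. "    inet 10.0.0.134/24 scope global secondary eth0".
        # "inet6" lines do not match because "inet" must be followed by whitespace.
        addr = re.match(r"^\s+inet\s+(\d{1,3}(?:\.\d{1,3}){3})/\d+", line)
        if addr and current_iface == iface and addr.group(1) == vip:
            return True
    return False


# Excerpt of the k8s-node1 output shown above.
SAMPLE = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:df:4d:f3 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.132/24 brd 10.0.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.0.0.134/24 scope global secondary eth0
       valid_lft forever preferred_lft forever
"""

if __name__ == "__main__":
    print(has_vip(SAMPLE, "10.0.0.134"))  # True on the node that holds the VIP
```

On a real node the input could come from subprocess.run(["ip", "addr"], capture_output=True, text=True).stdout.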

6) Test keepalived failover

Stop the keepalived service on k8s-node1 and check whether the virtual IP fails over to k8s-node2:

```
[root@k8s-node1 ~]# systemctl stop keepalived
# k8s-node1 no longer holds the virtual IP
[root@k8s-node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:df:4d:f3 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.132/24 brd 10.0.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fedf:4df3/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:10:98:97:a5 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
7: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
    inet 10.244.36.64/32 scope global tunl0
       valid_lft forever preferred_lft forever
11: cali458a3d2423b@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
```

Check whether k8s-node2 now holds the virtual IP:

```
# The VIP has moved to the backup node k8s-node2
[root@k8s-node2 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:79:77:44 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.133/24 brd 10.0.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.0.0.134/24 scope global secondary eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe79:7744/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:61:01:84:52 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
5: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
    inet 10.244.169.128/32 scope global tunl0
       valid_lft forever preferred_lft forever
```

Start keepalived on k8s-node1 again. The VIP moves back to k8s-node1, as the output below shows:

```
[root@k8s-node1 ~]# systemctl start keepalived
[root@k8s-node1 ~]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2022-09-17 18:46:21 CST; 11s ago
  Process: 115315 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
 Main PID: 115316 (keepalived)
   Memory: 1.4M
   CGroup: /system.slice/keepalived.service
           ├─115316 /usr/sbin/keepalived -D
           ├─115317 /usr/sbin/keepalived -D
           └─115318 /usr/sbin/keepalived -D

Sep 17 18:46:22 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:22 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:22 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:22 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
Sep 17 18:46:27 k8s-node1 Keepalived_vrrp[115318]: Sending gratuitous ARP on eth0 for 10.0.0.134
[root@k8s-node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:df:4d:f3 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.132/24 brd 10.0.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.0.0.134/24 scope global secondary eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fedf:4df3/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:10:98:97:a5 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
7: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
    inet 10.244.36.64/32 scope global tunl0
       valid_lft forever preferred_lft forever
11: cali458a3d2423b@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
```
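The failover test follows VRRP's basic rule: among the live nodes, the one with the highest priority holds the VIP, and with preemption (keepalived's default unless nopreempt is set) a recovered higher-priority node takes it back. The sketch below models only that rule; the priorities 100 and 90 are hypothetical, since the keepalived.conf values are not shown in this section, and real VRRP also involves advertisements, timers and tie-breaking by IP address.

```python
from typing import Dict, Optional


def elect_master(priorities: Dict[str, int], alive: Dict[str, bool],
                 current: Optional[str] = None, preempt: bool = True) -> Optional[str]:
    """Return the node that should hold the VIP under a simplified VRRP model."""
    candidates = [node for node in priorities if alive.get(node, False)]
    if not candidates:
        return None  # nobody is alive, so nobody holds the VIP
    # Without preemption, a live current master keeps the VIP even if a
    # higher-priority node has come back.
    if not preempt and current in candidates:
        return current
    # Highest priority wins; ties are resolved by dict order here
    # (real VRRP compares the nodes' primary IP addresses instead).
    return max(candidates, key=lambda node: priorities[node])


if __name__ == "__main__":
    prio = {"k8s-node1": 100, "k8s-node2": 90}  # hypothetical priorities
    holder = elect_master(prio, {"k8s-node1": True, "k8s-node2": True})
    print(holder)  # k8s-node1
    holder = elect_master(prio, {"k8s-node1": False, "k8s-node2": True}, holder)
    print(holder)  # k8s-node2 (keepalived stopped on k8s-node1)
    holder = elect_master(prio, {"k8s-node1": True, "k8s-node2": True}, holder)
    print(holder)  # k8s-node1 (takes the VIP back by preemption)
```

Passing preempt=False in the last step would leave the VIP on k8s-node2, which is the behaviour to expect if nopreempt is configured.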

3. Single-Service Ingress

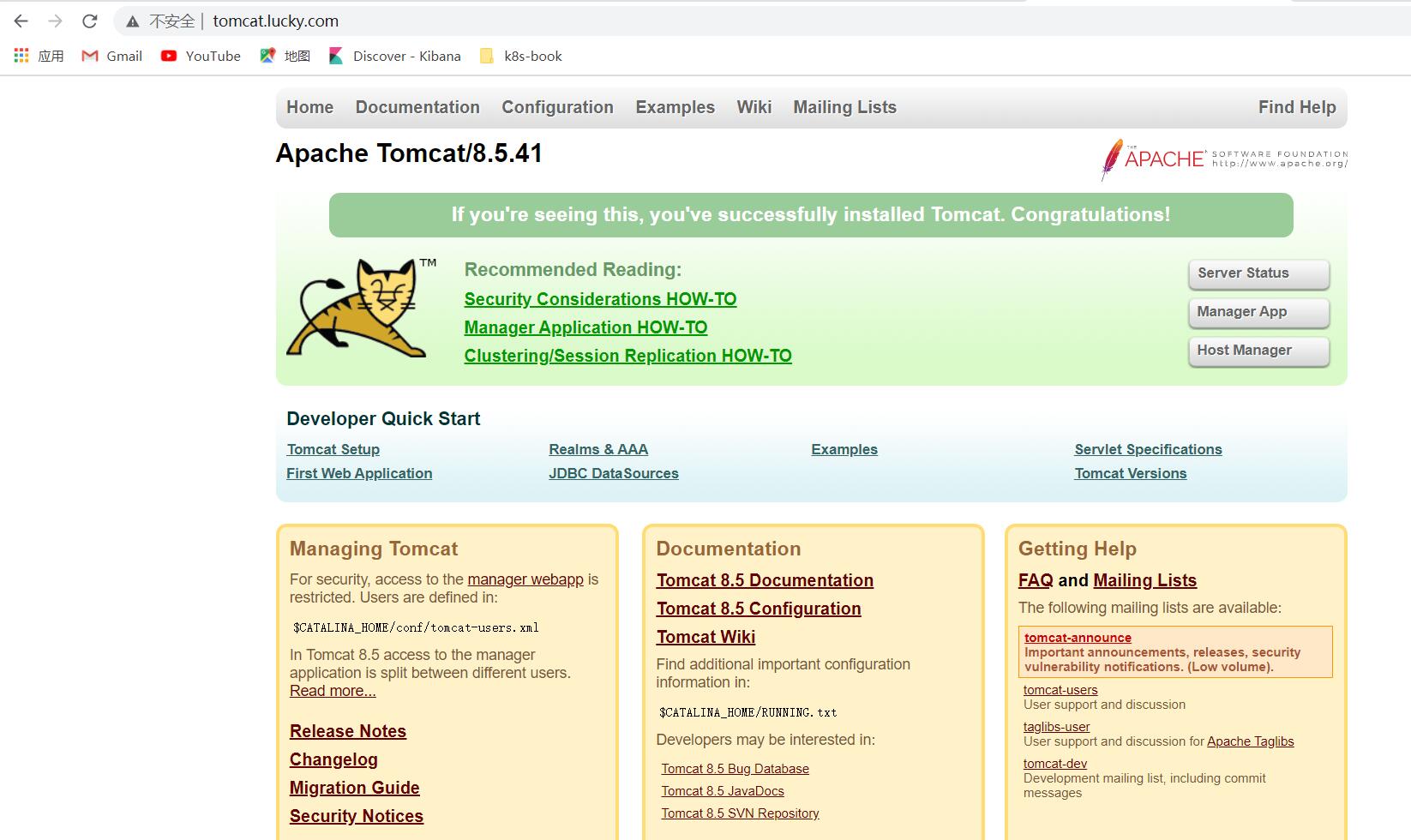

To test Ingress as an HTTP proxy for a site running inside the cluster, deploy a Tomcat service as the backend:

```
[root@k8s-master1 ~]# mkdir ingress
[root@k8s-master1 ~]# cd ingress/
[root@k8s-master1 ingress]# vim tomcat-demo.yaml
[root@k8s-master1 ingress]# cat tomcat-demo.yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  selector:
    app: tomcat
    release: canary
  clusterIP: None
  ports:
  - name: http
    targetPort: 8080
    port: 8080
  - name: ajp
    targetPort: 8009
    port: 8009
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat
      release: canary
  template:
    metadata:
      labels:
        app: tomcat
        release: canary
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5-jre8-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 8080
        - name: ajp              # each port needs its own list item
          containerPort: 8009
[root@k8s-master1 ingress]# kubectl apply -f tomcat-demo.yaml
service/tomcat created
deployment.apps/tomcat-deploy unchanged
[root@k8s-master1 ingress]# kubectl get pods -o wide
NAME                             READY   STATUS    RESTARTS   AGE    IP              NODE        NOMINATED NODE   READINESS GATES
tomcat-deploy-5484c8c5fc-7kcmf   1/1     Running   0          3m3s   10.244.36.127   k8s-node1   <none>           <none>
tomcat-deploy-5484c8c5fc-jkgt4   1/1     Running   0          3m2s   10.244.36.68    k8s-node1   <none>           <none>
[root@k8s-master1 ingress]# kubectl get svc -o wide
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE   SELECTOR
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP             46d   <none>
tomcat       ClusterIP   None         <none>        8080/TCP,8009/TCP   12s   app=tomcat,release=canary
```

Write the Ingress rule manifest:

```
[root@k8s-master1 ingress]# vim ingress-demo.yaml
[root@k8s-master1 ingress]# cat ingress-demo.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: tomcat.lucky.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat
            port:
              number: 8080
[root@k8s-master1 ingress]# kubectl apply -f ingress-demo.yaml
ingress.networking.k8s.io/ingress-tomcat created
[root@k8s-master1 ingress]# kubectl get ing
NAME             CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-tomcat   nginx   tomcat.lucky.com             80      5s
```

Inspect the Ingress resource in detail:

```
[root@k8s-master1 ingress]# kubectl describe ing ingress-tomcat
Name:             ingress-tomcat
Namespace:        default
Address:          10.0.0.132,10.0.0.133
Default backend:  default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
Rules:
  Host              Path  Backends
  ----              ----  --------
  tomcat.lucky.com
                    /   tomcat:8080 (10.244.36.127:8080,10.244.36.68:8080)
Annotations:        <none>
Events:
  Type    Reason  Age                From                      Message
  ----    ------  ----               ----                      -------
  Normal  Sync    21s (x2 over 41s)  nginx-ingress-controller  Scheduled for sync
  Normal  Sync    21s (x2 over 41s)  nginx-ingress-controller  Scheduled for sync
```

The output of kubectl describe ing ingress-tomcat reports endpoints "default-http-backend" not found for the default backend.

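Cause: no default backend was configured. A default backend receives all traffic that matches no rule: if an HTTP request matches none of the hosts or paths in the Ingress objects, it is routed to the default backend. The default backend is usually a configuration option of the Ingress controller, and it can also be set per Ingress through spec.defaultBackend. Strictly speaking, the message is informational: when spec.defaultBackend is not set, kubectl describe displays a placeholder default-http-backend:80 and cannot find its endpoints, while ingress-nginx still answers unmatched requests itself (with a 404 page).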

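Fix: write a manifest for a default backend (a Deployment and a Service named default-http-backend) and apply it. Note that the defaultbackend image listens on port 8080 (see containerPort and the livenessProbe below), so the Service's targetPort should be 8080; with targetPort: 80, as captured here, requests that actually fall through to the default backend would not reach the container.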

```
[root@k8s-master1 ingress]# vim backend.yaml
[root@k8s-master1 ingress]# cat backend.yaml
kind: Service
apiVersion: v1
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: default-http-backend
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: default-http-backend
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        image: hb562100/defaultbackend-amd64:1.5
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
[root@k8s-master1 ingress]# kubectl apply -f backend.yaml
service/default-http-backend unchanged
deployment.apps/default-http-backend created
[root@k8s-master1 ingress]# kubectl get svc
NAME                   TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
default-http-backend   ClusterIP   10.110.15.223   <none>        80/TCP              17m
kubernetes             ClusterIP   10.96.0.1       <none>        443/TCP             46d
tomcat                 ClusterIP   None            <none>        8080/TCP,8009/TCP   90m
[root@k8s-master1 ingress]# kubectl get pods
NAME                                    READY   STATUS    RESTARTS   AGE
default-http-backend-5468d55578-4wpwd   1/1     Running   0          119s
tomcat-deploy-5484c8c5fc-7kcmf          1/1     Running   0          93m
tomcat-deploy-5484c8c5fc-jkgt4          1/1     Running   0          93m
```

Update the Ingress manifest ingress-demo.yaml so that spec.defaultBackend points at this Service:

```
[root@k8s-master1 ingress]# vim ingress-demo.yaml
[root@k8s-master1 ingress]# cat ingress-demo.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: default-http-backend
      port:
        number: 80
  rules:
  - host: tomcat.lucky.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat
            port:
              number: 8080
[root@k8s-master1 ingress]# kubectl apply -f ingress-demo.yaml
ingress.networking.k8s.io/ingress-tomcat created
[root@k8s-master1 ingress]# kubectl get ing
NAME             CLASS   HOSTS              ADDRESS   PORTS   AGE
ingress-tomcat   nginx   tomcat.lucky.com             80      16s
[root@k8s-master1 ingress]# kubectl get ing
NAME             CLASS   HOSTS              ADDRESS                 PORTS   AGE
ingress-tomcat   nginx   tomcat.lucky.com   10.0.0.132,10.0.0.133   80      6m23s
[root@k8s-master1 ingress]# kubectl describe ing ingress-tomcat
Name:             ingress-tomcat
Namespace:        default
Address:          10.0.0.132,10.0.0.133
Default backend:  default-http-backend:80 (10.244.169.155:80)
Rules:
  Host              Path  Backends
  ----              ----  --------
  tomcat.lucky.com
                    /   tomcat:8080 (10.244.36.127:8080,10.244.36.68:8080)
Annotations:        <none>
Events:
  Type    Reason  Age                 From                      Message
  ----    ------  ----                ----                      -------
  Normal  Sync    6m (x2 over 6m26s)  nginx-ingress-controller  Scheduled for sync
  Normal  Sync    6m (x2 over 6m26s)  nginx-ingress-controller  Scheduled for sync
```

The default backend now resolves to an endpoint, and the problem is resolved.

Edit /etc/hosts so that tomcat.lucky.com resolves to the VIP 10.0.0.134, then access it:

```
[root@k8s-master1 ingress]# vim /etc/hosts
[root@k8s-master1 ingress]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.131 k8s-master1
10.0.0.132 k8s-node1
10.0.0.133 k8s-node2
10.0.0.134 tomcat.lucky.com
[root@k8s-master1 ingress]# curl -kv tomcat.lucky.com
* About to connect() to tomcat.lucky.com port 80 (#0)
*   Trying 10.0.0.134...
* Connected to tomcat.lucky.com (10.0.0.134) port 80 (#0)
> GET / HTTP/1.1
> User-Agent: curl/7.29.0
> Host: tomcat.lucky.com
> Accept: */*
>
< HTTP/1.1 200
< Date: Sat, 17 Sep 2022 12:43:38 GMT
< Content-Type: text/html;charset=UTF-8
< Transfer-Encoding: chunked
< Connection: keep-alive
<


<!DOCTYPE html>
<html lang="en">
    <head>
        <meta charset="UTF-8" />
        <title>Apache Tomcat/8.5.41</title>
        <link href="favicon.ico" rel="icon" type="image/x-icon" />
        <link href="favicon.ico" rel="shortcut icon" type="image/x-icon" />
        <link href="tomcat.css" rel="stylesheet" type="text/css" />
    </head>
```

On your own computer, add the following line to the local hosts file:

```
10.0.0.134 tomcat.lucky.com
```

Open tomcat.lucky.com in a browser; the Apache Tomcat welcome page appears.

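(This walkthrough uses the ingress-nginx controller; if Traefik were the Ingress controller, the same routing information would also be visible on the Traefik dashboard.)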

IV. Ingress Resource Types in Practice

Take a simple nginx (OpenResty) application as an example and deploy version v1 first:

```
[root@k8s-master1 ~]# mkdir web-canary
[root@k8s-master1 ~]# cd web-canary/
[root@k8s-master1 web-canary]# vim nginx-v1.yaml
[root@k8s-master1 web-canary]# cat nginx-v1.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v1
  template:
    metadata:
      labels:
        app: nginx
        version: v1
    spec:
      containers:
      - name: nginx
        image: "openresty/openresty:centos"
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v1
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v1
  name: nginx-v1
data:
  nginx.conf: |-
    worker_processes  1;

    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections  1024;
    }

    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v1")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v1
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v1
[root@k8s-master1 web-canary]# kubectl apply -f nginx-v1.yaml
deployment.apps/nginx-v1 created
configmap/nginx-v1 created
service/nginx-v1 created
[root@k8s-master1 web-canary]# kubectl get pods -o wide -l app=nginx
NAME                        READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
nginx-v1-79bc94ff97-xm7m2   1/1     Running   0          55s   10.244.169.156   k8s-node2   <none>           <none>
```

Then deploy version v2:

```
[root@k8s-master1 web-canary]# vim nginx-v2.yaml
[root@k8s-master1 web-canary]# cat nginx-v2.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v2
  template:
    metadata:
      labels:
        app: nginx
        version: v2
    spec:
      containers:
      - name: nginx
        image: "openresty/openresty:centos"
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          name: config
          subPath: nginx.conf
      volumes:
      - name: config
        configMap:
          name: nginx-v2
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: nginx
    version: v2
  name: nginx-v2
data:
  nginx.conf: |-
    worker_processes  1;

    events {
        accept_mutex on;
        multi_accept on;
        use epoll;
        worker_connections  1024;
    }

    http {
        ignore_invalid_headers off;
        server {
            listen 80;
            location / {
                access_by_lua '
                    local header_str = ngx.say("nginx-v2")
                ';
            }
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-v2
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx
    version: v2
[root@k8s-master1 web-canary]# kubectl apply -f nginx-v2.yaml
deployment.apps/nginx-v2 created
configmap/nginx-v2 created
service/nginx-v2 created
[root@k8s-master1 web-canary]# kubectl get pods -o wide -l version=v2
NAME                        READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
nginx-v2-5f885975d5-2zvq6   1/1     Running   0          19s   10.244.36.69   k8s-node1   <none>           <none>
```

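1. Name-based virtual hosting

Name-based virtual hosts route HTTP traffic for multiple host names that arrive at the same IP address.

The following Ingress has the load balancer route requests by the Host header: requests for canary.example.com go to nginx-v1, and requests for test.example.com go to nginx-v2.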

```
[root@k8s-master1 web-canary]# vim host-ingress.yaml
[root@k8s-master1 web-canary]# cat host-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: default-http-backend
      port:
        number: 80
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-v1
            port:
              number: 80
  - host: test.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-v2
            port:
              number: 80
[root@k8s-master1 web-canary]# kubectl apply -f host-ingress.yaml
ingress.networking.k8s.io/nginx created
[root@k8s-master1 web-canary]# kubectl get ing
NAME             CLASS   HOSTS                                 ADDRESS                 PORTS   AGE
ingress-tomcat   nginx   tomcat.lucky.com                      10.0.0.132,10.0.0.133   80      102m
nginx            nginx   canary.example.com,test.example.com                           80      12s
[root@k8s-master1 web-canary]# kubectl get ing
NAME             CLASS   HOSTS                                 ADDRESS                 PORTS   AGE
ingress-tomcat   nginx   tomcat.lucky.com                      10.0.0.132,10.0.0.133   80      102m
nginx            nginx   canary.example.com,test.example.com   10.0.0.132,10.0.0.133   80      41s
[root@k8s-master1 web-canary]# kubectl describe ing nginx
Name:             nginx
Namespace:        default
Address:          10.0.0.132,10.0.0.133
Default backend:  default-http-backend:80 (10.244.169.155:80)
Rules:
  Host                Path  Backends
  ----                ----  --------
  canary.example.com
                      /   nginx-v1:80 (10.244.169.156:80)
  test.example.com
                      /   nginx-v2:80 (10.244.36.69:80)
Annotations:          <none>
Events:
  Type    Reason  Age               From                      Message
  ----    ------  ----              ----                      -------
  Normal  Sync    5s (x2 over 31s)  nginx-ingress-controller  Scheduled for sync
  Normal  Sync    5s (x2 over 31s)  nginx-ingress-controller  Scheduled for sync
```

Test access by sending requests with different Host headers to the VIP:

```
[root@k8s-master1 web-canary]# curl -H "Host: canary.example.com" http://10.0.0.134
nginx-v1
[root@k8s-master1 web-canary]# curl -H "Host: test.example.com" http://10.0.0.134
nginx-v2
```

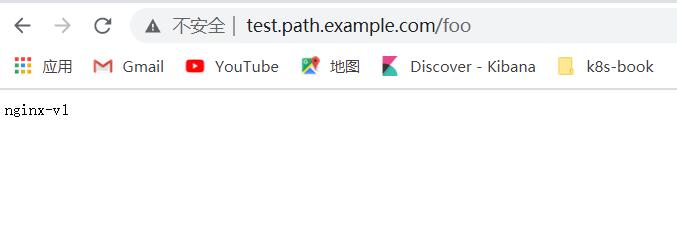
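The curl test works because both host names reach the same VIP and the controller chooses the backend from the HTTP Host header alone. The toy Python server below demonstrates just that mechanism on localhost; it does not proxy anything, it simply answers with the name of the backend that the hypothetical VHOSTS table (mirroring host-ingress.yaml) assigns to the Host header.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical host -> backend table mirroring host-ingress.yaml.
VHOSTS = {"canary.example.com": "nginx-v1", "test.example.com": "nginx-v2"}


class VHostHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Strip an optional ":port" suffix from the Host header.
        host = (self.headers.get("Host") or "").split(":")[0]
        backend = VHOSTS.get(host)
        status, body = (200, backend) if backend else (404, "no matching host")
        data = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):  # keep the demo output quiet
        pass


def fetch(port: int, host_header: str):
    # Equivalent to: curl -H "Host: <host_header>" http://127.0.0.1:<port>/
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", "/", headers={"Host": host_header})
        resp = conn.getresponse()
        return resp.status, resp.read().decode()
    finally:
        conn.close()


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), VHostHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print(fetch(port, "canary.example.com"))  # (200, 'nginx-v1')
    print(fetch(port, "test.example.com"))    # (200, 'nginx-v2')
    server.shutdown()
    server.server_close()
```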

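2. Routing by URL path

Traffic arriving at the same IP address can be routed to multiple Services according to the HTTP request URI, so that different URL paths under one host name reach different backends.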

```
[root@k8s-master1 web-canary]# vim path-ingress.yaml
[root@k8s-master1 web-canary]# cat path-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-test
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: default-http-backend
      port:
        number: 80
  rules:
  - host: test.path.example.com
    http:
      paths:
      - path: /foo
        pathType: Prefix
        backend:
          service:
            name: nginx-v1
            port:
              number: 80
  - host: test.path.example.com
    http:
      paths:
      - path: /bar
        pathType: Prefix
        backend:
          service:
            name: nginx-v2
            port:
              number: 80
[root@k8s-master1 web-canary]# kubectl apply -f path-ingress.yaml
ingress.networking.k8s.io/path-test created
[root@k8s-master1 web-canary]# kubectl get ing path-test -o wide
NAME        CLASS   HOSTS                                         ADDRESS                 PORTS   AGE
path-test   nginx   test.path.example.com,test.path.example.com   10.0.0.132,10.0.0.133   80      14s
[root@k8s-master1 web-canary]# kubectl describe ing path-test
Name:             path-test
Namespace:        default
Address:          10.0.0.132,10.0.0.133
Default backend:  default-http-backend:80 (10.244.169.155:80)
Rules:
  Host                   Path  Backends
  ----                   ----  --------
  test.path.example.com
                         /foo   nginx-v1:80 (10.244.169.156:80)
  test.path.example.com
                         /bar   nginx-v2:80 (10.244.36.69:80)
Annotations:             <none>
Events:
  Type    Reason  Age                From                      Message
  ----    ------  ----               ----                      -------
  Normal  Sync    18s (x2 over 30s)  nginx-ingress-controller  Scheduled for sync
  Normal  Sync    18s (x2 over 30s)  nginx-ingress-controller  Scheduled for sync
```
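With pathType: Prefix, Kubernetes defines matching element by element on /-separated path segments, so /foo matches /foo and /foo/x but not /foobar; when several paths match, the longest one wins, and Exact beats Prefix on a tie. The Python sketch below models only those rules (helper names are hypothetical; ingress-nginx turns the rules into nginx location blocks, which this does not reproduce):

```python
from typing import List, Optional, Tuple

# (path, pathType, backend) triples, e.g. taken from path-ingress.yaml
PathRule = Tuple[str, str, str]


def _segments(path: str) -> List[str]:
    return [seg for seg in path.split("/") if seg]


def path_matches(path: str, path_type: str, request_path: str) -> bool:
    if path_type == "Exact":
        return path == request_path
    if path_type == "Prefix":
        want = _segments(path)
        # Element-wise: "/foo" matches "/foo/bar" but not "/foobar".
        return _segments(request_path)[:len(want)] == want
    raise ValueError(f"pathType {path_type!r} is implementation-specific")


def pick_backend(rules: List[PathRule], request_path: str) -> Optional[str]:
    matches = [r for r in rules if path_matches(r[0], r[1], request_path)]
    if not matches:
        return None  # the controller would use the default backend
    # Longest path wins; on equal length, Exact beats Prefix.
    return max(matches, key=lambda r: (len(r[0]), r[1] == "Exact"))[2]


PATH_TEST = [("/foo", "Prefix", "nginx-v1:80"), ("/bar", "Prefix", "nginx-v2:80")]

if __name__ == "__main__":
    for p in ("/foo", "/foo/index.html", "/bar", "/foobar"):
        print(p, "->", pick_backend(PATH_TEST, p))
```

In this model a request for /foobar matches neither rule, so with path-ingress.yaml it would land on default-http-backend.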

Edit /etc/hosts, then access http://test.path.example.com/foo and http://test.path.example.com/bar:

```
[root@k8s-master1 web-canary]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.131 k8s-master1
10.0.0.132 k8s-node1
10.0.0.133 k8s-node2
10.0.0.134 tomcat.lucky.com
10.0.0.134 test.example.com
10.0.0.134 canary.example.com
10.0.0.134 test.path.example.com
[root@k8s-master1 web-canary]# curl -kv http://test.path.example.com/bar
* About to connect() to test.path.example.com port 80 (#0)
*   Trying 10.0.0.134...
* Connected to test.path.example.com (10.0.0.134) port 80 (#0)
> GET /bar HTTP/1.1
> User-Agent: curl/7.29.0
> Host: test.path.example.com
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Sat, 17 Sep 2022 15:02:44 GMT
< Content-Type: text/plain
< Transfer-Encoding: chunked
< Connection: keep-alive
<
nginx-v2
* Connection #0 to host test.path.example.com left intact
[root@k8s-master1 web-canary]# curl -kv http://test.path.example.com/foo
* About to connect() to test.path.example.com port 80 (#0)
*   Trying 10.0.0.134...
* Connected to test.path.example.com (10.0.0.134) port 80 (#0)
> GET /foo HTTP/1.1
> User-Agent: curl/7.29.0
> Host: test.path.example.com
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Sat, 17 Sep 2022 15:02:59 GMT
< Content-Type: text/plain
< Transfer-Encoding: chunked
< Connection: keep-alive
<
nginx-v1
* Connection #0 to host test.path.example.com left intact
```

Browser access works the same way (first add the same entries to the hosts file on your local computer).

V. Configuring a TLS Ingress Resource

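Generally, if HTTPS is required, it should be provided by an external load balancer, which terminates the SSL session and then forwards the requests to the Ingress controller. However, if the external load balancer works at the transport layer rather than as an application-layer reverse proxy, or if clients must reach the Ingress controller directly and still be served over HTTPS, you should configure a TLS Ingress resource.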

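When such a service is published on the Internet, its HTTPS certificate should be signed and issued by a publicly trusted CA; follow that CA's process to obtain it. For testing, a self-signed certificate is used here. openssl is the usual tool for this; use it to generate a private key and a self-signed certificate for testing.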

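Generate the private key. In a TLS Secret the private key is stored under the key name tls.key, so the file is given that name: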

```
[root@k8s-master1 ingress]# openssl genrsa -out tls.key 2048
Generating RSA private key, 2048 bit long modulus
...............................................+++
.......+++
e is 65537 (0x10001)
[root@k8s-master1 ingress]# ls -lrt tls.key
-rw-r--r-- 1 root root 1679 Sep 18 16:59 tls.key
```

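Generate the self-signed certificate. In a TLS Secret the certificate is stored under the key name tls.crt, so the file is given that name: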

```
[root@k8s-master1 ingress]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=Shaanxi/L=Weinan/O=DevOps/CN=tomcat.lucky.com -days 3650
[root@k8s-master1 ingress]# ls -lrt tls.crt
-rw-r--r-- 1 root root 1289 Sep 18 17:05 tls.crt
```

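Note: the key and certificate files are named after their Secret key names only for convenience; kubectl create secret tls stores them under tls.key and tls.crt regardless of the file names.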

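When configuring an HTTPS host on the Ingress controller, the private key and certificate files cannot be used directly; they must be passed through a Secret object. So the next step is to create, from the key and certificate, the Secret that the TLS Ingress will use. When the Ingress rule is created, the data in the referenced Secret is supplied to the Ingress controller pod, which uses it as the private key and certificate of the configured HTTPS virtual host. The following creates a TLS Secret named tomcat-ingress-secret: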

```
[root@k8s-master1 ingress]# kubectl create secret tls tomcat-ingress-secret --cert=tls.crt --key=tls.key
secret/tomcat-ingress-secret created
[root@k8s-master1 ingress]# kubectl get secret tomcat-ingress-secret
NAME                    TYPE                DATA   AGE
tomcat-ingress-secret   kubernetes.io/tls   2      25s
```

The detailed view confirms that it was created:

```
[root@k8s-master1 ingress]# kubectl describe secrets tomcat-ingress-secret
Name:         tomcat-ingress-secret
Namespace:    default
Labels:       <none>
Annotations:  <none>

Type:  kubernetes.io/tls

Data
====
tls.crt:  1289 bytes
tls.key:  1679 bytes
```
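kubectl create secret tls stores the certificate and the key base64-encoded under the fixed keys tls.crt and tls.key of a Secret of type kubernetes.io/tls, which is why the Data section above lists exactly those two entries. As a sketch (the function name is hypothetical and the PEM bytes are placeholders), an equivalent manifest can be built in Python and printed as JSON, a format kubectl apply -f also accepts:

```python
import base64
import json


def _b64(data: bytes) -> str:
    return base64.b64encode(data).decode("ascii")


def tls_secret_manifest(name: str, cert_pem: bytes, key_pem: bytes,
                        namespace: str = "default") -> dict:
    """Build a Secret shaped like the one `kubectl create secret tls` creates."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        # The key names are fixed by the kubernetes.io/tls Secret type.
        "data": {"tls.crt": _b64(cert_pem), "tls.key": _b64(key_pem)},
    }


if __name__ == "__main__":
    # Placeholder contents; in practice read the bytes of tls.crt and tls.key.
    manifest = tls_secret_manifest("tomcat-ingress-secret",
                                   b"<certificate PEM>", b"<private key PEM>")
    print(json.dumps(manifest, indent=2))
```

Unlike kubectl, this sketch does not check that the key and certificate belong together.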

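Next, write the manifest for the TLS Ingress. It defines the forwarding rules in .spec.rules and, through .spec.tls, declares tomcat.example.com as an HTTPS virtual host whose private key and certificate are meant to come from the Secret tomcat-ingress-secret. For that, .spec.tls[].secretName must name that Secret; note that the manifest as captured below uses tomcat-ingress-tls instead.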

```
[root@k8s-master1 ingress]# vim tomcat-ingress-tls.yaml
[root@k8s-master1 ingress]# cat tomcat-ingress-tls.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tomcat-ingress-tls
  namespace: default
spec:
  tls:
  - hosts:
    - tomcat.example.com
    secretName: tomcat-ingress-tls
  ingressClassName: nginx
  defaultBackend:
    service:
      name: default-http-backend
      port:
        number: 80
  rules:
  - host: tomcat.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat
            port:
              number: 8080
```
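A wrong secretName is easy to miss (the manifest above names tomcat-ingress-tls, while the Secret created earlier is tomcat-ingress-secret), so a small pre-flight check can help. The Python sketch below is hypothetical: it takes the Ingress as a dict plus a mapping from existing TLS Secret names to the DNS names their certificates cover (collecting that mapping is left out), and it compares names exactly, without wildcard support.

```python
from typing import Dict, List, Set


def check_tls(ingress: dict, secret_hosts: Dict[str, Set[str]]) -> List[str]:
    """List obvious spec.tls problems; secret_hosts maps Secret name -> DNS names in its cert."""
    spec = ingress["spec"]
    rule_hosts = {rule.get("host") for rule in spec.get("rules", [])}
    problems = []
    for entry in spec.get("tls", []):
        name = entry.get("secretName")
        covered = secret_hosts.get(name)
        if covered is None:
            problems.append(f"secret {name!r} not found")
        for host in entry.get("hosts", []):
            if host not in rule_hosts:
                problems.append(f"TLS host {host!r} has no matching rule")
            # Exact comparison only; wildcard certificates are not handled.
            if covered is not None and host not in covered:
                problems.append(f"certificate in {name!r} does not cover {host!r}")
    return problems


# The relevant parts of tomcat-ingress-tls.yaml, as a dict.
TOMCAT_TLS = {"spec": {
    "tls": [{"hosts": ["tomcat.example.com"], "secretName": "tomcat-ingress-tls"}],
    "rules": [{"host": "tomcat.example.com"}],
}}

if __name__ == "__main__":
    # Hypothetical inventory: the Secret created above and the CN of its certificate.
    existing = {"tomcat-ingress-secret": {"tomcat.lucky.com"}}
    for problem in check_tls(TOMCAT_TLS, existing):
        print(problem)
```

Run against the manifest above, it flags the missing Secret; after correcting secretName it would next flag that the certificate was issued for tomcat.lucky.com.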

Create the Ingress resource:

```
[root@k8s-master1 ingress]# kubectl apply -f tomcat-ingress-tls.yaml
ingress.networking.k8s.io/tomcat-ingress-tls created
[root@k8s-master1 ingress]# kubectl get ing
NAME                 CLASS   HOSTS                                         ADDRESS                 PORTS     AGE
ingress-tomcat       nginx   tomcat.lucky.com                              10.0.0.132,10.0.0.133   80        20h
nginx                nginx   canary.example.com,test.example.com           10.0.0.132,10.0.0.133   80        18h
path-test            nginx   test.path.example.com,test.path.example.com   10.0.0.132,10.0.0.133   80        18h
tomcat-ingress-tls   nginx   tomcat.example.com                                                    80, 443   6s
[root@k8s-master1 ingress]# kubectl get ing
NAME                 CLASS   HOSTS                                         ADDRESS                 PORTS     AGE
ingress-tomcat       nginx   tomcat.lucky.com                              10.0.0.132,10.0.0.133   80        20h
nginx                nginx   canary.example.com,test.example.com           10.0.0.132,10.0.0.133   80        19h
path-test            nginx   test.path.example.com,test.path.example.com   10.0.0.132,10.0.0.133   80        18h
tomcat-ingress-tls   nginx   tomcat.example.com                            10.0.0.132,10.0.0.133   80, 443   2m1s
```

Then check the details to confirm that it was created and routes to the tomcat Service:

```
[root@k8s-master1 ingress]# kubectl describe ing tomcat-ingress-tls
Name:             tomcat-ingress-tls
Namespace:        default
Address:          10.0.0.132,10.0.0.133
Default backend:  default-http-backend:80 (10.244.169.158:80)
TLS:
  tomcat-ingress-tls terminates tomcat.example.com
Rules:
  Host                Path  Backends
  ----                ----  --------
  tomcat.example.com
                      /   tomcat:8080 (10.244.36.70:8080,10.244.36.72:8080)
Annotations:          <none>
Events:
  Type    Reason  Age                   From                      Message
  ----    ------  ----                  ----                      -------
  Normal  Sync    3m1s (x2 over 3m31s)  nginx-ingress-controller  Scheduled for sync
  Normal  Sync    3m1s (x2 over 3m31s)  nginx-ingress-controller  Scheduled for sync
```

Test with curl; the client only needs to resolve the host name tomcat.example.com (here through /etc/hosts, to the VIP 10.0.0.134).

```
[root@k8s-master1 ingress]# vim /etc/hosts
[root@k8s-master1 ingress]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.131 k8s-master1
10.0.0.132 k8s-node1
10.0.0.133 k8s-node2
10.0.0.134 tomcat.lucky.com
10.0.0.134 test.example.com
10.0.0.134 canary.example.com
10.0.0.134 test.path.example.com
10.0.0.134 tomcat.example.com
[root@k8s-master1 ingress]# curl -kv https://tomcat.example.com
* About to connect() to tomcat.example.com port 443 (#0)
*   Trying 10.0.0.134...
* Connected to tomcat.example.com (10.0.0.134) port 443 (#0)
* Initializing NSS with certpath: sql:/etc/pki/nssdb
* skipping SSL peer certificate verification
* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
* Server certificate:
*       subject: CN=Kubernetes Ingress Controller Fake Certificate,O=Acme Co
*       start date: Sep 18 08:47:55 2022 GMT
*       expire date: Sep 18 08:47:55 2023 GMT
*       common name: Kubernetes Ingress Controller Fake Certificate
*       issuer: CN=Kubernetes Ingress Controller Fake Certificate,O=Acme Co
> GET / HTTP/1.1
> User-Agent: curl/7.29.0
> Host: tomcat.example.com
> Accept: */*
>
< HTTP/1.1 200
< Date: Sun, 18 Sep 2022 09:37:13 GMT
< Content-Type: text/html;charset=UTF-8
< Transfer-Encoding: chunked
< Connection: keep-alive
< Strict-Transport-Security: max-age=15724800; includeSubDomains
<


<!DOCTYPE html>
<html lang="en">
    <head>
        <meta charset="UTF-8" />
        <title>Apache Tomcat/8.5.41</title>
        <link href="favicon.ico" rel="icon" type="image/x-icon" />
        <link href="favicon.ico" rel="shortcut icon" type="image/x-icon" />
        <link href="tomcat.css" rel="stylesheet" type="text/css" />
    </head>
......
```

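This completes the hands-on configuration: Tomcat is now reachable over HTTPS through the Ingress. Note, however, that curl reports the controller's built-in "Kubernetes Ingress Controller Fake Certificate" rather than the self-signed certificate. The most likely reasons are that the Ingress references secretName tomcat-ingress-tls instead of the tomcat-ingress-secret created above, and that the self-signed certificate was issued for CN=tomcat.lucky.com rather than tomcat.example.com. With secretName set to tomcat-ingress-secret and a certificate that covers tomcat.example.com (preferably as a subjectAltName), the controller should serve the self-signed certificate instead.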
