Deploying Heapster monitoring on k8s

Building a cluster performance monitoring platform with Heapster + InfluxDB + Grafana

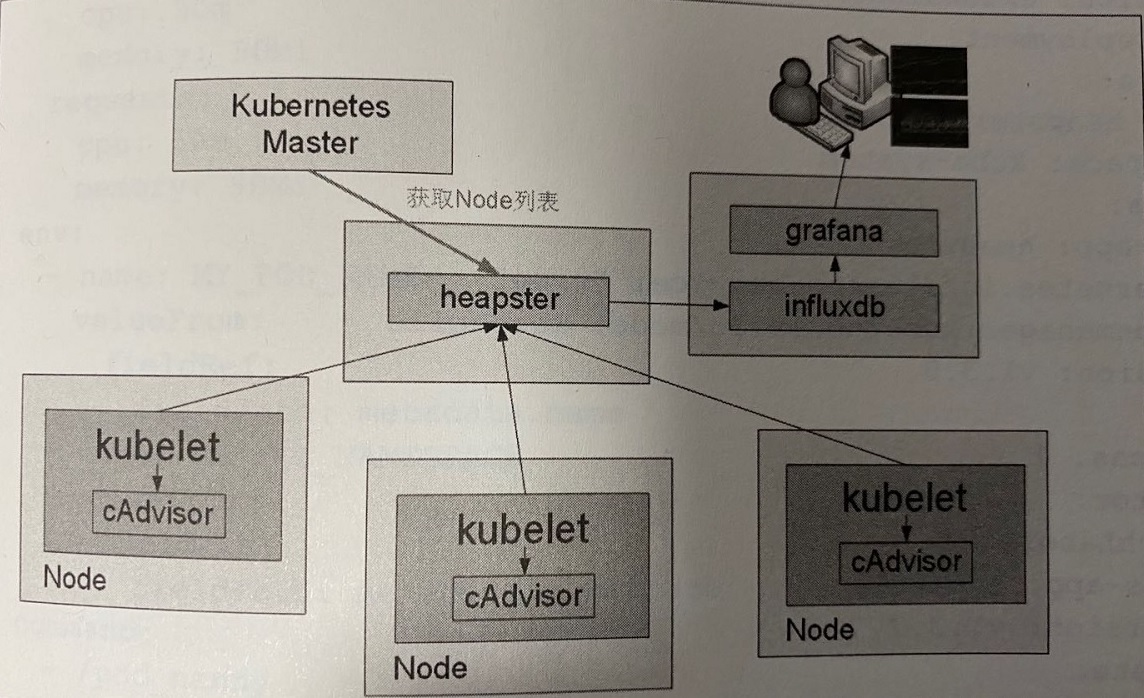

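In a large-scale container cluster, every node and every container needs performance monitoring. Kubernetes recommends a set of tools for collecting, storing, and displaying cluster performance data: Heapster, InfluxDB, and Grafana.

Heapster: a system that collects and aggregates the cAdvisor data from every node in the cluster. It calls the kubelet API on each node, and the kubelet in turn calls the cAdvisor API to gather performance data for all containers on that node. Heapster aggregates the performance data and saves the results to a backend storage system.

InfluxDB: a distributed time-series database (every record carries a timestamp), used mainly for real-time data collection, event tracking, and storing time-series charts and raw data. InfluxDB provides a REST API for storing and querying data.

Grafana: renders the time-series data in InfluxDB as charts, curves, and similar views on dashboards, so operators can easily check the running state of the cluster.

The overall architecture of the Heapster + InfluxDB + Grafana cluster monitoring system is shown in the figure.

Heapster, InfluxDB, and Grafana all start and run as Pods.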

1. Upload the required images to the private registry

    [root@kub_master ~]# docker load -i docker_heapster.tar.gz
    c12ecfd4861d: Loading layer [==================================================>] 130.9 MB/130.9 MB
    5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB
    998608e2fcd4: Loading layer [==================================================>] 45.16 MB/45.16 MB
    591569fa6c34: Loading layer [==================================================>] 126.5 MB/126.5 MB
    0b2fe2c6ef6b: Loading layer [==================================================>] 136.2 MB/136.2 MB
    f9f3fb66a490: Loading layer [==================================================>] 322.9 MB/322.9 MB
    6e2e798f8998: Loading layer [==================================================>] 2.56 kB/2.56 kB
    21ac53bc7cd6: Loading layer [==================================================>] 5.632 kB/5.632 kB
    7f96c89af577: Loading layer [==================================================>] 79.98 MB/79.98 MB
    4371d588893a: Loading layer [==================================================>] 150.2 MB/150.2 MB
    Loaded image: docker.io/kubernetes/heapster:canary

    [root@kub_master ~]# docker load -i docker_heapster_influxdb.tar.gz
    8ceab61e5aa8: Loading layer [==================================================>] 197.2 MB/197.2 MB
    3c84ae1bbde2: Loading layer [==================================================>] 208.9 kB/208.9 kB
    e8061ac24ae3: Loading layer [==================================================>] 4.608 kB/4.608 kB
    5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB
    58484cf9c5e7: Loading layer [==================================================>] 63.49 MB/63.49 MB
    07d2297acddc: Loading layer [==================================================>] 4.608 kB/4.608 kB
    Loaded image: docker.io/kubernetes/heapster_influxdb:v0.5
    [root@kub_master ~]# docker images |grep heapster_influxdb
    docker.io/kubernetes/heapster_influxdb   v0.5   a47993810aac   5 years ago   251 MB

    [root@kub_master ~]# docker load -i docker_heapster_grafana.tar.gz
    c69ae1aa4698: Loading layer [==================================================>] 131 MB/131 MB
    5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB
    75a5b97e491c: Loading layer [==================================================>] 127.1 MB/127.1 MB
    e188e2340071: Loading layer [==================================================>] 16.92 MB/16.92 MB
    5e43af080be6: Loading layer [==================================================>] 55.81 kB/55.81 kB
    7ecd917a174c: Loading layer [==================================================>] 4.096 kB/4.096 kB
    Loaded image: docker.io/kubernetes/heapster_grafana:v2.6.0
    [root@kub_master ~]# docker images |grep heapster_grafana
    docker.io/kubernetes/heapster_grafana   v2.6.0   4fe73eb13e50   4 years ago   267 MB

Push the three images above to the private registry:

    [root@kub_master ~]# docker tag docker.io/kubernetes/heapster:canary 192.168.0.212:5000/heapster:canary
    [root@kub_master ~]# docker push 192.168.0.212:5000/heapster:canary
    The push refers to a repository [192.168.0.212:5000/heapster]
    5f70bf18a086: Mounted from kubernetes-dashboard-amd64
    4371d588893a: Pushed
    7f96c89af577: Pushed
    21ac53bc7cd6: Pushed
    6e2e798f8998: Pushed
    f9f3fb66a490: Pushed
    0b2fe2c6ef6b: Pushed
    591569fa6c34: Pushed
    998608e2fcd4: Pushed
    c12ecfd4861d: Pushed
    canary: digest: sha256:a024ec78a53b4b9b0bd13b12a6aec29913359e17d30c8d0c35d0a510d10a9d75 size: 4276
    [root@kub_master ~]# docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 192.168.0.212:5000/heapster_grafana:v2.6.0
    [root@kub_master ~]# docker push 192.168.0.212:5000/heapster_grafana:v2.6.0
    The push refers to a repository [192.168.0.212:5000/heapster_grafana]
    5f70bf18a086: Mounted from heapster
    7ecd917a174c: Pushed
    5e43af080be6: Pushed
    e188e2340071: Pushed
    75a5b97e491c: Pushed
    c69ae1aa4698: Pushed
    v2.6.0: digest: sha256:1299d1ebb518416f90895a29fc58ad87149af610c8d5d9376c3379d65b6d9568 size: 2811
    [root@kub_master ~]# docker tag docker.io/kubernetes/heapster_influxdb:v0.5 192.168.0.212:5000/heapster_influxdb:v0.5
    [root@kub_master ~]# docker push 192.168.0.212:5000/heapster_influxdb:v0.5
    The push refers to a repository [192.168.0.212:5000/heapster_influxdb]
    5f70bf18a086: Mounted from heapster_grafana
    07d2297acddc: Pushed
    58484cf9c5e7: Pushed
    e8061ac24ae3: Pushed
    3c84ae1bbde2: Pushed
    8ceab61e5aa8: Pushed
    v0.5: digest: sha256:32cdba763a3fab92deeb8074b47ace45ee4a15d537e318ceae89f1e2dd069b53 size: 3012
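The tag-and-push sequence is identical for all three images, so it can be written as a loop. The following is only a sketch: the `private_ref` helper and the `DOCKER` dry-run switch are hypothetical names introduced for illustration, and the registry address is the one used in this walkthrough. By default the script only prints the docker commands; setting `DOCKER=docker` would actually run them.

```shell
#!/bin/sh
# Private registry address taken from the steps in this walkthrough.
REGISTRY="192.168.0.212:5000"
# Hypothetical switch: print the docker commands by default;
# run with DOCKER=docker to execute them for real.
DOCKER="${DOCKER:-echo docker}"

# Map an upstream reference such as docker.io/kubernetes/heapster:canary
# to its private-registry name by keeping only the last path component.
private_ref() {
    echo "${REGISTRY}/${1##*/}"
}

for image in \
    docker.io/kubernetes/heapster:canary \
    docker.io/kubernetes/heapster_influxdb:v0.5 \
    docker.io/kubernetes/heapster_grafana:v2.6.0
do
    target="$(private_ref "$image")"
    $DOCKER tag "$image" "$target"
    $DOCKER push "$target"
done
```

Keeping the name mapping in one function means the registry address is changed in a single place if the private registry moves.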

2. Download the Heapster manifest package

    [root@kub_master ~]# cd k8s/
    [root@kub_master k8s]# mkdir heapster
    [root@kub_master k8s]# cd heapster/
    [root@kub_master heapster]# wget https://www.qstack.com.cn/heapster-influxdb.zip
    --2020-09-28 20:29:42--  https://www.qstack.com.cn/heapster-influxdb.zip
    Resolving www.qstack.com.cn (www.qstack.com.cn)... 180.96.32.89
    Connecting to www.qstack.com.cn (www.qstack.com.cn)|180.96.32.89|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 2636 (2.6K) [application/zip]
    Saving to: ‘heapster-influxdb.zip’

    100%[===============================================================================================>] 2,636 --.-K/s in 0s

    2020-09-28 20:29:43 (40.6 MB/s) - ‘heapster-influxdb.zip’ saved [2636/2636]

    [root@kub_master heapster]# unzip heapster-influxdb.zip
    Archive:  heapster-influxdb.zip
       creating: heapster-influxdb/
      inflating: heapster-influxdb/grafana-service.yaml
      inflating: heapster-influxdb/heapster-controller.yaml
      inflating: heapster-influxdb/heapster-service.yaml
      inflating: heapster-influxdb/influxdb-grafana-controller.yaml
      inflating: heapster-influxdb/influxdb-service.yaml
    [root@kub_master heapster]# cd heapster-influxdb
    [root@kub_master heapster-influxdb]# ll
    total 20
    -rw-r--r-- 1 root root  414 Sep 14  2016 grafana-service.yaml
    -rw-r--r-- 1 root root  682 Jul  1  2019 heapster-controller.yaml
    -rw-r--r-- 1 root root  249 Sep 14  2016 heapster-service.yaml
    -rw-r--r-- 1 root root 1605 Jul  1  2019 influxdb-grafana-controller.yaml
    -rw-r--r-- 1 root root  259 Sep 14  2016 influxdb-service.yaml

3. Deploy the Heapster container

    [root@kub_master heapster-influxdb]# vim heapster-controller.yaml
    [root@kub_master heapster-influxdb]# cat heapster-controller.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      labels:
        k8s-app: heapster
        name: heapster
        version: v6
      name: heapster
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        k8s-app: heapster
        version: v6
      template:
        metadata:
          labels:
            k8s-app: heapster
            version: v6
        spec:
          nodeSelector:
            kubernetes.io/hostname: 192.168.0.212
          containers:
          - name: heapster
            image: 192.168.0.212:5000/heapster:canary
            imagePullPolicy: IfNotPresent
            command:
            - /heapster
            - --source=kubernetes:http://192.168.0.212:8080?inClusterConfig=false   # metrics source: the master URL
            - --sink=influxdb:http://monitoring-influxdb:8086   # backend storage: the InfluxDB database

    [root@kub_master heapster-influxdb]# vim heapster-service.yaml
    [root@kub_master heapster-influxdb]# cat heapster-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: Heapster
      name: heapster
      namespace: kube-system
    spec:
      ports:
      - port: 80
        targetPort: 8082
      selector:
        k8s-app: heapster

    [root@kub_master heapster-influxdb]# kubectl create -f heapster-controller.yaml
    replicationcontroller "heapster" created
    [root@kub_master heapster-influxdb]# kubectl create -f heapster-service.yaml
    service "heapster" created
    [root@kub_master heapster-influxdb]# kubectl get all --namespace=kube-system
    NAME                                 DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deploy/kube-dns                      1         1         1            1           2d
    deploy/kubernetes-dashboard-latest   1         1         1            1           1d

    NAME          DESIRED   CURRENT   READY   AGE
    rc/heapster   1         1         1       26s

    NAME                       CLUSTER-IP        EXTERNAL-IP   PORT(S)         AGE
    svc/heapster               192.168.211.92    <none>        80/TCP          11s
    svc/kube-dns               192.168.230.254   <none>        53/UDP,53/TCP   2d
    svc/kubernetes-dashboard   192.168.108.11    <none>        80/TCP          1d

    NAME                                        DESIRED   CURRENT   READY   AGE
    rs/kube-dns-4072910292                      1         1         1       2d
    rs/kubernetes-dashboard-latest-3255858758   1         1         1       1d

    NAME                                             READY   STATUS    RESTARTS   AGE
    po/heapster-jhr57                                1/1     Running   0          26s
    po/kube-dns-4072910292-4qb6c                     4/4     Running   0          2d
    po/kubernetes-dashboard-latest-3255858758-fqsbc  1/1     Running   0          1d
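Reading the STATUS column by eye gets tedious as more pods are added. As a rough sketch, a small filter can list only the pods that are not yet Running. The `not_running` function is a hypothetical helper, not part of kubectl; it assumes the plain-text table that `kubectl get pods` prints, with a header row containing a STATUS column.

```shell
#!/bin/sh
# Read `kubectl get pods` output on stdin and print the name and status of
# every pod whose STATUS column is not "Running". The column position is
# taken from the header row rather than hard-coded.
not_running() {
    awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "STATUS") col = i; next }
         col && NF >= col && $col != "Running" { print $1, $col }'
}

# Hypothetical usage against the live cluster:
#   kubectl get pods --namespace=kube-system | not_running
```

An empty result means every pod in the namespace reports Running.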

4. Deploy InfluxDB and Grafana

RC definition for InfluxDB and Grafana:

    [root@kub_master heapster-influxdb]# vim influxdb-grafana-controller.yaml
    [root@kub_master heapster-influxdb]# cat influxdb-grafana-controller.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      labels:
        name: influxGrafana
      name: influxdb-grafana
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        name: influxGrafana
      template:
        metadata:
          labels:
            name: influxGrafana
        spec:
          containers:
          - name: influxdb
            image: 192.168.0.212:5000/heapster_influxdb:v0.5
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /data
              name: influxdb-storage
          - name: grafana
            imagePullPolicy: IfNotPresent
            image: 192.168.0.212:5000/heapster_grafana:v2.6.0
            env:
              - name: INFLUXDB_SERVICE_URL
                value: http://monitoring-influxdb:8086
                # The following env variables are required to make Grafana accessible via
                # the kubernetes api-server proxy. On production clusters, we recommend
                # removing these env variables, setup auth for grafana, and expose the grafana
                # service using a LoadBalancer or a public IP.
              - name: GF_AUTH_BASIC_ENABLED
                value: "false"
              - name: GF_AUTH_ANONYMOUS_ENABLED
                value: "true"
              - name: GF_AUTH_ANONYMOUS_ORG_ROLE
                value: Admin
              - name: GF_SERVER_ROOT_URL
                value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
            volumeMounts:
            - mountPath: /var
              name: grafana-storage
          nodeSelector:
            kubernetes.io/hostname: 192.168.0.212
          volumes:
          - name: influxdb-storage
            emptyDir: {}
          - name: grafana-storage
            emptyDir: {}

InfluxDB Service definition:

    [root@kub_master heapster-influxdb]# vim influxdb-service.yaml
    [root@kub_master heapster-influxdb]# cat influxdb-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels: null
      name: monitoring-influxdb
      namespace: kube-system
    spec:
      ports:
      - name: http
        port: 8083
        targetPort: 8083
      - name: api
        port: 8086
        targetPort: 8086
      selector:
        name: influxGrafana

Grafana Service definition:

    [root@kub_master heapster-influxdb]# vim grafana-service.yaml
    [root@kub_master heapster-influxdb]# cat grafana-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: monitoring-grafana
      name: monitoring-grafana
      namespace: kube-system
    spec:
      # In a production setup, we recommend accessing Grafana through an external Loadbalancer
      # or through a public IP.
      # type: LoadBalancer
      ports:
      - port: 80
        targetPort: 3000
      selector:
        name: influxGrafana

    [root@kub_master heapster-influxdb]# kubectl create -f influxdb-grafana-controller.yaml
    replicationcontroller "influxdb-grafana" created
    [root@kub_master heapster-influxdb]# kubectl get all --namespace=kube-system
    NAME                                 DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deploy/kube-dns                      1         1         1            1           2d
    deploy/kubernetes-dashboard-latest   1         1         1            1           1d

    NAME                  DESIRED   CURRENT   READY   AGE
    rc/heapster           1         1         1       4m
    rc/influxdb-grafana   1         1         1       5s

    NAME                       CLUSTER-IP        EXTERNAL-IP   PORT(S)         AGE
    svc/heapster               192.168.211.92    <none>        80/TCP          4m
    svc/kube-dns               192.168.230.254   <none>        53/UDP,53/TCP   2d
    svc/kubernetes-dashboard   192.168.108.11    <none>        80/TCP          1d

    NAME                                        DESIRED   CURRENT   READY   AGE
    rs/kube-dns-4072910292                      1         1         1       2d
    rs/kubernetes-dashboard-latest-3255858758   1         1         1       1d

    NAME                                             READY   STATUS    RESTARTS   AGE
    po/heapster-jhr57                                1/1     Running   0          4m
    po/influxdb-grafana-thgp6                        2/2     Running   0          5s
    po/kube-dns-4072910292-4qb6c                     4/4     Running   0          2d
    po/kubernetes-dashboard-latest-3255858758-fqsbc  1/1     Running   0          1d
    [root@kub_master heapster-influxdb]# kubectl create -f influxdb-service.yaml
    service "monitoring-influxdb" created
    [root@kub_master heapster-influxdb]# kubectl create -f grafana-service.yaml
    service "monitoring-grafana" created
    [root@kub_master heapster-influxdb]# kubectl get all --namespace=kube-system
    NAME                                 DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deploy/kube-dns                      1         1         1            1           2d
    deploy/kubernetes-dashboard-latest   1         1         1            1           1d

    NAME                  DESIRED   CURRENT   READY   AGE
    rc/heapster           1         1         1       5m
    rc/influxdb-grafana   1         1         1       44s

    NAME                       CLUSTER-IP        EXTERNAL-IP   PORT(S)             AGE
    svc/heapster               192.168.211.92    <none>        80/TCP              5m
    svc/kube-dns               192.168.230.254   <none>        53/UDP,53/TCP       2d
    svc/kubernetes-dashboard   192.168.108.11    <none>        80/TCP              1d
    svc/monitoring-grafana     192.168.15.46     <none>        80/TCP              3s
    svc/monitoring-influxdb    192.168.188.130   <none>        8083/TCP,8086/TCP   10s

    NAME                                        DESIRED   CURRENT   READY   AGE
    rs/kube-dns-4072910292                      1         1         1       2d
    rs/kubernetes-dashboard-latest-3255858758   1         1         1       1d

    NAME                                             READY   STATUS    RESTARTS   AGE
    po/heapster-jhr57                                1/1     Running   0          5m
    po/influxdb-grafana-thgp6                        2/2     Running   0          44s
    po/kube-dns-4072910292-4qb6c                     4/4     Running   0          2d
    po/kubernetes-dashboard-latest-3255858758-fqsbc  1/1     Running   0          1d

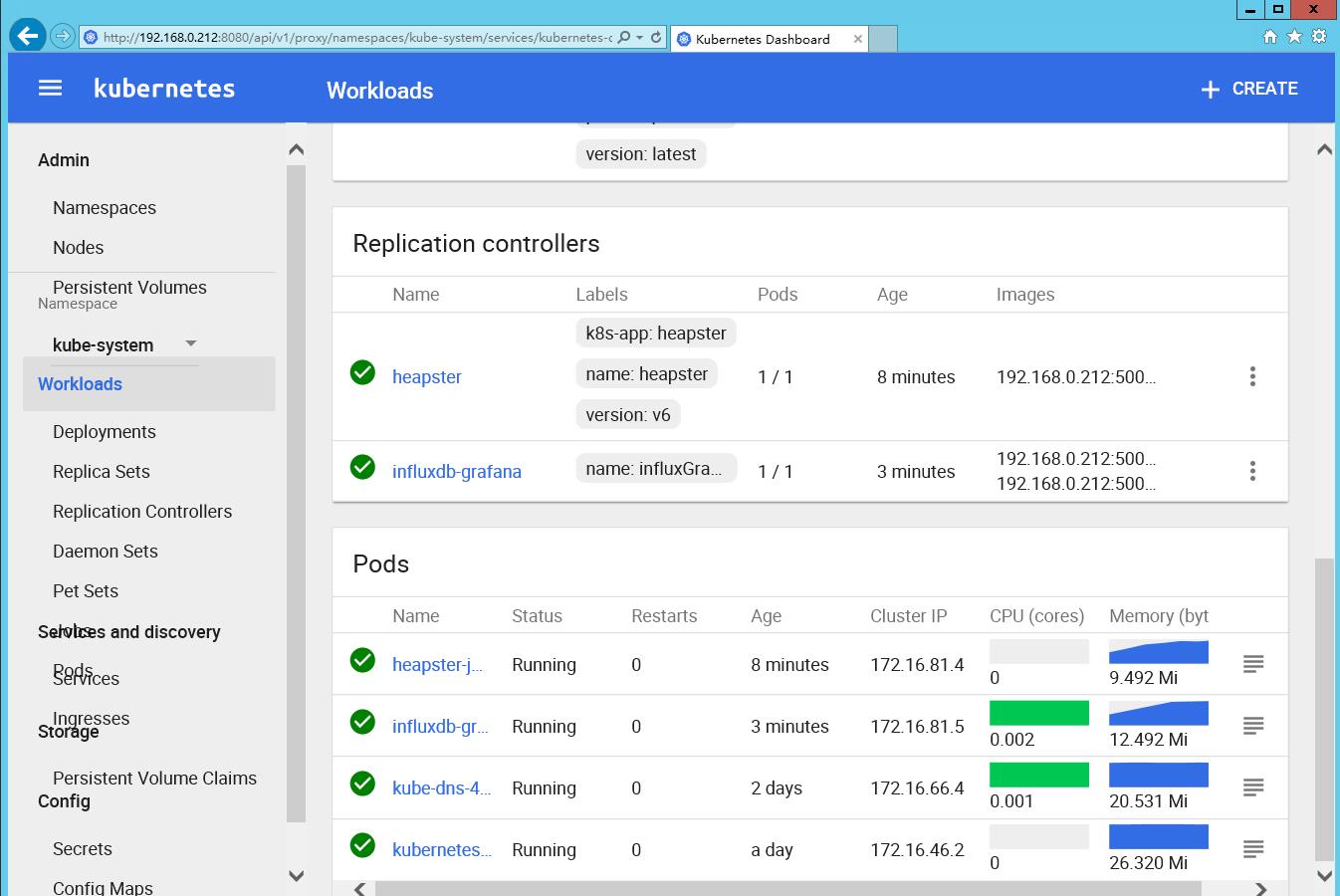

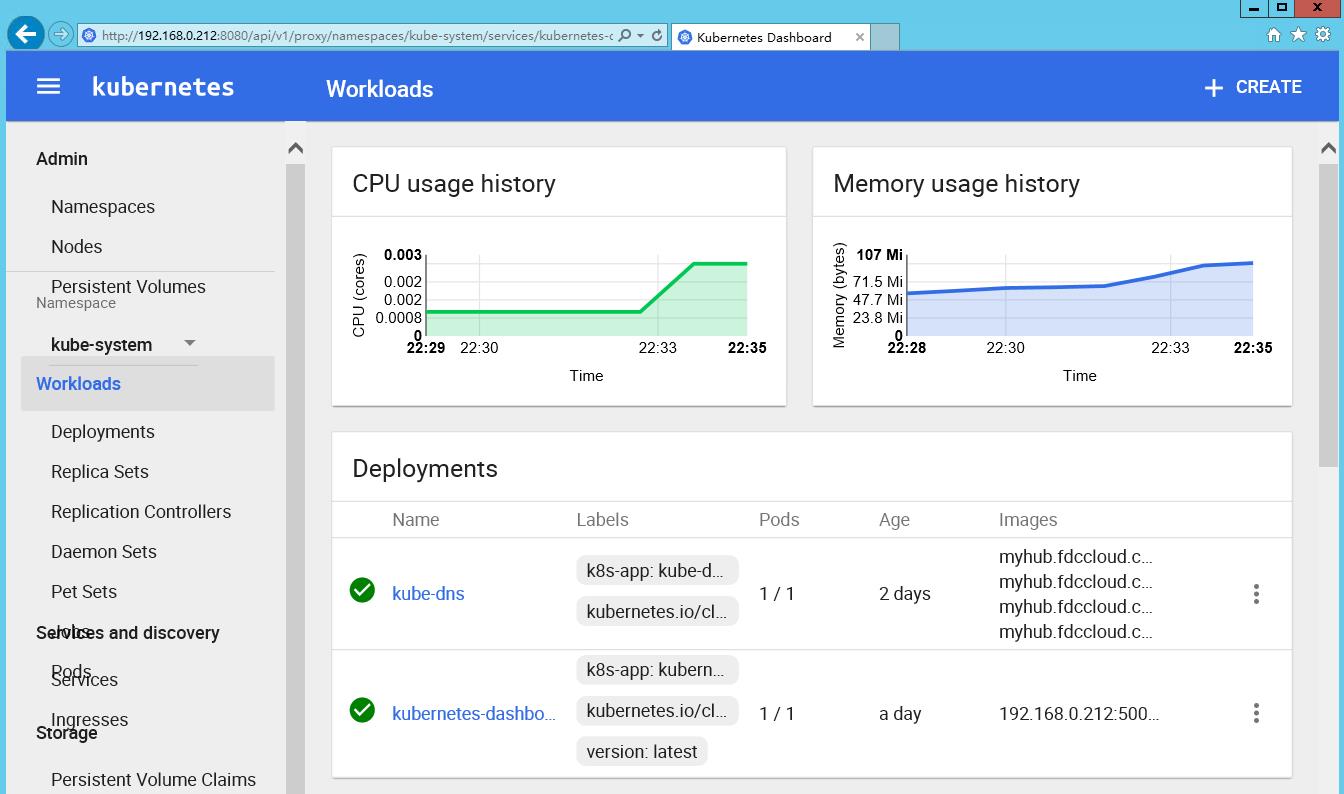
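The comment in the Grafana container definition says its environment variables make Grafana accessible through the Kubernetes API server proxy. As a sketch under that assumption, the address can be assembled from two values already present in the manifests: the API server URL from heapster-controller.yaml and the `GF_SERVER_ROOT_URL` path. The variable names below are only illustrative, and whether the proxy answers depends on how the API server in a given cluster is exposed.

```shell
#!/bin/sh
# API server address as used in heapster-controller.yaml.
APISERVER="http://192.168.0.212:8080"
# Proxy path as set in GF_SERVER_ROOT_URL in influxdb-grafana-controller.yaml.
GRAFANA_PATH="/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/"

GRAFANA_URL="${APISERVER}${GRAFANA_PATH}"
echo "Grafana proxy URL: ${GRAFANA_URL}"

# Optional reachability check, to be run on a host that can reach the API server:
#   curl -s -o /dev/null -w '%{http_code}\n' "${GRAFANA_URL}"
```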

5. Access the dashboard

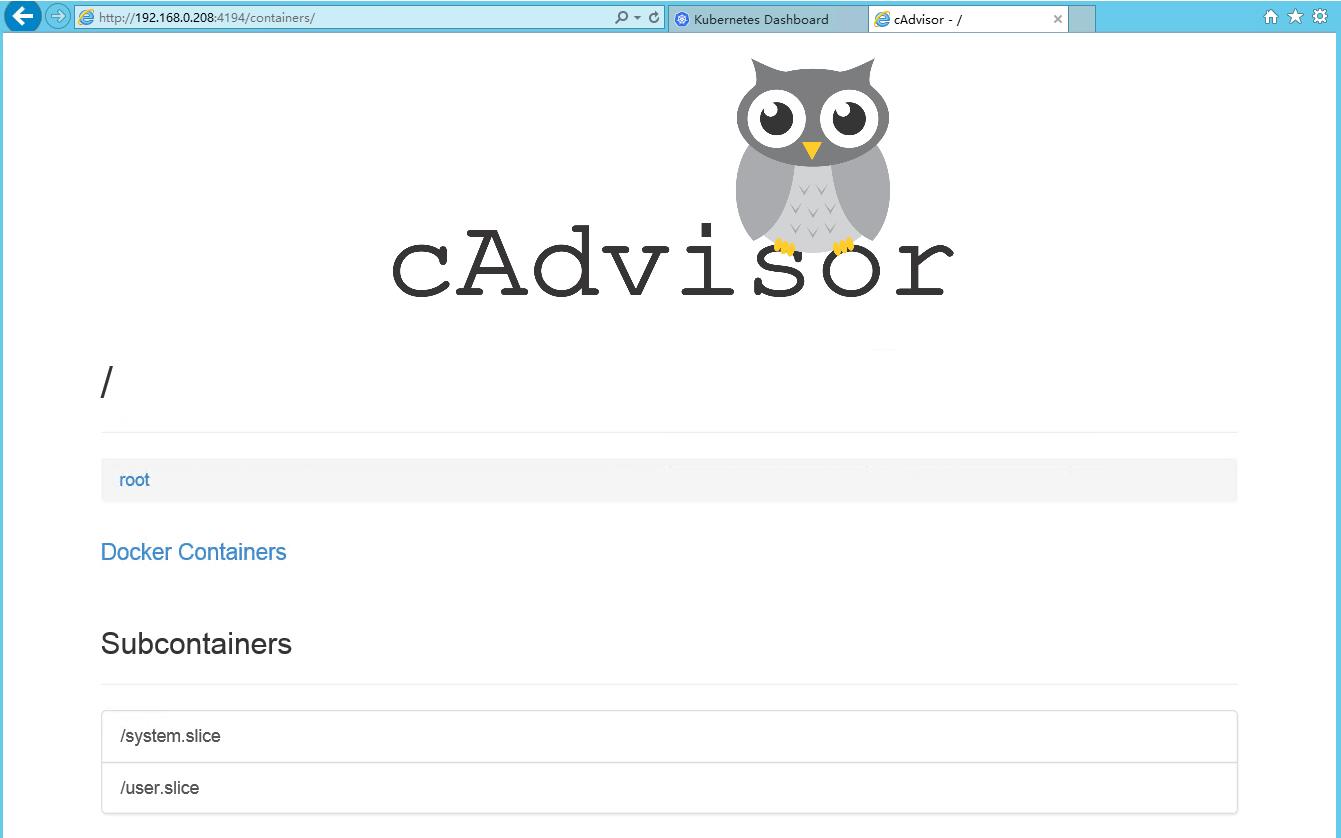

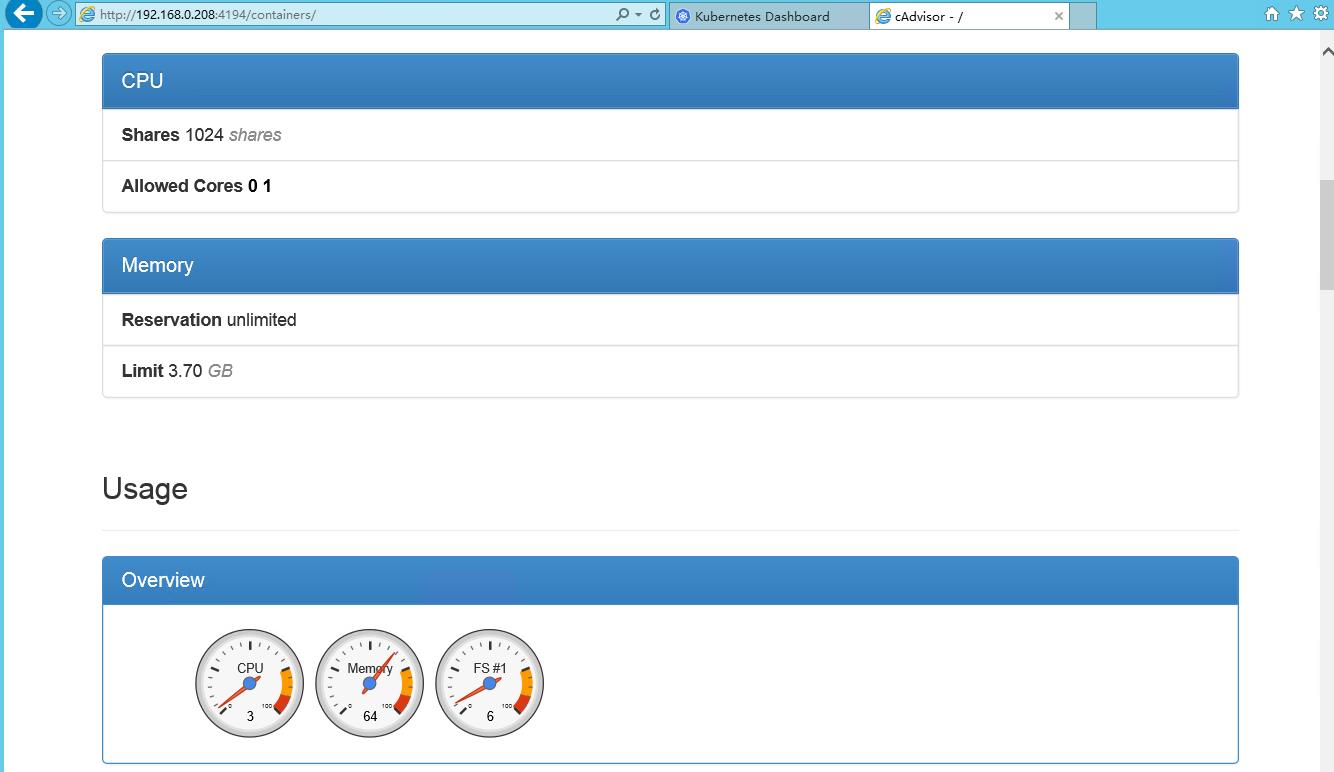

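6. View container status through the cAdvisor page

In Kubernetes, cAdvisor is integrated into the kubelet by default. When the kubelet service starts, it automatically starts cAdvisor, which then collects, in real time, performance metrics for the node it runs on and for the containers running on that node. The kubelet startup flag --cadvisor-port sets the port on which cAdvisor serves; the default is 4194.

cAdvisor provides a web page that can be opened in a browser. For example, to monitor the performance metrics of node2: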

    [root@kub_node2 ~]# vim /etc/kubernetes/kubelet
    [root@kub_node2 ~]# cat /etc/kubernetes/kubelet
    ###
    # kubernetes kubelet (minion) config

    # The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
    KUBELET_ADDRESS="--address=0.0.0.0"

    # The port for the info server to serve on
    KUBELET_PORT="--port=10250"

    # You may leave this blank to use the actual hostname
    KUBELET_HOSTNAME="--hostname-override=192.168.0.208"

    # location of the api-server
    KUBELET_API_SERVER="--api-servers=http://192.168.0.212:8080"

    # pod infrastructure container
    KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=192.168.0.212:5000/pod-infrastructure:latest"

    # Add your own!
    KUBELET_ARGS="--cluster_dns=192.168.230.254 --cluster_domain=cluster.local --cadvisor-port=4194"
    [root@kub_node2 ~]# systemctl restart kubelet

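Enter http://192.168.0.212:4194 in a browser to open the cAdvisor monitoring page.

The cAdvisor home page shows the real-time state of the host, including CPU usage, memory usage, network throughput, and filesystem usage.

Container performance data is very useful for cluster monitoring; system administrators can analyze the data cAdvisor provides and raise alerts based on it. However, cAdvisor runs on each Node separately and can only collect the performance metrics of its own machine.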
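Before opening the page, it can help to confirm which port a node's kubelet is configured with. The sketch below reads the `--cadvisor-port` flag out of a kubelet config file and falls back to 4194 when the flag is absent. The `cadvisor_port` function and the `NODE_IP` variable are hypothetical names for illustration, and the file path is the one used in this walkthrough.

```shell
#!/bin/sh
# Print the value of --cadvisor-port found in the given kubelet config file,
# falling back to 4194 (the default noted in this section) when it is not set.
cadvisor_port() {
    port="$(sed -n 's/.*--cadvisor-port=\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1)"
    echo "${port:-4194}"
}

# Hypothetical usage on a node, with NODE_IP set to that node's address:
#   echo "http://${NODE_IP}:$(cadvisor_port /etc/kubernetes/kubelet)"
```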
