Deploying the Kubernetes (k8s) Dashboard

1. Push the dashboard image to the private registry

    [root@kub_master dashboard]# docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz
    5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB
    2e350fa8cbdf: Loading layer [==================================================>] 86.96 MB/86.96 MB
    Loaded image: index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1
    [root@kub_master dashboard]# docker images
    REPOSITORY                                                        TAG      IMAGE ID       CREATED         SIZE
    docker.io/wordpress                                               latest   420b971d0f8b   2 weeks ago     546 MB
    192.168.0.212:5000/wordpress                                      latest   420b971d0f8b   2 weeks ago     546 MB
    docker.io/busybox                                                 latest   6858809bf669   2 weeks ago     1.23 MB
    docker.io/nginx                                                   latest   be1f31be9a87   24 months ago   109 MB
    192.168.0.212:5000/nginx                                          1.13     ae513a47849c   2 years ago     109 MB
    docker.io/nginx                                                   1.13     ae513a47849c   2 years ago     109 MB
    registry                                                          latest   a07e3f32a779   2 years ago     33.3 MB
    192.168.0.212:5000/pod-infrastructure                             latest   34d3450d733b   3 years ago     205 MB
    docker.io/tianyebj/pod-infrastructure                             latest   34d3450d733b   3 years ago     205 MB
    index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64  v1.4.1   1dda73f463b2   3 years ago     86.8 MB
    192.168.0.212:5000/mysql                                          5.7      b7dc06006192   4 years ago     386 MB
    docker.io/mysql                                                   5.7      b7dc06006192   4 years ago     386 MB
    192.168.0.212:5000/tomcat-app                                     v1       a29e200a18e9   4 years ago     358 MB
    [root@kub_master dashboard]# docker tag index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1 192.168.0.212:5000/kubernetes-dashboard-amd64:v1.4.1
    [root@kub_master dashboard]# docker push 192.168.0.212:5000/kubernetes-dashboard-amd64:v1.4.1
    The push refers to a repository [192.168.0.212:5000/kubernetes-dashboard-amd64]
    5f70bf18a086: Mounted from tomcat-app
    2e350fa8cbdf: Pushed
    v1.4.1: digest: sha256:e446d645ff6e6b3147205c58258c2fb431105dc46998e4d742957623bf028014 size: 1147
    [root@kub_master dashboard]# ll /opt/myregistry/docker/registry/v2/repositories/kubernetes-dashboard-amd64/_manifests/tags/
    total 4
    drwxr-xr-x 4 root root 4096 Sep 27 21:25 v1.4.1
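To check the retag and push without browsing the registry's storage directory, a small Python sketch could derive the target reference and ask the registry for its tags. It assumes the private registry at 192.168.0.212:5000 speaks the Docker Registry HTTP API v2 over plain HTTP (as an insecure lab registry would); the helper names `private_ref`, `tags_url`, and `list_tags` are hypothetical.

```python
import json
import urllib.request


def private_ref(source_ref: str, registry: str) -> str:
    """Rewrite an image reference so that it points at a private registry.

    Only the last path component (repository name plus tag) is kept, which
    mirrors the `docker tag` command used above.
    """
    name_and_tag = source_ref.rsplit("/", 1)[-1]
    return f"{registry}/{name_and_tag}"


def tags_url(registry: str, repo: str) -> str:
    """Docker Registry HTTP API v2 endpoint that lists a repository's tags."""
    return f"http://{registry}/v2/{repo}/tags/list"


def list_tags(registry: str, repo: str, timeout: float = 5.0) -> list:
    """GET the tag list over plain HTTP (assumes an insecure lab registry)."""
    with urllib.request.urlopen(tags_url(registry, repo), timeout=timeout) as resp:
        # The body has the form {"name": "<repo>", "tags": [...]}
        return json.load(resp).get("tags") or []


if __name__ == "__main__":
    src = "index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1"
    print(private_ref(src, "192.168.0.212:5000"))
```

Once the push has succeeded, `list_tags("192.168.0.212:5000", "kubernetes-dashboard-amd64")` should include `v1.4.1`.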

2. Create the dashboard Deployment and Service resources

1) Prepare the configuration files

    [root@kub_master k8s]# wget https://www.qstack.com.cn/dashboard.zip
    --2020-09-27 21:16:43--  https://www.qstack.com.cn/dashboard.zip
    Resolving www.qstack.com.cn (www.qstack.com.cn)... 223.111.153.171, 36.159.114.146, 111.62.79.149, ...
    Connecting to www.qstack.com.cn (www.qstack.com.cn)|223.111.153.171|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 1099 (1.1K) [application/zip]
    Saving to: ‘dashboard.zip’
    100%[===============================================================================================>] 1,099       --.-K/s   in 0s
    2020-09-27 21:16:44 (16.8 MB/s) - ‘dashboard.zip’ saved [1099/1099]
    [root@kub_master k8s]# unzip dashboard.zip
    Archive:  dashboard.zip
       creating: dashboard/
      inflating: dashboard/dashboard-deploy.yaml
      inflating: dashboard/dashboard-svc.yaml
    [root@kub_master k8s]# cd dashboard
    [root@kub_master dashboard]# ll
    total 8
    -rw-r--r-- 1 root root 1004 Apr  8  2019 dashboard-deploy.yaml
    -rw-r--r-- 1 root root  274 Feb  5  2018 dashboard-svc.yaml

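2) Edit the configuration files: point `image` at the image pushed to the private registry and `--apiserver-host` at the API server.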

    [root@kub_master dashboard]# vim dashboard-deploy.yaml
    [root@kub_master dashboard]# cat dashboard-deploy.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
    # Keep the name in sync with image version and
    # gce/coreos/kube-manifests/addons/dashboard counterparts
      name: kubernetes-dashboard-latest
      namespace: kube-system
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
            version: latest
            kubernetes.io/cluster-service: "true"
        spec:
          containers:
          - name: kubernetes-dashboard
            image: 192.168.0.212:5000/kubernetes-dashboard-amd64:v1.4.1   # image address in the private registry
            resources:
              # keep request = limit to keep this container in guaranteed class
              limits:
                cpu: 100m
                memory: 50Mi
              requests:
                cpu: 100m
                memory: 50Mi
            ports:
            - containerPort: 9090
            args:
            - --apiserver-host=http://192.168.0.212:8080   # apiserver address
            livenessProbe:
              httpGet:
                path: /
                port: 9090
              initialDelaySeconds: 30
              timeoutSeconds: 30
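The comment under `resources` says that keeping requests equal to limits puts the container in the guaranteed class. A simplified Python sketch of that Kubernetes QoS rule (hypothetical helper `qos_class`; it compares quantity strings literally, so `0.1` and `100m` would count as different, and init containers are ignored):

```python
def qos_class(containers: list) -> str:
    """Simplified Kubernetes QoS class for a Pod, given its containers' specs.

    Quantities are compared as strings, so "0.1" and "100m" count as different.
    """
    anything_set = False
    guaranteed = True
    for c in containers:
        res = c.get("resources", {})
        limits = res.get("limits", {})
        requests = res.get("requests", {})
        if limits or requests:
            anything_set = True
        for kind in ("cpu", "memory"):
            limit = limits.get(kind)
            request = requests.get(kind, limit)  # an unset request defaults to the limit
            if limit is None or request != limit:
                guaranteed = False
    if not anything_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


# Resources copied from dashboard-deploy.yaml
dashboard = {
    "name": "kubernetes-dashboard",
    "resources": {
        "limits": {"cpu": "100m", "memory": "50Mi"},
        "requests": {"cpu": "100m", "memory": "50Mi"},
    },
}
print(qos_class([dashboard]))  # Guaranteed
```

Dropping the `requests` block would keep the Pod Guaranteed (requests default to limits), while setting only requests would make it Burstable.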

    [root@kub_master dashboard]# vim dashboard-svc.yaml
    [root@kub_master dashboard]# cat dashboard-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
    spec:
      selector:
        k8s-app: kubernetes-dashboard
      ports:
      - port: 80
        targetPort: 9090
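The Service finds the dashboard Pod through its label selector and forwards its port 80 to `targetPort` 9090 on the container. A hypothetical Python check of both links, using values copied from the two files above (it only handles equality selectors and numeric target ports):

```python
def selector_matches(selector: dict, pod_labels: dict) -> bool:
    # Equality-based selector: every key/value pair must be present on the Pod
    return all(pod_labels.get(k) == v for k, v in selector.items())


def target_port_open(target_port: int, container_ports: list) -> bool:
    # targetPort should be one of the ports the container declares
    return target_port in container_ports


# Values copied from dashboard-svc.yaml and dashboard-deploy.yaml
svc_selector = {"k8s-app": "kubernetes-dashboard"}
pod_labels = {
    "k8s-app": "kubernetes-dashboard",
    "version": "latest",
    "kubernetes.io/cluster-service": "true",
}

print(selector_matches(svc_selector, pod_labels))  # True
print(target_port_open(9090, [9090]))              # True
```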

3) Create all resources in one go

    [root@kub_master dashboard]# kubectl create -f .
    deployment "kubernetes-dashboard-latest" created
    service "kubernetes-dashboard" created
    [root@kub_master dashboard]# kubectl get all --namespace=kube-system
    NAME                                 DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deploy/kube-dns                      1         1         1            1           1d
    deploy/kubernetes-dashboard-latest   1         1         1            1           15s

    NAME                       CLUSTER-IP        EXTERNAL-IP   PORT(S)         AGE
    svc/kube-dns               192.168.230.254   <none>        53/UDP,53/TCP   1d
    svc/kubernetes-dashboard   192.168.108.11    <none>        80/TCP          15s

    NAME                                        DESIRED   CURRENT   READY     AGE
    rs/kube-dns-4072910292                      1         1         1         1d
    rs/kubernetes-dashboard-latest-3255858758   1         1         1         15s

    NAME                                              READY     STATUS    RESTARTS   AGE
    po/kube-dns-4072910292-4qb6c                      4/4       Running   0          1d
    po/kubernetes-dashboard-latest-3255858758-fqsbc   1/1       Running   0          15s
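Instead of re-running `kubectl get` until the Pod shows `Running`, one could poll the Deployment's status through the API server. A minimal sketch, assuming the insecure port 8080 is reachable and the `extensions/v1beta1` group used by dashboard-deploy.yaml; `deployment_available` and `wait_for_deployment` are hypothetical helpers:

```python
import json
import time
import urllib.request


def deployment_available(dep: dict) -> bool:
    """True once availableReplicas has caught up with the desired replica count."""
    desired = dep.get("spec", {}).get("replicas", 1)
    available = dep.get("status", {}).get("availableReplicas", 0)
    return available >= desired


def wait_for_deployment(apiserver: str, namespace: str, name: str,
                        timeout: float = 120.0, interval: float = 3.0) -> bool:
    """Poll the Deployment through the apiserver until it is available or time runs out."""
    url = f"{apiserver}/apis/extensions/v1beta1/namespaces/{namespace}/deployments/{name}"
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        with urllib.request.urlopen(url, timeout=interval) as resp:
            if deployment_available(json.load(resp)):
                return True
        time.sleep(interval)
    return False
```

For this setup the call would be `wait_for_deployment("http://192.168.0.212:8080", "kube-system", "kubernetes-dashboard-latest")`.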

3. Test access

    [root@kub_master dashboard]# curl 192.168.0.212:8080
    {
      "paths": [
        "/api",
        "/api/v1",
        "/apis",
        "/apis/apps",
        "/apis/apps/v1beta1",
        "/apis/authentication.k8s.io",
        "/apis/authentication.k8s.io/v1beta1",
        "/apis/authorization.k8s.io",
        "/apis/authorization.k8s.io/v1beta1",
        "/apis/autoscaling",
        "/apis/autoscaling/v1",
        "/apis/batch",
        "/apis/batch/v1",
        "/apis/batch/v2alpha1",
        "/apis/certificates.k8s.io",
        "/apis/certificates.k8s.io/v1alpha1",
        "/apis/extensions",
        "/apis/extensions/v1beta1",
        "/apis/policy",
        "/apis/policy/v1beta1",
        "/apis/rbac.authorization.k8s.io",
        "/apis/rbac.authorization.k8s.io/v1alpha1",
        "/apis/storage.k8s.io",
        "/apis/storage.k8s.io/v1beta1",
        "/healthz",
        "/healthz/ping",
        "/healthz/poststarthook/bootstrap-controller",
        "/healthz/poststarthook/extensions/third-party-resources",
        "/healthz/poststarthook/rbac/bootstrap-roles",
        "/logs",
        "/metrics",
        "/swaggerapi/",
        "/ui/",
        "/version"
      ]
    }

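Note: the Kubernetes API Server is provided by a process named kube-apiserver, which runs on the master node. By default, kube-apiserver serves REST on port 8080 of the local host (set by the --insecure-port parameter). Requests on this insecure port skip authentication and authorization, which is why the plain curl calls here work without credentials. An HTTPS secure port (--secure-port=6443) can be enabled at the same time to turn on the security mechanisms and make access to the REST API safer.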
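The two ports in the note can be captured in a tiny Python helper that picks the base URL and performs a plain REST GET. This is a sketch under the assumption that only the insecure port is used; the secure port would additionally need certificates or a token, which `get_json` does not handle. The names `api_base` and `get_json` are hypothetical.

```python
import json
import urllib.request

INSECURE_PORT = 8080  # default of kube-apiserver --insecure-port (plain HTTP)
SECURE_PORT = 6443    # default of kube-apiserver --secure-port (HTTPS)


def api_base(host: str, secure: bool = False) -> str:
    """Base URL of the apiserver on either the insecure or the secure port."""
    if secure:
        return f"https://{host}:{SECURE_PORT}"
    return f"http://{host}:{INSECURE_PORT}"


def get_json(base: str, path: str, timeout: float = 5.0) -> dict:
    """Plain REST GET; only suitable for the insecure port, which asks for no credentials."""
    with urllib.request.urlopen(base + path, timeout=timeout) as resp:
        return json.load(resp)
```

For example, `get_json(api_base("192.168.0.212"), "/version")` returns the server's build information (including `gitVersion`), the same data `curl 192.168.0.212:8080/version` prints.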

The kubectl command-line tool talks to the Kubernetes API Server through REST calls.

Log in to the master node and use curl to get the Kubernetes API version information, returned as JSON:

    [root@kub_master dashboard]# curl 192.168.0.212:8080/api
    {
      "kind": "APIVersions",
      "versions": [
        "v1"
      ],
      "serverAddressByClientCIDRs": [
        {
          "clientCIDR": "0.0.0.0/0",
          "serverAddress": "192.168.0.212:6443"
        },
        {
          "clientCIDR": "192.168.0.0/16",
          "serverAddress": "192.168.0.1:443"
        }
      ]
    }
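The `serverAddressByClientCIDRs` field tells a client which address to use depending on where it connects from; when several CIDRs match, the longest (most specific) one wins. Here 192.168.0.1 is the ClusterIP of the `kubernetes` Service listed in step 4. A Python sketch of that selection rule, using the response above (the helper `server_for_client` and the sample client IPs are hypothetical):

```python
import ipaddress

# Response body of GET /api shown above
api_versions = {
    "kind": "APIVersions",
    "versions": ["v1"],
    "serverAddressByClientCIDRs": [
        {"clientCIDR": "0.0.0.0/0", "serverAddress": "192.168.0.212:6443"},
        {"clientCIDR": "192.168.0.0/16", "serverAddress": "192.168.0.1:443"},
    ],
}


def server_for_client(doc: dict, client_ip: str) -> str:
    """Return the serverAddress of the longest clientCIDR that contains client_ip."""
    ip = ipaddress.ip_address(client_ip)
    best_net, best_addr = None, None
    for entry in doc.get("serverAddressByClientCIDRs", []):
        net = ipaddress.ip_network(entry["clientCIDR"])
        if ip in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_addr = net, entry["serverAddress"]
    if best_addr is None:
        raise LookupError(f"no clientCIDR matches {client_ip}")
    return best_addr


print(server_for_client(api_versions, "192.168.0.184"))  # 192.168.0.1:443
print(server_for_client(api_versions, "10.0.0.5"))       # 192.168.0.212:6443
```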

Run the following command to list the kinds of resource objects the Kubernetes API Server currently supports:

    [root@kub_master dashboard]# curl 192.168.0.212:8080/api/v1
    {
      "kind": "APIResourceList",
      "groupVersion": "v1",
      "resources": [
        { "name": "bindings", "namespaced": true, "kind": "Binding" },
        { "name": "componentstatuses", "namespaced": false, "kind": "ComponentStatus" },
        { "name": "configmaps", "namespaced": true, "kind": "ConfigMap" },
        { "name": "endpoints", "namespaced": true, "kind": "Endpoints" },
        { "name": "events", "namespaced": true, "kind": "Event" },
        { "name": "limitranges", "namespaced": true, "kind": "LimitRange" },
        { "name": "namespaces", "namespaced": false, "kind": "Namespace" },
        { "name": "namespaces/finalize", "namespaced": false, "kind": "Namespace" },
        { "name": "namespaces/status", "namespaced": false, "kind": "Namespace" },
        { "name": "nodes", "namespaced": false, "kind": "Node" },
        { "name": "nodes/proxy", "namespaced": false, "kind": "Node" },
        { "name": "nodes/status", "namespaced": false, "kind": "Node" },
        { "name": "persistentvolumeclaims", "namespaced": true, "kind": "PersistentVolumeClaim" },
        { "name": "persistentvolumeclaims/status", "namespaced": true, "kind": "PersistentVolumeClaim" },
        { "name": "persistentvolumes", "namespaced": false, "kind": "PersistentVolume" },
        { "name": "persistentvolumes/status", "namespaced": false, "kind": "PersistentVolume" },
        { "name": "pods", "namespaced": true, "kind": "Pod" },
        { "name": "pods/attach", "namespaced": true, "kind": "Pod" },
        { "name": "pods/binding", "namespaced": true, "kind": "Binding" },
        { "name": "pods/eviction", "namespaced": true, "kind": "Eviction" },
        { "name": "pods/exec", "namespaced": true, "kind": "Pod" },
        { "name": "pods/log", "namespaced": true, "kind": "Pod" },
        { "name": "pods/portforward", "namespaced": true, "kind": "Pod" },
        { "name": "pods/proxy", "namespaced": true, "kind": "Pod" },
        { "name": "pods/status", "namespaced": true, "kind": "Pod" },
        { "name": "podtemplates", "namespaced": true, "kind": "PodTemplate" },
        { "name": "replicationcontrollers", "namespaced": true, "kind": "ReplicationController" },
        { "name": "replicationcontrollers/scale", "namespaced": true, "kind": "Scale" },
        { "name": "replicationcontrollers/status", "namespaced": true, "kind": "ReplicationController" },
        { "name": "resourcequotas", "namespaced": true, "kind": "ResourceQuota" },
        { "name": "resourcequotas/status", "namespaced": true, "kind": "ResourceQuota" },
        { "name": "secrets", "namespaced": true, "kind": "Secret" },
        { "name": "securitycontextconstraints", "namespaced": false, "kind": "SecurityContextConstraints" },
        { "name": "serviceaccounts", "namespaced": true, "kind": "ServiceAccount" },
        { "name": "services", "namespaced": true, "kind": "Service" },
        { "name": "services/proxy", "namespaced": true, "kind": "Service" },
        { "name": "services/status", "namespaced": true, "kind": "Service" }
      ]
    }
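Each entry says whether a resource lives inside a namespace, and names containing `/` are subresources (for example `pods/log`). A hypothetical Python helper that splits such a list into namespaced and cluster-scoped top-level resources, run on a few entries copied from the response above:

```python
def split_resources(resource_list: dict) -> tuple:
    """Split an APIResourceList into namespaced and cluster-scoped resource names.

    Subresources such as "pods/log" or "nodes/status" are skipped.
    """
    namespaced, cluster_scoped = [], []
    for res in resource_list.get("resources", []):
        if "/" in res["name"]:
            continue
        target = namespaced if res["namespaced"] else cluster_scoped
        target.append(res["name"])
    return sorted(namespaced), sorted(cluster_scoped)


# A few entries copied from the /api/v1 response above
sample = {
    "kind": "APIResourceList",
    "groupVersion": "v1",
    "resources": [
        {"name": "namespaces", "namespaced": False, "kind": "Namespace"},
        {"name": "nodes", "namespaced": False, "kind": "Node"},
        {"name": "nodes/status", "namespaced": False, "kind": "Node"},
        {"name": "pods", "namespaced": True, "kind": "Pod"},
        {"name": "pods/log", "namespaced": True, "kind": "Pod"},
        {"name": "services", "namespaced": True, "kind": "Service"},
    ],
}
print(split_resources(sample))  # (['pods', 'services'], ['namespaces', 'nodes'])
```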

Based on the output above, the following curl commands return the cluster's Pod list, Service list, and ReplicationController (RC) list, respectively:

    [root@kub_master dashboard]# curl 192.168.0.212:8080/api/v1/pods

    [root@kub_master dashboard]# curl 192.168.0.212:8080/api/v1/services

    [root@kub_master dashboard]# curl 192.168.0.212:8080/api/v1/replicationcontrollers
    {
      "kind": "ReplicationControllerList",
      "apiVersion": "v1",
      "metadata": { "selfLink": "/api/v1/replicationcontrollers", "resourceVersion": "838833" },
      "items": [
        {
          "metadata": {
            "name": "nginx",
            "namespace": "default",
            "selfLink": "/api/v1/namespaces/default/replicationcontrollers/nginx",
            "uid": "ee107bb2-00c8-11eb-8a8e-fa163e38ad0d",
            "resourceVersion": "777623",
            "generation": 1,
            "creationTimestamp": "2020-09-27T13:54:15Z",
            "labels": { "app": "nginx", "version": "1.15" }
          },
          "spec": {
            "replicas": 2,
            "selector": { "app": "nginx", "version": "1.15" },
            "template": {
              "metadata": {
                "name": "nginx",
                "creationTimestamp": null,
                "labels": { "app": "nginx", "version": "1.15" }
              },
              "spec": {
                "containers": [
                  {
                    "name": "nginx",
                    "image": "192.168.0.212:5000/nginx:1.15",
                    "resources": {},
                    "terminationMessagePath": "/dev/termination-log",
                    "imagePullPolicy": "IfNotPresent",
                    "securityContext": { "privileged": false }
                  }
                ],
                "restartPolicy": "Always",
                "terminationGracePeriodSeconds": 30,
                "dnsPolicy": "ClusterFirst",
                "securityContext": {}
              }
            }
          },
          "status": { "replicas": 2, "fullyLabeledReplicas": 2, "readyReplicas": 2, "availableReplicas": 2, "observedGeneration": 1 }
        },
        {
          "metadata": {
            "name": "myweb",
            "namespace": "develop",
            "selfLink": "/api/v1/namespaces/develop/replicationcontrollers/myweb",
            "uid": "c66af487-012d-11eb-8a8e-fa163e38ad0d",
            "resourceVersion": "833988",
            "generation": 1,
            "creationTimestamp": "2020-09-28T01:56:07Z",
            "labels": { "app": "myweb" }
          },
          "spec": {
            "replicas": 2,
            "selector": { "app": "myweb" },
            "template": {
              "metadata": {
                "creationTimestamp": null,
                "labels": { "app": "myweb" }
              },
              "spec": {
                "containers": [
                  {
                    "name": "myweb",
                    "image": "192.168.0.212:5000/nginx:1.13",
                    "ports": [ { "containerPort": 80, "protocol": "TCP" } ],
                    "resources": {},
                    "terminationMessagePath": "/dev/termination-log",
                    "imagePullPolicy": "IfNotPresent"
                  }
                ],
                "restartPolicy": "Always",
                "terminationGracePeriodSeconds": 30,
                "dnsPolicy": "ClusterFirst",
                "securityContext": {}
              }
            }
          },
          "status": { "replicas": 2, "fullyLabeledReplicas": 2, "readyReplicas": 2, "availableReplicas": 2, "observedGeneration": 1 }
        },
        {
          "metadata": {
            "name": "myweb2",
            "namespace": "develop",
            "selfLink": "/api/v1/namespaces/develop/replicationcontrollers/myweb2",
            "uid": "928efe84-0132-11eb-8a8e-fa163e38ad0d",
            "resourceVersion": "836742",
            "generation": 1,
            "creationTimestamp": "2020-09-28T02:30:28Z",
            "labels": { "app": "myweb2" }
          },
          "spec": {
            "replicas": 2,
            "selector": { "app": "myweb2" },
            "template": {
              "metadata": {
                "creationTimestamp": null,
                "labels": { "app": "myweb2" }
              },
              "spec": {
                "containers": [
                  {
                    "name": "myweb2",
                    "image": "192.168.0.212:5000/nginx:1.15",
                    "ports": [ { "containerPort": 80, "protocol": "TCP" } ],
                    "resources": {},
                    "terminationMessagePath": "/dev/termination-log",
                    "imagePullPolicy": "IfNotPresent"
                  }
                ],
                "restartPolicy": "Always",
                "terminationGracePeriodSeconds": 30,
                "dnsPolicy": "ClusterFirst",
                "securityContext": {}
              }
            }
          },
          "status": { "replicas": 2, "fullyLabeledReplicas": 2, "readyReplicas": 2, "availableReplicas": 2, "observedGeneration": 1 }
        }
      ]
    }
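The same list endpoints also exist per namespace (`/api/v1/namespaces/<namespace>/<resource>`), which is often more useful than the cluster-wide lists above. A Python sketch that builds either path and condenses a ReplicationControllerList into one line per RC; the helpers `list_path` and `rc_summary` are hypothetical, and the input is a trimmed copy of the response above.

```python
from typing import Optional


def list_path(resource: str, namespace: Optional[str] = None) -> str:
    """Core (v1) list path: cluster-wide, or restricted to one namespace."""
    if namespace is None:
        return f"/api/v1/{resource}"
    return f"/api/v1/namespaces/{namespace}/{resource}"


def rc_summary(rc_list: dict) -> list:
    """(namespace, name, ready, desired) for each item of a ReplicationControllerList."""
    rows = []
    for item in rc_list.get("items", []):
        meta = item["metadata"]
        rows.append((
            meta.get("namespace", "default"),
            meta["name"],
            item.get("status", {}).get("readyReplicas", 0),
            item["spec"].get("replicas", 1),
        ))
    return rows


# Trimmed version of the ReplicationControllerList returned above
rc_list = {
    "kind": "ReplicationControllerList",
    "items": [
        {"metadata": {"name": "nginx", "namespace": "default"},
         "spec": {"replicas": 2}, "status": {"replicas": 2, "readyReplicas": 2}},
        {"metadata": {"name": "myweb", "namespace": "develop"},
         "spec": {"replicas": 2}, "status": {"replicas": 2, "readyReplicas": 2}},
    ],
}
for ns, name, ready, desired in rc_summary(rc_list):
    print(f"{ns}/{name}: {ready}/{desired} ready")
```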

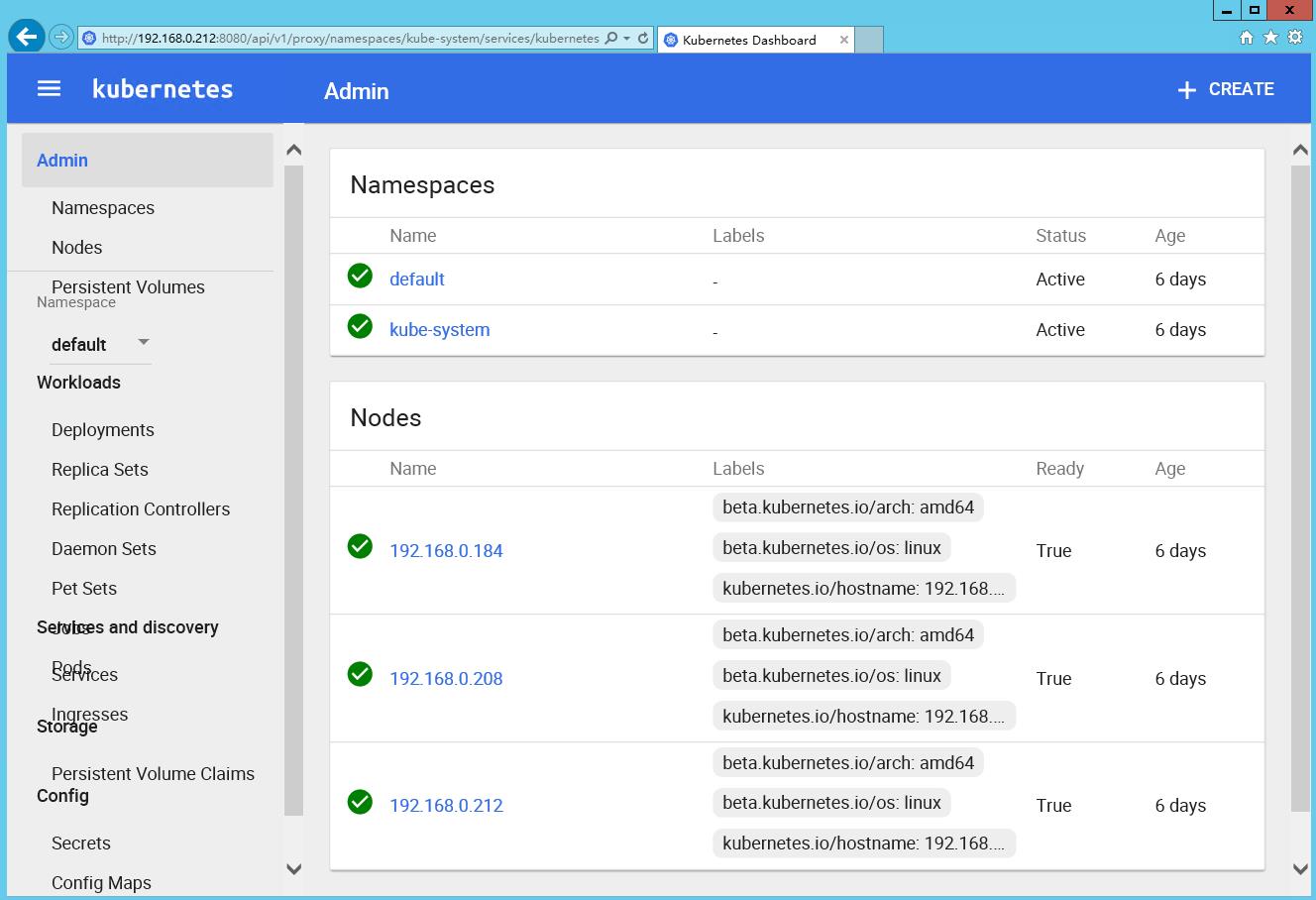

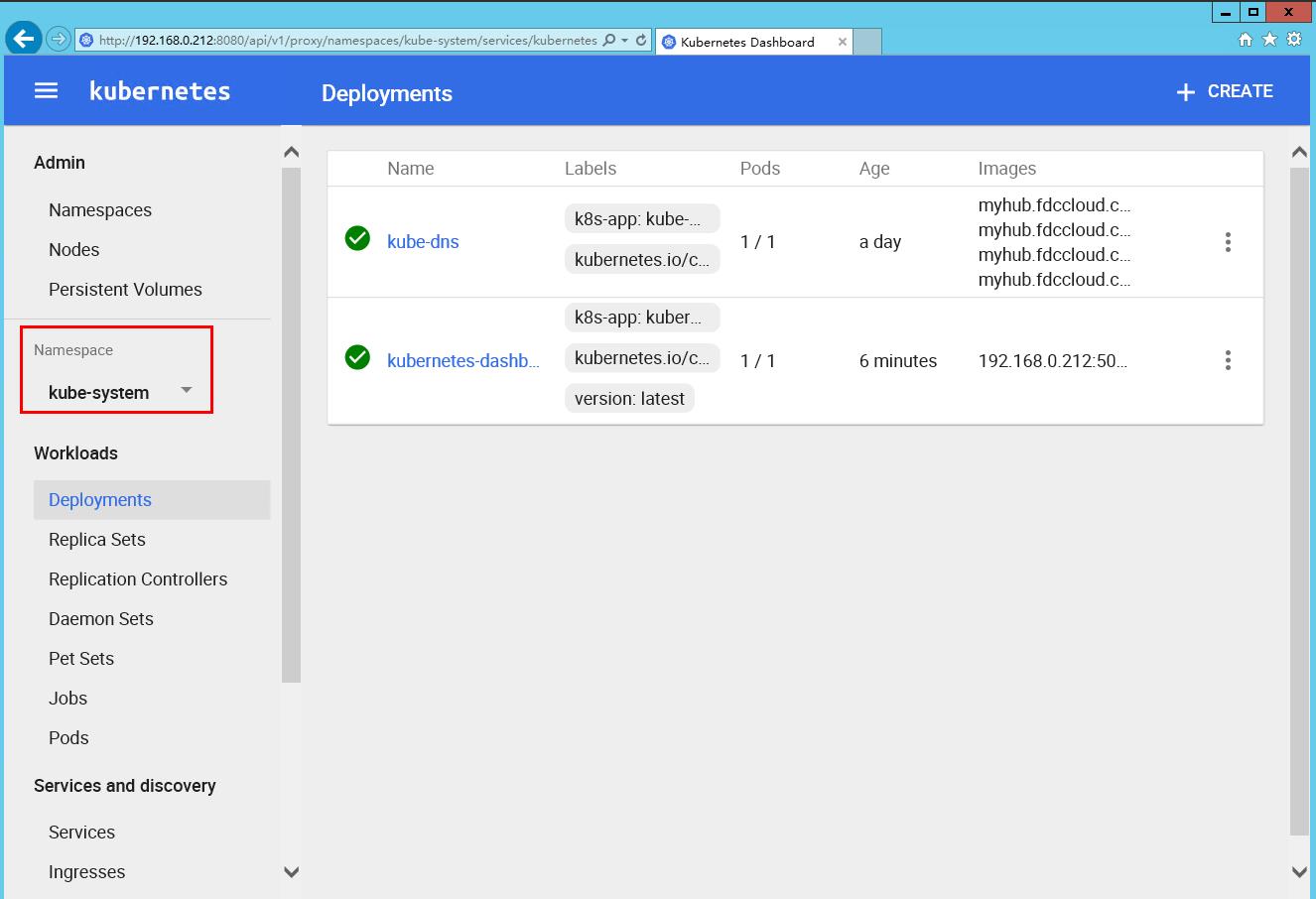

Access the dashboard from a browser:

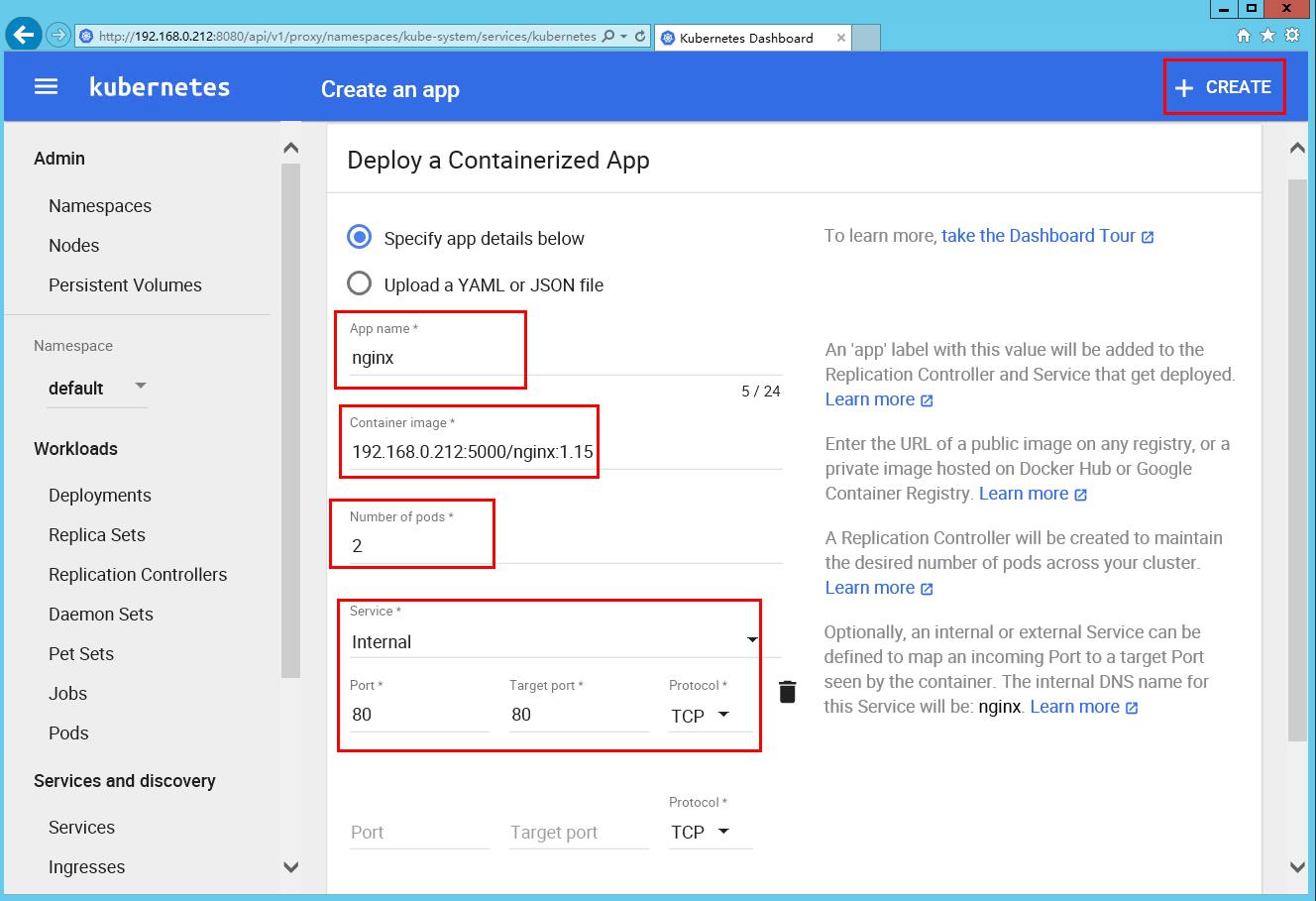
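Without a NodePort, the browser reaches the dashboard through the API server's proxy. The `/ui/` entry in the `paths` list above is a shortcut, and `services/proxy` appears in the `/api/v1` resource list. A Python sketch that lists candidate URLs and probes them; which of the two proxy path styles works depends on the apiserver version, so treat both as assumptions, and note that `dashboard_urls` and `first_reachable` are hypothetical helpers.

```python
import urllib.request


def dashboard_urls(apiserver: str, namespace: str = "kube-system",
                   service: str = "kubernetes-dashboard") -> list:
    """Candidate browser URLs for the dashboard behind the apiserver proxy."""
    return [
        f"{apiserver}/ui/",  # shortcut listed under "paths" in the GET / output
        f"{apiserver}/api/v1/proxy/namespaces/{namespace}/services/{service}/",  # legacy proxy path
        f"{apiserver}/api/v1/namespaces/{namespace}/services/{service}/proxy/",  # services/proxy subresource
    ]


def first_reachable(urls: list, timeout: float = 5.0):
    """Return the first URL answering HTTP 200 (redirects are followed), or None."""
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                if resp.status == 200:
                    return url
        except OSError:  # URLError, HTTPError and socket timeouts are OSError subclasses
            continue
    return None
```

Run from the master, `first_reachable(dashboard_urls("http://192.168.0.212:8080"))` would report which address to open in the browser.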

4. Create resources in the web UI

After creating an nginx RC and Service in the web UI, check them from the command line:

    [root@kub_master dashboard]# kubectl get all -o wide
    NAME       DESIRED   CURRENT   READY     AGE       CONTAINER(S)   IMAGE(S)                        SELECTOR
    rc/nginx   2         2         2         2m        nginx          192.168.0.212:5000/nginx:1.15   app=nginx,version=1.15

    NAME             CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE       SELECTOR
    svc/kubernetes   192.168.0.1      <none>        443/TCP   6d        <none>
    svc/nginx        192.168.253.54   <none>        80/TCP    2m        app=nginx,version=1.15

    NAME             READY     STATUS    RESTARTS   AGE       IP            NODE
    po/nginx-73zq1   1/1       Running   0          2m        172.16.81.3   192.168.0.212
    po/nginx-9wnkr   1/1       Running   0          2m        172.16.46.3   192.168.0.184
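The dashboard itself works by calling the API server given in `--apiserver-host`, so the same RC could also be created with a direct REST call. A hedged Python sketch: the name, labels, replica count, and image mirror the `rc/nginx` listing above, while `nginx_rc` and `create_rc` are hypothetical helpers and the insecure port 8080 is assumed. A matching Service would have to be created separately.

```python
import json
import urllib.request


def nginx_rc(name: str = "nginx", image: str = "192.168.0.212:5000/nginx:1.15",
             replicas: int = 2) -> dict:
    """Build a v1 ReplicationController manifest like the rc/nginx listed above."""
    labels = {"app": "nginx", "version": "1.15"}
    return {
        "apiVersion": "v1",
        "kind": "ReplicationController",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": dict(labels),  # must match the Pod template labels
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def create_rc(apiserver: str, namespace: str, manifest: dict, timeout: float = 5.0) -> dict:
    """POST the manifest to the core v1 API and return the object the server stored."""
    req = urllib.request.Request(
        f"{apiserver}/api/v1/namespaces/{namespace}/replicationcontrollers",
        data=json.dumps(manifest).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

Usage would be `create_rc("http://192.168.0.212:8080", "default", nginx_rc())`; the server rejects the request if an RC with that name already exists in the namespace.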
