利用sklearn计算决定系数R2

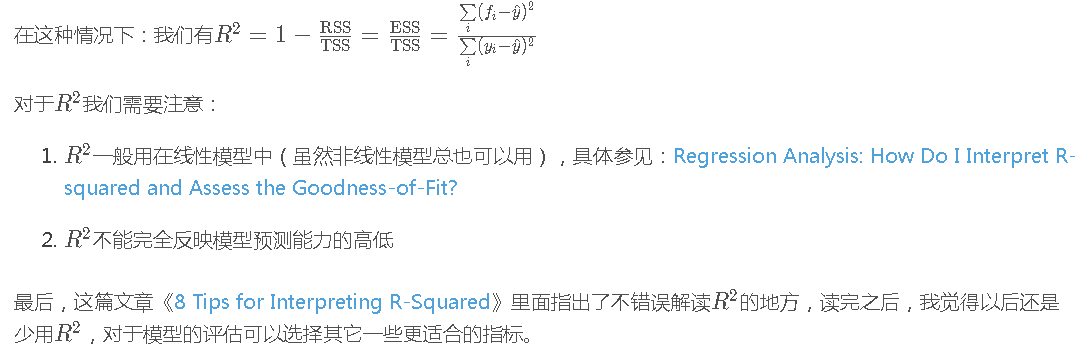

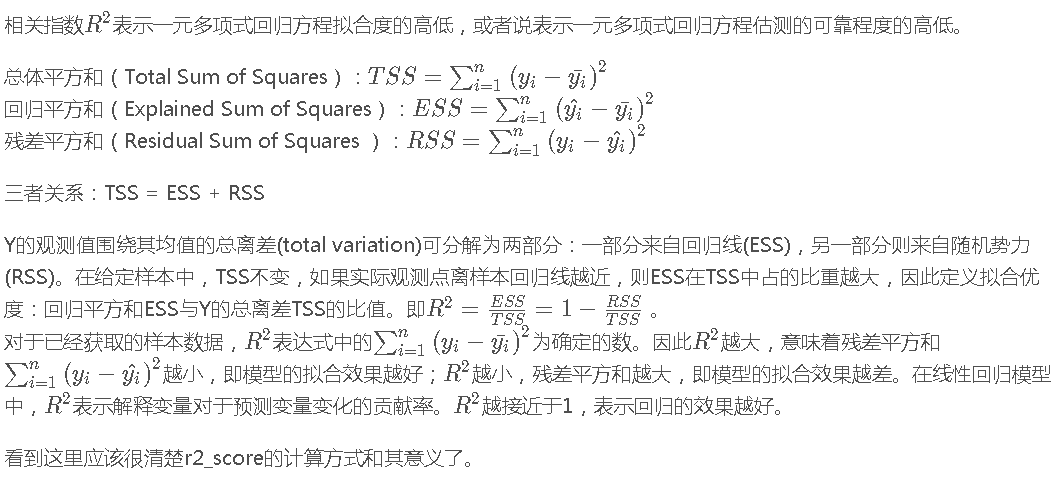

决定系数R2

sklearn.metrics中r2_score

格式

sklearn.metrics.r2_score(y_true, y_pred, sample_weight=None, multioutput=’uniform_average’)

R^2 (coefficient of determination) regression score function.

R2可以是负值(因为模型可以任意差)。如果一个常数模型总是预测y的期望值,而忽略输入特性,则r^2的分数将为0.0。

Best possible score is 1.0 and it can be negative (because the model can be arbitrarily worse). A constant model that always predicts the expected value of y, disregarding the input features, would get a R^2 score of 0.0.

| Parameters: |

|

|---|

| Returns: |

|

|---|

注意:

This is not a symmetric function. (R2是非对称函数!注意输入顺序。)

Unlike most other scores, R^2 score may be negative (it need not actually be the square of a quantity R).(R2可以是负值,它不需要是R的平方!)

from sklearn.metrics import r2_score y_true = y_true = [3, -0.5, 2, 7] y_pred = [2.5, 0.0, 2, 8] r2_score(y_true, y_pred) # 结果:0.9486081370449679 r2_score(y_true, y_pred, multioutput= 'uniform_average') # 结果:0.9486081370449679 y_true = [[0.5, 1], [-1, 1], [7, -6]] y_pred = [[0, 2], [-1, 2], [8, -5]] r2_score(y_true, y_pred, multioutput='variance_weighted') # 结果:0.9382566585956417 y_true = [1, 2, 3] y_pred = [1, 2, 3] r2_score(y_true, y_pred) # 结果: 1.0 y_true = [1, 2, 3] y_pred = [2, 2, 2] r2_score(y_true, y_pred) # 结果:0.0 y_true = [1, 2, 3] # bar{y} = (1+2+3)/ 3 = 2 y_pred = [3, 2, 1] # y - hat{y}(即y_true - y_pred) = [-2, 0, 2] r2_score(y_true, y_pred) # 结果:-3.0 y_true = [[0.5, 1], [-1, 1], [7, -6]] y_pred = [[0, 2], [-1, 2], [8, -5]] r2_score(y_true, y_pred, multioutput='raw_values') # 结果:array([0.96543779, 0.90816327])

posted on 2019-04-09 16:34 那抹阳光1994 阅读(20103) 评论(0) 编辑 收藏 举报