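Getting Started with Big Data, Day 25: Introduction to Logstash

I. Overview

1. What is Logstash

According to the official website:

Logstash is an open source, server-side data processing pipeline that ingests data from a multitude of sources simultaneously, transforms it, and then sends it to your favorite "stash." (Ours is Elasticsearch, naturally.)

// Logstash is an Elastic product, from the company behind Elasticsearch, and is written in JRuby. Its developer reportedly once said that if he had known about Scala, he would not have used JRuby.

In other words, it is the "next wave" after Flume, and it is meant to address problems of its predecessor Flume, such as data loss.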

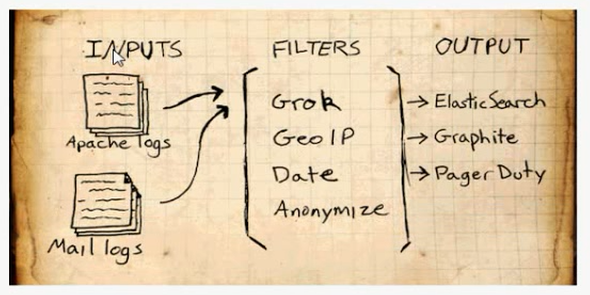

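2. Basic architecture

Input: collect data from various sources

Filter: parse and transform the data in real time

Output: send the data to the chosen store

Note: each unit of data that Logstash reads, for example one line of a log file, becomes an event that flows through these three stages.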
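The input, filter and output flow above can be modeled in a few lines. The following Python sketch is only a conceptual model and not how Logstash is implemented. Real pipelines hand events to filter and output workers in batches, as the `pipeline.workers` and `pipeline.batch.size` settings in the startup logs below suggest. The names `run_pipeline` and `add_length` are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Event = Dict[str, object]


def run_pipeline(
    lines: Iterable[str],
    filters: List[Callable[[Event], Event]],
    outputs: List[Callable[[Event], None]],
) -> None:
    """Send each raw line through input -> filter -> output, one event at a time."""
    for line in lines:
        # Input stage: every piece of data read becomes one event.
        event: Event = {"message": line}
        # Filter stage: filters run in the order they are declared.
        for apply_filter in filters:
            event = apply_filter(event)
        # Output stage: every output receives the finished event.
        for emit in outputs:
            emit(event)


def add_length(event: Event) -> Event:
    """Hypothetical filter that records the length of the message."""
    event["length"] = len(str(event["message"]))
    return event


collected: List[Event] = []
run_pipeline(["hello", "logstash"], [add_length], [collected.append])
print(collected)
```

Here the "output" is simply `list.append`, which makes it easy to inspect what reached the end of the pipeline.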

For more details, including which inputs and outputs are supported, see: https://www.elastic.co/guide/index.html

Recommended blog post on usage: https://blog.csdn.net/chenleiking/article/details/73563930

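II. Installation

Logstash 5.x and 6.x require JDK 1.8 or later. If it is not installed yet, install JDK 1.8+ first.

1. Download

https://www.elastic.co/downloads/past-releases

Choose a suitable version and download it.

2. Extract

```
[hadoop@mini1 ~]$ tar -zxvf logstash-5.6.9.tar.gz -C apps/
```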

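III. Getting Started

1. HelloWorld example

Run the startup command and pass the configuration directly on the command line with -e:

```
bin/logstash -e 'input { stdin { } } output { stdout {} }'
```

Commonly used startup options are as follows:

The result is shown below. After you type HelloWorld, Logstash prints it back as an event whose message field holds the input: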

```
[hadoop@mini1 logstash-5.6.9]$ bin/logstash -e 'input { stdin { } } output { stdout {} }'
Sending Logstash's logs to /home/hadoop/apps/logstash-5.6.9/logs which is now configured via log4j2.properties
[2018-04-18T16:38:18,309][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/hadoop/apps/logstash-5.6.9/modules/fb_apache/configuration"}
[2018-04-18T16:38:18,323][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/hadoop/apps/logstash-5.6.9/modules/netflow/configuration"}
[2018-04-18T16:38:18,325][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/home/hadoop/apps/logstash-5.6.9/data/queue"}
[2018-04-18T16:38:18,373][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/home/hadoop/apps/logstash-5.6.9/data/dead_letter_queue"}
[2018-04-18T16:38:18,401][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"893c481c-85d1-4746-8562-48a74dcbad08", :path=>"/home/hadoop/apps/logstash-5.6.9/data/uuid"}
[2018-04-18T16:38:18,757][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}
[2018-04-18T16:38:18,847][INFO ][logstash.pipeline ] Pipeline main started
The stdin plugin is now waiting for input:
[2018-04-18T16:38:18,929][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
HelloWorld
{
      "@version" => "1",
          "host" => "mini1",
    "@timestamp" => 2018-04-18T08:38:44.798Z,
       "message" => "HelloWorld"
}
```
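Each event printed above is just a set of fields. `message` holds the line that was read, Logstash adds `@timestamp` and `@version`, and in this version a `host` field also appears. The Python sketch below builds a dictionary with the same fields. The helper `make_event` is hypothetical. In Logstash, `@timestamp` is a timestamp object, but here it is simply a UTC string in the same millisecond-plus-`Z` format.

```python
import socket
from datetime import datetime, timezone
from typing import Dict


def make_event(line: str, host: str = "") -> Dict[str, str]:
    """Build a dict shaped like the stdin event printed above (simplified)."""
    now = datetime.now(timezone.utc)
    # Millisecond precision with a trailing "Z", e.g. 2018-04-18T08:38:44.798Z
    timestamp = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    return {
        "@version": "1",
        "host": host or socket.gethostname(),
        "@timestamp": timestamp,
        # The line as typed, without its trailing newline.
        "message": line.rstrip("\n"),
    }


event = make_event("HelloWorld\n", host="mini1")
print(event)
```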

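2. Using a configuration file

In practice the configuration passed after -e is usually more complex, so Logstash is normally started with -f and a configuration file:

```
bin/logstash -f logstash.conf
```

A configuration file looks roughly like this: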

```
# input
input {
  ...
}

# filter
filter {
  ...
}

# output
output {
  ...
}
```

Here is an example configuration file, logstash.conf:

```
input {
  # read log lines from a file
  file {
    path => "/home/hadoop/apps/logstash-5.6.9/logs/1.log"
    type => "system"
    start_position => "beginning"
  }
}

# filter {
#
# }

output {
  # standard output
  stdout { codec => rubydebug }
}
```

Running it produces the following output:

```
[hadoop@mini1 logstash-5.6.9]$ bin/logstash -f logstash.conf
Sending Logstash's logs to /home/hadoop/apps/logstash-5.6.9/logs which is now configured via log4j2.properties
[2018-04-18T16:49:28,522][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/home/hadoop/apps/logstash-5.6.9/modules/fb_apache/configuration"}
[2018-04-18T16:49:28,525][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/home/hadoop/apps/logstash-5.6.9/modules/netflow/configuration"}
[2018-04-18T16:49:28,988][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}
[2018-04-18T16:49:29,371][INFO ][logstash.pipeline ] Pipeline main started
{
      "@version" => "1",
          "host" => "mini1",
          "path" => "/home/hadoop/apps/logstash-5.6.9/logs/1.log",
    "@timestamp" => 2018-04-18T08:49:29.451Z,
       "message" => "Apr 16 17:01:01 mini1 systemd: Started Session 5 of user root.",
          "type" => "system"
}
```
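`start_position => "beginning"` only affects files that the file input has never seen before. The default is `"end"`. After reading, the plugin records how far it got in a *sincedb* file. As a result, running the same configuration again will not print the old lines a second time. For experiments, `sincedb_path => "/dev/null"` is a common way to avoid this.

The toy Python class below imitates that behaviour. `TailReader` is hypothetical. It keeps offsets in memory keyed by path, whereas the real plugin persists them to disk keyed by inode.

```python
import os
import tempfile
from typing import Dict, List


class TailReader:
    """Toy line reader that remembers a byte offset per file (sincedb-like)."""

    def __init__(self, start_position: str = "end") -> None:
        self.start_position = start_position
        self.offsets: Dict[str, int] = {}  # path -> bytes already consumed

    def poll(self, path: str) -> List[str]:
        if path not in self.offsets:
            # start_position is consulted only the first time a file is seen.
            if self.start_position == "beginning":
                self.offsets[path] = 0
            else:
                self.offsets[path] = os.path.getsize(path)
        with open(path, "rb") as f:
            f.seek(self.offsets[path])
            data = f.read()
        # Consume only complete lines; a trailing partial line waits for later.
        complete, newline, _partial = data.rpartition(b"\n")
        if not newline:
            return []
        self.offsets[path] += len(complete) + 1
        return [line.decode("utf-8") for line in complete.split(b"\n")]


with tempfile.TemporaryDirectory() as workdir:
    log = os.path.join(workdir, "1.log")
    with open(log, "wb") as f:
        f.write(b"old line 1\nold line 2\n")

    from_start = TailReader("beginning")
    from_end = TailReader("end")
    first_start = from_start.poll(log)  # both existing lines
    first_end = from_end.poll(log)      # nothing yet: begins at the current end

    with open(log, "ab") as f:
        f.write(b"new line\n")
    second_start = from_start.poll(log)  # only the appended line
    second_end = from_end.poll(log)      # the appended line as well

print(first_start, first_end, second_start, second_end)
```

After the first poll, both readers behave identically. The starting position matters only once per file.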

IV. Using plugins

Logstash has three main kinds of plugins: input, output and filter. There are others as well, such as codec plugins for encoding and decoding.
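Codecs sit at the edges of the pipeline. An input codec decodes raw data into events, and an output codec encodes events for the destination. The `rubydebug` codec used with `stdout` in the configuration file produces the aligned `"field" => value` layout seen in the outputs above.

The Python function below only imitates that layout for illustration. `rubydebug_like` is a hypothetical name, and the real codec's formatting rules may differ in detail.

```python
from typing import Dict


def rubydebug_like(event: Dict[str, object]) -> str:
    """Format an event in a layout similar to the rubydebug codec's output."""
    quoted_keys = ['"' + key + '"' for key in event]
    # Right-align keys so every "=>" lines up in one column.
    width = max((len(k) for k in quoted_keys), default=0)
    rows = []
    for quoted, value in zip(quoted_keys, event.values()):
        # Strings are shown in quotes; other values (numbers etc.) are shown bare.
        shown = '"' + value + '"' if isinstance(value, str) else str(value)
        rows.append("    " + quoted.rjust(width) + " => " + shown)
    return "{\n" + ",\n".join(rows) + "\n}"


text = rubydebug_like({"@version": "1", "host": "mini1", "message": "HelloWorld"})
print(text)
```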

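1. Input plugins

Input plugins define the data sources. Supported sources include files, stdin, Kafka, Twitter and more, and you can even write your own input plugin.

The path option of the file input supports wildcards, for example:

```
path => "/data/web/logstash/logFile/*/*.log"
```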
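In the pattern above, each `*` matches within a single directory level. So `logFile/*/*.log` picks up `.log` files exactly one directory below `logFile`. The file input also accepts `**` for recursive matching, and its paths must be absolute.

Python's `glob` module treats `*` the same way, so the sketch below uses it to show which files such a pattern selects. The directory layout is made up. Ruby's globbing, which Logstash uses, may differ from Python's in edge cases.

```python
import glob
import os
import tempfile

# Hypothetical files under a stand-in for /data/web/logstash/logFile
layout = ["app1/a.log", "app2/b.log", "app2/b.txt", "c.log", "app3/sub/d.log"]

with tempfile.TemporaryDirectory() as root:
    for rel in layout:
        path = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        open(path, "w").close()  # create an empty file
    # Same shape as the pattern above: one directory level, then *.log
    pattern = os.path.join(root, "*", "*.log")
    matched = sorted(
        os.path.relpath(p, root).replace(os.sep, "/") for p in glob.glob(pattern)
    )

print(matched)
```

Several files are not matched. `c.log` sits directly in the root, `d.log` is two levels deep, and `b.txt` has the wrong extension.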

常用输入插件

1.file

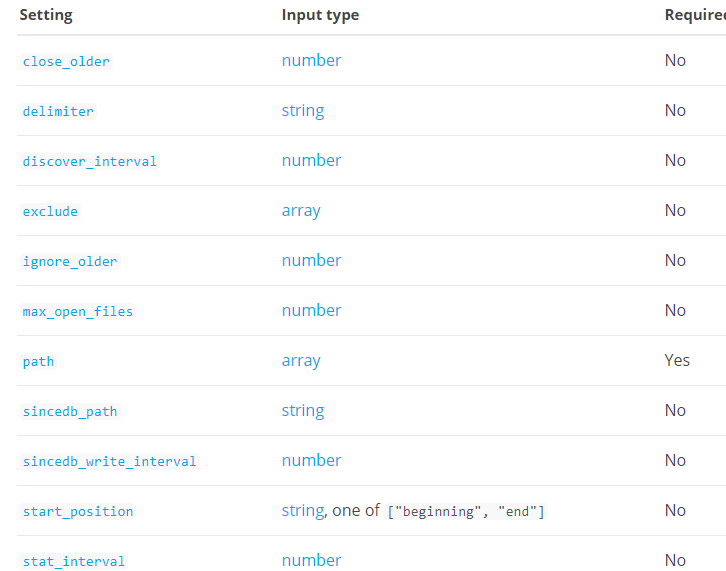

file插件的必选参数只有path一项:部分选项如下:

// 原版的完整参数解释参见官网,中文参见上文博文参考处链接

配置示例:

```
input {
  file {
    path => ["/var/log/*.log", "/var/log/messages"]
    type => "system"
    start_position => "beginning"
  }
}
```
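The `type` option stamps every event from this input with `type => "system"`. Later sections of the configuration can branch on that field with a conditional such as `if [type] == "system" { ... }`. This is handy when one pipeline reads several kinds of logs.

Below is a hypothetical Python sketch of that routing idea. The function `route_by_type` and the second, web-style source are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def route_by_type(events: List[Dict[str, str]]) -> Dict[str, List[str]]:
    """Group messages by the event's "type" field, like if [type] == "..." branches."""
    routes: Dict[str, List[str]] = defaultdict(list)
    for event in events:
        # Events without a type go to a catch-all bucket.
        routes[event.get("type", "untyped")].append(event["message"])
    return dict(routes)


events = [
    {"type": "system", "message": "Apr 16 17:01:01 mini1 systemd: Started Session 5 of user root."},
    {"type": "web", "message": "GET /index.html 200"},  # invented second source
    {"message": "line from an input without a type"},
]
routes = route_by_type(events)
print(routes)
```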

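2. Filter plugins and output plugins

These are configured in a similar way to input plugins. See the official documentation and the recommended blog post for details.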
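A widely used filter is `grok`. It parses unstructured text into named fields using regular-expression-based patterns, and tags the event with `_grokparsefailure` when nothing matches.

The Python sketch below imitates that behaviour with a plain regular expression, applied to the syslog line read from 1.log earlier. The regex and the `syslog_*` field names are illustrative choices, not grok's built-in patterns. `grok_like` is a hypothetical helper.

```python
import re
from typing import Any, Dict

# Illustrative pattern for lines like "Apr 16 17:01:01 mini1 systemd: Started ..."
SYSLOG_LINE = re.compile(
    r"^(?P<syslog_timestamp>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<syslog_hostname>\S+) "
    r"(?P<syslog_program>[^:\[\s]+)(?:\[(?P<syslog_pid>\d+)\])?: "
    r"(?P<syslog_message>.*)$"
)


def grok_like(event: Dict[str, Any]) -> Dict[str, Any]:
    """Add named fields parsed from event["message"], or tag a parse failure."""
    match = SYSLOG_LINE.match(event["message"])
    if match is None:
        # Same tag grok adds by default; the event is otherwise left unchanged.
        event.setdefault("tags", []).append("_grokparsefailure")
        return event
    # Copy only groups that matched (syslog_pid is optional).
    event.update({k: v for k, v in match.groupdict().items() if v is not None})
    return event


parsed = grok_like({"message": "Apr 16 17:01:01 mini1 systemd: Started Session 5 of user root."})
failed = grok_like({"message": "not a syslog line"})
print(parsed)
print(failed)
```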
