04-MySQL基于MGR的高可用架构的搭建

一、MGR架构的介绍

1、简介

MGR(MySQL Group Replication)是MySQL5.7.17版本引进来的一个数据库高可用架构,解决了传统异步复制和半同步复制的缺陷(主从数据一致性的问题),MGR依靠分布式一致性协议PAXOS,实现了主从数据库的一致性。

PAXOS协议:是一种基于消息传递的一致性算法。MGR中由若干个节点共同组成一个组复制,一个事物的提交,必须经过组内大多数节点(N/2 + 1)投票并通过,才能提交。

2、MGR特点

* 高一致性:基于分布式PAXOS协议实现组复制,保证数据的一致性;

* 高容错性:故障自动检测机制,只要有半数节点存活MGR就可以继续工作;

* 高扩展性:节点的增加与移除会自动更新组成员信息,新节点加入后,自动从其他节点同步增量数据,直到与其他节点数据一致;

* 高灵活性:提供单主模式和多主模式。单主模式在主库宕机后能够自动选主(根据MEMBER_ID的排序情况,最小的升级为主库),所有写操作都在主库上进行,多主模式支持多节点写入;

3、MGR故障检测机制

MGR中有一个故障检测机制,会提供某节点可能死掉的信息,某个server无响应时触发猜测,组内其余成员进行协调以排除该节点,与它隔离开来,该节点无法同步其他节点传送过来的binlog,也无法执行任何本地事物。

4、搭建MGR的前提条件

* 存储引擎必须是Innodb(支持事物处理),并且每张表一定要有主键,用于解决write冲突;

* 必须打开GTID特性,binlog日志格式必须设置为ROW;

* 目前一个MGR集群组最多支持9个节点;

* 多主模式不支持SERIALIZABLE事物隔离级别;

二、MGR的搭建

1、IP配置

| 机器名称 | IP | 服务器角色 | 备注 |

| node1 | 192.168.232.42 | MGR节点1 | 操作系统redhat7.5;MySQL5.7.22 |

| node2 | 192.168.232.43 | MGR节点2 | 操作系统redhat7.5;MySQL5.7.22 |

| node3 | 192.168.232.44 | MGR节点3 | 操作系统redhat7.5;MySQL5.7.22 |

2、配置IP映射

在各节点的/etc/hosts文件中配置如下内容:

192.168.232.42 node1 192.168.232.43 node2 192.168.232.44 node3

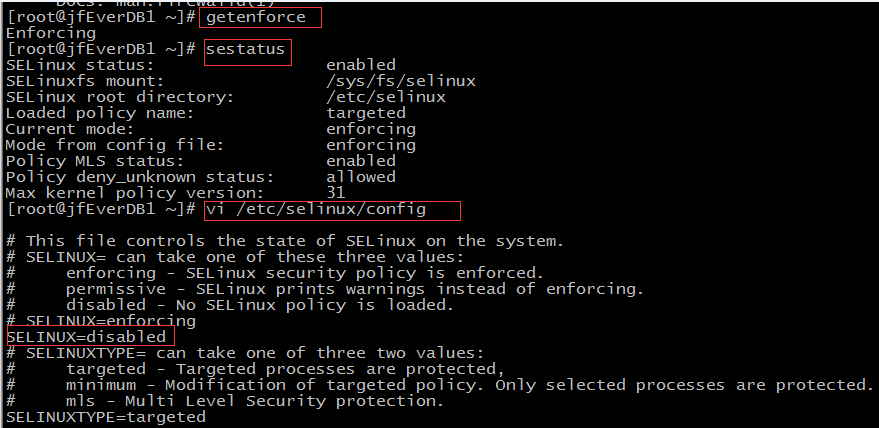

3、所有节点禁用SELinux

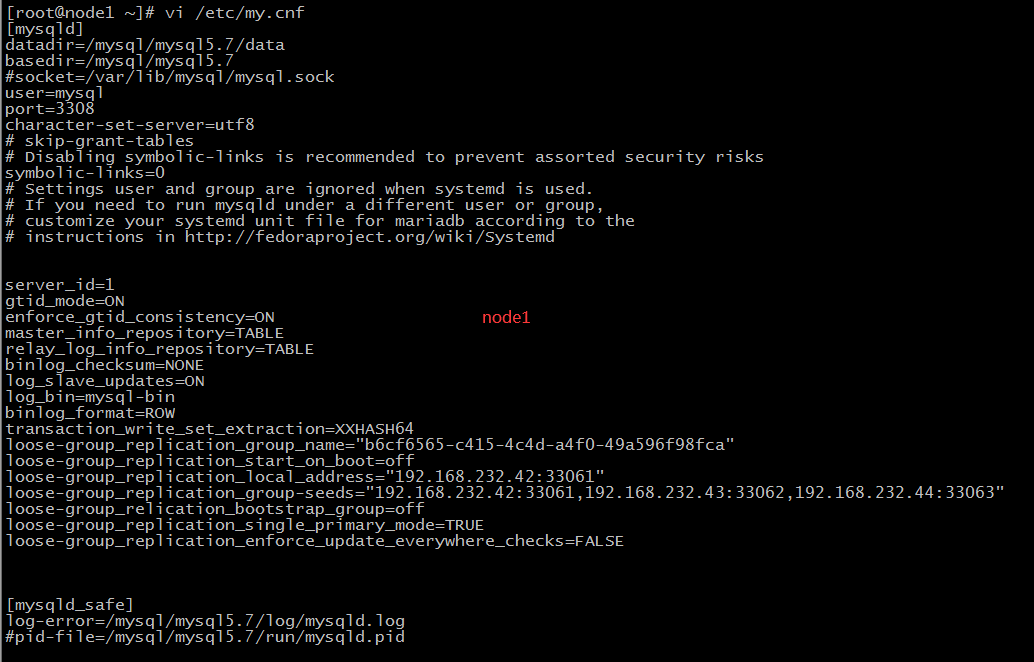

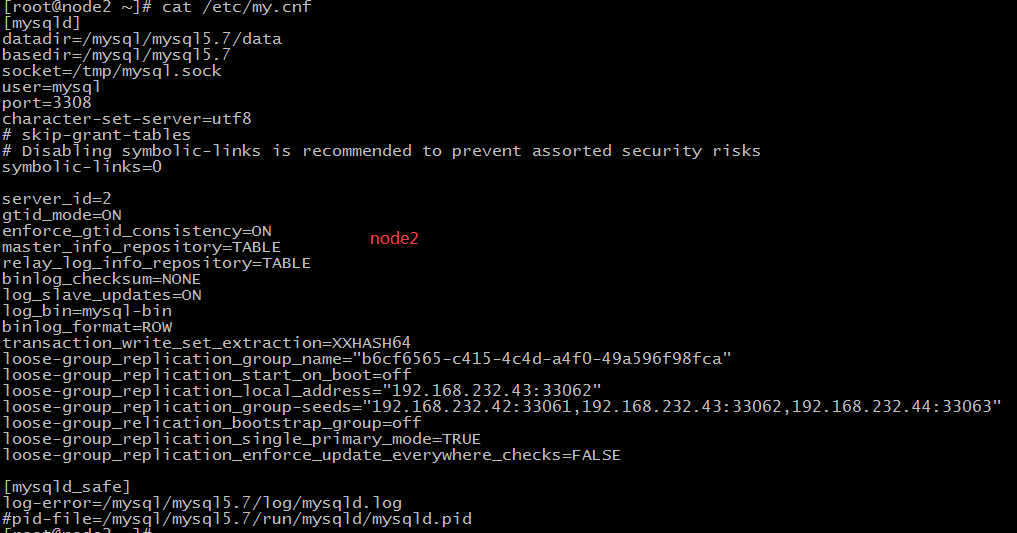

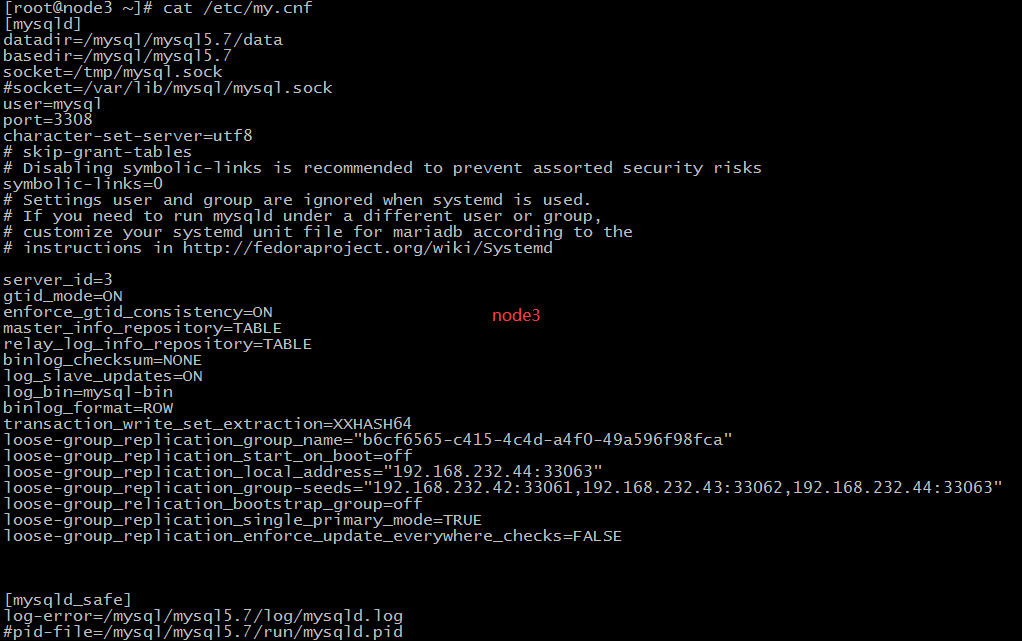

4、配置3个节点的my.cnf文件

[mysqld] datadir=/mysql/mysql5.7/data basedir=/mysql/mysql5.7 #socket=/var/lib/mysql/mysql.sock user=mysql port=3308 character-set-server=utf8 # skip-grant-tables # Disabling symbolic-links is recommended to prevent assorted security risks symbolic-links=0 # Settings user and group are ignored when systemd is used. # If you need to run mysqld under a different user or group, # customize your systemd unit file for mariadb according to the # instructions in http://fedoraproject.org/wiki/Systemd server_id=1 gtid_mode=ON enforce_gtid_consistency=ON master_info_repository=TABLE relay_log_info_repository=TABLE binlog_checksum=NONE log_slave_updates=ON log_bin=mysql-bin binlog_format=ROW # 这里binlog的模式必须是ROW transaction_write_set_extraction=XXHASH64 # 记录事物的算法 loose-group_replication_group_name="b6cf6565-c415-4c4d-a4f0-49a596f98fca" # group的名称,必须是一个有效的uuid,用来区分不同的group loose-group_replication_start_on_boot=off # 是否在启动MySQL时启动组复制,这里设置不自动启动 loose-group_replication_local_address="192.168.232.42:33061" # 本地IP地址字符串,用于组内节点之间的连接 loose-group_replication_group-seeds="192.168.232.42:33061,192.168.232.43:33062,192.168.232.44:33063" # 种子服务器的配置 loose-group_relication_bootstrap_group=off # 配置是否自动引导组,第一次搭建MGR的时候使用 loose-group_replication_single_primary_mode=TRUE # 是否启动单主模式 loose-group_replication_enforce_update_everywhere_checks=FALSE # 是否启动多主模式 [mysqld_safe] log-error=/mysql/mysql5.7/log/mysqld.log #pid-file=/mysql/mysql5.7/run/mysqld.pid

注:

* 变量使用loose-前缀表示Server启用时没有加载复制插件也能继续启动

* 三个节点的loose-group_replication_group_name 必须一样,要是有多个group的话,每个group应该不同

三个节点my.cnf配置如下:

5、安装组复制的引擎

命令:

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

查看是否安装成功:

show plugins;

注:三个节点都安装该插件。

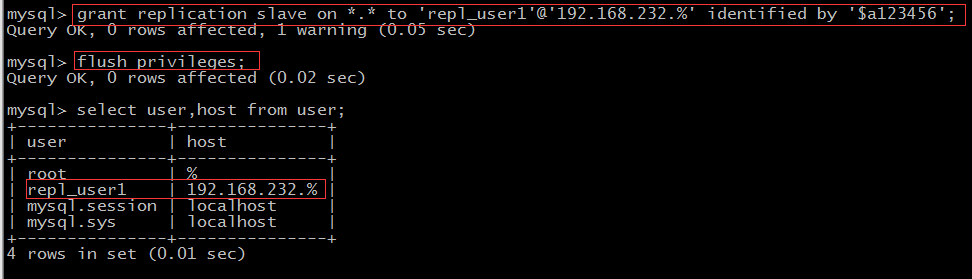

6、创建同步的账号

命令:

mysql> grant replication slave on *.* to 'repl_user1'@'192.168.232.%' identified by '$a123456'; Query OK, 0 rows affected, 1 warning (0.05 sec) mysql> flush privileges; Query OK, 0 rows affected (0.02 sec) mysql> select user,host from user; +---------------+---------------+ | user | host | +---------------+---------------+ | root | % | | repl_user1 | 192.168.232.% | | mysql.session | localhost | | mysql.sys | localhost | +---------------+---------------+ 4 rows in set (0.01 sec) mysql>

注:因为每个节点都有可能成为master,所以3个节点上都需要创建同步的用户,我这里host配置为192.168.232.%意思是192.168.232.网段可以使用该用户。

三、搭建MGR的单主模式

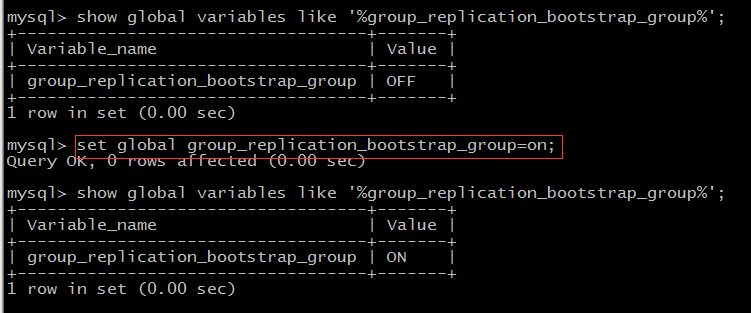

1、先把node1加入group组

注:

* 由于是第一个加入Group,需要启动group_relication_bootstrap_group,加入成功后再关闭就行;

* 一个组Group是在节点参数为group_replication_bootstrap_group为on的条件下执行start group_replication产生的,如果要加入现有的Group,节点需要确保group_replication_bootstrap_group为off;

(1)开启group_relication_bootstrap_group

mysql> show global variables like '%group_replication_bootstrap_group%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | group_replication_bootstrap_group | OFF | +-----------------------------------+-------+ 1 row in set (0.00 sec) mysql> set global group_replication_bootstrap_group=on; Query OK, 0 rows affected (0.00 sec) mysql> show global variables like '%group_replication_bootstrap_group%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | group_replication_bootstrap_group | ON | +-----------------------------------+-------+ 1 row in set (0.00 sec) mysql>

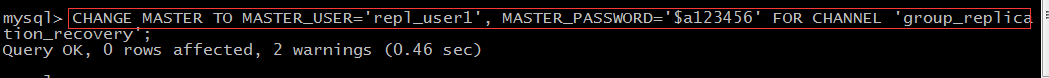

(2)配置MGR

mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.46 sec) mysql>

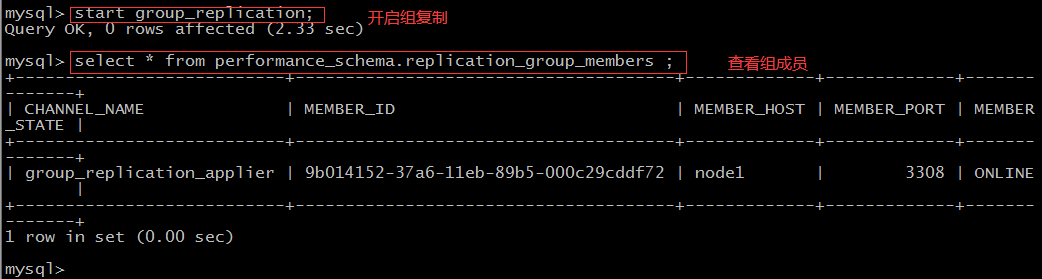

(3)启动MGR

mysql> start group_replication; Query OK, 0 rows affected (2.33 sec) mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 1 row in set (0.00 sec) mysql>

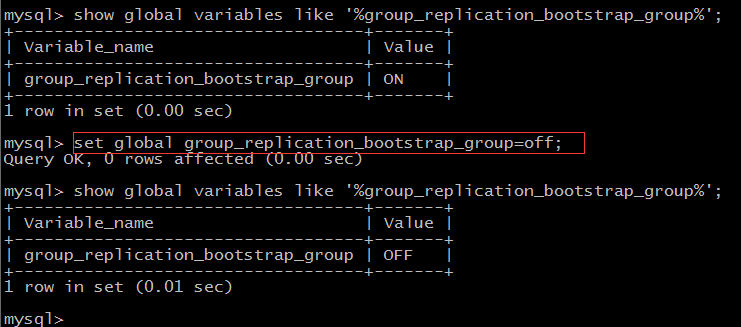

(4)关闭 group_replication_bootstrap_group

mysql> show global variables like '%group_replication_bootstrap_group%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | group_replication_bootstrap_group | ON | +-----------------------------------+-------+ 1 row in set (0.00 sec) mysql> set global group_replication_bootstrap_group=off; Query OK, 0 rows affected (0.00 sec) mysql> show global variables like '%group_replication_bootstrap_group%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | group_replication_bootstrap_group | OFF | +-----------------------------------+-------+ 1 row in set (0.01 sec) mysql>

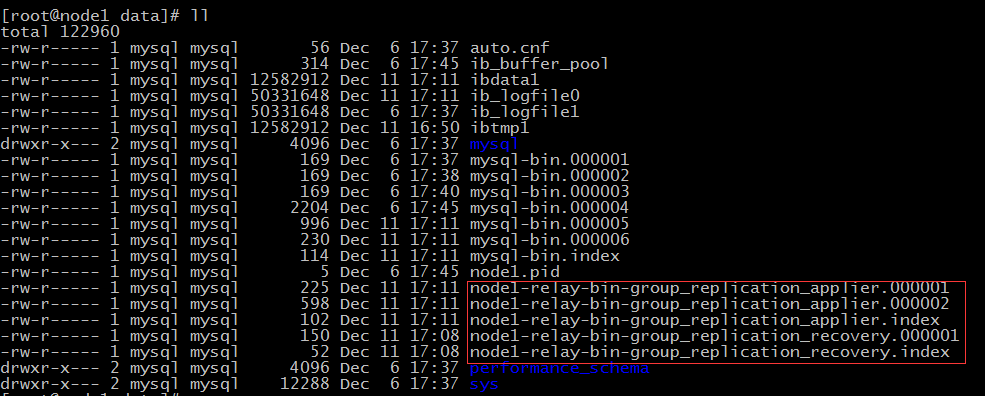

注:启动成功后,发现data目录下面多了几个_applier和_recovery文件

* _applier:保存同个组内其他Server的binlog信息;

* _recovery:用来存储需要恢复的binlog信息,新加入的组成员是通过group_replication_recovery来恢复数据的,所需要的binlog日志都存放在_recovery系统文件中;

2、在node1上造数据

mysql> create database customer default character set utf8; Query OK, 1 row affected (0.01 sec) mysql> use customer; Database changed mysql> create table person (id int(10) primary key auto_increment not null,username varchar(20),age int(10)) ENGINE=InnoDB DEFAULT CHARSET=utf8; Query OK, 0 rows affected (0.04 sec) mysql> insert into person(username,age) values('jack',19); Query OK, 1 row affected (0.00 sec) mysql> insert into person(username,age) values('rose',20); Query OK, 1 row affected (0.00 sec) mysql> select * from person; +----+----------+------+ | id | username | age | +----+----------+------+ | 1 | jack | 19 | | 2 | rose | 20 | +----+----------+------+ 2 rows in set (0.00 sec) mysql>

3、把node2加入到mgr组中

mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.07 sec) mysql> start group_replication; Query OK, 0 rows affected (4.88 sec) mysql>

加入的时候,遇到的错误参考文章最后的相关错误

在node1上查看组内有几个节点:

mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 66720a6a-37a8-11eb-befc-000c29c918c1 | node2 | 3308 | RECOVERING | | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 2 rows in set (0.00 sec) mysql>

4、把node3加入到MGR组中

mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.01 sec) mysql> start group_replication; Query OK, 0 rows affected (3.39 sec) mysql>

在node1上查看组内有几个节点:

mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 02c2cfed-37a9-11eb-b238-000c2927b3e8 | node3 | 3308 | ONLINE | | group_replication_applier | 66720a6a-37a8-11eb-befc-000c29c918c1 | node2 | 3308 | ONLINE | | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 3 rows in set (0.00 sec) mysql>

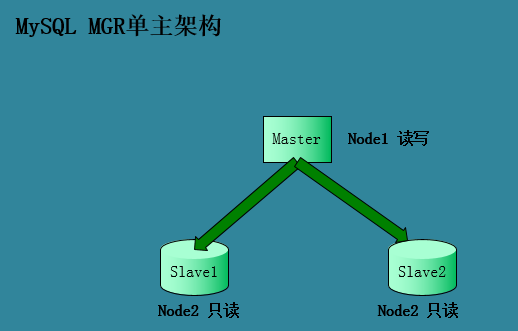

至此,3个节点的单主模式已经搭建好了,node1是master节点,提供读写,node2和node3是只读节点,提供只读操作。

5、MGR单主模式灾备切换

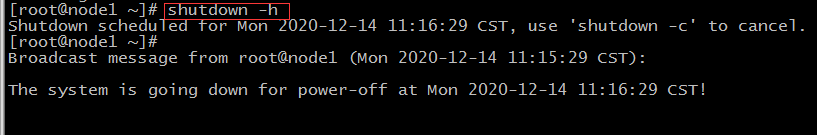

(1)模拟主库宕机,因为我这里是虚拟机,直接shutdown即可

shutdown -h

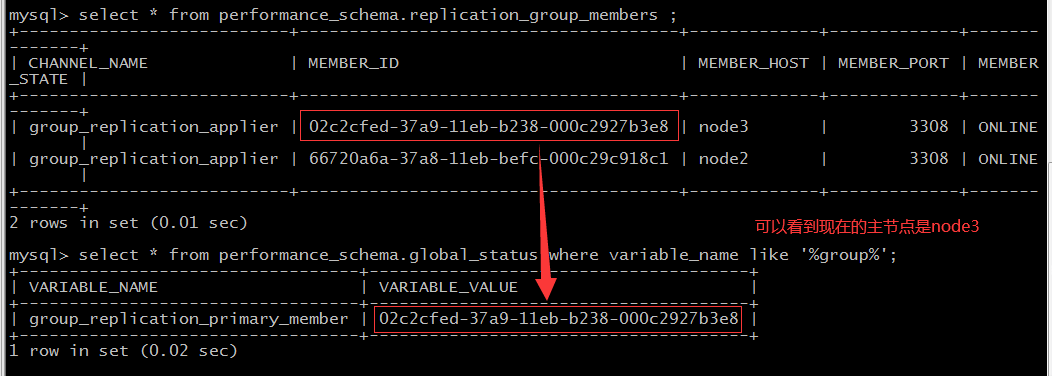

(2)查看哪个节点升级为主库

mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 02c2cfed-37a9-11eb-b238-000c2927b3e8 | node3 | 3308 | ONLINE | | group_replication_applier | 66720a6a-37a8-11eb-befc-000c29c918c1 | node2 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 2 rows in set (0.01 sec) mysql> select * from performance_schema.global_status where variable_name like '%group%'; +----------------------------------+--------------------------------------+ | VARIABLE_NAME | VARIABLE_VALUE | +----------------------------------+--------------------------------------+ | group_replication_primary_member | 02c2cfed-37a9-11eb-b238-000c2927b3e8 | +----------------------------------+--------------------------------------+ 1 row in set (0.02 sec) mysql>

注:performance_schema.replication_group_members的结果是按照MEMBER_ID升序排列的,主库的升级的时候,MEMBER_ID最小的升级为主库。

(3)查看node2和node3的读写状态

--1、node2(只读的) mysql> show global variables like '%super%'; +-----------------+-------+ | Variable_name | Value | +-----------------+-------+ | super_read_only | ON | +-----------------+-------+ 1 row in set (0.01 sec) --2、node3(读写的,说明node3是主) mysql> show global variables like '%super%'; +-----------------+-------+ | Variable_name | Value | +-----------------+-------+ | super_read_only | OFF | +-----------------+-------+ 1 row in set (0.02 sec)

(4)测试现在的主从数据是否可以同步

--node3上插入数据 mysql> use customer; Database changed mysql> select * from person; +----+----------+------+ | id | username | age | +----+----------+------+ | 1 | jack | 19 | | 2 | jack | 19 | | 3 | jack | 19 | | 4 | jack | 19 | | 5 | jack | 19 | | 6 | jack | 19 | | 7 | jack | 19 | +----+----------+------+ 7 rows in set (0.00 sec) mysql> insert into person(username,age) values('sdsd',20); Query OK, 1 row affected (0.02 sec) mysql> select * from person; +----+----------+------+ | id | username | age | +----+----------+------+ | 1 | jack | 19 | | 2 | jack | 19 | | 3 | jack | 19 | | 4 | jack | 19 | | 5 | jack | 19 | | 6 | jack | 19 | | 7 | jack | 19 | | 8 | sdsd | 20 | +----+----------+------+ 8 rows in set (0.00 sec) --node2上查看是否同步 mysql> use customer; Database changed mysql> select * from person; +----+----------+------+ | id | username | age | +----+----------+------+ | 1 | jack | 19 | | 2 | jack | 19 | | 3 | jack | 19 | | 4 | jack | 19 | | 5 | jack | 19 | | 6 | jack | 19 | | 7 | jack | 19 | | 8 | sdsd | 20 | +----+----------+------+ 8 rows in set (0.00 sec)

注:可以看到数据可以从新主同步数据到从节点

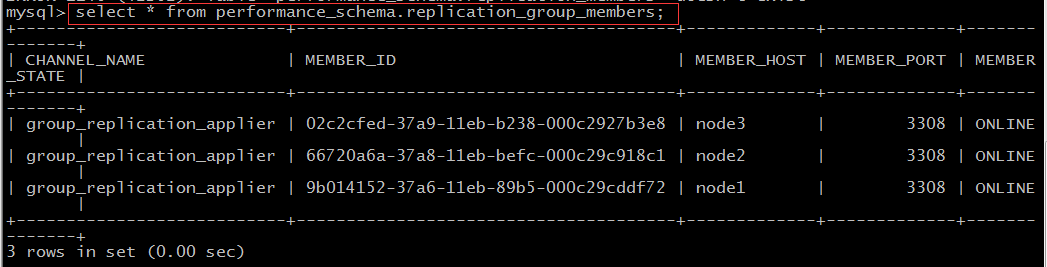

(5)node1重启后,重新加入到MGR组中

mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.01 sec) mysql> start group_replication; Query OK, 0 rows affected (3.03 sec) mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 02c2cfed-37a9-11eb-b238-000c2927b3e8 | node3 | 3308 | ONLINE | | group_replication_applier | 66720a6a-37a8-11eb-befc-000c29c918c1 | node2 | 3308 | ONLINE | | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 3 rows in set (0.00 sec) mysql> use customer; Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Database changed mysql> select * from person; +----+----------+------+ | id | username | age | +----+----------+------+ | 1 | jack | 19 | | 2 | jack | 19 | | 3 | jack | 19 | | 4 | jack | 19 | | 5 | jack | 19 | | 6 | jack | 19 | | 7 | jack | 19 | | 8 | sdsd | 20 | +----+----------+------+ 8 rows in set (0.00 sec)

(6)查看Group的当前视图

注:16079120577135158:12---- 表示发生了12次视图切换

四、启动MGR为多主模式

1、多主模式的搭建

如果从头搭建多主模式的话,和单主模式的搭建步骤基本一致,只是把下面的参数改一下就行了

--动态配置 set global group_replication_single_primary_mode=FALSE set global group_replication_enforce_update_everywhere_checks=TRUE --配置文件修改 loose-group_replication_single_primary_mode=FALSE loose-group_replication_enforce_update_everywhere_checks=TRUE

2、单主模式修改为多主模式

已经是单主模式要想修改为多主模式,直接动态修改参数会报错,如下:

mysql> set global group_replication_single_primary_mode=off; ERROR 3093 (HY000): Cannot change into or from single primary mode while Group Replication is running. mysql>

所以,需要停掉group_replication,才能从单主模式修改为多主。操作步骤如下

(1)组成员逐个退出group

--node3上退出group mysql> stop group_replication; Query OK, 0 rows affected (9.58 sec) --node2上退出group mysql> stop group_replication; Query OK, 0 rows affected (9.57 sec) --node1上退出group mysql> stop group_replication; Query OK, 0 rows affected (9.57 sec)

节点的状态都是offline:

mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | OFFLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 1 row in set (0.00 sec)

(2)修改group_replication_single_primary_mode参数为off(node1、node2、node3上都要执行)

mysql> set global group_replication_single_primary_mode=FALSE; Query OK, 0 rows affected (0.01 sec) mysql> set global group_replication_enforce_update_everywhere_checks=TRUE; Query OK, 0 rows affected (0.00 sec)

(3)在node1上新增数据,模拟生成环境业务不停

mysql> use customer; Database changed mysql> create table tb2(id int primary key auto_increment not null,name varchar(100)); Query OK, 0 rows affected (0.46 sec) mysql> mysql> insert into tb2(name) values('wade'); Query OK, 1 row affected (0.00 sec) mysql> select * from tb2; +----+------+ | id | name | +----+------+ | 1 | wade | +----+------+ 1 row in set (0.00 sec)

(4)逐个启动group

--node1加入group mysql> show global variables like '%group_replication_bootstrap_group%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | group_replication_bootstrap_group | OFF | +-----------------------------------+-------+ 1 row in set (0.01 sec) mysql> set global group_replication_bootstrap_group=ON; Query OK, 0 rows affected (0.00 sec) mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.02 sec) mysql> start group_replication; Query OK, 0 rows affected (2.04 sec) mysql> set global group_replication_bootstrap_group=off; Query OK, 0 rows affected (0.00 sec) mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 1 row in set (0.00 sec) mysql> --node2加入group mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.01 sec) mysql> start group_replication; Query OK, 0 rows affected (4.07 sec) --node3加入group mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.02 sec) mysql> start group_replication; Query OK, 0 rows affected (3.09 sec) --node1上查看组成员 mysql> select * from performance_schema.replication_group_members ; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 02c2cfed-37a9-11eb-b238-000c2927b3e8 | node3 | 3308 | ONLINE | | group_replication_applier | 66720a6a-37a8-11eb-befc-000c29c918c1 | node2 | 3308 | ONLINE | | group_replication_applier | 9b014152-37a6-11eb-89b5-000c29cddf72 | node1 | 3308 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 3 rows in set (0.00 sec)

(5)查看数据是否同步

--node2上查看 mysql> select * from customer.tb2; +----+------+ | id | name | +----+------+ | 1 | wade | +----+------+ 1 row in set (0.00 sec) --node3上查看 mysql> select * from customer.tb2; +----+------+ | id | name | +----+------+ | 1 | wade | +----+------+ 1 row in set (0.00 sec)

注:可以看到数据已经同步过去了

(6)查看各个节点的读写状态

mysql> show global variables like '%super_read_only%'; +-----------------+-------+ | Variable_name | Value | +-----------------+-------+ | super_read_only | OFF | +-----------------+-------+ 1 row in set (0.00 sec)

注:发现3个节点的super_read_only状态都是off,都是读写的,这时候再查看global_status状态发现为空,无法确定主库是哪个,因为都是主库

到这里,MySQL之MGR的多主模式已经搭建完成了。

五、运维常用的SQL

1、查看group成员

select * from performance_schema.replication_group_members;

2、查看当前SERVER在GROUP中的同步情况(查看applier通道的同步情况)

select * from performance_schema.replication_group_member_stats;

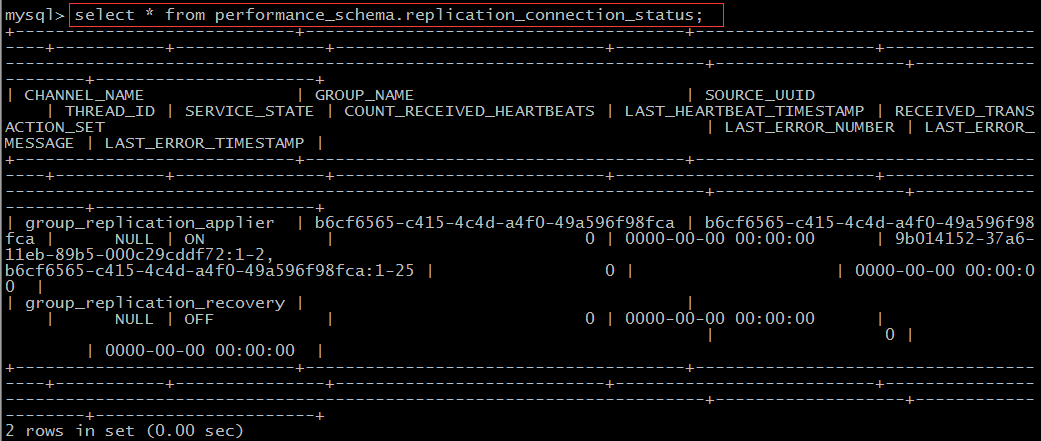

3、查看当前server中各个通道的使用情况

注:applier通道是一定有显示,recovery通道看是否使用过,如果有则显示,没有则不显示。

select * from performance_schema.replication_connection_status;

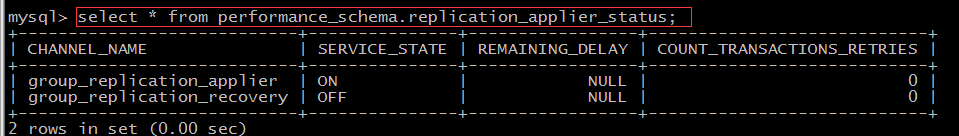

4、查看当前server中各个通道是否启用

select * from performance_schema.replication_applier_status;

5、查看global参数的状态

select * from performance_schema.global_status;

六、遇到的问题总结

1、加入MGR组时报的错误1

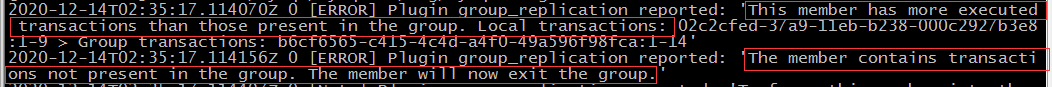

(1)错误如下:

[ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: 02c2cfed-37a9-11eb-b238-000c2927b3e8:1-9 > Group transactions: b6cf6565-c415-4c4d-a4f0-49a596f98fca:1-14' 2020-12-14T02:35:17.114156Z 0 [ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member will now exit the group.'

(2)解决办法

原因是node2节点包含了非group内的事务。查看node2的数据,确实有与其他节点不一样的数据。应该是没启动GR服务时,被当做独立库操作了。

在node2节点上,重置master的信息,重新加入MGR,再次start解决了。

mysql> start group_replication; ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log. mysql> reset master; Query OK, 0 rows affected (0.02 sec) mysql> CHANGE MASTER TO MASTER_USER='repl_user1', MASTER_PASSWORD='$a123456' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.01 sec) mysql> start group_replication; Query OK, 0 rows affected (3.39 sec)

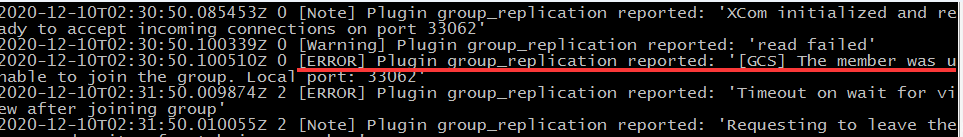

2、加入MGR组时报的错误2

(1)错误截图如下

(2)解决办法

出现这个问题的原因是节点1、2、3中的group_replication_group_name使用的是每台机器的uuid,但是这个配置需要3台机器保持一致。

修改三个节点的group_replication_group_name一致后,问题解决。