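Spark Basic Programming 4: Introductory RDD Programming Practice

This post works through the fourth Spark basic programming lab: introductory RDD programming practice.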

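I. Objectives

(1) Become familiar with basic RDD operations and key-value pair operations in Spark;

(2) Become familiar with using RDD programming to solve concrete, practical problems.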

II. Platform

Operating system: Ubuntu 16.04

Spark version: 2.1.0

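III. Tasks and Requirements

1. Interactive programming in spark-shell

Download chapter5-data1.txt from the "Datasets" area of the "Downloads" section on this tutorial's official website. The dataset contains the grades of a university's computer science department. The transcripts below load the data from file:///export/server/spark/Data01.txt, presumably a local copy of this file. The data format is as follows: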

Tom,DataBase,80

Tom,Algorithm,50

Tom,DataStructure,60

Jim,DataBase,90

Jim,Algorithm,60

Jim,DataStructure,80

……

Using the given data, compute the following in spark-shell:

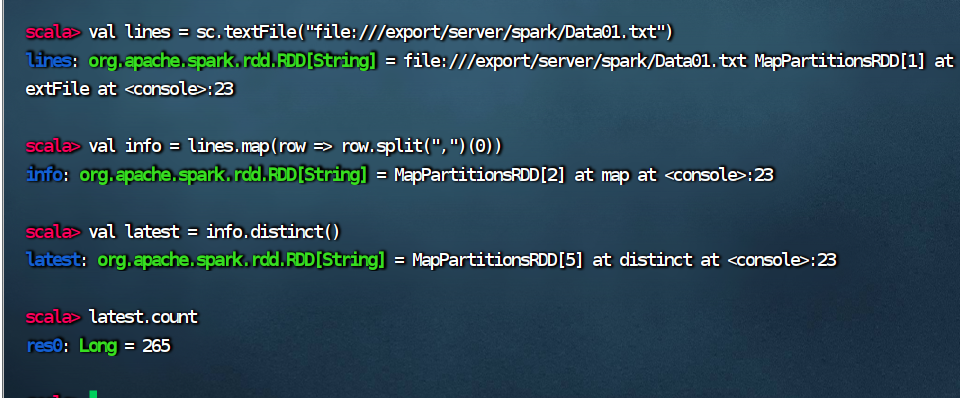

(1) How many students are there in the department?

    scala> val lines = sc.textFile("file:///export/server/spark/Data01.txt")
    lines: org.apache.spark.rdd.RDD[String] = file:///export/server/spark/Data01.txt MapPartitionsRDD[1] at textFile at <console>:23

    scala> val info = lines.map(row => row.split(",")(0))
    info: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[2] at map at <console>:23

    scala> val latest = info.distinct()
    latest: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[5] at distinct at <console>:23

    scala> latest.count
    res0: Long = 265

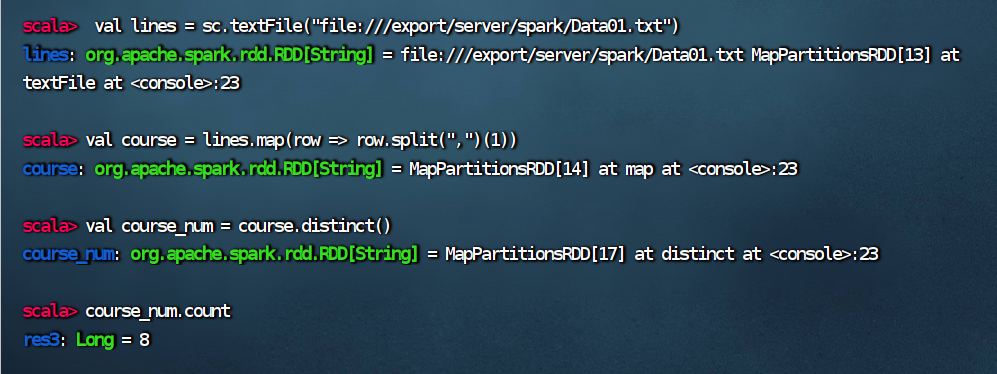

(2) How many courses does the department offer?

    scala> val lines = sc.textFile("file:///export/server/spark/Data01.txt")
    lines: org.apache.spark.rdd.RDD[String] = file:///export/server/spark/Data01.txt MapPartitionsRDD[13] at textFile at <console>:23

    scala> val course = lines.map(row => row.split(",")(1))
    course: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[14] at map at <console>:23

    scala> val course_num = course.distinct()
    course_num: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[17] at distinct at <console>:23

    scala> course_num.count
    res3: Long = 8

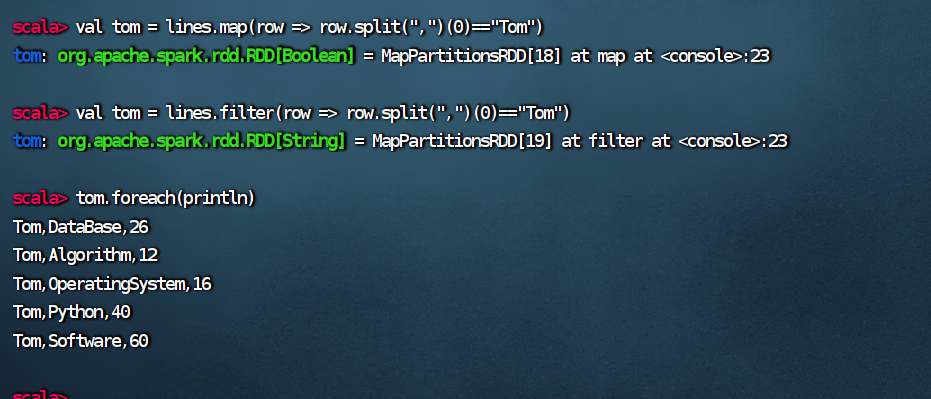

(3) What is Tom's average score across all his courses?

    scala> val tom = lines.filter(row => row.split(",")(0)=="Tom")
    tom: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[19] at filter at <console>:23

    scala> tom.foreach(println)
    Tom,DataBase,26
    Tom,Algorithm,12
    Tom,OperatingSystem,16
    Tom,Python,40
    Tom,Software,60

The transcript stops at listing Tom's rows. From the five scores shown, his average is (26 + 12 + 16 + 40 + 60) / 5 = 30.8.
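The average itself can also be computed on the RDD rather than by hand. The following is a minimal sketch, not the author's method: it rebuilds Tom's five rows with `sc.parallelize` instead of reading Data01.txt, and the names `tomScores` and `tomAverage` are introduced here for illustration only.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuses the spark-shell context if one exists; otherwise starts a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("tom-average").setMaster("local[*]"))

// Tom's five rows as printed in the transcript (stand-in for the real file).
val lines = sc.parallelize(Seq(
  "Tom,DataBase,26", "Tom,Algorithm,12", "Tom,OperatingSystem,16",
  "Tom,Python,40", "Tom,Software,60"))

// Keep Tom's rows and extract the score column as Double.
val tomScores = lines.map(_.split(",")).filter(f => f(0) == "Tom").map(f => f(2).toDouble)

// mean() is available on RDD[Double]; it returns the arithmetic mean.
val tomAverage = tomScores.mean()
println(s"Tom's average: $tomAverage")
```

Converting the score with `toDouble` before averaging avoids the truncation that integer division would introduce.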

(4) How many courses has each student taken?

    scala> val c_num = lines.map(row=>(row.split(",")(0),row.split(",")(1)))
    c_num: org.apache.spark.rdd.RDD[(String, String)] = MapPartitionsRDD[20] at map at <console>:23

    scala> c_num.mapValues(x => (x,1)).reduceByKey((x,y) => (" ",x._2 + y._2)).mapValues(x => x._2).foreach(println)

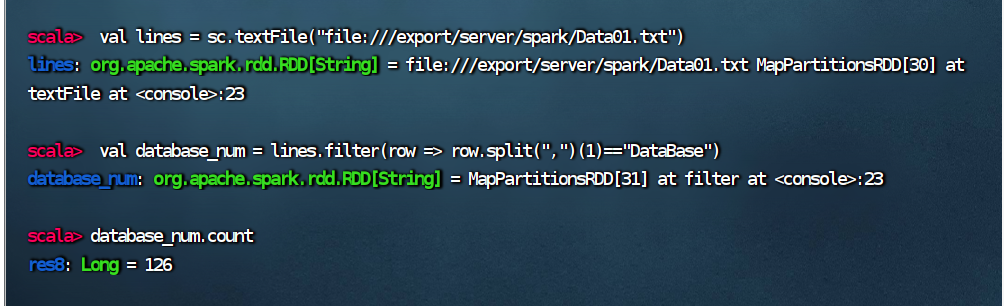
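The transcript for task (4) carries the course name through `reduceByKey` only to discard it. A shorter variant, offered as a sketch rather than as the required answer, maps each row straight to `(student, 1)` and sums the ones. The six rows below are the sample rows from the data format description, used in place of the real file; `records` and `coursesPerStudent` are names introduced here.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuses the spark-shell context if one exists; otherwise starts a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("courses-per-student").setMaster("local[*]"))

// Sample rows in the documented "name,course,score" format.
val records = sc.parallelize(Seq(
  "Tom,DataBase,80", "Tom,Algorithm,50", "Tom,DataStructure,60",
  "Jim,DataBase,90", "Jim,Algorithm,60", "Jim,DataStructure,80"))

// One (student, 1) pair per row, then sum the ones per student.
val coursesPerStudent = records.map(row => (row.split(",")(0), 1)).reduceByKey(_ + _)

val result = coursesPerStudent.collect().toMap
result.foreach(println)
```

This counts rows per student, so it equals the number of courses only if each (student, course) pair appears once in the data.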

(5) How many students take the DataBase course?

    scala> val lines = sc.textFile("file:///export/server/spark/Data01.txt")
    lines: org.apache.spark.rdd.RDD[String] = file:///export/server/spark/Data01.txt MapPartitionsRDD[30] at textFile at <console>:23

    scala> val database_num = lines.filter(row => row.split(",")(1)=="DataBase")
    database_num: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[31] at filter at <console>:23

    scala> database_num.count
    res8: Long = 126

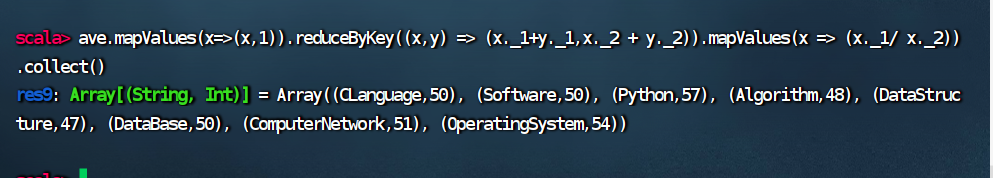

(6) What is the average score of each course?

    scala> val ave = lines.map(row=>(row.split(",")(1),row.split(",")(2).toInt))
    ave: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[32] at map at <console>:23

    scala> ave.mapValues(x=>(x,1)).reduceByKey((x,y) => (x._1+y._1,x._2 + y._2)).mapValues(x => (x._1/ x._2)).collect()
    res9: Array[(String, Int)] = Array((CLanguage,50), (Software,50), (Python,57), (Algorithm,48), (DataStructure,47), (DataBase,50), (ComputerNetwork,51), (OperatingSystem,54))

Because the scores are parsed with toInt, x._1 / x._2 is integer division, so the averages shown are truncated to whole numbers.

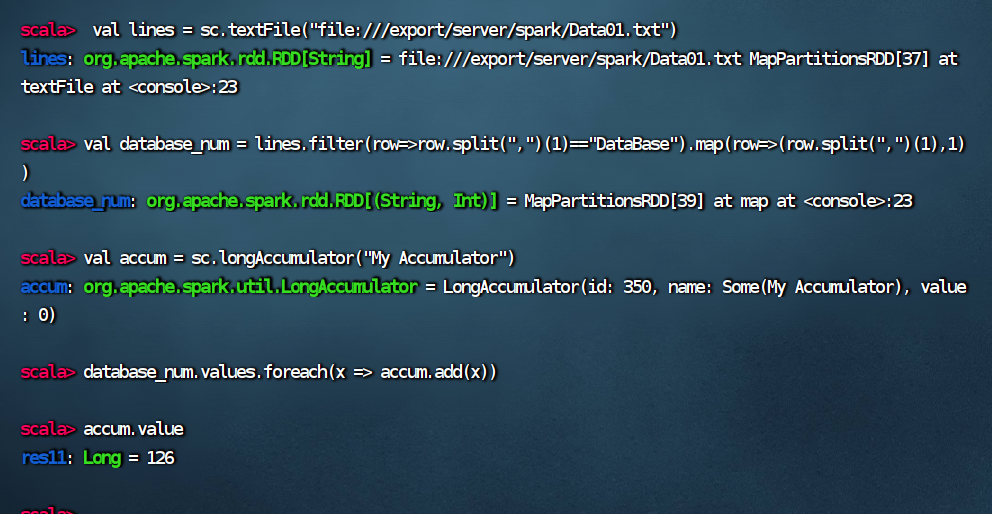
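The per-course averages in the transcript are of type `Int` because both the sum and the count are integers. If fractional averages are wanted, one option is to parse the score as `Double`. The sketch below uses the six sample rows from the data format description plus one hypothetical row (`Ann,Algorithm,65`, not in the source) so that one average is not a whole number; all variable names are introduced here.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuses the spark-shell context if one exists; otherwise starts a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setAppName("course-averages").setMaster("local[*]"))

// Sample rows from the format description; the last row is hypothetical.
val records = sc.parallelize(Seq(
  "Tom,DataBase,80", "Tom,Algorithm,50", "Tom,DataStructure,60",
  "Jim,DataBase,90", "Jim,Algorithm,60", "Jim,DataStructure,80",
  "Ann,Algorithm,65"))

// (course, (score as Double, 1)) so the later division is floating-point.
val scoreByCourse = records.map(_.split(",")).map(f => (f(1), (f(2).toDouble, 1)))

// Sum scores and counts per course.
val totals = scoreByCourse.reduceByKey { case ((s1, c1), (s2, c2)) => (s1 + s2, c1 + c2) }

// Divide the Double sum by the Int count; the result is a Double.
val averages = totals.mapValues { case (sum, count) => sum / count }.collect().toMap
averages.foreach(println)
```

With integer division the Algorithm average here would come out as 58; with `Double` scores it is 175 / 3, roughly 58.33.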

(7) Use an accumulator to count how many students chose the DataBase course.

    scala> val lines = sc.textFile("file:///export/server/spark/Data01.txt")
    lines: org.apache.spark.rdd.RDD[String] = file:///export/server/spark/Data01.txt MapPartitionsRDD[37] at textFile at <console>:23

    scala> val database_num = lines.filter(row=>row.split(",")(1)=="DataBase").map(row=>(row.split(",")(1),1))
    database_num: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[39] at map at <console>:23

    scala> val accum = sc.longAccumulator("My Accumulator")
    accum: org.apache.spark.util.LongAccumulator = LongAccumulator(id: 350, name: Some(My Accumulator), value: 0)

    scala> database_num.values.foreach(x => accum.add(x))

    scala> accum.value
    res11: Long = 126

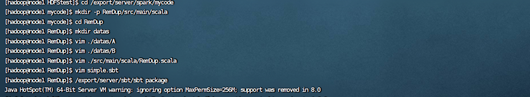

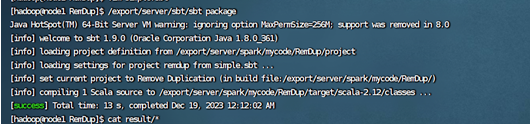

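2. Write a standalone application for data deduplication

Given two input files A and B, write a standalone Spark application that merges the two files and removes duplicate lines, producing a new file C. Sample input and output files are shown below for reference.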

Sample input file A:

20170101 x

20170102 y

20170103 x

20170104 y

20170105 z

20170106 z

Sample input file B:

20170101 y

20170102 y

20170103 x

20170104 z

20170105 y

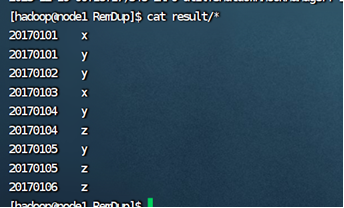

Sample output file C, obtained by merging input files A and B:

20170101 x

20170101 y

20170102 y

20170103 x

20170104 y

20170104 z

20170105 y

20170105 z

20170106 z
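The source gives no solution for this task. One possible sketch, under stated assumptions: the object name `RemoveDuplicates`, the helper `mergeAndDedup`, and the default paths under `/tmp/dedup` are all hypothetical, and the result is sorted into a single partition only so that the output order matches sample file C.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object RemoveDuplicates {
  // Union both inputs, drop blank lines and duplicates, and sort the result
  // into one partition so the output is a single ordered part file.
  def mergeAndDedup(a: RDD[String], b: RDD[String]): RDD[String] =
    a.union(b)
      .map(_.trim)
      .filter(_.nonEmpty)
      .distinct()
      .sortBy(line => line, ascending = true, numPartitions = 1)

  def main(args: Array[String]): Unit = {
    // Hypothetical default paths; pass real ones as arguments.
    val inputA = if (args.length > 0) args(0) else "file:///tmp/dedup/A.txt"
    val inputB = if (args.length > 1) args(1) else "file:///tmp/dedup/B.txt"
    val output = if (args.length > 2) args(2) else "file:///tmp/dedup/C"

    // Fall back to local mode when no master was supplied (e.g. by spark-submit).
    val conf = new SparkConf().setAppName("RemoveDuplicates")
      .setIfMissing("spark.master", "local[*]")
    val sc = new SparkContext(conf)
    try {
      // saveAsTextFile writes a directory of part files and fails if it already exists.
      mergeAndDedup(sc.textFile(inputA), sc.textFile(inputB)).saveAsTextFile(output)
    } finally {
      sc.stop()
    }
  }
}
```

Note that `saveAsTextFile` produces a directory rather than a single file; with one partition, the merged lines end up in one part file inside it. Sorting is plain string ordering, which is enough for lines that start with a fixed-width date.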

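3. Write a standalone application to compute averages

Each input file holds the scores of a class's students in one subject. Each line has two fields: the student's name, then the student's score. Write a standalone Spark application that computes each student's average score across all subjects and writes the result to a new file. Sample input and output files are shown below for reference.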

Algorithm scores:

小明 92

小红 87

小新 82

小丽 90

Database scores:

小明 95

小红 81

小新 89

小丽 85

Python scores:

小明 82

小红 83

小新 94

小丽 91

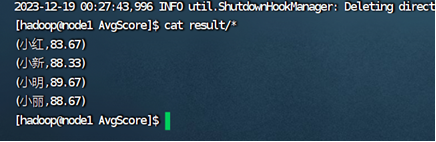

The average scores are:

(小红,83.67)

(小新,88.33)

(小明,89.67)

(小丽,88.67)
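The source gives no solution for this task either. A minimal sketch, assuming all subject files sit in one input directory and that fields are separated by whitespace: the object name `AverageScore`, the helper `averageByStudent`, and the default paths under `/tmp/avg` are hypothetical. Averages are rounded to two decimals to match the sample output.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object AverageScore {
  // Parse "name score" lines and average each student's scores,
  // rounded to two decimal places.
  def averageByStudent(lines: RDD[String]): RDD[(String, Double)] =
    lines
      .map(_.trim)
      .filter(_.nonEmpty)
      .map { line =>
        val fields = line.split("\\s+")
        (fields(0), (fields(1).toDouble, 1))
      }
      .reduceByKey { case ((s1, c1), (s2, c2)) => (s1 + s2, c1 + c2) }
      .mapValues { case (sum, count) => math.round(sum / count * 100) / 100.0 }

  def main(args: Array[String]): Unit = {
    // Hypothetical default paths; pass real ones as arguments.
    val inputDir = if (args.length > 0) args(0) else "file:///tmp/avg/input"
    val output = if (args.length > 1) args(1) else "file:///tmp/avg/output"

    // Fall back to local mode when no master was supplied (e.g. by spark-submit).
    val conf = new SparkConf().setAppName("AverageScore")
      .setIfMissing("spark.master", "local[*]")
    val sc = new SparkContext(conf)
    try {
      // textFile on a directory reads every file in it as one RDD of lines.
      // Each (name, average) tuple is written in the form (name,average).
      averageByStudent(sc.textFile(inputDir)).saveAsTextFile(output)
    } finally {
      sc.stop()
    }
  }
}
```

For the sample input, 小红 has scores 87, 81 and 83, so the average is 251 / 3, which rounds to 83.67 as in the sample output. The order of the output lines is not guaranteed, since `reduceByKey` does not sort by key.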