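Daily Notes: Spark

Today I learned how to install and run Spark.

1. Download Spark

Download the file spark-3.4.0-bin-without-hadoop.tgz. Baidu Netdisk link: https://pan.baidu.com/s/181shkgg-i0WEytQMqeeqxA (extraction code: 9ekc)

2. Install Hadoop and the Java JDK (both were covered in earlier posts; they are assumed to be installed already)

3. Install Spark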
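Since the rest of this post assumes Hadoop and Java are already in place, a quick check before continuing can save some confusion. The following is only a minimal sketch: `check_prereqs` is a hypothetical helper name, and it assumes `java` is on the PATH and that Hadoop lives at /export/server/hadoop, the path used later in this post.

```shell
#!/usr/bin/env bash

# Print each command that cannot be found and return non-zero if any is missing.
# Arguments may be bare command names (looked up on PATH) or full paths.
check_prereqs() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf 'missing: %s\n' "$cmd"
      status=1
    fi
  done
  return "$status"
}

# Usage (assumed locations, adjust to your own setup):
#   check_prereqs java /export/server/hadoop/bin/hadoop && echo "prerequisites found"
```

If anything is reported as missing, go back to the earlier Hadoop and JDK posts before installing Spark.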

sudo tar -zxf /export/server/spark-3.4.0-bin-without-hadoop.tgz -C /export/server/

cd /export/server/

sudo mv ./spark-3.4.0-bin-without-hadoop/ ./spark

sudo chown -R hadoop:hadoop ./spark

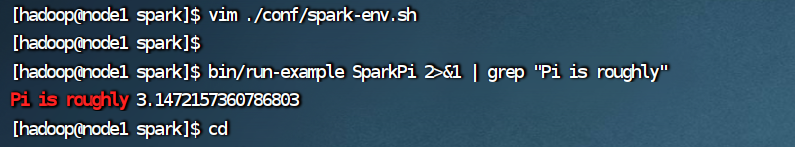

Next, set up Spark's configuration file spark-env.sh by copying the template:

cd /export/server/spark

cp ./conf/spark-env.sh.template ./conf/spark-env.sh

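Edit spark-env.sh (vim ./conf/spark-env.sh) and add the following line at the top of the file. Because this is the "without-hadoop" build, Spark needs this setting to find the Hadoop jars: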

export SPARK_DIST_CLASSPATH=$(/export/server/hadoop/bin/hadoop classpath)
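If you would rather not open an editor, the same change can be scripted. This is a rough sketch rather than the only way to do it: `prepend_line_once` is a hypothetical helper that puts a line at the top of a file unless that exact line is already there, so running it twice does no harm.

```shell
#!/usr/bin/env bash

# Prepend a line to a file unless that exact line is already present.
prepend_line_once() {
  local file="$1" line="$2" tmp
  # -x: match the whole line, -F: treat the pattern as a fixed string, -q: quiet
  if grep -qxF -e "$line" "$file" 2>/dev/null; then
    return 0
  fi
  tmp="$(mktemp)" || return 1
  {
    printf '%s\n' "$line"
    if [ -f "$file" ]; then cat "$file"; fi
  } > "$tmp"
  # Overwrite in place so the file keeps its existing owner and permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage with the paths from this post. The single quotes keep $(...) literal,
# so the hadoop command runs when Spark sources spark-env.sh, not now:
#   prepend_line_once /export/server/spark/conf/spark-env.sh \
#     'export SPARK_DIST_CLASSPATH=$(/export/server/hadoop/bin/hadoop classpath)'
```

Afterwards, `head -n 1 ./conf/spark-env.sh` should show the export line.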

4. Verify that Spark was installed successfully.

cd /export/server/spark

bin/run-example SparkPi 2>&1 | grep "Pi is"
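On success, SparkPi prints a line beginning with "Pi is roughly" followed by its estimate. To turn the check above into something a script can act on, one option is to pull out that number and confirm it is close to π. The sketch below is only an illustration: `extract_pi` and `pi_is_plausible` are hypothetical helper names, and the tolerance of 0.1 is an arbitrary assumption.

```shell
#!/usr/bin/env bash

# Read SparkPi output on stdin and print the estimate from the first
# "Pi is roughly <value>" line. Returns non-zero if no such line is found.
extract_pi() {
  awk '/Pi is roughly/ { print $NF; found = 1; exit } END { exit !found }'
}

# Succeed if the given value is within 0.1 of pi (tolerance is an assumption).
pi_is_plausible() {
  awk -v v="$1" 'BEGIN { d = v - 3.14159265; if (d < 0) d = -d; exit !(d < 0.1) }'
}

# Usage with the path from this post:
#   cd /export/server/spark
#   value="$(bin/run-example SparkPi 2>&1 | extract_pi)" \
#     && pi_is_plausible "$value" \
#     && echo "Spark looks OK: $value"
```

If no "Pi is roughly" line appears at all, read the full output of bin/run-example without the filter; a missing SPARK_DIST_CLASSPATH setting is one thing to look for.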
