Kubernetes cluster: binary installation and deployment of the dashboard (part 2)

Issue the dashboard certificate with cfssl

Issue it on the k8s-5 node

[root@k8s-5 ~]# cd /opt/certs/

[root@k8s-5 certs]# cp client-csr.json od.com-csr.json

[root@k8s-5 certs]# vim od.com-csr.json

{

"CN": "*.od.com",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

[root@k8s-5 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server od.com-csr.json |cfssl-json -bare od.com

On the k8s-1 and k8s-2 nodes

[root@k8s-1 certs]# scp k8s-5:/opt/certs/od.com-key.pem .

[root@k8s-1 certs]# scp k8s-5:/opt/certs/od.com.pem .

[root@k8s-2 certs]# scp k8s-1:/etc/nginx/certs/* .

Configure k8s-2

[root@k8s-2 conf.d]# scp k8s-1:/etc/nginx/conf.d/dashboard.od.com.conf .

Restart nginx on k8s-1 and k8s-2

[root@k8s-1 certs]# systemctl restart nginx.service

[root@k8s-2 certs]# systemctl restart nginx.service

The nginx configuration is now complete.

Install and deploy heapster

Push the image to the local registry

[root@k8s-5 ~]# cd /data/k8s-yaml/dashboard/

[root@k8s-5 dashboard]# mkdir heapster

[root@k8s-5 dashboard]# cd heapster/

[root@k8s-5 heapster]# docker pull quay.io/bitnami/heapster:1.5.4

[root@k8s-5 heapster]# docker tag c359b95ad38b harbor.od.com/public/dashboard/heapster:v1.5.4

[root@k8s-5 heapster]# docker push harbor.od.com/public/dashboard/heapster:v1.5.4

Create the rbac.yaml manifest

[root@k8s-5 ~]# cd /data/k8s-yaml/dashboard/heapster/

[root@k8s-5 heapster]# vim rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: heapster
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

Create the Deployment manifest

[root@k8s-5 heapster]# vim dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.od.com/public/dashboard/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default

Create the Service manifest

[root@k8s-5 heapster]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

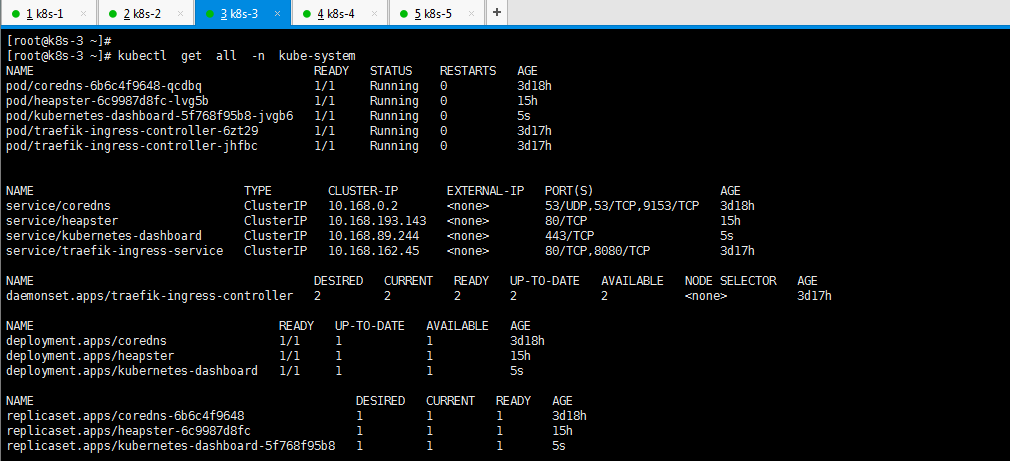

Apply the manifests and check

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/rbac.yaml

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/dp.yaml

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/svc.yaml

[root@k8s-3 ~]# kubectl get all -n kube-system

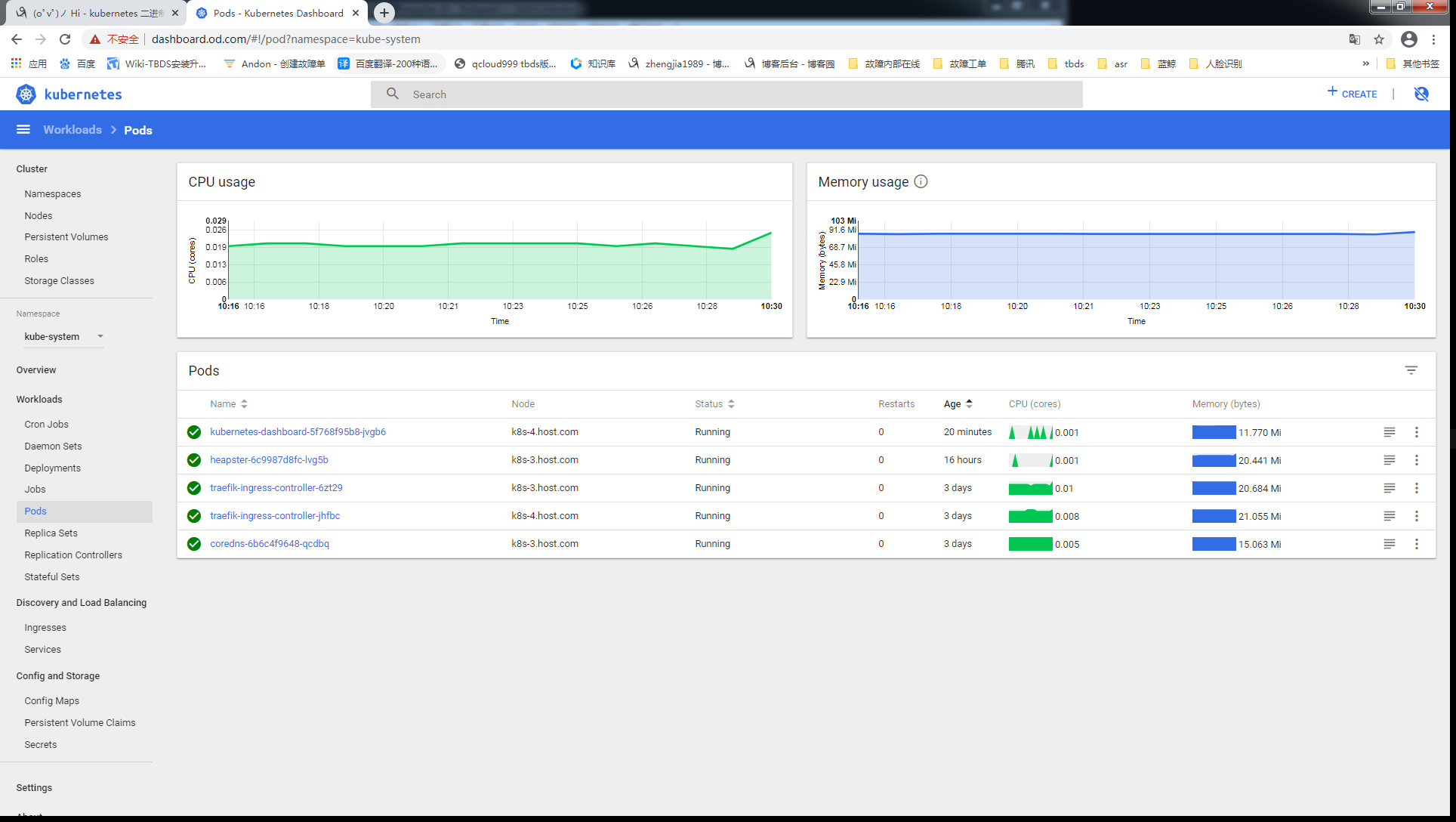

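Test in the browser

Smooth upgrade and rollback of the k8s cluster

1. On the k8s-1 node, comment out the backend that is about to be upgraded in both the layer-4 and the layer-7 load balancer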

[root@k8s-1 ~]# vim /etc/nginx/nginx.conf

stream {

upstream kube-apiserver {

# server 192.168.50.126:6443 max_fails=3 fail_timeout=30s;

server 192.168.50.154:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

[root@k8s-1 ~]# vim /etc/nginx/conf.d/od.com.conf

upstream default_backend_traefik {

# server 192.168.50.126:81 max_fails=3 fail_timeout=10s;

server 192.168.50.154:81 max_fails=3 fail_timeout=10s;

}

server {

server_name *.od.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

Check

[root@k8s-1 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@k8s-1 ~]# systemctl restart nginx.service
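The comment/uncomment step can also be scripted rather than edited by hand. The following is only a sketch under stated assumptions: GNU sed is available, each backend sits on its own `server <ip>:<port> ...;` line, and the helper names `drain_backend` and `restore_backend` are hypothetical (they are not nginx commands).

```shell
#!/usr/bin/env bash
# Hypothetical helpers: comment out / restore every "server <ip>:..." line
# for one backend in an nginx config file. Assumes GNU sed (-r, -i).

drain_backend() {
  local ip=$1 conf=$2
  # Escape the dots so the IP address is matched literally.
  local re=${ip//./\\.}
  # Only lines that are not already commented match, so this is idempotent.
  sed -r -i "s%^([[:space:]]*)(server[[:space:]]+${re}:)%\1# \2%" "$conf"
}

restore_backend() {
  local ip=$1 conf=$2
  local re=${ip//./\\.}
  sed -r -i "s%^([[:space:]]*)#[[:space:]]*(server[[:space:]]+${re}:)%\1\2%" "$conf"
}
```

A possible use is `drain_backend 192.168.50.126 /etc/nginx/nginx.conf`, followed by `nginx -t` and a restart, then `restore_backend` once the node has been upgraded.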

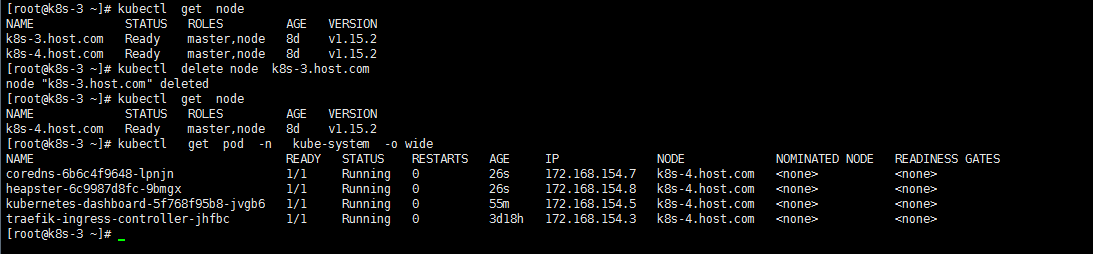

2. Remove the node from the cluster

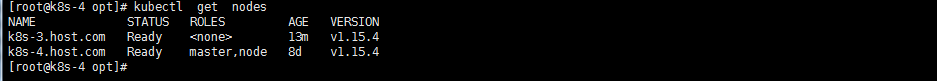

[root@k8s-3 ~]# kubectl get node

[root@k8s-3 ~]# kubectl delete node k8s-3.host.com

[root@k8s-3 ~]# kubectl get node

(See the screenshot above.)

3. Check whether CoreDNS is affected

[root@k8s-3 ~]# dig -t A kubernetes.default.svc.cluster.local. @10.168.0.2 +short

10.168.0.1

4. Upload the 1.15.4 release to the src directory and unpack it there

[root@k8s-3 ~]# cd /opt/src/

[root@k8s-3 src]# tar xf kubernetes-server-linux-amd64-v1.15.4.tar.gz

[root@k8s-3 src]# mv kubernetes kubernetes-v1.15.4

[root@k8s-3 src]# mv kubernetes-v1.15.4/ /opt/

[root@k8s-3 opt]# cd /opt/kubernetes-v1.15.4/

[root@k8s-3 kubernetes-v1.15.4]# rm -rf kubernetes-src.tar.gz

[root@k8s-3 ~]# cd /opt/kubernetes-v1.15.4/server/bin/

[root@k8s-3 bin]# rm -rf *.tar

[root@k8s-3 bin]# rm -rf *_tag

[root@k8s-3 bin]# mkdir conf

[root@k8s-3 bin]# mkdir cert

[root@k8s-3 bin]# cd conf/

[root@k8s-3 conf]# cp /opt/kubernetes/server/bin/conf/* .

[root@k8s-3 conf]# cd ../cert/

[root@k8s-3 cert]# cp /opt/kubernetes/server/bin/cert/* .

[root@k8s-3 cert]# cd ..

[root@k8s-3 bin]# cp /opt/kubernetes/server/bin/*.sh .

[root@k8s-3 opt]# cd /opt

[root@k8s-3 opt]# rm -rf kubernetes

[root@k8s-3 opt]# ln -s /opt/kubernetes-v1.15.4 /opt/kubernetes

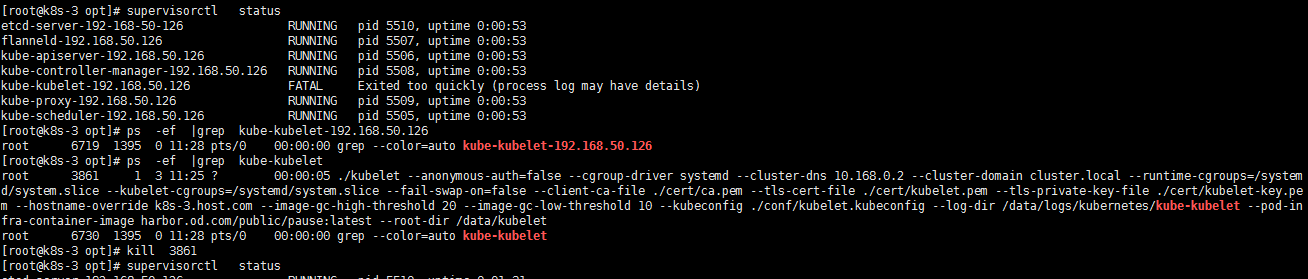

5. Restart supervisord.service

### Note: do not do this carelessly in production; restart the nodes one at a time ###

[root@k8s-3 opt]# systemctl restart supervisord.service

6. Check

[root@k8s-3 opt]# supervisorctl status

etcd-server-192-168-50-126 RUNNING pid 5510, uptime 0:02:26

flanneld-192.168.50.126 RUNNING pid 5507, uptime 0:02:26

kube-apiserver-192.168.50.126 RUNNING pid 5506, uptime 0:02:26

kube-controller-manager-192.168.50.126 RUNNING pid 5508, uptime 0:02:26

kube-kubelet-192.168.50.126 RUNNING pid 6874, uptime 0:00:34

kube-proxy-192.168.50.126 RUNNING pid 5509, uptime 0:02:26

kube-scheduler-192.168.50.126 RUNNING pid 5505, uptime 0:02:26

[root@k8s-3 opt]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-3.host.com Ready <none> 4m56s v1.15.4

k8s-4.host.com Ready master,node 8d v1.15.2

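7. If a service fails to start, the old process was not killed completely; kill it manually

8. Repeat the same steps on the k8s-4 node

1. Swap which backend is commented out in the load balancer configuration

2. Restart nginx once the swap is done

9. To roll back, delete the symlink and recreate it pointing at the old version, for example: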

[root@k8s-3 opt]# rm -rf kubernetes

[root@k8s-3 opt]# ln -s /opt/kubernetes-v1.15.2 /opt/kubernetes

[root@k8s-3 opt]# systemctl restart supervisord.service

[root@k8s-3 opt]# supervisorctl status
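Both the upgrade and the rollback come down to repointing one symlink at a versioned directory. A minimal sketch of that step, assuming a coreutils `ln` that supports `-n`; the function name `switch_release` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: repoint a symlink such as /opt/kubernetes at a
# versioned directory such as /opt/kubernetes-v1.15.4.

switch_release() {
  local target=$1 link=$2
  # Refuse to point the link at a directory that does not exist.
  [ -d "$target" ] || { echo "no such directory: $target" >&2; return 1; }
  # -s symbolic link, -f replace an existing link,
  # -n treat an existing link as a file instead of descending into it.
  ln -sfn "$target" "$link"
}
```

For example, `switch_release /opt/kubernetes-v1.15.2 /opt/kubernetes` followed by `systemctl restart supervisord.service` would be the rollback. If the link path is a real directory rather than a symlink, `ln` creates the link inside it, so check that case before relying on the helper.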

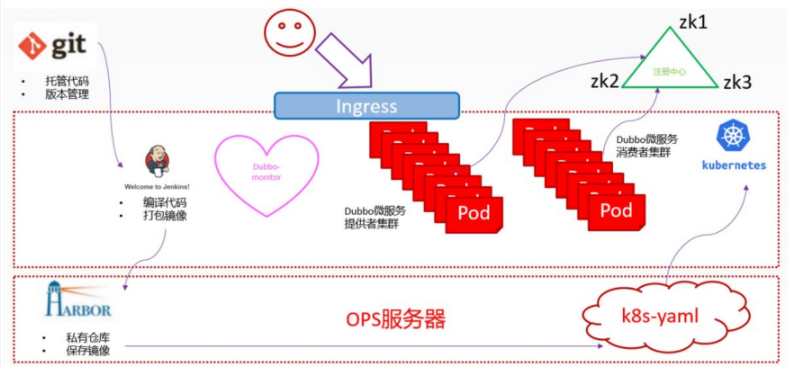

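Delivering Dubbo microservices to the Kubernetes cluster

- ZooKeeper is the registry for the Dubbo microservice cluster

- Its high availability is quorum-based, like that of the etcd cluster used by k8s

- It is written in Java and needs a JDK

1.2. Node plan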

| Hostname | Role | IP |
|---|---|---|
| k8s-1.host.com | k8s proxy node 1, zk1 | 192.168.50.215 |
| k8s-2.host.com | k8s proxy node 2, zk2 | 192.168.50.145 |
| k8s-3.host.com | k8s compute node 1, zk3 | 192.168.50.126 |
| k8s-4.host.com | k8s compute node 2, jenkins | 192.168.50.154 |
| k8s-5.host.com | k8s ops node (Docker registry) | 192.168.50.109 |

2. Deploy ZooKeeper

2.1. Install JDK 1.8 (on all three zk nodes: k8s-1, k8s-2, k8s-3)

[root@k8s-1 ~]# mkdir -p /opt/src

[root@k8s-1 ~]# cd /opt/src/

[root@k8s-1 src]# scp k8s-5:/data/k8s-yaml/software/k8s/jdk-8u221-linux-x64.tar.gz .

[root@k8s-1 src]# mkdir /usr/java

[root@k8s-1 src]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@k8s-1 src]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

Set the environment variables

[root@k8s-1 src]# vim /etc/profile

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

Source the profile and check

[root@k8s-1 src]# source /etc/profile

[root@k8s-1 src]# java -version

java version "1.8.0_221"

2.2. Install ZooKeeper (on all three nodes)

Official ZooKeeper site

2.2.1. Unpack and create a symlink

[root@k8s-1 src]# tar xf zookeeper-3.4.14.tar.gz -C /opt/

[root@k8s-1 src]# ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

2.2.2. Create the data and log directories

[root@k8s-1 opt]# mkdir -pv /data/zookeeper/data /data/zookeeper/logs

mkdir: created directory ‘/data’

mkdir: created directory ‘/data/zookeeper’

mkdir: created directory ‘/data/zookeeper/data’

mkdir: created directory ‘/data/zookeeper/logs’

2.2.3. Configure

// The configuration is identical on k8s-1, k8s-2, and k8s-3

[root@k8s-1 opt]# vim /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888

Set the myid of each node in the zk cluster

[root@k8s-1 src]# vim /data/zookeeper/data/myid

1

[root@k8s-2 src]# vim /data/zookeeper/data/myid

2

[root@k8s-3 src]# vim /data/zookeeper/data/myid

3
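Each node's myid has to equal the N of its own `server.N=` line in zoo.cfg. A minimal sketch that looks the id up instead of typing it by hand; the function name `myid_for` is hypothetical, and it assumes every server line uses the `server.N=host:port:port` form shown above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ZooKeeper myid that zoo.cfg assigns to a host.

myid_for() {
  local host=$1 cfg=$2
  # Escape the dots so the host name is matched literally.
  local re=${host//./\\.}
  # "server.2=zk2.od.com:2888:3888" prints 2 for host zk2.od.com.
  sed -n -E "s/^server\.([0-9]+)=${re}:.*/\1/p" "$cfg"
}
```

On k8s-1 this could be used as `myid_for zk1.od.com /opt/zookeeper/conf/zoo.cfg > /data/zookeeper/data/myid`. The function prints nothing when the host is not listed.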

2.2.4. Add the DNS records

[root@k8s-1 java]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111011 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.50.215

harbor A 192.168.50.109

k8s-yaml A 192.168.50.109

traefik A 192.168.50.10

dashboard A 192.168.50.10

zk1 A 192.168.50.215

zk2 A 192.168.50.145

zk3 A 192.168.50.126

2.2.5. Verify

[root@k8s-1 java]# dig -t A zk1.od.com @192.168.50.215 +short

192.168.50.215

2.2.6. Start the nodes one by one and check

Start

[root@k8s-1 java]# /opt/zookeeper/bin/zkServer.sh start

[root@k8s-2 java]# /opt/zookeeper/bin/zkServer.sh start

[root@k8s-3 java]# /opt/zookeeper/bin/zkServer.sh start

[root@k8s-1 java]# ps -ef |grep zo

2.2.7. Check the zk leader/follower roles

[root@k8s-1 opt]# zookeeper/bin/zkServer.sh status

3. Deploy Jenkins

3.1. Prepare the image

On k8s-5

[root@k8s-5 ~]# docker pull jenkins/jenkins:2.190.3

[root@k8s-5 ~]# docker images |grep jenkins

[root@k8s-5 ~]# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.190.3

[root@k8s-5 ~]# docker push harbor.od.com/public/jenkins:v2.190.3

3.2. Build a custom image

3.2.1. Generate an SSH key pair

[root@k8s-5 ~]# ssh-keygen -t rsa -b 2048 -C "498577310@163.com" -N "" -f /root/.ssh/id_rsa

Use your own email address here

[root@k8s-5 ~]# cd .ssh/

[root@k8s-5 .ssh]# ssh-agent bash

[root@k8s-5 .ssh]# ssh-add ~/.ssh/id_rsa

3.2.2. Prepare the get-docker.sh file

[root@k8s-5 ~]# curl -fsSL get.docker.com -o get-docker.sh

[root@k8s-5 ~]# chmod +x get-docker.sh

3.2.3. Prepare the config.json file

[root@k8s-5 ~]# cp /root/.docker/config.json .

[root@k8s-5 ~]# cat /root/.docker/config.json

{

"auths": {

"harbor.od.com": {

"auth": "YWRtaW46SGFyYm9yMTIzNDU="

}

},

"HttpHeaders": {

"User-Agent": "Docker-Client/19.03.4 (linux)"

}

}
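The `auth` value in config.json is just the base64 encoding of `user:password`; it is encoded, not encrypted, so anyone who can read the file (or an image that contains it) can recover the Harbor password. A small sketch, assuming the coreutils `base64` command; the function name `docker_auth` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the "auth" string Docker stores for a registry login.

docker_auth() {
  local user=$1 password=$2
  # No trailing newline may be encoded, hence printf instead of echo.
  printf '%s:%s' "$user" "$password" | base64
}

# Decoding works the same way in reverse:
#   printf '%s' "$auth" | base64 -d
```

Because the Dockerfile in the next step copies this file into the Jenkins image, the image should be kept in a private project.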

3.2.4. Create the directory and prepare the Dockerfile

[root@k8s-5 ~]# mkdir /data/dockerfile/jenkins -p

[root@k8s-5 ~]# cd /data/dockerfile/jenkins/

[root@k8s-5 jenkins]# vi Dockerfile

FROM harbor.od.com/public/jenkins:v2.190.3

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

ADD get-docker.sh /get-docker.sh

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh

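- Run the container as root

- Set the time zone inside the container

- Add the generated SSH private key (needed to pull code with git; the matching public key is configured on the git server)

- Add the config file used to log in to the self-hosted Harbor registry

- Change the SSH client configuration to skip host key verification

- Install a Docker client. If the build fails, append --mirror=Aliyun after get-docker.sh

3.3. Log in to Harbor and create the infra project

3.4. Build the custom image

Copy the files to /data/dockerfile/jenkins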

[root@k8s-5 ~]# cp config.json get-docker.sh /data/dockerfile/jenkins/

[root@k8s-5 jenkins]# cd /root/.ssh/

[root@k8s-5 .ssh]# cp id_rsa /data/dockerfile/jenkins/

3.4.1. Run the build

[root@k8s-5 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.190.3

Sending build context to Docker daemon 20.48kB

Step 1/7 : FROM harbor.od.com/public/jenkins:v2.190.3

---> 22b8b9a84dbe

Step 2/7 : USER root

---> Using cache

---> 49b57acf3d53

Successfully built aa06799f5fac

Successfully tagged harbor.od.com/infra/jenkins:v2.190.3

3.4.2. Push the image to the registry

[root@k8s-5 jenkins]# docker push harbor.od.com/infra/jenkins:v2.190.3

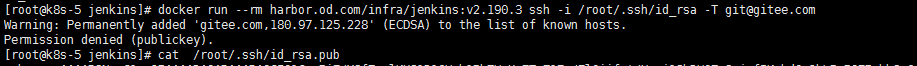

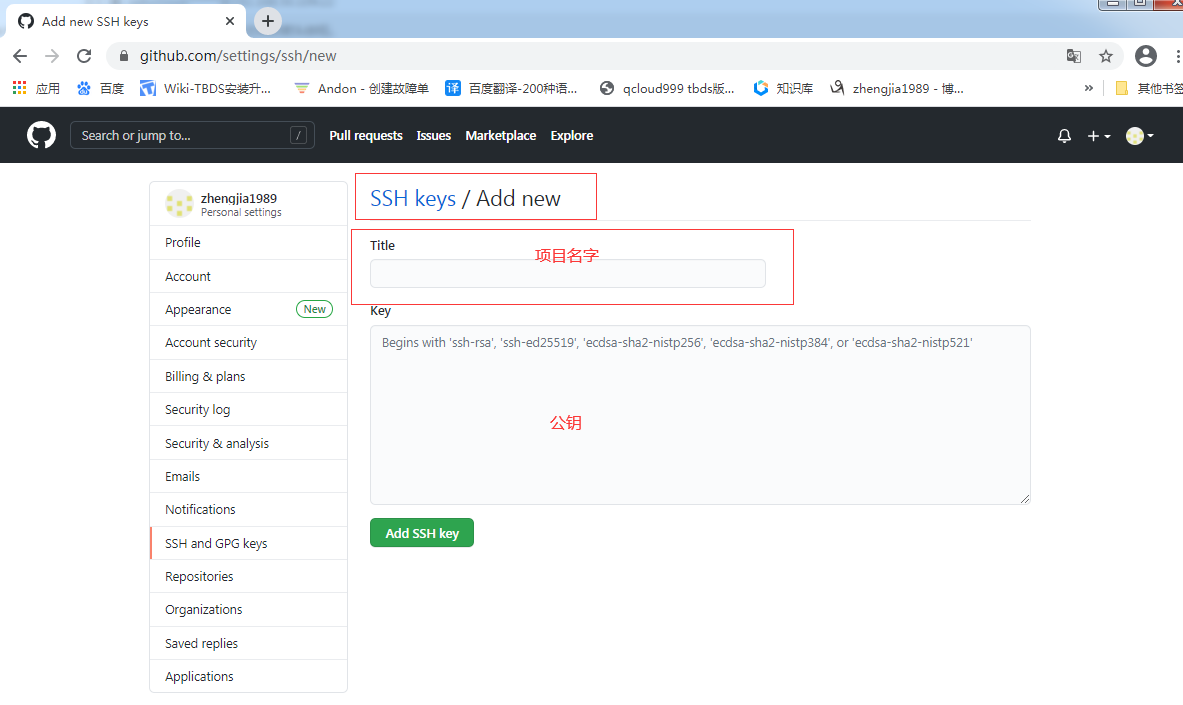

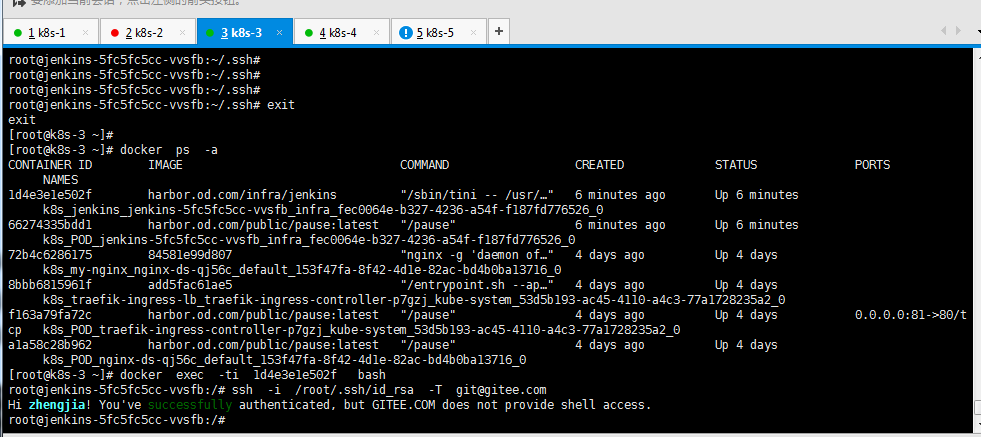

3.4.3. Upload the public key to gitee and test whether this image can connect

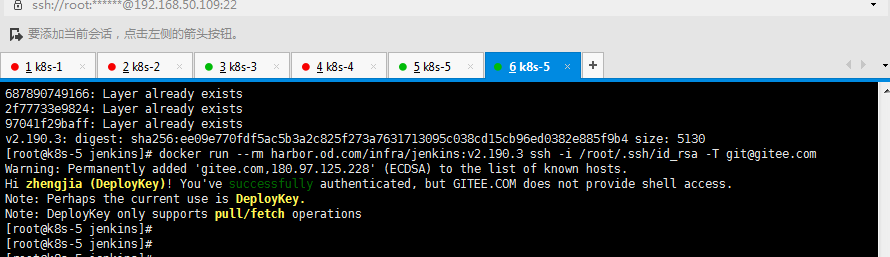

[root@k8s-5 jenkins]# docker run --rm harbor.od.com/infra/jenkins:v2.190.3 ssh -i /root/.ssh/id_rsa -T git@gitee.com

登录失败是因为github没有把公钥做好

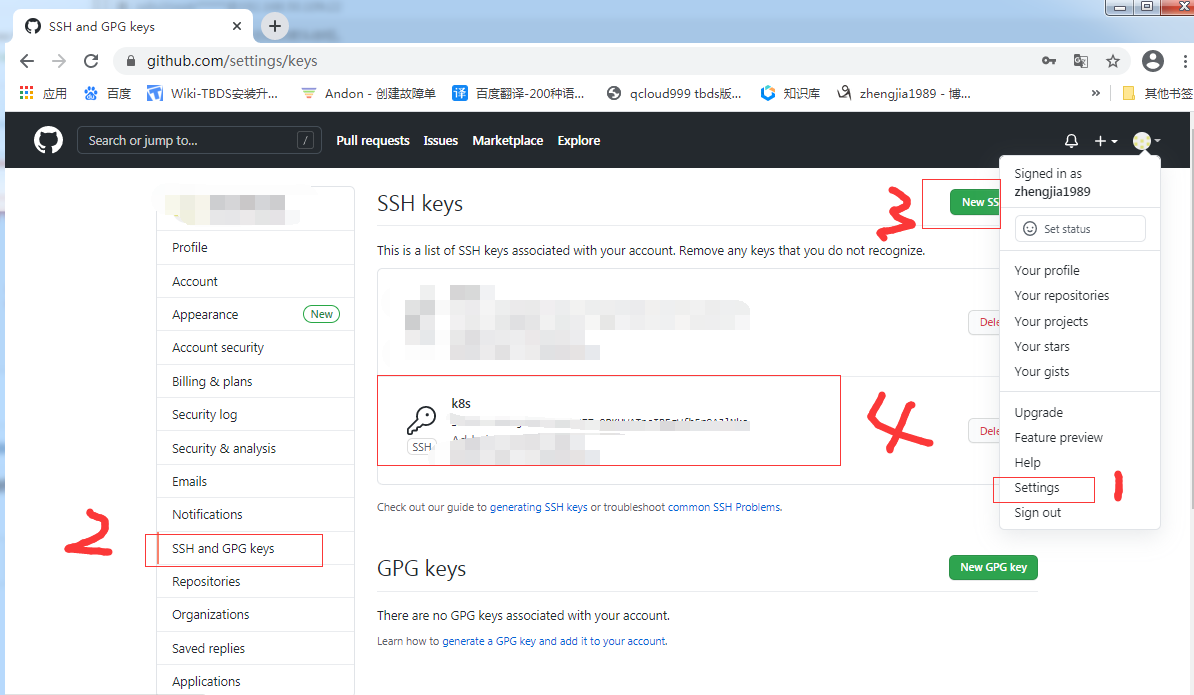

3.3.4登录git验证密钥对

3.3.5再次验证

公钥上传到gitee测试此镜像是否可以成功连接

[root@k8s-5 ~]# cd /data/harbor/

[root@k8s-5 harbor]# docker run --rm harbor.od.com/infra/jenkins:v2.190.3 ssh -i /root/.ssh/id_rsa -T git@gitee.com

3.5. Create the Kubernetes namespace and create the secret in it

[root@k8s-3 ~]# kubectl create namespace infra

[root@k8s-3 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra

3.6. Push the image

[root@k8s-5 jenkins]# docker push harbor.od.com/infra/jenkins:v2.190.3

3.7. Prepare shared storage

On the ops host k8s-5 and on every compute node

3.7.1. Install nfs-utils (run on k8s-3, k8s-4, and k8s-5)

[root@k8s-5 jenkins]# yum install nfs-utils -y

3.7.2. Configure the NFS service

On the ops host k8s-5

[root@k8s-5 jenkins]# vi /etc/exports

/data/nfs-volume 192.168.50.0/24(rw,no_root_squash)

3.7.3. Start the NFS service

On the ops host k8s-5

[root@k8s-5 ~]# mkdir -p /data/nfs-volume

[root@k8s-5 ~]# systemctl start nfs

[root@k8s-5 ~]# systemctl enable nfs

3.8. Prepare the resource manifests

On the ops host k8s-5

[root@k8s-5 ~]# cd /data/k8s-yaml/

[root@k8s-5 k8s-yaml]# mkdir /data/k8s-yaml/jenkins && mkdir /data/nfs-volume/jenkins_home && cd jenkins

3.8.1. Create the Deployment manifest

[root@k8s-5 jenkins]# vim dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: k8s-5
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.190.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

3.8.2. Create svc.yaml

[root@k8s-5 jenkins]# vim svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

3.8.3. Create ingress.yaml

[root@k8s-5 jenkins]# vim ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

3.9. Apply the resource manifests

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/dp.yaml

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/svc.yaml

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/ingress.yaml

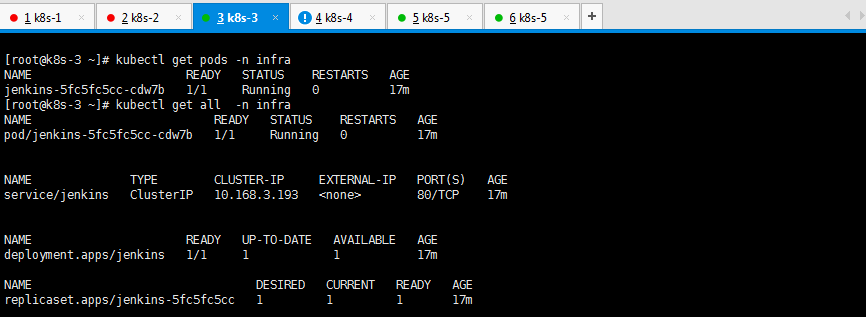

3.9.1. Verify

[root@k8s-3 ~]# kubectl get pods -n infra

[root@k8s-3 ~]# kubectl get all -n infra

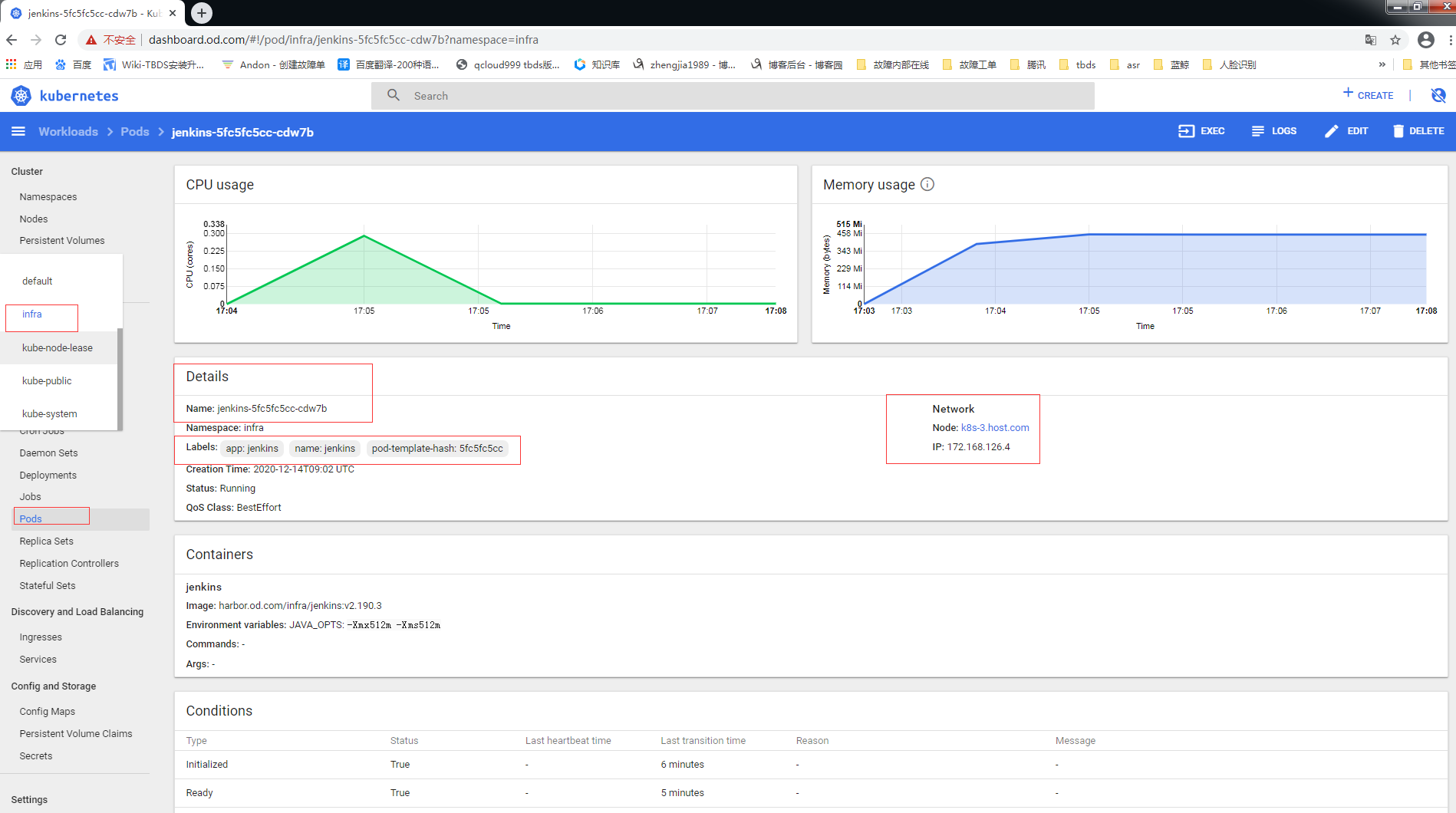

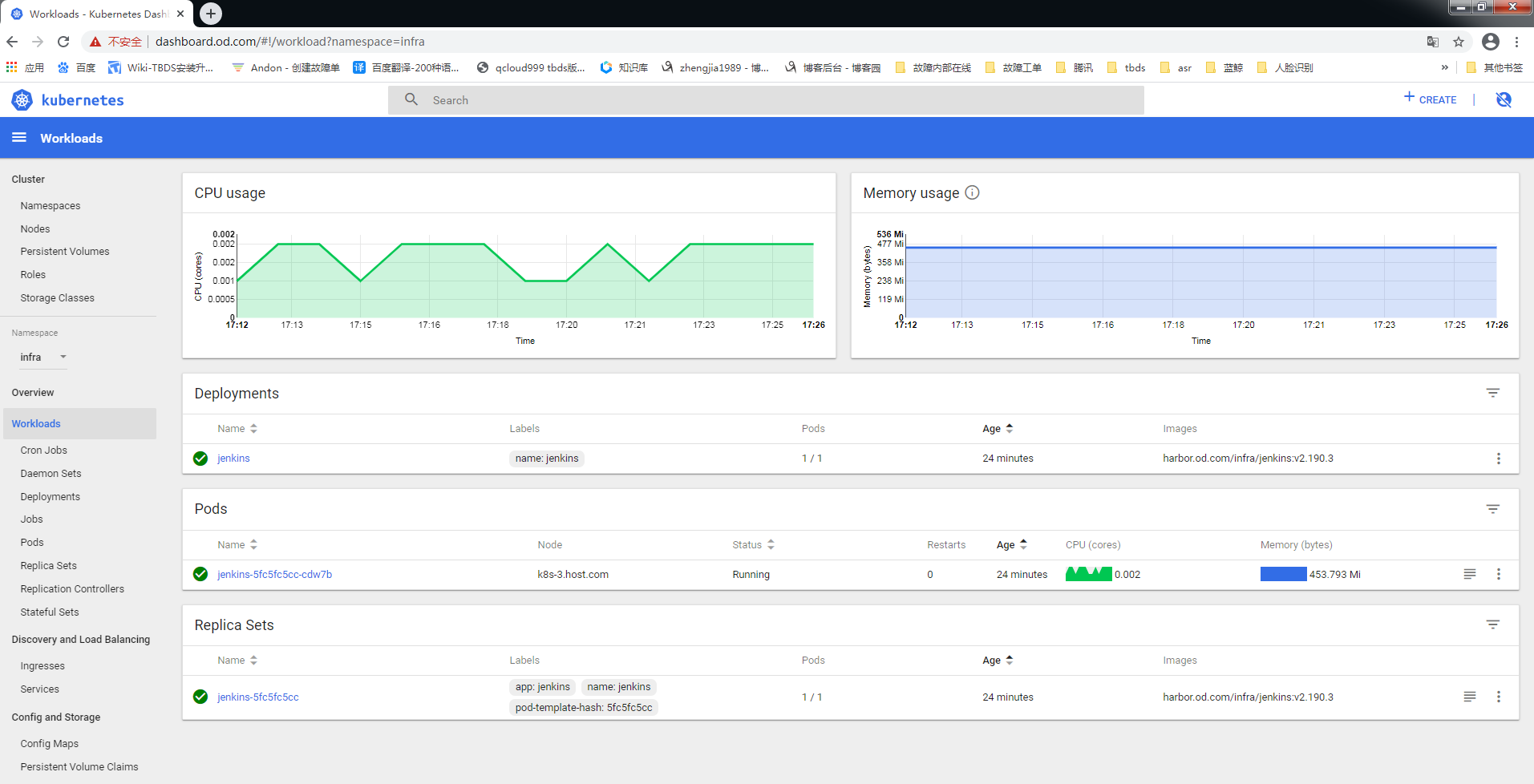

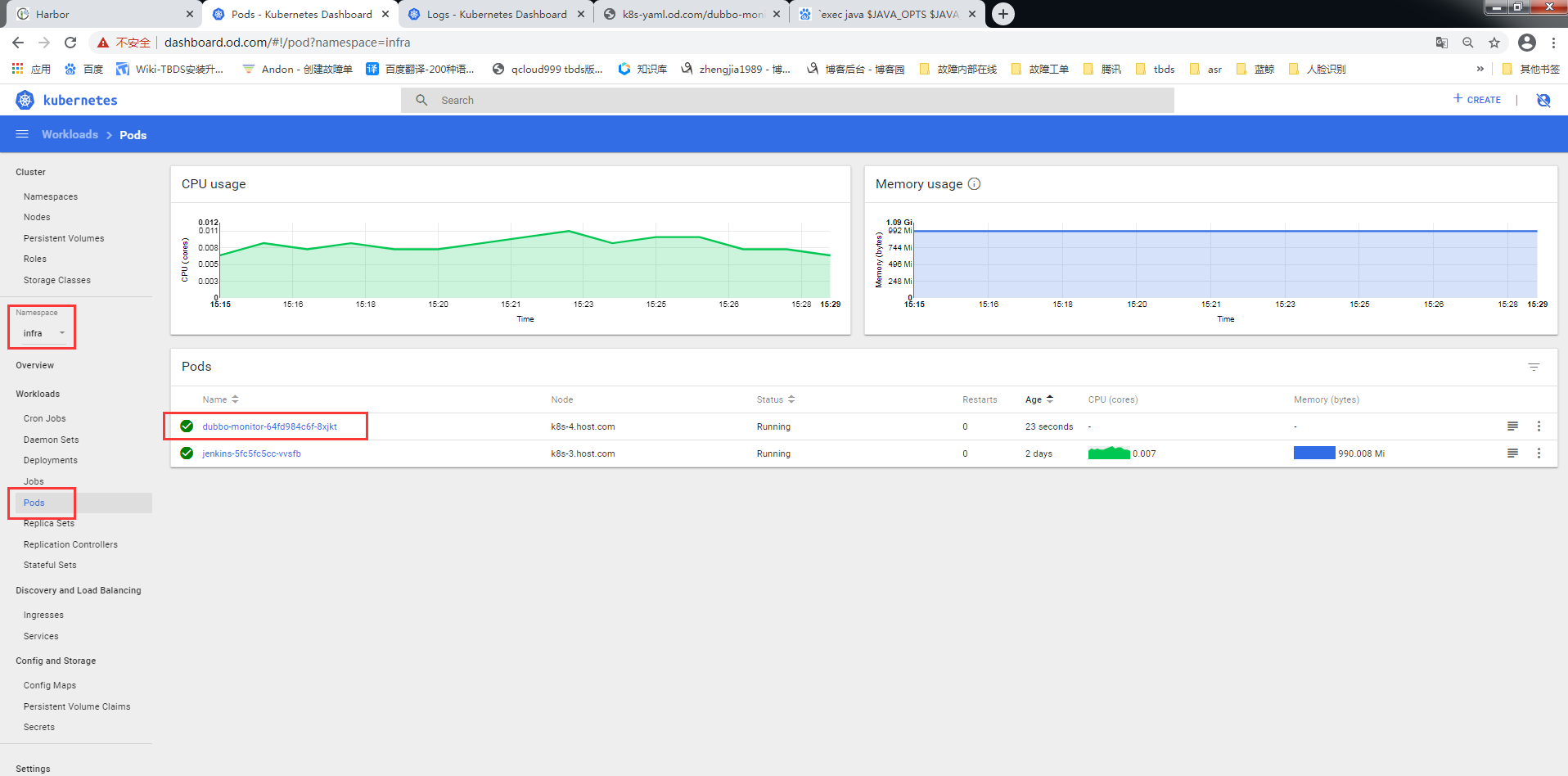

3.9.2. Log in to https://dashboard.od.com/ and verify in the UI

3.10. Add the DNS record

[root@k8s-1 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111012 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.50.215

harbor A 192.168.50.109

k8s-yaml A 192.168.50.109

traefik A 192.168.50.10

dashboard A 192.168.50.10

zk1 A 192.168.50.215

zk2 A 192.168.50.145

zk3 A 192.168.50.126

jenkins A 192.168.50.10

3.10.1. Restart named

[root@k8s-1 ~]# systemctl restart named

3.10.2. Check

[root@k8s-1 ~]# dig -t A jenkins.od.com @192.168.50.215 +short

192.168.50.10
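Every edit to od.com.zone in this walkthrough also raises the SOA serial by one (2019111011, 2019111012, and so on). A sketch that automates the increment, assuming the serial sits on a line ending in `; serial` as in the zone file above; the function name `bump_serial` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: increment the SOA serial of a BIND zone file in place.

bump_serial() {
  local zone=$1 tmp rc
  tmp=$(mktemp) || return 1
  # On the first line that carries the "; serial" comment, replace the
  # first run of digits with its value plus one; copy all other lines.
  awk '!done && /;[ \t]*serial/ {
         sub(/[0-9]+/, sprintf("%d", $1 + 1))
         done = 1
       }
       { print }' "$zone" > "$tmp" && cat "$tmp" > "$zone"
  rc=$?
  rm -f "$tmp"
  return $rc
}
```

Writing the result back with `cat` keeps the owner and permissions of the zone file. After `bump_serial /var/named/od.com.zone`, restart named as shown above.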

3.10.3网页访问

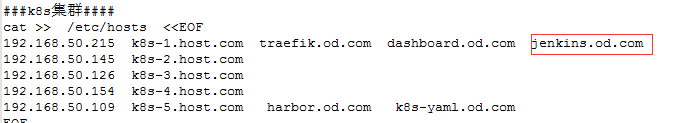

1.本地hosts解析

2.访问http://jenkins.od.com/login?from=%2F

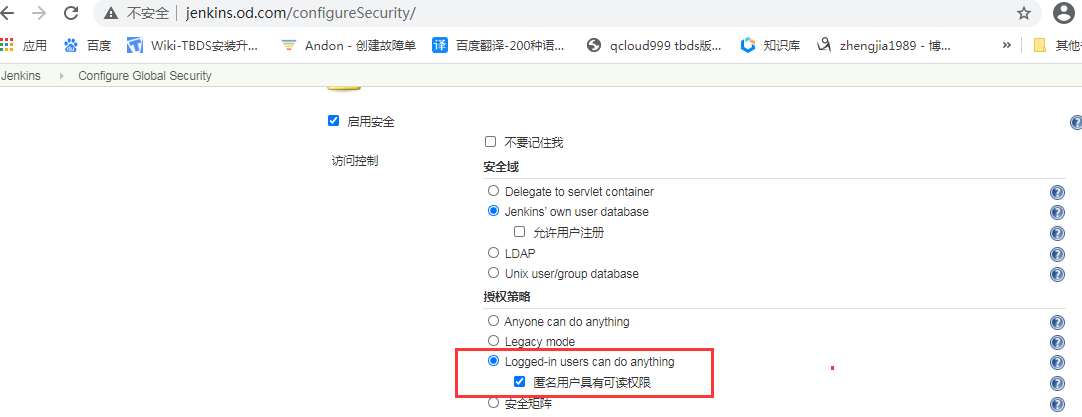

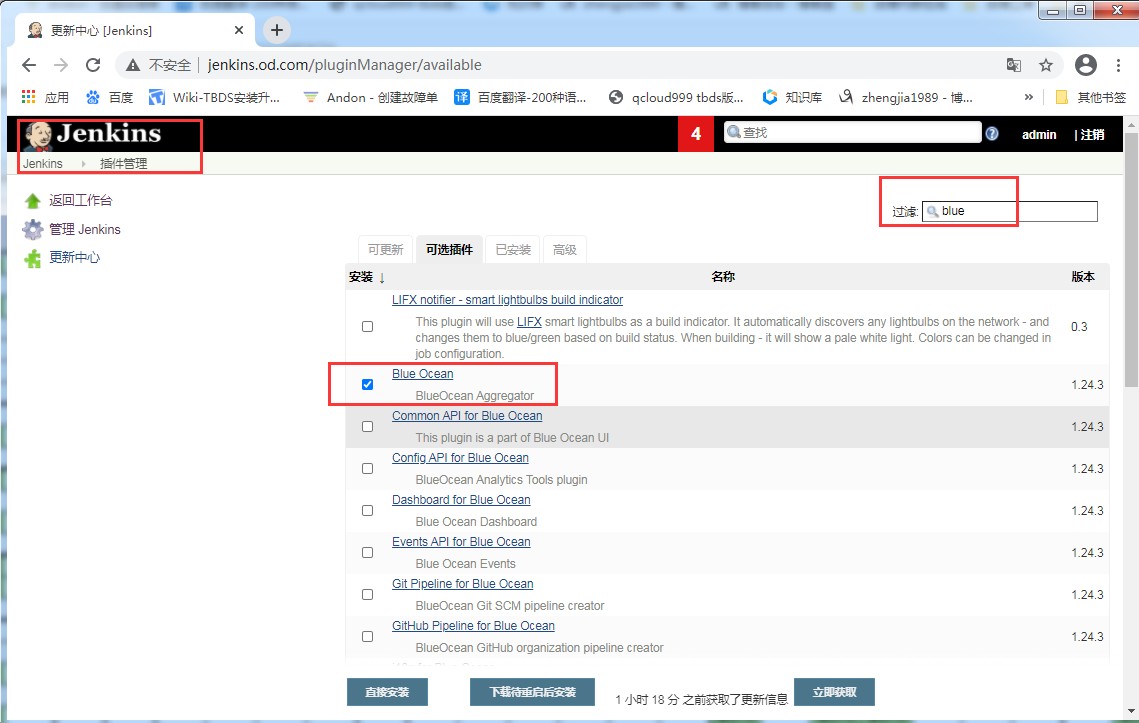

3.12.页面配置jenkins

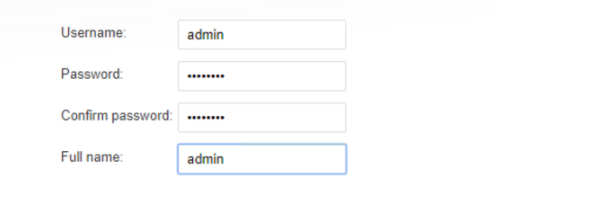

3.12.1.配置用户名密码

用户名:admin 密码:admin123 //后续依赖此密码,请务必设置此密码

3.12.2.安装好流水线插件Blue-Ocean

注意安装插件慢的话可以设置清华大学加速

k8s-5上

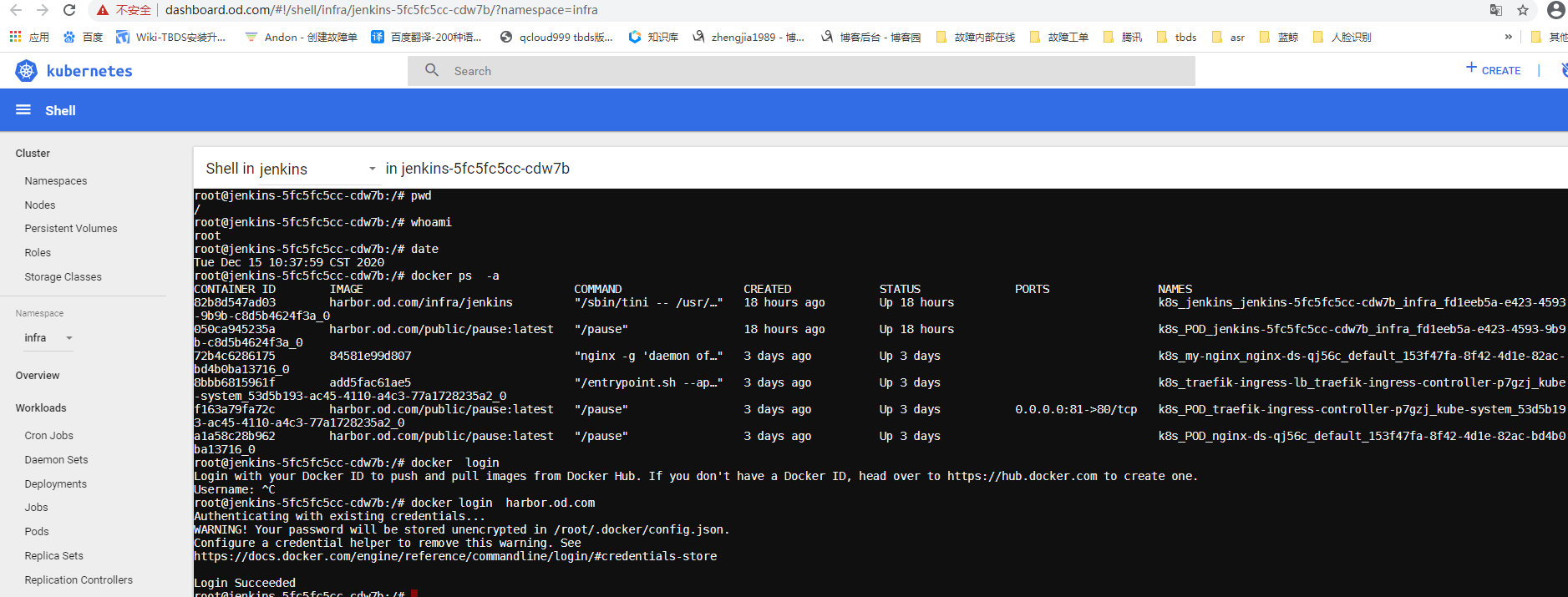

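3.11.3. Check the Docker client inside the Jenkins container

Verify the current user and the time zone

Verify that Jenkins works and can reach the registry

3.11.4. Check the SSH key inside the Jenkins container

3.12. Deploy Maven

Java build tooling over the years: javac --> ant --> maven --> Gradle

Deploy the binary release on the ops host k8s-5; this walkthrough uses maven-3.6.1

The mvn command is a shell script; to build with JDK 7, edit the script

3.12.1. Download the package

Official Maven download location

https://archive.apache.org/dist/maven/maven-3

3.12.2. Create the directory and unpack

The 8u232 in the directory name is the JDK version of the Jenkins inside the Docker container; follow this naming exactly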

[root@k8s-5 k8s]# cp apache-maven-3.6.1-bin.tar.gz /opt/src/

[root@k8s-5 k8s]# cd /opt/src/

[root@k8s-5 src]# mkdir /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@k8s-5 src]# tar xf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@k8s-5 src]# cd /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@k8s-5 maven-3.6.1-8u232]# mv apache-maven-3.6.1/ ../ && mv ../apache-maven-3.6.1/* .

[root@k8s-5 maven-3.6.1-8u232]# ll

total 40

drwxr-xr-x 2 root root 4096 Dec 15 17:42 bin

drwxr-xr-x 2 root root 4096 Dec 15 17:42 boot

drwxr-xr-x 3 501 games 4096 Apr 5 2019 conf

drwxr-xr-x 4 501 games 4096 Dec 15 17:42 lib

-rw-r--r-- 1 501 games 13437 Apr 5 2019 LICENSE

-rw-r--r-- 1 501 games 182 Apr 5 2019 NOTICE

-rw-r--r-- 1 501 games 2533 Apr 5 2019 README.txt

3.12.3. Point settings.xml at a mirror in China

[root@k8s-5 maven-3.6.1-8u232]# vim /data/nfs-volume/jenkins_home/maven-3.6.1-8u232/conf/settings.xml +151

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>*</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

3.13. Build the Jenkins image

3.13.1. Load the image and push it to the Harbor registry

[root@k8s-5 ~]# cd /data/dockerfile/jenkins/

[root@k8s-5 jenkins]# cp /data/k8s-yaml/software/k8s/jenkins-v2.176.2-with-docker.tar .

[root@k8s-5 jenkins]# ll

total 966216

-rw------- 1 root root 152 Dec 14 12:28 config.json

-rw-r--r-- 1 root root 365 Dec 15 15:54 Dockerfile

-rwxr-xr-x 1 root root 13857 Dec 14 12:28 get-docker.sh

-rw------- 1 root root 1679 Dec 15 11:58 id_rsa

-rw-r--r-- 1 root root 989369856 Dec 15 17:53 jenkins-v2.176.2-with-docker.tar

drwxr-xr-x 3 root root 4096 Dec 15 11:05 k8s

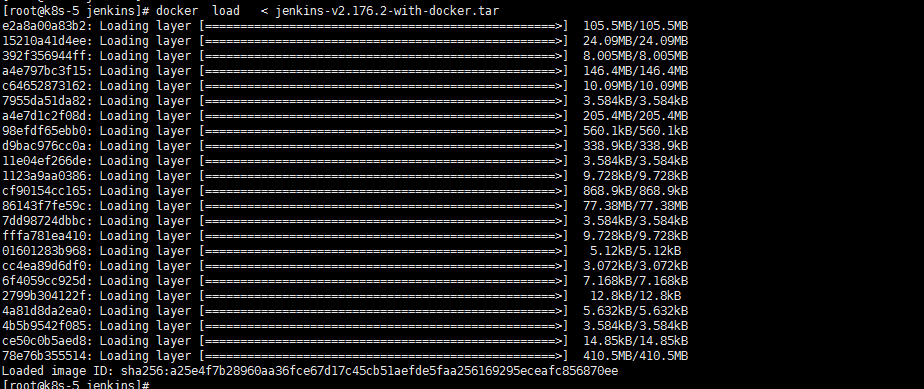

[root@k8s-5 jenkins]# docker load < jenkins-v2.176.2-with-docker.tar

3.13.2. Check that the image was loaded

[root@k8s-5 jenkins]# docker images

[root@k8s-5 jenkins]# docker images |grep none

<none> <none> a25e4f7b2896 16 months ago 977MB

3.13.3. Tag and push

[root@k8s-5 jenkins]# docker tag a25e4f7b2896 harbor.od.com/public/jenkins:v2.176.2

[root@k8s-5 jenkins]# docker push harbor.od.com/public/jenkins:v2.176.2

3.13.4. Back up the Dockerfile and edit it

[root@k8s-5 jenkins]# cp Dockerfile Dockerfile.BAKUP

[root@k8s-5 jenkins]# vim Dockerfile

FROM harbor.od.com/public/jenkins:v2.176.2

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh

3.13.5. Verify

## Note: a failed build may be a network problem; retry a few times

[root@k8s-5 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.176.2

4. Build the base image for the Dubbo microservices

On the ops host k8s-5

4.1. Prepare the JRE base image

[root@k8s-5 ~]# cd /data/nfs-volume/jenkins_home

[root@k8s-5 jenkins_home]# docker pull docker.io/stanleyws/jre7:7u80 ## Java 7

[root@k8s-5 jenkins_home]# docker pull docker.io/stanleyws/jre8:8u112 ## Java 8

[root@k8s-5 ~]# docker images |grep jre

stanleyws/jre8 8u112 fa3a085d6ef1 3 years ago 363MB

stanleyws/jre7 7u80 cc2ddfc866c2 3 years ago 299MB

[root@k8s-5 ~]# docker tag fa3a085d6ef1 harbor.od.com/public/jre:8u112

[root@k8s-5 ~]# docker tag cc2ddfc866c2 harbor.od.com/public/jre:7u80

[root@k8s-5 ~]# docker push harbor.od.com/public/jre:8u112

[root@k8s-5 ~]# docker push harbor.od.com/public/jre:7u80

4.2. Prepare the java-agent jar

[root@k8s-5 jre8]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

4.3. Write a custom Dockerfile

4.3.1. Create the directory

[root@k8s-5 ~]# cd /data/dockerfile/

[root@k8s-5 dockerfile]# mkdir jre8

[root@k8s-5 dockerfile]# cd jre8/

4.3.2. Write the Dockerfile

[root@k8s-5 jre8]# vim Dockerfile

FROM harbor.od.com/public/jre:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/

WORKDIR /opt/project_dir

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

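**config.yml: the Prometheus monitoring match rules**

**jmx_javaagent jar: the Java agent that collects JVM metrics**

**entrypoint.sh: the default startup script that Docker runs**

4.3.3. Prepare config.yml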

[root@k8s-5 jre8]# vim config.yml

---

rules:

- pattern: '.*'

4.3.4.entrypoint.sh

[root@k8s-5 jre8]# vim entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}

[root@k8s-5 jre8]# chmod +x entrypoint.sh

[root@k8s-5 jre8]# ll

total 372

-rw-r--r-- 1 root root 29 Dec 16 16:26 config.yml

-rw-r--r-- 1 root root 297 Dec 16 17:11 Dockerfile

-rwxr-xr-x 1 root root 234 Dec 16 16:27 entrypoint.sh

-rw-r--r-- 1 root root 367417 May 10 2018 jmx_javaagent-0.3.1.jar
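The `${M_PORT:-"12346"}` expansion in entrypoint.sh means the JMX exporter listens on port 12346 unless the container sets M_PORT. The sketch below rebuilds the same option string so the default can be checked outside a container. It is illustrative only: the function name `build_m_opts` is hypothetical, the IP address is passed in instead of calling `hostname -i`, and it uses bash's `local`, whereas the original script targets /bin/sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the M_OPTS line of entrypoint.sh.

build_m_opts() {
  local ip=$1
  # ${M_PORT:-12346} falls back to 12346 when M_PORT is unset or empty.
  printf '%s' "-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=${ip}:${M_PORT:-12346}:/opt/prom/config.yml"
}
```

In a Deployment, the port could then be changed by adding an `M_PORT` entry to the container's `env` list next to `JAR_BALL`.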

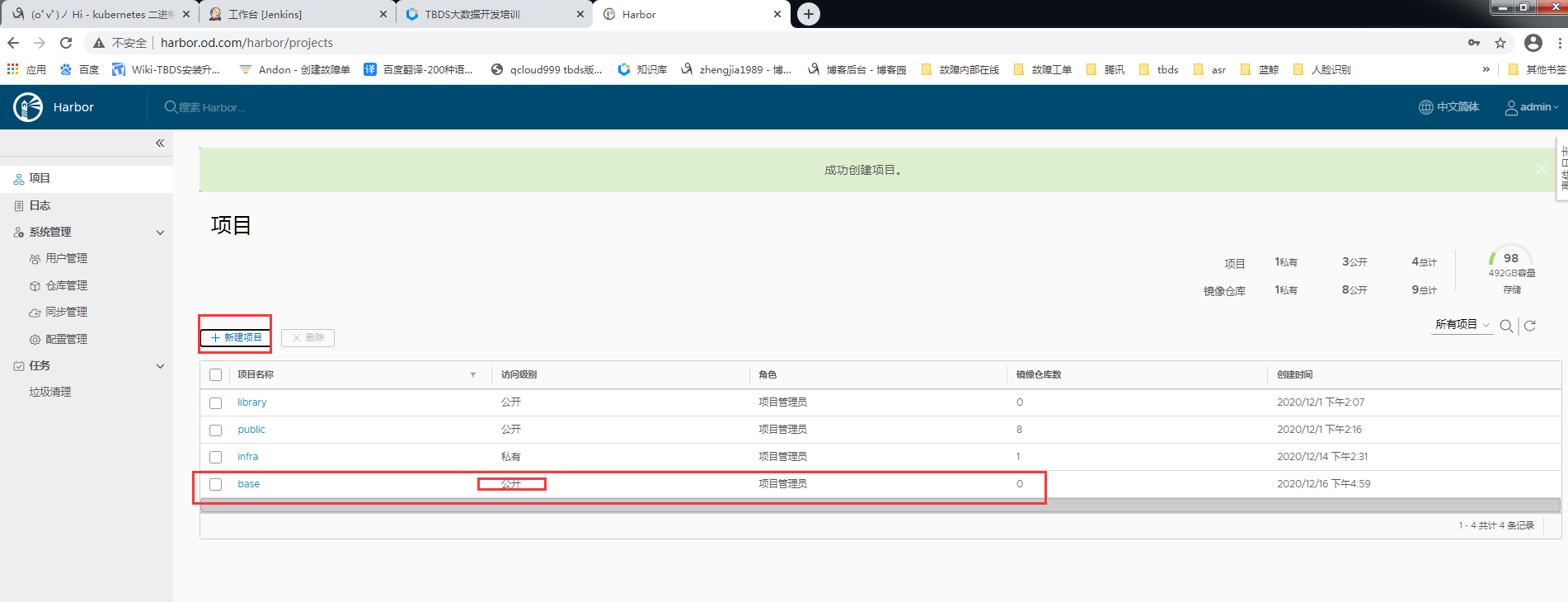

4.3.5. Create the base project in Harbor

4.3.6. Build the Dubbo base image and push it to the Harbor registry

[root@k8s-5 jre8]# docker build . -t harbor.od.com/base/jre8:8u112

[root@k8s-5 jre8]# docker push harbor.od.com/base/jre8:8u112

5. Deliver the Dubbo microservices to the Kubernetes cluster

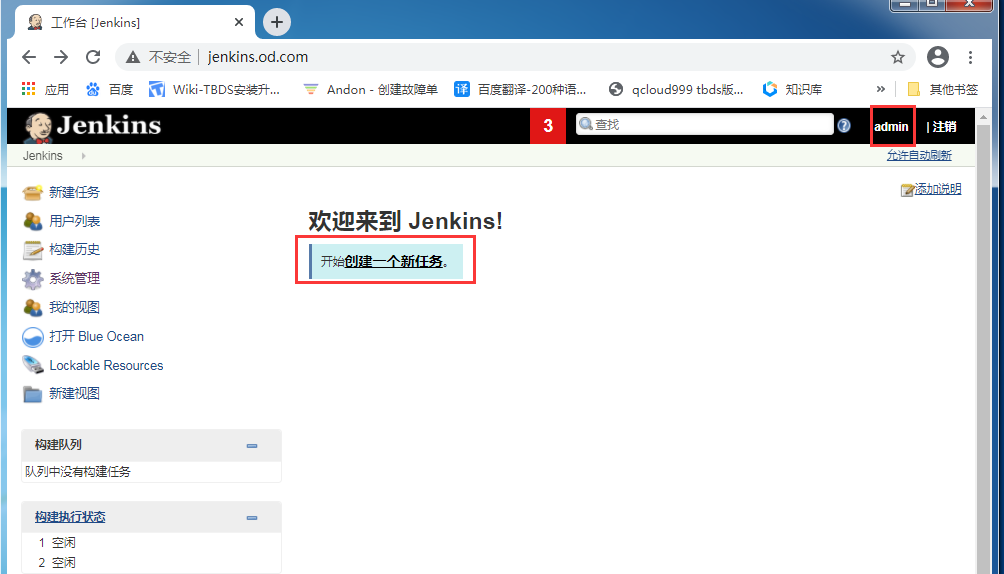

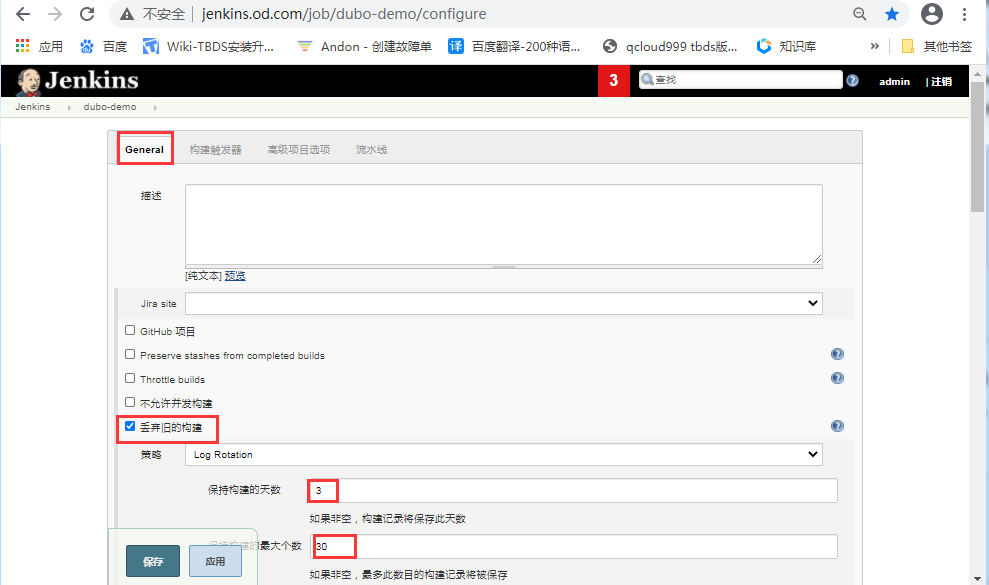

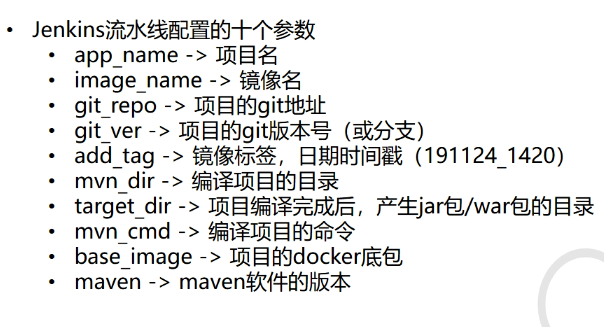

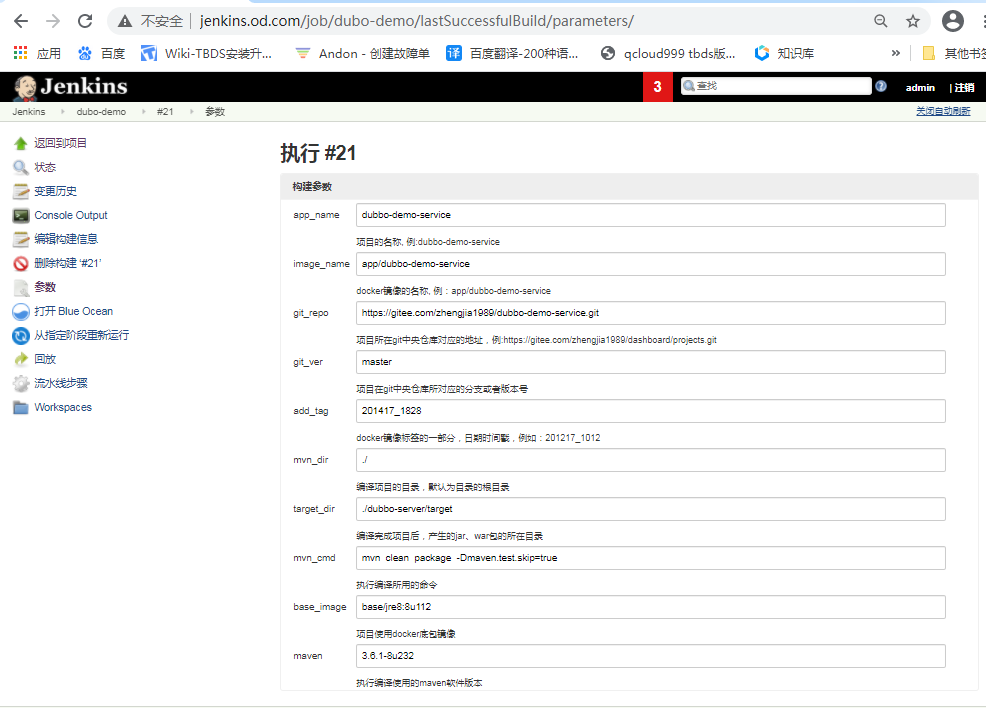

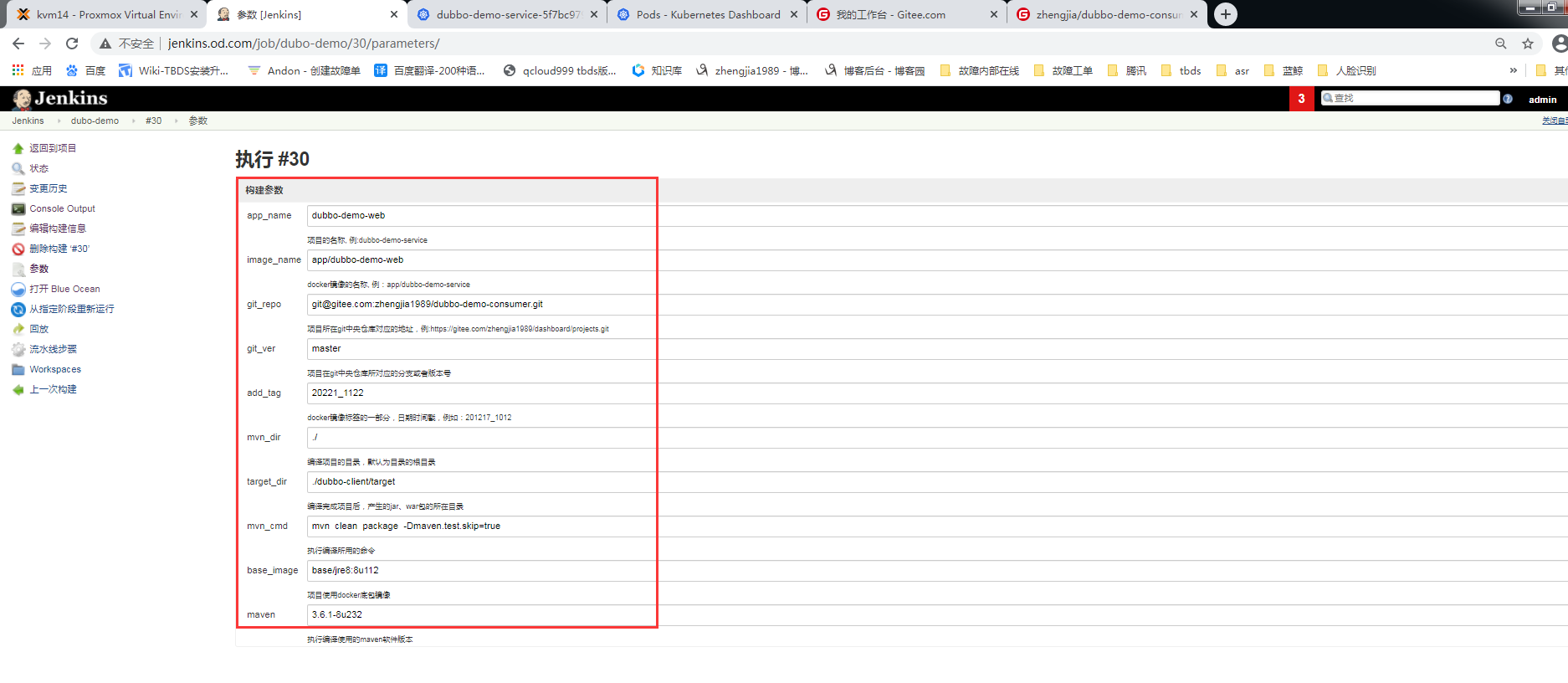

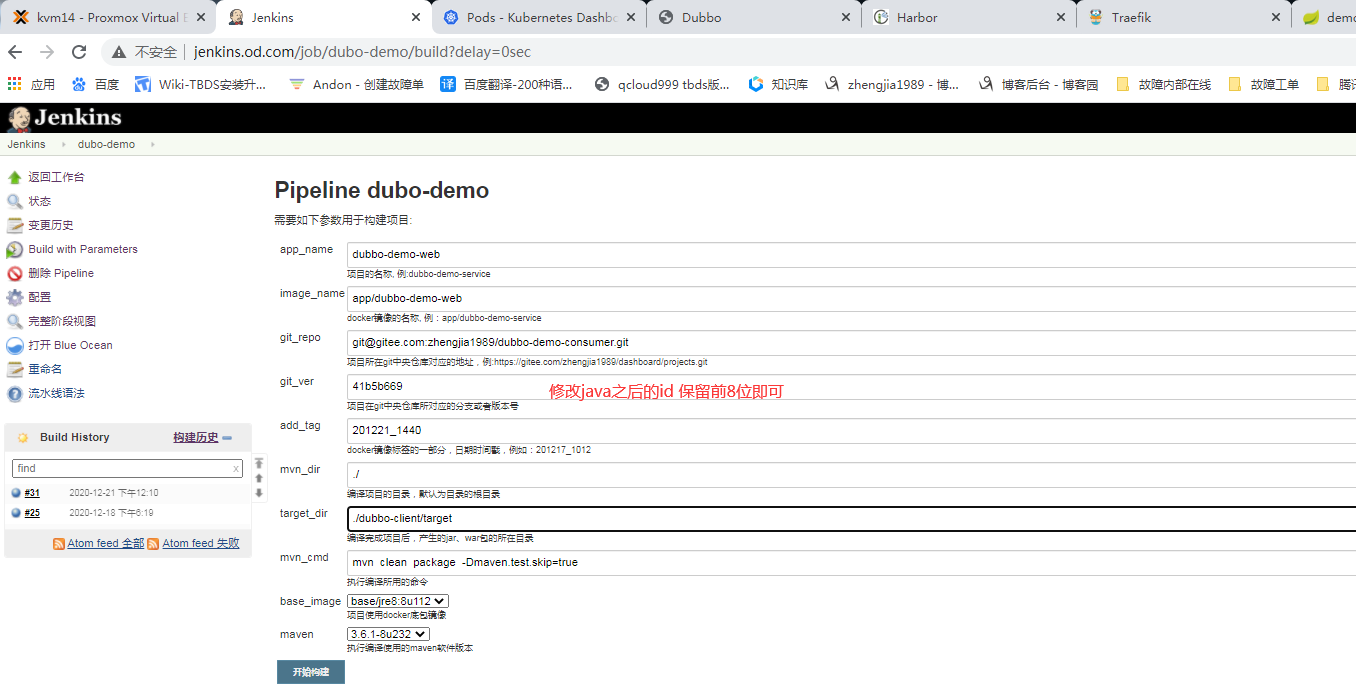

5.1. Configure a new pipeline job

Add the build parameters:

Log in to Jenkins --> New Item --> item name: dubbo-demo --> Pipeline --> OK

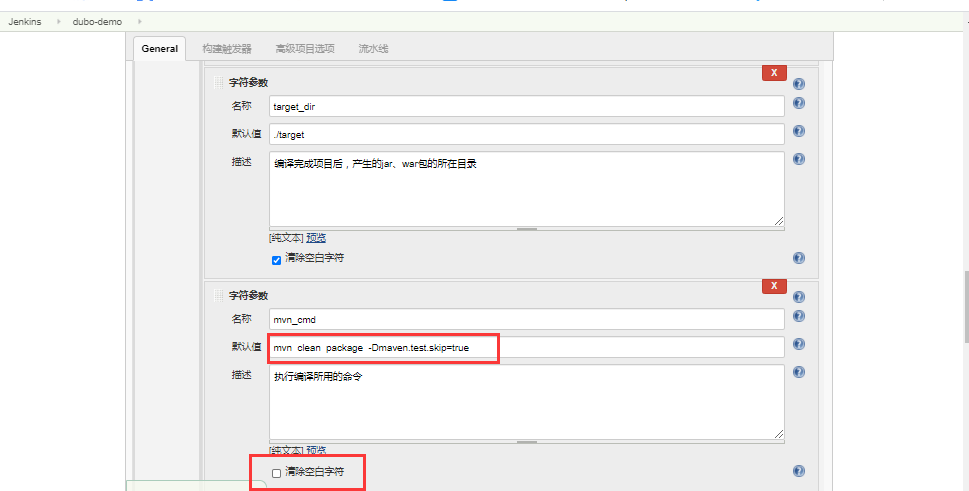

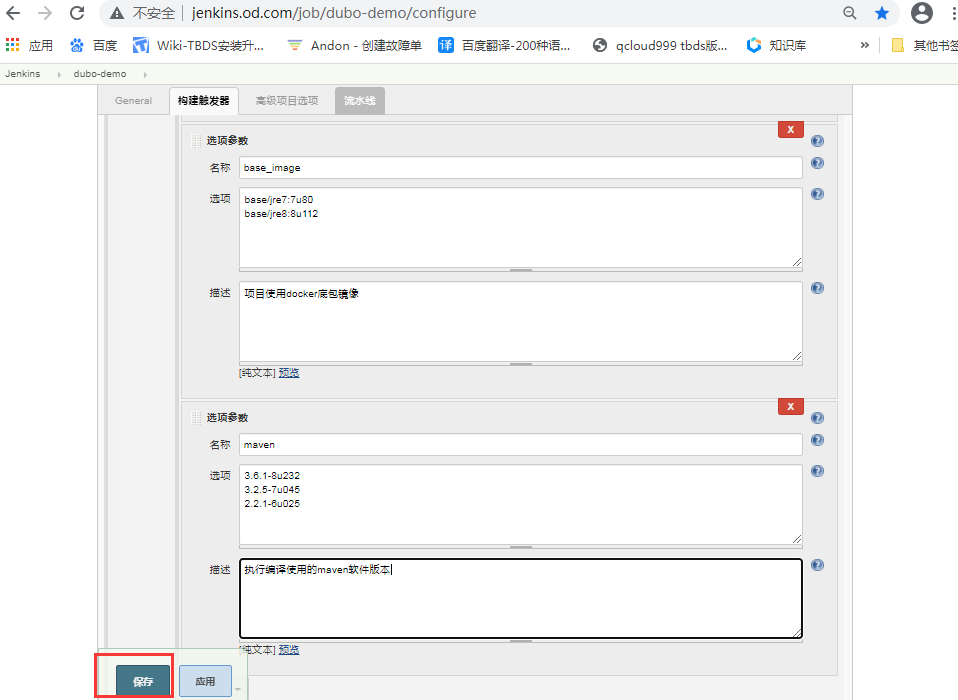

5.2. Configure the String Parameters (12)

5.3. Add the Choice Parameters (2)


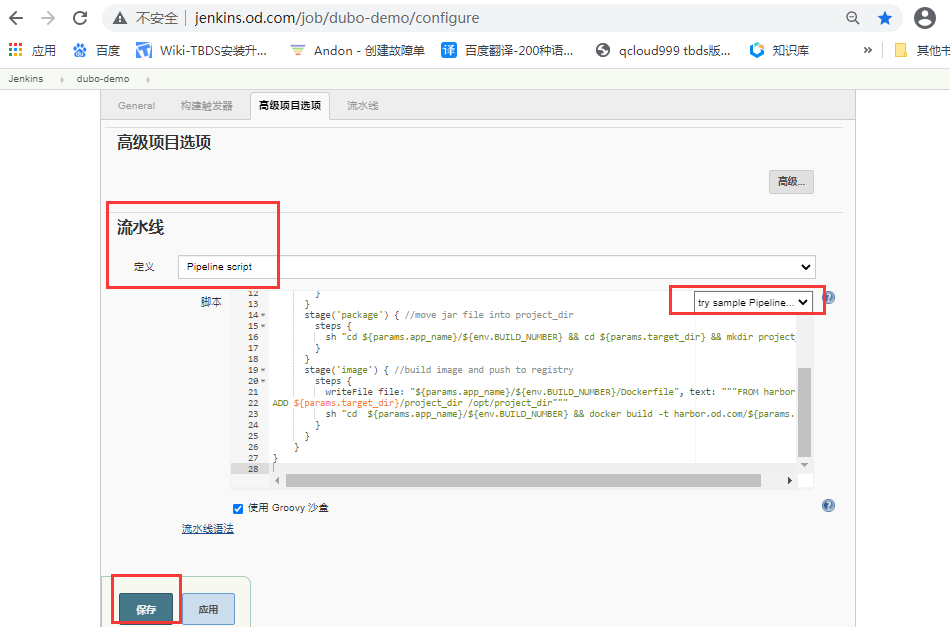

5.4.Pipeline Script

pipeline {

agent any

stages {

stage('pull') { //get project code from repo

steps {

sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"

}

}

stage('build') { //exec mvn cmd

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"

}

}

stage('package') { //move jar file into project_dir

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"

}

}

stage('image') { //build image and push to registry

steps {

writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}

ADD ${params.target_dir}/project_dir /opt/project_dir"""

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"

}

}

}

}
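The image stage of the pipeline composes the image reference as `harbor.od.com/<image_name>:<git_ver>_<add_tag>`, and the Deployment manifests later in this walkthrough have to repeat exactly that string. A small sketch of the composition; the function name `image_ref` is hypothetical, and treating add_tag as a `date +%y%m%d_%H%M` timestamp is an assumption based on the tags used in this document:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the image reference the pipeline pushes.

image_ref() {
  local image_name=$1 git_ver=$2 add_tag=$3
  printf 'harbor.od.com/%s:%s_%s\n' "$image_name" "$git_ver" "$add_tag"
}

# Assumption: add_tag is a build timestamp such as the output of
#   date +%y%m%d_%H%M
```

For instance, `image_ref app/dubbo-demo-service master "$(date +%y%m%d_%H%M)"` gives a value that can be pasted into the `image:` field of dp.yaml.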

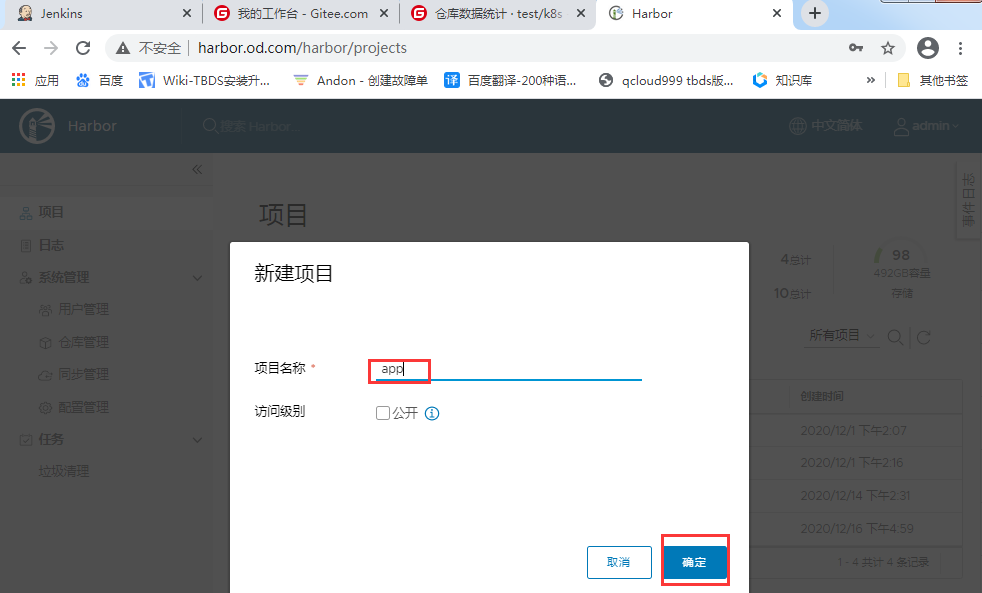

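5.5. Create the app project in Harbor, then build with these parameters

app_name: dubbo-demo-service

image_name: app/dubbo-demo-service

target_dir: ./dubbo-server/target

git_repo: https://gitee.com/stanleywang/debbo-demo-service.git

mvn_cmd: mvn clean package -Dmaven.test.skip=true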

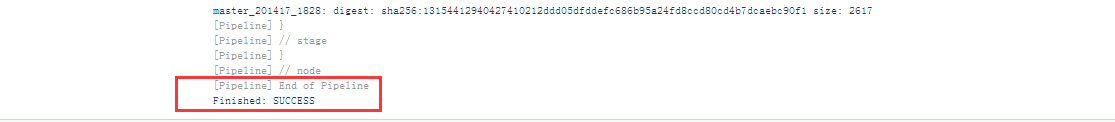

5.5.1. Verify

5.6. Create the resource manifest for dubbo-demo-service

Important: replace the image in dp.yaml with the name of the image you built

Run on k8s-5

[root@k8s-5 src]# cd /data/k8s-yaml/

[root@k8s-5 k8s-yaml]# mkdir dubbo-demo-service

[root@k8s-5 k8s-yaml]# cd dubbo-demo-service/

[root@k8s-5 dubbo-demo-service]# vim dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_201417_1828 ## replace the timestamp with the one from your own build
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

5.7. Create the app namespace and add the secret

On any compute node

The secret is needed because the image is pulled from the private app project

[root@k8s-3 ~]# kubectl create ns app

[root@k8s-3 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n app

5.8. Check the environment

[root@k8s-1 ~]# cd /opt/zookeeper

[root@k8s-1 zookeeper]# bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower
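A healthy three-node ensemble reports one leader and two followers. The `Mode:` line can be extracted with a short filter, which makes it easy to compare the three nodes. This is a sketch that only assumes the output format shown above; the function name `zk_mode` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "zkServer.sh status" output on stdin and
# print only the mode (leader, follower, or standalone).

zk_mode() {
  sed -n -E 's/^Mode:[[:space:]]*([a-z]+).*/\1/p'
}
```

On each zk node it could be run as `/opt/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode`. An empty result means no `Mode:` line was printed, which usually indicates that the server is not running.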

## Check the znodes in zk

[root@k8s-1 zookeeper]# bin/zkCli.sh -server localhost:2181

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

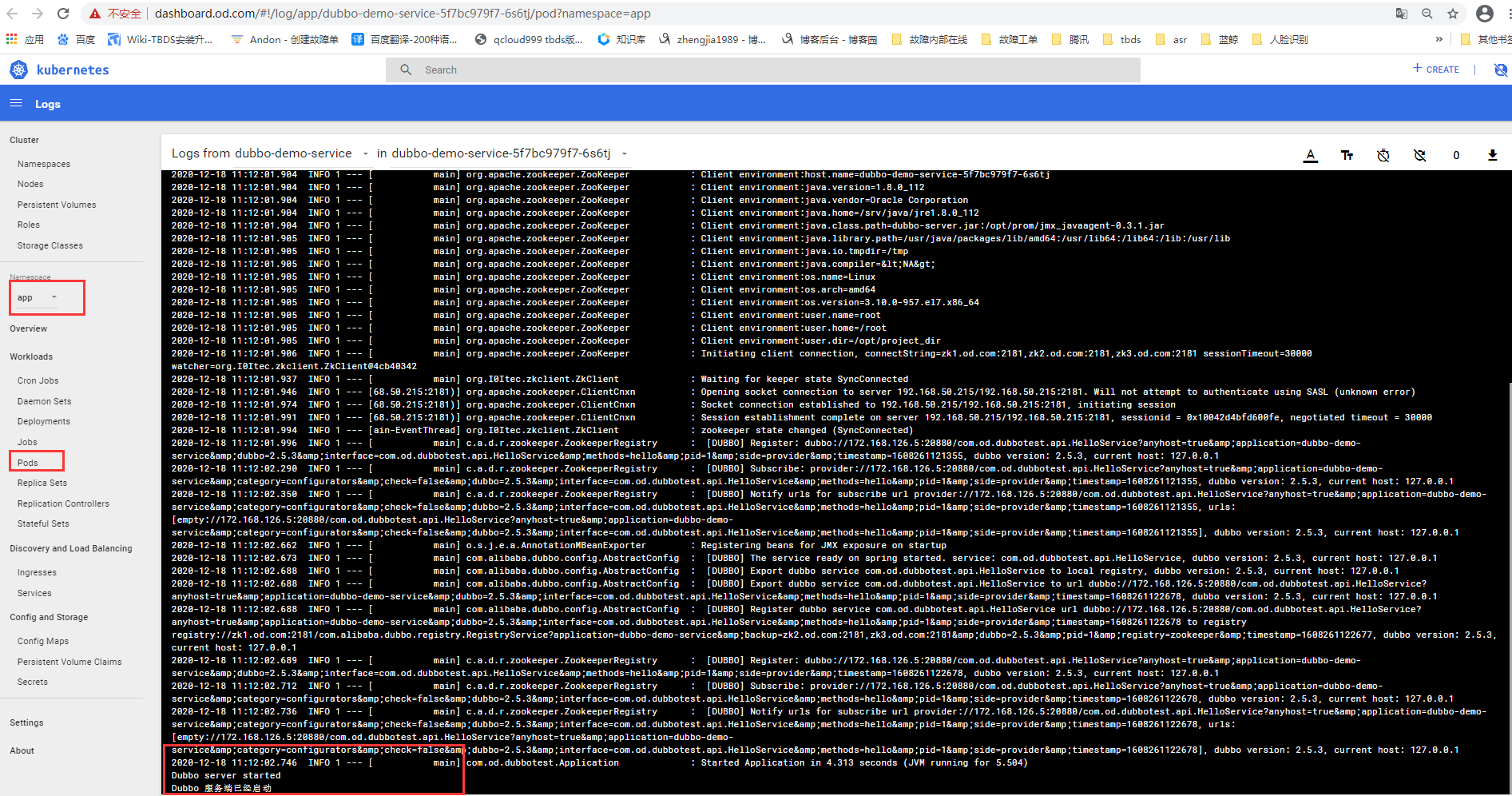

5.9. Apply the manifest and check the result

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/dp.yaml

deployment.extensions/dubbo-demo-service created

[zk: localhost:2181(CONNECTED) 1] ls /

[dubbo, zookeeper]

[zk: localhost:2181(CONNECTED) 3] ls /dubbo

[com.od.dubbotest.api.HelloService]

6. dubbo-monitor, a monitoring tool for the Dubbo services

Prepare the Docker image

Download the source and upload it to the k8s-5 node

[root@k8s-5 ~]# cd /opt/src/

[root@k8s-5 src]# wget http://k8s-yaml.od.com/software/k8s/dubbo-monitor-master.zip

[root@k8s-5 src]# unzip dubbo-monitor-master.zip

[root@k8s-5 src]# mv dubbo-monitor-master dubbo-monitor

[root@k8s-5 src]# cd dubbo-monitor/

6.1. Change the following settings

[root@k8s-5 dubbo-monitor]# vim dubbo-monitor-simple/conf/dubbo_origin.properties

dubbo.container=log4j,spring,registry,jetty

dubbo.application.name=simple-monitor

dubbo.application.owner=zhengjia

dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181

dubbo.protocol.port=20880

dubbo.jetty.port=8080

dubbo.jetty.directory=/dubbo-monitor-simple/monitor

dubbo.charts.directory=/dubbo-monitor-simple/charts

dubbo.statistics.directory=/dubbo-monitor-simple/statistics

dubbo.log4j.file=logs/dubbo-monitor-simple.log

dubbo.log4j.level=WARN

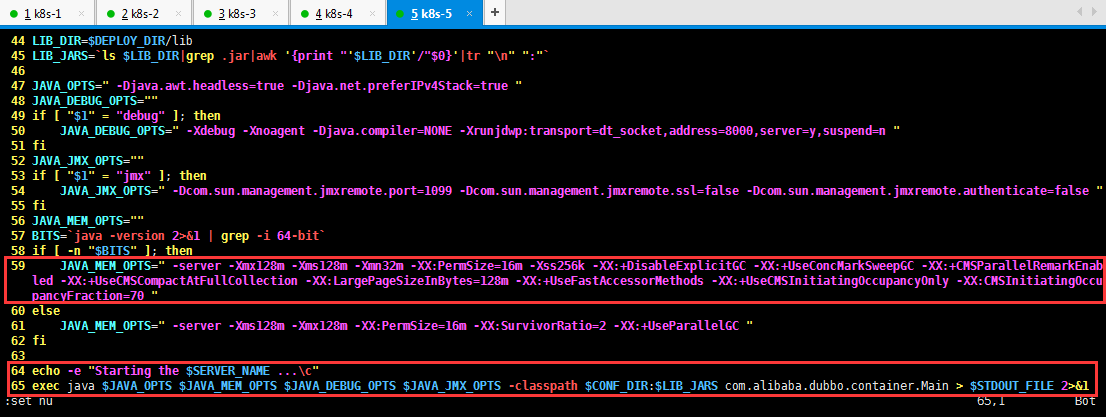

6.2. Build the image

1. Prepare the environment

This is a VM lab, so the Java heap configured in start.sh is too large and has to be reduced. Also replace nohup with exec so that the process runs in the foreground, remove the trailing &, and delete every line below the nohup line.

[root@k8s-5 ~]# vim /opt/src/dubbo-monitor/dubbo-monitor-simple/bin/start.sh

JAVA_MEM_OPTS=" -server -Xmx128m -Xms128m -Xmn32m -XX:PermSize=16m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "

else

JAVA_MEM_OPTS=" -server -Xms128m -Xmx128m -XX:PermSize=16m -XX:SurvivorRatio=2 -XX:+UseParallelGC "

fi

echo -e "Starting the $SERVER_NAME ...\c"

Method 1: edit by hand

[root@k8s-5 ~]# vim /opt/src/dubbo-monitor/dubbo-monitor-simple/bin/start.sh

exec java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1

## Delete everything below the exec line

Method 2: replace with sed, which relies on the sed pattern space

[root@k8s-5 ~]# cd /opt/src/dubbo-monitor/

[root@k8s-5 dubbo-monitor]# sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' ./dubbo-monitor-simple/bin/start.sh && sed -r -i -e "s%^nohup(.*)%exec \1%" /opt/src/dubbo-monitor/dubbo-monitor-simple/bin/start.sh
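The sed command above keeps the nohup line, deletes everything after it, and turns nohup into exec, but it leaves the trailing `&` in place, so the process would still be sent to the background. The sketch below wraps the same idea in a function and also strips the `&`. It assumes GNU sed and at least one line after the nohup line (as in the real start.sh); the function name `fix_start_script` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make a start.sh run its java process in the foreground.

fix_start_script() {
  local script=$1
  # 1) On the nohup line: print it, append all remaining lines to the
  #    pattern space, then delete the pattern space. Only the nohup line
  #    and the lines before it survive.
  sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' "$script"
  # 2) Replace the leading nohup with exec, then remove a trailing "&"
  #    from the exec line so the process stays in the foreground.
  sed -r -i -e 's%^nohup[[:space:]]+%exec %' \
            -e '/^exec /s%[[:space:]]*&[[:space:]]*$%%' "$script"
}
```

It can be tried on a copy first, for example `cp start.sh /tmp/start.sh && fix_start_script /tmp/start.sh && tail -n 3 /tmp/start.sh`.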

2. The modified configuration

3. Tidy up the layout: copy the directory under /data

[root@k8s-5 src]# cp dubbo-monitor /data/dockerfile/

cp: omitting directory ‘dubbo-monitor’

[root@k8s-5 src]# cp -a dubbo-monitor /data/dockerfile/

[root@k8s-5 src]# cd /data/dockerfile/

[root@k8s-5 dockerfile]# cd dubbo-monitor/

[root@k8s-5 dubbo-monitor]# ll

total 12

-rw-r--r-- 1 root root 155 Jul 27 2016 Dockerfile

drwxr-xr-x 5 root root 4096 Jul 27 2016 dubbo-monitor-simple

-rw-r--r-- 1 root root 16 Jul 27 2016 README.md

[root@k8s-5 dubbo-monitor]# cat Dockerfile

FROM jeromefromcn/docker-alpine-java-bash

MAINTAINER Jerome Jiang

COPY dubbo-monitor-simple/ /dubbo-monitor-simple/

CMD /dubbo-monitor-simple/bin/start.sh

## The repository already ships a Dockerfile, so use it as is; write one only if it is missing

6.3. Build the image and push it to the Harbor registry

[root@k8s-5 ~]# cd /data/dockerfile/dubbo-monitor

[root@k8s-5 dubbo-monitor]# docker build . -t harbor.od.com/infra/dubbo-monitor:latest

[root@k8s-5 dubbo-monitor]# docker push harbor.od.com/infra/dubbo-monitor:latest

6.4. Prepare the k8s resource manifests

[root@k8s-5 ~]# cd /data/k8s-yaml/

[root@k8s-5 k8s-yaml]# mkdir dubbo-monitor

[root@k8s-5 k8s-yaml]# cd dubbo-monitor/

dp.yaml

[root@k8s-5 dubbo-monitor]# vim dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.od.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

svc.yaml

[root@k8s-5 dubbo-monitor]# vim svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-monitor

ingress.yaml

[root@k8s-5 dubbo-monitor]# vim ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  rules:
  - host: dubbo-monitor.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-monitor
          servicePort: 8080

6.5. Apply the resource manifests

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml

deployment.extensions/dubbo-monitor created

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/svc.yaml

service/dubbo-monitor created

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/ingress.yaml

ingress.extensions/dubbo-monitor created
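Every application in this walkthrough is applied the same way: dp.yaml, svc.yaml, and ingress.yaml under `http://k8s-yaml.od.com/<app>/`. The sketch below generates those URLs and applies them in order. The function names `manifest_urls` and `apply_app` are hypothetical, and the three-file layout is an assumption that does not hold for every application here (dubbo-demo-service, for example, only has dp.yaml).

```shell
#!/usr/bin/env bash
# Hypothetical helpers: list and apply the manifests of one application.

manifest_urls() {
  local base=$1 app=$2 kind
  for kind in dp svc ingress; do
    printf '%s/%s/%s.yaml\n' "$base" "$app" "$kind"
  done
}

apply_app() {
  local url
  # The URLs contain no whitespace, so word splitting is safe here.
  for url in $(manifest_urls "$1" "$2"); do
    kubectl apply -f "$url" || return 1
  done
}
```

With these, `apply_app http://k8s-yaml.od.com dubbo-monitor` would run the three kubectl commands shown above and stop at the first failure.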

6.6. Verify

6.7. Add the DNS record

[root@k8s-1 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111013 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.50.215

dubbo-monitor A 192.168.50.10

[root@k8s-1 ~]# systemctl restart named

[root@k8s-1 ~]# dig -t A dubbo-monitor.od.com @192.168.50.215 +short

192.168.50.10

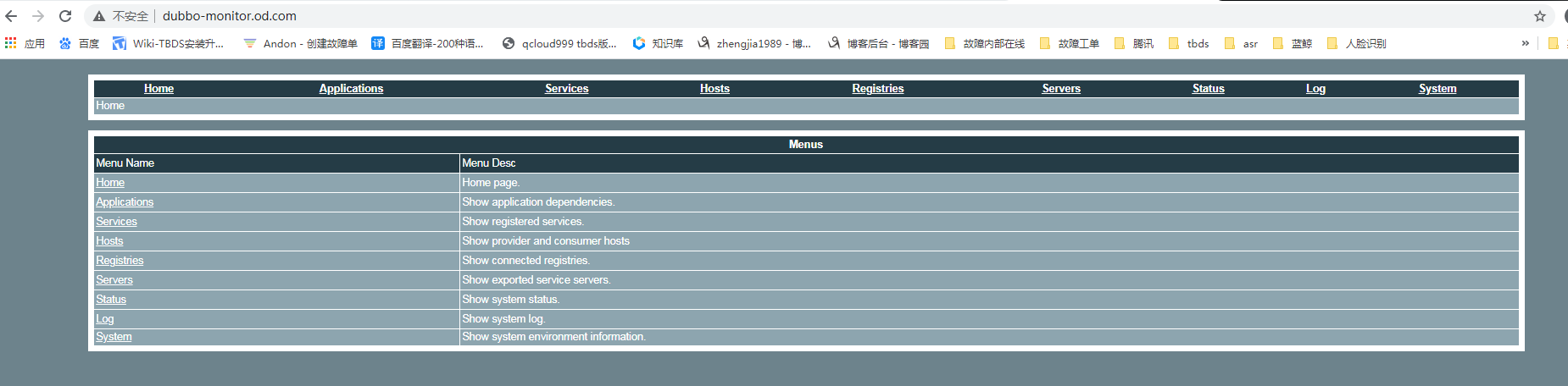

6.7.1. Verify in the browser

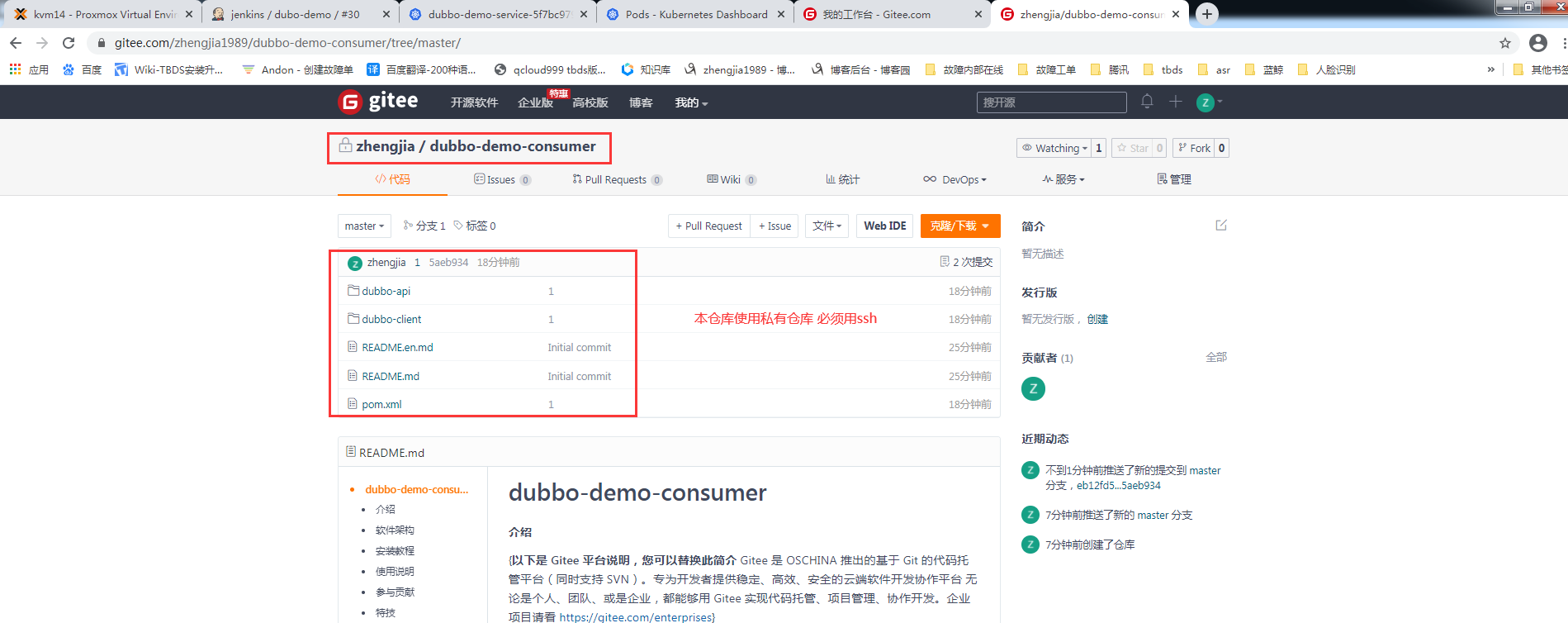

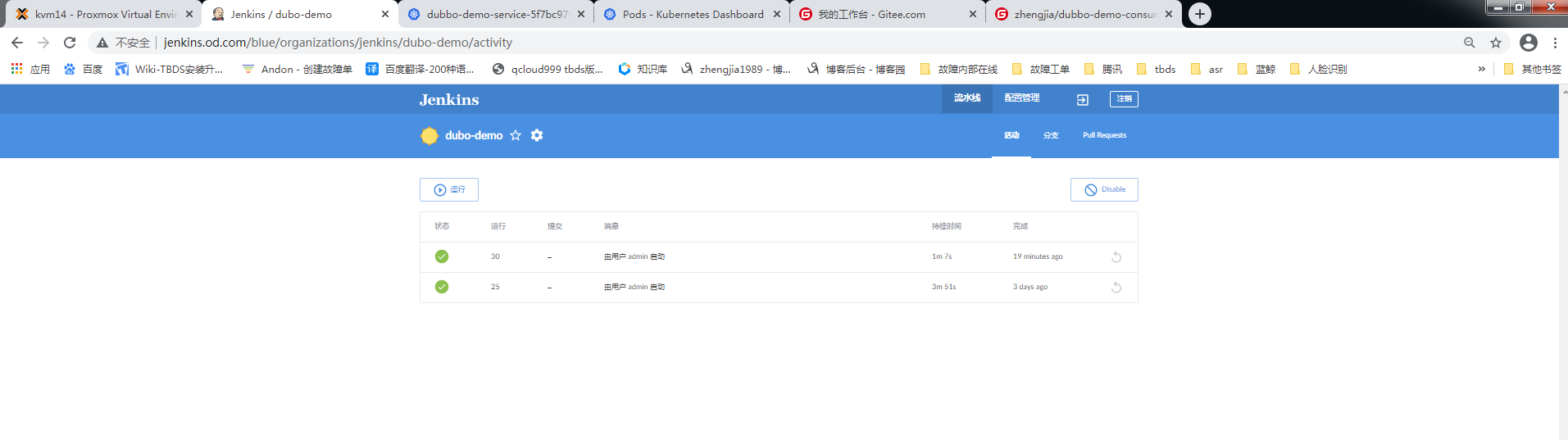

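5. Deliver dubbo-demo-consumer

5.1. Pass the parameters in Jenkins, build the dubbo-demo-consumer image, and push it to Harbor

5.1.1. Prepare the repository and the build parameters

app_name: dubbo-demo-web

image_name: app/dubbo-demo-web

git_repo: git@gitee.com:zhengjia1989/dubbo-demo-consumer.git

git_ver: master

add_tag: 20221_1122

target_dir: ./dubbo-client/target

5.1.2. Configure

5.1.3. Build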

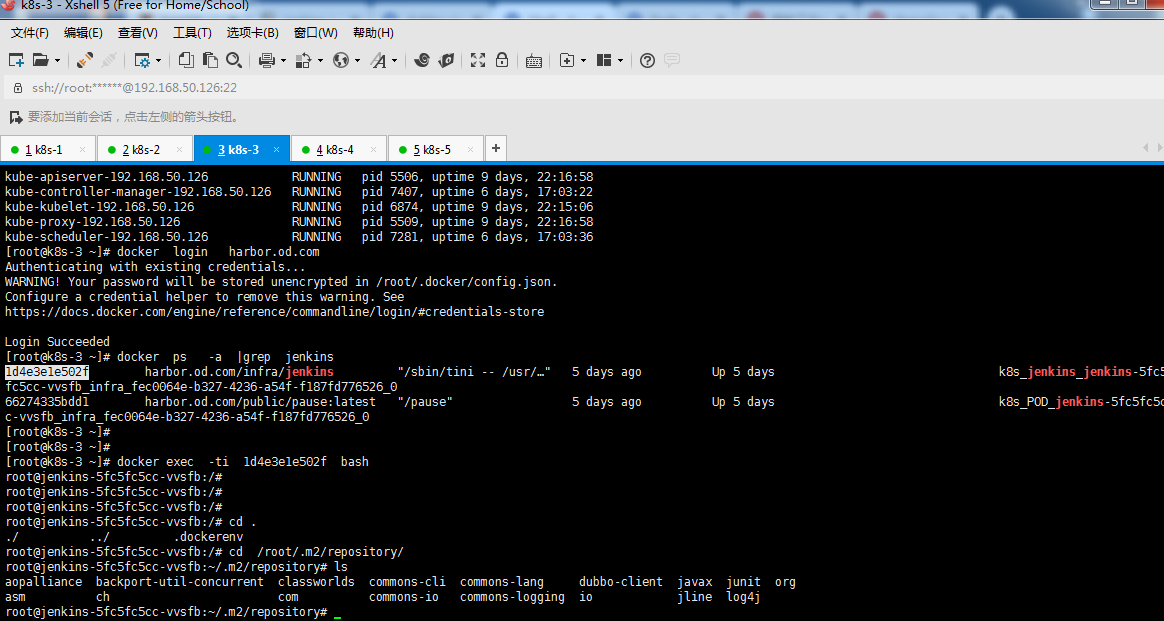

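5.1.4. The local jar cache used by Jenkins

5.2. Create the resource manifests for dubbo-demo-consumer

On the ops host k8s-5

Important: replace the image in dp.yaml with the name of the image you built

5.2.1. Create the Deployment manifest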

[root@k8s-5 dubbo-demo-consumer]# vim dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-web:master_201221_1209
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

5.2.2.创建svc.yanl

[root@k8s-5 dubbo-demo-consumer]# vim svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-demo-consumer

5.2.3. Create ingress.yaml

[root@k8s-5 dubbo-demo-consumer]# vim ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  rules:
  - host: demo.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 8080

5.2.4. Add the DNS record

On k8s-1, add the record:

[root@k8s-1 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.
$TTL 600 ; 10 minutes
@ IN SOA dns.od.com. dnsadmin.od.com. (
        2019111014 ; serial
        10800      ; refresh (3 hours)
        900        ; retry (15 minutes)
        604800     ; expire (1 week)
        86400      ; minimum (1 day)
        )
        NS dns.od.com.
$TTL 60 ; 1 minute
dns     A 192.168.50.215
demo    A 192.168.50.10

[root@k8s-1 ~]# systemctl restart named

[root@k8s-1 ~]# dig -t A demo.od.com @192.168.50.215 +short

192.168.50.10

[root@k8s-1 ~]# dig -t A demo.od.com @192.168.50.1 +short

69.172.201.153
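
Each edit to the zone file in this guide comes with a new serial (2019111013 became 2019111014 here). A minimal sketch for bumping it automatically, assuming the serial sits on its own line tagged with the `; serial` comment as in this file; the helper name `bump_serial` is hypothetical.

```shell
# Hypothetical helper: add 1 to the SOA serial of a BIND zone file.
# Assumes the serial is the first number on the line ending in "; serial".
bump_serial() {
  zone="$1"
  tmp="$(mktemp)"
  # Replace the first run of digits on the serial line with serial + 1;
  # every other line is printed unchanged.
  awk '/; serial/ { sub(/[0-9]+/, $1 + 1) } { print }' "$zone" > "$tmp" &&
    cat "$tmp" > "$zone"   # write back in place so owner and mode are kept
  rm -f "$tmp"
}

# Example call:
# bump_serial /var/named/od.com.zone
```

Running `named-checkzone od.com /var/named/od.com.zone` before `systemctl restart named` is a cheap way to catch a syntax error introduced while editing.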

5.2.5. Apply the dubbo-demo-consumer resource manifests

On any compute node:

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/dp.yaml

deployment.extensions/dubbo-demo-consumer created

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/svc.yaml

service/dubbo-demo-consumer created

[root@k8s-3 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/ingress.yaml

ingress.extensions/dubbo-demo-consumer created

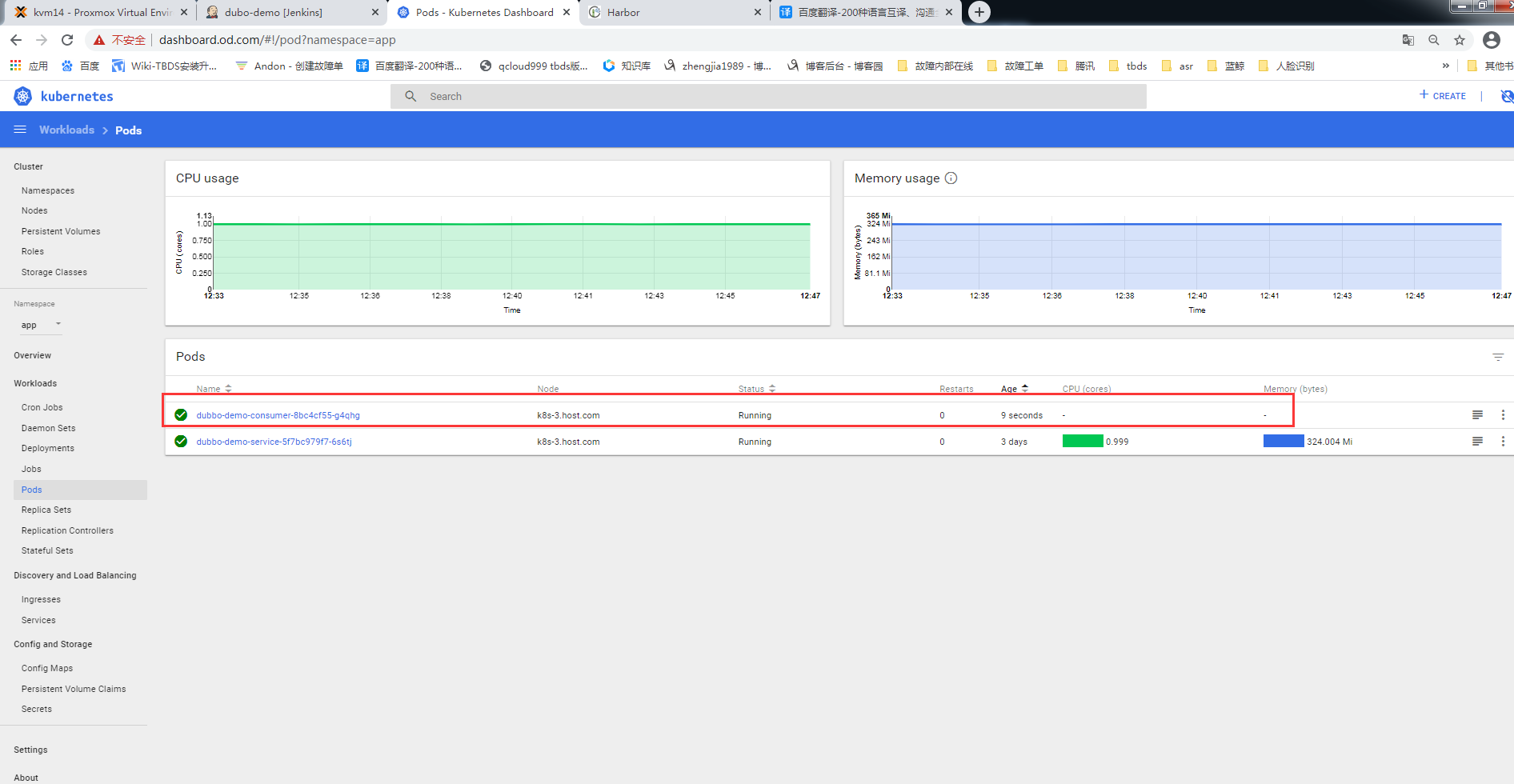

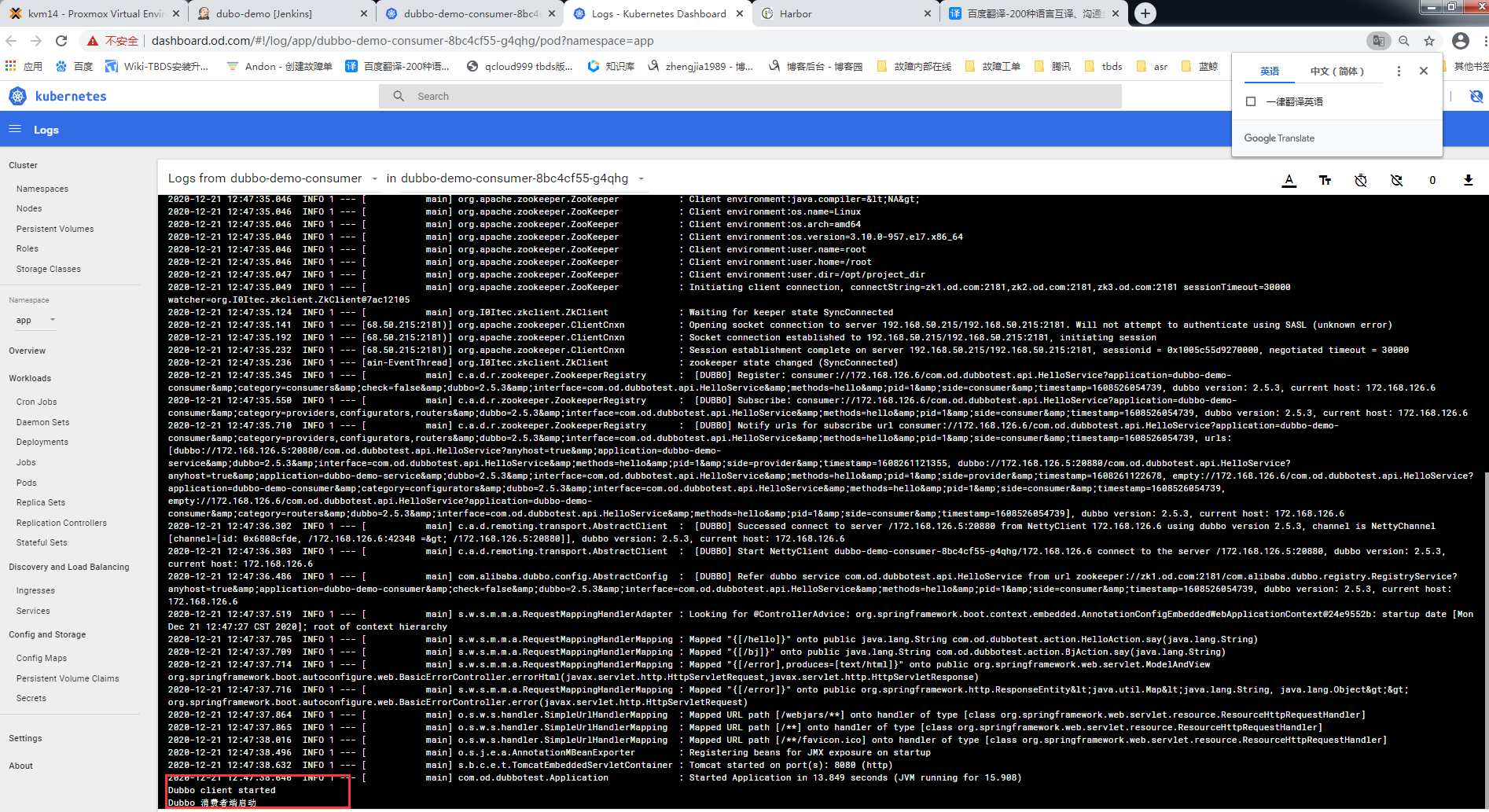

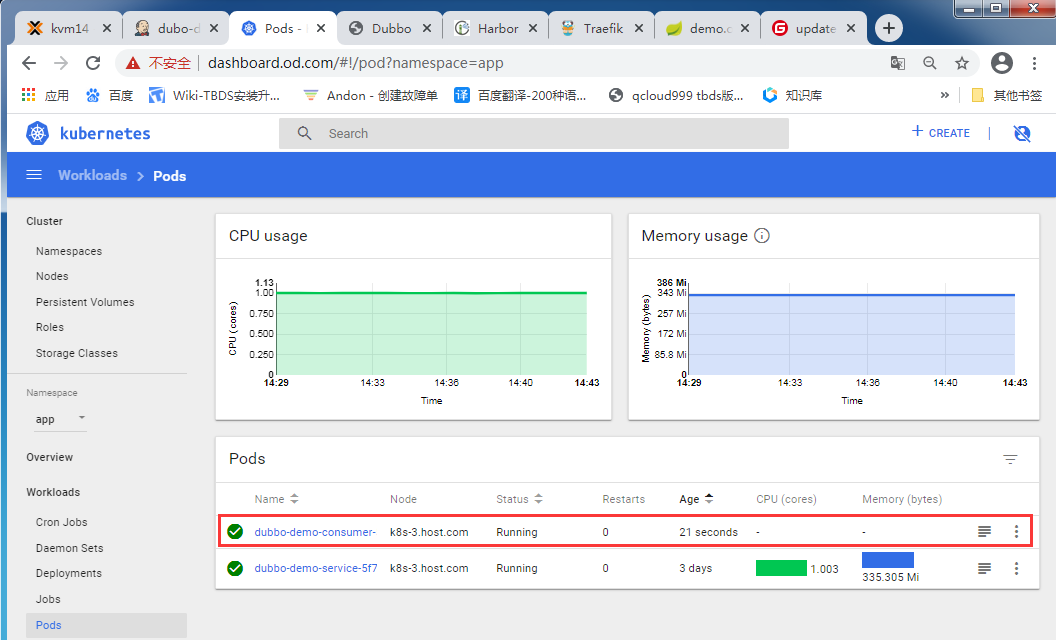

5.2.6. Check the startup status

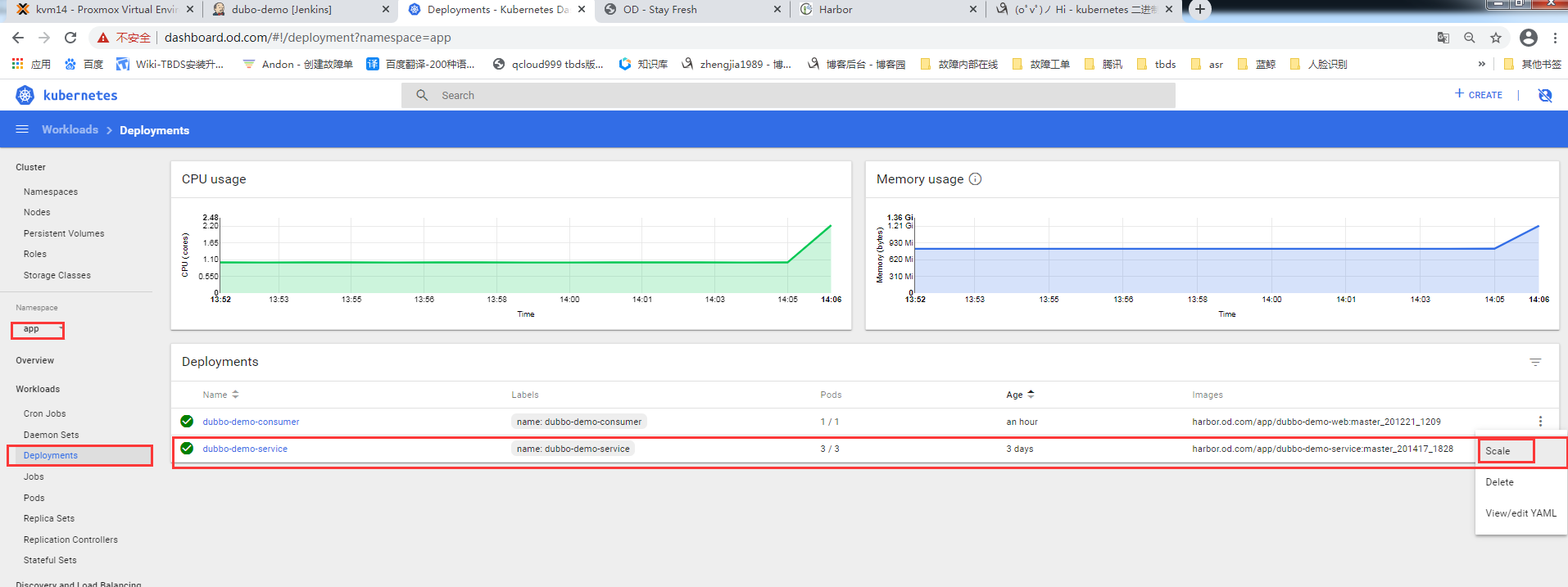

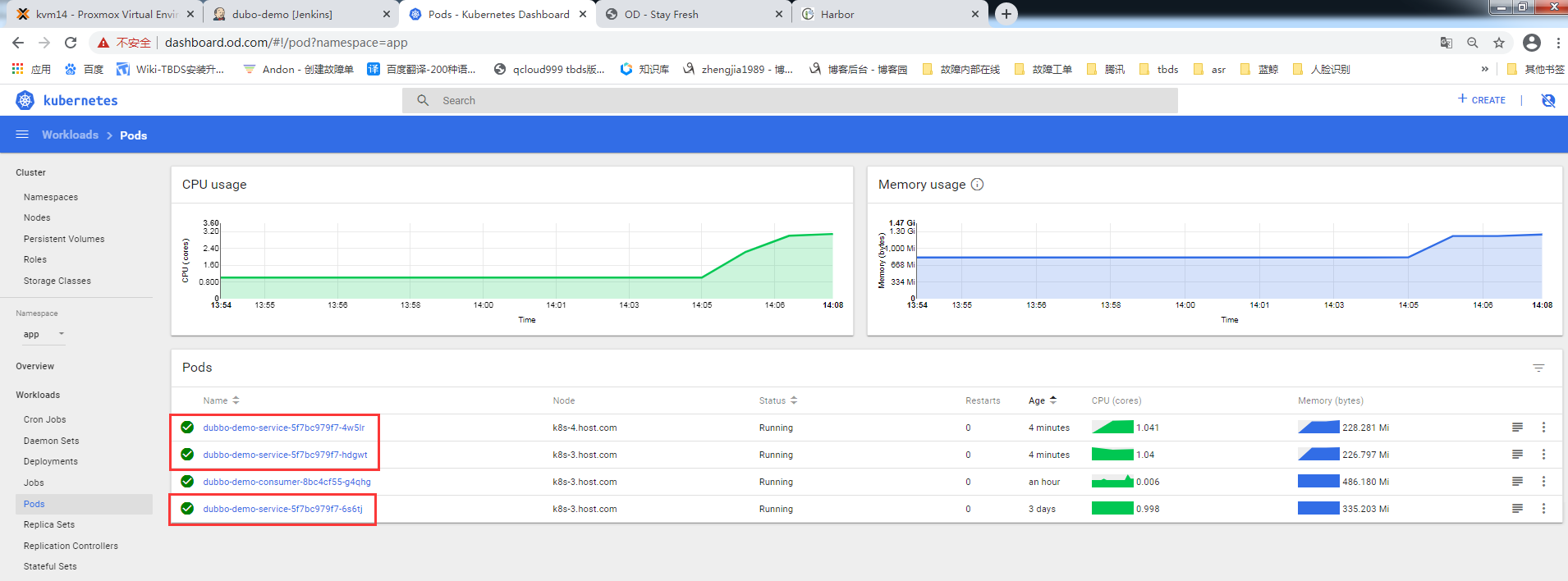
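
The screenshots above show the status; the same check can be done from the command line. This is a sketch under the assumption that the Deployment and labels are exactly those defined in dp.yaml; the function name `check_consumer` is hypothetical.

```shell
# Hypothetical helper: wait for the Deployment, then list its pods.
# "rollout status" blocks until the rollout finishes or fails;
# the label selector matches the pod template labels in dp.yaml.
check_consumer() {
  kubectl rollout status deployment/dubbo-demo-consumer -n app &&
    kubectl get pods -n app -l app=dubbo-demo-consumer -o wide
}

# Example call on any compute node:
# check_consumer
```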

Check in the dashboard:

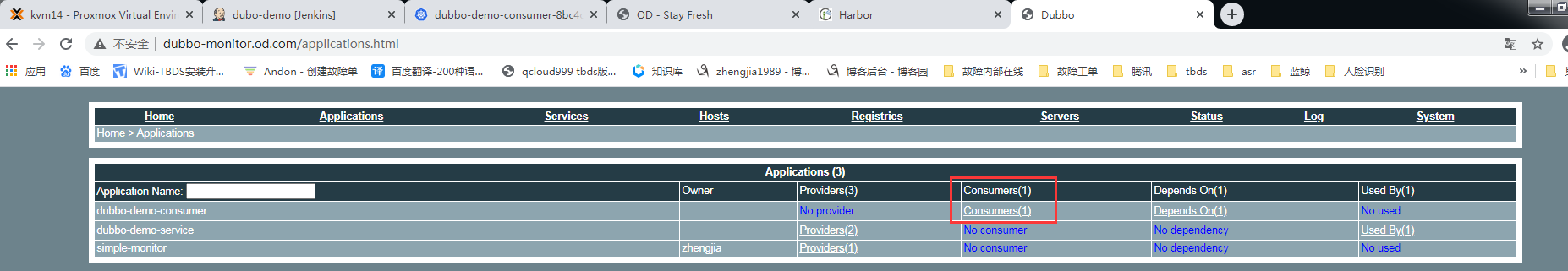

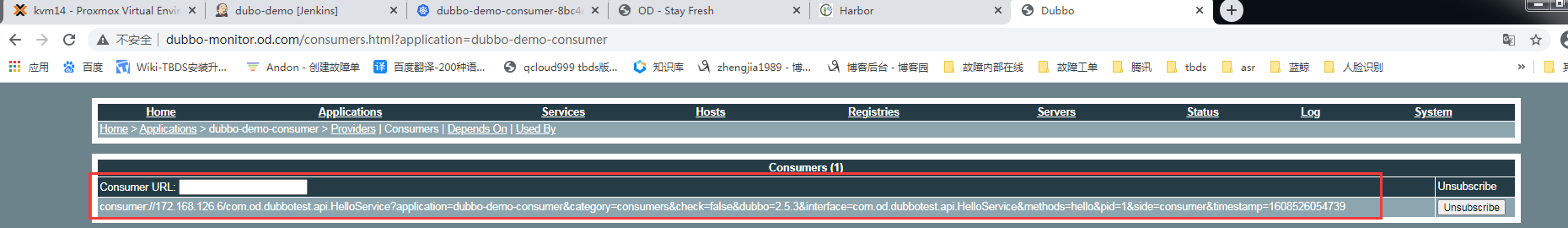

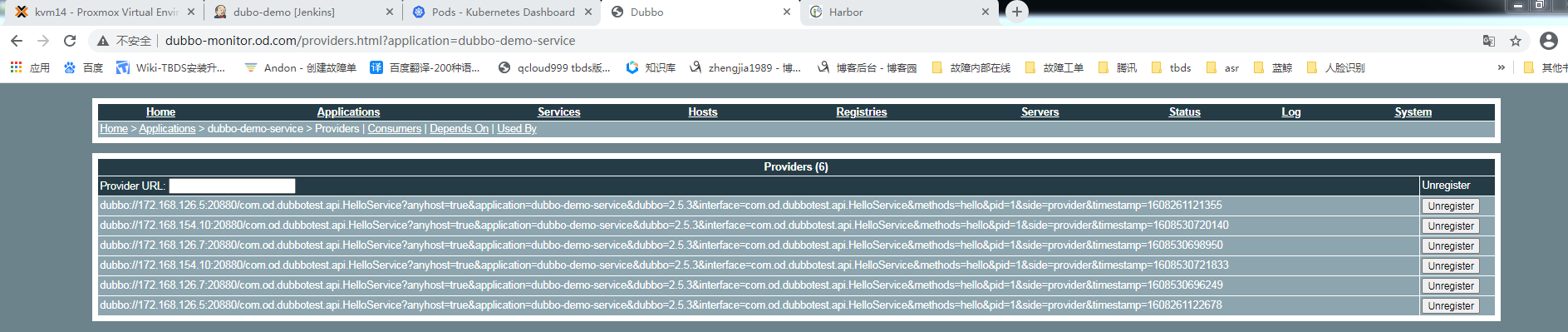

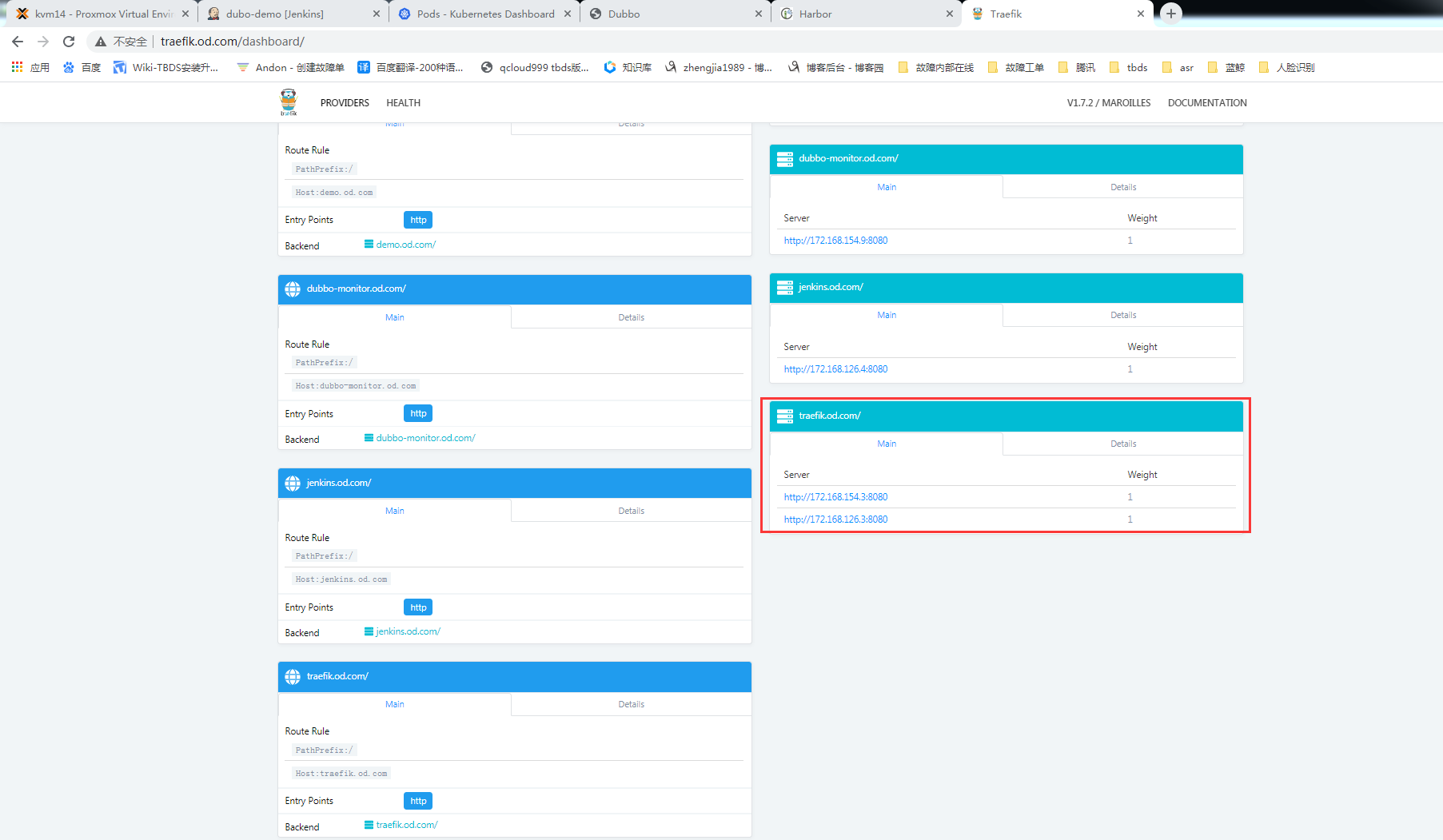

Check in dubbo-monitor:

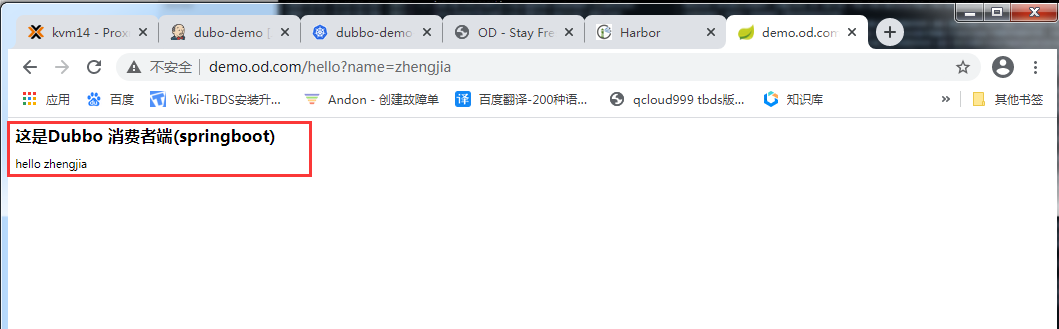

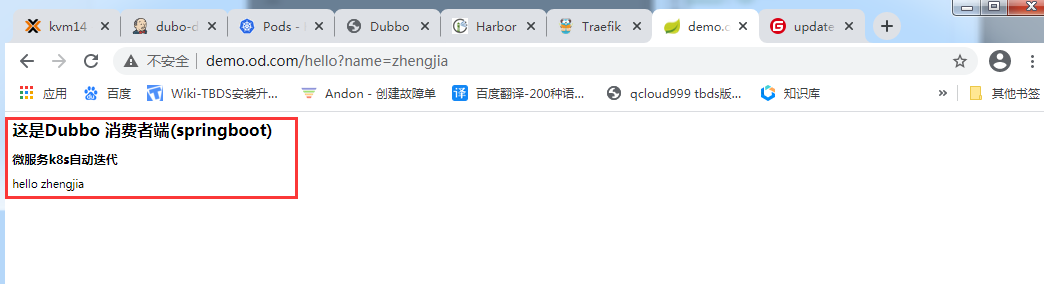

Check by visiting demo.od.com:

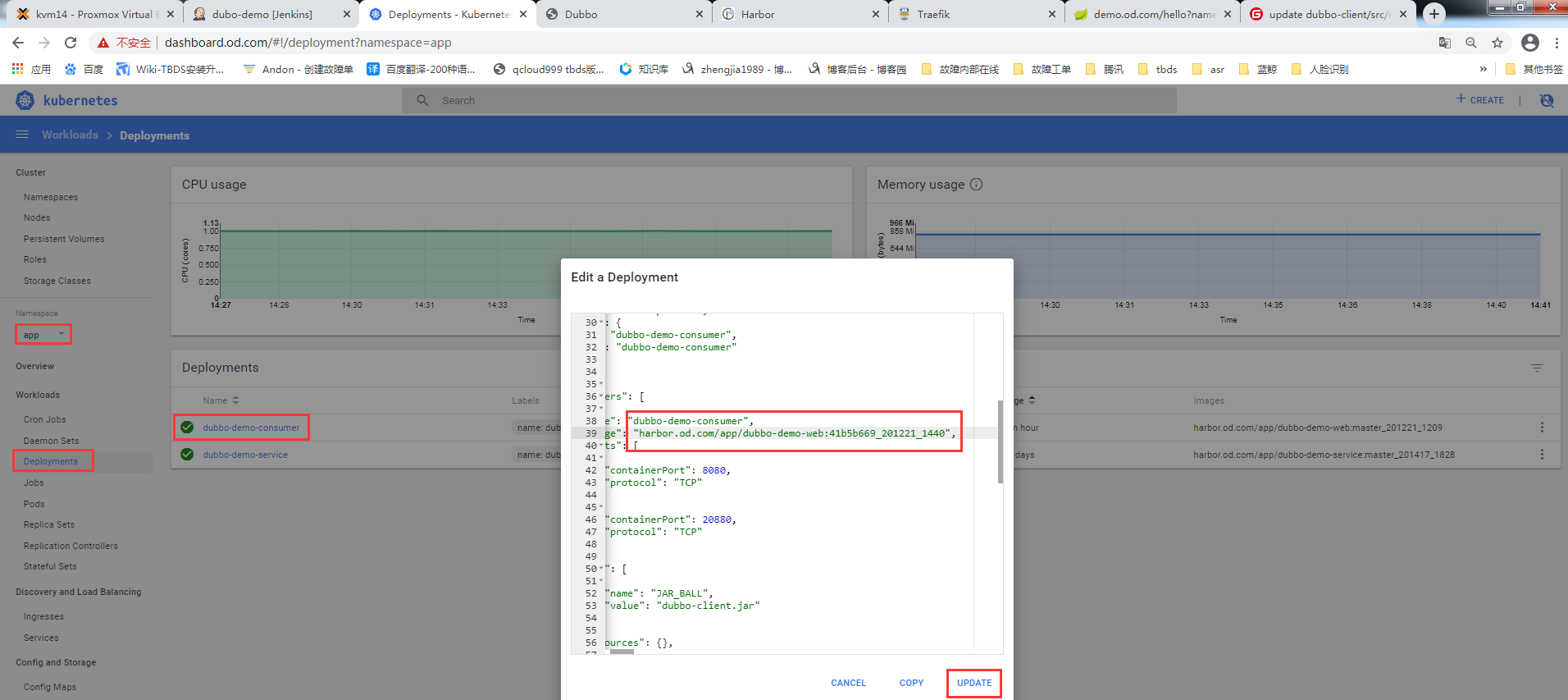

6. Maintaining the dubbo microservice cluster in practice

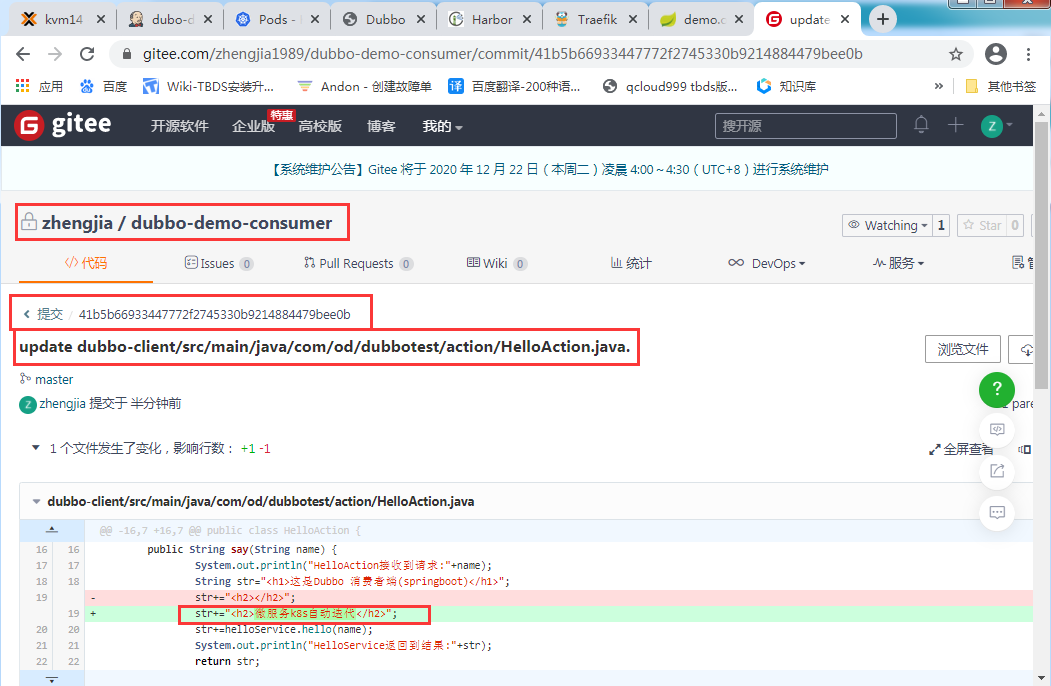

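6.1. Update (rolling update)

Modify the code and commit it to Git (release).

Use Jenkins for CI (continuous build).

Modify and apply the k8s resource manifests,

or change the Harbor image address in the YAML directly on k8s.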
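
The last step above can also be done without touching the YAML. The sketch below assumes the Deployment and container are both named `dubbo-demo-consumer`, as in dp.yaml; the function name `roll_consumer` and the tag you pass in are placeholders.

```shell
# Hypothetical helper: roll the consumer Deployment to a new image tag
# and wait until the rolling update has finished.
roll_consumer() {
  new_tag="$1"   # tag pushed to Harbor by the Jenkins build
  kubectl set image deployment/dubbo-demo-consumer \
    dubbo-demo-consumer="harbor.od.com/app/dubbo-demo-web:${new_tag}" -n app &&
    kubectl rollout status deployment/dubbo-demo-consumer -n app
}

# Example call:
# roll_consumer <tag-from-jenkins-build>
#
# If the new version misbehaves, return to the previous revision:
# kubectl rollout undo deployment/dubbo-demo-consumer -n app
```

Because dp.yaml sets `revisionHistoryLimit: 7`, up to seven older revisions remain available for `rollout undo`.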

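6.2. Scaling

6.2.1. Directly in the k8s dashboard: log in to the dashboard --> Deployments --> Scale --> change the replica count --> OK

6.2.2. Scale from the command line, as in the following examples: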

* Examples:

# Scale a replicaset named 'foo' to 3.

kubectl scale --replicas=3 rs/foo

# Scale a resource identified by type and name specified in "foo.yaml" to 3.

kubectl scale --replicas=3 -f foo.yaml

# If the deployment named mysql's current size is 2, scale mysql to 3.

kubectl scale --current-replicas=2 --replicas=3 deployment/mysql

# Scale multiple replication controllers.

kubectl scale --replicas=5 rc/foo rc/bar rc/baz

# Scale statefulset named 'web' to 3.

kubectl scale --replicas=3 statefulset/web
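
The generic examples above come from the kubectl help text. Applied to the Deployment from this guide, a call might look like the sketch below; the function name `scale_consumer` is hypothetical, and the Deployment name and namespace are the ones from dp.yaml.

```shell
# Hypothetical helper: scale the consumer Deployment and show the result.
scale_consumer() {
  replicas="$1"   # desired number of pods
  kubectl scale --replicas="$replicas" deployment/dubbo-demo-consumer -n app &&
    kubectl get deployment dubbo-demo-consumer -n app
}

# Example call:
# scale_consumer 2
```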

6.3. Verify

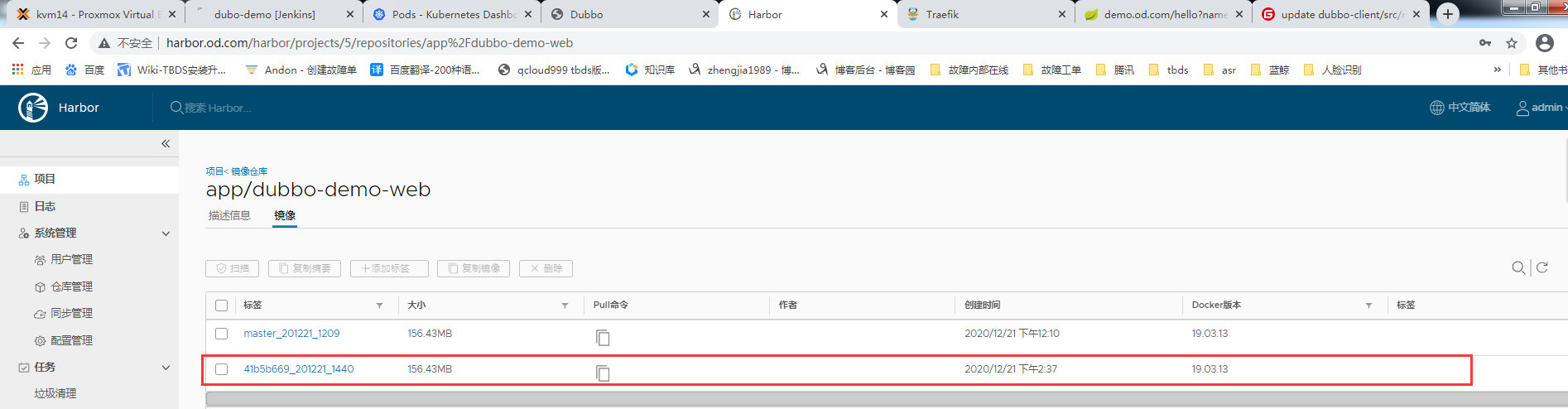

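6.4. Simulate an update iteration

6.4.1. Modify the Java package

6.4.2. Build the image and push it to the Harbor registry

6.4.3. Check that the image exists in the registry

6.4.4. Roll out the new version

6.4.5. Verify that the rollout succeeded

6.4.6. If a node has gone down

How to handle it:

1. First remove the failed node from the cluster

## e.g. the k8s-3 node is down

Log in to k8s-4 and delete k8s-3: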

[root@k8s-4 ~]# kubectl delete node k8s-3.host.com

2. Comment out the failed node in the nginx load-balancer configuration

[root@k8s-1 ~]# vim /etc/nginx/conf.d/od.com.conf

[root@k8s-1 ~]# vim /etc/nginx/conf.d/dashboard.od.com.conf

3. Restart nginx

[root@k8s-1 ~]# systemctl restart nginx.service

4. Repair the k8s-3 node and start it; it rejoins the cluster automatically on startup

[root@k8s-3 ~]# supervisorctl status

## If every program is RUNNING, the node has rejoined the cluster

[root@k8s-3 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-3.host.com Ready <none> 10d v1.15.4

k8s-4.host.com Ready master,node 19d v1.15.4

[root@k8s-3 ~]# kubectl label node k8s-3.host.com node-role.kubernetes.io/node=

node/k8s-3.host.com labeled

[root@k8s-3 ~]# kubectl label node k8s-3.host.com node-role.kubernetes.io/master=

node/k8s-3.host.com labeled

[root@k8s-3 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-3.host.com Ready master,node 10d v1.15.4

k8s-4.host.com Ready master,node 19d v1.15.4

5. Re-enable the node in the nginx load-balancer configuration

[root@k8s-1 ~]# vim /etc/nginx/conf.d/od.com.conf

[root@k8s-1 ~]# vim /etc/nginx/conf.d/dashboard.od.com.conf

[root@k8s-1 ~]# systemctl restart nginx.service

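6. Rebalance scheduling

Delete the pods currently running on k8s-4 so that their replacements can be scheduled onto k8s-3.

## Inspect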

[root@k8s-3 ~]# kubectl get pods -n app -o wide

[root@k8s-3 ~]# kubectl get pods -n kube-system

## Delete

[root@k8s-3 ~]# kubectl delete pods dubbo-demo-consumer-5d56858bc-gzp96 -n app

[root@k8s-3 ~]# kubectl delete pods kubernetes-dashboard-5f768f95b8-jvgb6 -n kube-system
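
To decide which pods to delete, it helps to list only those running on the surviving node. A minimal sketch, assuming the node name `k8s-4.host.com` from the `kubectl get node` output above; the function name `pods_on_node` is hypothetical.

```shell
# Hypothetical helper: list the pods in all namespaces that run on one node.
pods_on_node() {
  node="$1"   # node name as shown by "kubectl get node"
  kubectl get pods --all-namespaces -o wide \
    --field-selector "spec.nodeName=${node}"
}

# Example call:
# pods_on_node k8s-4.host.com
```

Pods owned by a Deployment are recreated by their ReplicaSet after deletion, and the scheduler is then free to place the replacement on the repaired node.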

7. Check and correct the iptables rules

[root@k8s-3 ~]# iptables -t nat -D POSTROUTING -s 172.168.154.0/24 ! -o docker0 -j MASQUERADE

[root@k8s-3 ~]# iptables -t nat -I POSTROUTING -s 172.168.154.0/24 ! -d 172.168.0.0/16 ! -o docker0 -j MASQUERADE
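
The two commands above swap Docker's default MASQUERADE rule for one that leaves traffic to 172.168.0.0/16 un-NATed (presumably the cluster's container network), so containers on other nodes see the real source address. To confirm the rule is in place after a node restart, a check along these lines may help; the function name `has_snat_exclusion` is hypothetical, and the subnets are the ones used above.

```shell
# Hypothetical helper: reads "iptables -t nat -S POSTROUTING" output on stdin
# and succeeds only if the rule for this node's subnet (172.168.154.0/24)
# excludes destinations inside 172.168.0.0/16.
has_snat_exclusion() {
  grep -F -e '-s 172.168.154.0/24' | grep -q -F -e '! -d 172.168.0.0/16'
}

# Example call on the node:
# iptables -t nat -S POSTROUTING | has_snat_exclusion && echo "SNAT rule OK"
```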

6.5. FAQ

6.5.1. supervisor restart does not succeed?

[root@k8s-3 ~]# vim /etc/supervisord.d/xxx.ini

Append:

killasgroup=true

stopasgroup=true
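
These two options make supervisor send the stop and kill signals to the whole process group, so child processes started by a wrapper script are stopped along with it. A small sketch for adding them without opening an editor, assuming the ini file contains a single `[program:...]` section so that appending at the end is safe; the function name `ensure_group_signals` is hypothetical.

```shell
# Hypothetical helper: append killasgroup/stopasgroup to a supervisor ini file
# unless they are already set. Assumes one [program:...] section per file.
ensure_group_signals() {
  ini="$1"
  for opt in killasgroup stopasgroup; do
    # Skip the option if a line starting with "<opt>=" already exists.
    grep -q "^${opt}=" "$ini" || echo "${opt}=true" >> "$ini"
  done
}

# Example call, followed by a reload so supervisor picks up the change:
# ensure_group_signals /etc/supervisord.d/xxx.ini && supervisorctl update
```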