Installing Kubernetes (K8s) on CentOS

Installation is done with kubeadm. Kubernetes CHANGELOG: https://github.com/kubernetes/kubernetes/releases

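1. Install Docker (all nodes)

Docker is used as the container runtime here. For installation, see: https://www.cnblogs.com/jhxxb/p/11410816.html

After installation, some configuration is required: https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/#docker

Docker Engine does not implement the Kubernetes Container Runtime Interface (CRI), which a container runtime must implement in order to work with Kubernetes.

An additional service, cri-dockerd, must therefore be installed. cri-dockerd is a project based on the legacy built-in Docker Engine support (dockershim), which was removed from the kubelet in version 1.24.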

# Download and install
wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1-3.el7.x86_64.rpm
sudo yum install -y cri-dockerd-0.3.1-3.el7.x86_64.rpm
# Edit the unit file and append to the ExecStart line:
# --network-plugin=cni tells cri-dockerd to use the Kubernetes network interface (CNI)
# --pod-infra-container-image overrides the default sandbox (pause) image
sudo sed -i 's,^ExecStart.*,& --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9,' /usr/lib/systemd/system/cri-docker.service
# Start cri-dockerd; it depends on Docker, so Docker must be installed first
sudo systemctl daemon-reload
sudo systemctl enable --now cri-docker.service
sudo systemctl enable --now cri-docker.socket
# Check the cri-dockerd status
systemctl status cri-docker.service
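To preview what the sed expression in the install step does before touching the real unit file, it can be run against a sample line. This is only a sketch: the sample ExecStart line below is an assumption for illustration and may differ from the one shipped in the RPM.

```shell
# Hypothetical ExecStart line, used only to preview the substitution.
sample='ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://'

# Same expression as in the install step: "&" re-emits the matched line,
# and the two flags are appended after it.
append_flags() {
  sed 's,^ExecStart.*,& --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9,'
}

patched=$(printf '%s\n' "$sample" | append_flags)
printf '%s\n' "$patched"
```

Because the expression always appends to whatever the line currently contains, running it a second time against the real file would add the flags twice, so it is worth checking the file with grep ExecStart before rerunning.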

2. Install kubeadm (all nodes)

https://developer.aliyun.com/mirror/kubernetes

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm

Preparation

# Stop and disable the firewall
sudo systemctl stop firewalld.service
sudo systemctl disable firewalld.service
# Aliyun yum repository
sudo mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
sudo curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
sudo sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo
sudo yum makecache
# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
# Disable swap; the Swap row printed by free should then show 0
sudo sed -ri 's/.*swap.*/#&/' /etc/fstab
sudo swapoff -a
free -g
# Let iptables see bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
# Load the module now; modules-load.d only takes effect at boot
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sudo sysctl --system
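After the preparation steps, it can help to confirm that they actually took effect before moving on. The following is a minimal sketch that only reads from /proc; the function names swap_is_off, sysctl_is_one and report are made up for this example.

```shell
# swap_is_off [FILE]: succeeds when SwapTotal in a meminfo-style file is 0 kB.
swap_is_off() {
  local file="${1:-/proc/meminfo}"
  awk '$1 == "SwapTotal:" { found = 1; total = $2 }
       END { exit !(found && total == 0) }' "$file"
}

# sysctl_is_one FILE: succeeds when a /proc/sys entry contains exactly "1".
sysctl_is_one() {
  [ -r "$1" ] && [ "$(cat "$1")" = "1" ]
}

# report LABEL COMMAND...: runs COMMAND and prints ok or FAIL next to LABEL.
report() {
  local label="$1"
  shift
  if "$@"; then echo "ok   $label"; else echo "FAIL $label"; fi
}

report "swap disabled" swap_is_off
report "bridge-nf-call-iptables = 1" sysctl_is_one /proc/sys/net/bridge/bridge-nf-call-iptables
report "bridge-nf-call-ip6tables = 1" sysctl_is_one /proc/sys/net/bridge/bridge-nf-call-ip6tables
```

If the two bridge entries report FAIL because the files do not exist, the br_netfilter module is most likely not loaded yet.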

Installation

# Add the Aliyun Kubernetes yum repository, then install kubeadm, kubelet and kubectl
sudo bash -c 'cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF'
# Install
sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
# Enable at boot and start now; equivalent to: sudo systemctl enable kubelet && sudo systemctl start kubelet
sudo systemctl enable --now kubelet
# Check the kubelet status
systemctl status kubelet
kubelet --version
# Restart kubelet
sudo systemctl daemon-reload
sudo systemctl restart kubelet

3. Install Kubernetes with kubeadm (initialize the Master, then join the other nodes)

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm

Initialize Kubernetes on the Master node

init parameters: https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init

https://github.com/zhangguanzhang/google_containers provides a google_containers mirror reachable from mainland China: registry.aliyuncs.com/k8sxio

NJU Docker mirror: https://doc.nju.edu.cn/books/35f4a. Search for docker at https://mirror.nju.edu.cn to check the sync status

DaoCloud Docker mirror: https://github.com/DaoCloud/public-image-mirror

Make sure the hostname is not localhost, that it has an entry in /etc/hosts, and that it responds to ping
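The hostname precondition can be checked with a short script before running kubeadm init. This is a sketch under the assumption that /etc/hosts uses the usual "IP name alias..." layout; name_in_hosts is a helper name invented for this example.

```shell
# name_in_hosts NAME [FILE]: succeeds when NAME appears as a hostname or alias
# on a non-comment line of a hosts-format file (default /etc/hosts).
name_in_hosts() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }
    { for (i = 2; i <= NF; i++) { if ($i ~ /^#/) break; if ($i == n) found = 1 } }
    END { exit !found }
  ' "$file"
}

name=$(uname -n)
if [ "$name" = "localhost" ]; then
  echo "hostname is still localhost; set a real one first"
elif name_in_hosts "$name"; then
  echo "$name is present in /etc/hosts"
else
  echo "$name is missing from /etc/hosts"
fi
```

Reachability can then be confirmed separately with ping -c 1 followed by the hostname.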

# Replace the --apiserver-advertise-address value with the Master host IP
# If --kubernetes-version is omitted, the latest version number is fetched from https://dl.k8s.io/release/stable-1.txt
sudo kubeadm init \
  --apiserver-advertise-address=10.70.19.33 \
  --image-repository registry.aliyuncs.com/google_containers \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.168.0.0/16 \
  --cri-socket unix:///var/run/cri-dockerd.sock
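The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. The sketch below assumes a kubeadm release that still accepts the kubeadm.k8s.io/v1beta3 API; the output path is an arbitrary choice for this example.

```shell
# Write the init flags as a kubeadm configuration file (v1beta3 API assumed).
cfg="${TMPDIR:-/tmp}/kubeadm-config.yaml"
cat > "$cfg" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.70.19.33   # Master host IP
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/16
  podSubnet: 192.168.0.0/16
EOF
echo "wrote $cfg"
# Then, on the Master: sudo kubeadm init --config "$cfg"
```

The script only writes the file; the kubeadm init call is left as a comment so nothing is changed on the host until the file has been reviewed.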

If an image download fails during initialization, you can temporarily pull the latest tag and then retag it, for example as coredns:v1.8.4

# List the required images
kubeadm config images list
docker pull registry.aliyuncs.com/google_containers/coredns
docker tag registry.aliyuncs.com/google_containers/coredns:latest registry.aliyuncs.com/google_containers/coredns:v1.8.4

Once initialization finishes, run the commands it prints: https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#更多信息

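Install the Pod network on the Master node

A cluster can have only one Pod network installed. To confirm it is working, run kubectl get pods --all-namespaces and check that the CoreDNS Pods are Running. Once the CoreDNS Pods are up and running, Nodes can join the Master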

# Install the network on the Master node
# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# For newer Calico versions see https://projectcalico.docs.tigera.io/getting-started/kubernetes/quickstart: install tigera-operator.yaml and custom-resources.yaml (the cidr must be changed to match the --pod-network-cidr passed to kubeadm init)
kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
kubectl get ns
# List pods in all namespaces
# kubectl get pods --all-namespaces
kubectl get pod -o wide -A
# List pods in a specific namespace
kubectl get pods -n kube-system
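The CoreDNS check described above can be scripted instead of read by eye. The sketch below parses pod listings from standard input; coredns_ready is a name invented for this example, and the sample listing is made up to show the state before a Pod network is installed.

```shell
# coredns_ready: reads `kubectl get pods -n kube-system --no-headers` output on
# stdin and succeeds only if at least one coredns pod exists and all are Running.
coredns_ready() {
  awk '$1 ~ /^coredns-/ { seen++; if ($3 != "Running") bad++ }
       END { exit !(seen > 0 && bad == 0) }'
}

# Sample listing (made up for illustration).
sample='coredns-5d78c9869d-abcde   0/1   Pending   0   2m
coredns-5d78c9869d-fghij   0/1   Pending   0   2m
etcd-master1               1/1   Running   0   3m'

if printf '%s\n' "$sample" | coredns_ready; then
  echo "CoreDNS is running; nodes can join"
else
  echo "CoreDNS is not ready yet"
fi
# Against a live cluster:
#   kubectl get pods -n kube-system --no-headers | coredns_ready
```

The function looks only at the STATUS column, matching the check in the text; it does not inspect the READY column.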

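Allow Pods to be scheduled on the Master node (optional)

# Allow Pods to be scheduled on the Master node by removing its taint
# (older releases use the key node-role.kubernetes.io/master, newer ones node-role.kubernetes.io/control-plane)
kubectl taint nodes --all node-role.kubernetes.io/master-
kubectl taint nodes --all node-role.kubernetes.io/control-plane-
# To forbid scheduling again
kubectl taint nodes master1 node-role.kubernetes.io/master=:NoSchedule
# Taint effects:
# NoSchedule: Pods are never scheduled onto the node
# PreferNoSchedule: the scheduler tries to avoid the node
# NoExecute: no new Pods are scheduled, and Pods already on the Node are evicted

Join the other nodes to the Master node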

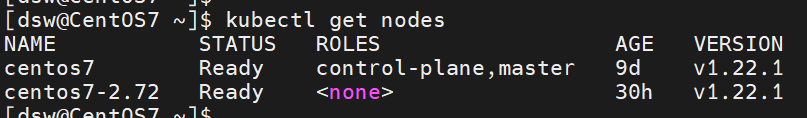

# Join from the other nodes; the token expires
sudo kubeadm join 10.70.19.33:6443 --token tqaitp.3imn92ur339n4olo --discovery-token-ca-cert-hash sha256:fb3da80b6f1dd5ce6f78cb304bc1d42f775fdbbdc80773ff7c59 --cri-socket unix:///var/run/cri-dockerd.sock
# If the token has expired (24 hours by default) or been forgotten
# Print a new token together with the full join command
kubeadm token create --print-join-command
# Create a token that never expires
kubeadm token create --ttl 0 --print-join-command
# On the Master node, watch the pods; wait 3-10 minutes and continue once all of them are Running
watch kubectl get pod -n kube-system -o wide
# Wait until every node's STATUS becomes Ready
kubectl get nodes
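A join command copied from a terminal or a screenshot is easy to truncate. A SHA-256 digest is 64 hexadecimal digits, so the --discovery-token-ca-cert-hash value can be sanity-checked before use. This is a small sketch; valid_ca_hash is a name invented for the example, and it checks only the format, not whether the hash matches the cluster CA.

```shell
# valid_ca_hash VALUE: succeeds when VALUE is "sha256:" plus 64 lowercase hex digits.
valid_ca_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# The hash exactly as it appears in the join example above.
example='sha256:fb3da80b6f1dd5ce6f78cb304bc1d42f775fdbbdc80773ff7c59'

if valid_ca_hash "$example"; then
  echo "hash has the expected length"
else
  echo "hash is not 64 hex digits; copy it again from: kubeadm token create --print-join-command"
fi
```

The value shown in the join example is shorter than 64 digits, so it should be treated as a placeholder and replaced with the one printed by your own Master.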

At this point the K8s cluster is installed; the steps below are optional

4. Kubernetes Dashboard

https://github.com/kubernetes/dashboard/tree/master/docs

Installation

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
# Change type: ClusterIP to type: NodePort
kubectl -n kubernetes-dashboard edit service kubernetes-dashboard
# Look up the IP and port to get the access URL, here https://<master-ip>:31707
kubectl -n kubernetes-dashboard get service kubernetes-dashboard
# NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
# kubernetes-dashboard   NodePort   10.100.124.90   <nodes>       443:31707/TCP   21h
# Adjust permissions: either add a new binding or modify the existing one
# Add a new binding
kubectl create clusterrolebinding kubernetes-dashboard-operator --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard
# Or modify the existing binding: first inspect the ClusterRoleBinding bound to the default ServiceAccount
#kubectl edit ClusterRoleBinding kubernetes-dashboard -n kubernetes-dashboard
# Then edit the role so the ServiceAccount's permissions match the built-in cluster-admin role
#kubectl get ClusterRole cluster-admin -o yaml
#kubectl edit ClusterRole kubernetes-dashboard
# Get a token for the default account; it has an expiry time
kubectl -n kubernetes-dashboard create token kubernetes-dashboard

# Create a long-lived token Secret for the ServiceAccount
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: kubernetes-dashboard-token
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: kubernetes-dashboard
type: kubernetes.io/service-account-token
EOF
# Get the non-expiring token
kubectl -n kubernetes-dashboard get secret kubernetes-dashboard-token -o go-template="{{.data.token | base64decode}}"
# Alternative for clusters older than 1.24, where the ServiceAccount still lists an auto-generated Secret
kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/kubernetes-dashboard -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
# Or describe the Secret to read the token
kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep kubernetes-dashboard-token | awk '{print $1}')
# Keep the kubernetes-dashboard session from expiring: add --token-ttl=0 to the container args
kubectl -n kubernetes-dashboard edit deployment kubernetes-dashboard

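Install monitoring: metrics-server

https://github.com/kubernetes-sigs/metrics-server

Kubernetes Dashboard shows no monitoring data by default. Install metrics-server to collect Pod and Node resource usage (only CPU and memory by default) and display it in Kubernetes Dashboard; for more metrics, integrate Prometheus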

# For test environments: edit the YAML to add --kubelet-insecure-tls (skips verification of the kubelet's serving certificate) and change the image address (or pull the image elsewhere and retag it)
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
# Inspect the Pod; the image download may fail
kubectl get pods -A
kubectl -n kube-system describe pod metrics-server-687dd7b749-vtgtt
# Other images can be substituted, or change the image address in components.yaml to one of:
#registry.aliyuncs.com/google_containers/metrics-server:v0.6.2
#registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2
#docker pull bitnami/metrics-server:0.5.0
#docker pull willdockerhub/metrics-server:v0.5.0
#docker tag willdockerhub/metrics-server:v0.5.0 k8s.gcr.io/metrics-server/metrics-server:v0.5.0
# After installation, check
kubectl top nodes
kubectl top pods -A
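If the manifest was applied unmodified, the --kubelet-insecure-tls argument can be added afterwards with a JSON Patch rather than by editing the YAML by hand. This is a sketch for test environments only; insecure_tls_patch is a helper name invented here, and it assumes the Deployment is named metrics-server in kube-system with the metrics-server container first in the Pod template.

```shell
# insecure_tls_patch: prints a JSON Patch that appends one argument to the
# first container of a Deployment's Pod template.
insecure_tls_patch() {
  printf '%s\n' '[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--kubelet-insecure-tls"}]'
}

insecure_tls_patch
# Against a live cluster:
#   kubectl -n kube-system patch deployment metrics-server --type=json -p "$(insecure_tls_patch)"
```

The trailing "-" in the path is the JSON Patch notation for appending to the end of an array, so existing arguments are left untouched.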

Besides Kubernetes Dashboard, there are other UIs

kubesphere: https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s. If a pod never starts during installation, check whether the etcd monitoring certificates are missing

# The certificates are at the following paths
# --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
# --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
# --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
# Create the Secret that holds the certificates:
kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key
# After creation the kube-etcd-client-certs Secret exists; the certificate paths above can be confirmed from the kube-apiserver flags
ps -ef | grep kube-apiserver

kuboard: https://kuboard.cn

5. Persistence (PV, PVC), using NFS here

https://kubernetes.io/zh/docs/concepts/storage/persistent-volumes

https://cloud.tencent.com/document/product/457/47014

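Install the NFS server (nfs-utils is needed on all nodes, because they have to run mount -t nfs)

sudo yum install -y nfs-utils
# Create /etc/exports (or edit it with vi /etc/exports) with the following content; note that no_root_squash is insecure:
sudo bash -c 'echo "/ifs/ *(rw,sync,no_wdelay,no_root_squash)" > /etc/exports'
# Create the shared directory and set its owner and group
sudo mkdir -p /ifs/kubernetes
sudo chown -R nfsnobody:nfsnobody /ifs/
# Start the NFS service
sudo systemctl enable --now rpcbind
sudo systemctl enable --now nfs-server
# -a export or unexport all directories, -r re-export, -u unexport a directory, -v verbose output
sudo exportfs -arv
# Check that the configuration took effect; the output should include /ifs <world>
sudo exportfs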

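Install the NFS client. This is optional, since K8s connects to the NFS server directly

# Make sure the NFS ports are open on the server, otherwise clients cannot connect. Install the client
sudo yum install -y nfs-utils
# Check which directories the NFS server exports (replace NFS_SERVER_IP with the server's address)
showmount -e NFS_SERVER_IP
# Example output:
# Export list for 172.26.165.243:
# /nfs *
# Mount the shared directory from the NFS server at the local path ~/nfsmount
sudo mkdir -p ~/nfsmount
sudo mount -t nfs NFS_SERVER_IP:/nfs/data ~/nfsmount
# Write a test file
echo "hello nfs server" > ~/nfsmount/test.txt
# On the NFS server, verify that the file was written
cat /nfs/data/test.txt
# Unmount when finished
sudo umount ~/nfsmount/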

Create a PV for testing

https://github.com/kubernetes/examples/blob/master/staging/volumes/nfs/nfs-pv.yaml

PVs are cluster-scoped and have no notion of a namespace (tenant), whereas PVCs are namespaced. To use a PVC in a particular namespace, create it in that namespace

vim nfs-pv.yaml
# File contents:
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 10.74.2.71
    path: "/nfs/data"
# Create the PV
kubectl create -f nfs-pv.yaml

Create a PVC for testing

https://github.com/kubernetes/examples/blob/master/staging/volumes/nfs/nfs-pvc.yaml

vim nfs-pvc.yaml
# File contents:
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs
  namespace: default
spec:
  accessModes:
    - ReadWriteMany # https://kubernetes.io/zh/docs/concepts/storage/persistent-volumes/#access-modes
  storageClassName: "" # Must be set explicitly to the empty string, otherwise the default StorageClass is applied
  resources:
    requests:
      storage: 10Mi
# Create the PVC
kubectl create -f nfs-pvc.yaml
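To confirm that the claim is actually usable, a throwaway Pod can mount it and write a file. The manifest below is a sketch: the Pod name nfs-test, the busybox image and the output path are assumptions chosen for this example, while the claim name nfs and the default namespace come from the PVC above.

```shell
# Write a test Pod manifest that mounts the "nfs" PVC at /data.
pod="${TMPDIR:-/tmp}/nfs-test-pod.yaml"
cat > "$pod" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nfs-test            # hypothetical name
  namespace: default        # must match the PVC's namespace
spec:
  containers:
    - name: shell
      image: busybox        # assumed image; any image with a shell works
      command: ["sh", "-c", "echo hello > /data/test.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: nfs      # the PVC created above
EOF
echo "wrote $pod"
# kubectl get pv,pvc          # both should show STATUS Bound
# kubectl apply -f "$pod"
```

Once the Pod is running, test.txt should appear in the exported directory on the NFS server.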

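6. Install an sc (StorageClass), using NFS

https://kubernetes.io/zh/docs/concepts/storage/storage-classes

Kubernetes has no built-in NFS provisioner, so a plugin is required: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

Operations staff create PVs and developers work with PVCs. A large cluster may contain many PVs, and having operations handle every one by hand is tedious, which is why dynamic provisioning (Dynamic Provisioning) exists

The PVs created above all use static provisioning (Static Provisioning). The key to dynamic provisioning is the StorageClass, which serves as a template for creating PVs

A StorageClass defines the attributes of the PVs it produces, such as the storage type, and creating those PVs requires a storage plugin (provisioner). The end result is that a user creates a PVC that names a StorageClass, and if it matches a StorageClass we have defined, a PV is created and bound to it automatically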

# Create the RBAC authorization first, since creating PVs requires permissions. The namespace defaults to default; for a different namespace, download the file and edit it before applying
kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/nfs-subdir-external-provisioner-4.0.17/deploy/rbac.yaml
# Configure NFS
curl -O https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/nfs-subdir-external-provisioner-4.0.17/deploy/deployment.yaml
vim deployment.yaml
# Only the modified part is shown, with comments
      containers:
        - name: nfs-client-provisioner
          image: willdockerhub/nfs-subdir-external-provisioner:v4.0.2 # (or kubebiz/nfs-subdir-external-provisioner:v4.0.2) no need to change it if the original image can be pulled
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner # Provisioner name; any name works, but later references must match
            - name: NFS_SERVER
              value: 10.74.2.71 # NFS address
            - name: NFS_PATH
              value: /ifs/kubernetes # NFS path
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.74.2.71 # NFS address
            path: /ifs/kubernetes # NFS path
# Then create the directory on the NFS server (if it does not exist); it must match NFS_PATH
sudo mkdir -p /ifs/kubernetes
kubectl apply -f deployment.yaml
# Finally create the storage class; its provisioner value is the provisioner name defined above
kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/nfs-subdir-external-provisioner-4.0.17/deploy/class.yaml
# Check
kubectl get sc
# Make nfs-client the default; listing again then shows (default) next to the name
kubectl patch storageclass nfs-client -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
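With the StorageClass in place, dynamic provisioning can be exercised by creating a PVC that names it; no PV needs to be written by hand. The manifest below is a sketch: the claim name dynamic-claim and the output path are made up for this example, and nfs-client is the class name used in the patch command above.

```shell
# Write a PVC manifest that requests storage from the nfs-client StorageClass.
pvc="${TMPDIR:-/tmp}/dynamic-pvc.yaml"
cat > "$pvc" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-claim            # hypothetical name
  namespace: default
spec:
  storageClassName: nfs-client   # the class created from class.yaml
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Mi
EOF
echo "wrote $pvc"
# kubectl apply -f "$pvc"
# kubectl get pvc dynamic-claim   # should become Bound to an auto-created PV
```

If the claim stays Pending, the provisioner Pod's logs are usually the first place to look.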

7. Ingress

https://kubernetes.io/zh/docs/concepts/services-networking/ingress-controllers

ingress-nginx supported versions table: https://github.com/kubernetes/ingress-nginx/blob/main/README.md#supported-versions-table

https://kubernetes.github.io/ingress-nginx/deploy/#quick-start

Its purpose is to reach Services by domain name: the ingress controller routes requests according to the Ingress resources. The client's hosts file must map the domain to the IP of any node in the cluster

# Install an ingress controller, here ingress-nginx
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml
# If the images cannot be pulled, edit the manifest to use another source (https://github.com/anjia0532/gcr.io_mirror); each original image line below is followed by its replacement
# image: registry.k8s.io/ingress-nginx/controller:v1.6.4@sha256:15be4666c53052484dd2992efacf2f50ea77a78ae8aa21ccd91af6baaa7ea22f
# image: dyrnq/ingress-nginx-controller:v1.6.4@sha256:15be4666c53052484dd2992efacf2f50ea77a78ae8aa21ccd91af6baaa7ea22f
# image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f
# image: registry.aliyuncs.com/google_containers/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f
# Check the ingress controller status
kubectl get pods --namespace=ingress-nginx
# NAME                                        READY   STATUS      RESTARTS   AGE
# ingress-nginx-admission-create--1-jpb4z     0/1     Completed   0          24m
# ingress-nginx-admission-patch--1-jhzng      0/1     Completed   1          24m
# ingress-nginx-controller-5c9fd6c974-sbkmw   1/1     Running     0          24m
# Check the ingress controller address, needed later to access services; here HTTP is on 58013 and HTTPS on 63536
kubectl get svc -n ingress-nginx
# NAME                                 TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
# ingress-nginx-controller             LoadBalancer   10.96.213.91   <pending>     80:58013/TCP,443:63536/TCP   27m
# ingress-nginx-controller-admission   ClusterIP      10.96.56.233   <none>        443/TCP                      27m

Example deployment: kubernetes-dashboard-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: HTTPS
spec:
  ingressClassName: nginx
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443
      host: dashboard.kubernetes.com
  tls:
    - hosts:
        - dashboard.kubernetes.com
      secretName: kubernetes-dashboard-certs

How to access

Suppose an Ingress resource has been deployed with host jenkins.example.com. To access it, first add the host to the local hosts file, then use http or https with the corresponding port

# Find the ingress-nginx address
kubectl get pods -n ingress-nginx -o wide
# Add a hosts entry of the form
# xxx.xxx.xxx.xxx xxx.com
# View the nginx configuration file
kubectl get pod -A | grep 'ingre'
kubectl exec -it -n ingress-nginx ingress-nginx-controller-xxxxx -- bash
cat /etc/nginx/nginx.conf
# List Ingress resources
kubectl get ingress -A -o wide
# NAME      CLASS   HOSTS                 ADDRESS   PORTS     AGE
# jenkins   nginx   jenkins.example.com             80, 443   163m
# Show the details of an Ingress resource
kubectl describe ingress jenkins

Alternatively, test directly with curl: curl -vk -D- https://10.74.2.71:63536 -H 'Host: jenkins.example.com'
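Another way to test without editing the hosts file is curl's --resolve option, which pins a host name and port to an IP address for a single invocation. The sketch below only prints the command it would run; resolve_arg is a helper name invented for this example, and the host, port and IP are the values used in the text above.

```shell
# resolve_arg HOST PORT IP: prints the HOST:PORT:IP triple that curl --resolve expects.
resolve_arg() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

host=jenkins.example.com
port=63536        # HTTPS NodePort from the service listing
ip=10.74.2.71     # any cluster node

# Print the command for review instead of executing it.
echo curl -vk --resolve "$(resolve_arg "$host" "$port" "$ip")" "https://$host:$port/"
```

With --resolve the URL keeps the real host name, so curl also sends it as the TLS SNI value, which the Host-header form against a bare IP does not do.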

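Forwarding TCP/UDP

By default only HTTP/HTTPS can be forwarded. Forwarding TCP/UDP needs extra configuration: https://kubernetes.github.io/ingress-nginx/user-guide/exposing-tcp-udp-services. The example below exposes a Service on TCP port 5000 (this is independent of Ingress resources, meaning the Service can be reached directly at IP:5000)

# Add two arguments so the controller reads the tcp-services and udp-services ConfigMaps
kubectl edit deployment ingress-nginx-controller -n ingress-nginx
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
# Expose port 5000 on the Service
kubectl edit service ingress-nginx-controller -n ingress-nginx
  ports:
    - name: tcp-5000
      nodePort: 5000 # outside the default NodePort range 30000-32767; requires a matching --service-node-port-range on the API server
      port: 5000
      protocol: TCP
      targetPort: 5000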

Next, add the ConfigMaps. Each entry in data has the form: "<external port>": "<namespace/service name>:<service port>:[PROXY]:[PROXY]"

apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  "5000": "devops/docker-registry-service:5000"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: udp-services
  namespace: ingress-nginx
data:
  53: "kube-system/kube-dns:53"
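The value format used in these ConfigMaps can be unpacked with plain shell parameter expansion, which is handy for checking an entry before applying it. This is a sketch; parse_target is a name invented for this example and it ignores the optional PROXY fields.

```shell
# parse_target VALUE: splits "<namespace>/<service>:<port>[:PROXY][:PROXY]"
# and prints namespace, service and port separated by spaces.
parse_target() {
  ns=${1%%/*}        # text before the first "/"
  rest=${1#*/}       # text after the first "/"
  svc=${rest%%:*}    # text before the first ":"
  rest=${rest#*:}    # text after the first ":"
  port=${rest%%:*}   # port, with any trailing PROXY fields dropped
  printf '%s %s %s\n' "$ns" "$svc" "$port"
}

parse_target "devops/docker-registry-service:5000"
parse_target "kube-system/kube-dns:53"
```

The namespace and service printed here must refer to an existing Service, otherwise the controller has nothing to forward the port to.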

Deploy tcp-udp.yaml

kubectl apply -f tcp-udp.yaml
# Check the ports
kubectl get svc -n ingress-nginx

8. Deploy applications

# List resource types
kubectl api-resources
# List all resources in a namespace (replace xxx with the namespace)
kubectl api-resources --namespaced --verbs=list -o name | xargs -n 1 kubectl get --ignore-not-found --show-kind -n xxx

https://kubernetes.io/zh/docs/reference/kubectl/overview/#资源类型

https://www.cnblogs.com/jhxxb/p/15298810.html

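9. Uninstall

# Reset the node; if kubeadm reports multiple CRI endpoints, add --cri-socket unix:///var/run/cri-dockerd.sock
sudo kubeadm reset
# Remove the CNI configuration and the kubectl config
sudo rm -rf /etc/cni/net.d/
sudo rm -rf ~/.kube/config
# Stop all containers, then remove unused containers, images, networks and volumes
docker container stop $(docker container ls -a -q)
docker system prune --all --force --volumes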