PyTorch-Multilayer

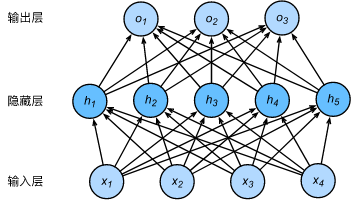

- Multilayer perceptron

- Code:

import torch
import numpy as np
import torchvision  # torch's vision package
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from torch.utils.data.dataloader import DataLoader
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt
import PIL.Image as Image
import os
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

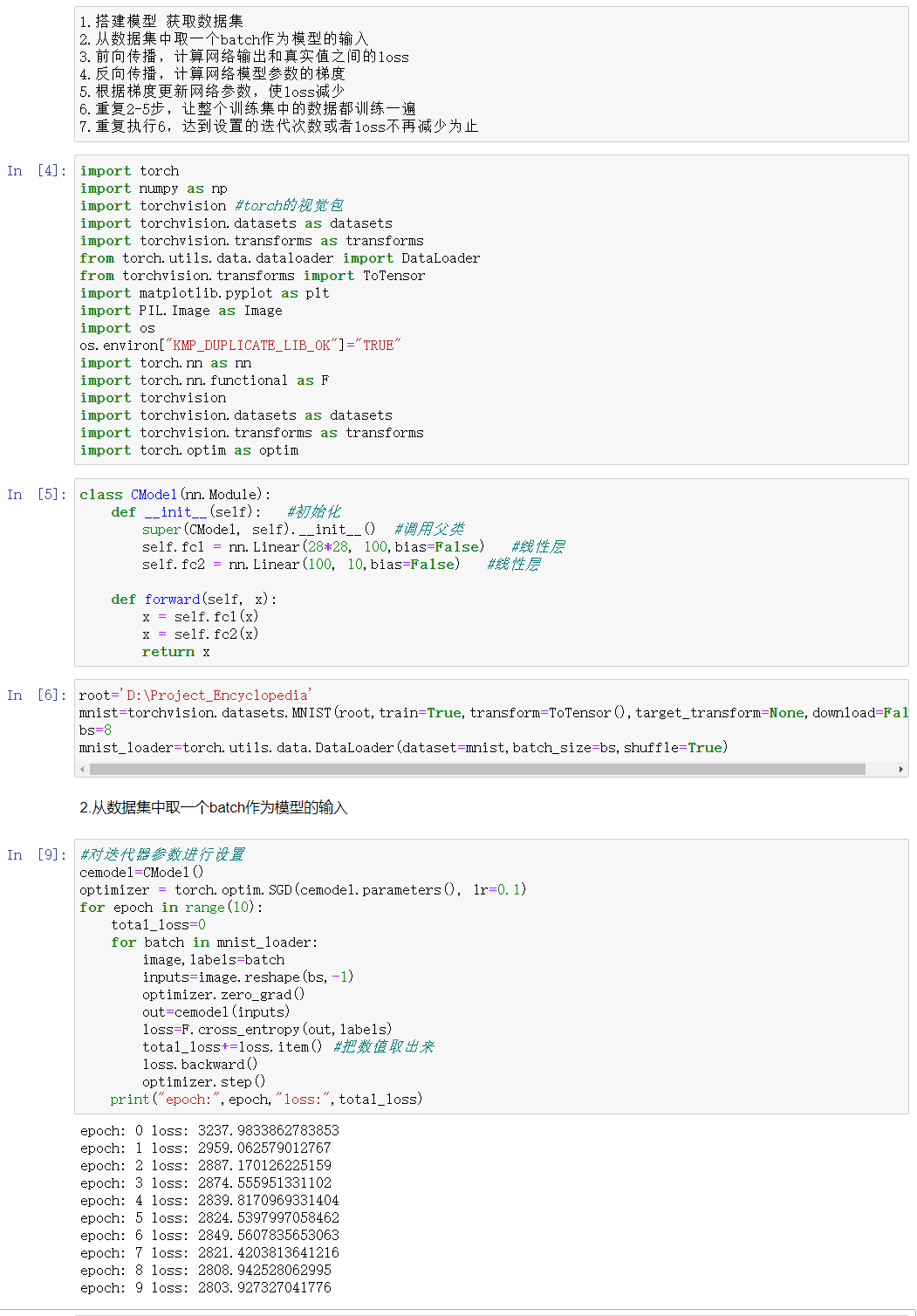

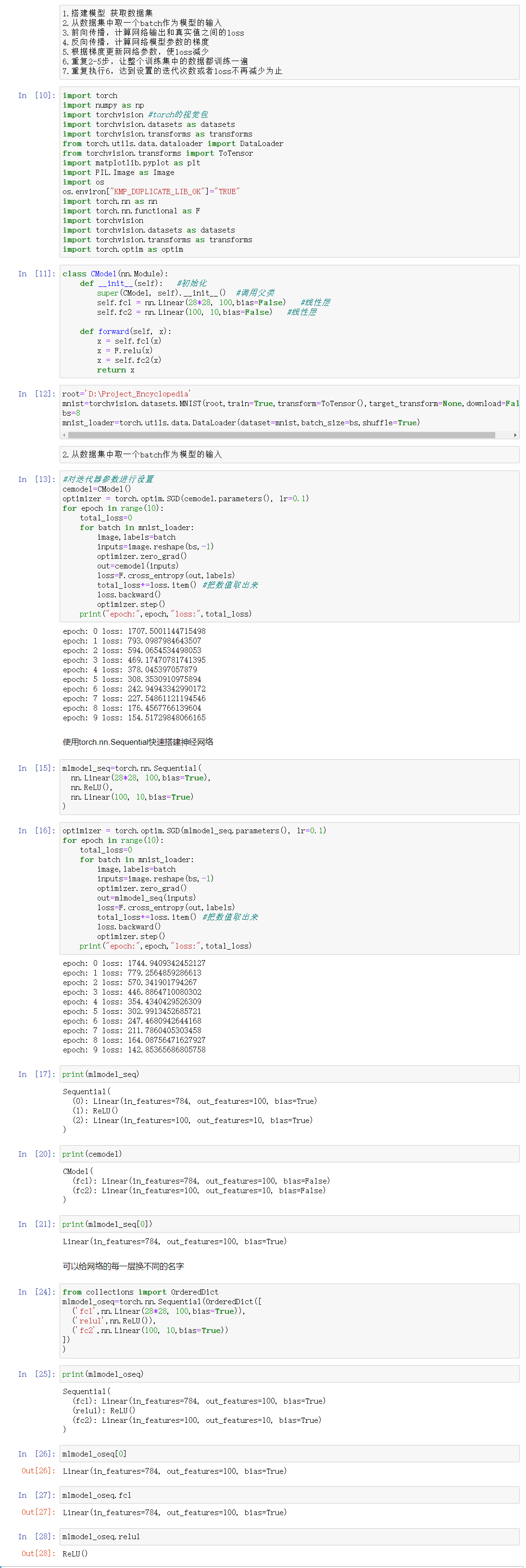

class CModel(nn.Module):
    def __init__(self):  # initialization
        super(CModel, self).__init__()  # call the parent-class constructor
        self.fc1 = nn.Linear(28*28, 100, bias=False)  # linear layer
        self.fc2 = nn.Linear(100, 10, bias=False)  # linear layer

    def forward(self, x):
        x = self.fc1(x)
        x = self.fc2(x)
        return x

root = r'D:\Project_Encyclopedia'  # raw string so the backslash is kept literally
mnist = torchvision.datasets.MNIST(root, train=True, transform=ToTensor(), target_transform=None, download=False)
bs = 8
# configure the parameters of the data iterator
mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=bs, shuffle=True)

cemodel = CModel()
optimizer = torch.optim.SGD(cemodel.parameters(), lr=0.1)

for epoch in range(10):
    total_loss = 0
    for batch in mnist_loader:
        image, labels = batch
        # flatten each 28x28 image; using the actual batch size also handles a smaller last batch
        inputs = image.reshape(image.size(0), -1)
        optimizer.zero_grad()
        out = cemodel(inputs)
        loss = F.cross_entropy(out, labels)
        total_loss += loss.item()  # extract the plain Python number
        loss.backward()
        optimizer.step()
    print("epoch:", epoch, "loss:", total_loss)

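- The loss barely improves, because all we did was add one more linear layer. Two linear layers stacked without an activation still compute a single linear map, so the extra layer adds no expressive power. The network needs a nonlinear layer.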
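To make the point concrete, here is a minimal sketch that checks it numerically. It assumes the same layer shapes as `CModel` and uses a random batch in place of real MNIST images; the variable names are only illustrative.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same shapes as CModel: two bias-free linear layers with no activation between them.
fc1 = nn.Linear(28 * 28, 100, bias=False)
fc2 = nn.Linear(100, 10, bias=False)

x = torch.randn(8, 28 * 28)  # random batch standing in for flattened images

with torch.no_grad():
    # Output of the two stacked layers.
    stacked = fc2(fc1(x))

    # nn.Linear computes x @ W.T, so the stack equals x @ (W2 @ W1).T
    merged_weight = fc2.weight @ fc1.weight  # shape (10, 784)
    single = x @ merged_weight.T

max_diff = (stacked - single).abs().max().item()
print("merged weight shape:", tuple(merged_weight.shape))
print("max difference:", max_diff)
```

The difference should be at the level of floating-point rounding, which is why the two-layer model behaves like a single linear classifier.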

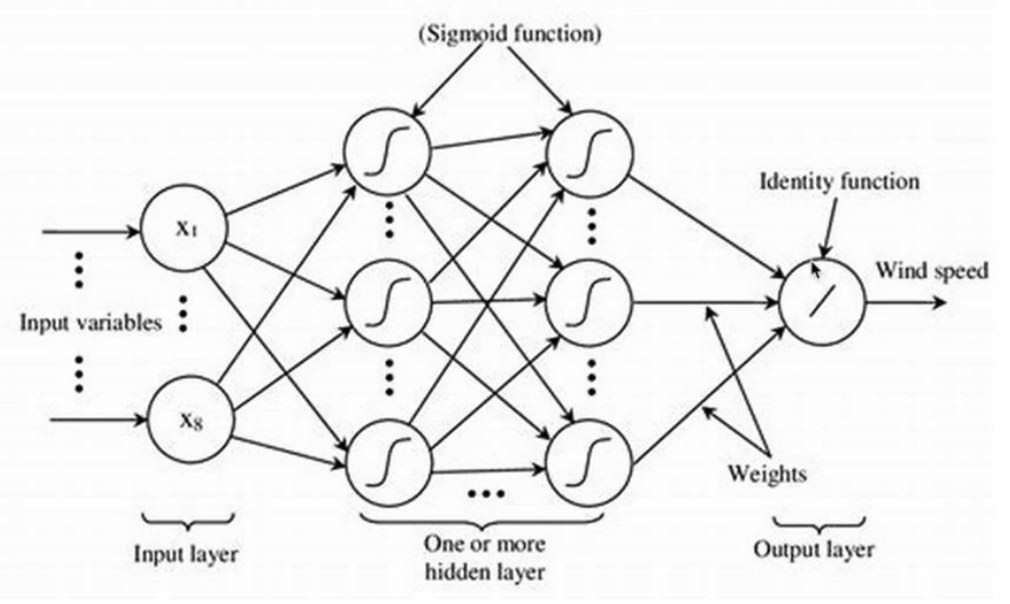

So we add the relu() function to the network and train again. Other activation functions are available as well, for example:

- sigmoid()

- tanh()

- leaky_relu()
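As a quick comparison, the sketch below applies these activations to a small hand-picked tensor. The input values and the `negative_slope=0.01` setting are choices made for illustration, not something taken from the training code.

```python
import torch
import torch.nn.functional as F

# A few sample inputs, negative and positive, chosen only for illustration.
x = torch.tensor([-2.0, -0.5, 0.0, 0.5, 2.0])

relu_out = F.relu(x)                               # zeroes out negative values
sigmoid_out = torch.sigmoid(x)                     # squashes values into (0, 1)
tanh_out = torch.tanh(x)                           # squashes values into (-1, 1)
leaky_out = F.leaky_relu(x, negative_slope=0.01)   # keeps a small slope for negatives

print("relu:      ", relu_out)
print("sigmoid:   ", sigmoid_out)
print("tanh:      ", tanh_out)
print("leaky_relu:", leaky_out)
```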

Code:

import torch
import numpy as np
import torchvision  # torch's vision package
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from torch.utils.data.dataloader import DataLoader
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt
import PIL.Image as Image
import os
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

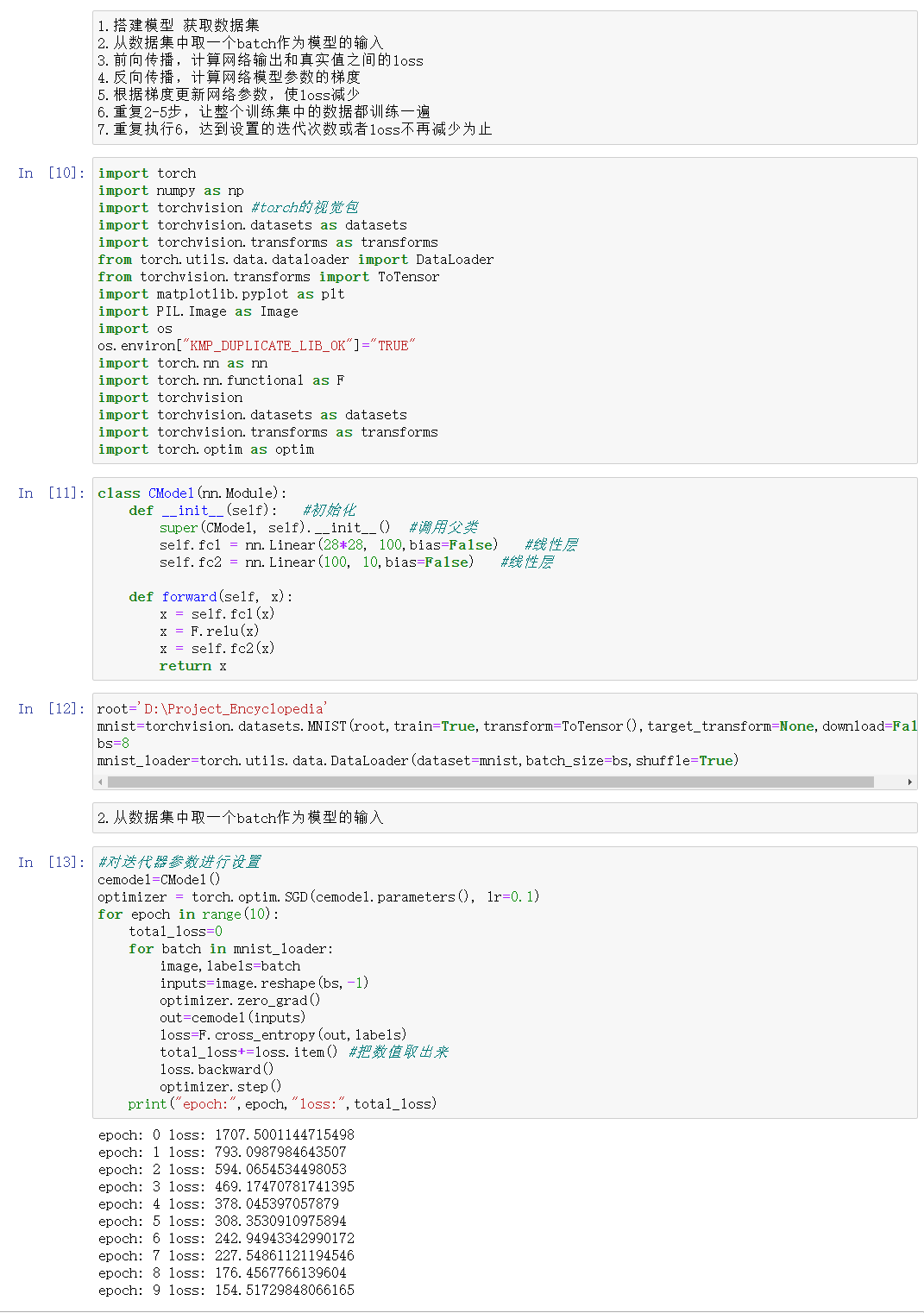

class CModel(nn.Module):
    def __init__(self):  # initialization
        super(CModel, self).__init__()  # call the parent-class constructor
        self.fc1 = nn.Linear(28*28, 100, bias=False)  # linear layer
        self.fc2 = nn.Linear(100, 10, bias=False)  # linear layer

    def forward(self, x):
        x = self.fc1(x)
        x = F.relu(x)  # nonlinearity between the two linear layers
        x = self.fc2(x)
        return x

root = r'D:\Project_Encyclopedia'  # raw string so the backslash is kept literally
mnist = torchvision.datasets.MNIST(root, train=True, transform=ToTensor(), target_transform=None, download=False)
bs = 8
# configure the parameters of the data iterator
mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=bs, shuffle=True)

cemodel = CModel()
optimizer = torch.optim.SGD(cemodel.parameters(), lr=0.1)

for epoch in range(10):
    total_loss = 0
    for batch in mnist_loader:
        image, labels = batch
        # flatten each 28x28 image; using the actual batch size also handles a smaller last batch
        inputs = image.reshape(image.size(0), -1)
        optimizer.zero_grad()
        out = cemodel(inputs)
        loss = F.cross_entropy(out, labels)
        total_loss += loss.item()  # extract the plain Python number
        loss.backward()
        optimizer.step()
    print("epoch:", epoch, "loss:", total_loss)

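- You can also use torch.nn.Sequential to build a neural network quickly

https://ptorch.com/news/57.html

Differences between torch.nn.Sequential and torch.nn.Module, and how to choose:

- With torch.nn.Module, you can change the forward pass to suit your needs, as in an RNN.

- If you want to build a model quickly, or the forward pass needs no extra steps, use torch.nn.Sequential directly.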
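The sketch below shows the kind of forward pass that calls for a custom `torch.nn.Module`. The class name `SkipMLP` and its skip connection are hypothetical, made up for illustration; they are not part of the models trained in this post.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SkipMLP(nn.Module):  # hypothetical example class, not from the original post
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 100)
        self.fc2 = nn.Linear(100, 100)
        self.fc3 = nn.Linear(100, 10)

    def forward(self, x):
        h = F.relu(self.fc1(x))
        # Skip connection: h is used both as input to fc2 and added to its output.
        # A plain chain of layers only passes each output to the next layer,
        # so this branch is written out in a custom forward().
        h = h + F.relu(self.fc2(h))
        return self.fc3(h)


model = SkipMLP()
out = model(torch.randn(8, 28 * 28))  # random batch standing in for flattened images
print(out.shape)
```

When the forward pass is just one layer after another, as in the MNIST model here, `torch.nn.Sequential` is enough.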

import torch
import numpy as np
import torchvision  # torch's vision package
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from torch.utils.data.dataloader import DataLoader
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt
import PIL.Image as Image
import os
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

root = r'D:\Project_Encyclopedia'  # raw string so the backslash is kept literally
mnist = torchvision.datasets.MNIST(root, train=True, transform=ToTensor(), target_transform=None, download=False)
bs = 8
mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=bs, shuffle=True)

mlmodel_seq = torch.nn.Sequential(
    nn.Linear(28*28, 100, bias=True),
    nn.ReLU(),
    nn.Linear(100, 10, bias=True),
)
optimizer = torch.optim.SGD(mlmodel_seq.parameters(), lr=0.1)

for epoch in range(10):
    total_loss = 0
    for batch in mnist_loader:
        image, labels = batch
        # flatten each 28x28 image; using the actual batch size also handles a smaller last batch
        inputs = image.reshape(image.size(0), -1)
        optimizer.zero_grad()
        out = mlmodel_seq(inputs)
        loss = F.cross_entropy(out, labels)
        total_loss += loss.item()  # extract the plain Python number
        loss.backward()
        optimizer.step()
    print("epoch:", epoch, "loss:", total_loss)

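print(mlmodel_seq)
print(cemodel)  # the CModel instance from the previous example (same session)
print(mlmodel_seq[0])  # layers of a Sequential can be accessed by index

from collections import OrderedDict

# Passing an OrderedDict gives each layer a name.
mlmodel_oseq = torch.nn.Sequential(OrderedDict([
    ('fc1', nn.Linear(28*28, 100, bias=True)),
    ('relu1', nn.ReLU()),
    ('fc2', nn.Linear(100, 10, bias=True)),
]))
print(mlmodel_oseq)
print(mlmodel_oseq[0])     # access by index
print(mlmodel_oseq.fc1)    # access by name
print(mlmodel_oseq.relu1)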
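As a small check of what the names change, the sketch below rebuilds the same named model (here simply called `model`, an illustrative name) and confirms that index access and name access return the same layer object, and that the parameter names carry the layer names.

```python
from collections import OrderedDict

import torch.nn as nn

# Same layout as mlmodel_oseq above, rebuilt so this snippet runs on its own.
model = nn.Sequential(OrderedDict([
    ('fc1', nn.Linear(28 * 28, 100, bias=True)),
    ('relu1', nn.ReLU()),
    ('fc2', nn.Linear(100, 10, bias=True)),
]))

# Index access and attribute access point to the very same module.
same_layer = model[0] is model.fc1

# Parameter names are prefixed with the layer names from the OrderedDict.
param_names = [name for name, _ in model.named_parameters()]

print(same_layer)
print(param_names)
```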

Please credit the source when reposting. Discussion and feedback are welcome!