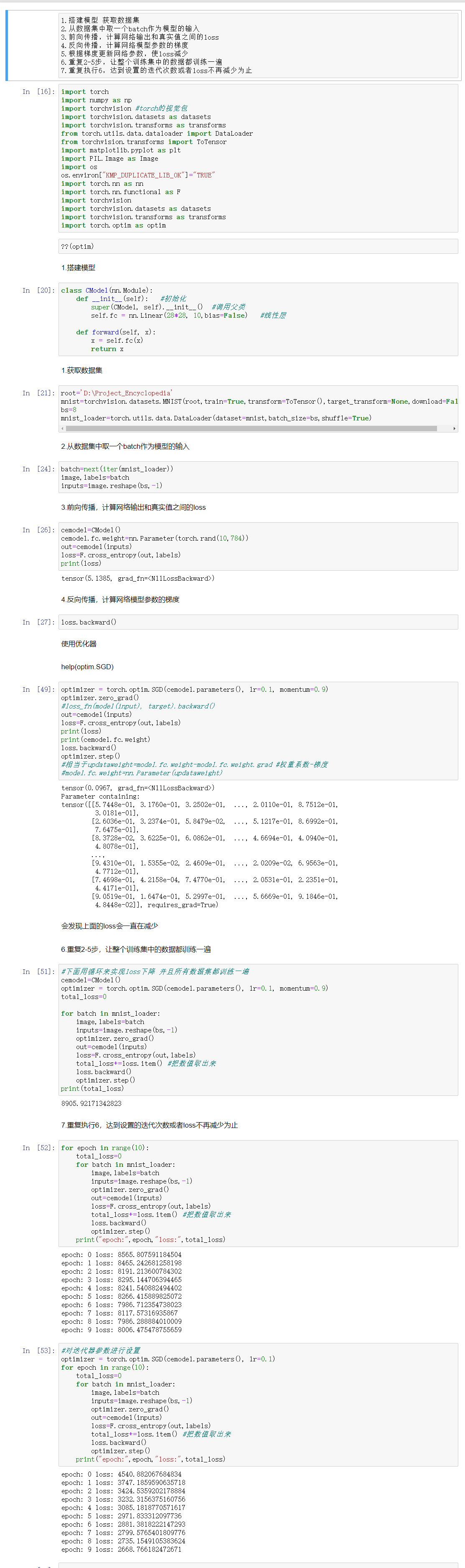

PyTorch-Training

- Training on the MNIST dataset

Code:

```python
import torch
import numpy as np
import torchvision  # torch's vision package
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt
import PIL.Image as Image
import os

# Work around the Intel OpenMP "duplicate library" crash (OMP: Error #15)
# that shows up on some Windows/conda installs.
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class CModel(nn.Module):
    def __init__(self):  # initialization
        super(CModel, self).__init__()  # call the parent class constructor
        self.fc = nn.Linear(28 * 28, 10, bias=False)  # linear layer: 784 pixels -> 10 class scores

    def forward(self, x):
        x = self.fc(x)
        return x
```
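`CModel` is a single bias-free linear layer, so its forward pass is just the flattened images multiplied by the transposed weight matrix. The standalone sketch below checks that on a random batch; the random inputs are a stand-in for real MNIST images.

```python
import torch
import torch.nn as nn


class CModel(nn.Module):
    """Same architecture as above: one bias-free linear layer, 784 -> 10."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(28 * 28, 10, bias=False)

    def forward(self, x):
        return self.fc(x)


torch.manual_seed(0)
model = CModel()
x = torch.rand(8, 28 * 28)          # stand-in for 8 flattened 28x28 images
out = model(x)

# nn.Linear stores its weight as (out_features, in_features) = (10, 784)
# and computes x @ W.T (plus a bias, which is disabled here).
manual = x @ model.fc.weight.T

print(model.fc.weight.shape)        # torch.Size([10, 784])
print(out.shape)                    # torch.Size([8, 10])
print(torch.allclose(out, manual, atol=1e-6))  # True
```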

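```python
# Folder that contains the MNIST data. A raw string keeps "\P" from being
# read as an (invalid) escape sequence.
root = r'D:\Project_Encyclopedia'

# download=False assumes MNIST is already under `root`; use download=True on the first run.
mnist = torchvision.datasets.MNIST(root, train=True, transform=ToTensor(),
                                   target_transform=None, download=False)

bs = 8  # batch size
mnist_loader = DataLoader(dataset=mnist, batch_size=bs, shuffle=True)

# Take a single batch to try things out
batch = next(iter(mnist_loader))
image, labels = batch
# image: (bs, 1, 28, 28) -> inputs: (bs, 784), one flattened row per image
inputs = image.reshape(image.size(0), -1)
```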
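With `ToTensor()`, each MNIST image comes out of the loader as a float tensor of shape `(1, 28, 28)` with values scaled to [0, 1], and the labels as a 1-D tensor of integer class indices. The sketch below mimics one batch with random tensors (an assumption, so it runs without the dataset) and compares a few equivalent ways of flattening it. Using the actual batch size `image.size(0)` rather than the fixed `bs` keeps the reshape correct even when a final batch is smaller.

```python
import torch

bs = 8
# Stand-ins with the same shapes/dtypes as one MNIST batch from the DataLoader
image = torch.rand(bs, 1, 28, 28)           # float32 in [0, 1)
labels = torch.randint(0, 10, (bs,))        # int64 class indices 0..9

a = image.reshape(image.size(0), -1)        # (bs, 784)
b = image.view(-1, 28 * 28)                 # same result for a contiguous tensor
c = torch.flatten(image, start_dim=1)       # keeps the batch dimension

print(a.shape)                              # torch.Size([8, 784])
print(torch.equal(a, b), torch.equal(a, c)) # True True

# A smaller final batch (e.g. 5 images) still flattens correctly with size(0)
last = torch.rand(5, 1, 28, 28)
print(last.reshape(last.size(0), -1).shape)  # torch.Size([5, 784])
```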

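```python
cemodel = CModel()
# Replace the default initialization with uniform random weights in [0, 1)
cemodel.fc.weight = nn.Parameter(torch.rand(10, 784))

out = cemodel(inputs)                # (bs, 10) unnormalized class scores (logits)
loss = F.cross_entropy(out, labels)
print(loss)
```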
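`F.cross_entropy` takes raw scores (logits) of shape `(N, C)` and integer labels of shape `(N,)`; it applies `log_softmax` followed by the negative log-likelihood loss and, by default, averages over the batch. The sketch below reproduces that by hand on random logits (stand-ins for `out`) to show what the printed number means. As a reference point, a model that gives all 10 classes the same score has a loss of log(10) ≈ 2.30.

```python
import math

import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 10)            # stand-in for out = cemodel(inputs)
labels = torch.randint(0, 10, (8,))    # stand-in for the MNIST labels

builtin = F.cross_entropy(logits, labels)

# Manual version: -log(softmax probability of the correct class), averaged
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(8), labels].mean()

# Same thing via the two-step functional API
two_step = F.nll_loss(log_probs, labels)

print(torch.allclose(builtin, manual), torch.allclose(builtin, two_step))  # True True

# Equal scores for every class -> every class has probability 1/10
uniform = F.cross_entropy(torch.zeros(8, 10), labels)
print(uniform.item(), math.log(10))    # both about 2.3026
```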

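```python
loss.backward()  # compute the gradient of the loss w.r.t. cemodel.fc.weight

optimizer = torch.optim.SGD(cemodel.parameters(), lr=0.1, momentum=0.9)
optimizer.zero_grad()  # clear the gradient left by the backward() call above

# Usual pattern: loss_fn(model(input), target).backward()
out = cemodel(inputs)
loss = F.cross_entropy(out, labels)
print(loss)
print(cemodel.fc.weight)

loss.backward()
optimizer.step()

# For this first step, this is equivalent to (weight - learning rate * gradient):
# updated_weight = cemodel.fc.weight - 0.1 * cemodel.fc.weight.grad
# cemodel.fc.weight = nn.Parameter(updated_weight)
```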
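The equivalence in the comment above can be checked directly. For `torch.optim.SGD` with momentum, the momentum buffer is initialized to the gradient on the first step, so the first update is exactly `w - lr * grad`. The sketch below compares `optimizer.step()` with that manual update on a small random problem (the random data is a stand-in for an MNIST batch).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
lr = 0.1
fc = nn.Linear(784, 10, bias=False)
x = torch.rand(8, 784)
y = torch.randint(0, 10, (8,))

optimizer = torch.optim.SGD(fc.parameters(), lr=lr, momentum=0.9)
optimizer.zero_grad()
loss = F.cross_entropy(fc(x), y)
loss.backward()

# Manual update from the same gradient, computed before the optimizer touches the weight
expected = fc.weight.detach() - lr * fc.weight.grad

optimizer.step()
print(torch.allclose(fc.weight.detach(), expected, atol=1e-7))  # True
```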

```python
# Below, a loop drives the loss down while training on the whole dataset once
cemodel = CModel()
optimizer = torch.optim.SGD(cemodel.parameters(), lr=0.1, momentum=0.9)

total_loss = 0
for batch in mnist_loader:
    image, labels = batch
    inputs = image.reshape(image.size(0), -1)

    optimizer.zero_grad()
    out = cemodel(inputs)
    loss = F.cross_entropy(out, labels)
    total_loss += loss.item()  # take the Python number out of the tensor
    loss.backward()
    optimizer.step()

print(total_loss)  # sum of the per-batch losses over one pass (epoch)
```
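`loss.item()` turns the one-element loss tensor into a plain Python float; accumulating the tensor itself (`total_loss += loss`) would keep every batch's autograd graph alive. Note also that `total_loss` is a sum over batches, so its size depends on the batch size; dividing by `len(mnist_loader)` gives a per-batch average that compares more easily across settings. The sketch below shows both points on a small random dataset wrapped in a `TensorDataset` (an assumption standing in for MNIST).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
# 64 random flattened "images" with random labels, standing in for MNIST
data = TensorDataset(torch.rand(64, 784), torch.randint(0, 10, (64,)))
loader = DataLoader(data, batch_size=8, shuffle=True)

model = nn.Linear(784, 10, bias=False)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

total_loss = 0.0
for inputs, labels in loader:
    optimizer.zero_grad()
    loss = F.cross_entropy(model(inputs), labels)
    total_loss += loss.item()        # plain float, no autograd graph kept
    loss.backward()
    optimizer.step()

avg_loss = total_loss / len(loader)  # len(loader) = number of batches = 64 / 8
print(type(loss.item()).__name__, len(loader))  # float 8
print(loss.requires_grad)                       # True: the tensor is still tied to the graph
print(total_loss, avg_loss)
```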

```python
# Keep training the same model for 10 epochs
for epoch in range(10):
    total_loss = 0
    for batch in mnist_loader:
        image, labels = batch
        inputs = image.reshape(image.size(0), -1)

        optimizer.zero_grad()
        out = cemodel(inputs)
        loss = F.cross_entropy(out, labels)
        total_loss += loss.item()  # take the Python number out of the tensor
        loss.backward()
        optimizer.step()
    print("epoch:", epoch, "loss:", total_loss)
```
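The loop above reports only the training loss. A common next step, not part of the original post, is to measure accuracy on the held-out split (with torchvision, `torchvision.datasets.MNIST(root, train=False, transform=ToTensor())`). The helper `evaluate` below is a hypothetical sketch: it disables gradient tracking with `torch.no_grad()`, takes the `argmax` of the 10 scores as the predicted digit, and counts matches. So that it runs without the dataset, the check uses a tiny synthetic loader whose correct answer is known in advance.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader):
    """Hypothetical helper: fraction of samples whose argmax score matches the label."""
    model.eval()
    correct, total = 0, 0
    with torch.no_grad():                      # no gradients needed for evaluation
        for images, labels in loader:
            inputs = images.reshape(images.size(0), -1)
            preds = model(inputs).argmax(dim=1)
            correct += (preds == labels).sum().item()
            total += labels.size(0)
    return correct / total


# Synthetic check: each label is encoded as a single "on" pixel among the first 10,
# and the weights copy those 10 pixels straight into the 10 class scores.
labels = torch.arange(10).repeat(3)            # 30 samples, digits 0..9
images = torch.zeros(30, 1, 28, 28)
images.view(30, -1)[torch.arange(30), labels] = 1.0

model = nn.Linear(784, 10, bias=False)
with torch.no_grad():
    model.weight.zero_()
    model.weight[:, :10] = torch.eye(10)

loader = DataLoader(TensorDataset(images, labels), batch_size=8)
print(evaluate(model, loader))  # 1.0
```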

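```python
# Change the optimizer settings: plain SGD without momentum.
# Note: cemodel is not re-created, so training continues from the weights learned above.
optimizer = torch.optim.SGD(cemodel.parameters(), lr=0.1)

for epoch in range(10):
    total_loss = 0
    for batch in mnist_loader:
        image, labels = batch
        inputs = image.reshape(image.size(0), -1)

        optimizer.zero_grad()
        out = cemodel(inputs)
        loss = F.cross_entropy(out, labels)
        total_loss += loss.item()  # take the Python number out of the tensor
        loss.backward()
        optimizer.step()
    print("epoch:", epoch, "loss:", total_loss)
```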
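The last block switches to plain SGD (no `momentum`). The difference appears from the second step on: with momentum, PyTorch keeps a velocity buffer `buf = momentum * buf + grad` and updates `w -= lr * buf`, so gradients that keep pointing the same way add up to larger steps. The toy example below uses the one-parameter loss `w**2 / 2` (gradient `w`), an assumption chosen for easy arithmetic, and runs two steps of each optimizer from `w = 1`.

```python
import torch


def two_steps(momentum):
    """Run two SGD steps on loss = w**2 / 2 (gradient = w), starting from w = 1."""
    w = torch.tensor(1.0, requires_grad=True)
    optimizer = torch.optim.SGD([w], lr=0.1, momentum=momentum)
    for _ in range(2):
        optimizer.zero_grad()
        loss = w ** 2 / 2
        loss.backward()
        optimizer.step()
    return w.item()


# Plain SGD:     1 -> 1 - 0.1*1 = 0.9 -> 0.9 - 0.1*0.9 = 0.81
# Momentum 0.9:  1 -> 0.9 (buf = 1) -> buf = 0.9*1 + 0.9 = 1.8 -> 0.9 - 0.18 = 0.72
print(two_steps(momentum=0.0))  # approximately 0.81
print(two_steps(momentum=0.9))  # approximately 0.72
```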

Please credit the source when reposting. Discussion and feedback are welcome!