PyTorch-Loss Function

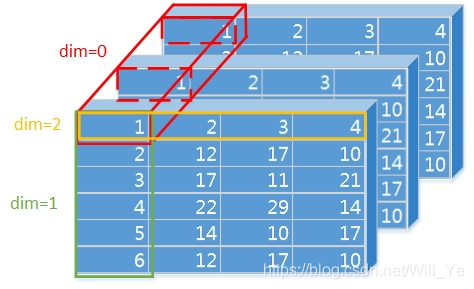

- How to choose the value of the `dim` argument of softmax

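- Rule of thumb: `dim=k` means the index along dimension k is the one that varies while all other indices stay fixed, and the elements in each such group are normalized together so that they sum to 1. With `dim=0`, a 2-D tensor groups [0,0], [1,0], [2,0] (each column), and a 3-D tensor groups [0,0,0], [1,0,0], [2,0,0]. With `dim=1`, a 2-D tensor groups [0,0], [0,1], [0,2] (each row).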
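The rule can be checked on a small tensor. The sketch below uses an arbitrary 2×3 tensor (values chosen only for illustration) and verifies that `dim=0` makes every column sum to 1, while `dim=1` makes every row sum to 1.

```python
import torch
import torch.nn.functional as F

# Arbitrary 2x3 scores, chosen only for illustration
x = torch.tensor([[1.0, 2.0, 3.0],
                  [1.0, 0.0, -1.0]])

# dim=0: the first index varies, so [0,j] and [1,j] are normalized together,
# and each column sums to 1 (up to float rounding)
p0 = F.softmax(x, dim=0)
print(p0.sum(dim=0))

# dim=1: the second index varies, so [i,0], [i,1], [i,2] are normalized together,
# and each row sums to 1 (up to float rounding)
p1 = F.softmax(x, dim=1)
print(p1.sum(dim=1))
```

For a batch of shape (batch, classes), as in the model below, `dim=1` is the choice that turns each sample's scores into a probability distribution.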

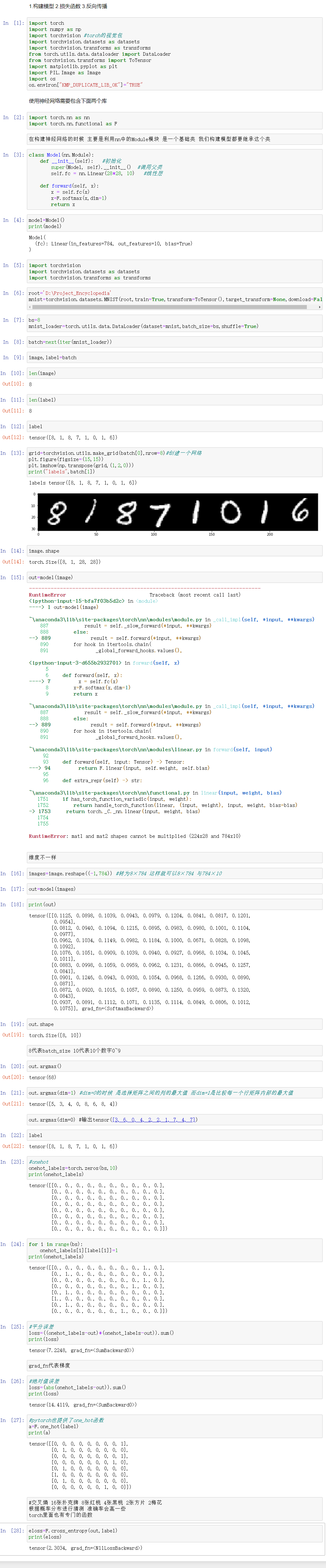

Code:

import os
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"  # workaround for "OMP: Error #15" (duplicate OpenMP runtime) on some Windows/Anaconda setups

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision  # torch's computer-vision package
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from torch.utils.data.dataloader import DataLoader
from torchvision.transforms import ToTensor

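class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()  # call the parent nn.Module constructor
        self.fc = nn.Linear(28 * 28, 10)  # linear layer: 784 pixel inputs -> 10 class scores

    def forward(self, x):
        x = self.fc(x)
        x = F.softmax(x, dim=1)  # normalize each row (one sample) into class probabilities
        return x

model = Model()
print(model)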


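root = r'D:\Project_Encyclopedia'  # raw string, so the backslash is not treated as an escape sequence

mnist = torchvision.datasets.MNIST(root, train=True, transform=ToTensor(), target_transform=None, download=False)  # set download=True if MNIST is not already under root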

bs = 8  # batch size
mnist_loader = torch.utils.data.DataLoader(dataset=mnist, batch_size=bs, shuffle=True)

batch = next(iter(mnist_loader))  # take one batch
image, label = batch
print(len(image), len(label))  # both equal the batch size, 8
print(label)

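grid = torchvision.utils.make_grid(batch[0], nrow=8)  # tile the batch into a single image grid
plt.figure(figsize=(15, 15))
plt.imshow(grid.permute(1, 2, 0).numpy())  # C×H×W -> H×W×C, the layout matplotlib expects
plt.show()
print("labels", batch[1])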

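print(image.shape)  # torch.Size([8, 1, 28, 28])

images = image.reshape((-1, 784))  # flatten to 8×784, so (8×784) times the (784×10) weights gives 8×10
out = model(images)
print(out)
print(out.shape)  # torch.Size([8, 10])

print(out.argmax())  # without dim: index of the largest value in the flattened 80-element tensor
print(out.argmax(dim=1))  # dim=0 would take the max down each column (across samples); dim=1 takes it within each row, i.e. the predicted class of each sample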
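To see what `dim` does to `argmax` in isolation, here is a minimal sketch on a hypothetical 3×4 score matrix (3 samples, 4 classes, made-up values) rather than the MNIST output. It also shows how to turn the flat index returned without `dim` back into a (row, column) pair.

```python
import torch

# Hypothetical scores: 3 samples (rows) x 4 classes (columns), made-up values
scores = torch.tensor([[0.1, 0.6, 0.2, 0.1],
                       [0.7, 0.1, 0.05, 0.15],
                       [0.1, 0.2, 0.5, 0.2]])

flat_idx = scores.argmax()      # 4: the 0.7 at row 1, column 0, counted in the flattened tensor
per_row = scores.argmax(dim=1)  # [1, 0, 2]: predicted class of each sample
per_col = scores.argmax(dim=0)  # [1, 0, 2, 2]: which sample scores highest for each class

# Convert the flat index back to (row, column)
row, col = divmod(flat_idx.item(), scores.shape[1])
print(flat_idx, per_row, per_col, (row, col))
```

For classification, `dim=1` is the useful one: it reduces over the class axis and leaves one prediction per sample.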

print(label)

# one-hot encode the labels
onehot_labels = torch.zeros(bs, 10)
print(onehot_labels)
for i in range(bs):
    onehot_labels[i][label[i]] = 1  # set the column of the true class to 1
print(onehot_labels)
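The Python loop can also be replaced by a single vectorized call. A minimal sketch using `Tensor.scatter_` on a hypothetical batch of four labels, checking that it produces the same tensor as the loop:

```python
import torch

num_classes = 10
labels = torch.tensor([3, 0, 7, 3])  # hypothetical batch of class labels

# Loop version: one row per sample, 1 in the column of its label
loop_onehot = torch.zeros(len(labels), num_classes)
for i in range(len(labels)):
    loop_onehot[i][labels[i]] = 1

# Vectorized version: along dim 1, write 1.0 at column labels[i] of row i
vec_onehot = torch.zeros(len(labels), num_classes).scatter_(1, labels.unsqueeze(1), 1.0)

print(torch.equal(loop_onehot, vec_onehot))  # True
```

The vectorized form avoids a Python-level loop, which matters once the batch size grows well beyond 8.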

# squared error, summed over all elements
loss = ((onehot_labels - out) ** 2).sum()
print(loss)
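The hand-written squared error matches PyTorch's built-in `F.mse_loss` when `reduction="sum"` is passed; the default `reduction="mean"` divides by the number of elements instead. A sketch on random stand-in probabilities and hypothetical labels (not the MNIST batch):

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
probs = torch.softmax(torch.randn(4, 10), dim=1)  # stand-in for a model's softmax output
targets = torch.zeros(4, 10)
targets[torch.arange(4), torch.tensor([3, 0, 7, 3])] = 1.0  # hypothetical one-hot targets

manual = ((targets - probs) ** 2).sum()                    # summed squared error
builtin_sum = F.mse_loss(probs, targets, reduction="sum")  # same quantity
builtin_mean = F.mse_loss(probs, targets)                  # default: mean over all 4*10 elements

print(manual, builtin_sum, builtin_mean)
```

The mean version keeps the loss scale independent of batch size, which is usually why it is the default.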

# absolute error, summed over all elements
loss = (onehot_labels - out).abs().sum()
print(loss)
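Likewise, the summed absolute error corresponds to `F.l1_loss` with `reduction="sum"`. A sketch on random stand-in probabilities and hypothetical labels, not the MNIST batch:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
probs = torch.softmax(torch.randn(4, 10), dim=1)  # stand-in for a model's softmax output
targets = torch.zeros(4, 10)
targets[torch.arange(4), torch.tensor([3, 0, 7, 3])] = 1.0  # hypothetical one-hot targets

manual = (targets - probs).abs().sum()                    # summed absolute error
builtin_sum = F.l1_loss(probs, targets, reduction="sum")  # same quantity
builtin_mean = F.l1_loss(probs, targets)                  # default: mean over all 4*10 elements

print(manual, builtin_sum, builtin_mean)
```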

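# PyTorch also provides a one_hot function; pass num_classes=10, otherwise the
# number of columns is inferred from the largest label present in this batch
a = F.one_hot(label, num_classes=10)
print(a)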
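`F.one_hot` infers the number of classes as the largest label plus one unless `num_classes` is given, and it returns an integer tensor. A minimal sketch with a hypothetical batch whose largest label is 2:

```python
import torch
import torch.nn.functional as F

labels = torch.tensor([0, 2, 1])  # hypothetical batch; largest label is 2

inferred = F.one_hot(labels)               # num_classes inferred as 2 + 1 = 3
fixed = F.one_hot(labels, num_classes=10)  # always 10 columns, one per digit

print(inferred.shape, fixed.shape)  # torch.Size([3, 3]) torch.Size([3, 10])
print(fixed.dtype)                  # torch.int64

# Convert to float before subtracting it from float model outputs in a loss
fixed_f = fixed.float()
```

With small MNIST batches it is quite possible that digit 9 is absent, so fixing `num_classes` keeps the shape consistent with the model's 10 outputs.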

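# cross-entropy loss: F.cross_entropy applies log_softmax internally, so it expects raw
# scores (logits) rather than the softmax output `out`; take the logits from the linear layer
logits = model.fc(images)
eloss = F.cross_entropy(logits, label)
print(eloss)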
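`F.cross_entropy` combines `log_softmax` and `nll_loss`: for each sample it takes minus the log of the softmax probability of the true class, then averages over the batch. The sketch below reproduces it by hand on random stand-in logits and hypothetical labels, and shows that passing already-softmaxed probabilities gives a different number because softmax is then applied twice.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)          # stand-in for raw linear-layer outputs
labels = torch.tensor([3, 0, 7, 3])  # hypothetical targets

ce = F.cross_entropy(logits, labels)  # mean over the batch by default

# Same value by hand: -log p(true class), averaged over the batch
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(4), labels].mean()
via_nll = F.nll_loss(log_probs, labels)

# Pitfall: feeding probabilities instead of logits applies softmax a second time
double = F.cross_entropy(F.softmax(logits, dim=1), labels)

print(ce, manual, via_nll, double)
```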

Please credit the source when reposting. Discussion and feedback are welcome!