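[AI] Deep Mathematics - Bayes

Mathematics is like the universe; ordinary learners only care about the practically useful parts.

scikit-learn (sklearn) official documentation, Chinese edition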

scikit-learn Machine Learning in Python

A novel collection of online book resources; excellent.

Bayesian Machine Learning

9. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Gaussian Process【ignore】

Stochastic Processes

[Scikit-learn] 1.1 Generalized Linear Models - Bayesian Ridge Regression【equivalent effect】

8. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Variational Autoencoders

Sparse Representation

[UFLDL] *Sparse Representation【sparse representation】

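7. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Boltzmann Distribution【ignore】

Bayesian Networks

[Scikit-learn] Dynamic Bayesian Network - Conditional Random Field【denoising, part-of-speech tagging】

6. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Markov and Hidden Markov Models【HMMs and their extensions】

Time-Series Models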

[Scikit-learn] Dynamic Bayesian Network - HMM【basic practice】

[Scikit-learn] Dynamic Bayesian Network - Kalman Filter【vehicle position prediction】

[Scikit-learn] *Dynamic Bayesian Network - Particle Filter【robot self-localization】

5. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Continuous Latent Variables【dimensionality reduction: PCA, PPCA, FA, ICA】

Probabilistic Dimensionality Reduction

[Scikit-learn] 4.4 Dimensionality reduction - PCA

[Scikit-learn] 2.5 Dimensionality reduction - Probabilistic PCA & Factor Analysis

[Scikit-learn] 2.5 Dimensionality reduction - ICA

[Scikit-learn] 1.2 Dimensionality reduction - Linear and Quadratic Discriminant Analysis

4. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Variational Inference【walkthrough of the derivations】

Probabilistic Clustering

[Scikit-learn] 2.1 Clustering - Gaussian mixture models & EM

[Scikit-learn] 2.1 Clustering - Variational Bayesian Gaussian Mixture

3. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Latent Variables【concepts explained】

Latent Variable Models

[Bayes] Concept Search and LSI

[Bayes] Concept Search and PLSA

[Bayes] Concept Search and LDA

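2. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Exact Inference【ignore】

1. [Bayesian] “I'm Bayesian, So Who Should I Fear” series - Naive Bayes with Prior【a minimal example of Bayes in text classification】

Naive Bayes

[ML] Naive Bayes for Text Classification【overview of the principles】

[Bayes] Maximum Likelihood estimates for text classification【code implementation】

[Scikit-learn] 1.9 Naive Bayes【Naive Bayes with different priors】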

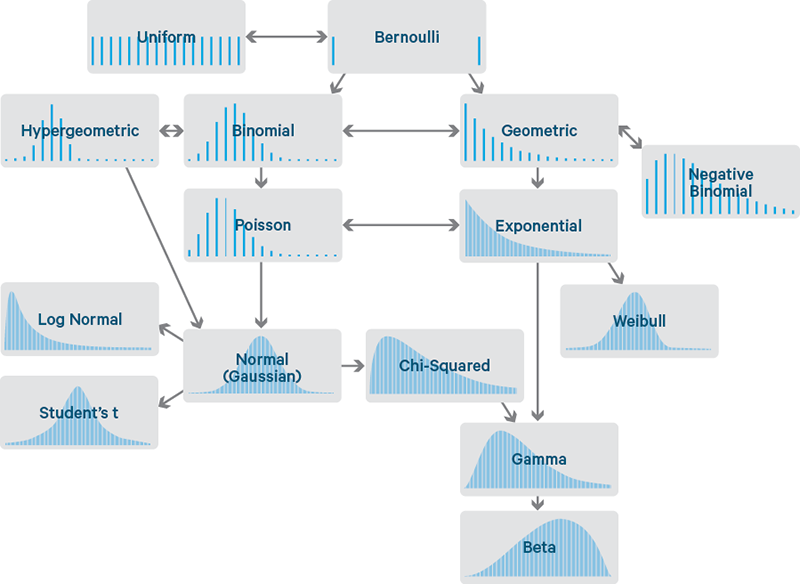

Relationships among Common Distributions

<Statistical Inference> goto: 647/686

Prior and Posterior Distributions

[Math] From Prior to Posterior distribution【prior and posterior basics】

[Bayes] qgamma & rgamma: Central Credible Interval【posterior interval estimation】

[Bayes] Multinomials and Dirichlet distribution【Dirichlet distribution】

Two of these concepts are particularly important:

- Non-informative prior

- Jeffreys prior

The posterior is the basis of Bayesian statistical inference:

- Posterior distribution and sufficiency

- Posterior distribution with a noninformative prior

- Posterior distribution with a conjugate prior
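As a small illustration of the posterior topics listed above, here is a minimal sketch of a conjugate update for a Bernoulli success rate. The `Beta` class, its `update` method, and the data (7 successes, 3 failures) are hypothetical, chosen only for illustration. The update uses nothing but the two counts, which is the sufficiency idea in miniature, and the two priors shown are the flat Beta(1, 1) and the Jeffreys prior Beta(1/2, 1/2) for this model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    """Beta(a, b) distribution, the conjugate prior for a Bernoulli rate."""
    a: float
    b: float

    def update(self, successes: int, failures: int) -> "Beta":
        # Conjugacy: the posterior is again a Beta, with the counts added on.
        # Only the counts (a sufficient statistic) enter the update.
        return Beta(self.a + successes, self.b + failures)

    @property
    def mean(self) -> float:
        return self.a / (self.a + self.b)


# Hypothetical data: 7 successes and 3 failures.
uniform_prior = Beta(1.0, 1.0)    # flat, non-informative prior
jeffreys_prior = Beta(0.5, 0.5)   # Jeffreys prior for the Bernoulli model

posterior_uniform = uniform_prior.update(7, 3)     # Beta(8, 4)
posterior_jeffreys = jeffreys_prior.update(7, 3)   # Beta(7.5, 3.5)

print(posterior_uniform, posterior_uniform.mean)
print(posterior_jeffreys, posterior_jeffreys.mean)
```

With only ten observations the two priors give slightly different posterior means; the gap shrinks as the counts grow.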

Combined with a loss function: Bayesian statistical decision theory

- Squared loss

- Weighted squared loss

- Absolute loss

- Linear loss function
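Each of the losses above has a standard Bayes estimate: the posterior mean for squared loss, a weighted posterior mean for weighted squared loss, the posterior median for absolute loss, and a posterior quantile for the asymmetric linear loss. The sketch below approximates these from posterior samples. The function names, the Beta(8, 4) posterior, and the cost parameters `k_under` and `k_over` are assumptions made for this illustration, not taken from the linked posts.

```python
import random
import statistics


def bayes_estimate_squared(samples):
    """Squared loss is minimized by the posterior mean."""
    return statistics.fmean(samples)


def bayes_estimate_weighted_squared(samples, weight):
    """Loss w(theta) * (theta - a)^2 is minimized by E[w * theta] / E[w]."""
    weights = [weight(s) for s in samples]
    return sum(w * s for w, s in zip(weights, samples)) / sum(weights)


def bayes_estimate_absolute(samples):
    """Absolute loss is minimized by the posterior median."""
    return statistics.median(samples)


def bayes_estimate_linear(samples, k_under, k_over):
    """Linear loss with cost k_under per unit of underestimation and
    k_over per unit of overestimation is minimized by the
    k_under / (k_under + k_over) posterior quantile."""
    ordered = sorted(samples)
    q = k_under / (k_under + k_over)
    index = min(int(q * len(ordered)), len(ordered) - 1)
    return ordered[index]


rng = random.Random(0)
# Hypothetical posterior: Beta(8, 4), sampled with the standard library.
posterior_samples = [rng.betavariate(8, 4) for _ in range(20000)]

print("squared loss  :", bayes_estimate_squared(posterior_samples))
print("absolute loss :", bayes_estimate_absolute(posterior_samples))
print("linear loss   :", bayes_estimate_linear(posterior_samples, 3.0, 1.0))
```

Making underestimation three times as costly as overestimation pushes the linear-loss estimate up to the 75% quantile, above the median.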

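Sampling Methods

An approximate evaluation strategy: Bayesian computational methods

- [Bayes] What is Sampling【outline of sampling methods】

- Direct sampling & visualization methods

- [Bayes] Point --> Line: Estimate "π" by R【approximating π by scattering points - visualized as a line】

- [Bayes] Point --> Hist: Estimate "π" by R【approximating π by scattering points - visualized as a histogram】

- [Bayes] runif: Inversion Sampling【sampling via the inverse-function trick】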
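The two direct-sampling ideas named above can be sketched in a few lines. The linked posts use R (`runif`); this sketch uses Python's standard library instead. The function names `estimate_pi` and `sample_exponential` and the choice of the exponential distribution for the inversion example are assumptions made for illustration.

```python
import math
import random


def estimate_pi(n_points, rng):
    """Scatter points uniformly in the unit square; the fraction that
    lands inside the quarter circle approximates pi / 4."""
    inside = 0
    for _ in range(n_points):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n_points


def sample_exponential(rate, rng):
    """Inversion sampling: the Exponential(rate) CDF is
    F(x) = 1 - exp(-rate * x), so F^{-1}(u) = -ln(1 - u) / rate.
    Feeding a Uniform(0, 1) draw through F^{-1} yields an exponential draw."""
    u = rng.random()              # u lies in [0, 1), so 1 - u is never 0
    return -math.log(1.0 - u) / rate


rng = random.Random(0)
print("pi estimate:", estimate_pi(200_000, rng))

draws = [sample_exponential(2.0, rng) for _ in range(50_000)]
print("sample mean (theory: 0.5):", sum(draws) / len(draws))
```

Inversion only works when the inverse CDF is available in closed form or is cheap to evaluate numerically, which is one motivation for the rejection-based methods that follow.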

- Acceptance-rejection sampling

- Importance sampling
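A minimal sketch of both methods, under assumptions chosen for illustration: the target is the Beta(2, 2) density 6x(1 - x) on [0, 1], the proposal is Uniform(0, 1), and the envelope constant 1.5 is the maximum of the target density. None of these choices come from the linked posts.

```python
import random


def target_pdf(x):
    """Beta(2, 2) density on [0, 1]; its maximum is 1.5 at x = 0.5."""
    return 6.0 * x * (1.0 - x)


ENVELOPE = 1.5  # ENVELOPE * proposal_pdf(x) >= target_pdf(x) for all x


def rejection_sample(rng):
    """Acceptance-rejection: propose x from Uniform(0, 1) and accept it
    with probability target_pdf(x) / ENVELOPE; otherwise try again."""
    while True:
        x = rng.random()
        if rng.random() * ENVELOPE <= target_pdf(x):
            return x


def importance_estimate(h, n, rng):
    """Importance sampling: estimate E_target[h(X)] from Uniform(0, 1)
    draws, weighting each draw by target_pdf(x) / proposal_pdf(x).
    The proposal density is 1 on [0, 1], so the weight is target_pdf(x)."""
    total = 0.0
    for _ in range(n):
        x = rng.random()
        total += h(x) * target_pdf(x)
    return total / n


rng = random.Random(0)
accepted = [rejection_sample(rng) for _ in range(20_000)]
print("rejection-sampling mean (theory: 0.5):", sum(accepted) / len(accepted))
print("importance estimate of E[X^2] (theory: 0.3):",
      importance_estimate(lambda x: x * x, 50_000, rng))
```

Rejection sampling returns actual draws from the target but discards some proposals; importance sampling keeps every proposal but only yields weighted estimates of expectations.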

- MCMC sampling methods

[Bayes] MCMC (Markov Chain Monte Carlo)【exploits the stationarity of Markov chains】

(a). Metropolis-Hastings algorithm

(b). Gibbs sampling algorithm
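To make the stationarity idea concrete, here is a minimal random-walk Metropolis-Hastings sketch. The target (a standard normal, known only up to a constant), the Gaussian proposal, the step size, and the burn-in length are all assumptions chosen for illustration. Because the proposal is symmetric, the Hastings correction cancels and the acceptance ratio reduces to the ratio of target densities.

```python
import math
import random


def log_target(x):
    """Unnormalized log-density of a standard normal (hypothetical target)."""
    return -0.5 * x * x


def metropolis_hastings(n_samples, step, rng, x0=0.0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal.
    The chain's stationary distribution is the target, so after burn-in
    its states behave like (correlated) draws from the target."""
    x = x0
    chain = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        log_accept = log_target(proposal) - log_target(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if math.log(1.0 - rng.random()) < log_accept:
            x = proposal
        chain.append(x)   # a rejected proposal repeats the current state
    return chain


rng = random.Random(0)
chain = metropolis_hastings(50_000, step=1.0, rng=rng)
kept = chain[1_000:]      # discard burn-in
mean = sum(kept) / len(kept)
var = sum((v - mean) ** 2 for v in kept) / len(kept)
print("mean (theory: 0):", mean)
print("variance (theory: 1):", var)
```

Gibbs sampling can be viewed as a special case in which each coordinate is proposed from its full conditional distribution, so every proposal is accepted.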

- Parameter estimation by sampling

- [Bayes] Parameter estimation by Sampling【estimate the probability distribution; its expectation is the parameter estimate】

Other (not yet organized)

non-Bayesian Machine Learning

Algorithm Outline

[ML] Roadmap: a long way to go【a navigation guide to the learning path】

Basic Concepts

[UFLDL] Basic Concept【basic ML concepts】

Basic Algorithms

[Scikit-learn] 1.5 Generalized Linear Models - SGD for Regression

[Scikit-learn] 1.5 Generalized Linear Models - SGD for Classification

Online Learning

[Scikit-learn] 1.1 Generalized Linear Models - Comparing various online solvers

[Scikit-learn] Yield miniBatch for online learning.

Linear Problems

[UFLDL] Linear Regression & Classification

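Linear Fitting

[Scikit-learn] 1.1 Generalized Linear Models - from Linear Regression to L1&L2【least squares --> regularization】

[Scikit-learn] 1.1 Generalized Linear Models - Lasso Regression【L2-related "content"; regularization can of course also be used for classification】

[ML] Bayesian Linear Regression【an example of incremental online learning】

[Scikit-learn] 1.4 Support Vector Regression【based on the outermost margin】

[Scikit-learn] Theil-Sen Regression【fairly robust to noise】

Linear Classification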

# Discriminative Models

[Scikit-learn] 1.1 Generalized Linear Models - Logistic regression & Softmax【recast as maximum likelihood; the parameters can also be "regularized"】

[Scikit-learn] 1.1 Generalized Linear Models - Neural network models【MLP, multilayer perceptron】

[ML] Bayesian Logistic Regression【differences among statistical classification methods】

[Scikit-learn] 1.4 Support Vector Regression【linearly separable】

# Generative Models

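Naive Bayes【see "Bayesian Machine Learning"】

[ML] Linear Discriminant Analysis【in progress】

Decision Trees

[ML] Decision Tree & Ensembling Methods【Bagging vs. Boosting vs. SVM】

Dimensionality Reduction

[UFLDL] Dimensionality Reduction【overview of general dimensionality reduction methods】

Clustering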

[Scikit-learn] 2.3 Clustering - kmeans

[Scikit-learn] 2.3 Clustering - Spectral clustering

[Scikit-learn] *2.3 Clustering - DBSCAN: Density-Based Spatial Clustering of Applications with Noise

[Scikit-learn] *2.3 Clustering - MeanShift

End.

