[action] MMPose - 3D Human

Ref: https://mmpose.readthedocs.io/en/latest/demo.html (API demo, docs)

Ref: [Solved] Importerror: libgl.so.1: cannot open shared object file: no such file or directory

Ref: A Brief Overview of 3D Human Pose Estimation (3D 人体姿态估计简述)

3D Multiview Human Pose Estimation Image Demo

Paper: VoxelPose: Towards Multi-Camera 3D Human Pose Estimation in Wild Environment (very high cost)

    python demo/body3d_multiview_detect_and_regress_img_demo.py \
        configs/body/3d_kpt_mview_rgb_img/voxelpose/panoptic/voxelpose_prn64x64x64_cpn80x80x20_panoptic_cam5.py \
        https://download.openmmlab.com/mmpose/body3d/voxelpose/voxelpose_prn64x64x64_cpn80x80x20_panoptic_cam5-545c150e_20211103.pth \
        --out-img-root vis_results \
        --camera-param-file tests/data/panoptic_body3d/demo/camera_parameters.json \
        --visualize-single-view

Log from running the demo:

    /home/jeff/.virtualenvs/pose/lib/python3.7/site-packages/mmcv/cnn/bricks/transformer.py:33: UserWarning: Fail to import ``MultiScaleDeformableAttention`` from ``mmcv.ops.multi_scale_deform_attn``, You should install ``mmcv-full`` if you need this module.
      warnings.warn('Fail to import ``MultiScaleDeformableAttention`` from '
    The argument `img_root` is not set. Default Panoptic3D demo data will be used.
    /home/jeff/.virtualenvs/pose/lib/python3.7/site-packages/mmcv/cnn/bricks/conv_module.py:154: UserWarning: Unnecessary conv bias before batch/instance norm
      'Unnecessary conv bias before batch/instance norm')
    load checkpoint from http path: https://download.openmmlab.com/mmpose/body3d/voxelpose/voxelpose_prn64x64x64_cpn80x80x20_panoptic_cam5-545c150e_20211103.pth
    The model and loaded state dict do not match exactly
    missing keys in source state_dict: human_detector.center_head.grid_size, human_detector.center_head.cube_size, human_detector.center_head.grid_center
    [ ] 0/5, elapsed: 0s, ETA:
    /home/jeffrey/Desktop/action_recognition/3d-human/mmpose/mmpose/models/heads/voxelpose_head.py:91: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').
      (shape[1] * shape[2])).reshape(batch_size, num_people, -1)
    /home/jeff/Desktop/action_recognition/3d-human/mmpose/mmpose/models/heads/voxelpose_head.py:93: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').
      shape[2]).reshape(batch_size, num_people, -1)
    [>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>] 5/5, 0.0 task/s, elapsed: 145s, ETA: 0s
    (pose)

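3D keypoints

15 joints.

2D keypoints

The COCO keypoint track is currently one of the authoritative public competitions for human keypoint detection. The COCO dataset represents the human body with 17 keypoints: nose, left and right eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles. The task of human keypoint detection is to detect the people in an input image and locate the corresponding keypoints.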
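For quick reference, the 17 COCO keypoints in their standard index order can be written down as a small lookup table. This is only a convenience sketch; the names `COCO_KEYPOINTS` and `KEYPOINT_INDEX` are made up for this note and are not MMPose identifiers.

```python
# The 17 COCO body keypoints in the dataset's standard index order.
COCO_KEYPOINTS = [
    "nose",
    "left_eye", "right_eye",
    "left_ear", "right_ear",
    "left_shoulder", "right_shoulder",
    "left_elbow", "right_elbow",
    "left_wrist", "right_wrist",
    "left_hip", "right_hip",
    "left_knee", "right_knee",
    "left_ankle", "right_ankle",
]

# Hypothetical helper: name -> row index in a (17, 2 or 3) keypoint array.
KEYPOINT_INDEX = {name: i for i, name in enumerate(COCO_KEYPOINTS)}

print(len(COCO_KEYPOINTS), KEYPOINT_INDEX["left_hip"])
```

With this table, `keypoints[KEYPOINT_INDEX["left_hip"]]` picks the left-hip row from a COCO-format keypoint array.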

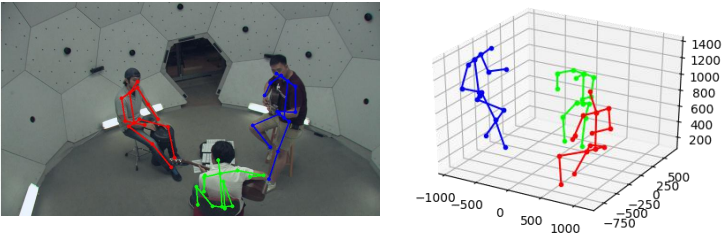

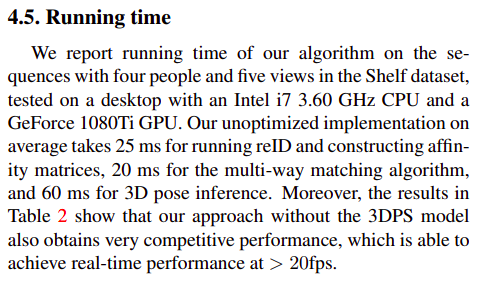

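Ref: Multi-view human pose estimation (1): Fast and Robust Multi-Person 3D Pose Estimation

Would a multi-view version work better? Yes.

The challenge is to find the cross-view correspondences between detected keypoints and to decide which person each keypoint belongs to. Previous work has generally used 3DPS (3D pictorial structures) for this.

With only a few cameras this is not robust, because 3DPS links the 2D detections across views using multi-view geometry alone and ignores appearance cues.

Contribution: this paper instead matches the 2D poses detected in the different views to form clusters of 2D poses, where each cluster holds the 2D poses of one person across views. Each person's 3D pose can then be inferred from the corresponding 2D poses. Because the state space is smaller, this is much faster than jointly inferring multiple poses.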
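The last step, going from one person's matched 2D detections to a 3D position, can be illustrated with plain linear (DLT) triangulation of a single joint. This is only a sketch under stated assumptions: the paper's own inference may differ, and the two camera matrices and the test point below are made up for illustration.

```python
import numpy as np

def triangulate_point(proj_mats, points_2d):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    proj_mats: list of 3x4 projection matrices, one per view.
    points_2d: list of (u, v) pixel coordinates of the same joint.
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Two hypothetical cameras: same intrinsics, second one shifted along x.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 4.0])
observations = [project(P1, X_true), project(P2, X_true)]
X_est = triangulate_point([P1, P2], observations)
print(X_est)
```

Running this per joint over one cluster of matched 2D poses would give a rough 3D skeleton; with noisy detections, a robust or score-weighted variant would likely be needed.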

Installing mmaction2 with Docker: https://mmaction2.readthedocs.io/en/latest/install.html#another-option-docker-image

    # build an image with PyTorch 1.6.0, CUDA 10.1, CUDNN 7.
    docker build -f ./docker/Dockerfile --rm -t mmaction2 .

Upgrade the Dockerfile so that the third demo works.

    # This is for v2
    ARG PYTORCH="1.12.0"
    ARG CUDA="11.3"
    ARG CUDNN="8"

    # This is for v2.1 of testing.
    # ARG PYTORCH="1.7.1"
    # ARG CUDA="11.0"
    # ARG CUDNN="8"

    FROM pytorch/pytorch:${PYTORCH}-cuda${CUDA}-cudnn${CUDNN}-devel

    ENV TORCH_CUDA_ARCH_LIST="6.0 6.1 7.0+PTX"
    ENV TORCH_NVCC_FLAGS="-Xfatbin -compress-all"
    ENV CMAKE_PREFIX_PATH="$(dirname $(which conda))/../"

    # To fix GPG key error when running apt-get update
    RUN apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/3bf863cc.pub
    RUN apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64/7fa2af80.pub

    RUN apt-get update && apt-get install -y git ninja-build libglib2.0-0 libsm6 libxrender-dev libxext6 libgl1-mesa-glx \
        && apt-get clean \
        && rm -rf /var/lib/apt/lists/*

    # Install xtcocotools
    RUN pip install cython
    RUN pip install xtcocotools

    # Install MMCV
    RUN pip install --no-cache-dir --upgrade pip wheel setuptools
    # This is for v2
    # RUN pip install --no-cache-dir mmcv-full==1.3.17 -f https://download.openmmlab.com/mmcv/dist/cu101/torch1.6.0/index.html
    RUN pip install --no-cache-dir mmcv-full==1.6.2 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.12.0/index.html

    # Install MMPose
    RUN conda clean --all
    RUN git clone https://github.com/open-mmlab/mmpose.git /mmpose
    WORKDIR /mmpose
    RUN mkdir -p /mmpose/data
    ENV FORCE_CUDA="1"
    RUN pip install -r requirements/build.txt
    RUN pip install --no-cache-dir -e .

API DOC: https://mmpose.readthedocs.io/en/latest/api.html

How do we get the 2D center of a person?

The 3D center moves along with the 2D center.

    keypoints (ndarray[K, 2 or 3]): x, y, [score]
    track_id (int): unique id of each person, required when with_track_id==True.
    bbox ((4, ) or (5, )): left, right, top, bottom, [score]

bbox ((4, ) or (5, )): left, right, top, bottom, [score]. In practice the four values are the top-left corner followed by the bottom-right corner.
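One possible way to get a 2D center from these fields is sketched below: either the midpoint of the bbox, or a score-weighted mean of the keypoints. This assumes the bbox is stored as top-left corner then bottom-right corner, as noted above. The function names and the `min_score` threshold are hypothetical, not part of the MMPose API; the sample bbox is one of the values logged in this post.

```python
import numpy as np

def bbox_center(bbox):
    """Midpoint of a bbox given as (x1, y1, x2, y2[, score])."""
    x1, y1, x2, y2 = bbox[:4]
    return np.array([(x1 + x2) / 2.0, (y1 + y2) / 2.0])

def keypoint_center(keypoints, min_score=0.3):
    """Score-weighted mean of keypoints shaped (K, 2) or (K, 3)."""
    kpts = np.asarray(keypoints, dtype=float)
    if kpts.shape[1] == 2:
        # No scores available: fall back to a plain mean.
        return kpts.mean(axis=0)
    xy, score = kpts[:, :2], kpts[:, 2]
    # Ignore low-confidence joints (threshold is an arbitrary assumption).
    weights = np.where(score >= min_score, score, 0.0)
    if weights.sum() == 0:
        return xy.mean(axis=0)
    return (xy * weights[:, None]).sum(axis=0) / weights.sum()

# bbox of track_id 1 as logged in this post.
bbox = np.array([671.6858, 212.65431, 872.92334, 964.7392, 0.99958724])
center_from_bbox = bbox_center(bbox)

# Tiny made-up keypoint set: the third joint falls below the threshold.
toy_keypoints = [[0.0, 0.0, 1.0], [2.0, 2.0, 1.0], [100.0, 100.0, 0.1]]
center_from_kpts = keypoint_center(toy_keypoints)
print(center_from_bbox, center_from_kpts)
```

The bbox midpoint is stable but shifts when limbs extend; the keypoint mean follows the body more closely but is sensitive to missed joints.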

    INFO:root:[2D-to-3D] frame_idx: 57, lift...
    INFO:root: [3D] frame_idx: 57, frame: 0, lift...
    INFO:root: [3D] frame_idx: 57, frame: 0, k: track_id, v: 0
    INFO:root: [3D] frame_idx: 57, frame: 0, k: keypoints, v:
    [[1.08505505e+03 5.26746826e+02 8.20256948e-01]
     [1.05713647e+03 5.26746826e+02 8.23503971e-01]
     [1.06272009e+03 6.44005005e+02 8.69704127e-01]
     [1.06830383e+03 7.55679565e+02 9.08635497e-01]
     [1.11297363e+03 5.26746826e+02 8.17009866e-01]
     [1.10180627e+03 6.49588745e+02 8.83062124e-01]
     [1.10738989e+03 7.66846924e+02 9.06886101e-01]
     [1.08924292e+03 4.45782898e+02 8.51077318e-01]
     [1.09343066e+03 3.64818939e+02 8.81897688e-01]
     [1.07388757e+03 3.03397980e+02 9.50902224e-01]
     [1.07947131e+03 2.97814240e+02 9.74770665e-01]
     [1.12972485e+03 3.59235199e+02 9.01219368e-01]
     [1.09063879e+03 3.59235199e+02 8.96922529e-01]
     [1.04038525e+03 3.31316620e+02 9.00191665e-01]
     [1.05713647e+03 3.70402679e+02 8.62576008e-01]
     [1.04596899e+03 4.54158478e+02 8.93887997e-01]
     [1.02363416e+03 5.26746826e+02 9.17729378e-01]]
    INFO:root: [3D] frame_idx: 57, frame: 0, k: keypoints_3d, v:
    [[ 1.05755746e-04 -2.56062376e-05 7.78431654e-01]
     [ 9.72001404e-02 5.30838184e-02 7.86051869e-01]
     [ 8.15232694e-02 -7.12830108e-03 4.00748670e-01]
     [ 5.80005832e-02 1.60602063e-01 3.97626162e-02]
     [-9.72249955e-02 -5.30988574e-02 7.70828545e-01]
     [-6.36302158e-02 -5.43382466e-02 3.76823336e-01]
     [-7.67837092e-02 9.50057283e-02 0.00000000e+00]
     [-8.32690578e-03 -3.82783487e-02 9.89334226e-01]
     [-2.51326542e-02 -4.86073829e-02 1.24378967e+00]
     [ 2.53135636e-02 -7.69510940e-02 1.36566305e+00]
     [-4.87496844e-03 4.31682840e-02 1.39608550e+00]
     [-1.37663975e-01 -1.32745802e-01 1.19156837e+00]
     [-4.39133644e-02 -4.11054492e-01 1.15066540e+00]
     [ 9.48008224e-02 -2.41953641e-01 1.25577998e+00]
     [ 8.15404356e-02 2.52909716e-02 1.19171631e+00]
     [ 1.23144068e-01 1.13848761e-01 9.53529835e-01]
     [ 1.74745336e-01 5.97312823e-02 7.29172945e-01]]
    INFO:root: [3D] frame_idx: 57, frame: 0, k: title, v: Prediction (0)
    INFO:root: [3D] frame_idx: 57, frame: 0, k: bbox, v: [9.8651373e+02 2.4365218e+02 1.1556776e+03 8.1542535e+02 9.9972349e-01]
    INFO:root: [3D] frame_idx: 57, frame: 1, lift...
    INFO:root: [3D] frame_idx: 57, frame: 1, k: track_id, v: 1
    INFO:root: [3D] frame_idx: 57, frame: 1, k: keypoints, v:
    [[7.4659851e+02 5.7033533e+02 7.9726595e-01]
     [7.7597681e+02 5.7033533e+02 7.9769897e-01]
     [7.9801050e+02 7.3926062e+02 9.0934819e-01]
     [7.6863220e+02 9.0818591e+02 8.8756669e-01]
     [7.1722021e+02 5.7033533e+02 7.9683292e-01]
     [7.3925391e+02 7.3191614e+02 9.1661692e-01]
     [7.3925391e+02 8.8615222e+02 8.8442808e-01]
     [7.4659851e+02 4.6751123e+02 8.4288752e-01]
     [7.4659851e+02 3.6468713e+02 8.8850909e-01]
     [7.9801050e+02 2.8389673e+02 9.2287987e-01]
     [7.9433826e+02 2.7287985e+02 8.3610755e-01]
     [7.0987561e+02 3.6468713e+02 8.6702514e-01]
     [7.5394312e+02 4.7485583e+02 5.0482041e-01]
     [8.2004431e+02 5.7033533e+02 2.3346280e-01]
     [7.8332141e+02 3.6468713e+02 9.0999305e-01]
     [8.0535510e+02 4.8220038e+02 9.1393793e-01]
     [8.4207800e+02 5.7767993e+02 9.3690771e-01]]
    INFO:root: [3D] frame_idx: 57, frame: 1, k: keypoints_3d, v:
    [[ 1.29847816e-04 -2.70384317e-05 8.31725836e-01]
     [-6.90194517e-02 -8.04729015e-02 8.16785812e-01]
     [-9.09736827e-02 2.48020254e-02 4.02913660e-01]
     [-3.34124342e-02 -2.97708567e-02 0.00000000e+00]
     [ 6.90148398e-02 8.04709643e-02 8.46652329e-01]
     [ 3.74519490e-02 2.00692788e-01 4.45585966e-01]
     [ 3.88435423e-02 2.94269353e-01 2.96720266e-02]
     [-5.26640331e-03 -4.75178696e-02 1.06766450e+00]
     [-7.24574830e-03 -6.47186115e-02 1.32360411e+00]
     [-1.16821714e-01 -4.15696912e-02 1.45325255e+00]
     [-1.00589104e-01 -6.69958144e-02 1.48291636e+00]
     [ 6.59773722e-02 4.42432687e-02 1.27060449e+00]
     [-4.21388559e-02 1.46030188e-01 1.02413082e+00]
     [-1.50968015e-01 1.74997285e-01 9.23010349e-01]
     [-8.45253319e-02 -1.59734204e-01 1.26092529e+00]
     [-1.50213629e-01 -1.63592935e-01 9.87687349e-01]
     [-2.30161473e-01 -7.66144320e-02 7.70348728e-01]]
    INFO:root: [3D] frame_idx: 57, frame: 1, k: title, v: Prediction (1)
    INFO:root: [3D] frame_idx: 57, frame: 1, k: bbox, v: [671.6858 212.65431 872.92334 964.7392 0.99958724]

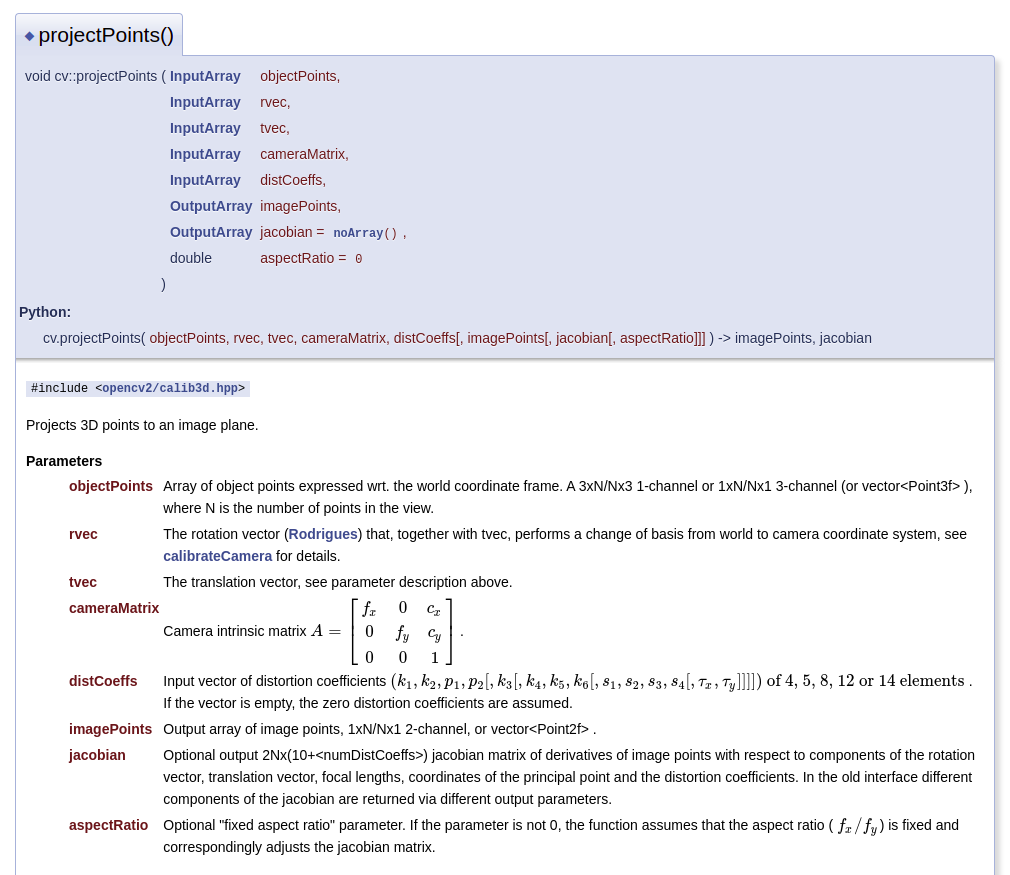

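Ref: Projecting 3D points to 2D with camera parameters in OpenCV + computing the camera pose with solvePnP + going from 2D coordinates to 3D + camera calibration (calibration and registration: obtain the intrinsics and extrinsics of the depth and color cameras, then register them)

Maybe look at the OpenGL approach?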
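As a reminder of what projecting 3D points with camera parameters means, here is a minimal NumPy sketch of the distortion-free pinhole model (rotate and translate into the camera frame, apply the intrinsics, divide by depth). OpenCV's `cv2.projectPoints` covers the same idea and additionally handles lens distortion. The intrinsics, pose, and points below are invented for illustration, and `project_points` is a hypothetical helper name.

```python
import numpy as np

def project_points(points_3d, K, R, t):
    """Project (N, 3) world points to (N, 2) pixels with a pinhole camera.

    K: 3x3 intrinsics, R: 3x3 world-to-camera rotation, t: (3,) translation.
    Lens distortion is ignored in this sketch.
    """
    pts = np.asarray(points_3d, dtype=float)
    cam = pts @ R.T + t            # world frame -> camera frame
    uv = cam @ K.T                 # camera frame -> homogeneous pixels
    return uv[:, :2] / uv[:, 2:3]  # perspective divide by depth

# Hypothetical camera: focal length 1000 px, principal point (640, 360),
# looking down +z with the world origin 5 units in front of it.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 5.0])

pixels = project_points([[0.0, 0.0, 0.0], [0.5, -0.5, 0.0]], K, R, t)
print(pixels)
```

The world origin lands on the principal point, and the second point is offset by focal length times x/z, which is the relation the calibration references describe.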

Ref: https://docs.opencv.org/3.4/d9/d0c/group__calib3d.html#ga1019495a2c8d1743ed5cc23fa0daff8c

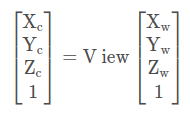

The view matrix; the examples here are good.

Ref: https://learnopengl-cn.github.io/01%20Getting%20started/09%20Camera/

Ref: [OpenGL] The view matrix and a source-code walkthrough of the glm::lookAt function (looks good)
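To make the view matrix concrete, here is a small NumPy sketch that follows the right-handed lookAt convention (camera looking down its own negative z axis) described in the references above. The function name `look_at` and the sample camera position are assumptions for illustration only.

```python
import numpy as np

def look_at(eye, target, up):
    """Build a 4x4 right-handed view matrix (camera looks down -z)."""
    eye, target, up = (np.asarray(v, dtype=float) for v in (eye, target, up))
    forward = target - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)
    true_up = np.cross(right, forward)

    view = np.eye(4)
    view[0, :3] = right
    view[1, :3] = true_up
    view[2, :3] = -forward
    # Translation moves the eye position to the origin of camera space.
    view[:3, 3] = -view[:3, :3] @ eye
    return view

# Hypothetical camera 3 units along +z, looking at the world origin.
view = look_at(eye=[0.0, 0.0, 3.0], target=[0.0, 0.0, 0.0], up=[0.0, 1.0, 0.0])
origin_in_camera = view @ np.array([0.0, 0.0, 0.0, 1.0])
eye_in_camera = view @ np.array([0.0, 0.0, 3.0, 1.0])
print(view)
```

In this setup the world origin ends up 3 units in front of the camera at z = -3, and the eye itself maps to the camera-space origin.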

End.

浙公网安备 33010602011771号
