[Argo] 07 - Parameter Passing

Ref: https://codefresh.io/learn/argo-workflows/learn-argo-workflows-with-8-simple-examples/

Ref: https://kedro.readthedocs.io/en/stable/deployment/argo.html

Anatomy of a workflow/pipeline

Introduction

```java
class ArgoDemo {
    public static void main(String[] args) {
        int result = addFour(2);
        if (result > 5) {
            sayHello();
        }
    }

    // -------------------------------------
    // Helpers are static so main() can call them without creating an instance.
    public static int addFour(int a) {
        return a + 4;
    }

    public static void sayHello() {
        System.out.println("Hello Intuit!");
    }
}
```

The same program, expressed as Argo workflow YAML:

```yaml
# Entries under spec.templates
- name: main
  steps:
  - - name: addFour
      template: addFour
      arguments: {parameters: [{name: "a", value: "2"}]}
  - - name: sayHello
      template: sayHello
      # stdout of the addFour step is available as outputs.result
      when: "{{steps.addFour.outputs.result}} > 5"

- name: addFour
  inputs: {parameters: [{name: "a"}]}
  container:
    image: alpine:latest
    command: [sh, -c]
    args: ["echo $(( {{inputs.parameters.a}} + 4 ))"]

- name: sayHello
  container:
    image: alpine:latest
    command: [sh, -c]
    args: ["echo 'Hello Intuit!'"]
```
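The templates above are only a fragment. To submit them you need a Workflow wrapper with an `entrypoint`. Below is a minimal sketch that also turns `a` into a workflow-level parameter, so it can be overridden at submit time. The `generateName` prefix and the `spec.arguments` default are illustrative choices, not taken from the original.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: add-four-          # illustrative name prefix
spec:
  entrypoint: main
  # Workflow-level parameter; override with: argo submit -p a=3 <file>
  arguments:
    parameters:
    - name: a
      value: "2"
  templates:
  - name: main
    steps:
    - - name: addFour
        template: addFour
        arguments:
          parameters:
          - name: a
            value: "{{workflow.parameters.a}}"        # forward the workflow parameter
    - - name: sayHello
        template: sayHello
        when: "{{steps.addFour.outputs.result}} > 5"  # stdout of addFour
  - name: addFour
    inputs:
      parameters:
      - name: a
    container:
      image: alpine:latest
      command: [sh, -c]
      args: ["echo $(( {{inputs.parameters.a}} + 4 ))"]
  - name: sayHello
    container:
      image: alpine:latest
      command: [sh, -c]
      args: ["echo 'Hello Intuit!'"]
```

Here is how the default and overrides behave:

- **Default (`a=2`):** `addFour` prints `6`, so `sayHello` runs.
- **`argo submit -p a=1 ...`:** the condition `5 > 5` is false, so `sayHello` is skipped.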

For examples of the three workflow types, see: [Argo] 01 - Create Argo Workflows

Passing key-values

Ref: How to Pass Key-Values between Argo Workflows Part 1

If you create multiple steps in Argo Workflows, you will inevitably need to pass values between them at some point.

This page explores three ways to pass "key-values" between steps in a workflow:

Using parameters

Using scripts and results

Using output.parameters
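The three approaches can be combined in one workflow, sketched below. All template, step and parameter names (`gen-by-result`, `gen-by-param`, `lr`, and so on) and the `python:3.11-alpine` image are hypothetical choices made for illustration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: key-value-passing-   # illustrative name
spec:
  entrypoint: main
  templates:
  - name: main
    steps:
    - - name: gen-by-result
        template: gen-by-result
      - name: gen-by-param
        template: gen-by-param
    - - name: consume
        template: print-values
        arguments:
          parameters:
          # 2) script result: whatever the script prints to stdout
          - name: from-result
            value: "{{steps.gen-by-result.outputs.result}}"
          # 3) output parameter: read from a file inside the container
          - name: from-param
            value: "{{steps.gen-by-param.outputs.parameters.lr}}"

  # Script template: its stdout becomes outputs.result
  - name: gen-by-result
    script:
      image: python:3.11-alpine
      command: [python]
      source: |
        print("batch_size=32")

  # Output parameter: value is taken from a file the container writes
  - name: gen-by-param
    container:
      image: alpine:latest
      command: [sh, -c]
      args: ["echo -n 0.001 > /tmp/lr.txt"]
    outputs:
      parameters:
      - name: lr
        valueFrom:
          path: /tmp/lr.txt

  # 1) plain input parameters, filled in by the step arguments above
  - name: print-values
    inputs:
      parameters:
      - name: from-result
      - name: from-param
    container:
      image: alpine:latest
      command: [sh, -c]
      args: ["echo result={{inputs.parameters.from-result}} param={{inputs.parameters.from-param}}"]
```

`outputs.result` is convenient when a step prints nothing but its value. When the image also logs to stdout, `outputs.parameters` with `valueFrom.path` keeps the value separate from the logs.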

Artifacts

This section focuses on passing parameters and files between steps.

input/output


MinIO

MinIO was already installed as the artifact repository when Argo was installed.

```json
{
  "apiVersion": "argoproj.io/v1alpha1",
  "kind": "Workflow",
  "metadata": {
    "generateName": "artifact-passing-"
  },
  "spec": {
    "entrypoint": "artifact-example",
    "templates": [
      {
        "name": "artifact-example",
        "steps": [
          [
            {
              "name": "generate-artifact",
              "template": "whalesay"
            }
          ],
          [
            {
              "name": "consume-artifact",
              "template": "print-message",
              "arguments": {
                "artifacts": [
                  {
                    "name": "message",
                    "from": "{{steps.generate-artifact.outputs.artifacts.hello-art}}"
                  }
                ]
              }
            }
          ]
        ]
      },
      {
        "name": "whalesay",
        "container": {
          "image": "docker/whalesay:latest",
          "command": ["sh", "-c"],
          "args": ["sleep 1; cowsay hello world | tee /tmp/hello_world.txt"]
        },
        "outputs": {
          "artifacts": [
            {
              "name": "hello-art",
              "path": "/tmp/hello_world.txt"
            }
          ]
        }
      },
      {
        "name": "print-message",
        "inputs": {
          "artifacts": [
            {
              "name": "message",
              "path": "/tmp/message"
            }
          ]
        },
        "container": {
          "image": "alpine:latest",
          "command": ["sh", "-c"],
          "args": ["cat /tmp/message"]
        }
      }
    ]
  }
}
```

Data is passed between jobs through inputs/outputs and artifacts.

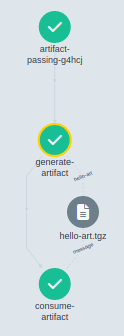

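In a workflow, it is common for some steps to produce or consume artifacts. Typically, the output artifact of one step is used as the input artifact of the next.

The example below has two steps: the first produces an artifact and the second consumes it.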

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: artifact-passing-
spec:
  entrypoint: artifact-example
  templates:
  - name: artifact-example
    steps:
    # produce the artifact
    - - name: generate-artifact
        template: whalesay
    # consume the artifact
    - - name: consume-artifact
        template: print-message
        arguments:
          artifacts:
          # bind the input artifact "message" to generate-artifact's output hello-art
          # (stored in the artifact repository as hello-art.tgz)
          - name: message
            from: "{{steps.generate-artifact.outputs.artifacts.hello-art}}"

  # this template produces the artifact
  - name: whalesay
    container:
      image: docker/whalesay:latest
      command: [sh, -c]
      args: ["cowsay hello world | tee /tmp/hello_world.txt"]
    # output artifact declaration
    outputs:
      artifacts:
      - name: hello-art             # the generated artifact is shared as hello-art
        path: /tmp/hello_world.txt  # this file is archived, then uploaded to the artifact repository

  # this template consumes the artifact
  - name: print-message
    # input artifact declaration
    inputs:
      artifacts:
      - name: message               # the artifact bound to "message" is downloaded, unpacked,
        path: /tmp/message          # and placed at this path
    container:
      image: alpine:latest
      command: [sh, -c]
      args: ["cat /tmp/message"]
```
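By default, Argo archives an output artifact as a `.tgz` before uploading it. That is why the repository holds a tarball rather than the plain text file. If you would rather store the raw file, an output artifact can disable archiving with `archive: none: {}`. This sketch shows only the modified `whalesay` entry and assumes the rest of the workflow is unchanged.

```yaml
# replacement for the whalesay entry under spec.templates
- name: whalesay
  container:
    image: docker/whalesay:latest
    command: [sh, -c]
    args: ["cowsay hello world | tee /tmp/hello_world.txt"]
  outputs:
    artifacts:
    - name: hello-art
      path: /tmp/hello_world.txt
      archive:
        none: {}     # upload the file as-is instead of as a .tgz archive
```

The consuming `print-message` template does not need to change, because it still reads the artifact from `/tmp/message`.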

Shared volume

Ref: Kubernetes 原生 CI/CD 构建框架 Argo 详解

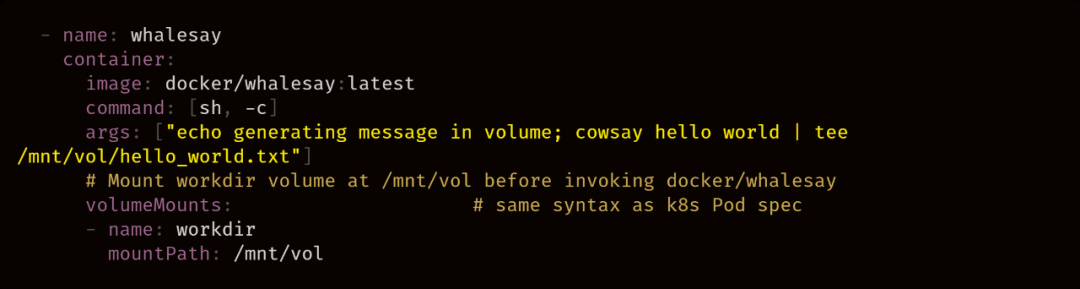

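This is not Argo's standard mechanism for passing artifacts, but shared storage clearly achieves the same result: steps can share their outputs. And if you use a volume, you don't need Inputs and Outputs at all.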

In the Workflow spec, we define a volume template:
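The original volume definition is not preserved here. What follows is a minimal sketch of a typical one, assuming a dynamically provisioned PersistentVolumeClaim; the name `workdir` and the `1Gi` size are hypothetical.

```yaml
spec:
  entrypoint: main
  # A PVC is created for each workflow run from this template
  volumeClaimTemplates:
  - metadata:
      name: workdir            # hypothetical volume name
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi         # hypothetical size
```

`ReadWriteOnce` is enough when all steps run on the same node, as in a single-node KinD cluster. Steps scheduled on different nodes at the same time would need a storage class that supports `ReadWriteMany`.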

Then mount that volume in the other templates:
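Below is a sketch of two templates that share data this way. It assumes a volume named `workdir` is declared in the Workflow spec; the name, mount path and file name are hypothetical. Run the templates as consecutive steps: the second reads the file the first wrote, and neither declares `inputs` or `outputs`.

```yaml
# Hypothetical entries under spec.templates; "workdir" must match a volume declared in the spec
- name: write-file
  container:
    image: alpine:latest
    command: [sh, -c]
    args: ["echo hello world > /mnt/vol/hello_world.txt"]
    volumeMounts:
    - name: workdir
      mountPath: /mnt/vol
- name: read-file
  container:
    image: alpine:latest
    command: [sh, -c]
    args: ["cat /mnt/vol/hello_world.txt"]
    volumeMounts:
    - name: workdir
      mountPath: /mnt/vol
```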

Custom Image

Build custom image

Ref: KiND - How I Wasted a Day Loading Local Docker Images

Ref: [Argo] 03 - KinD

(1) Load the image into KinD.

```
jeff@unsw-ThinkPad-T490:ECR$ kind load docker-image sagemaker-mobilenet-argo:latest
Image: "sagemaker-mobilenet-argo:latest" with ID "sha256:39f7fe4cdc42f3f7ead3a6283a1eee5e33cde95c3d066ce4b3e0af92882ea409" not yet present on node "kind-control-plane", loading...
```

(2) Open a shell on the KinD node and locate crictl.

```
jeff@unsw-ThinkPad-T490:ECR$ sudo docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED        STATUS        PORTS                       NAMES
708e16cc79a8   kindest/node:v1.25.0   "/usr/local/bin/entr…"   34 hours ago   Up 34 hours   127.0.0.1:37575->6443/tcp   kind-control-plane
jeff@unsw-ThinkPad-T490:ECR$ sudo docker exec -it kind-control-plane bash
root@kind-control-plane:/#
root@kind-control-plane:/# which crictl
/usr/local/bin/crictl
```

(3) The image is now visible inside the cluster.

```
root@kind-control-plane:/# crictl images
IMAGE                                        TAG      IMAGE ID        SIZE
docker.io/library/sagemaker-mobilenet-argo   latest   39f7fe4cdc42f   1.22GB
```
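There is one caveat when a workflow references a locally loaded image such as `sagemaker-mobilenet-argo:latest`. For the `:latest` tag (or no tag), Kubernetes defaults `imagePullPolicy` to `Always`. The pod then tries to pull from a registry instead of using the image already on the node, and the pull fails. Setting the policy explicitly avoids this. In the sketch below, the template name is hypothetical and the entry script path is assumed to match the one used in `parallel.yaml`.

```yaml
- name: run-mobilenet                   # hypothetical template name
  container:
    image: sagemaker-mobilenet-argo:latest
    # Use the image loaded with `kind load docker-image`; don't pull it from a registry
    imagePullPolicy: IfNotPresent
    command: [python3]
    args: ["/opt/ml/code/wrapper.py"]   # assumed entry script
```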

(4) Invoke the pipeline with its initial parameters.

```
$ argo submit -n argo parallel.yaml
```

parallel.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: parallel-
  labels:
    workflows.argoproj.io/archive-strategy: "false"
spec:
  entrypoint: hello
  serviceAccountName: argo
  templates:
  - name: hello
    steps:
    - - name: ls
        template: template-ls
    - - name: sleep-a            # sleep-a and sleep-b run in parallel
        template: template-sleep
      - name: sleep-b
        template: template-sleep
    - - name: delay
        template: template-delay
    - - name: sleep
        template: template-sleep

  - name: template-ls
    container:
      image: sagemaker-mobilenet-workflow:v1
      command: [python3]
      args: ["/opt/ml/code/wrapper.py"]

  # a plain container template; a script template would also require a `source` field
  - name: template-sleep
    container:
      image: alpine
      command: [sleep]
      args: ["10"]

  - name: template-delay
    suspend:
      duration: "600s"
```

(5) Passing parameters between images

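One option follows the pattern below. The first image "returns" the hyperparameters; for a container or script step, what it prints to stdout is available as `outputs.result`. The workflow YAML captures that value and passes it to the second image as a parameter.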

```yaml
- - name: sayHello
    template: sayHello
    when: "{{steps.addFour.outputs.result}} > 5"
```
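Applied to two images, the same pattern might look like the sketch below. It assumes the first image prints only a learning rate (for example `0.001`) to stdout. The image names, the `/opt/ml/code/*.py` scripts and the `--lr` flag are all hypothetical.

```yaml
# Hypothetical entries under spec.templates
- name: main
  steps:
  - - name: tune
      template: tune
  - - name: train
      template: train
      arguments:
        parameters:
        - name: lr
          value: "{{steps.tune.outputs.result}}"   # stdout of the tune step

- name: tune
  container:
    image: tuner-image:v1                 # hypothetical first image
    command: [python3]
    args: ["/opt/ml/code/tune.py"]        # hypothetical; prints e.g. 0.001

- name: train
  inputs:
    parameters:
    - name: lr
  container:
    image: trainer-image:v1               # hypothetical second image
    command: [python3]
    args: ["/opt/ml/code/train.py", "--lr", "{{inputs.parameters.lr}}"]   # hypothetical flag
```

If the first image also writes logs to stdout, `outputs.result` will contain them too. In that case, writing the value to a file and exporting it through `outputs.parameters` with `valueFrom.path` is the more robust choice.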

Ref: Argo Workflows and Pipelines - CI/CD, Machine Learning, and Other Kubernetes Workflows

Building the Docker image itself is covered in [Argo] 05 - Kaniko.

End.

浙公网安备 33010602011771号