[SageMaker] Invoked by AWS Lambda

Resources

Deploying to TensorFlow Serving Endpoints - of limited reference value

Table of Contents

Module 13 Video Material - fairly shallow; only the first two parts are somewhat useful.

- Part 13.1: Flask and Deep Learning Web Services [Video] [Notebook] (train a model, then build an API service from the training result)
- Part 13.2: Deploying a Model to AWS [Video][Notebook] (deploy an existing model to an endpoint and test it)
- Part 13.3: Using a Keras Deep Neural Network with a Web Application [Video][Notebook]
- Part 13.4: When to Retrain Your Neural Network [Video][Notebook]
- Part 13.5: AI at the Edge: Using Keras on a Mobile Device [Video][Notebook]

Triggering "training"

1. Triggering a training job from Lambda

Ref: Retraining SageMaker models with Chalice and Serverless (source of the sample code)

Code: https://github.com/juliensimon/aws/blob/master/lambda_frameworks/chalice/sagemakertrainer/app.py

Video: Scheduling the training of a SageMaker model with a Lambda function

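Code: https://github.com/juliensimon/aws/tree/master/lambda_frameworks/serverless/sagemakerscheduler (the scheduler is not the focus here)

The three APIs below show which parameters a training job takes.

The open question is how the HyperParameters passed to CreateTrainingJob reach a custom Docker container.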
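One way to approach this question: for a custom training image, SageMaker writes the HyperParameters map to `/opt/ml/input/config/hyperparameters.json` inside the container, with every value stored as a string. The sketch below reads that file. The helper names and default values are hypothetical, and the keys `epoch`, `step`, and `pretrain` are borrowed from the example later in this post.

```python
import json
from pathlib import Path

# Location where SageMaker places the HyperParameters map
# inside a training container.
HYPERPARAMS_PATH = Path("/opt/ml/input/config/hyperparameters.json")


def load_hyperparameters(path=HYPERPARAMS_PATH):
    """Return the hyperparameters as a dict of strings ({} if the file is absent)."""
    path = Path(path)
    if not path.exists():
        return {}
    with path.open() as f:
        return json.load(f)


def parse_training_args(raw):
    """Convert the string values into typed arguments (hypothetical helper)."""
    return {
        "epoch": int(raw.get("epoch", "1")),   # default is an assumption
        "step": int(raw.get("step", "10")),    # default is an assumption
        "pretrain": raw.get("pretrain"),
    }


if __name__ == "__main__":
    print(parse_training_args(load_hyperparameters()))
```

Because the values always arrive as strings, the training script has to do its own type conversion, which is why the example later in this post wraps numbers in `str(...)`.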

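- Listing jobs

      # Setup assumed by all three routes (not shown in this excerpt):
      #   import boto3
      #   from chalice import Chalice, BadRequestError
      #   app = Chalice(app_name='sagemakertrainer')  # app name is an assumption
      #   sm = boto3.client('sagemaker')

      @app.route('/list/{results}')
      def list_jobs(results):
          # Return the most recent jobs as [name, status] pairs
          jobs = sm.list_training_jobs(MaxResults=int(results),
                                       SortBy="CreationTime",
                                       SortOrder="Descending")
          job_names = map(lambda job: [job['TrainingJobName'], job['TrainingJobStatus']],
                          jobs['TrainingJobSummaries'])
          return {'jobs': list(job_names)}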

$ http $URL/list/1

- Describing a job

      @app.route('/get/{name}')
      def get_job_by_name(name):
          job = sm.describe_training_job(TrainingJobName=name)
          return {'job': str(job)}

$ http get $URL/get/imageclassification-2018-05-12

- Retraining a job

  In practice there is no need to accept this many parameters; calling create_training_job directly is enough.

      @app.route('/train/{name}', methods=['POST'])
      def train_job_by_name(name):
          # Start from the description of an existing job and override selected fields
          job = sm.describe_training_job(TrainingJobName=name)
          body = app.current_request.json_body
          if 'TrainingJobName' not in body:
              raise BadRequestError('Missing new job name')
          else:
              job['TrainingJobName'] = body['TrainingJobName']
          if 'S3OutputPath' in body:
              job['OutputDataConfig']['S3OutputPath'] = body['S3OutputPath']
          if 'InstanceType' in body:
              job['ResourceConfig']['InstanceType'] = body['InstanceType']
          if 'InstanceCount' in body:
              job['ResourceConfig']['InstanceCount'] = int(body['InstanceCount'])
          if 'VpcConfig' in job:
              resp = sm.create_training_job(
                  TrainingJobName=job['TrainingJobName'],
                  AlgorithmSpecification=job['AlgorithmSpecification'],
                  RoleArn=job['RoleArn'],
                  InputDataConfig=job['InputDataConfig'],
                  OutputDataConfig=job['OutputDataConfig'],
                  ResourceConfig=job['ResourceConfig'],
                  StoppingCondition=job['StoppingCondition'],
                  HyperParameters=job['HyperParameters'] if 'HyperParameters' in job else {},
                  VpcConfig=job['VpcConfig'],
                  Tags=job['Tags'] if 'Tags' in job else [])
          else:
              # Because VpcConfig cannot be empty like HyperParameters or Tags :-/
              resp = sm.create_training_job(
                  TrainingJobName=job['TrainingJobName'],
                  AlgorithmSpecification=job['AlgorithmSpecification'],
                  RoleArn=job['RoleArn'],
                  InputDataConfig=job['InputDataConfig'],
                  OutputDataConfig=job['OutputDataConfig'],
                  ResourceConfig=job['ResourceConfig'],
                  StoppingCondition=job['StoppingCondition'],
                  HyperParameters=job['HyperParameters'] if 'HyperParameters' in job else {},
                  Tags=job['Tags'] if 'Tags' in job else [])
          return {'ResponseMetadata': resp['ResponseMetadata']}

$ http post $URL/train/DEMO-imageclassification-2018-05-10-09-58-12 \
    TrainingJobName=imageclassification-2018-05-12 \
    InstanceType=ml.p3.2xlarge \
    InstanceCount=2

2. Asynchronous execution

Ref: End-to-End Multiclass Image Classification Example

With HyperParameters modified as follows, the job runs.

    training_params = {
        # specify the training docker image
        "AlgorithmSpecification": {
            "TrainingImage": training_image,
            "TrainingInputMode": "File"
        },
        "RoleArn": role,
        "OutputDataConfig": {
            "S3OutputPath": 's3://{}/{}/output'.format(bucket, job_name_prefix)
        },
        "ResourceConfig": {
            "InstanceCount": 1,
            "InstanceType": "ml.c5.2xlarge",
            "VolumeSizeInGB": 50
        },
        "TrainingJobName": job_name,
        "HyperParameters": {
            # "image_shape": image_shape,
            # "num_layers": str(num_layers),
            # "num_training_samples": str(num_training_samples),
            # "num_classes": str(num_classes),
            # "mini_batch_size": str(mini_batch_size),
            # "epochs": str(epochs),
            # "learning_rate": str(learning_rate),
            "train": "dataset",
            "test": "dataset",
            "pretrain": "INPUT/my_model.h5",
            "output": "OUTPUT",
            "epoch": str(3),
            "step": str(30)
        },
        "StoppingCondition": {
            "MaxRuntimeInSeconds": 360000
        },
        # Training data should be inside a subdirectory called "train"
        # Validation data should be inside a subdirectory called "validation"
        # The algorithm currently only supports fullyreplicated model
        # (where data is copied onto each machine)
        # "InputDataConfig": [
        #     {
        #         "ChannelName": "train",
        #         "DataSource": {
        #             "S3DataSource": {
        #                 "S3DataType": "S3Prefix",
        #                 "S3Uri": s3_train,
        #                 "S3DataDistributionType": "FullyReplicated"
        #             }
        #         },
        #         "ContentType": "application/x-recordio",
        #         "CompressionType": "None"
        #     },
        #     {
        #         "ChannelName": "validation",
        #         "DataSource": {
        #             "S3DataSource": {
        #                 "S3DataType": "S3Prefix",
        #                 "S3Uri": s3_validation,
        #                 "S3DataDistributionType": "FullyReplicated"
        #             }
        #         },
        #         "ContentType": "application/x-recordio",
        #         "CompressionType": "None"
        #     }
        # ]
    }

But the Lambda function cannot block while the job runs, and on the training side we also want to follow the training log in real time. What can be done?
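One possible answer, sketched under assumptions: `create_training_job` returns as soon as the job is accepted, so the Lambda function does not have to wait. A later, separate invocation can check progress with `describe_training_job`, and the container's output is delivered to CloudWatch Logs under the `/aws/sagemaker/TrainingJobs` log group. The function names and the event shape below are hypothetical, and the client is passed in so the logic can be exercised without AWS access.

```python
# Terminal values of TrainingJobStatus in the SageMaker API.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def start_training(sm_client, training_params):
    """Submit the job and return immediately with its ARN (no waiting)."""
    response = sm_client.create_training_job(**training_params)
    return response["TrainingJobArn"]


def check_training(sm_client, job_name):
    """Return (status, finished) for a job; meant for a later, separate invocation."""
    info = sm_client.describe_training_job(TrainingJobName=job_name)
    status = info["TrainingJobStatus"]
    return status, status in TERMINAL_STATUSES


def lambda_handler(event, context):
    """Hypothetical Lambda entry point: event carries the training_params dict."""
    import boto3  # imported lazily so the helpers above stay testable offline

    sm_client = boto3.client("sagemaker")
    arn = start_training(sm_client, event["training_params"])
    return {"TrainingJobArn": arn}
```

The design choice here is to keep submission and monitoring in different invocations, so no single Lambda run is tied to the length of the training job.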

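Step 1

Receive the job from a queue: [AWS] How to Use SQS as an Event Source for AWS Lambda

More precisely, with SQS configured as an event source, Lambda polls the queue and invokes the function with batches of messages, so the function code itself never polls.

Reference: [AWS] ECS - Fargate pratice step by step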

    # Inside the Lambda handler; assumes `import ast`, `import boto3`
    # and `client = boto3.client('ecs')`.
    for record in event["Records"]:
        reqBody = record['body']
        dictBody = ast.literal_eval(reqBody)
        job_id = dictBody["job_id"]
        print("Job Id: ", job_id)
        start_index = dictBody["job_details"]["start_index"]
        print("Start Index: ", start_index)
        # ... ...

        # Triggering the ECS Fargate Job
        response = client.run_task(
            cluster='detection-demo',  # name of the cluster
            launchType='FARGATE',
            taskDefinition='Image-Mask:66',  # replace with your task definition name and revision
            count=1,
            platformVersion='LATEST',
            networkConfiguration={
                'awsvpcConfiguration': {
                    'subnets': [
                        'subnet-37b06788',  # replace with your public subnet or a private with NAT
                        'subnet-37b06788'   # Second is optional, but good idea to have two
                    ],
                    'assignPublicIp': 'ENABLED'
                }
            },
            overrides={
                'containerOverrides': [
                    {
                        'name': 'demo-fargate',
                        'environment': [
                            {'name': 'job_id', 'value': job_id},
                            # environment values must be strings
                            {'name': 'start_index', 'value': str(start_index)},
                            # ... ...
                        ]
                    },
                ]
            })
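The loop above parses each message with `ast.literal_eval`, which only works when the producer sends a Python-literal string. If the producer sends JSON instead (an assumption about the sender, not something stated here), the parsing can be isolated in a small function like the following sketch. `parse_job` is a hypothetical name, and the field names follow the code above.

```python
import json


def parse_job(record):
    """Extract the job fields from one SQS record of a Lambda event."""
    body = json.loads(record["body"])
    details = body["job_details"]
    return {
        "job_id": str(body["job_id"]),
        # Container environment overrides only accept string values.
        "start_index": str(details["start_index"]),
    }


def lambda_handler(event, context):
    """Return the parsed jobs; launching the Fargate task is omitted here."""
    return [parse_job(record) for record in event["Records"]]
```

Keeping the parsing separate from the `run_task` call makes it easy to test the message format on its own.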

Step 3

The values are written into the container's environment variables, and the runtime code then reads them back.

    import os
    import time

    if __name__ == '__main__':
        try:
            print(os.environ)
            job_id = os.environ["job_id"]
            print("Job Id: " + str(job_id))
            print("Image masking started for Job id: " + str(job_id))
            current_time = str(int((time.time() + 0.5) * 1000))
            # environment variables are strings, so convert explicitly
            start_index = int(os.environ["start_index"])
            print("Starting Index: " + str(start_index))
            ... ...

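3. Pay-per-run pricing

Cheapest GPU: jobs run serially, free of charge.

Better GPU: jobs run in parallel and are billed on demand; for example, one training run takes ten minutes and the user pays per run.

- Instance selection and pricing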

Ref: https://aws.amazon.com/sagemaker/pricing/

| Instance type (Accelerated Computing) | vCPU | Memory | Price per Hour | Note |
|---|---|---|---|---|
| ml.p3.2xlarge | 8 | 61 GiB | $3.825 | |
| ml.p3.8xlarge | 32 | 244 GiB | $14.688 | |
| ml.p3.16xlarge | 64 | 488 GiB | $28.152 | |
| ml.g4dn.xlarge | 4 | 16 GiB | $0.7364 | 1100 USD per month |
| ml.g4dn.2xlarge | 8 | 32 GiB | $1.0528 | |
| ml.g4dn.4xlarge | 16 | 64 GiB | $1.6856 | |
| ml.g4dn.8xlarge | 32 | 128 GiB | $3.0464 | |
| ml.g4dn.12xlarge | 48 | 192 GiB | $5.4768 | |
| ml.g4dn.16xlarge | 64 | 256 GiB | $6.0928 | |
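To make the pay-per-run idea concrete, the cost of one training run is the hourly price multiplied by the run's duration. The sketch below uses two hourly prices from the table above and the ten-minute duration mentioned earlier. `cost_per_run` is a hypothetical helper, and since prices change over time the figures are only illustrative.

```python
# Hourly on-demand prices (USD) copied from the table above.
PRICE_PER_HOUR = {
    "ml.p3.2xlarge": 3.825,
    "ml.g4dn.xlarge": 0.7364,
}


def cost_per_run(instance_type, minutes, instance_count=1):
    """Cost in USD of one training run of the given length."""
    return PRICE_PER_HOUR[instance_type] * (minutes / 60.0) * instance_count


if __name__ == "__main__":
    for name in PRICE_PER_HOUR:
        print(name, round(cost_per_run(name, minutes=10), 4))
```

At these prices a ten-minute run costs roughly $0.64 on ml.p3.2xlarge and roughly $0.12 on ml.g4dn.xlarge.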

Ref: https://www.amazonaws.cn/en/ec2/instance-types/

Amazon EC2 P3 instances deliver high performance compute in the cloud with up to 8 NVIDIA® V100 Tensor Core GPUs and up to 100 Gbps of networking throughput for machine learning and HPC applications. These instances deliver up to one petaflop of mixed-precision performance per instance to significantly accelerate machine learning and high performance computing applications. Amazon EC2 P3 instances have been proven to reduce machine learning training times from days to minutes, as well as increase the number of simulations completed for high performance computing by 3-4x.

- p3.2xlarge: 1 GPU, 8 vCPUs, 61 GiB of memory, up to 10 Gbps network performance
- p3.8xlarge: 4 GPUs, 32 vCPUs, 244 GiB of memory, 10 Gbps network performance
- p3.16xlarge: 8 GPUs, 64 vCPUs, 488 GiB of memory, 25 Gbps network performance

Amazon EC2 G4 instances deliver a cost-effective GPU instance for deploying machine learning models in production and graphics-intensive applications. G4 instances provide the latest generation NVIDIA T4 GPUs, AWS custom Intel Cascade Lake CPUs, up to 100 Gbps of networking throughput, and up to 1.8 TB of local NVMe storage. These instances deliver up to 65 TFLOPs of FP16 performance to accelerate machine learning inference applications and ray-tracing cores to accelerate graphics workloads such as graphics workstations, video transcoding, and game streaming in the cloud.

- g4dn.xlarge: 1 GPU, 4 vCPUs, 16 GiB of memory, 125 GB NVMe SSD, up to 25 Gbps network performance
- g4dn.2xlarge: 1 GPU, 8 vCPUs, 32 GiB of memory, 225 GB NVMe SSD, up to 25 Gbps network performance
- g4dn.4xlarge: 1 GPU, 16 vCPUs, 64 GiB of memory, 225 GB NVMe SSD, up to 25 Gbps network performance
- g4dn.8xlarge: 1 GPU, 32 vCPUs, 128 GiB of memory, 1x900 GB NVMe SSD, 50 Gbps network performance
- g4dn.16xlarge: 1 GPU, 64 vCPUs, 256 GiB of memory, 1x900 GB NVMe SSD, 50 Gbps network performance
- g4dn.12xlarge: 4 GPUs, 48 vCPUs, 192 GiB of memory, 1x900 GB NVMe SSD, 50 Gbps network performance

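- Performance comparison

The P4, T4, P40, and V100 are the flagship products of the NVIDIA Tesla GPU line.

For comparison: at FP32 the 2080 Ti reaches 80% of the Tesla V100's speed, and at FP16 it reaches 82%, while its price is about one fifth of the Tesla V100's.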

| Specification | Tesla T4: the world's leading inference accelerator | Tesla V100: the universal data center GPU | Tesla P4 for ultra-efficient, scale-out servers | Tesla P40 for inference-throughput servers |
|---|---|---|---|---|
| Single-precision performance (FP32) | 8.1 TFLOPS | 14 TFLOPS (PCIe), 15.7 TFLOPS (SXM2) | 5.5 TFLOPS | 12 TFLOPS |
| Half-precision performance (FP16) | 65 TFLOPS | 112 TFLOPS (PCIe), 125 TFLOPS (SXM2) | - | - |
| Integer operations (INT8) | 130 TOPS | - | 22 TOPS* | 47 TOPS* |
| Integer operations (INT4) | 260 TOPS | - | - | - |
| GPU memory | 16 GB | 32/16 GB HBM2 | 8 GB | 24 GB |
| Memory bandwidth | 320 GB/s | 900 GB/s | 192 GB/s | 346 GB/s |
| System interface / form factor | PCI Express, low-profile | PCI Express, dual-slot full-height; SXM2/NVLink | PCI Express, low-profile | PCI Express, dual-slot full-height |
| Power | 70 W | 250 W (PCIe), 300 W (SXM2) | 50 W/75 W | 250 W |
| Hardware-accelerated video engine | 1 decode engine, 2 encode engines | - | 1 decode engine, 2 encode engines | 1 decode engine, 2 encode engines |

Triggering "inference"

Video: Invoking a SageMaker model from a Lambda function

Code: https://github.com/juliensimon/aws/tree/master/lambda_frameworks/chalice/predictor

1. Deploying for inference

- High-level deployment

[Elastic Inference]

Deploy the best model on a GPU instance, and with Elastic Inference.

c5.large+eia1.medium give you performance comparable to p2.xlarge at 77% discount.

You'll save $754 per instance per month.

The only change is the added accelerator_type argument: performance is about the same, but the price is very different.

Ref: https://gitlab.com/juliensimon/amazon-studio-demos/blob/master/elastic-inference.ipynb

    ic_endpoint = ic.deploy(initial_instance_count=1,
                            instance_type='ml.p2.xlarge',          # $1.361/hour in eu-west-1
                            endpoint_name='ic-endpoint')

    ic_endpoint_ei = ic.deploy(initial_instance_count=1,
                               instance_type='ml.c5.large',        # $0.134/hour in eu-west-1
                               accelerator_type='ml.eia2.medium',  # $0.181/hour in eu-west-1
                               endpoint_name='ic-endpoint-ei')
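The discount quoted above can be checked from the hourly prices in the code comments. This is a rough sketch that assumes a 720-hour month; the source does not say which month length it used.

```python
# Hourly prices in eu-west-1 (USD), taken from the comments in the snippet above.
P2_XLARGE = 1.361
C5_LARGE = 0.134
EIA2_MEDIUM = 0.181

HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month


def ei_savings():
    """Return (discount fraction, monthly saving in USD) of c5.large + EI vs p2.xlarge."""
    ei_total = C5_LARGE + EIA2_MEDIUM
    discount = 1 - ei_total / P2_XLARGE
    monthly_saving = (P2_XLARGE - ei_total) * HOURS_PER_MONTH
    return discount, monthly_saving


if __name__ == "__main__":
    discount, saving = ei_savings()
    print(f"discount: {discount:.1%}, monthly saving: ${saving:.2f}")
```

Under these assumptions the discount comes out at about 77% and the saving at about $753 per instance per month, in line with the quoted figures.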

- Deployment with Boto3

(1) Model

    %%time
    import time
    import boto3
    # SageMaker Python SDK v1 helper; SDK v2 uses sagemaker.image_uris.retrieve instead
    from sagemaker.amazon.amazon_estimator import get_image_uri

    sage = boto3.Session().client(service_name='sagemaker')

    model_name = "DEMO-full-image-classification-model-" + time.strftime('-%Y-%m-%d-%H-%M-%S', time.gmtime())
    print(model_name)

    # Locate the model artifacts produced by the training job
    info = sage.describe_training_job(TrainingJobName=job_name)
    model_data = info['ModelArtifacts']['S3ModelArtifacts']
    print(model_data)

    # Inference image for the built-in image-classification algorithm
    hosting_image = get_image_uri(boto3.Session().region_name, 'image-classification')
    primary_container = {
        'Image': hosting_image,
        'ModelDataUrl': model_data,
    }

    create_model_response = sage.create_model(
        ModelName=model_name,
        ExecutionRoleArn=role,
        PrimaryContainer=primary_container)
    print(create_model_response['ModelArn'])

(2) Endpoint config

    import time

    timestamp = time.strftime('-%Y-%m-%d-%H-%M-%S', time.gmtime())
    endpoint_config_name = job_name_prefix + '-epc-' + timestamp

    endpoint_config_response = sage.create_endpoint_config(
        EndpointConfigName=endpoint_config_name,
        ProductionVariants=[{
            'InstanceType': 'ml.m4.xlarge',
            'InitialInstanceCount': 1,
            'ModelName': model_name,
            'AcceleratorType': 'ml.eia1.large',
            'VariantName': 'AllTraffic'}])

    print('Endpoint configuration name: {}'.format(endpoint_config_name))
    print('Endpoint configuration arn: {}'.format(endpoint_config_response['EndpointConfigArn']))

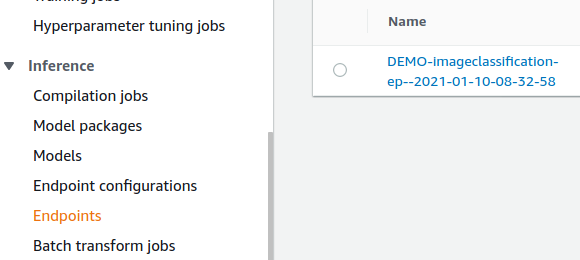

(3) Endpoint

    %%time
    import time

    timestamp = time.strftime('-%Y-%m-%d-%H-%M-%S', time.gmtime())
    endpoint_name = job_name_prefix + '-ep-' + timestamp
    print('Endpoint name: {}'.format(endpoint_name))

    endpoint_params = {
        'EndpointName': endpoint_name,
        'EndpointConfigName': endpoint_config_name,
    }
    # `sage` is the boto3 SageMaker client created in step (1)
    endpoint_response = sage.create_endpoint(**endpoint_params)
    print('EndpointArn = {}'.format(endpoint_response['EndpointArn']))

Wait for creation to finish.

    # get the status of the endpoint (`sage` is the boto3 SageMaker client from step (1))
    response = sage.describe_endpoint(EndpointName=endpoint_name)
    status = response['EndpointStatus']
    print('EndpointStatus = {}'.format(status))

    # wait until the status has changed
    sage.get_waiter('endpoint_in_service').wait(EndpointName=endpoint_name)

    # print the status of the endpoint
    endpoint_response = sage.describe_endpoint(EndpointName=endpoint_name)
    status = endpoint_response['EndpointStatus']
    print('Endpoint creation ended with EndpointStatus = {}'.format(status))

    if status != 'InService':
        raise Exception('Endpoint creation failed.')

Once the endpoint is in service, hourly billing starts and the endpoint can be invoked.
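A minimal sketch of invoking such an endpoint from a Lambda function through the `sagemaker-runtime` client's `invoke_endpoint` call. The handler name, the `ENDPOINT_NAME` environment variable, the event shape, and the `application/x-image` content type are assumptions for illustration; the predictor code linked above may be organized differently.

```python
import base64
import json
import os


def predict(runtime_client, endpoint_name, payload, content_type="application/x-image"):
    """Send raw bytes to the endpoint and return the decoded JSON response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType=content_type,
        Body=payload,
    )
    # 'Body' is a streaming object; read it fully before parsing.
    return json.loads(response["Body"].read())


def lambda_handler(event, context):
    """Hypothetical entry point: event['image'] holds a base64-encoded image."""
    import boto3  # lazy import keeps predict() testable without AWS access

    runtime_client = boto3.client("sagemaker-runtime")
    payload = base64.b64decode(event["image"])
    result = predict(runtime_client, os.environ["ENDPOINT_NAME"], payload)
    return {"prediction": result}
```

Passing the client into `predict` keeps the AWS dependency at the edge of the function, so the request and response handling can be tested with a stub.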

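2. Invoking the runtime service from Lambda

Ref: https://www.ec2instances.info/ (rough estimate: about $200 per month)

Keeping every model loaded in memory, as in the comparison below, guarantees real-time latency but is too expensive; it is better to keep the models on disk.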

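| | Single-model endpoints | Multi-model endpoint |
|---|---|---|
| Total endpoint price per month | 171,360 USD | 1,017 USD |
| Endpoint instance type | ml.c5.large | ml.r5.2xlarge |
| Memory capacity (GiB) | 4 | 64 |
| Endpoint price per hour | 0.119 USD | 0.706 USD |
| Instances per endpoint | 2 | 2 |
| Endpoints needed for 1,000 models | 1000 | 1 |
| Endpoint p90 latency (ms) | 7 | 7 |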
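The monthly totals in the table follow from the hourly price, the number of instances per endpoint, and the number of endpoints. A quick check, assuming a 720-hour month (an assumption that happens to reproduce the table's figures):

```python
HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month


def monthly_cost(price_per_hour, instances_per_endpoint, endpoints):
    """Total monthly price in USD for a fleet of identical endpoints."""
    return price_per_hour * instances_per_endpoint * endpoints * HOURS_PER_MONTH


# Figures taken from the table above.
single_model = monthly_cost(0.119, instances_per_endpoint=2, endpoints=1000)
multi_model = monthly_cost(0.706, instances_per_endpoint=2, endpoints=1)

if __name__ == "__main__":
    print(round(single_model), round(multi_model))
```

The gap comes almost entirely from the endpoint count: 1,000 small endpoints at about 171,360 USD per month versus one larger endpoint at about 1,017 USD.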

End.

    # Full version of the Lambda loop; assumes `import ast`, `import boto3`
    # and `client = boto3.client('ecs')`.
    for record in event["Records"]:
        reqBody = record['body']
        dictBody = ast.literal_eval(reqBody)

        job_id = dictBody["job_id"]
        print("Job Id: ", job_id)

        start_index = dictBody["job_details"]["start_index"]
        print("Start Index: ", start_index)

        max_length = dictBody["job_details"]["max_length"]
        print("Max Length: ", max_length)

        current_model_path = dictBody["job_details"]["model"]["current"]
        print("Current Model Path: ", current_model_path)

        input = dictBody["job_details"]["input"]
        print("Input: ", input)

        output = dictBody["job_details"]["output"]
        print("Output: ", output)

        # Triggering the ECS Fargate Job
        response = client.run_task(
            cluster='detection-mask',  # name of the cluster
            launchType='FARGATE',
            taskDefinition='Image-Mask:6',  # replace with your task definition name and revision
            count=1,
            platformVersion='LATEST',
            networkConfiguration={
                'awsvpcConfiguration': {
                    'subnets': [
                        'subnet-37b06739',  # replace with your public subnet or a private with NAT
                        'subnet-37b06739'   # Second is optional, but good idea to have two
                    ],
                    'assignPublicIp': 'ENABLED'
                }
            },
            overrides={
                'containerOverrides': [
                    {
                        'name': 'detection-mask-fargate',
                        'environment': [
                            {'name': 'job_id', 'value': job_id},
                            # environment values must be strings
                            {'name': 'start_index', 'value': str(start_index)},
                            {'name': 'max_length', 'value': str(max_length)},
                            {'name': 'current_model', 'value': current_model_path},
                            {'name': 'input', 'value': input},
                            {'name': 'output', 'value': output}
                        ]
                    },
                ]
            })
