[SageMaker] Computer Vision & Large Scale Training ***

SageMaker Fridays Season 2, Episode 6 - Computer vision & large scale training (November 2020)

The data are images and training starts from scratch, which is exactly where distributed ML training shows its value.

This episode explains how to train computer vision models on large-scale datasets. Starting from the ImageNet dataset, we use SageMaker to train a model with the built-in image classification algorithm on 64 GPUs. We also discuss SageMaker features that help you scale, such as RecordIO files, pipe mode, distributed training, and GPU instances.

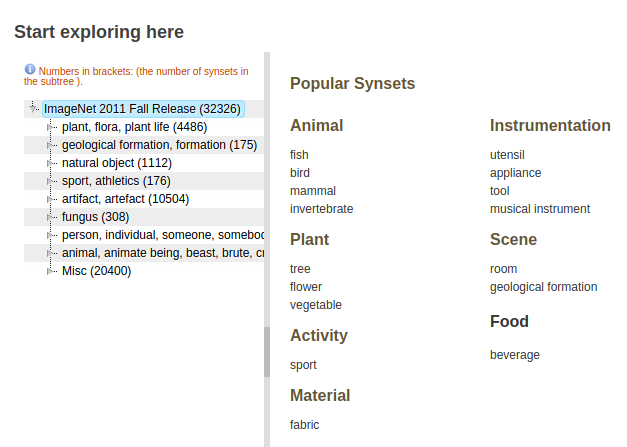

Dataset

Dataset: http://image-net.org/explore

Experiment 1

21:00:49:15, data_prep.txt

25:51:49:15, notebook.

8 GPUs per instance × 8 instances = 64 GPUs

I. MXNet key points

Part 1: Training MXNet — part 1: MNIST

Part 2: Training on CIFAR-10

Part 3: CIFAR-10 redux

Part 4: Distributed training [excellent]

Part 5: Distributed training, EFS edition [excellent]

Part 1: on a single machine with several GPUs, put more of the GPUs to work.

Ref: https://github.com/juliensimon/aws/tree/master/mxnet/mnist

    mod = mx.mod.Module(lenet)
    #mod = mx.mod.Module(lenet, context=mx.gpu(0))
    #mod = mx.mod.Module(lenet, context=(mx.gpu(0), mx.gpu(1), mx.gpu(2)))
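When several contexts are passed, the module trains in a data-parallel fashion: each batch is divided among the listed GPUs. The sketch below only illustrates that idea in plain Python. `split_batch` is a hypothetical helper, not an MXNet function, and the exact way MXNet handles uneven batch sizes is not reproduced here.

```python
def split_batch(batch, num_devices):
    """Split one batch into num_devices contiguous, near-equal slices."""
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    base, extra = divmod(len(batch), num_devices)
    slices, start = [], 0
    for device in range(num_devices):
        # The first `extra` devices take one additional sample.
        size = base + (1 if device < extra else 0)
        slices.append(batch[start:start + size])
        start += size
    return slices


batch = list(range(8))        # a hypothetical batch of 8 sample indices
print(split_batch(batch, 3))  # one slice per GPU
```

With three GPUs, each device processes roughly a third of every batch, which is why adding contexts on one machine can shorten each epoch.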

Part 2: for the more challenging CIFAR-10 dataset, switch to ResNeXt-101.

Fine-tuning means that we’re going to keep all layers and pre-trained weights unchanged, except for the last layer.

sym, arg_params, aux_params = mx.model.load_checkpoint("resnext-101",0)

mod = mx.mod.Module(symbol=sym, context=(mx.gpu(0), mx.gpu(1), mx.gpu(2), mx.gpu(3)))

Part 3: the learning rate when training fully from scratch

How to focus on improving validation accuracy.

Gradually reducing the learning rate is a key technique in improving validation accuracy.
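A minimal sketch of one common way to reduce the learning rate gradually, namely step decay. The function name `step_decay_lr`, the base rate of 0.05, the milestones, and the factor of 0.1 are all illustrative assumptions, not values taken from the original series.

```python
def step_decay_lr(base_lr, epoch, milestones, factor=0.1):
    """Return the learning rate for `epoch` under step decay."""
    # Count how many milestones have already been reached.
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * (factor ** drops)


for epoch in (0, 25, 50, 75):
    print(epoch, step_decay_lr(0.05, epoch, milestones=(25, 50, 75)))
```

Each milestone multiplies the rate by the factor, so the schedule needs a base rate, a factor, and a list of epochs to tune.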

But we could also do without all these parameters, thanks to the AdaDelta optimizer.

    # Use ResNext-110
    sym = resnext.get_symbol(10, 110, "3,32,32")
    mod = mx.mod.Module(symbol=sym, context=(mx.gpu(0), mx.gpu(1), mx.gpu(2), mx.gpu(3)))
    mod.bind(data_shapes=train_iter.provide_data, label_shapes=train_iter.provide_label)
    mod.init_params(initializer=mx.init.Xavier())
    mod.fit(train_iter, eval_data=valid_iter, optimizer='adadelta', num_epoch=epochs)

Part 4: skipped for now

More precisely, it took 12+ hours using all 4 GPUs of a g2.8xlarge instance.

Could we go faster? Sure, I could use a p2.16xlarge instance. That’s as large as GPU servers get.

Even faster? We need distributed training.

As I mentioned before, it took about 12 hours to run 300 epochs on the 4 GPUs of a g2.8xlarge instance.

The combined 32 GPUs of the 4 p2.8xlarge instances did it in 91 minutes!
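The quoted timings can be turned into a rough speed-up figure. This is only back-of-the-envelope arithmetic: the baseline is "about 12 hours", and the two instance families use different GPU models, so the result is not a pure measure of scaling efficiency. The helper name `speedup` is hypothetical.

```python
def speedup(baseline_minutes, new_minutes):
    """How many times faster the new run is than the baseline."""
    return baseline_minutes / new_minutes


baseline = 12 * 60   # about 12 hours on 4 GPUs (one g2.8xlarge)
distributed = 91     # 91 minutes on 32 GPUs (four p2.8xlarge)

ratio = speedup(baseline, distributed)
gpu_ratio = 32 / 4
print(f"speed-up: {ratio:.1f}x with {gpu_ratio:.0f}x the GPUs")
# prints: speed-up: 7.9x with 8x the GPUs
```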

Part 5: skipped for now

This part shows how to share the dataset across all instances with Amazon EFS, a managed service fully compatible with NFS v4.1.

Distributed Deep Learning Made Easy, CloudFormation Stack

The above is the original approach, from 2017. Is there a ready-made API for this now?

II. train_instance_count

Ref: Scale up Training of Your ML Models with Distributed Training on Amazon SageMaker

-

Does this parameter actually make a difference?

    mnist_estimator = TensorFlow(entry_point='mnist.py',
                                 role=role,
                                 train_instance_count=1,
                                 train_instance_type='ml.p3.2xlarge',
                                 framework_version='1.15.2',
                                 py_version='py3',
                                 distributions={'parameter_server': {'enabled': True}})

Parameter Server - Built In Algorithms, 7:10/15:18

Parameter Server - TensorFlow Script Mode, 8:14/15:18 [the example above is of this type]
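To make the parameter-server idea concrete, here is a toy, single-process simulation: workers compute gradients on their own data shard, and a server averages them and updates the shared weight. This is an assumption-laden sketch, not how SageMaker or TensorFlow implements it. The class and function names are hypothetical, the update is synchronous for simplicity, and real deployments are often asynchronous and run across machines.

```python
class ParameterServer:
    """Holds the shared weight and applies averaged gradients."""

    def __init__(self, weight=0.0, lr=0.1):
        self.weight = weight
        self.lr = lr

    def pull(self):
        return self.weight

    def push(self, gradients):
        # Synchronous update: average the workers' gradients, then step.
        self.weight -= self.lr * sum(gradients) / len(gradients)


def worker_gradient(weight, shard):
    """Gradient of mean squared error for y = weight * x on one shard."""
    return sum(2 * (weight * x - y) * x for x, y in shard) / len(shard)


# Toy data generated from y = 3x, split into one shard per worker.
data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
shards = [data[:2], data[2:]]

server = ParameterServer()
for _ in range(100):
    w = server.pull()
    server.push([worker_gradient(w, shard) for shard in shards])

print(round(server.weight, 4))
```

Every step involves a pull and a push between workers and server. For a small model such as the MNIST example, that communication can easily outweigh the compute saved by adding an instance.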

Key parts of the log:

    "num_cpus": 8,
    "num_gpus": 1,
    INFO:tensorflow:loss = 0.09097028, step = 29500 (0.269 sec)
    INFO:tensorflow:global_step/sec: 369.804
    INFO:tensorflow:loss = 0.15778117, step = 29600 (0.270 sec)
    INFO:tensorflow:global_step/sec: 366.008
    INFO:tensorflow:loss = 0.13056618, step = 29700 (0.273 sec)
    INFO:tensorflow:global_step/sec: 370.761
    INFO:tensorflow:loss = 0.026432596, step = 29800 (0.270 sec)
    INFO:tensorflow:global_step/sec: 369.304
    INFO:tensorflow:loss = 0.059833053, step = 29900 (0.271 sec)
    INFO:tensorflow:Saving dict for global step 30000: accuracy = 0.9753, global_step = 30000, loss = 0.07867345
    INFO:tensorflow:Loss for final step: 0.079707995.
    debug 0111: duration is 93.74298906326294
    2021-01-11 08:59:35 Uploading - Uploading generated training model
    2021-01-11 08:59:35 Completed - Training job completed
    Training seconds: 189
    Billable seconds: 189
    wall time is 344.06185269355774

For comparison, with train_instance_count=2 there is no visible speed-up. Each worker actually logs far fewer steps per second (about 57 versus about 370), and the run takes longer. Why?

    # Each instance appears to run on its own
    "num_cpus": 8,
    "num_gpus": 1,
    INFO:tensorflow:loss = 0.0903343, step = 29240 (5.858 sec)
    INFO:tensorflow:global_step/sec: 57.0652
    INFO:tensorflow:loss = 0.07312758, step = 29322 (2.485 sec)
    INFO:tensorflow:global_step/sec: 57.8806
    INFO:tensorflow:global_step/sec: 56.3061
    INFO:tensorflow:loss = 0.045585766, step = 29465 (2.538 sec)
    INFO:tensorflow:global_step/sec: 56.88
    INFO:tensorflow:loss = 0.03344103, step = 29574 (5.872 sec)
    INFO:tensorflow:loss = 0.09144282, step = 29608 (2.522 sec)
    INFO:tensorflow:global_step/sec: 56.1107
    INFO:tensorflow:global_step/sec: 57.3806
    INFO:tensorflow:loss = 0.11538148, step = 29750 (2.487 sec)
    INFO:tensorflow:global_step/sec: 56.2365
    INFO:tensorflow:loss = 0.098466985, step = 29894 (2.564 sec)
    INFO:tensorflow:loss = 0.1305417, step = 29906 (5.866 sec)
    INFO:tensorflow:global_step/sec: 56.342
    INFO:tensorflow:Saving dict for global step 30002: accuracy = 0.9779, global_step = 30002, loss = 0.073841065
    INFO:tensorflow:Loss for final step: 0.15788993.
    debug 0111: duration is 545.3711259365082
    INFO:tensorflow:Loss for final step: 0.07843175.
    debug 0111: duration is 551.2653501033783
    2021-01-11 09:36:18 Completed - Training job completed
    ProfilerReport-1610356840: IssuesFound
    Training seconds: 1560
    Billable seconds: 1560
    wall time is 959.4712088108063
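To put a number on the comparison, the `global_step/sec` lines from the two logs can be averaged. The sketch below uses only a couple of lines from each log. The helper `mean_steps_per_sec` is hypothetical, and each figure is whatever the logging process reported, so treat the ratio as indicative only.

```python
import re

STEP_RATE = re.compile(r"global_step/sec: ([0-9.]+)")


def mean_steps_per_sec(log_text):
    """Average every global_step/sec value found in a log excerpt."""
    rates = [float(m) for m in STEP_RATE.findall(log_text)]
    if not rates:
        raise ValueError("no global_step/sec lines found")
    return sum(rates) / len(rates)


one_instance = """
INFO:tensorflow:global_step/sec: 369.804
INFO:tensorflow:global_step/sec: 366.008
"""
two_instances = """
INFO:tensorflow:global_step/sec: 57.0652
INFO:tensorflow:global_step/sec: 57.8806
"""

ratio = mean_steps_per_sec(one_instance) / mean_steps_per_sec(two_instances)
print(f"one instance logs about {ratio:.1f}x more steps per second")
```

The same picture shows up in the other figures: the reported duration grows from about 94 s to about 545 s, and billable seconds from 189 to 1560.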

III. SageMaker Distributed Training

Official tutorial: Distributed Training

Three examples: Distributed Training Jupyter Notebook Examples

For vision (image) models, try MNIST or MaskRCNN. For language (text) models, try BERT.

-

NVIDIA

It turns out they are all here:

Ref: https://github.com/NVIDIA/DeepLearningExamples

Ref: https://github.com/HerringForks/DeepLearningExamples

| Models | Framework | A100 | AMP | Multi-GPU | Multi-Node | TRT | ONNX | Triton | DLC | NB |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet-50 | PyTorch | Yes | Yes | Yes | - | Yes | - | Yes | Yes | - |
| ResNeXt-101 | PyTorch | Yes | Yes | Yes | - | Yes | - | Yes | Yes | - |
| SE-ResNeXt-101 | PyTorch | Yes | Yes | Yes | - | Yes | - | Yes | Yes | - |
| Mask R-CNN | PyTorch | Yes | Yes | Yes | - | - | - | - | - | Yes |
| SSD | PyTorch | Yes | Yes | Yes | - | - | - | - | - | Yes |
| ResNet-50 | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| ResNeXt-101 | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| SE-ResNeXt-101 | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| Mask R-CNN | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| SSD | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | Yes |
| U-Net Ind | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | Yes |
| U-Net Med | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| U-Net 3D | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| V-Net Med | TensorFlow | Yes | Yes | Yes | - | - | - | - | Yes | - |
| U-Net Med | TensorFlow2 | Yes | Yes | Yes | - | - | - | - | Yes | - |
| Mask R-CNN | TensorFlow2 | Yes | Yes | Yes | - | - | - | - | Yes | - |
| ResNet-50 | MXNet | - | Yes | Yes | - | - | - | - | - | - |

-

MaskRCNN

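Metric for judging the reduction in training time: total_steps, the total number of training steps, equal to epoch * sample_count / batch_size (sample_count is the total number of samples, epoch is the total number of passes over the data).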
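The formula can be checked with a few lines of Python. The sketch assumes data-parallel training in which the global batch size is the per-GPU batch size times the number of GPUs, and it rounds a partial final batch up to a full step. The sample count, epoch count, and per-GPU batch size are hypothetical numbers chosen for illustration.

```python
import math


def total_steps(epochs, sample_count, batch_size):
    """Total optimizer steps: epochs * ceil(sample_count / batch_size)."""
    steps_per_epoch = math.ceil(sample_count / batch_size)
    return epochs * steps_per_epoch


# Hypothetical numbers: 120,000 samples, 10 epochs, 32 samples per GPU.
for gpus in (8, 16, 32):
    global_batch = 32 * gpus
    print(gpus, total_steps(10, 120_000, global_batch))
```

Doubling the GPU count roughly halves total_steps, so if the time per step stays about the same, the training time shrinks accordingly.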

    subnets = ['<SUBNET_ID>']  # Should be the same subnet used for FSx. Example: subnet-0f9XXXX
    security_group_ids = ['<SECURITY_GROUP_ID>']  # Should be the same security group used for FSx. Example: sg-03ZZZZZZ
    job_name = 'tf2-smdataparallel-mrcnn-fsx'  # Used as the prefix of the SageMaker training job name, which makes the job easy to find in the SageMaker training job console.
    file_system_id = '<FSX_ID>'  # FSx file system ID with your training dataset. Example: 'fs-0bYYYYYY'

What is the Amazon FSx for Lustre file system?

/* implement */
