[AWS] Deploy on ECS Fargate with Bitbucket Pipelines

bitbucket-pipelines.yml

Ref: https://bitbucket.org/bitbucketpipelines/example-aws-ecs-deploy/src/master/

Ref: Common Linux commands: envsubst

Ref: What is a pipe?

Ref: What are caches? - Trying to understand pipelines caching

First, let's look at the official example.

```yaml
image:
  name: atlassian/default-image:2

pipelines:
  default:
    - step:
        name: Build and publish docker image.
        services:
          - docker # Enable Docker for your repository
        script:
          # Modify the commands below to build your repository.
          # Set the name of the docker image we will be building.
          - export IMAGE_NAME="${DOCKERHUB_USERNAME}/${BITBUCKET_REPO_SLUG}:${BITBUCKET_BUILD_NUMBER}"
          # Build the docker image and push to Dockerhub.
          - docker build -t "$IMAGE_NAME" .
          - docker login --username "$DOCKERHUB_USERNAME" --password "$DOCKERHUB_PASSWORD"
          - docker push "$IMAGE_NAME"
    - step:
        name: Deploy to ECS
        script:
          # Replace the docker image name in the task definition with the newly pushed image.
          - export IMAGE_NAME="${DOCKERHUB_USERNAME}/${BITBUCKET_REPO_SLUG}:${BITBUCKET_BUILD_NUMBER}"
          - envsubst < task-definition-template.json > task-definition.json
          # Update the task definition.
          - pipe: atlassian/aws-ecs-deploy:1.0.0
            variables:
              AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
              AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
              AWS_DEFAULT_REGION: $AWS_DEFAULT_REGION
              CLUSTER_NAME: 'example-ecs-cluster'
              SERVICE_NAME: 'example-ecs-service'
              TASK_DEFINITION: 'task-definition.json'
```

task-definition-template.json (the envsubst step renders it into task-definition.json):

```json
{
  "family": "example-ecs-app",
  "networkMode": "awsvpc",
  "containerDefinitions": [
    {
      "name": "node-app",
      "image": "${IMAGE_NAME}",
      "portMappings": [
        {
          "containerPort": 3000,
          "hostPort": 3000,
          "protocol": "tcp"
        }
      ],
      "essential": true
    }
  ],
  "requiresCompatibilities": [
    "FARGATE"
  ],
  "cpu": "256",
  "memory": "512"
}
```
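To make the envsubst step concrete, here is a minimal Python sketch that mimics it. Like GNU envsubst, it replaces both `$NAME` and `${NAME}` references and turns unset variables into empty strings; the values come from a dict instead of the process environment so the behavior is easy to check. `TEMPLATE` is an abbreviated copy of the template above and the image name is a made-up value. Because envsubst rewrites every such reference, a template that legitimately contains other `$` signs should restrict it, e.g. `envsubst '$IMAGE_NAME' < task-definition-template.json > task-definition.json`.

```python
import json
import re

# Matches ${NAME} or $NAME: the two reference forms GNU envsubst replaces.
_VAR_REF = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}|\$([A-Za-z_][A-Za-z0-9_]*)")


def envsubst(text: str, env: dict) -> str:
    """Substitute variable references from env; unset names become empty strings."""
    return _VAR_REF.sub(lambda m: str(env.get(m.group(1) or m.group(2), "")), text)


# Abbreviated copy of task-definition-template.json.
TEMPLATE = """{
  "family": "example-ecs-app",
  "networkMode": "awsvpc",
  "containerDefinitions": [
    {"name": "node-app", "image": "${IMAGE_NAME}", "essential": true}
  ],
  "requiresCompatibilities": ["FARGATE"],
  "cpu": "256",
  "memory": "512"
}"""

if __name__ == "__main__":
    # Hypothetical value; in the pipeline, IMAGE_NAME is exported by the step script.
    rendered = envsubst(TEMPLATE, {"IMAGE_NAME": "myuser/my-repo:42"})
    task_def = json.loads(rendered)  # raises if substitution produced invalid JSON
    print(task_def["containerDefinitions"][0]["image"])  # myuser/my-repo:42
```

Parsing the rendered output with `json.loads` is a cheap sanity check: an unexpected empty substitution still yields valid JSON, but a broken quote would fail here before the pipe ever sees the file.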

Practice notes

Ref: BitBucket CiCd with AWS ECR

Ref: Deploying a Node API to Amazon ECS Fargate with Bitbucket Pipelines [text only]

This walkthrough is a simple step-by-step guide to deploying a Node API to Amazon ECS Fargate using Bitbucket Pipelines.

I recently had the task of setting up a CI/CD pipeline for a Node API. I looked at a few different services, but because our code lives in Bitbucket, I ultimately settled on Bitbucket Pipelines. Since this was my first time setting up a pipeline with Bitbucket Pipelines, I scoured the web for a tutorial on doing this with Amazon ECS Fargate. Lo and behold, I did not find any accurate, up-to-date tutorials. This walkthrough is broken into 3 sections and assumes you have a basic knowledge of AWS services such as Application Load Balancers, IAM and Amazon ECS.

Creating an Application Load Balancer

Setting up ECS with Fargate

Enable and Configure Bitbucket Pipelines

Let's get started!

Step 1: Creating an Application Load Balancer (ALB)

The first step in the process is optional. A load balancer is not required for ECS, but I highly recommend one because it brings several benefits. First, it spreads traffic across multiple tasks of the same service: on the EC2 launch type this relies on dynamic host port mapping, while on Fargate every task gets its own IP address, which is why the target group must use the IP target type. Second, it lets multiple services share the same listener port on a single ALB through path- or host-based listener rules. A load balancer also lets you connect a domain to your application through Route 53 without having to set a static public IP.

Start by navigating to the EC2 dashboard, selecting Load Balancers in the left panel and then selecting “Create Load Balancer”.

Provide a name for your load balancer and leave it as internet-facing and IPv4.

Next, add listeners for both HTTP and HTTPS and select at least 2 availability zones.

Because you added an HTTPS listener, you must provide a certificate, either from AWS Certificate Manager (ACM) or from IAM.

Set up any security groups needed to expose the ports your Node service requires.

Next you will be asked to create a target group for the load balancer. Start by providing a name and selecting the IP target type (this is very important: only the IP target type works with Fargate).

Next, set the protocol to HTTP and the port to whichever port your service runs on, e.g. 3000.

You must provide a health check route for the load balancer to use to check that your service is actually running. This route must return a 200 status. Leave the protocol as HTTP.

Finally you will be asked to register a target. Skip this step for now because ECS will automatically add targets for you.
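The target group settings above can also be captured in code, which makes the one critical choice explicit. The sketch below builds keyword arguments for boto3's `elbv2` `create_target_group` call: HTTP on port 3000, IP targets, and a health check that expects a 200. The group name, VPC ID and the `/health` path are hypothetical placeholders for your own values, and boto3 is imported only inside the function that actually calls AWS, so the parameter builder works offline.

```python
def target_group_params(name: str, vpc_id: str, port: int = 3000,
                        health_path: str = "/health") -> dict:
    """Keyword arguments for elbv2.create_target_group, suitable for Fargate tasks."""
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": port,                    # the port your Node service listens on
        "VpcId": vpc_id,
        "TargetType": "ip",              # Fargate tasks register by IP address
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPath": health_path,  # hypothetical route; use your own
        "Matcher": {"HttpCode": "200"},  # the health route must answer 200
    }


def create_target_group(params: dict) -> str:
    """Create the target group with the default AWS credentials and return its ARN."""
    import boto3  # local import: only needed when actually talking to AWS

    elbv2 = boto3.client("elbv2")
    response = elbv2.create_target_group(**params)
    return response["TargetGroups"][0]["TargetGroupArn"]
```

For example, `create_target_group(target_group_params("node-api-tg", "vpc-0123456789abcdef0"))` would create the group and return its ARN once the hypothetical name and VPC ID are replaced with real ones. As in the console flow, no targets are registered here; ECS adds them.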

Step 2: Setting up ECS with Fargate

In this stage of the process you will create and configure an Amazon ECS service running on Fargate. Amazon ECS is a high-performance, highly scalable, Docker-compatible container service that lets you easily run containerized applications. With AWS Fargate you no longer need to manage EC2 instances; Fargate provisions and manages the underlying compute for you.

First things first, create a repository to store Docker images. Navigate to the AWS ECR dashboard and select “Create Repository”. Copy the repository URI as you will need it later on.
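An ECR repository URI has the form `<account-id>.dkr.ecr.<region>.amazonaws.com/<repository-name>`. The host part is the registry that `docker login` authenticates against, while the full URI (what the pipeline later stores in ECR_STAGING_REPO_URI and ECR_PRODUCTION_REPO_URI) is what you tag and push. Below is a small, hypothetical Python helper that splits such a URI; it only checks the leading host labels, so China-region hosts ending in `amazonaws.com.cn` are accepted too.

```python
from typing import NamedTuple


class EcrUri(NamedTuple):
    registry: str    # host that `docker login` authenticates against
    account_id: str
    region: str
    repository: str  # may contain slashes, e.g. "team/api"


def parse_ecr_uri(uri: str) -> EcrUri:
    """Split <account>.dkr.ecr.<region>.amazonaws.com/<repo>[:tag] into its parts."""
    registry, sep, repo = uri.partition("/")
    labels = registry.split(".")
    if not sep or not repo or len(labels) < 6 or labels[1:3] != ["dkr", "ecr"]:
        raise ValueError(f"not an ECR repository URI: {uri!r}")
    repo = repo.rsplit(":", 1)[0]  # drop a trailing :tag if present
    return EcrUri(registry, labels[0], labels[3], repo)
```

For instance, parsing `123456789012.dkr.ecr.us-west-2.amazonaws.com/node-api:latest` (a made-up account) yields the registry host, account `123456789012`, region `us-west-2` and repository `node-api`.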

Now navigate to the ECS dashboard and select “Create Cluster”.

Select the Networking Only template (powered by Fargate) and provide a name for the cluster. You can ignore the rest and select “Create”.

After the cluster is created, click on Task Definitions, then “Create new Task Definition”.

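Provide a name for the task definition and select an existing task execution role (usually ecsTaskExecutionRole) or create a new one; this role gives ECS permission to pull images from ECR and write container logs. A separate task role is only needed if your application itself calls other AWS services.

Set the task memory and CPU size your application needs.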
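Fargate does not accept arbitrary CPU and memory values: only specific pairs are valid, and registering a task definition with any other pair fails. The sketch below checks a task definition's `cpu` and `memory` strings against the long-standing combinations for 0.25 to 4 vCPU (CPU units, where 1024 = 1 vCPU; memory in MiB). The table is an assumption transcribed from the Amazon ECS documentation; newer platform versions add larger sizes, so confirm against the current docs.

```python
# Fargate CPU units -> allowed memory values in MiB (0.25 to 4 vCPU only).
# Assumption: transcribed from the Amazon ECS docs; larger sizes exist on newer platforms.
FARGATE_MEMORY_OPTIONS = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu: int, memory: int) -> bool:
    """True if (cpu, memory) is one of the combinations in the table above."""
    return memory in FARGATE_MEMORY_OPTIONS.get(cpu, [])


def check_task_definition(task_def: dict) -> None:
    """Raise ValueError if the task definition's cpu/memory strings are not a valid pair."""
    cpu, memory = int(task_def["cpu"]), int(task_def["memory"])
    if not is_valid_fargate_size(cpu, memory):
        allowed = FARGATE_MEMORY_OPTIONS.get(cpu)
        raise ValueError(f"cpu={cpu} does not allow memory={memory}; allowed: {allowed}")
```

The `"cpu": "256", "memory": "512"` pair from the official example passes this check, while 256 CPU units with 4096 MiB would be rejected.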

Next, we need to add a container. Select “Create Container” and provide a name. Paste the ECR repository URI into the image block.

Now add the port your app runs on to the port mappings section.

Add any required environment variables below in the Environment section.

You can leave the rest blank and hit “Add” then finish the task definition creation.

Once the task definition is created, navigate to the JSON tab and copy the contents. We will need this later in the process.

Now go back to your cluster, open the Services tab and select “Create Service”.

Select Fargate and select the Task Definition you just created. The other fields should populate for you.

Next, provide a name for the service and set the minimum healthy percent and maximum percent. I like to set the minimum to 100 and the maximum to 200: during a deployment ECS then starts the new task before stopping the old one, so the service always has at least 1 task running.
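The effect of these two percentages can be worked out directly. ECS converts the minimum healthy percent into a task count by rounding up and the maximum percent by rounding down; the helper below (a hypothetical name, just for illustration) applies that rule using integer arithmetic.

```python
from typing import Tuple


def deployment_task_bounds(desired: int, min_healthy_pct: int, max_pct: int) -> Tuple[int, int]:
    """Return (minimum running, maximum running) task counts during a rolling update."""
    lower = -(-desired * min_healthy_pct // 100)  # ceiling division: ECS rounds up
    upper = desired * max_pct // 100  # floor division: ECS rounds down
    return lower, upper
```

With a desired count of 1 and the 100/200 settings above, the bounds are (1, 2): ECS may not drop below one running task, so it has to start the replacement and let it become healthy before stopping the old one. With 3 tasks at 50/200 the bounds would be (2, 6).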

Leave the deployment type as Rolling Update and hit Next.

Select the existing VPC you want to use; it should be the same VPC used for the load balancer and target group created earlier. Select at least 2 subnets and select or create a security group that allows access to your application port.

If you decided to use a load balancer, select Application Load Balancer and select the load balancer you created earlier. Then select the container you created and hit “Add to Load Balancer”.

You will be asked to configure the container on the load balancer. For the target group name, select the target group you created earlier. If nothing is showing in the list, go back and make sure your target group is set to IP. Leave the rest the same and move to the next step.

Next, you may choose to set up Auto Scaling for your service. You can skip this step for now, but I recommend it for a production service.

Review the service and finish the creation. ECS is now configured!
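For reference, the service configured in the console above roughly corresponds to the following boto3 `ecs.create_service` arguments. This is a sketch, not the console's exact output: every name, subnet, security group and ARN is a placeholder you supply, `desiredCount=1` and `assignPublicIp="ENABLED"` are assumptions (a public IP is one way for tasks in public subnets to reach ECR; private subnets would need a NAT gateway or VPC endpoints instead), and the container name and port must match the task definition.

```python
def service_params(cluster: str, service: str, task_family: str,
                   subnets: list, security_groups: list, target_group_arn: str,
                   container: str = "node-app", port: int = 3000) -> dict:
    """Keyword arguments for ecs.create_service mirroring the console steps above."""
    return {
        "cluster": cluster,
        "serviceName": service,
        "taskDefinition": task_family,  # family only: ECS uses the latest ACTIVE revision
        "desiredCount": 1,              # assumption
        "launchType": "FARGATE",
        "deploymentConfiguration": {"minimumHealthyPercent": 100, "maximumPercent": 200},
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,     # at least two, in different availability zones
                "securityGroups": security_groups,
                "assignPublicIp": "ENABLED",  # assumption, see the note above
            }
        },
        "loadBalancers": [{
            "targetGroupArn": target_group_arn,  # the IP-type target group
            "containerName": container,          # must match the task definition
            "containerPort": port,
        }],
    }


def create_service(params: dict) -> str:
    """Create the service with the default AWS credentials and return its ARN."""
    import boto3  # local import: only needed when actually talking to AWS

    ecs = boto3.client("ecs")
    return ecs.create_service(**params)["service"]["serviceArn"]
```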

Step 3: Enable and Configure Bitbucket Pipelines

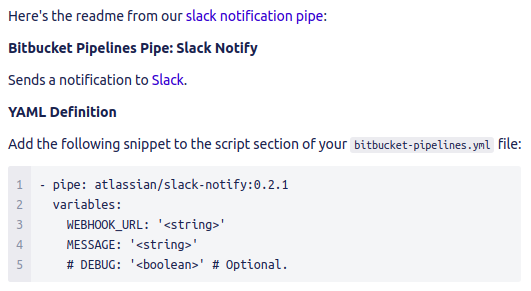

Pipelines is the integrated CI/CD service provided by Bitbucket. It makes setting up a CI/CD pipeline trivial with the use of Bitbucket Pipes: pre-configured blocks that let you interact with external services such as AWS and Slack. The Bitbucket documentation covers pipes in more detail.

1. Navigate to your Bitbucket repository and select Settings → Pipelines → Settings. Here you can enable Pipelines for the repository.

2. Next, under the Pipelines settings, navigate to Repository Variables. Here you can add variables to be used within your deployment pipeline.

3. You need to set variables that give Pipelines access to your AWS account: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION. These values should come from an IAM user with enough permissions to push images to ECR and to register task definitions and update services in ECS.

4. Next, add a bitbucket-pipelines.yml file to your repository. Below is an example file; the Bitbucket documentation explains the full bitbucket-pipelines.yml syntax. The file below handles a number of things:

Any push to the dev branch automatically runs the application tests. The tests use a MySQL service, so you must add a few more repository variables for it: DB_DATABASE, DB_USER and DB_PASSWORD.

The script has two manually triggered custom pipelines, one for staging and one for production. Each one installs dependencies, runs the tests, builds the Docker image and pushes it to ECR, and then uses a Bitbucket Pipe (atlassian/aws-ecs-deploy) to register the new task definition and update the ECS service. Notice that in the pipe we specify the cluster name, the service name and the name of our task definition file. The cluster and service names, as well as the ECR repository names and URIs (ECR_STAGING_REPO_NAME, ECR_STAGING_REPO_URI and their production counterparts), are set in Repository Variables.

```yaml
image: node:10.15.3

pipelines:
  custom: # Pipelines that can only be triggered manually
    staging:
      - step:
          name: Installing
          caches:
            - node
          script:
            - rm -rf package-lock.json
            - rm -rf node_modules
            - npm install
      - step:
          name: Running Tests
          caches:
            - node
          script:
            - npm run test
          services:
            - mysql
      - step:
          name: Build Docker Image
          services:
            - docker
          image: atlassian/pipelines-awscli
          script:
            - echo $(aws ecr get-login --no-include-email --region us-west-2) > login.sh
            - sh login.sh
            - docker build -f Dockerfile-stage -t $ECR_STAGING_REPO_NAME .
            - docker tag $ECR_STAGING_REPO_NAME:latest $ECR_STAGING_REPO_URI:latest
            - docker push $ECR_STAGING_REPO_URI:latest
      - step:
          name: Deploy to Staging
          services:
            - docker
          deployment: staging
          script:
            - pipe: atlassian/aws-ecs-deploy:1.0.3
              variables:
                AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
                AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
                AWS_DEFAULT_REGION: $AWS_DEFAULT_REGION
                CLUSTER_NAME: $ECS_STAGING_CLUSTER_NAME
                SERVICE_NAME: $ECS_STAGING_SERVICE_NAME
                TASK_DEFINITION: 'staging_task_definition.json'
                DEBUG: "true"
    production:
      - step:
          name: Installing
          caches:
            - node
          script:
            - rm -rf package-lock.json
            - rm -rf node_modules
            - npm install
      - step:
          name: Running Tests
          caches:
            - node
          script:
            - npm run test
          services:
            - mysql
      - step:
          name: Build Docker Image
          services:
            - docker
          image: atlassian/pipelines-awscli
          script:
            - echo $(aws ecr get-login --no-include-email --region us-west-2) > login.sh
            - sh login.sh
            - docker build -f Dockerfile-prod -t $ECR_PRODUCTION_REPO_NAME .
            - docker tag $ECR_PRODUCTION_REPO_NAME:latest $ECR_PRODUCTION_REPO_URI:latest
            - docker push $ECR_PRODUCTION_REPO_URI:latest
      - step:
          name: Deploy to Production
          services:
            - docker
          deployment: production
          script:
            - pipe: atlassian/aws-ecs-deploy:1.0.3
              variables:
                AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
                AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
                AWS_DEFAULT_REGION: $AWS_DEFAULT_REGION
                CLUSTER_NAME: $ECS_PRODUCTION_CLUSTER_NAME
                SERVICE_NAME: $ECS_PRODUCTION_SERVICE_NAME
                TASK_DEFINITION: 'production_task_definition.json'
                DEBUG: "true"
  branches:
    dev:
      - step:
          name: Installing
          caches:
            - node
          script:
            - npm install
      - step:
          name: Running Tests
          caches:
            - node
          script:
            - npm run test
          services:
            - mysql

definitions:
  services:
    mysql:
      image: mysql:5.7
      variables:
        MYSQL_DATABASE: $DB_DATABASE
        MYSQL_RANDOM_ROOT_PASSWORD: 'yes'
        MYSQL_USER: $DB_USER
        MYSQL_PASSWORD: $DB_PASSWORD
```
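The IAM user whose keys are stored in the repository variables needs permissions for both halves of this pipeline. The build steps log in with `aws ecr get-login`, which exists only in AWS CLI v1; AWS CLI v2 replaced it with `aws ecr get-login-password` piped into `docker login --username AWS --password-stdin <registry>`. Both forms rely on `ecr:GetAuthorizationToken`, and the hard-coded `--region us-west-2` must match your repository's region. The Python sketch below builds a policy document as a starting point. The action list for the aws-ecs-deploy pipe is our assumption rather than something taken from the pipe's documentation, so compare it with the pipe's README; the region, account ID, repository and role ARNs are placeholders.

```python
import json


def pipeline_policy(region: str, account_id: str, repo: str, role_arns: list) -> dict:
    """Hypothetical starting-point IAM policy for the Bitbucket Pipelines user."""
    repo_arn = f"arn:aws:ecr:{region}:{account_id}:repository/{repo}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # registry login (get-login / get-login-password); not resource-scoped
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {   # docker push to the one repository
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage",
                ],
                "Resource": repo_arn,
            },
            {   # assumed pipe actions: register a revision, point the service at it
                "Effect": "Allow",
                "Action": [
                    "ecs:RegisterTaskDefinition",
                    "ecs:UpdateService",
                    "ecs:DescribeServices",
                ],
                "Resource": "*",
            },
            {   # a task definition that names roles can only be registered if they can be passed
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": role_arns,
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values only.
    policy = pipeline_policy("us-west-2", "123456789012", "node-api",
                             ["arn:aws:iam::123456789012:role/ecsTaskExecutionRole"])
    print(json.dumps(policy, indent=2))
```

The ECS statement uses `"*"` for simplicity; `ecs:UpdateService` can be narrowed to your service ARN once the setup works.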

5. Next, commit a Dockerfile for both staging (Dockerfile-stage) and production (Dockerfile-prod). Below is an example file that uses PM2 to run the Node service. It sets NODE_ENV to staging, so change that value in Dockerfile-prod.

```dockerfile
FROM node:10.15.3

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./
RUN npm install

# Bundle app source
COPY . .

# Install PM2
RUN npm install pm2 -g

# Set the NODE_ENV to be used in the API
ENV NODE_ENV=staging

# Expose your app port and start app using PM2
EXPOSE 3000
CMD ["pm2-runtime", "server-wrapper.js"]
```

6. Next, create the task definition JSON file. Give it the same name as the TASK_DEFINITION file you specified in your bitbucket-pipelines.yml file, and paste in the JSON contents you copied earlier from the task definition's JSON tab in the AWS console. This file contains a lot of data; you can clean it up by removing all the null properties and keeping only the following ones:

executionRoleArn

containerDefinitions

placementConstraints

taskRoleArn

family

requiresCompatibilities (make sure only FARGATE is listed here)

networkMode

cpu

memory

volumes
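The cleanup in step 6 can be scripted. This sketch keeps only the properties listed above, removes null values at every level, and forces `requiresCompatibilities` to `["FARGATE"]`. Server-generated fields in the console export, such as `taskDefinitionArn` and `revision`, are dropped simply because they are not on the list. The input file name in the usage note is hypothetical.

```python
import json

# Top-level properties to keep, as listed in step 6.
KEEP = [
    "executionRoleArn", "containerDefinitions", "placementConstraints", "taskRoleArn",
    "family", "requiresCompatibilities", "networkMode", "cpu", "memory", "volumes",
]


def drop_nulls(value):
    """Recursively remove dict entries whose value is None (JSON null)."""
    if isinstance(value, dict):
        return {k: drop_nulls(v) for k, v in value.items() if v is not None}
    if isinstance(value, list):
        return [drop_nulls(v) for v in value]
    return value


def clean_task_definition(exported: dict) -> dict:
    """Trim a console-exported task definition down to the properties in KEEP."""
    cleaned = {k: exported[k] for k in KEEP if exported.get(k) is not None}
    cleaned = drop_nulls(cleaned)
    cleaned["requiresCompatibilities"] = ["FARGATE"]  # only FARGATE, per step 6
    return cleaned


def clean_file(src: str, dst: str) -> None:
    """Read an exported task definition from src and write the cleaned copy to dst."""
    with open(src) as f:
        exported = json.load(f)
    with open(dst, "w") as f:
        json.dump(clean_task_definition(exported), f, indent=2)
```

For example, `clean_file("exported-task-definition.json", "staging_task_definition.json")` would write a file named to match the staging deploy step's TASK_DEFINITION value.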

7. Once these files are committed to the repository, you are ready to deploy!

Enjoy!

I hope this walkthrough saves you some time and gets you started on creating your CI/CD pipeline with Bitbucket Pipelines and Amazon ECS. If you have any questions, please leave a comment.

浙公网安备 33010602011771号
