[TF Lite] How to convert a custom model to TensorFlow Lite

Warm-up resources

Resources

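Ref: "Converting an object detection model to TensorFlow Lite and using it in an Android app", which looks good.

Ref: "Deploying TensorFlow to mobile devices", which does not cover SSD.

Ref: "Compressing a TensorFlow MobileNet SSD model and porting it to Android for real-time detection"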

Ref: https://www.inspirisys.com/objectdetection_in_tensorflowdemo.pdf [Windows version]

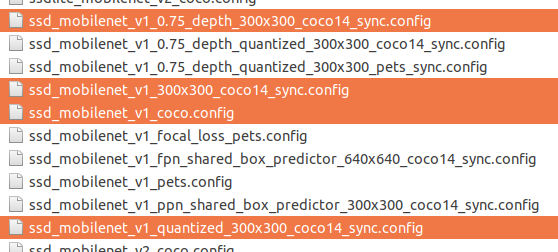

1. Choose one model config

https://github.com/tensorflow/models/tree/master/research/object_detection/samples/configs

How to use it: pass the chosen config file to train.py through --pipeline_config_path.

$ python /home/Jeff/Desktop/AR/Wrapper/DNN/MobileNet/04_auto_train_cnn/object_detection/legacy/train.py \
    --logtostderr \
    --train_dir=/home/Jeff/Desktop/AR/Wrapper/DNN/MobileNet/04_auto_train_cnn/training \
    --pipeline_config_path=/home/Jeff/Desktop/AR/Wrapper/DNN/MobileNet/04_auto_train_cnn/training/ssd_mobilenet_v1_quantized_300x300_coco14_sync.config
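Since the same project directory appears three times in that command, a small helper can assemble it from one base path. This is only a sketch: `build_train_command` is a hypothetical helper name, and the directory layout (`object_detection/legacy/train.py` plus a `training/` folder) is simply the one used in the command above.

```python
import shlex
from pathlib import PurePosixPath


def build_train_command(project_dir, config_name):
    """Return the argument list for object_detection/legacy/train.py.

    Assumes the layout from the command above: the script lives under
    <project_dir>/object_detection/legacy and the config under <project_dir>/training.
    """
    project = PurePosixPath(project_dir)
    training = project / "training"
    return [
        "python",
        str(project / "object_detection" / "legacy" / "train.py"),
        "--logtostderr",
        f"--train_dir={training}",
        f"--pipeline_config_path={training / config_name}",
    ]


cmd = build_train_command(
    "/home/Jeff/Desktop/AR/Wrapper/DNN/MobileNet/04_auto_train_cnn",
    "ssd_mobilenet_v1_quantized_300x300_coco14_sync.config",
)
# Print a shell-ready string; pass `cmd` to subprocess.run(...) to actually train.
print(shlex.join(cmd))
```

Switching to another config from the samples directory then only means changing the second argument.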

2. Download the pre-trained model

Download it from the TensorFlow detection model zoo.

3. Update your own config file

Ref: "TensorFlow: Training a real-time mobile object detector in 30 minutes with Cloud TPU"

Ref: [Tensorflow] Object Detection API - build your training environment

(b) Non-quantized: https://github.com/tensorflow/models/blob/master/research/object_detection/samples/configs/ssd_mobilenet_v1_300x300_coco14_sync.config
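Updating the config usually comes down to a handful of fields. The sketch below patches them with plain text substitution, so it needs nothing beyond the standard library. The field names (`num_classes`, `fine_tune_checkpoint`, `label_map_path`, `input_path`) are the ones found in the sample configs; the function name, the paths, and the class count are hypothetical, and it assumes `train_input_reader` appears before `eval_input_reader`, as it does in the samples.

```python
import re


def update_pipeline_config(text, num_classes, checkpoint, label_map,
                           train_record, eval_record):
    """Patch the per-dataset fields of a pipeline config given as text."""
    text = re.sub(r"num_classes:\s*\d+",
                  lambda m: f"num_classes: {num_classes}", text)
    # The trailing colon keeps fine_tune_checkpoint_type untouched.
    text = re.sub(r'fine_tune_checkpoint:\s*"[^"]*"',
                  lambda m: f'fine_tune_checkpoint: "{checkpoint}"', text)
    text = re.sub(r'label_map_path:\s*"[^"]*"',
                  lambda m: f'label_map_path: "{label_map}"', text)
    # Assumption: the first input_path is the training set, the second the eval set.
    records = iter([train_record, eval_record])
    text = re.sub(r'input_path:\s*"[^"]*"',
                  lambda m: f'input_path: "{next(records)}"', text, count=2)
    return text


sample = '''
model { ssd { num_classes: 90 } }
train_config { fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt" }
train_input_reader {
  tf_record_input_reader { input_path: "PATH_TO_BE_CONFIGURED/train.record" }
  label_map_path: "PATH_TO_BE_CONFIGURED/label_map.pbtxt"
}
eval_input_reader {
  tf_record_input_reader { input_path: "PATH_TO_BE_CONFIGURED/val.record" }
  label_map_path: "PATH_TO_BE_CONFIGURED/label_map.pbtxt"
}
'''

patched = update_pipeline_config(
    sample,
    num_classes=2,                                  # hypothetical class count
    checkpoint="pretrained/model.ckpt",             # hypothetical paths below
    label_map="annotations/label_map.pbtxt",
    train_record="annotations/train.record",
    eval_record="annotations/test.record",
)
print(patched)
```

Text substitution is fragile compared with parsing the protobuf, but it avoids importing the Object Detection API just to change five values.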

Related blog posts by an African developer

A three-part series that looks good. Verified: it works.

Setup TensorFlow for Object Detection on Ubuntu 16.04 (Part 1)

Training your Object Detection model on TensorFlow (Part 2)

Convert a TensorFlow frozen graph to a TensorFlow lite (tflite) file (Part 3)

Transfer learning

I. Training preparation

curl -O http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03.tar.gz
tar xzf ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03.tar.gz

wget https://raw.githubusercontent.com/tensorflow/models/master/research/object_detection/samples/configs/ssd_mobilenet_v2_quantized_300x300_coco.config
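The same preparation can be scripted so it is repeatable on a fresh machine. A minimal sketch using only the standard library; the two URLs are the ones above, while `filename_from_url`, `fetch`, and `extract` are hypothetical helper names.

```python
import tarfile
import urllib.request
from pathlib import Path
from urllib.parse import urlparse

MODEL_URL = ("http://download.tensorflow.org/models/object_detection/"
             "ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03.tar.gz")
CONFIG_URL = ("https://raw.githubusercontent.com/tensorflow/models/master/research/"
              "object_detection/samples/configs/ssd_mobilenet_v2_quantized_300x300_coco.config")


def filename_from_url(url):
    """Return the last path component of a URL, like `curl -O` does."""
    return Path(urlparse(url).path).name


def fetch(url, dest_dir="."):
    """Download url into dest_dir unless the file is already there."""
    target = Path(dest_dir) / filename_from_url(url)
    if not target.exists():
        urllib.request.urlretrieve(url, str(target))
    return target


def extract(archive, dest_dir="."):
    """Unpack a .tar.gz archive, equivalent to `tar xzf`."""
    with tarfile.open(str(archive), "r:gz") as tar:
        tar.extractall(str(dest_dir))
```

Calling `extract(fetch(MODEL_URL))` followed by `fetch(CONFIG_URL)` reproduces the commands above; files that already exist are not downloaded again.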

II. Start training

/* implement */

III. After training

(a) Export the frozen graph

python object_detection/export_tflite_ssd_graph.py \
    --pipeline_config_path=training/ssd_mobilenet_v2_quantized_300x300_coco.config \
    --trained_checkpoint_prefix=training/model.ckpt-10 \
    --output_directory=tflite \
    --add_postprocessing_op=true
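The step number in `--trained_checkpoint_prefix` (here `model.ckpt-10`) has to match a checkpoint that actually exists in the training directory. A sketch for picking the newest one automatically; it assumes the usual TensorFlow 1.x layout where each checkpoint has a `model.ckpt-<step>.index` file, and `latest_checkpoint_prefix` is a hypothetical helper name.

```python
import re
from pathlib import Path


def latest_checkpoint_prefix(train_dir):
    """Return the 'model.ckpt-N' prefix with the highest step N, or None."""
    best_step, best_prefix = -1, None
    for index_file in Path(train_dir).glob("model.ckpt-*.index"):
        match = re.fullmatch(r"model\.ckpt-(\d+)\.index", index_file.name)
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))
            # Dropping ".index" leaves the prefix the export script expects.
            best_prefix = str(index_file.with_suffix(""))
    return best_prefix
```

`latest_checkpoint_prefix("training")` can then be substituted into the export command. Steps are compared numerically, so `model.ckpt-200` wins over `model.ckpt-9`.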

(b) Convert to a tflite model

tflite_convert \
    --graph_def_file=tflite/tflite_graph.pb \
    --output_file=tflite/detect.tflite \
    --output_format=TFLITE \
    --input_shapes=1,300,300,3 \
    --input_arrays=normalized_input_image_tensor \
    --output_arrays='TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' \
    --inference_type=QUANTIZED_UINT8 \
    --mean_values=128 \
    --std_dev_values=127 \
    --change_concat_input_ranges=false \
    --allow_custom_ops
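The `--mean_values` and `--std_dev_values` flags describe how uint8 pixel values map to the real-valued input the network was trained on, following the converter's relation real = (quantized - mean) / std_dev. A small worked sketch with the values from the command above; the function names are hypothetical.

```python
def dequantize(quantized, mean=128.0, std_dev=127.0):
    """Map a uint8 input value to the real value seen by the model."""
    return (quantized - mean) / std_dev


def quantize(real, mean=128.0, std_dev=127.0):
    """Inverse mapping, clamped to the uint8 range."""
    q = round(real * std_dev + mean)
    return max(0, min(255, q))


print(dequantize(128))  # 0.0, mid-grey maps to zero
print(dequantize(255))  # 1.0, white maps to the top of the range
print(dequantize(0))    # slightly below -1.0, because 128 / 127 > 1
```

With these values the 0..255 pixel range covers roughly [-1, 1], which matches the `normalized_input_image_tensor` input name.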

Integrating into Android Studio

I. Resources

Official example: https://github.com/tensorflow/examples/tree/master/lite/examples/object_detection

It uses: coco_ssd_mobilenet_v1_1.0_quant_2018_06_29

The question is how to replace the demo's model with the one from the blog series above: ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03
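One way to approach the swap is to overwrite the demo's bundled model and label file with your own. The sketch below does that from Python. Everything about the demo layout is an assumption to check against your checkout: that the assets folder holds `detect.tflite` and `labelmap.txt`, and that the label file starts with a `???` placeholder line (pass `placeholder=None` if your version of the demo does not expect one). `install_model` and the example paths are hypothetical.

```python
import shutil
from pathlib import Path


def install_model(tflite_file, labels, assets_dir, placeholder="???"):
    """Copy a converted model and write its label file into the demo assets."""
    assets = Path(assets_dir)
    assets.mkdir(parents=True, exist_ok=True)
    # Assumed file names; adjust if the demo's constants point elsewhere.
    shutil.copyfile(str(tflite_file), str(assets / "detect.tflite"))
    lines = ([placeholder] if placeholder is not None else []) + list(labels)
    (assets / "labelmap.txt").write_text("\n".join(lines) + "\n", encoding="utf-8")
    return assets
```

For example, `install_model("tflite/detect.tflite", ["cat", "dog"], "android/app/src/main/assets")` would install a two-class model. Because the input size (300) and quantization stay the same as in the bundled model, the remaining demo constants should not need to change under these assumptions.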

II. Release information

Why is it worth upgrading the model?

Ref: Searching for MobileNetV3

MobileNet v2 seems to offer a significant performance improvement, and the release notes below show newer backbones going further.

Nov 13th, 2019

We have released MobileNetEdgeTPU SSDLite model.

- SSDLite with MobileNetEdgeTPU backbone, which achieves 10% mAP higher than MobileNetV2 SSDLite (24.3 mAP vs 22 mAP) on a Google Pixel4 at comparable latency (6.6ms vs 6.8ms).

Along with the model definition, we are also releasing model checkpoints trained on the COCO dataset.

Thanks to contributors: Yunyang Xiong, Bo Chen, Suyog Gupta, Hanxiao Liu, Gabriel Bender, Mingxing Tan, Berkin Akin, Zhichao Lu, Quoc Le

Oct 15th, 2019

We have released two MobileNet V3 SSDLite models (presented in Searching for MobileNetV3).

- SSDLite with MobileNet-V3-Large backbone, which is 27% faster than Mobilenet V2 SSDLite (119ms vs 162ms) on a Google Pixel phone CPU at the same mAP.

- SSDLite with MobileNet-V3-Small backbone, which is 37% faster than MnasNet SSDLite reduced with depth-multiplier (43ms vs 68ms) at the same mAP.

Along with the model definition, we are also releasing model checkpoints trained on the COCO dataset.

Thanks to contributors: Bo Chen, Zhichao Lu, Vivek Rathod, Jonathan Huang

try {
  // Load the model and label files from the app's assets.
  detector =
      TFLiteObjectDetectionAPIModel.create(
          getAssets(),
          TF_OD_API_MODEL_FILE,
          TF_OD_API_LABELS_FILE,
          TF_OD_API_INPUT_SIZE,
          TF_OD_API_IS_QUANTIZED);
  cropSize = TF_OD_API_INPUT_SIZE;
} catch (final IOException e) {
  // Without a detector the activity cannot continue: report the error and close.
  e.printStackTrace();
  LOGGER.e(e, "Exception initializing classifier!");
  Toast toast =
      Toast.makeText(
          getApplicationContext(), "Classifier could not be initialized", Toast.LENGTH_SHORT);
  toast.show();
  finish();
}
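The detector created here wraps a model with the four `TFLite_Detection_PostProcess` outputs: boxes, class indices, scores, and the number of detections. As a sketch of what the app then has to do with them, the function below filters by score and scales the normalized `[top, left, bottom, right]` boxes to pixels. It is written in Python for brevity and is not the demo's actual code; `decode_detections` and the 0.5 threshold are illustrative assumptions.

```python
def decode_detections(boxes, classes, scores, count,
                      image_width, image_height, min_score=0.5):
    """Turn the four post-processing outputs into a list of pixel-space detections."""
    results = []
    for i in range(int(count)):
        if scores[i] < min_score:
            continue  # drop low-confidence detections
        top, left, bottom, right = boxes[i]  # normalized to the 0..1 range
        results.append({
            "class_index": int(classes[i]),
            "score": float(scores[i]),
            # (left, top, right, bottom) in pixels of the original image
            "box_px": (round(left * image_width), round(top * image_height),
                       round(right * image_width), round(bottom * image_height)),
        })
    return results


detections = decode_detections(
    boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]],
    classes=[0.0, 3.0],
    scores=[0.9, 0.2],
    count=2.0,
    image_width=300,
    image_height=300,
)
print(detections)
```

Only the first detection survives the threshold in this example; the class index still has to be mapped to a name through the label file.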

/* implement */
